Abstract

Foreign objects such as balloons and nests often cause widespread power outages when they come into contact with transmission lines. Detecting them manually is labor-intensive. Automatic foreign object detection on transmission lines is crucial for power safety and is becoming the mainstream approach, but it is restricted by the lack of datasets. In this paper, we propose an advanced model termed YOLOv8 Network with Bidirectional Feature Pyramid Network (YOLOv8_BiFPN) to detect foreign objects on power transmission lines. First, we add a weighted, bidirectional cross-scale connection structure to the detection head of the YOLOv8 network. This structure enables interaction between low-level and high-level features and allows information to propagate across feature maps of different scales. Second, in contrast to the traditional concatenation and shortcut operations, our method integrates information between features of different scales through learnable weights. Moreover, we created a dataset for Foreign Object detection on Transmission Lines from a Drone-view (FOTL_Drone). It consists of 1495 annotated images covering six types of foreign objects. To our knowledge, FOTL_Drone is the most comprehensive dataset in the field of foreign object detection on transmission lines, encompassing a wide array of geographic features and diverse types of foreign objects. Experimental results show that YOLOv8_BiFPN achieves an average precision of 90.2% and an mAP@.50 of 0.896 across the foreign object categories, surpassing other models.

1. Introduction

With the increasing demand for electricity, the construction of overhead transmission lines has surged [1,2,3], and protecting these lines has become an important task [4,5,6]. Consequently, incidents involving foreign objects such as bird nests and kites coming into contact with the lines have been increasing [7,8,9]. Foreign objects on power lines pose a significant risk of tripping incidents, which can severely impact the secure and stable operation of the power grid [10,11,12]. Timely automatic detection of foreign objects can provide managers and maintenance workers with the information they need to handle these hidden hazards.

Traditional methods rely on hand-crafted features. Tian et al. [13] proposed an insulator detection method based on feature fusion and a Support Vector Machine (SVM) classifier. However, its reliance on manually designed and selected features restricts its generalization capability and detection accuracy. Song et al. [14] introduced a local contour detection method. Although it improves detection speed, it does not consider the physical relationship between power lines and foreign objects, which may limit its overall performance in practical applications. In summary, hand-crafted feature-based methods are easy to implement and perform well in detecting foreign objects on transmission lines in simple scenarios, but they exhibit limitations in complex scenarios.

Currently, AI-based methods are being applied to object detection with unmanned aerial vehicles [15,16,17,18,19,20]. They can learn features automatically and identify the positions of foreign objects. AI-based drone inspection has become an effective means of recognizing foreign objects on transmission lines [21,22,23]. Researchers around the globe have proposed several methods for detecting foreign objects [24,25,26]. According to the number of detection stages, these methods can be categorized into end-to-end detectors and multi-stage detectors.

End-to-End Detectors. Li et al. [27] proposed a lightweight model that leverages YOLOv3, MobileNetV2, and depthwise separable convolutions. They considered various foreign objects, such as smoke and fire. The method achieves a smaller model size and higher detection speed than existing models; however, its detection accuracy needs improvement, especially for small objects. Wu et al. [28] introduced an improved YOLOX technique for foreign object detection on transmission lines. It enhances recognition accuracy by embedding the convolutional block attention module and uses the Generalized Intersection over Union (GIoU) loss for optimization. The enhanced YOLOX improves mAP by 4.24%. However, it lacks a detailed classification of foreign objects, which hinders the implementation of corresponding removal measures. Wang et al. [29] proposed an improved model based on YOLOv8m. They introduced a global attention module to focus on obstructed foreign objects and replaced the Spatial Pyramid Pooling Fast (SPPF) module with the Spatial Pyramid Pooling and Cross Stage Partial Connection (SPPCSPC) module to augment the model’s multi-scale feature extraction capability. Ji et al. [30] designed a Multi-Fusion Mixed Attention Module-YOLO (MFMAM-YOLO) to efficiently identify foreign objects on transmission lines in complex environments, providing a valuable tool for maintaining the integrity of transmission lines. Yang et al. [31] developed a foreign object recognition algorithm based on the Denoising Convolutional Neural Network (DnCNN) and YOLOv8, considering various types of foreign objects such as bird nests, kites, and balloons. Its mean average precision (mAP) is 82.9%. However, there is significant room for improvement in detection performance, and the diversity of categories in the dataset needs to be enriched.

Multi-Stage Detectors. Guo et al. [32] first constructed a dataset of transmission line images and then used the Faster Region-based Convolutional Neural Network (Faster R-CNN) to detect foreign objects such as fallen objects, kites, and balloons. Compared with end-to-end detection, this approach can handle foreign objects of various shapes and sizes, demonstrating superior versatility and adaptability. Chen et al. [33] employed Mask R-CNN to detect foreign objects on transmission lines, and the method showed strong speed, efficiency, and precision. Dong et al. [34] explored the detection of overhead transmission lines in aerial images captured by Unmanned Aerial Vehicles (UAV). Their method combines a shifted window and a balanced feature pyramid with Cascade R-CNN to enhance feature representation. It achieves 7.8%, 11.8%, and 5.5% higher detection accuracy than the baseline for mAP50, small-object mAP, and medium-object mAP, respectively.

Both end-to-end and multi-stage algorithms obtain good results on their respective datasets. Multi-stage detection algorithms demonstrate clear advantages in detection accuracy, but they require substantial computational resources and detection time. In contrast, end-to-end detectors use lighter models and aim to achieve a trade-off between detection accuracy and speed. Our work belongs to the end-to-end category.

Datasets for foreign object detection on transmission lines are crucial for model training. They can be categorized into two groups: simulated datasets [35,36] and realistic datasets [7,37,38,39]. Simulated datasets are generated with data augmentation techniques. Ge et al. [35] augmented their database using image enhancement techniques and manually labeled a dataset of 3685 images of bird nests on transmission lines. Similarly, Bi et al. [36] created a synthetic dataset of bird nests on transmission towers under different environmental conditions, comprising 2864 training images and 716 test images. Although these datasets simulate changing environmental conditions through data augmentation, they primarily cover a single category of foreign objects on transmission lines. Realistic datasets are collected from real-world scenarios. Ning et al. [37] expanded the variety of categories in their dataset to include construction vehicles, flames, and cranes, with a total of 1400 images. Zhang et al. [38] established a dataset of 20,000 images, including categories such as wildfires, additional objects impacting power lines, tower cranes, and regular cranes. Liu et al. [7] built a dataset of 2496 aerial images covering four categories: bird nests, hanging objects, wildfires, and smoke. Yu et al. [39] distinguished five types of construction vehicles, together with bird nests, in drone-view images. Li et al. [40] created a dataset with four classes: bird nests, balloons, kites, and trash.

Existing datasets are not open source and suffer from limitations such as a limited variety of foreign objects, limited scenarios, and low quality. This significantly impedes progress in research within this field.

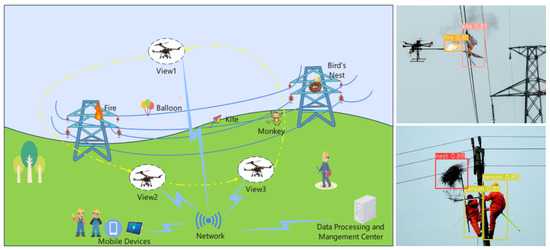

The motivation of this paper is to propose a deep learning-based approach for automatic foreign object detection. Some detection results are presented in Figure 1. Given the lack of available datasets, we also create a comprehensive dataset to facilitate model training for foreign object detection on power transmission lines.

Figure 1.

The left panel shows a diagram of foreign object detection on transmission lines. The right panels show foreign object detection results (nest, person, fire, kite, etc.) produced by our detector.

The main contributions of this paper are summarized as follows:

- We propose an end-to-end method, YOLOv8_BiFPN, to detect foreign objects on power transmission lines. It integrates a weighted bidirectional cross-scale connection structure into the detection head of the YOLOv8 network and significantly outperforms the baseline model.

- This paper creates a dataset of foreign objects on transmission lines from a drone perspective, named FOTL_Drone. It covers six distinct types of foreign objects with 1495 annotated images, making it currently the most comprehensive dataset for the detection of foreign objects on transmission lines.

- Experimental results on the proposed FOTL_Drone dataset demonstrate the effectiveness of the YOLOv8_BiFPN model, with an average precision of 90.2% and an average mAP@.50 of 0.896, outperforming other models.

2. The Proposed Dataset

Drone inspection has recently emerged as the primary means of detecting foreign objects on transmission lines. In this section, we present a dataset of foreign objects on transmission lines from a drone perspective (FOTL_Drone). The dataset comprises a total of 1495 annotated images covering six types of foreign objects. The images are primarily sourced from the following:

- Image search: Google, Bing, Baidu, Sogou, etc.

- Screenshots from video sites: YouTube, Bilibili, etc.

- Drone-view inspections: some images were captured during drone inspections of transmission lines.

To generate class labels, the visual image annotation tool LabelImg is employed. Annotations are saved in XML format following the PASCAL VOC standard. Six categories are included: nest, kite, balloon, fire, person, and monkey. The images are split into training and testing sets at an 8:2 ratio, with 1196 images in the training set and 299 images in the testing set.
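LabelImg writes PASCAL VOC XML, whereas YOLOv8-style trainers read one text row per box with normalized center coordinates, so a conversion step is usually needed before training. The sketch below shows one way to convert an annotation and to make a reproducible 8:2 split. The class-to-ID order, the function names, and the random seed are illustrative assumptions, not part of the released dataset.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical class-to-ID order; the dataset itself does not fix integer IDs.
CLASSES = ["nest", "kite", "balloon", "fire", "person", "monkey"]


def voc_to_yolo(xml_text):
    """Convert one PASCAL VOC annotation (as written by LabelImg) into
    YOLO-style rows (class_id, x_center, y_center, width, height),
    with all coordinates normalized by the image size."""
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    rows = []
    for obj in root.findall("object"):
        cls_id = CLASSES.index(obj.findtext("name").strip())
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        rows.append((
            cls_id,
            (xmin + xmax) / 2 / img_w,  # normalized box center x
            (ymin + ymax) / 2 / img_h,  # normalized box center y
            (xmax - xmin) / img_w,      # normalized width
            (ymax - ymin) / img_h,      # normalized height
        ))
    return rows


def split_train_test(names, train_ratio=0.8, seed=0):
    """Shuffle image names reproducibly and split them into train/test lists."""
    names = sorted(names)
    random.Random(seed).shuffle(names)
    n_train = round(len(names) * train_ratio)
    return names[:n_train], names[n_train:]


# With 1495 images, an 8:2 split gives 1196 training and 299 test images.
train, test = split_train_test([f"img_{i:04d}.jpg" for i in range(1495)])
print(len(train), len(test))  # 1196 299
```

Sorting before shuffling makes the split independent of directory listing order, so the same seed always yields the same partition.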

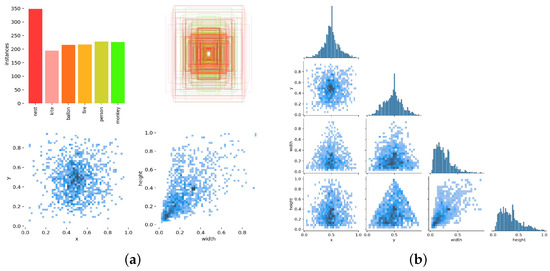

Figure 2 presents an analysis of the proposed FOTL_Drone dataset. Figure 2a covers four key aspects: the data volume of the training set, which shows balanced sample quantities across categories except for bird nests; the distribution and quantity of bounding box sizes, indicating diverse box dimensions; the positions of bounding box center points within the images; and the distribution of target height-to-width ratios. Figure 2b depicts label correlation modeling, where the color intensity of each cell in the matrix indicates the correlation between labels: darker cells represent stronger associations and lighter cells weaker ones. Figure 3 illustrates the six classes of foreign objects on transmission lines.

Figure 2.

The label analysis of the proposed FOTL_Drone dataset. (a) Analysis of Transmission Line Foreign Object Detection Dataset. (b) Label correlation modeling analysis for Transmission Line Foreign Object Detection Dataset.

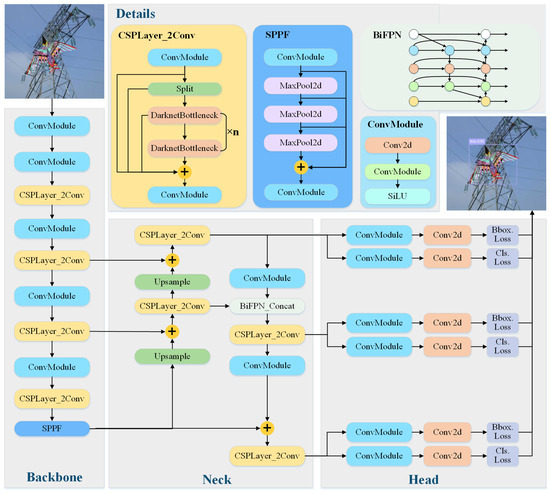

Figure 3.

Sample images from the FOTL_Drone dataset.

Moreover, the FOTL_Drone dataset differs from other datasets in the following respects:

- Many existing datasets cover only a limited number of foreign object categories on transmission lines, for example, two or three. In contrast, FOTL_Drone expands the range of potential foreign objects on transmission lines to six classes.

- Most existing datasets cover a limited range of scenarios. The images in FOTL_Drone are taken from a drone perspective, and the variable shooting positions of the drone add scene diversity.

- Existing datasets include only static foreign objects. In contrast, our dataset includes not only static foreign objects such as kites and balloons but also moving objects such as power workers and monkeys.

3. Methodology

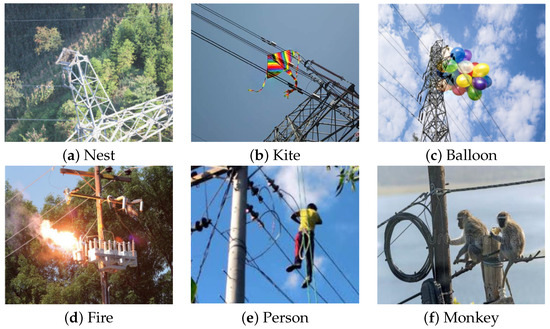

By integrating a weighted bidirectional cross-scale connection structure into the detection head of the YOLOv8 network, we propose a detector fusing YOLOv8 with Bidirectional Feature Pyramid Network (YOLOv8_BiFPN). Its network architecture is depicted in Figure 4.

Figure 4.

The architecture of the proposed YOLOv8_BiFPN. It consists of three components: Backbone, Neck, and Head. The diagram also provides a detailed depiction of four module structures (CSPLayer_2Conv, SPPF, ConvModule, and BiFPN).

3.1. The Proposed YOLOv8_BiFPN

When drones are used to detect foreign objects on transmission lines, complex and variable environmental conditions, such as weather, lighting, and background interference, are unavoidable. These factors limit the ability of traditional object detection algorithms to extract target features and adapt to different scenarios. The linear structure of power lines and the diversity of foreign objects, which may appear in various shapes, scales, and orientations, make detection difficult.

YOLOv8 achieves multi-scale feature fusion through its Feature Pyramid Network (FPN) architecture, which extracts features at different scales. However, the traditional FPN uses a unidirectional top-down pathway for feature fusion, so feature information may not be fully leveraged to capture and distinguish subtle differences between targets and backgrounds. We therefore introduce a Bidirectional Feature Pyramid Network (BiFPN) structure into the detection head of YOLOv8 to enhance detection performance. Specifically, the BiFPN module is integrated where three layers of the network are connected to form the detection head, as illustrated in Figure 4. By enhancing multi-scale feature fusion and bidirectional information transmission, the network can better adapt to complex environments and diverse target shapes.

BiFPN is a simple and efficient pyramid network first proposed in EfficientDet [41]. It introduces learnable weights to learn the importance of different input features while repeatedly applying both top-down and bottom-up multi-scale feature fusion. As illustrated in Figure 4, we incorporate the BiFPN_Concat structure into the neck of YOLOv8 to achieve a bidirectional flow of feature information across scales, which enables an effective exchange of information between different scales. BiFPN_Concat is designed to make feature fusion more efficient and effective by reinforcing this bidirectional flow, thereby improving object detection performance.

BiFPN adopts fast normalized weighted fusion, given by Equation (1):

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \qquad (1)$$

where $I_i$ is the $i$-th input feature and $w_i$ is its learnable weight. Applying a Rectified Linear Unit (ReLU) after each $w_i$ ensures $w_i \geq 0$, and $\epsilon$ is a very small number that ensures numerical stability. As with softmax, each normalized weight lies between 0 and 1. In EfficientDet [41], this fast fusion strategy was shown to achieve accuracy similar to softmax-based fusion while training faster.
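A minimal NumPy sketch of Equation (1) follows. The weights are fixed numbers here, whereas in a network they would be learnable parameters; ε = 1e-4 follows the value used in EfficientDet, and the function name is hypothetical.

```python
import numpy as np


def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """Fuse same-shaped feature maps as in Equation (1):
    O = sum_i w_i * I_i / (eps + sum_j w_j), with w_i = ReLU(raw_i)."""
    w = np.maximum(np.asarray(raw_weights, dtype=np.float64), 0.0)  # ReLU keeps w_i >= 0
    norm = w / (eps + w.sum())  # every normalized weight lies in [0, 1)
    fused = sum(n * x for n, x in zip(norm, inputs))
    return fused, norm


# A negative raw weight is clipped to zero, so that input is ignored entirely.
feats = [np.ones((2, 2)), np.full((2, 2), 5.0), np.full((2, 2), 2.0)]
out, norm = fast_normalized_fusion(feats, [1.0, -2.0, 3.0])
print(norm)  # weights of about 0.25, 0.0 and 0.75
```

Unlike softmax, this normalization needs no exponentials, which is the source of the speed advantage mentioned above.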

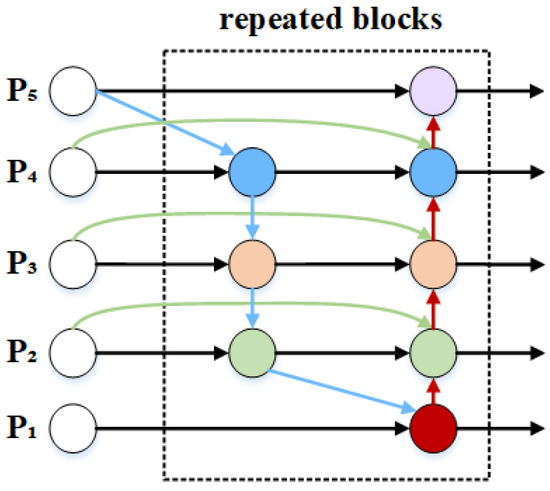

To clarify the pyramid structure of BiFPN, Figure 5 illustrates its detailed architecture. Compared with FPN, BiFPN removes nodes that have only one input edge to simplify the bidirectional network: a node with a single input performs no feature fusion, so it contributes little to a feature network whose purpose is to integrate different features. As shown in Figure 5, the first node to the right of the corresponding input node is removed for this reason. The green lines in the figure represent edges added between the original input node and the output node at the same level, which integrate more features without significantly increasing cost. The block labeled “repeated blocks” indicates that each bidirectional (top-down and bottom-up) pathway is treated as one feature network layer, and this layer is repeated multiple times to achieve higher-level feature fusion.

Figure 5.

The architecture of Bidirectional Feature Pyramid Network (BiFPN).

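To illustrate the computation of weighted feature fusion, we describe the two fused features at the $i$-th level of the BiFPN in the figure using Equations (2) and (3):

$$P_i^{td} = \mathrm{Conv}\left(\frac{w_1 \cdot P_i^{in} + w_2 \cdot \mathrm{Resize}\left(P_{i+1}^{in}\right)}{w_1 + w_2 + \epsilon}\right) \qquad (2)$$

$$P_i^{out} = \mathrm{Conv}\left(\frac{w_1' \cdot P_i^{in} + w_2' \cdot P_i^{td} + w_3' \cdot \mathrm{Resize}\left(P_{i-1}^{out}\right)}{w_1' + w_2' + w_3' + \epsilon}\right) \qquad (3)$$

Here, $P_i^{td}$ denotes the intermediate feature at the $i$-th level in the top-down pathway, $P_i^{out}$ denotes the output feature at the $i$-th level in the bottom-up pathway, $P_i^{in}$ is the input feature at that level, and Resize is an upsampling or downsampling operation that matches the resolutions of the fused features.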
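The sketch below applies Equations (2) and (3) to a toy three-level pyramid (P3 to P5, single-channel 2-D maps, with P3 at the highest resolution). Several choices are assumptions for illustration only: Resize is nearest-neighbour upsampling or 2×2 max pooling, Conv is an identity placeholder (a real BiFPN uses a convolution block), and the fusion weights are fixed numbers rather than learned. The sketch also reflects the simplification of Figure 5: the top level has no intermediate top-down node, because such a node would have only one input.

```python
import numpy as np


def fuse(inputs, weights, eps=1e-4):
    """Fast normalized fusion (Equation (1)); weights stand in for learned scalars."""
    w = np.maximum(np.asarray(weights, dtype=np.float64), 0.0)
    return sum(wi * x for wi, x in zip(w, inputs)) / (eps + w.sum())


def upsample2x(x):
    """Nearest-neighbour upsampling by 2 (one possible Resize)."""
    return x.repeat(2, axis=0).repeat(2, axis=1)


def downsample2x(x):
    """2x2 max pooling (one possible Resize for the bottom-up path)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def conv(x):
    """Placeholder for Conv in Equations (2)-(3); identity in this sketch."""
    return x


def bifpn_layer(p3, p4, p5, w):
    """One bidirectional BiFPN pass; w maps hypothetical node names to weights."""
    # Top-down path, Equation (2). No P5 intermediate node (single input).
    p4_td = conv(fuse([p4, upsample2x(p5)], w["p4_td"]))
    # P3 is the lowest level, so its top-down node is already its output.
    p3_out = conv(fuse([p3, upsample2x(p4_td)], w["p3_out"]))
    # Bottom-up path, Equation (3); the p4 input edge is the same-level shortcut.
    p4_out = conv(fuse([p4, p4_td, downsample2x(p3_out)], w["p4_out"]))
    p5_out = conv(fuse([p5, downsample2x(p4_out)], w["p5_out"]))
    return p3_out, p4_out, p5_out


p3, p4, p5 = np.full((8, 8), 1.0), np.full((4, 4), 2.0), np.full((2, 2), 4.0)
weights = {"p4_td": [1, 1], "p3_out": [1, 1], "p4_out": [1, 1, 1], "p5_out": [1, 1]}
o3, o4, o5 = bifpn_layer(p3, p4, p5, weights)
print(o3.shape, o4.shape, o5.shape)  # (8, 8) (4, 4) (2, 2)
```

Stacking `bifpn_layer` several times, feeding each output triple into the next call, corresponds to the “repeated blocks” in Figure 5.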

3.2. The Loss Functions

The loss function of YOLOv8_BiFPN comprises multiple components, including classification loss and regression loss. Specifically, the regression loss encompasses two distinct loss functions.

Classification Loss. Binary Cross-Entropy (BCE) is employed as the classification loss in YOLOv8_BiFPN. BCE is computed as in Equation (4):

$$L_{BCE} = -\left[\, y \log p + (1 - y) \log (1 - p) \,\right] \qquad (4)$$

where $y$ is a binary label of 0 or 1, and $p$ denotes the predicted probability that the output belongs to the positive class ($y = 1$). In simple terms, when the label $y$ is 1, the loss tends toward 0 as the predicted value approaches 1; conversely, if the predicted value approaches 0 in this case, the loss becomes large.
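A direct transcription of Equation (4) for a single prediction follows, as a sketch. The clipping constant `eps` is an added assumption that prevents evaluating log(0); in a detector, this loss is typically applied independently to each class score.

```python
import math


def bce(y, p, eps=1e-7):
    """Binary cross-entropy of Equation (4) for a label y in {0, 1}
    and a predicted probability p (clipped away from 0 and 1)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


print(bce(1, 0.99))  # small loss: confident and correct
print(bce(1, 0.01))  # large loss: confident and wrong
```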

Regression Loss. The regression branch employs Distribution Focal Loss (DFL) and CIoU Loss. DFL is computed as in Equation (5):

$$\mathrm{DFL}(S_i, S_{i+1}) = -\left[ (y_{i+1} - y) \log S_i + (y - y_i) \log S_{i+1} \right] \qquad (5)$$

where $S_i$ and $S_{i+1}$ represent the probability predictions at the $i$-th and $(i+1)$-th distribution positions, $y$ is the ground-truth bounding box position, and $y_i$ and $y_{i+1}$ are the two discrete positions closest to $y$ ($y_i \leq y \leq y_{i+1}$). During training, DFL computes the loss between the predicted bounding box distribution and the label distribution, thereby optimizing each box boundary. The predicted distribution is then decoded into prediction boxes, and the CIoU loss is applied between the predicted boxes and the ground-truth boxes to optimize the boxes as a whole.
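The sketch below implements Equation (5) for one box edge, the expectation that turns a predicted distribution back into a position, and a CIoU loss for boxes in corner format. The function names, the (x1, y1, x2, y2) box format, and the `eps` constants are assumptions; the code follows the standard published definitions (CIoU = 1 − IoU + ρ²/c² + αv) rather than any particular library's implementation.

```python
import math
import numpy as np


def softmax(logits):
    z = np.asarray(logits, dtype=np.float64)
    e = np.exp(z - z.max())
    return e / e.sum()


def dfl(logits, y):
    """Equation (5) for one edge: logits over discrete positions 0..n-1,
    y a continuous target in [0, n-1)."""
    s = softmax(logits)
    y = min(max(y, 0.0), len(s) - 1 - 1e-6)  # keep y_{i+1} inside the grid
    i = int(math.floor(y))                   # y_i = i, y_{i+1} = i + 1
    return -((i + 1 - y) * math.log(s[i]) + (y - i) * math.log(s[i + 1]))


def expected_position(logits):
    """Decode a distribution into a position: sum_k k * S_k."""
    s = softmax(logits)
    return float(np.dot(np.arange(len(s)), s))


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph, gw, gh = px2 - px1, py2 - py1, gx2 - gx1, gy2 - gy1
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)
    # rho^2: squared distance between the two box centers
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4
    # c^2: squared diagonal of the smallest box enclosing both boxes
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2 + eps
    # v: aspect-ratio consistency term; alpha: its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


print(dfl([0.0, 5.0, 5.0, 0.0], 1.5))           # mass near y = 1.5: lower loss
print(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)))    # disjoint boxes: about 1.4
```

The center-distance term keeps the CIoU gradient informative even when the boxes do not overlap, where plain IoU would be constant at zero.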

4. Experiments

In this section, we conduct a series of experiments on the FOTL_Drone dataset to validate the advantages of the proposed YOLOv8_BiFPN model. Section 4.1 introduces the experimental environment and evaluation metrics. Section 4.2 explains the rationale for choosing YOLOv8_BiFPN. Section 4.3 compares our method with other methods on the FOTL_Drone dataset, and Section 4.4 presents discussions.

4.1. Experimental Settings and Evaluation Metrics

The experimental environment is detailed in Table 1. To facilitate comparison with other models, widely used evaluation metrics are adopted.

Table 1.

Running environment for experiments.

First, Intersection over Union (IoU) measures the overlap between a candidate bounding box $B_p$ and the ground-truth bounding box $B_{gt}$, as expressed in Equation (6):

$$\mathrm{IoU} = \frac{\left| B_p \cap B_{gt} \right|}{\left| B_p \cup B_{gt} \right|} \qquad (6)$$
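A small sketch of Equation (6) for axis-aligned boxes follows, assuming the (x1, y1, x2, y2) corner format. Under mAP@.50, a prediction of the correct class counts as a true positive when its IoU with a not-yet-matched ground-truth box is at least 0.5.

```python
def iou(box_a, box_b):
    """Equation (6): intersection area over union area of two boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if no overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / 7, about 0.143
```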

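Precision, Recall, Average Precision (AP), and mean Average Precision (mAP) are commonly used for statistical analysis and comparison:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (7)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (8)$$

$$AP = \int_{0}^{1} P(R)\, dR \qquad (9)$$

$$mAP = \frac{1}{n} \sum_{i=1}^{n} AP_i \qquad (10)$$

where $TP$, $FP$, and $FN$ represent the numbers of true positives, false positives, and false negatives, respectively, and $n$ is the number of classes. AP evaluates the accuracy of each class by combining Recall and Precision (the area under the class's precision-recall curve), and mAP, the mean of the per-class AP values, assesses the overall performance of a model. In this paper, the mAP metrics used are mAP@.50 (IoU threshold of 0.5) and mAP@.50:95 (mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05).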
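As a sketch of how AP and mAP are typically evaluated, the code below computes AP for one class from ranked detections using all-point interpolation of the precision-recall curve. Evaluation toolkits differ in this detail (COCO-style evaluation, for example, samples 101 recall points), so values may deviate slightly from those reported by a given framework. The detections are assumed to have been matched to ground truth already at a fixed IoU threshold, and the function names are illustrative.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """AP for one class. scores: detection confidences; is_tp: 1 if the
    detection matched a ground-truth box, else 0; num_gt: number of GT boxes."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Pad the curve, then replace precision with its non-increasing envelope.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall increases (area under P(R)).
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


def mean_ap(per_class_ap):
    """mAP: the mean of per-class AP values."""
    return float(np.mean(per_class_ap))


# Three detections for one class with two ground-truth boxes.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
print(ap)  # 5/6, about 0.833
```

For mAP@.50:95, the matching and AP computation would be repeated at IoU thresholds 0.50, 0.55, ..., 0.95 and the results averaged.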

4.2. Model Selection

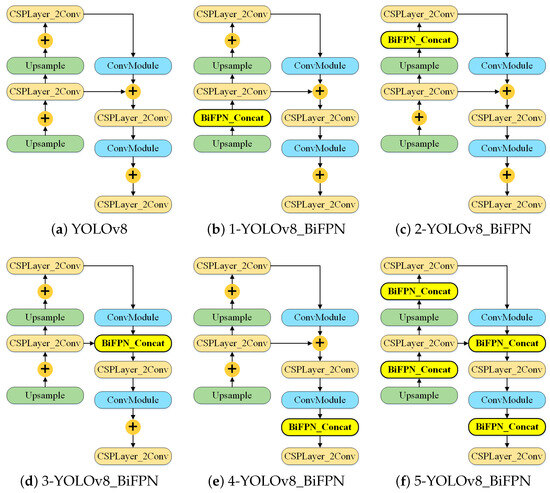

To enhance the performance of the baseline model and investigate the impact of the feature pyramid, we conducted a series of experiments with the BiFPN module embedded in different ways. The BiFPN module is inserted at five distinct positions within the YOLOv8 network. Figure 6 illustrates the detailed Neck structure of the improved YOLOv8 variants, denoted as 1-YOLOv8_BiFPN, 2-YOLOv8_BiFPN, 3-YOLOv8_BiFPN, 4-YOLOv8_BiFPN, and 5-YOLOv8_BiFPN according to their insertion points. The original YOLOv8 model and the five modified models were then evaluated on the FOTL_Drone dataset.

Figure 6.

Insertion of BiFPN at different positions of the neck structure of YOLOv8. (a) represents the original structure of YOLOv8 Neck, while (b–e) depict the integration of the BiFPN structure at four different locations. (f) represents the simultaneous integration of the BiFPN structure at the aforementioned four locations.

We divided the FOTL_Drone dataset into a training set and a test set at an 8:2 ratio and initialized training with pre-trained weights. The batch size is set to 4, and training runs for 80 epochs. All models are trained in the same running environment.
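The settings above imply the amount of training worked out below. The variable names are illustrative, and the sketch assumes a partial final batch is not dropped (the training split divides evenly by the batch size, so this makes no difference here). If training used the Ultralytics package, which the text does not state, these settings would correspond to the `epochs=80` and `batch=4` arguments of `model.train(...)`.

```python
import math

# Values stated in the text; the names are illustrative.
NUM_IMAGES = 1495
TRAIN_RATIO = 0.8
BATCH_SIZE = 4
EPOCHS = 80

num_train = round(NUM_IMAGES * TRAIN_RATIO)           # 1196 training images
num_test = NUM_IMAGES - num_train                      # 299 test images
iters_per_epoch = math.ceil(num_train / BATCH_SIZE)    # 299 iterations per epoch
total_iters = iters_per_epoch * EPOCHS                 # 23920 iterations in total
print(num_train, num_test, iters_per_epoch, total_iters)
```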

The detection performance of the aforementioned models on the FOTL_Drone dataset is shown in Table 2. Table 3 presents the average performance of the models across categories, together with the inference time of each model. The improved models show minimal differences in inference time compared with YOLOv8. In terms of precision, 4-YOLOv8_BiFPN shows a 1.5% decrease compared with YOLOv8, whereas the other improved models all increase precision. In terms of mAP, only 3-YOLOv8_BiFPN surpasses YOLOv8 in both mAP@.50 and mAP@.50:95. Overall, 3-YOLOv8_BiFPN outperforms all other models.

Table 2.

Detailed comparison of original YOLOv8 and five variants on FOTL_Drone dataset.

Table 3.

Average comparison of original YOLOv8 and five variants on FOTL_Drone dataset.

We analyze the results in Tables 2 and 3 as follows. Because drones capture images from various angles and distances, the size of foreign objects varies significantly, which requires the model to detect objects effectively at multiple scales. 1-YOLOv8_BiFPN introduces BiFPN early, into lower-level feature fusion, which results in information loss or excessive processing of features. 2-YOLOv8_BiFPN and 4-YOLOv8_BiFPN integrate BiFPN into the small-object and large-object detection heads, respectively. Detecting small objects requires high-resolution features, but BiFPN over-processes the low-level features and reduces detailed information. Large objects are usually detected with high-level features, and there BiFPN leads to inappropriate changes in the resolution of feature maps. 5-YOLOv8_BiFPN integrates BiFPN into all concatenation operations; however, the complex fusion mechanism across all levels may not suit the task. 3-YOLOv8_BiFPN integrates BiFPN into the medium-object detection head. Medium-level features provide a balance of resolution and abstraction, which is well suited to multi-scale feature fusion. As a result, this variant achieves superior performance.

To enhance the detection performance of the network comprehensively, we also explored other improvements to the YOLOv8 architecture, separately introducing Slim Neck [42], Coordinate Attention (CoordAtt) [43], and Revisiting Mobile CNN From ViT Perspective (RepViT) [44]. Table 4 compares 3-YOLOv8_BiFPN with these enhanced models across the six categories of the FOTL_Drone dataset. 3-YOLOv8_BiFPN achieves the leading mAP@.50 and mAP@.50:95 across all categories. Apart from YOLOv8_CoordAtt, the differences in inference time among the models are relatively small. Considering the overall performance of these models, 3-YOLOv8_BiFPN is selected as the final improved YOLOv8 network, shown in Figure 4.

Table 4.

Detailed comparison of YOLOv8_BiFPN and improved models on FOTL_Drone dataset.

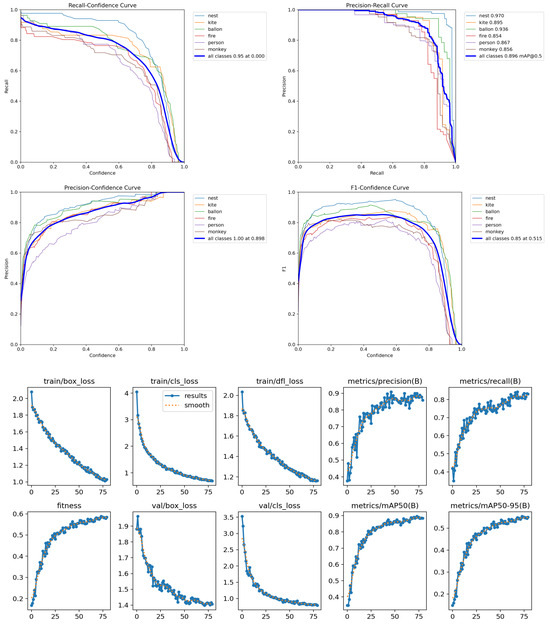

Figure 7 illustrates the key metric curves of the proposed YOLOv8_BiFPN model during training. The top four plots show curves of common metrics, such as precision, recall, and F1 score, as functions of the confidence threshold for all six types of foreign objects. The bottom six plots show how the loss values and evaluation metrics evolve as training progresses, illustrating the model's training dynamics.
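Confidence-threshold curves like those in the top row of Figure 7 can be produced, in principle, by sweeping a threshold over one class's detections. The sketch below assumes detections have already been matched to ground truth (the `is_tp` flags) and reports precision as 1.0 when no detection survives the threshold; both are illustrative conventions, not necessarily those of the tool used to plot Figure 7.

```python
import numpy as np


def pr_f1_curves(scores, is_tp, num_gt, thresholds):
    """Precision, recall and F1 for one class, keeping only detections
    whose confidence is at least each threshold."""
    scores = np.asarray(scores, dtype=float)
    is_tp = np.asarray(is_tp, dtype=bool)
    precision, recall, f1 = [], [], []
    for t in thresholds:
        keep = scores >= t
        tp = int(np.sum(is_tp & keep))
        fp = int(np.sum(~is_tp & keep))
        p = tp / (tp + fp) if tp + fp else 1.0  # convention: nothing kept
        r = tp / num_gt
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return np.array(precision), np.array(recall), np.array(f1)


thresholds = [0.75, 0.5]
p, r, f1 = pr_f1_curves([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2, thresholds=thresholds)
best = thresholds[int(np.argmax(f1))]  # threshold with the highest F1
print(f1, best)
```

Raising the threshold generally trades recall for precision; the peak of the F1 curve marks a balanced operating point.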

Figure 7.

Key metric curves of the proposed YOLOv8_BiFPN model during training.

4.3. Comparison on FOTL_Drone Dataset

(1) Quantitative Results: To validate the effectiveness of the YOLOv8_BiFPN model, we compared it with four end-to-end models: Gold_YOLO [45], YOLOv8, YOLOv7 [46], and RetinaNet [47]. All experiments were conducted in the same environment with default parameter settings.

Table 5 presents the comparative results on the FOTL_Drone dataset. YOLOv8_BiFPN achieves an mAP@.50 of 89.6%, surpassing the other models by more than 2%. Taking all metrics into consideration, our model outperforms the other end-to-end models in detecting foreign objects on transmission lines.

Table 5.

Quantitative results of various models on FOTL_Drone dataset.

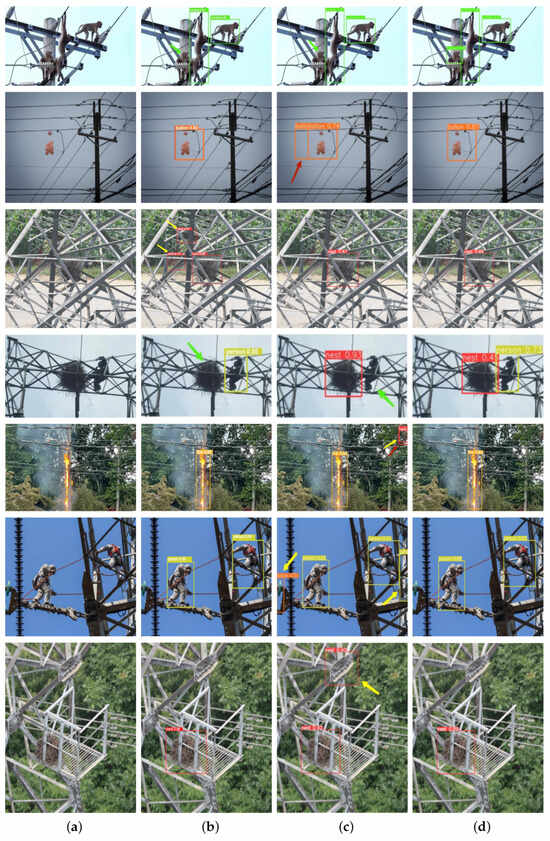

(2) Visual Results: For visual comparison, Figure 8 presents the output images of YOLOv7, YOLOv8, and YOLOv8_BiFPN. Each output image is annotated with the category and position of every detected target, along with the corresponding prediction probability.

Figure 8.

Visual comparison of YOLOv7, YOLOv8, and YOLOv8_BiFPN detection results. The yellow arrows point to false-positive boxes, the red arrows point to overlapping boxes, and the green arrows point to undetected objects. (a) Input images. (b) YOLOv7. (c) YOLOv8. (d) YOLOv8_BiFPN.

In Figure 8, different colors of predicted boxes represent different classes: green for monkey, orange for balloon, red for nest, dark yellowish-green for person, and brown-orange for fire. The arrow colors mark detection errors: yellow arrows point to false-positive boxes, red arrows to overlapping boxes, and green arrows to undetected objects. As Figure 8d shows, the proposed method effectively reduces these detection errors.

More detection results are shown in Figure 9. The number of targets and the scene complexity increase progressively from the first row to the last. Some objects appear dark, possibly because most images are captured with the sky as the background; for example, when the view is from bottom to top, foreign objects such as the nests and workers in the second row look dark. Occlusion is also challenging, because objects are easily occluded by transmission line infrastructure such as steel structures and lines. Fire is an important issue for the safety of transmission lines, and Figure 9 shows that our method detects it accurately, even when the fire is caused by a UAV.

Figure 9.

More visual results obtained by the proposed YOLOv8_BiFPN model.

In summary, the proposed method performs remarkably well on the FOTL_Drone dataset, especially in moderate and complex scenes, and outperforms the other end-to-end models in both efficacy and accuracy.

4.4. Discussions

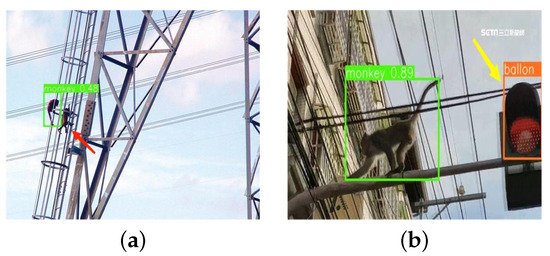

Although the proposed YOLOv8_BiFPN achieves high mAP in detecting foreign objects on transmission lines, some detection failures remain. Figure 10 illustrates two types of detection error: missed detection and false detection. In (a), the target indicated by the red arrow is relatively small and unclear, and it is missed. In (b), a traffic light, indicated by the yellow arrow, is falsely detected as a balloon because it closely resembles a red balloon. These failure cases show that there is still room to improve the detection of obscured and small targets.

Figure 10.

Some detection failures. The red arrows point to undetected objects, and the yellow arrows point to false positive boxes. (a) Missed detection. (b) False detection.

To analyze model performance on objects of different sizes, we divided the test set of the FOTL_Drone dataset by object size. Based on the proportion of each image occupied by objects, the 299 test images were divided into large-object (83 images), medium-object (110 images), and small-object (106 images) groups. These groups were tested on YOLOv8_BiFPN, and the results are presented in Table 6. The model performs well on large and medium objects, achieving mAP@.50 above 90%, but its performance drops significantly on small objects. Improving small object detection is therefore a crucial task.
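The text does not state the size thresholds used to group the test images. Purely as an illustration, the sketch below assigns an image to a group from the mean fraction of the image area covered by its boxes; the cut-offs of 1% and 10% and the function name are hypothetical, and the sketch is not claimed to reproduce the 83/110/106 split.

```python
def size_group(boxes_wh, img_w, img_h, small_max=0.01, medium_max=0.10):
    """Hypothetical rule: classify an image as 'small', 'medium' or 'large'
    from the mean box area as a fraction of the image area."""
    if not boxes_wh:
        raise ValueError("image has no annotated objects")
    mean_area = sum(w * h for w, h in boxes_wh) / len(boxes_wh)
    ratio = mean_area / (img_w * img_h)
    if ratio < small_max:
        return "small"
    if ratio < medium_max:
        return "medium"
    return "large"


print(size_group([(20, 20)], 640, 480))    # small (about 0.13% of the image)
print(size_group([(200, 200)], 640, 480))  # large (about 13% of the image)
```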

Table 6.

The performance of YOLOv8_BiFPN for different sizes of objects.

In the future, we aim to enhance the detector in two ways: (1) collecting more drone-view images of foreign objects on transmission lines to further enrich the FOTL_Drone dataset; and (2) incorporating contextual information to improve detection accuracy.

5. Conclusions

In this paper, we proposed a fast and efficient end-to-end detector for foreign objects on transmission lines, aiming to help ensure the stability of the power grid. The proposed feature fusion model, termed YOLOv8_BiFPN, integrates a weighted bidirectional feature pyramid into YOLOv8. Moreover, we have created the FOTL_Drone dataset, comprising 1495 annotated images of foreign objects. To our knowledge, this is the first comprehensive dataset for foreign object detection on transmission lines. Experimental results on the FOTL_Drone dataset demonstrate an overall mAP@.50 of 89.6% for our approach. In the future, we will investigate ways to improve detection accuracy for obscured and small targets on transmission lines. We hope our work contributes to the development of more intelligent foreign object detection systems for power transmission lines in both academic and engineering domains.

Author Contributions

Conceptualization, B.W. and C.L.; methodology, C.L.; validation, B.W., C.L. and W.Z.; formal analysis, W.Z.; investigation, Q.Z.; resources, Q.Z.; data curation, C.L.; writing—original draft preparation, C.L.; writing—review and editing, B.W. and Q.Z.; visualization, B.W. and C.L.; supervision, B.W.; project administration, W.Z.; funding acquisition, W.Z. and Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Basic Research Programs of Taicang, 2023, under grant number TC2023JC23, and the Guangdong Provincial Key Laboratory, under grant number 2023B1212060076.

Data Availability Statement

The FOTL_Drone dataset is available at https://github.com/Changping-Li/FOTL_Drone (accessed on 20 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| BiFPN | Bidirectional Feature Pyramid Network |
| FOTL_Drone | Foreign Object detection on Transmission Lines from a Drone-view |

References

- Sharma, P.; Saurav, S.; Singh, S. Object detection in power line infrastructure: A review of the challenges and solutions. Eng. Appl. Artif. Intell. 2024, 130, 107781.

- Feng, L.; Zhang, L.; Gao, Z.; Zhou, R.; Li, L. Gabor-YOLONet: A lightweight and efficient detection network for low-voltage power lines from unmanned aerial vehicle images. Front. Energy Res. 2023, 10, 960842.

- Liao, J.; Xu, H.; Fang, X.; Miao, Q.; Zhu, G. Quantitative assessment framework for non-structural bird’s nest risk information of transmission tower in high-resolution UAV images. IEEE Trans. Instrum. Meas. 2023, 72, 5013712.

- Song, Z.; Huang, X.; Ji, C.; Zhang, Y. Deformable YOLOX: Detection and rust warning method of transmission line connection fittings based on image processing technology. IEEE Trans. Instrum. Meas. 2023, 72, 1–21.

- Souza, B.J.; Stefenon, S.F.; Singh, G.; Freire, R.Z. Hybrid-YOLO for classification of insulators defects in transmission lines based on UAV. Int. J. Electr. Power Energy Syst. 2023, 148, 108982.

- Boukabou, I.; Kaabouch, N. Electric and magnetic fields analysis of the safety distance for UAV inspection around extra-high voltage transmission lines. Drones 2024, 8, 47.

- Liu, B.; Huang, J.; Lin, S.; Yang, Y.; Qi, Y. Improved YOLOX-S abnormal condition detection for power transmission line corridors. In Proceedings of the 2021 IEEE 3rd International Conference on Power Data Science (ICPDS), IEEE, Harbin, China, 26 December 2021; pp. 13–16.

- Wu, K.; Chen, Y.; Lu, Y.; Yang, Z.; Yuan, J.; Zheng, E. SOD-YOLO: A high-precision detection of small targets on high-voltage transmission lines. Electronics 2024, 13, 1371.

- Li, H.; Dong, Y.; Liu, Y.; Ai, J. Design and implementation of UAVs for bird’s nest inspection on transmission lines based on deep learning. Drones 2022, 6, 252.

- Bian, H.; Zhang, J.; Li, R.; Zhao, H.; Wang, X.; Bai, Y. Risk analysis of tripping accidents of power grid caused by typical natural hazards based on FTA-BN model. Nat. Hazards 2021, 106, 1771–1795.

- Kovács, B.; Vörös, F.; Vas, T.; Károly, K.; Gajdos, M.; Varga, Z. Safety and security-specific application of multiple drone sensors at movement areas of an aerodrome. Drones 2024, 8, 231.

- Yu, H.; Zhang, K.; Zhao, X.; Zhang, Y.; Cui, B.; Sun, S.; Liu, G.; Yu, B.; Ma, C.; Liu, Y.; et al. Research on data link channel decoding optimization scheme for drone power inspection scenarios. Drones 2023, 7, 662.

- Tiantian, Y.; Guodong, Y.; Junzhi, Y. Feature fusion based insulator detection for aerial inspection. In Proceedings of the 2017 36th Chinese Control Conference (CCC), IEEE, Dalian, China, 26–28 July 2017; pp. 10972–10977.

- Song, Z.; Xin, S.; Gui, X.; Qi, G. Power line recognition and foreign objects detection based on image processing. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), IEEE, Kunming, China, 22–24 May 2021; pp. 6689–6693.

- He, M.; Qin, L.; Deng, X.; Zhou, S.; Liu, H.; Liu, K. Transmission line segmentation solutions for UAV aerial photography based on improved UNet. Drones 2023, 7, 274.

- Zhang, Z.; Zhang, L.; Wang, Y.; Feng, P.; Sun, B. Bidirectional parallel feature pyramid network for object detection. IEEE Access 2022, 10, 49422–49432.

- Mo, Y.; Wang, L.; Hong, W.; Chu, C.; Li, P.; Xia, H. Small-scale foreign object debris detection using deep learning and dual light modes. Appl. Sci. 2024, 14, 2162.

- Han, G.; Wang, R.; Yuan, Q.; Zhao, L.; Li, S.; Zhang, M.; He, M.; Qin, L. Typical fault detection on drone images of transmission lines based on lightweight structure and feature-balanced network. Drones 2023, 7, 638.

- Chen, M.; Li, J.; Pan, J.; Ji, C.; Ma, W. Insulator extraction from UAV lidar point cloud based on multi-type and multi-scale feature histogram. Drones 2024, 8, 241.

- Zhao, X.; Ma, Y.; Wang, D.; Shen, Y.; Qiao, Y.; Liu, X. Revisiting open world object detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 3496–3509.

- Aminifar, F.; Rahmatian, F. Unmanned aerial vehicles in modern power systems: Technologies, use cases, outlooks, and challenges. IEEE Electrif. Mag. 2020, 8, 107–116.

- Li, X.; Li, Z.; Wang, H.; Li, W. Unmanned aerial vehicle for transmission line inspection: Status, standardization, and perspectives. Front. Energy Res. 2021, 9, 713634.

- Kim, S.; Kim, D.; Jeong, S.; Ham, J.W.; Lee, J.K.; Oh, K.Y. Fault diagnosis of power transmission lines using a UAV-mounted smart inspection system. IEEE Access 2020, 8, 149999–150009.

- Zhang, W.; Liu, X.; Yuan, J.; Xu, L.; Sun, H.; Zhou, J.; Liu, X. RCNN-based foreign object detection for securing power transmission lines. Procedia Comput. Sci. 2019, 147, 331–337.

- Tang, C.; Dong, H.; Huang, Y.; Han, T.; Fang, M.; Fu, J. Foreign object detection for transmission lines based on Swin Transformer v2 and YOLOX. Vis. Comput. 2023, 40, 3003–3021.

- Zhu, J.; Guo, Y.; Yue, F.; Yuan, H.; Yang, A.; Wang, X.; Rong, M. A deep learning method to detect foreign objects for inspecting power transmission lines. IEEE Access 2020, 8, 94065–94075.

- Li, H.; Liu, L.; Du, J.; Jiang, F.; Guo, F.; Hu, Q.; Fan, L. An improved YOLOv3 for foreign objects detection of transmission lines. IEEE Access 2022, 10, 45620–45628.

- Wu, M.; Guo, L.; Chen, R.; Du, W.; Wang, J.; Liu, M.; Kong, X.; Tang, J. Improved YOLOX foreign object detection algorithm for transmission lines. Wirel. Commun. Mob. Comput. 2022, 2022, 835693.

- Wang, Z.; Yuan, G.; Zhou, H.; Ma, Y.; Ma, Y. Foreign-object detection in high-voltage transmission line based on improved YOLOv8m. Appl. Sci. 2023, 13, 12775.

- Ji, C.; Chen, G.; Huang, X.; Jia, X.; Zhang, F.; Song, Z.; Zhu, Y. Enhancing transmission line safety: Real-time detection of foreign objects using MFMAM-YOLO algorithm. IEEE Instrum. Meas. Mag. 2024, 27, 13–21.

- Yang, S.; Zhou, Y. Abnormal object detection with an improved YOLOv8 in the transmission lines. In Proceedings of the 2023 China Automation Congress (CAC), IEEE, Chongqing, China, 17–19 November 2023; pp. 9269–9273.

- Guo, S.; Bai, Q.; Zhou, X. Foreign object detection of transmission lines based on Faster R-CNN. In Information Science and Applications: ICISA 2019; Springer: Singapore, 2020; pp. 269–275.

- Chen, W.; Li, Y.; Li, C. A visual detection method for foreign objects in power lines based on Mask R-CNN. Int. J. Ambient Comput. Intell. (IJACI) 2020, 11, 34–47.

- Dong, C.; Zhang, K.; Xie, Z.; Shi, C. An improved cascade RCNN detection method for key components and defects of transmission lines. IET Gener. Transm. Distrib. 2023, 17, 4277–4292.

- Ge, Z.; Li, H.; Yang, R.; Liu, H.; Pei, S.; Jia, Z.; Ma, Z. Bird’s nest detection algorithm for transmission lines based on deep learning. In Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA), IEEE, Changchun, China, 20–22 May 2022; pp. 417–420.

- Bi, Z.; Jing, L.; Sun, C.; Shan, M. YOLOX++ for transmission line abnormal target detection. IEEE Access 2023, 11, 38157–38167.

- Ning, W.; Mu, X.; Zhang, C.; Dai, T.; Qian, S.; Sun, X. Object detection and danger warning of transmission channel based on improved YOLO network. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), IEEE, Chongqing, China, 12–14 June 2020; Volume 1, pp. 1089–1093.

- Zhang, J.; Wang, J.; Zhang, S. An ultra-lightweight and ultra-fast abnormal target identification network for transmission line. IEEE Sens. J. 2021, 21, 23325–23334.

- Yu, C.; Liu, Y.; Zhang, W.; Zhang, X.; Zhang, Y.; Jiang, X. Foreign objects identification of transmission line based on improved YOLOv7. IEEE Access 2023, 11, 51997–52008.

- Li, M.; Ding, L. DF-YOLO: Highly accurate transmission line foreign object detection algorithm. IEEE Access 2023, 11, 108398–108406.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Wang, A.; Chen, H.; Lin, Z.; Pu, H.; Ding, G. RepViT: Revisiting mobile CNN from ViT perspective. arXiv 2023, arXiv:2307.09283.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2024, 36.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).