Abstract

Researchers are actively pursuing advancements in convolutional neural networks and their application in anti-drone systems for drone classification tasks. Our study investigates the hypothesis that the accuracy of drone classification in the radio frequency domain can be enhanced through a hybrid approach. Specifically, we aim to combine fuzzy logic for edge detection in images (the spectrograms of drone radio signals) with convolutional and convolutional recurrent neural networks for classification tasks. The proposed FLEDNet approach introduces a tailored engineering strategy designed to tackle classification challenges in the radio frequency domain, particularly concerning drone detection, the identification of drone types, and multiple drone detection, even within varying signal-to-noise ratios. The strength of this tailored approach lies in implementing a straightforward edge detection method based on fuzzy logic and simple convolutional and convolutional recurrent neural networks. The effectiveness of this approach is validated using the publicly available VTI_DroneSET dataset across two different frequency bands and confirmed through practical inference on the embedded computer NVIDIA Jetson Orin NX with radio frequency receiver USRP-2954. Compared to other approaches, FLEDNet demonstrated a 4.87% increase in accuracy for drone detection, a 13.41% enhancement in drone-type identification, and a 7.26% rise in detecting multiple drones. This enhancement was achieved by integrating straightforward fuzzy logic-based edge detection methods and neural networks, which led to improved accuracy and a reduction in false alarms of the proposed approach, with potential applications in real-world anti-drone systems. The FLEDNet approach contrasts with other research efforts that have employed more complex image processing methodologies alongside sophisticated classification models.

1. Introduction

Unmanned aerial vehicles (UAVs) have spread widely across different applications and are constantly improving and expanding because of their affordability, persistent technological improvements, and ease of use (no complex training is needed). In most cases, UAVs or drones are used for aerial surveillance and observation [1,2,3], agricultural applications [4,5], construction [6], small package delivery [7], and search and rescue (SAR) procedures [8,9]. Despite the significant advancements and various beneficial applications associated with drones, these technological innovations also present certain risks, as explained in [10,11,12]. The authors in [13,14] showed that drone advantages could be exploited for malicious endeavors, including the misuse of drones to violate privacy, compromise security, and conduct attacks on both civilian and military infrastructures.

This dual-use characteristic of drone technology underscores the necessity for effective countermeasures to mitigate the potential dangers of their misuse. To prevent these occurrences, many countries have imposed stricter conditions on the safe use of drones, their classification, recording and maintenance, and the conditions their drone operators must meet. However, without an adequate system of controls and sanctions, it is insufficient to rely on enacted laws and regulations in the hope that everyone will comply. The authors in [15] emphasize that safety and security technologies must be incorporated into drones themselves (collision avoidance, prevention from entering controlled airspace, and built-in tests for self-diagnostics). Moreover, the decisive solution for such a purpose is the implementation of anti-drone (ADRO) systems to protect security-sensitive facilities and areas from malicious drones.

The basic requirements for modern ADRO systems are presented in [16,17,18] and involve the classification (detection and identification) and neutralization (control, disruption, or physical destruction) of malicious drones. To successfully detect drones, which are particularly challenging targets (high-speed and agile), modern ADRO systems rely on various sensors such as radars, cameras, radio frequency (RF) receivers, and audio microphones [19,20]. Radars and RF receivers are the most widely used sensors for primary detection in modern ADRO systems. In contrast, other sensors or means are used to find closer associations, e.g., to confirm primary detection and identification [21].

This research focuses on enhancing the detection and identification of drones within the RF domain by utilizing a combination of a fuzzy logic-based edge detector (E/D) and convolutional neural networks (CNNs), along with convolutional recurrent neural networks (CRNN). The input for these deep learning (DL) models consists of spectrograms derived from radio signals in the 2.4 GHz and 5.8 GHz Industrial, Scientific, and Medical (ISM) frequency bands and improved with a simple fuzzy logic-based E/D. Additionally, the study explores the potential for improving the detection of multiple drones operating simultaneously (two or three drones) within the same ISM bands. It should be emphasized that the primary focus of this research was to evaluate the influence of image processing techniques on the accuracy of DL models. AlexNet [22], one of the most famous CNN models, as well as our simplified CNN (which contains only three convolution layers) and CRNN (which includes only one convolution and one recurrent layer) models, are used for this purpose. In particular, the effects and dependencies of E/D methods applied to spectrograms of drone radio signals by fuzzy logic were studied. The VTI_DroneSET dataset of radio signals emitted from drones was utilized for the experiments and the testing of our hypothesis. This dataset was created at the Military Technical Institute [23] and tested over numerous DL models. Moreover, additive white Gaussian noise (AWGN) was intentionally added to the original radio signals from the VTI_DroneSET dataset, considering that the signal-to-noise ratio (SNR) influences the accuracy of the CNN and CRNN models. This procedure was deliberately introduced to enable a comparison with the results derived with DL and machine learning (ML) presented in [24,25,26,27,28,29,30,31].
Moreover, the results obtained by applying fuzzy logic-based E/D to spectrograms of drone radio signals were compared with classical (traditional) pixel-level E/D and state-of-the-art DL E/D.

The proposed FLEDNet (Fuzzy Logic Edge Detection Network) approach offers significant advantages by utilizing straightforward fuzzy logic within an image processing method, resulting in enhanced accuracy for CNN and CRNN models. Moreover, the proposed approach is characterized by its minimal computational power requirements and reduced time complexity for operation. This was confirmed through the practical inference of the FLEDNet approach on the embedded computer NVIDIA Jetson Orin NX with RF receiver USRP-2954 in outdoor conditions. Furthermore, it has been shown that the proposed approach has very stable characteristics across different SNRs in the presence of AWGN. The disadvantage of the proposed fuzzy logic-based E/D is that it is tailored for specific spectrograms of drone radio signals, relying on prior knowledge of drone RF communications. The main contributions of this work are as follows:

- The improvement of the classification accuracy in CNN and CRNN models with fuzzy logic-based E/D;

- A classification accuracy comparison between CNN and CRNN models for different SNRs;

- A comparison of classification accuracy between traditional and DL-based E/D;

- An examination of the classification accuracy in both ISM frequency bands;

- The testing of the possibility of detecting multiple drones operating at the same time;

- A time complexity analysis of the proposed approach performed on the embedded computer with real-world radio signals from drones.

2. Literature

2.1. Fuzzy Logic-Based Edge Detectors

Various E/D methods used in image processing are extensively discussed in the literature for enhancing images, focusing on noise reduction, contrast, and image intensity adjustment. According to the authors in [32], these methods can be broadly categorized into three main domains—spatial, frequency, and wavelet. In the first category, spatial methods rely on applying gradient operations, or derivatives, directly to the image pixels to identify edges. In contrast, frequency methods involve transforming the image into the frequency domain, where specific operations can detect and locate edges more effectively. Lastly, wavelet methods convert the image into multiple sub-banded frequency levels. This approach not only overcomes limitations associated with frequency ranges, but also enhances resilience to noise, making it a powerful E/D.

A key advantage of employing a fuzzy logic approach in detecting edges is its inherent flexibility; the fuzzy decision threshold can be established, allowing for customization that transcends rigid decision rules. In our research, we chose not to delve into the potential of detecting edges in image processing. Instead, we concentrated on improving the classification accuracy of CNN and CRNN models for drone classification in the RF domain by integrating fuzzy logic-based methods for detecting edges. To the best of our knowledge, no studies or analyses examine the impact of fuzzy logic-based E/D on the accuracy of DL models used for drone-related classification tasks.

This gap in research can be attributed to the evolution of image processing, which has transitioned from traditional techniques to fuzzy logic and, more recently, to ML- and DL-based approaches. This gap is also due to the development of DL models, which can effectively address various problems without requiring extensive feature extraction. However, it is essential to highlight that detecting edges is a foundational step in many computer vision applications. Its primary objective is to filter out unwanted or redundant information, which helps reduce data volume while retaining essential details necessary for object detection in image processing. The same benefit applies when CNN models are used in drone classification tasks. Enhancing the quality of input data during the learning process, as presented in [26,27,28,29], leads to significant improvements in the predictive performance of CNN models.

Compared to conventional E/D, detecting edges with fuzzy logic represents an improvement. Fuzzy logic is not logic that is fuzzy, but the logic that is used to describe fuzziness. This fuzziness is best characterized by its fuzzy set membership function (FSMF), first introduced in [33]. The fuzzy logic system accepts a feature vector or fuzzy truth for features belonging to different FSMFs, while there is a fuzzy output truth for given FSMFs in various classes. In other words, a fuzzy logic system (or fuzzy inference system, FIS) addresses how likely a pixel is to belong to an edge or region of uniform intensity.

The application of fuzzy logic in image processing has been well-investigated over the past decade, but it still attracts attention in particular research and applications. A problem related to detecting edges in digital images was presented in [34], with the conclusion that fuzzy logic is one of the most effective E/D. The authors of this research introduced an optimized rule-based FIS that was compared with traditional E/D (Binary filter, Sobel filter, Prewitt filter, and Roberts filter).

The authors in [35,36] studied type-2 fuzzy models to improve their performance in detecting edges. They evaluated an approach to detect edges in digital images using horizontal and vertical gradient vectors from the original image. These vectors were used as inputs for the proposed FIS based on the following: the Gaussian and triangular FSMFs in [35], the sharpening-guided FSMF used to control edge quality, and a Gaussian FSMF to reduce the noise created by the sharpening in [36]. However, the authors in [37] pointed out the disadvantage of type-2 fuzzy logic related to the demands for higher computational power in parallel. The bounded variation of the smooth FIS was introduced in [36] to cope with non-continuous derivatives of such fuzzy models. Such an FIS with smooth compositions can be classified as a type-1 fuzzy model and used for the E/D of digital images. A particular application of fuzzy logic was introduced in [38], where a contour detection-based image-processing algorithm based on type-2 fuzzy rules and DL application is presented for blood vessel segmentation. Moreover, several state-of-the-art techniques, including a fuzzy-based approach, were evaluated in [39] to enhance steganography performance. Similarly, a robust edge detection algorithm in [40] was used to improve traditional Canny E/D with fuzzy logic as a phase of image processing.

It can be concluded that fuzzy logic is attractive for detecting edges in image processing. Moreover, some conventional (traditional) E/D like Canny [41], Kapur [42], and Otsu [43] can also be found in related studies on detecting edges. The authors in [36] compared E/D, including traditional (Sobel and Canny), with fuzzy logic-based E/D. Additionally, the DL-based E/D in [44,45,46,47,48] and transformer-based E/D in [49,50,51] are exceptional cases of detecting edges in image processing that benefit CNN models and vision transformers with attention.

Additionally, fuzzy logic is used with DL models as the hybrid approach for solving different problems regarding drones and autonomous vehicles. The authors in [52] merged reinforcement learning and fuzzy logic to cope with multiple UAV targets, mentioning that this approach can be used for safe UAV autonomous navigation under demanding airspace conditions. Similarly, the authors in [53] introduced a new hybrid controller with a DL model and fuzzy logic for motion planning and obstacle avoidance. The authors in [54] proposed the computer vision algorithm and fuzzy logic control for a lane-keeping assist system as a method of steering control for automated guided vehicles. However, to the best of our knowledge, none of the publicly accessible research papers address the issue of drone classification within the RF domain by utilizing a hybrid approach that integrates fuzzy logic with DL models.

2.2. Neural Networks for Drone Classification

Using neural networks depends primarily on well-prepared input data, which can be presented as tensors. Since the topic of this work is drone detection and identification in the RF domain, there are two options for input. The first is using a spectrum of radio signals in the form of 2D tensors (stored as matrices), and the second is using spectrograms in the form of 3D tensors (time-frequency representations of radio signals stored as images). The authors in [55,56,57,58] calculated a spectrum of radio signals to detect and identify drones using fully connected deep neural networks (FC-DNN) or 1D-CNN models. Furthermore, the authors in [24,25,27,29,59,60,61,62,63] used spectrograms with CNN and CRNN models. By performing convolutional operations, CNN and CRNN models can distinguish features and learn to recognize different shapes. Table 1 shows the literature studies that evaluated the influence of AWGN on ML and DL model accuracy (ACC) for drone classification tasks.

Table 1.

Review of the publicly available results from the literature.

The studies in Table 1 investigated the influence of AWGN with different SNR levels on the CNN model accuracy for detecting multiple drones and identifying the drone type. It is essential to highlight the primary limitations of the publicly available studies. Firstly, all studies evaluated scenarios for drone identification, specifically within the 2.4 GHz ISM band. Secondly, only the authors in [24,25] and FLEDNet provided results on detecting multiple drones in an AWGN environment across various SNR levels. Furthermore, publicly available studies do not offer insights into time complexity and hardware resource requirements. Ultimately, the methodologies proposed in these studies necessitate complex preprocessing procedures and the deployment of sophisticated DL models. These models are characterized by numerous parameters and require significant hardware resources.

The authors in [24,25] evaluated the effect of the SNR in the range of 10 to −30 dB on the accuracy of various models (CNN and YOLO Lite introduced in [64]) for drone-type identification. They engaged the energy detector with the Anderson–Darling Goodness-of-Fit (GoF) test for the preprocessing phase. They artificially created a sum of multiple signals together with AWGN with different SNR levels to evaluate the performances of the mentioned models. Furthermore, the authors in [26] examined the impacts of SNR levels ranging from 0 to 25 dB on the performance of ML models, including k-nearest neighbors (kNN), random forest (RandF), and Support Vector Machine (SVM), specifically for drone classification tasks (drone-type identification). They used three-stage wavelet decomposition with two-level discrete filters for the Haar wavelet transform in multiresolution analysis. Authors in [27] studied the accuracy of using the CNN model for time series and spectrograms as inputs while engaging the denoising preprocessing phase. They used a truncation process to establish the lower limit of the density color scales on spectrograms. This enhances the representation of high-density signal components in the spectrogram image, thereby improving model accuracy. Research in [29] evaluated the application of a simple threshold to a drone signal with the same effect as filtering performed in the denoising process. Such filtered drone signals were used to calculate power-based spectrograms and engaged with a CNN model for the drone controller signal classification. Authors in [30] employed a stacked denoising autoencoder (SDAE) for prior drone detection. Following the detection of drones, they utilized the Hilbert–Huang transform (HHT) and wavelet packet transforms to extract features. These features were then applied to a three-level hierarchical classifier (drone-type identification), which leverages extreme gradient boosting classifiers (XGBoost) as the learning algorithm. 
Next, the authors in [31] evaluated various Visual Geometry Group (VGG) CNN architectures introduced in [65], with small convolutional filters (3 × 3) but with deep architecture, while reducing the number of parameters. The authors in [62] evaluated the use of a multiscale CNN model for drone detection and identification tasks when AWGN is applied to RF signals from the CardRF dataset, achieving an average accuracy of 97.53%. Finally, an example of the usage of a state-of-the-art DL model like the visual transformer (ViT) was presented in [63], as a hybrid technique named SignalFormer with a CNN-based tokenizer method for drone RF signal detection and identification. Consistent with the findings outlined in Table 1, SignalFormer has proven its ability to operate effectively in noisy environments.

3. Materials and Methods

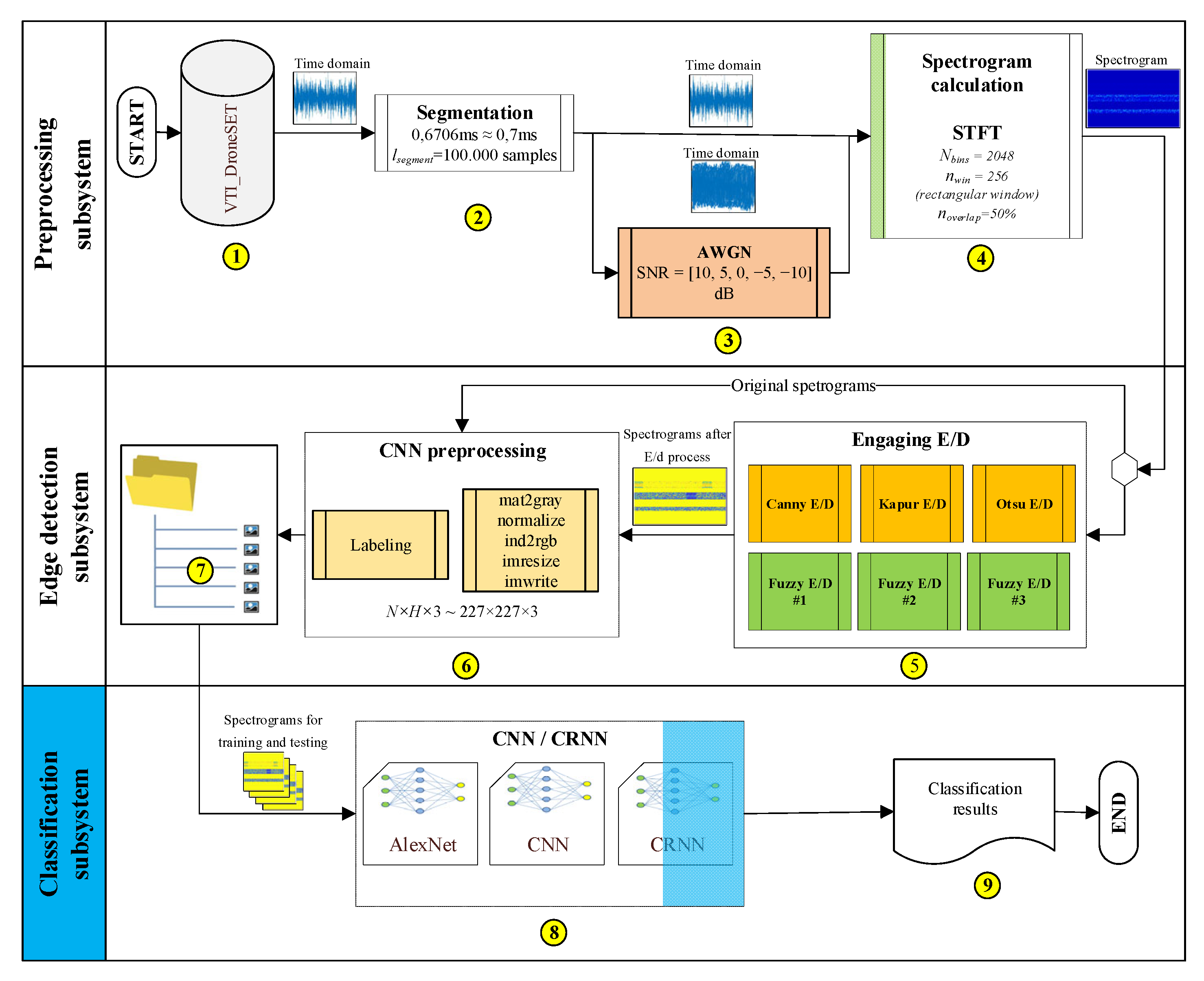

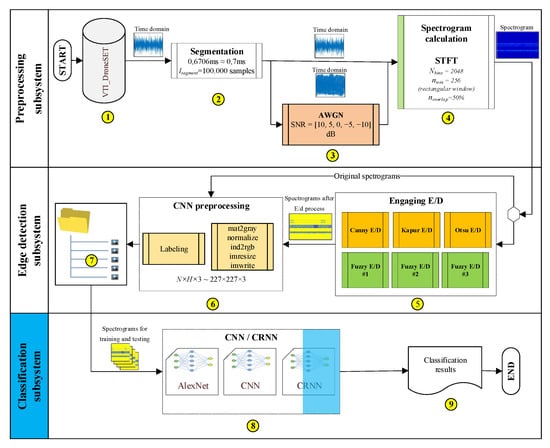

This section presents the system model (methodology) with its subsystems—preprocessing, the E/D, and the classification subsystems. The system model is presented in Figure 1.

Figure 1.

System model: (1) VTI_DroneSET dataset, (2) segmentation of radio signals, (3) adding AWGN, (4) spectrograms calculation, (5) engaging E/D, (6) image resizing, normalization, saving, and labeling, (7) creation of MatLab image datastore objects, (8) CNN and CRNN models, and (9) classification results.

3.1. Preprocessing Subsystem

3.1.1. VTI_DroneSET Dataset

The preprocessing subsystem is employed for loading radio signals, segmentation, and spectrogram calculation. The loading of radio signals or raw IQ data is performed from the VTI_DroneSET dataset (Supplementary Information explains the generation and characteristics of the VTI_DroneSET dataset and the time-frequency representation of drone signals in the RF domain) presented in [23,66]. This dataset was created indoors using three commercial drones (DJI Phantom IV, DJI Mavic 2 Zoom, and DJI Mavic 2 Enterprise with ground control stations). Three different experiments were recorded. In the first experiment, drones were recorded while they were working independently. In the second experiment, two drones were working at the same time. In the third experiment, all three drones were working simultaneously. Moreover, all experiments were conducted in both ISM frequency bands (2.4 and 5.8 GHz).

3.1.2. Segmentation of Signals

The loaded radio signals are in the time domain, with a sampling frequency of 100 MHz and a duration of 450 ms. Straightforward time domain segmentation is performed on loaded radio signals to achieve data augmentation. Segmentation is executed by splitting the whole radio signal into smaller segments of the same length. The segment length was deliberately chosen to be 100,000 samples, as in the studies in [55,58]. For each radio signal, the preprocessing subsystem produced 670 segments in total. Moreover, this segmentation parameter was chosen to create more realistic conditions because it reduces the decision-making time necessary for classification. It is worth mentioning that the authors in [61] used longer signal segments and, as expected, achieved better results.
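The segmentation step can be illustrated with a minimal sketch. The function name `segment_signal` is hypothetical, and the sketch assumes consecutive, non-overlapping segments with any incomplete tail discarded; the text does not state whether the segments in the study overlap.

```python
from typing import List, Sequence


def segment_signal(samples: Sequence[complex], segment_length: int) -> List[Sequence[complex]]:
    """Split a signal into consecutive, non-overlapping segments of equal length.

    Trailing samples that do not fill a whole segment are discarded
    (an assumption of this sketch, not a detail taken from the study).
    """
    if segment_length <= 0:
        raise ValueError("segment_length must be positive")
    n_segments = len(samples) // segment_length
    return [
        samples[i * segment_length:(i + 1) * segment_length]
        for i in range(n_segments)
    ]


# Toy example: 10 complex (IQ-like) samples cut into segments of 4 samples.
toy_signal = [complex(n, -n) for n in range(10)]
toy_segments = segment_signal(toy_signal, 4)
```

In the study the segment length would be 100,000 samples; the toy values above only keep the example small.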

3.1.3. Adding AWGN

The next step involves adding AWGN to the radio signal segments to investigate the influence of the SNR on the classification accuracy of the proposed FLEDNet approach. The original radio signal was recorded in indoor conditions with SNR = 20 dB, while AWGN was intentionally added, taking care to ensure that the SNR is in the range of 10 to −10 dB in steps of 5 dB. For each segment of the radio signal, the preprocessing subsystem generates the original (SNR = 20 dB) and five noisy copies (SNR = 10 dB, 5 dB, 0 dB, −5 dB, −10 dB), six in total. Similar noise-adding operations are presented in [24,25,26,27,28,29], where the authors apply AWGN at different SNRs to evaluate the accuracy of DL algorithms.
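As a rough illustration of this step, the sketch below adds complex white Gaussian noise to a segment at a requested SNR. It is not the study's implementation: the function names are hypothetical, the recorded segment is treated as the noise-free reference (the text does not say how the existing 20 dB noise floor was accounted for), and a synthetic tone stands in for a drone signal.

```python
import cmath
import math
import random
from typing import List


def add_awgn(segment: List[complex], snr_db: float, rng: random.Random) -> List[complex]:
    """Return a noisy copy of `segment` with complex AWGN at the requested SNR (dB)."""
    signal_power = sum(abs(s) ** 2 for s in segment) / len(segment)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    # The noise power is split equally between the real and imaginary parts.
    sigma = math.sqrt(noise_power / 2.0)
    return [s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for s in segment]


def measured_snr_db(clean: List[complex], noisy: List[complex]) -> float:
    """Estimate the SNR (dB) from a clean reference and its noisy copy."""
    signal_power = sum(abs(s) ** 2 for s in clean) / len(clean)
    noise_power = sum(abs(n - s) ** 2 for s, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)


rng = random.Random(0)  # fixed seed so the example is repeatable
clean = [cmath.exp(2j * math.pi * 0.05 * n) for n in range(20000)]  # synthetic tone
noisy_copies = {snr: add_awgn(clean, snr, rng) for snr in (10, 5, 0, -5, -10)}
```

Each entry of `noisy_copies` corresponds to one of the five noisy versions listed above; together with the untouched original this gives six versions per segment.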

3.1.4. Calculation of Spectrograms

The last step in the preprocessing subsystem is spectrogram calculation. The spectrogram is obtained with the Short-Time Fourier Transform (STFT) with the following parameters: the number of frequency bins Nbins = 2048, a rectangular window function with a length of nwin = 256 samples, and noverlap = 50% overlap. The authors in [67] showed that this combination provides the best energy concentration for radio signals. As output, the preprocessing subsystem provides six spectrograms for each radio signal segment, which gives 6⋅670 = 4020 spectrograms.
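The STFT with a rectangular window can be sketched in a few lines. This is an illustrative, deliberately naive version (a direct DFT rather than an FFT routine), with a hypothetical function name and toy parameters (nwin = 16, 50% overlap, 32 bins) chosen so that it runs quickly; the study's parameters are Nbins = 2048 and nwin = 256.

```python
import cmath
import math
from typing import List, Sequence


def stft_magnitude(x: Sequence[complex], n_win: int, n_overlap: int, n_fft: int) -> List[List[float]]:
    """Magnitude STFT with a rectangular window.

    Rows are frequency bins (n_fft of them), columns are time frames.
    Each frame is zero-padded from n_win to n_fft samples.
    """
    hop = n_win - n_overlap
    n_frames = (len(x) - n_overlap) // hop
    spectrum = [[0.0] * n_frames for _ in range(n_fft)]
    for m in range(n_frames):
        frame = x[m * hop:m * hop + n_win]  # rectangular window: a plain slice
        for k in range(n_fft):
            acc = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / n_fft)
                for n in range(n_win)
            )
            spectrum[k][m] = abs(acc)
    return spectrum


# Toy input: a complex tone that falls exactly on bin 4 of a 32-point DFT.
tone = [cmath.exp(2j * math.pi * 4 * n / 32) for n in range(64)]
spectrum = stft_magnitude(tone, n_win=16, n_overlap=8, n_fft=32)
```

For the toy tone, every time frame peaks in the same frequency row, which is the horizontal-line pattern a constant-frequency emitter leaves in a spectrogram.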

3.2. Edge Detectors (E/D) Subsystem

3.2.1. Detection of Edges on Spectrograms

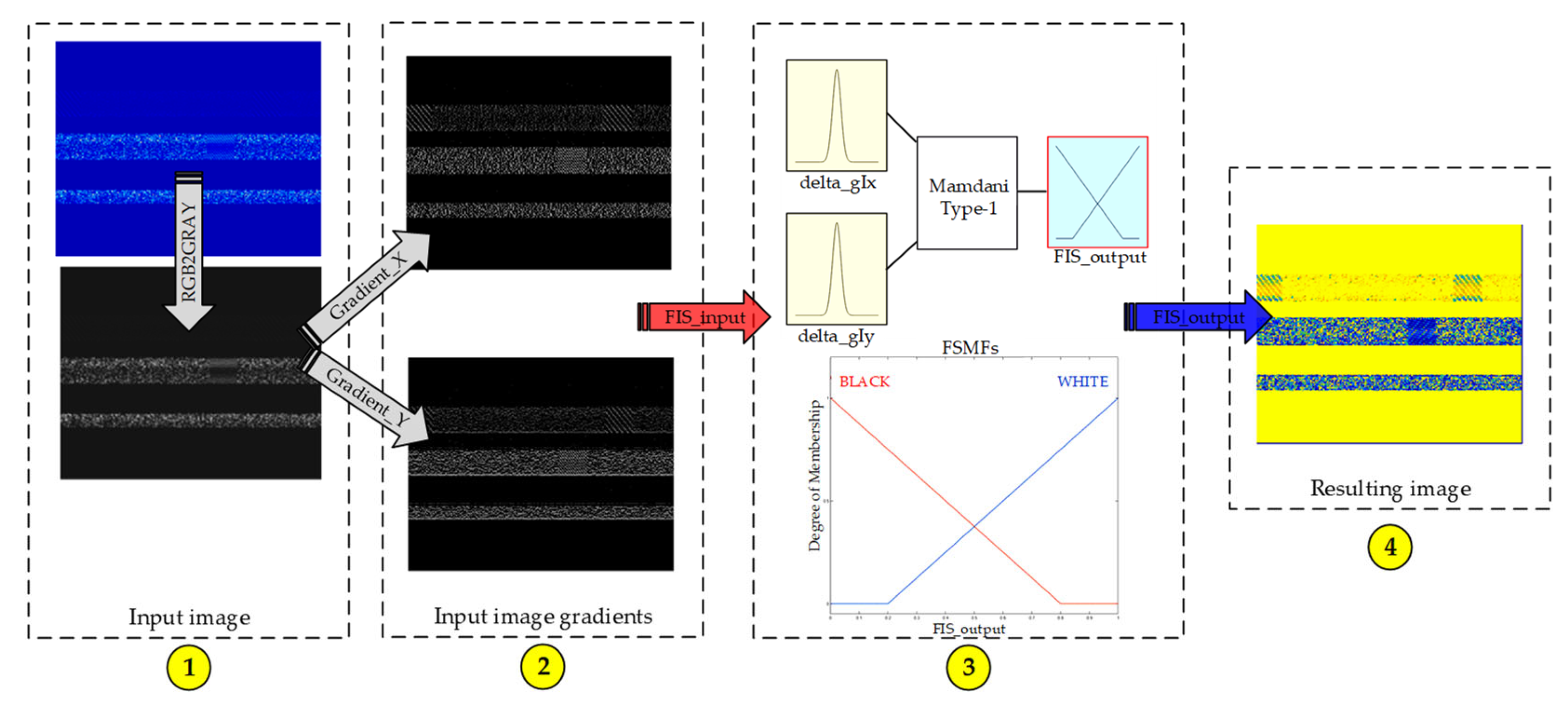

All spectrograms (original and noisy copies) were managed by the next subsystem, which included E/D methods. Moreover, input spectrograms were used as a reference point of comparison for spectrograms obtained after six E/D. Conventional (Canny [41], Kapur [42], Otsu [43]) and three variants of fuzzy logic-based E/D were employed to detect edges on the input spectrograms of drones’ radio signals. The proposed fuzzy logic-based E/D method is relatively straightforward to implement, as it is applied to each spectrogram representing a segment of the drone’s radio signal. Its ease of adaptation is mainly due to the simplicity of the filters, unlike those used in other filtering operations. Furthermore, the functions of the FSMF are also uncomplicated and more easily adaptable compared to other preprocessing techniques. The first operation is converting each loaded image (I) into a gray image (gI), followed by computing the horizontal ($I_x$) and vertical ($I_y$) gradients of the image to locate discontinuities along the x and y axes. To this end, the simplest possible filters of size 1 × 2 were used. The following equations describe the introduced filters $G_x$ and $G_y$:

$$G_x = \begin{bmatrix} -1 & 1 \end{bmatrix}, \qquad G_y = G_x^{T}$$

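The convolution operation with the proposed filters produced a pair of images with normalized values from −1 to 1. These new images represent the inputs to the FIS or, more precisely, the Mamdani type of FIS. The proposed FLEDNet approach was defined with the Gaussian FSMF (gaussmf) for each input and the triangular FSMFs (trimf) for the output values, with the following equations:

$$\mathrm{gaussmf}(x; \sigma, x_0) = e^{-\frac{(x - x_0)^2}{2\sigma^2}}$$

$$\mathrm{trimf}(x; x_1, x_2, x_3) = \max\left(\min\left(\frac{x - x_1}{x_2 - x_1}, \frac{x_3 - x}{x_3 - x_2}\right), 0\right)$$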

where the expectation of the Gaussian FSMF is zero (x0 = 0), and the standard deviation is σ. Moreover, the triangular FSMF is determined from three points (x1, x2, x3). In this research work, three variants of the fuzzy logic-based E/D were used to find the best combination of FSMF parameters. The first parameter, the standard deviation σ of the Gaussian FSMF, was increased from the smallest value (σ = 0.1) to create an FLEDNet that was less sensitive to random illumination. Three fuzzy logic-based E/D were evaluated for the three standard deviation values (σ = [0.1, 0.2, 0.3]). Furthermore, using different points (x1, x2, x3) provides a mechanism to manage the intensity of detected edges. The parameters for three pairs of triangular FSMFs are as follows:

It should be mentioned that parameters for gaussmf and trimf were chosen empirically because σ defines the sensitivity of the fuzzy logic algorithm to edges, and trimf establishes the intensity of the detected edge. These parameter thresholds, which are close to 0 and 1, were deliberately selected to achieve the most dependable detections of edges. Specifically, alternative parameter values for gaussmf and trimf provided minimal enhancements, and did not significantly influence the accuracy of the proposed CNN and CRNN models.
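The gradient and membership-function steps described above can be sketched as follows. This is an illustration under stated assumptions rather than the study's code: the helper names are hypothetical, the gradients are plain differences of adjacent pixels (one reading of a 1 × 2 filter), and the sign convention and zero-filled border are choices made here. The `gaussmf` and `trimf` functions follow the standard textbook definitions.

```python
import math
from typing import List

Image = List[List[float]]


def horizontal_gradient(gray: Image) -> Image:
    """Difference between each pixel and its left neighbour; first column set to 0."""
    return [
        [(row[c] - row[c - 1]) if c > 0 else 0.0 for c in range(len(row))]
        for row in gray
    ]


def vertical_gradient(gray: Image) -> Image:
    """Difference between each pixel and the pixel above it; first row set to 0."""
    return [
        [(gray[r][c] - gray[r - 1][c]) if r > 0 else 0.0 for c in range(len(gray[0]))]
        for r in range(len(gray))
    ]


def gaussmf(x: float, sigma: float, x0: float = 0.0) -> float:
    """Gaussian membership function centred at x0 with standard deviation sigma."""
    return math.exp(-((x - x0) ** 2) / (2.0 * sigma ** 2))


def trimf(x: float, x1: float, x2: float, x3: float) -> float:
    """Triangular membership function with feet x1, x3 and peak x2."""
    if x < x1 or x > x3:
        return 0.0
    if x <= x2:
        return 1.0 if x2 == x1 else (x - x1) / (x2 - x1)
    return (x3 - x) / (x3 - x2)


# Toy gray image in [0, 1] with a vertical step edge between columns 1 and 2.
toy = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
gx = horizontal_gradient(toy)
gy = vertical_gradient(toy)

# Membership of a small gradient (0.2) in the "zero" set for the three sigma values.
zero_membership = {sigma: gaussmf(0.2, sigma) for sigma in (0.1, 0.2, 0.3)}
```

With pixel values in [0, 1], the gradients fall in [−1, 1], matching the range stated above. The `zero_membership` values grow with σ, which shows why a larger σ makes the detector less sensitive: small gradients are increasingly treated as "zero".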

Finally, the rules that decide whether a pixel belongs to an edge (represented in white) or a uniform area (represented in black) were determined. Such rules, or the fuzzy knowledge base in FIS, comprise a set of fuzzy IF-THEN rules, or verbal explanations, that express the relationship between input and output. The FLEDNet is based on just two fuzzy rules that interpret the following two situations for image I:

$$\text{IF } I_x \text{ is zero AND } I_y \text{ is zero THEN } I_{out} \text{ is black} \tag{8}$$

$$\text{IF } I_x \text{ is not zero OR } I_y \text{ is not zero THEN } I_{out} \text{ is white} \tag{9}$$

where $I_x$ and $I_y$ are the horizontal and vertical gradients of the gray image and $I_{out}$ is the output pixel. Equations (8) and (9) can be described using the following expressions:

- If the gradients of the input gray image in both directions, $I_x$ and $I_y$, are equal to zero, then the given pixel belongs to a uniform area;

- If one of the gradients of the input gray image differs from zero, the pixel is located on the edge.

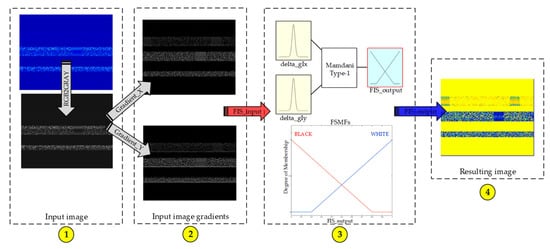

Defuzzification is the final step that refines the fuzzy information obtained from each pixel on the spectrogram, resulting in detected edges. The FLEDNet employs the centroid defuzzification (COD) technique [68]. It transforms fuzzy values, which indicate the degree of pixel membership in edge or non-edge areas, into clear, defined boundaries. The key improvements are the smooth transition of boundaries, noise reduction, and improved accuracy. First, defuzzification translates a range of fuzzy values into crisp boundaries, reflecting the gradual transitions often seen in real-world images. Second, defuzzification reduces uncertainty and improves edge-detection accuracy in noisy images by converting ambiguous FSMF values into definitive classifications. Third, integrating fuzzy information allows for nuanced E/D, offering a better representation of edges, especially in complex or blurry regions, than traditional binary methods. Figure 2 shows the proposed implementation of fuzzy logic-based E/D, which this study uses to better explain the entire process.

Figure 2.

An example of the implementation of E/D based on fuzzy logic: (1) input image and its transformation to gray image, (2) images of horizontal (top) and vertical (bottom) gradients of the input image, (3) the fuzzy logic system, and (4) the output (resulting image).
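The two-rule inference and centroid defuzzification can be sketched for a single pixel as follows. Several details are assumptions of this sketch and not taken from the study: the output triangle parameters `BLACK` and `WHITE` are illustrative placeholders, AND/OR/NOT are modelled with the common min/max/complement operators, and the output universe [0, 1] is sampled at 101 points.

```python
import math

# Assumed output membership parameters (x1, x2, x3); illustrative values only.
BLACK = (0.0, 0.0, 0.7)  # uniform area
WHITE = (0.1, 1.0, 1.0)  # edge


def gaussmf(x: float, sigma: float, x0: float = 0.0) -> float:
    """Gaussian membership function centred at x0."""
    return math.exp(-((x - x0) ** 2) / (2.0 * sigma ** 2))


def trimf(x: float, x1: float, x2: float, x3: float) -> float:
    """Triangular membership function with feet x1, x3 and peak x2."""
    if x < x1 or x > x3:
        return 0.0
    if x <= x2:
        return 1.0 if x2 == x1 else (x - x1) / (x2 - x1)
    return (x3 - x) / (x3 - x2)


def fuzzy_edge_pixel(ix: float, iy: float, sigma: float = 0.1, n_points: int = 101) -> float:
    """Mamdani inference with two rules, followed by centroid defuzzification."""
    zero_x = gaussmf(ix, sigma)
    zero_y = gaussmf(iy, sigma)
    # Rule 1: IF ix is zero AND iy is zero THEN output is black (uniform area).
    w_uniform = min(zero_x, zero_y)
    # Rule 2: IF ix is not zero OR iy is not zero THEN output is white (edge).
    w_edge = max(1.0 - zero_x, 1.0 - zero_y)
    numerator = 0.0
    denominator = 0.0
    for i in range(n_points):
        y = i / (n_points - 1)
        # Clip each output set at its rule strength, then aggregate with max.
        mu = max(min(w_uniform, trimf(y, *BLACK)), min(w_edge, trimf(y, *WHITE)))
        numerator += y * mu
        denominator += mu
    return numerator / denominator if denominator > 0.0 else 0.0


flat_value = fuzzy_edge_pixel(0.0, 0.0)  # no gradient: uniform area
edge_value = fuzzy_edge_pixel(1.0, 0.0)  # strong horizontal gradient: edge
```

Because the centroid of a clipped triangle never reaches the ends of the output range, the raw outputs stay strictly between 0 and 1; under these assumptions, a later rescaling step is what stretches them to full contrast.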

The fuzzy logic-based E/D (Supplementary Information explains FIS in detail) takes the input images (spectrograms) one by one, calculates horizontal and vertical image gradients, and produces the output as a new image. It is essential to note the apparent visual improvement, as three different signals from three drones can be seen in the resulting image. On the other hand, only two signals are visible in the input image, while the third one is less noticeable. The proposed fuzzy logic-based E/D effectively extracts all radio signals from the spectrogram, demonstrating superior performance under low SNR conditions. This advantage arises from its ability to model uncertainty and address imprecision. The inherent flexibility of fuzzy logic allows it to retain crucial features that traditional methods may overlook, as it assigns varying degrees of importance to features based on their relevance, making it less susceptible to minor fluctuations in data. In contrast, traditional E/D methods, such as Canny, Kapur, and Otsu, often face significant challenges in similar conditions, as noise can obscure essential features [69,70]. Additionally, the fuzzy logic-based E/D demonstrates enhanced robustness by avoiding fixed thresholds. Instead, it employs an FSMF to assess the degree to which pixels belong to categories such as “edge” or “uniform area”. This approach is particularly effective because fuzzy reasoning permits the selection of soft thresholds that can adapt more effectively to the nonlinearities present in the model inputs [71].

Besides the described E/D subsystem, it is essential to note that the state-of-the-art method for E/D was also evaluated in this paper. We have used a DL-based E/D, namely, DexiNed, which was introduced in [48] and tested with proposed CNN and CRNN models. This DexiNed was inspired by HED (Holistically-Nested Edge Detection), presented in [72], based on the VGG16 model introduced in [73]. The DexiNed was implemented and evaluated using spectrograms from the VTI_DroneSET dataset to compare the obtained results with those derived via the FLEDNet approach. Additionally, the authors in [48] claim that the DexiNed, even without pre-trained data, has better characteristics than the state-of-the-art edge detectors in most cases. Therefore, we have used DexiNed without pre-trained data to employ E/D image processing due to the need for ground truth images for spectrograms. Furthermore, it should be emphasized that a DexiNed time and memory complexity analysis was performed in this study.

3.2.2. Image Resizing, Normalization, Saving, and Labeling

Additionally, it is essential to note that all spectrograms were saved as images after employing E/D. This procedure consists of resizing and saving images, and it is unique due to the specific set of functions used for this purpose. The image resizing was performed under strictly controlled conditions to avoid losing essential features. First, the simple MatLab embedded function “mat2gray” transforms the output from fuzzy logic-based E/D into a grayscale image. Second, the normalization process is used to set the values of the given grayscale image in the range from 0 to 255. Third, the MatLab function “ind2rgb” uses the “jet” color map to convert such an indexed image to an RGB image with dimensions N × H × 3. Finally, the images obtained are scaled to 227 × 227 × 3 without losing essential information. It is important to note that the dimensions of the resulting matrix S depend on the Fast Fourier Transform (FFT) bins and the signal duration, which can be described with the following equations:

$$N = N_{bins}, \qquad H = \left\lfloor \frac{l_{segment} - n_{overlap}}{n_{win} - n_{overlap}} \right\rfloor$$

where $l_{segment}$ is the length of a loaded segment of a radio signal, $n_{win}$ is the size of the rectangular window used for spectrogram calculation, $N_{bins}$ is the number of FFT bins, and $n_{overlap}$ stands for the samples of overlap between adjoining window sections. If a signal segment holds 100,000 samples, N and H will take values of 2048 and 780, respectively. In addition, it should be noted that all images after preprocessing are saved in *.tiff format in separate folders for classes. This operation uses the embedded MatLab function “imwrite” to give each image a suitable filename.
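The quoted dimensions (N = 2048, H = 780) can be checked with a short calculation. The sketch assumes the usual STFT frame-count rule, in which the number of time frames is the rounded-down ratio of (segment length − overlap) to (window length − overlap), and takes 50% overlap of a 256-sample window as 128 samples; the function name is hypothetical.

```python
from typing import Tuple


def spectrogram_shape(l_segment: int, n_bins: int, n_win: int, n_overlap: int) -> Tuple[int, int]:
    """Return (N, H): the number of frequency rows and time columns of the spectrogram."""
    n_rows = n_bins
    n_cols = (l_segment - n_overlap) // (n_win - n_overlap)
    return n_rows, n_cols


# Parameters stated in the text: 100,000-sample segment, 2048 bins,
# 256-sample rectangular window, 50% overlap (128 samples).
shape = spectrogram_shape(l_segment=100_000, n_bins=2048, n_win=256, n_overlap=128)
```

Here (100,000 − 128) / 128 = 780.25, which rounds down to 780 time frames, in agreement with the stated value of H.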

3.2.3. Creation of Datastore Objects

Finally, the E/D subsystem produced seven MatLab image_datastore objects (original, plus six spectrograms from six E/D) for each SNR. Thus, forty-two MatLab image_datastore objects were obtained to evaluate the proposed algorithm (combining seven MatLab image_datastore objects and six SNRs).

3.3. Classification Subsystem

The third subsystem trains the models of the proposed FLEDNet approach on the prepared MatLab image_datastore objects. The CNN and CRNN models are trained in three separate scenarios: drone detection, drone-type identification, and the detection of multiple drones. A specific labeling notation was used for each model, as follows:

- Drone detection. The first scenario’s model is intended for detecting the presence of a drone, so there are only two labels for two classes: “0” indicates that the drone does not exist, and “1” indicates that the drone is present in the corresponding spectrogram of the radio signal’s segment;

- Detection of multiple drones. The second scenario’s model is intended for detecting multiple drones operating simultaneously, so there are four classes: “0” indicates that the drone does not exist, “1” specifies that there is only one drone, “2” specifies that there are two drones, and “3” specifies that there are three drones in the corresponding spectrogram of the radio signal’s segment;

- Identification of the drone type. The third scenario’s model is intended to identify the type of drone, so there are three classes: “1” represents the DJI Phantom IV type of drone, “2” represents the DJI Mavic 2 Zoom type of drone, and “3” represents the DJI Mavic 2 Enterprise type of drone.

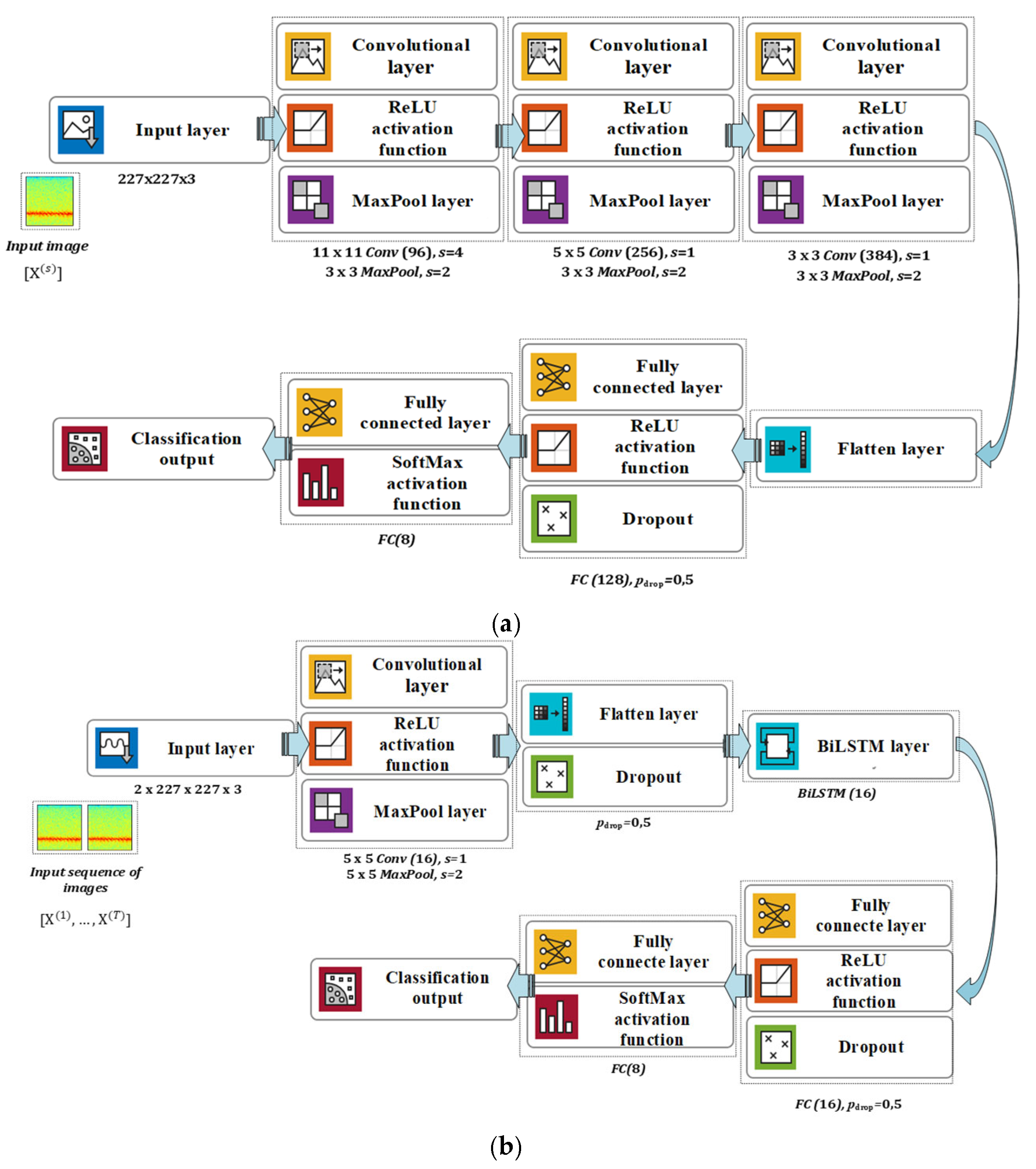

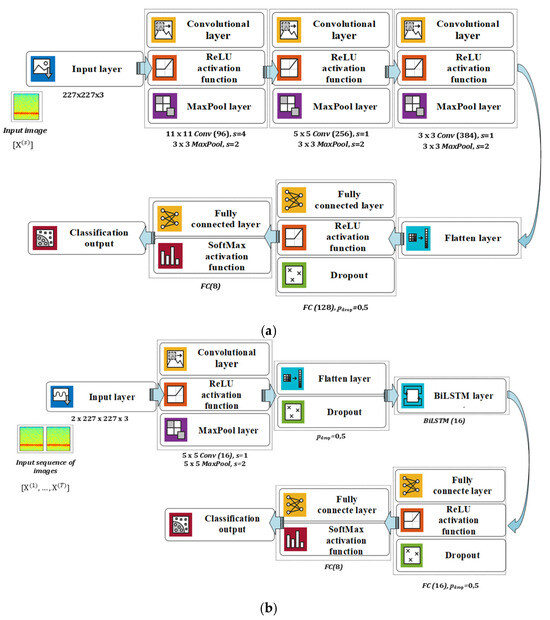

There are practical reasons for such an approach: it simplifies training, reduces resource requirements, and adapts the ADRO system to the requirements of the end users. It was established empirically that ADRO operators first want information about drone detection, i.e., whether a drone is present in a security-sensitive area. Then, if a drone is detected, the ADRO system should indicate whether only one drone or multiple drones are present. Finally, if only one drone is detected, the ADRO system should give information on the type of the detected drone. This approach was tested through practical inference on the embedded computer NVIDIA Jetson Orin NX with a USRP-2954 radio frequency receiver. The proposed FLEDNet approach can successfully detect the presence of drones, determine whether one, two, or three drones are operating simultaneously, and identify the type of a single drone operating independently.

As mentioned, two models were used in the proposed FLEDNet approach: CNN and CRNN. Moreover, the AlexNet model [22] was employed for comparison. The proposed CNN model consists of only three convolutional layers, two fully connected layers, and one decision layer. The CRNN model combines two widely used model families, CNN and Recurrent Neural Networks (RNN), forming a model for temporal–spatial data. The proposed CRNN consists of one convolutional layer, one recurrent Bidirectional Long Short-Term Memory (BiLSTM) module with sixteen units, two fully connected layers, and one decision layer. Moreover, only two successive images (lsequence = 2) were used in sequence as input data for the proposed CRNN model. The corresponding architectures of the employed CNN and CRNN models are presented in Figure 3.

Figure 3.

The architecture of the proposed (a) CNN model and (b) the CRNN model.
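The staged use of the three scenario models (detection first, then counting, then type identification for a single drone) can be sketched as a small decision cascade. This is an illustrative sketch only: the function `adro_cascade` and its callable arguments `detect`, `count`, and `identify` are hypothetical names standing in for the trained models, and the label values follow the notation listed for the three scenarios.

```python
# Labels of the drone-type identification scenario, as given in the text.
DRONE_TYPES = {
    1: "DJI Phantom IV",
    2: "DJI Mavic 2 Zoom",
    3: "DJI Mavic 2 Enterprise",
}


def adro_cascade(spectrogram, detect, count, identify):
    """Query the three scenario models in the order an operator needs the answers.

    detect, count, and identify are hypothetical callables that return the
    integer class label predicted for one spectrogram.
    """
    report = {"drone_present": False, "drone_count": 0, "drone_type": None}
    if detect(spectrogram) == 0:       # scenario 1: label 0 means no drone
        return report
    report["drone_present"] = True
    n_drones = count(spectrogram)      # scenario 2: labels 0..3 give the count
    report["drone_count"] = n_drones
    if n_drones == 1:                  # scenario 3 applies to a single drone only
        report["drone_type"] = DRONE_TYPES[identify(spectrogram)]
    return report


# Usage with stub models that always predict "one DJI Mavic 2 Zoom".
result = adro_cascade("spectrogram", lambda x: 1, lambda x: 1, lambda x: 2)
print(result)
```

Keeping the three models separate means that the later, more specific models run only when the earlier answer makes them relevant.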

The input image is denoted as X(s) for the proposed CNN model, where X is one training or testing sample in the form of a 3D tensor (spectrogram; color image; 227 × 227 × 3), and s indexes the samples in the VTI_DroneSET dataset. The input sequence of images is denoted as [X(1), …, X(T)] for the proposed CRNN model; the sequence forms one training or testing sample in the form of a 4D tensor (sequence of spectrograms; sequence of two color images; 2 × 227 × 227 × 3), and T denotes time dependence. The primary distinction between the proposed models is their architecture. The CNN model incorporates a substantial number of filters in its convolutional layers, which allows it to extract features for classification effectively. In contrast, the CRNN model utilizes fewer filters within a single convolutional layer paired with a limited number of LSTM cells. Both models exhibit a similar architecture in their final layers.
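Grouping successive spectrograms into CRNN input sequences can be sketched as follows. This is a minimal illustration with a sequence length of two, as stated in the text; the helper name `make_sequences` and the `step` parameter are hypothetical, and whether consecutive sequences overlap is not stated, so both options are shown.

```python
def make_sequences(frames, l_sequence=2, step=1):
    """Group successive spectrograms into sequences of l_sequence items.

    step=1 yields overlapping sequences; step=l_sequence yields disjoint ones.
    Each returned sequence corresponds to one 4D sample (l_sequence x 227 x 227 x 3).
    """
    if l_sequence < 1 or step < 1:
        raise ValueError("l_sequence and step must be positive")
    last_start = len(frames) - l_sequence
    return [list(frames[i:i + l_sequence]) for i in range(0, last_start + 1, step)]


frames = ["X1", "X2", "X3", "X4"]           # placeholders for 227 x 227 x 3 images
print(make_sequences(frames))               # overlapping pairs
print(make_sequences(frames, step=2))       # disjoint pairs
```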

All models were trained and validated using the Mean Square Error (MSE) loss function in combination with the Stochastic Gradient Descent with Momentum (SGDM) optimization algorithm (momentum = 0.9) to minimize the classification error. Moreover, k-fold cross-validation (K = 10) was used to reduce bias, i.e., to overcome the differences in the number of samples in the classes. The training process was performed with Nbatch = 50 and a total of Nepoch = 100 epochs. MatLab and Python (Anaconda version 2.3.0) with TensorFlow 2.8.0 (including Keras 2.8.0) in a GPU environment were used for training. The training computer had an Intel(R) Core(TM) i5-9400F CPU @ 2.90 GHz, 32 GB RAM, and two GeForce RTX 2060 GPUs with 6 GB GDDR6 each (CUDA toolkit version 11.0.2 and cuDNN version 8.5.0). Moreover, the NVIDIA Jetson Orin NX was used for practical inference with the FLEDNet approach in outdoor conditions.
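Two elements of this training setup, the 10-fold split and the SGDM update with momentum 0.9, can be sketched in plain Python. This is an illustrative sketch, not the training code used in the study: the function names are hypothetical, the split is a simple shuffled (non-stratified) partition, and the learning rate is an assumed value because the text does not state it.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k nearly equal parts
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def sgdm_step(weights, velocity, gradient, lr=0.01, momentum=0.9):
    """One SGDM update: v <- momentum * v - lr * grad, then w <- w + v."""
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, gradient)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


for fold, (train, validation) in enumerate(k_fold_indices(105, k=10)):
    print(fold, len(train), len(validation))
```

Each sample appears in exactly one validation fold, so every model is validated once on data it was not trained on.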

4. Results

The main goal of this research was to examine the impact of E/D on the classification accuracy of CNN and CRNN models. Moreover, the focus was on fuzzy logic-based E/D, so the study presents the CNN model accuracy obtained when different E/D were engaged at six levels of SNR. It is important to note that only the 2.4 GHz ISM band results are presented in detail in this section.

4.1. The Drone Detection Results

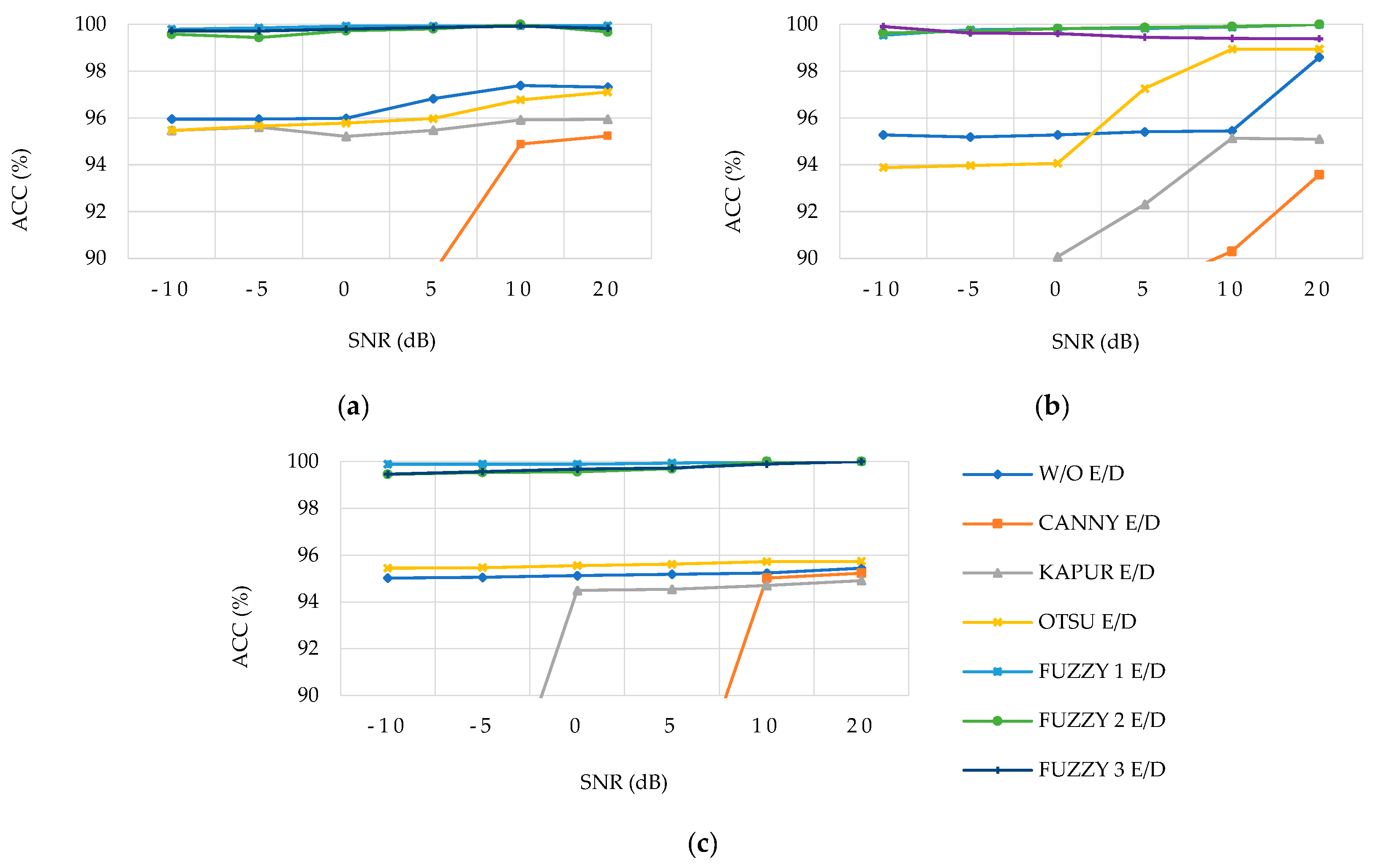

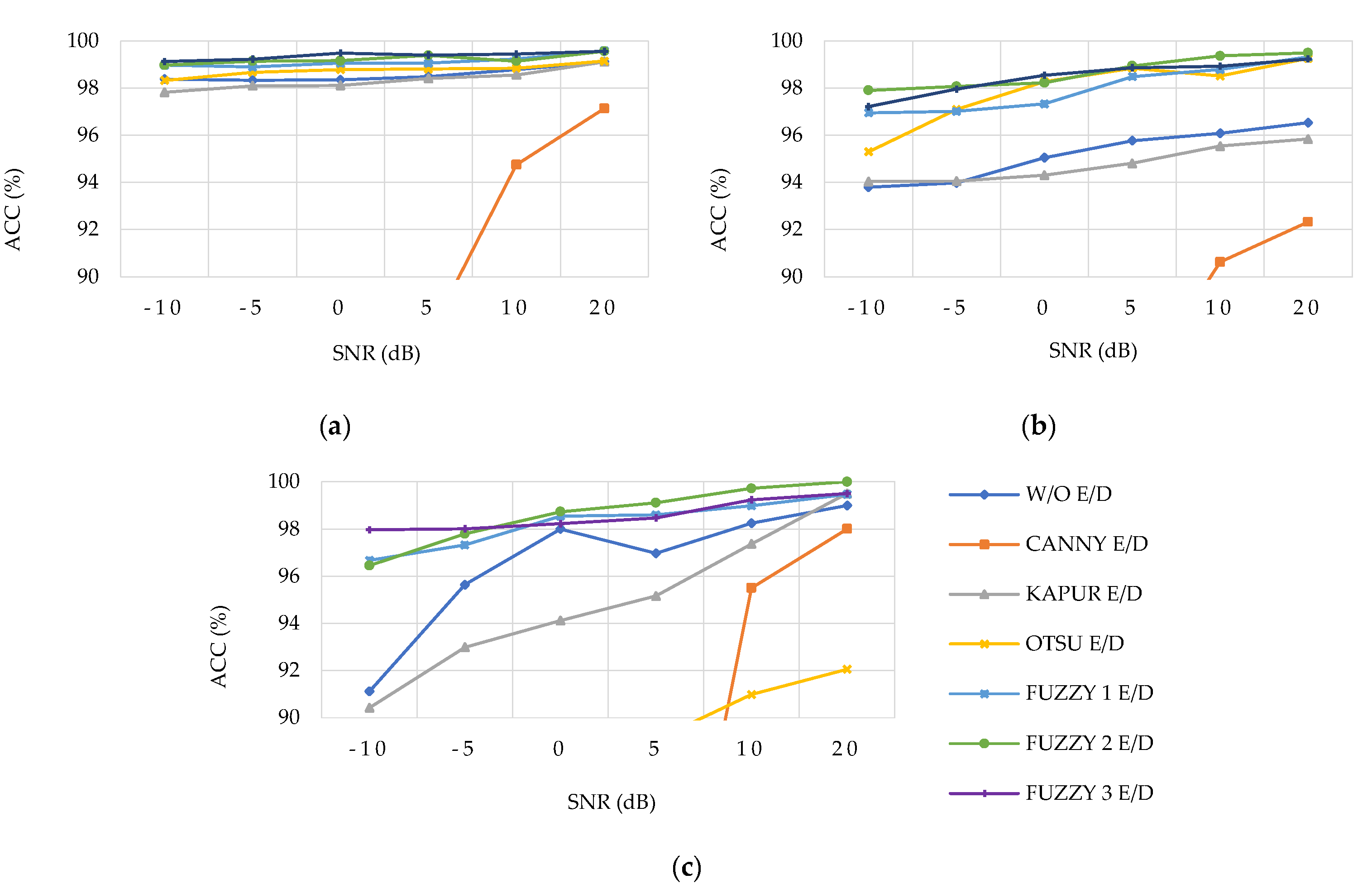

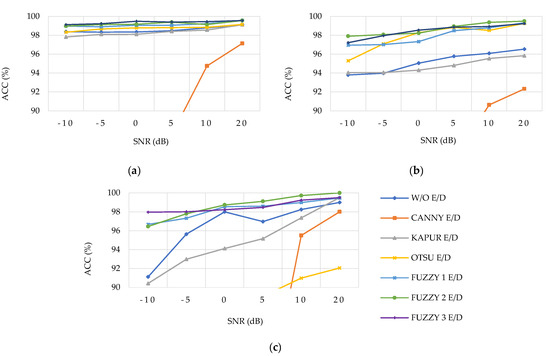

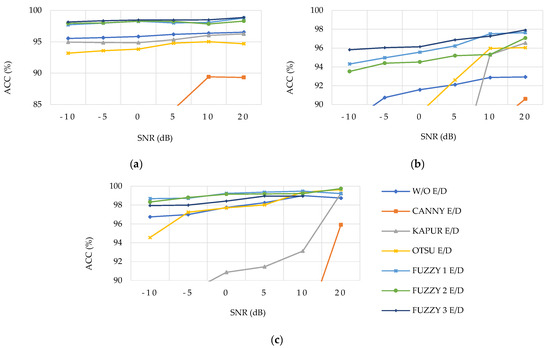

The results of drone presence detection using the proposed FLEDNet approach are shown in Figure 4 for AlexNet and the proposed CNN and CRNN models, with three and only one convolutional (conv) layer, respectively. Please note that E/D notation means “edge detector”, while W/O stands for “without E/D”.

Figure 4.

The drone detection accuracy in the 2.4 GHz ISM band for different SNRs: (a) the AlexNet model, (b) the CNN model, and (c) the CRNN model.

Figure 4 shows that, in the drone detection scenario, all models that engaged fuzzy logic-based E/D perform better than those that used the other E/D options. These results are stable for all SNRs in the 2.4 GHz ISM band. Moreover, all fuzzy logic-based E/D gave comparable results for all models. The Canny E/D shows the worst results, followed by the Kapur E/D, for SNRs below 5 dB (Supplementary Information explains results for drone detection in both ISM bands in detail).

4.2. The Number of Drone Detection Results

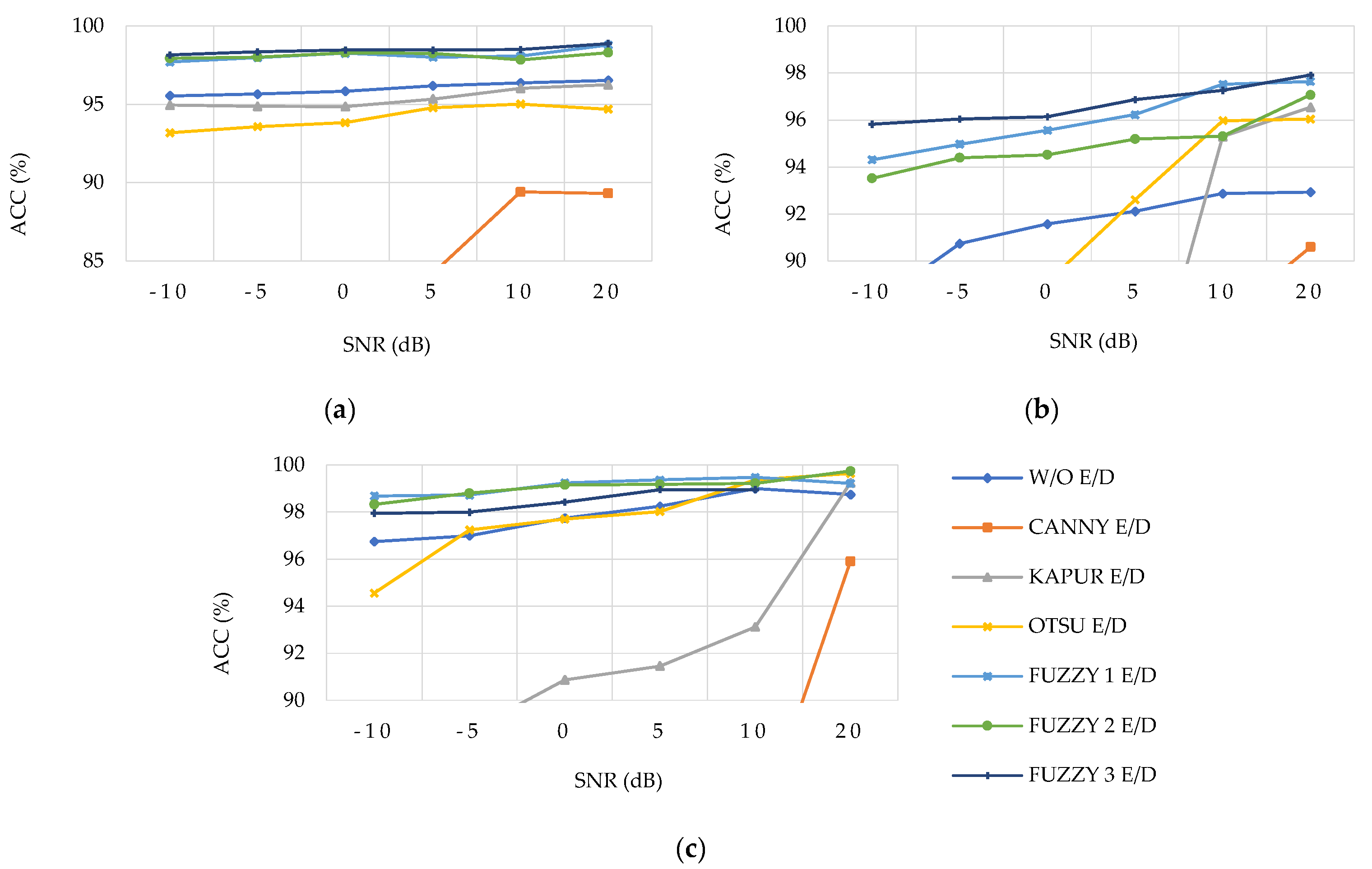

Figure 5 shows the results of multiple drone detection using the proposed FLEDNet approach for AlexNet and the proposed CNN and CRNN models.

Figure 5.

The multiple drone detection accuracy in the 2.4 GHz ISM band for different SNRs: (a) the AlexNet model, (b) the CNN model, and (c) the CRNN model.

In the multiple drone detection scenario, all models that engaged fuzzy logic-based E/D again perform better than those that used the other E/D options. These results are stable for all SNRs in the 2.4 GHz ISM band. All variants of fuzzy logic-based E/D provide equivalent results for all proposed models. The AlexNet and the proposed CRNN models are slightly better than the proposed CNN model. The Canny E/D shows the worst results for SNRs below 5 dB (Supplementary Information explains results for multiple drone detection in both ISM bands in detail).

4.3. The Drone-Type Identification Results

Figure 6 shows the results of drone-type identification using the FLEDNet approach for AlexNet and the proposed CNN and CRNN models.

Figure 6.

The drone-type identification accuracy in the 2.4 GHz ISM band for different SNRs: (a) the AlexNet model, (b) the CNN model, and (c) the CRNN model.

As in the previous scenarios, all models that engaged fuzzy logic-based E/D perform better in drone-type identification than those that used the other E/D options. These results are stable for all SNRs in the 2.4 GHz ISM band. The proposed CRNN model is slightly better than the other models. The Canny E/D shows the worst results for SNRs below 10 dB (Supplementary Information explains results for drone-type identification in both ISM bands in detail).

5. Discussion

5.1. The Quantitative Analysis of the FLEDNet Improvement

Compared to the results obtained by applying spectrogram image processing with classical (traditional) E/D, the FLEDNet approach can significantly improve the accuracy in detecting and identifying drones in the presence of low SNR. Also, it was shown that the FLEDNet approach has stable characteristics for different SNRs, i.e., it shows resistance to AWGN. This implies that the proposed FLEDNet approach is a good potential choice for drone classification in the RF domain.

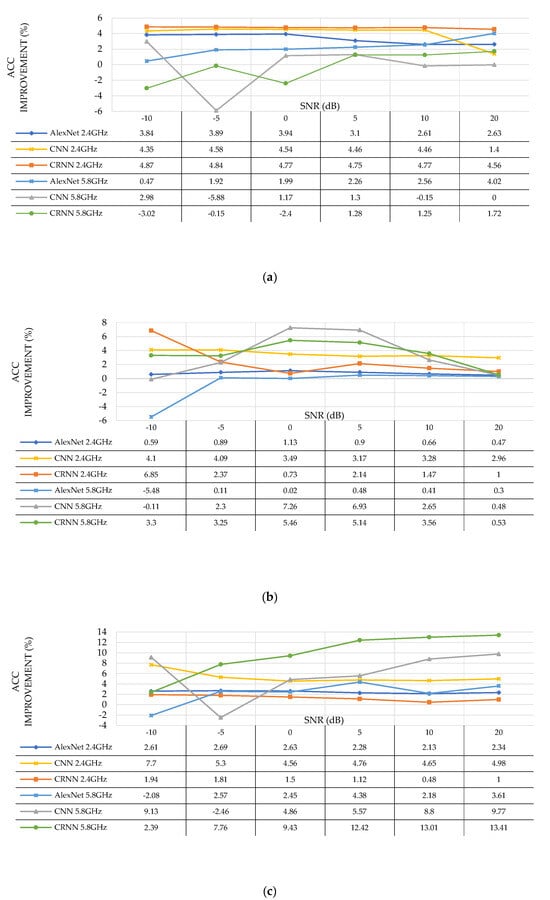

A comparative analysis was performed to show the improvements in accuracy obtained by employing the proposed fuzzy logic-based E/D, depending on the models used. The accuracy improvement is calculated as follows:

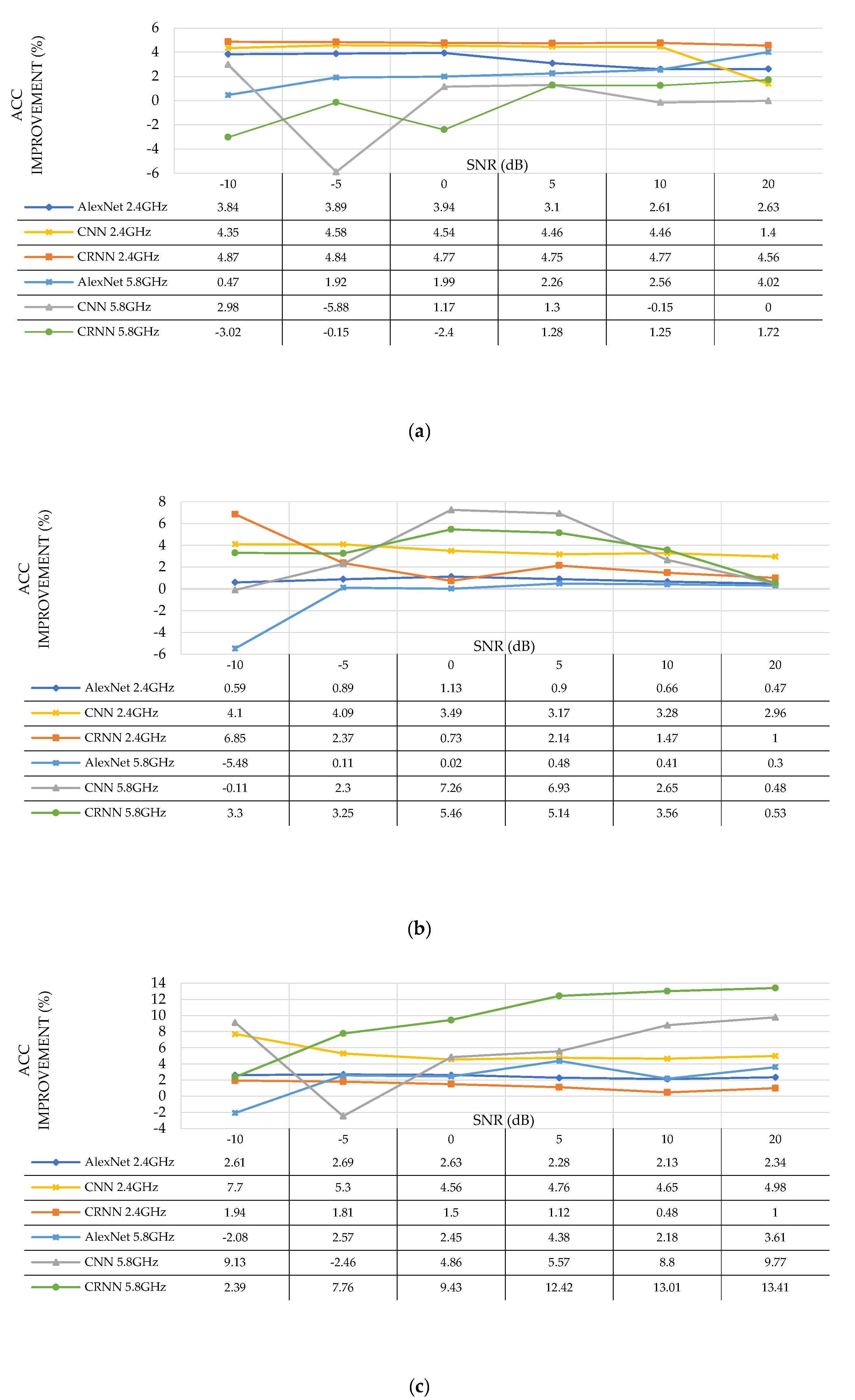

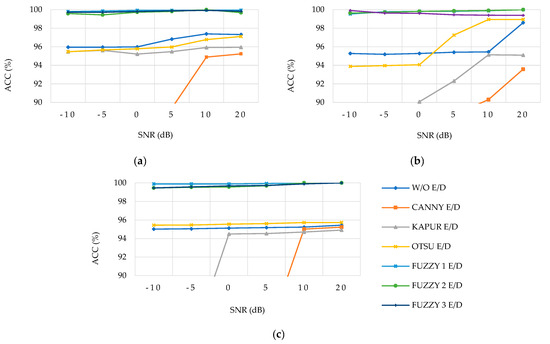

where ∆ACCFLED is the accuracy improvement, ACCFLED is the accuracy obtained with the best fuzzy logic-based E/D, and ACCW/O is accuracy without any E/D. A comparative analysis was performed for drone detection, identification, and multiple drone detection, and the findings are presented in Figure 7.

Figure 7.

The accuracy improvements in both ISM bands for different SNRs: (a) drone detection, (b) detection of the number of drones, and (c) drone-type identification.
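The improvement measure can be illustrated with a short Python sketch. The formula itself is not reproduced in the text here, so the sketch assumes that the improvement is the plain difference, in percentage points, between the accuracy of the best fuzzy logic-based E/D and the accuracy without any E/D. The function name and the sample numbers are hypothetical and serve only as placeholders.

```python
def accuracy_improvement(fuzzy_accuracies, acc_without_ed):
    """Assumed definition: best fuzzy E/D accuracy minus accuracy without E/D.

    fuzzy_accuracies holds the accuracies (in %) of the fuzzy logic-based E/D
    variants for one model, scenario, and SNR.
    """
    acc_fled = max(fuzzy_accuracies)   # accuracy of the best fuzzy logic-based E/D
    return acc_fled - acc_without_ed


# Placeholder accuracies in percent, not measured results.
print(accuracy_improvement([95.0, 96.5, 96.0], 92.0))  # prints: 4.5
```

A negative value under this definition would indicate the performance degradation mentioned for some models at low SNR.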

It can be concluded from Figure 7 that the usage of fuzzy logic-based E/D improves the accuracy for all models and all three scenarios if the SNR is above 0 dB. Accuracy improvement also exists for lower SNRs in some cases. Additionally, performance degradation is observed at SNRs below 0 dB for the CNN and AlexNet models in the 5.8 GHz ISM band. The graph in Figure 7a shows that the greatest accuracy improvement (4.56% to 4.87%) is obtained by combining fuzzy logic-based E/D and the CRNN model in the 2.4 GHz ISM band. Additionally, it can be concluded that, independent of the model, the improvement is more significant in the 2.4 GHz ISM band than in the 5.8 GHz ISM band. Figure 7b shows that the accuracy improvement depends on the SNR, and that the smallest gain is achieved if fuzzy logic-based E/D is combined with the AlexNet model (in both ISM bands). As shown in Figure 7c, the most considerable accuracy improvements are achieved in the 5.8 GHz ISM band using the CRNN model. The accuracy improvement of the CNN model in the 2.4 GHz ISM band is stable; it changes only slightly with the SNR.

Various electromagnetic disturbances and interferences characterize the RF domain, so it is crucial to have highly accurate classification algorithms capable of swiftly detecting and identifying drones. Specifically, the SNR greatly influences the accuracy of drone classification algorithms, while practical implementation in real-world scenarios requires modest computational resources. It is important to note that the FLEDNet approach addresses the shortcomings of traditional E/D, which often struggles when the SNR is low, resulting in the loss of precise shapes in images (spectrograms).

5.2. The Comparative Analysis of the FLEDNet Improvement

Using the FLEDNet approach, it was shown that applying fuzzy logic-based E/D is a cost-effective image processing technique because it improves the accuracy of the proposed CNN and CRNN models in the presence of noise. Additionally, we have performed a comparative analysis with results from the literature that incorporate a noisy environment as the critical factor in the accuracy testing of CNN models. Table 2 shows the comparative study between the FLEDNet approach and results from the literature. The results that are bold and underlined are the best for each scenario. Moreover, note that the notation “N/A” means no results are available in the literature for such a scenario.

Table 2.

Comparative analysis of the publicly available results.

The key takeaways from Table 2, related to improving CNN and CRNN models’ accuracy when used in the drone classification task using fuzzy logic-based E/D on noisy radio signal spectrograms, are as follows:

- The FLEDNet approach outperforms all previously researched approaches for SNRs below 0 dB;

- The FLEDNet approach demonstrates equal or superior performance in all scenarios, with one exception: two studies reported better results at 0 dB in the drone-type identification scenario;

- The FLEDNet approach exhibits comparable performance with the AlexNet and CRNN models. However, the CRNN model is significantly less complex, making it the preferred choice for drone classification tasks;

- The FLEDNet approach has demonstrated stable results across a range of SNR levels, specifically from −10 to 20 dB (Figure 7);

- This approach has been tested in three scenarios within the 2.4 and 5.8 GHz ISM frequency bands. Unlike other studies in the literature, it evaluates both ISM bands and accounts for multiple-drone scenarios;

- The FLEDNet approach is effective even with the simplest CRNN model, which consists of only one convolutional layer. Furthermore, the CNN model used alongside FLEDNet has also proven effective.

5.3. The Statistical Significance Analysis

To evaluate the improvement in accuracy achieved, we employed statistical measures to quantify the reliability of the results. To assess the traditional E/D methods against the FLEDNet approach, a statistical significance analysis was conducted, employing a confidence interval, as outlined by the following equation:

$$CI = \bar{x} \pm Z \frac{s}{\sqrt{n}}$$

where $\bar{x}$ is the sample mean, $s$ is the sample standard deviation, n = 36 is the sample size, and Z = 1.96 for a 95% confidence level. Table 3 presents the 95% CI for the FLEDNet accuracy utilizing the CRNN model, as it yielded the most favorable performance.

Table 3.

Accuracy confidence interval (95% confidence level) for FLEDNet accuracy improvement.
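A confidence interval of this kind can be computed with the Python standard library. The sketch below assumes the standard normal-approximation interval, i.e., the sample mean plus or minus Z times the sample standard deviation divided by the square root of the sample size; the function name and the sample values are placeholders rather than results from the study.

```python
import math
import statistics


def confidence_interval(samples, z=1.96):
    """Return (lower, upper) bounds of the mean for the given Z value."""
    n = len(samples)
    if n < 2:
        raise ValueError("at least two samples are needed")
    mean = statistics.mean(samples)
    std = statistics.stdev(samples)          # sample standard deviation (n - 1)
    half_width = z * std / math.sqrt(n)
    return mean - half_width, mean + half_width


# Placeholder accuracy values in percent, not measured results.
lower, upper = confidence_interval([98.0, 98.5, 99.0, 98.5, 98.0, 99.0])
print(f"95% CI: [{lower:.2f}, {upper:.2f}]")
```

A narrower interval for the same sample size corresponds to a smaller standard deviation, which is the sense in which a narrow CI indicates stable accuracy across SNR levels.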

The results indicate that applying the FLEDNet approach improved performance across all three usage scenarios. When utilizing FLEDNet, the 95% accuracy CI narrowed, suggesting that these results are more stable and resilient to variations in SNR. In contrast, traditional E/D exhibited a larger accuracy CI, implying a wider range of potential accuracy outcomes. This statistical analysis underscores that the new approach yields better results, particularly given that traditional E/D has struggled to effectively address low-SNR situations (as illustrated in Figure 4, Figure 5 and Figure 6). Moreover, this suggests that the FLEDNet approach is particularly well-suited for the practical ADRO implementation of drone detection, including identifying multiple drones. However, it is less effective in the specific task of drone-type identification. In real-world scenarios, such as border surveillance or the protection of vital infrastructure (airports, naval ports, refineries, military installations), the primary focus is not necessarily on identifying the exact drone type, but on quickly recognizing and addressing potential threats. FLEDNet could play a crucial role in a layered security strategy, enabling prompt responses to drone threats by prioritizing the detection of drone presence while delegating detailed identification to other ADRO systems.

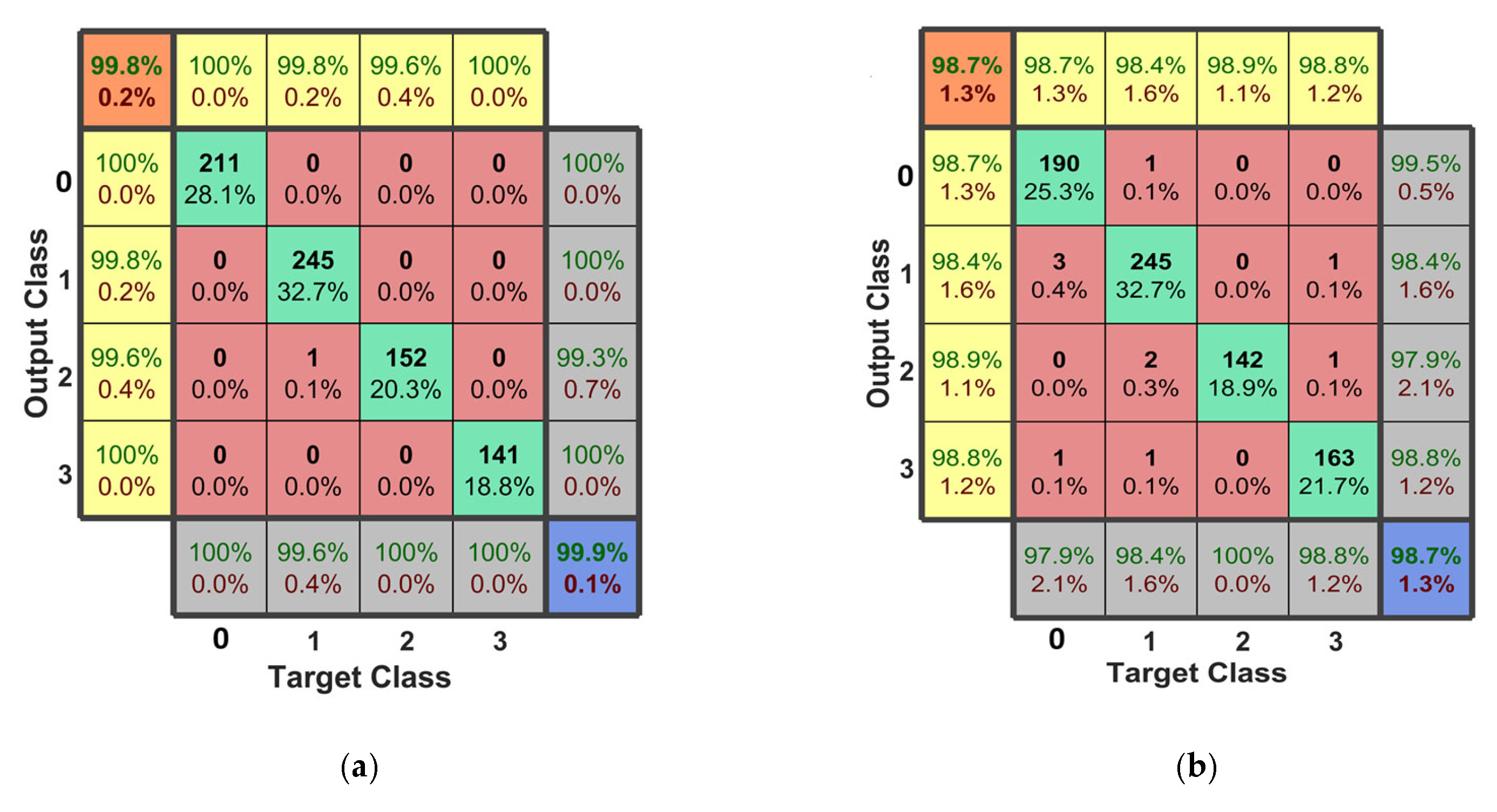

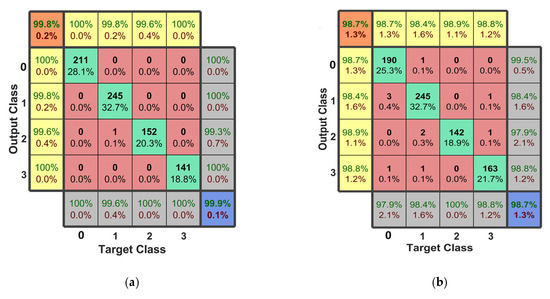

Performance assessment and error analysis are represented with accuracy, precision, recall, error, false discovery rate (FDR), false negative rate (FNR), and F1 scores via appropriate confusion matrices (Supplementary Information explains the confusion matrix in detail). This assessment should provide insights into how robust the FLEDNet approach is, and where it could be improved. Figure 8 shows two confusion matrices to help readers understand the error analysis better.

Figure 8.

The confusion matrices for multiple drone detection scenarios engaging FLEDNet (CRNN): (a) SNR = 20 dB and (b) SNR = 0 dB.

Figure 8a shows the excellent performance of the FLEDNet approach with the CRNN model for solving multiple drone detection scenarios (“0” indicates that the drone does not exist, “1” specifies that there is only one drone, “2” specifies that there are two drones, and “3” specifies that there are three drones) when SNR = 20 dB, while Figure 8b shows an accuracy of 98.73% for SNR = 0 dB. It is important to note that the F1 score values (yellow cells in the confusion matrix) follow the accuracy (blue cell), precision (turquoise vertical cells), and recall (gray horizontal cells) values, meaning that models show stable characteristics.
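The metrics named in this error analysis follow directly from a confusion matrix. The sketch below uses the standard definitions and assumes that rows hold the true classes and columns the predicted classes; the function name and the small example matrix are illustrative placeholders, not values from Figure 8.

```python
def confusion_metrics(cm):
    """Compute overall accuracy/error and per-class precision, recall, F1, FDR, FNR.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n_classes))
    accuracy = correct / total
    per_class = []
    for c in range(n_classes):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                               # missed samples of class c
        fp = sum(cm[r][c] for r in range(n_classes)) - tp  # wrongly assigned to class c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = 2 * tp + fp + fn
        per_class.append({
            "precision": precision,
            "recall": recall,
            "f1": 2 * tp / denom if denom else 0.0,
            "fdr": fp / (tp + fp) if tp + fp else 0.0,     # false discovery rate
            "fnr": fn / (tp + fn) if tp + fn else 0.0,     # false negative rate
        })
    return {"accuracy": accuracy, "error": 1.0 - accuracy, "per_class": per_class}


# Placeholder two-class matrix, not data from the paper.
metrics = confusion_metrics([[8, 2], [1, 9]])
print(round(metrics["accuracy"], 2), round(metrics["per_class"][0]["f1"], 3))
```

Because the F1 score is the harmonic mean of precision and recall, it stays close to the accuracy only when both are high, which is why matching values are read as a sign of stable behavior.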

5.4. Comparative Analysis with DL-Based E/D

In addition to the above analysis, a comparison with a state-of-the-art DL-based E/D (DexiNed [48]) was also conducted to demonstrate the applicability of the proposed FLEDNet approach. Table 4 shows the accuracy results for the DexiNed and FLEDNet approaches. In both cases, we used spectrograms obtained from the 2.4 GHz ISM band at SNR = 20 dB. The bold and underlined values represent the best results for each scenario.

Table 4.

Accuracy of the DexiNed and fuzzy logic-based E/D for SNR = 20 dB.

The FLEDNet approach has surpassed the performance of the DL-based E/D (DexiNed model) across all tested scenarios. Notably, integrating fuzzy logic E/D has substantially enhanced the effectiveness of all DL models evaluated, particularly the CRNN. Furthermore, at the ideal SNR level (SNR = 20 dB), the FLEDNet has outperformed previously published results, as shown in Table 1.

5.5. The Time Complexity Analysis

To compare the complexity of traditional E/D, fuzzy logic-based E/D, and DL-based E/D, we examined the average execution time of the code on the embedded computer NVIDIA Jetson Orin NX. The average simulation time was calculated from 100 measurements using 1000 successive spectrograms obtained from the RF receiver USRP-2954 in a practical implementation in outdoor conditions. All reported measurements include the time required to complete the custom-made software pipeline developed for both E/D and CRNN model predictions. Aside from the number of model parameters and the dimensions of the input data, the average simulation times reported in various studies cannot be compared directly. This limitation arises from the use of different hardware platforms and from the lack of clarity about the procedures used to measure simulation times in the literature. Table 5 shows the average elapsed time for the necessary calculations of the proposed FLEDNet approach, the total number of parameters (M denotes million), and the input data dimensions.

Table 5.

The time complexity analysis.
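A timing measurement of this kind can be sketched with the standard library. The sketch assumes that each of the repeated measurements times one pass of the whole pipeline over all inputs and that the mean over the runs is reported; the text does not spell out the exact procedure, and `average_elapsed` and the dummy pipeline are hypothetical names.

```python
import statistics
import time


def average_elapsed(pipeline, inputs, n_runs=100):
    """Return (mean seconds per run, mean seconds per input) over n_runs passes."""
    durations = []
    for _ in range(n_runs):
        start = time.perf_counter()
        for item in inputs:
            pipeline(item)                  # E/D plus model prediction in practice
        durations.append(time.perf_counter() - start)
    per_run = statistics.mean(durations)
    return per_run, per_run / len(inputs)


# Dummy pipeline standing in for edge detection followed by classification.
per_run, per_item = average_elapsed(lambda x: sum(x), [[1, 2, 3]] * 1000, n_runs=100)
print(f"{per_run:.6f} s per run, {per_item:.9f} s per spectrogram")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.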

The FLEDNet approach demonstrated a nearly twenty-times shorter average simulation time than the state-of-the-art DL-based E/D. This significant difference can be attributed to the necessity of utilizing two distinct DL models requiring computational resources: one for the DL-based edge detection (DexiNed) and the other for the drone classification tasks (CNN). Moreover, the FLEDNet model has a reduced number of parameters, underlining its simplicity in inference. This advantage is especially pronounced in comparison with the YOLO Lite or VGG models and with the CNN models proposed in [27,29]. The obtained results align with the conclusion presented in [31], which suggests that future studies should aim to balance detection accuracy with the computational demands of real-time processing.

The traditional E/D exhibited better simulation times than the fuzzy logic E/D, which aligns with our expectations. The fuzzy logic E/D employs two filters to compute vertical and horizontal gradients from the input image, while methods such as Otsu, Kapur, and Canny rely on a single filtering operation. Additionally, the fuzzy logic E/D performs the FIS calculation to assess whether each pixel is part of a uniform region or an edge, ultimately generating an output image that highlights the detected edges. Despite this, given the observed accuracy improvement of 4.87% to 13.41% (as illustrated in Figure 4, Figure 5 and Figure 6), we argue that FLEDNet represents a cost-effective solution, especially in low-SNR environments. Another factor contributing to this assessment is that the time complexity analysis was executed on an embedded computer utilizing an RF receiver as the input data source. Finally, the proposed FLEDNet approach can be effectively integrated into a practical ADRO system as follows: the RF receiver scans a range of different frequency bands and sends IQ data to an FPGA module, where STFT calculations and necessary spectrogram preprocessing occur; then, the generated spectrograms feed the GPU-embedded computer, where a DL classification algorithm is implemented.
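The extra work done by a fuzzy logic E/D (two gradient filters plus a per-pixel fuzzy decision) can be illustrated with a deliberately simplified sketch. It is not the FIS of the FLEDNet approach: it assumes first-difference gradient filters, a Gaussian membership function for the fuzzy set "zero gradient" with an arbitrary width `sigma`, the minimum operator for the fuzzy AND, and no defuzzification step. All names are hypothetical.

```python
import math


def zero_membership(gradient, sigma=0.1):
    """Degree to which a gradient value belongs to the fuzzy set 'zero'."""
    return math.exp(-(gradient ** 2) / (2 * sigma ** 2))


def fuzzy_edges(image, sigma=0.1):
    """Return per-pixel edge degrees in [0, 1] for intensities in [0, 1].

    Rule: IF horizontal gradient is zero AND vertical gradient is zero
    THEN the pixel belongs to a uniform region; otherwise it is an edge.
    """
    rows, cols = len(image), len(image[0])
    edges = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Two filters: horizontal and vertical first differences.
            gx = image[r][min(c + 1, cols - 1)] - image[r][c]
            gy = image[min(r + 1, rows - 1)][c] - image[r][c]
            uniform = min(zero_membership(gx, sigma), zero_membership(gy, sigma))
            edges[r][c] = 1.0 - uniform
    return edges


step_image = [[0.0, 0.0, 1.0, 1.0]] * 3     # vertical intensity step
for row in fuzzy_edges(step_image):
    print([round(value, 2) for value in row])
```

Each pixel needs two gradient evaluations and two membership evaluations, which is consistent with the longer execution time reported for the fuzzy logic E/D compared with single-filter methods.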

6. Conclusions

This research focuses on enhancing the accuracy of convolutional neural networks for drone classification by incorporating fuzzy logic-based edge detectors into noisy radio signal spectrogram analysis. The results obtained using the proposed FLEDNet approach demonstrate that integrating a fuzzy logic-based edge detector with convolutional neural networks and convolutional recurrent neural networks significantly improves performance in the following critical scenarios: drone detection, drone-type identification, and the detection of multiple drones. Notably, the FLEDNet approach exhibits robustness against variations in the signal-to-noise ratio across Industrial, Scientific, and Medical frequency bands. Even when the signal-to-noise ratio is reduced to −10 dB, the classification accuracy remains relatively stable, marking a significant achievement. The credibility and applicability of the FLEDNet approach were further validated through comparisons with various machine learning and deep learning models, as well as the state-of-the-art edge detector DexiNed. Additionally, a time complexity analysis was conducted on NVIDIA Jetson Orin NX to support the thesis that the proposed approach is suitable for the practical implementation of an anti-drone system, primarily aimed at detecting drones and counting their numbers in sensitive areas across multiple frequency bands. While the tailored fuzzy logic edge detector is specifically designed for drone radio signals, it may encounter limitations when applied to other scenarios, especially with radio signals with different patterns in spectrograms. Nonetheless, this study is important as it highlights a notable improvement in accuracy: a 4.87% increase in drone detection, a 13.41% enhancement in drone-type identification, and a 7.26% increase in detecting multiple drones.

This research underscores the potential for validating the FLEDNet approach through outdoor experiments focused on practical anti-drone systems across different frequency ranges, which include both commercial and custom-made first-person-view drones. A promising avenue for future studies is the integration of original spectrograms with those enhanced by detected edges through feature-level fusion, along with applying morphological operations to the input spectrograms. Additionally, future research could investigate the evaluation of a priori energy detectors in conjunction with decision-level fusion, utilizing outputs from various neural networks to facilitate multimodal fusion. An especially compelling direction for upcoming studies is the practical implementation of vision transformers.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/drones9040243/s1.

Author Contributions

Conceptualization, B.S.-J.; methodology, B.B.; software, B.S.-J.; validation, I.P., M.A. and B.B.; formal analysis, M.A.; investigation, S.S.; resources, B.S.-J.; data curation, B.S.-J.; writing—original draft preparation, B.S.-J.; writing—review and editing, M.A. and B.B.; visualization, M.A. and S.S.; supervision, I.P., M.A. and B.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Ministry of Science, Technological Development and Innovation, Republic of Serbia, Contract No. 451-03-137/2025-03/200325. It has also been part of Project No. VA/TT/1/25-27, supported by the Ministry of Defence, Republic of Serbia.

Data Availability Statement

All authors certify that the datasets that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

List of Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AWGN | additive white Gaussian noise |
| ADRO | anti-drone |
| BiLSTM | Bidirectional Long Short-Term Memory |
| E/D | edge detector |
| CNN | convolutional neural network |
| CRNN | convolutional recurrent neural network |
| COD | centroid defuzzification |
| conv | convolutional |
| DL | deep learning |
| GoF | Goodness-of-Fit |
| FC-DNN | fully connected deep neural network |
| FIS | fuzzy inference system |
| FLEDNet | Fuzzy Logic Edge Detection Network |
| FSMF | fuzzy set membership function |
| HED | Holistically-Nested Edge Detection |
| HHT | Hilbert–Huang transform |
| ISM | Industrial, Scientific, and Medical |
| kNN | k-nearest Neighbors |
| LSTM | Long Short-Term Memory |
| ML | machine learning |
| MSE | Mean Square Error |
| RandF | random forest |
| RF | radio frequency |
| SAR | search and rescue |
| SDAE | stacked denoising autoencoder |
| SGDM | Stochastic Gradient Descent with Momentum |
| SNR | signal-to-noise ratio |
| STFT | Short-Time Fourier Transform |
| SVM | Support Vector Machine |
| UAV | Unmanned aerial vehicle |
| VGG | Visual Geometry Group |
| YOLO | You Only Look Once |
| w/o | without |
| 1D-CNN | one-dimensional convolutional neural network |

References

- Zhang, Z.; Zhu, L. A Review on Unmanned Aerial Vehicle Remote Sensing: Platforms, Sensors, Data Processing Methods, and Applications. Drones 2023, 7, 398. [Google Scholar] [CrossRef]

- Gupta, H.; Verma, O.P. Monitoring and Surveillance of Urban Road Traffic Using Low Altitude Drone Images: A Deep Learning Approach. Multimed. Tools Appl. 2022, 81, 19683–19703. [Google Scholar] [CrossRef]

- Tomczyk, A.M.; Ewertowski, M.W.; Creany, N.; Ancin-Murguzur, F.J.; Monz, C. The Application of Unmanned Aerial Vehicle (UAV) Surveys and GIS to the Analysis and Monitoring of Recreational Trail Conditions. Int. J. Appl. Earth Obs. Geoinf. 2023, 123, 103474. [Google Scholar] [CrossRef]

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in Agriculture: A Review and Bibliometric Analysis. Comput. Electron. Agric. 2022, 198, 107017. [Google Scholar] [CrossRef]

- Mammarella, M.; Comba, L.; Biglia, A.; Dabbene, F.; Gay, P. Cooperation of Unmanned Systems for Agricultural Applications: A Case Study in a Vineyard. Biosyst. Eng. 2022, 223, 81–102. [Google Scholar] [CrossRef]

- Choi, H.W.; Kim, H.J.; Kim, S.K.; Na, W.S. An Overview of Drone Applications in the Construction Industry. Drones 2023, 7, 515. [Google Scholar] [CrossRef]

- Betti Sorbelli, F.; Corò, F.; Das, S.K.; Palazzetti, L.; Pinotti, C.M. Greedy Algorithms for Scheduling Package Delivery with Multiple Drones. In Proceedings of the ACM International Conference Proceeding Series, Rome, Italy, 24 January 2022; pp. 31–39. [Google Scholar] [CrossRef]

- Dousai, N.M.K.; Lonearic, S. Detecting Humans in Search and Rescue Operations Based on Ensemble Learning. IEEE Access 2022, 10, 26481–26492. [Google Scholar] [CrossRef]

- Hoang, M.T.O.; Grøntved, K.A.R.; van Berkel, N.; Skov, M.B.; Christensen, A.L.; Merritt, T. Drone Swarms to Support Search and Rescue Operations: Opportunities and Challenges. In Cultural Robotics: Social Robots and Their Emergent Cultural Ecologies; Springer Series on Cultural Computing; Springer: Cham, Germany, 2023; pp. 163–176. ISBN 978-3-031-28138-9. [Google Scholar]

- Alzahrani, M.Y. Enhancing Drone Security Through Multi-Sensor Anomaly Detection and Machine Learning. SN Comput. Sci. 2024, 5, 651. [Google Scholar] [CrossRef]

- Warnakulasooriya, K.; Segev, A. Attacks, Detection, and Prevention on Commercial Drones: A Review. In Proceedings of the 3rd International Conference on Image Processing and Robotics, ICIPRoB, Colombo, Sri Lanka, 9–10 March 2024. [Google Scholar] [CrossRef]

- Mekdad, Y.; Aris, A.; Babun, L.; Fergougui, A.E.; Conti, M.; Lazzeretti, R.; Uluagac, A.S. A Survey on Security and Privacy Issues of UAVs. Comput. Netw. 2023, 224, 109626. [Google Scholar] [CrossRef]

- Hunter-Perkins, R.; Shiotani, H.; Rogers, J. Global Report on the Acquisition, Weaponization and Deployment of Unmanned Aircraft Systems by Non-State Armed Groups for Terrorism Purposes. Available online: https://www.un.org/counterterrorism (accessed on 4 November 2024).

- Haugstvedt, H. Still Aiming at the Harder Targets: An Update on Violent Non-State Actors’ Use of Armed UAVs. Perspect. Terror. 2024, 18, 132–143. [Google Scholar] [CrossRef]

- Tubis, A.A.; Poturaj, H.; Dereń, K.; Żurek, A. Risks of Drone Use in Light of Literature Studies. Sensors 2024, 24, 1205. [Google Scholar] [CrossRef] [PubMed]

- Yasmine, G.; Maha, G.; Hicham, M. Survey on Current Anti-Drone Systems: Process, Technologies, and Algorithms. Int. J. Syst. Syst. Eng. 2022, 12, 235. [Google Scholar] [CrossRef]

- Jurn, Y.N.; Mahmood, S.A.; Aldhaibani, J.A. Anti-Drone System Based Different Technologies: Architecture, 704 Threats and Challenges. In Proceedings of the 2021 11th IEEE International Conference on Control System, Computing and Engineering, ICCSCE, Penang, Malaysia, 27–28 August 2021; pp. 114–119. [Google Scholar] [CrossRef]

- Park, S.; Kim, H.T.; Lee, S.; Joo, H.; Kim, H. Survey on Anti-Drone Systems: Components, Designs, and Challenges. IEEE Access 2021, 9, 42635–42659. [Google Scholar] [CrossRef]

- Sazdić-Jotić, B.; Pokrajac, I.; Bajčetić, J.; Stefanović, N. Review of RF-Based Drone Classification: Techniques, Datasets, and Challenges. Vojnoteh. Glas. 2024, 72, 764–789. [Google Scholar] [CrossRef]

- Sazdić-Jotić, B. CUAS_Literature_DataSet. Available online: https://data.mendeley.com/datasets/6jc6w2v5nf/2 (accessed on 29 December 2024).

- Butterworth-Hayes, P. The Counter UAS Directory and Buyer’s Guide. Available online: https://www.unmannedairspace.info/counter-uas-industry-directory/ (accessed on 21 December 2024).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Sazdić-Jotić, B.M.; Pokrajac, I.; Bajčetić, J.; Bondžulić, B.P.; Joksimović, V.; Šević, T.; Obradović, D.; Sazdic-Jotic, B.M.; Pokrajac, I.; Bajcetic, J.; et al. VTI_DroneSET. Available online: https://data.mendeley.com/datasets/s6tgnnp5n2/1 (accessed on 1 November 2020).

- Basak, S.; Rajendran, S.; Pollin, S.; Scheers, B. Combined RF-Based Drone Detection and Classification. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 111–120. [Google Scholar] [CrossRef]

- Basak, S.; Rajendran, S.; Pollin, S.; Scheers, B. Drone Classification from RF Fingerprints Using Deep Residual Nets. In Proceedings of the International Conference on COMmunication Systems & NETworkS (COMSNETS), Bangalore, India, 5–9 January 2021; pp. 548–555. [Google Scholar]

- Ezuma, M.; Erden, F.; Kumar Anjinappa, C.; Ozdemir, O.; Guvenc, I. Detection and Classification of UAVs Using RF Fingerprints in the Presence of Wi-Fi and Bluetooth Interference. IEEE Open J. Commun. Soc. 2020, 1, 60–76. [Google Scholar] [CrossRef]

- Ozturk, E.; Erden, F.; Guvenc, I. RF-Based Low-SNR Classification of UAVs Using Convolutional Neural Networks. arXiv 2020, arXiv:2009.05519. [Google Scholar]

- Ezuma, M.; Erden, F.; Anjinappa, C.K.; Ozdemir, O.; Guvenc, I. Micro-UAV Detection and Classification from RF Fingerprints Using Machine Learning Techniques. In Proceedings of the 2019 IEEE Aerospace Conference, IEEE, Big Sky, MT, USA, 2–9 March 2019; pp. 1–13. [Google Scholar] [CrossRef]

- Noh, D.-I.; Jeong, S.G.; Hoang, H.T.; Pham, Q.V.; Huynh-The, T.; Hasegawa, M.; Sekiya, H.; Kwon, S.Y.; Chung, S.H.; Hwang, W.J. Signal Preprocessing Technique With Noise-Tolerant for RF-Based UAV Signal Classification. IEEE Access 2022, 10, 134785–134798. [Google Scholar] [CrossRef]

- Medaiyese, O.O.; Ezuma, M.; Lauf, A.P.; Adeniran, A.A. Hierarchical Learning Framework for UAV Detection and Identification. IEEE J. Radio Freq. Identif. 2022, 6, 176–188. [Google Scholar] [CrossRef]

- Glüge, S.; Nyfeler, M.; Aghaebrahimian, A.; Ramagnano, N.; Schüpbach, C. Robust Low-Cost Drone Detection and Classification Using Convolutional Neural Networks in Low SNR Environments. IEEE J. Radio Freq. Identif. 2024, 8, 821–830. [Google Scholar] [CrossRef]

- Muntarina, K.; Shorif, S.B.; Uddin, M.S. Notes on Edge Detection Approaches. Evol. Syst. 2022, 13, 169–182. [Google Scholar] [CrossRef]

- Zadeh, L.A. Fuzzy Sets. Inf. Control 1965, 8, 338–353. [Google Scholar] [CrossRef]

- Azimirad, E. Design of an Optimized Fuzzy System for Edge Detection in Images. J. Intell. Fuzzy Syst. 2022, 43, 2363–2373. [Google Scholar] [CrossRef]

- Shrivastav, U.; Singh, S.K.; Khamparia, A. A Novel Approach to Detect Edge in Digital Image Using Fuzzy Logic. In First International Conference on Sustainable Technologies for Computational Intelligence; Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; Volume 1045, pp. 63–74. ISBN 9789811500282. [Google Scholar]

- Raheja, S.; Kumar, A. Edge Detection Based on Type-1 Fuzzy Logic and Guided Smoothening. Evol. Syst. 2021, 12, 447–462. [Google Scholar] [CrossRef]

- Sadjadi, E.N.; Sadrian Zadeh, D.; Moshiri, B.; García Herrero, J.; Molina López, J.M.; Fernández, R. Application of Smooth Fuzzy Model in Image Denoising and Edge Detection. Mathematics 2022, 10, 2421. [Google Scholar] [CrossRef]

- Orujov, F.; Maskeliūnas, R.; Damaševičius, R.; Wei, W. Fuzzy Based Image Edge Detection Algorithm for Blood Vessel Detection in Retinal Images. Appl. Soft Comput. 2020, 94, 106452. [Google Scholar] [CrossRef]

- Setiadi, D.R.I.M.; Rustad, S.; Andono, P.N.; Shidik, G.F. Graded Fuzzy Edge Detection for Imperceptibility Optimization of Image Steganography. Imaging Sci. J. 2024, 72, 693–705. [Google Scholar] [CrossRef]

- Kumawat, A.; Panda, S. A Robust Edge Detection Algorithm Based on Feature-Based Image Registration (FBIR) Using Improved Canny with Fuzzy Logic (ICWFL). Vis. Comput. 2022, 38, 3681–3702. [Google Scholar] [CrossRef]

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698. [Google Scholar] [CrossRef]

- Kapur, J.N.; Sahoo, P.K.; Wong, A.K.C. A New Method for Gray-Level Picture Thresholding Using the Entropy of the Histogram. Comput. Vis. Graph. Image Process. 1985, 29, 273–285. [Google Scholar] [CrossRef]

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Deng, R.; Liu, S. Deep Structural Contour Detection. In Proceedings of the 28th ACM International Conference on Multimedia, ACM, New York, NY, USA, 12 October 2020; pp. 304–312. [Google Scholar] [CrossRef]

- He, J.; Zhang, S.; Yang, M.; Shan, Y.; Huang, T. BDCN: Bi-Directional Cascade Network for Perceptual Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 100–113. [Google Scholar] [CrossRef] [PubMed]

- Kelm, A.P.; Rao, V.S.; Zölzer, U. Object Contour and Edge Detection with Refine ContourNet. In Computer Analysis of Images and Patterns; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11678 LNCS, pp. 246–258. ISBN 9783030298876. [Google Scholar]

- Pu, M.; Huang, Y.; Guan, Q.; Ling, H. RINDNet: Edge Detection for Discontinuity in Reflectance, Illumination, Normal and Depth. arXiv 2021, arXiv:2108.00616. [Google Scholar]

- Soria, X.; Riba, E.; Sappa, A. Dense Extreme Inception Network: Towards a Robust CNN Model for Edge Detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass, CO, USA, 1–5 March 2020; pp. 1912–1921. [Google Scholar]

- Pu, M.; Huang, Y.; Liu, Y.; Guan, Q.; Ling, H. EDTER: Edge Detection with Transformer. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; Volume 2022, pp. 1392–1402. [Google Scholar]

- Sivapriya, M.S.; Suresh, S. ViT-DexiNet: A Vision Transformer-Based Edge Detection Operator for Small Object Detection in SAR Images. Int. J. Remote Sens. 2023, 44, 7057–7084. [Google Scholar] [CrossRef]

- Jie, J.; Guo, Y.; Wu, G.; Wu, J.; Hua, B. EdgeNAT: Transformer for Efficient Edge Detection. arXiv 2024, arXiv:2408.10527. [Google Scholar]

- Xia, B.; Mantegh, I.; Xie, W. UAV Multi-Dynamic Target Interception: A Hybrid Intelligent Method Using Deep Reinforcement Learning and Fuzzy Logic. Drones 2024, 8, 226. [Google Scholar] [CrossRef]

- Xia, B.; Mantegh, I.; Xie, W.F. Hybrid Framework for UAV Motion Planning and Obstacle Avoidance: Integrating Deep Reinforcement Learning with Fuzzy Logic. In Proceedings of the 10th 2024 International Conference on Control, Decision and Information Technologies, CoDIT, Valletta, Malta, 1–4 July 2024; pp. 2662–2669. [Google Scholar] [CrossRef]

- Munadi, M.; Radityo, B.; Ariyanto, M.; Taniai, Y. Automated Guided Vehicle (AGV) Lane-Keeping Assist Based on Computer Vision, and Fuzzy Logic Control under Varying Light Intensity. Results Eng. 2024, 21, 101678. [Google Scholar] [CrossRef]

- Al-Sa’d, M.F.; Al-Ali, A.; Mohamed, A.; Khattab, T.; Erbad, A. RF-Based Drone Detection and Identification Using Deep Learning Approaches: An Initiative towards a Large Open Source Drone Database. Future Gener. Comput. Syst. 2019, 100, 86–97. [Google Scholar] [CrossRef]

- Allahham, M.S.; Khattab, T.; Mohamed, A. Deep Learning for RF-Based Drone Detection and Identification: A Multi-Channel 1-D Convolutional Neural Networks Approach. In Proceedings of the IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), IEEE, Doha, Qatar, 2–5 February 2020; pp. 112–117. [Google Scholar]

- Yang, S.; Luo, Y.; Miao, W.; Ge, C.; Sun, W.; Luo, C. RF Signal-Based UAV Detection and Mode Classification: A Joint Feature Engineering Generator and Multi-Channel Deep Neural Network Approach. Entropy 2021, 23, 1678. [Google Scholar] [CrossRef]

- Sazdić-Jotić, B.; Pokrajac, I.; Bajčetić, J.; Bondžulić, B.; Obradović, D. Single and Multiple Drones Detection and Identification Using RF Based Deep Learning Algorithm. Expert Syst. Appl. 2022, 187, 115928. [Google Scholar] [CrossRef]

- Basak, S.; Rajendran, S.; Pollin, S.; Scheers, B. Autoencoder Based Framework for Drone RF Signal Classification and Novelty Detection. In Proceedings of the International Conference on Advanced Communication Technology, ICACT, Institute of Electrical and Electronics Engineers Inc., Pyeongchang, Republic of Korea, 19–22 February 2023; Volume 2023, pp. 218–225. [Google Scholar]

- Al-Emadi, S.; Al-Senaid, F. Drone Detection Approach Based on Radio-Frequency Using Convolutional Neural Network. In Proceedings of the IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), IEEE, Doha, Qatar, 2–5 February 2020; pp. 29–34. [Google Scholar]

- Mokhtari, M.; Bajčetić, J.; Sazdić-Jotić, B.; Pavlović, B. RF-Based Drone Detection and Classification System Using Convolutional Neural Network. In Proceedings of the 29th Telecommunications Forum (TELFOR), IEEE, Belgrade, Serbia, 23–24 November 2021; pp. 1–4. [Google Scholar]

- Alam, S.S.; Chakma, A.; Rahman, M.H.; Bin Mofidul, R.; Alam, M.M.; Utama, I.B.K.Y.; Jang, Y.M. RF-Enabled Deep-Learning-Assisted Drone Detection and Identification: An End-to-End Approach. Sensors 2023, 23, 4202. [Google Scholar] [CrossRef] [PubMed]

- Yan, X.; Han, B.; Su, Z.; Hao, J. SignalFormer: Hybrid Transformer for Automatic Drone Identification Based on Drone RF Signals. Sensors 2023, 23, 9098. [Google Scholar] [CrossRef] [PubMed]

- Huang, R.; Pedoeem, J.; Chen, C. YOLO-LITE: A Real-Time Object Detection Algorithm Optimized for Non-GPU Computers. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2503–2510. [Google Scholar] [CrossRef]

- Sussillo, D.; Abbott, L.F. Random Walk Initialization for Training Very Deep Feedforward Networks. arXiv 2014, arXiv:1412.6558. [Google Scholar]

- Šević, T.; Joksimović, V.; Pokrajac, I.; Radiana, B.; Sazdić-Jotić, B.; Obradović, D. Interception and Detection of Drones Using RF-Based Dataset of Drones. Sci. Tech. Rev. 2020, 70, 29–34. [Google Scholar] [CrossRef]

- Bujaković, D.; Andrić, M.; Bondžulić, B.; Mitrović, S.; Simić, S. Time–Frequency Distribution Analyses of Ku-Band Radar Doppler Echo Signals. Frequenz 2015, 69, 119–128. [Google Scholar] [CrossRef]

- Van Leekwijck, W.; Kerre, E.E. Defuzzification: Criteria and Classification. Fuzzy Sets Syst. 1999, 108, 159–178. [Google Scholar] [CrossRef]

- Gao, J.; Li, L.; Ren, X.; Chen, Q.; Abdul-Abbass, Y.M. An Effective Method for Salt and Pepper Noise Removal Based on Algebra and Fuzzy Logic Function. Multimed. Tools Appl. 2024, 83, 9547–9576. [Google Scholar] [CrossRef]

- Kushwaha, S. An Effective Adaptive Fuzzy Filter for Speckle Noise Reduction. Multimed. Tools Appl. 2024, 83, 34963–34978. [Google Scholar] [CrossRef]

- Yin, X.X.; Hadjiloucas, S. Digital Filtering Techniques Using Fuzzy-Rules Based Logic Control. J. Imaging 2023, 9, 208. [Google Scholar] [CrossRef]

- Xie, S.; Tu, Z. Holistically-Nested Edge Detection. Int. J. Comput. Vis. 2017, 125, 3–18. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–14. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).