Responses of Artificial Intelligence Chatbots to Testosterone Replacement Therapy: Patients Beware!

Abstract

1. Introduction

2. Materials and Methods

2.1. Selection of Chatbots

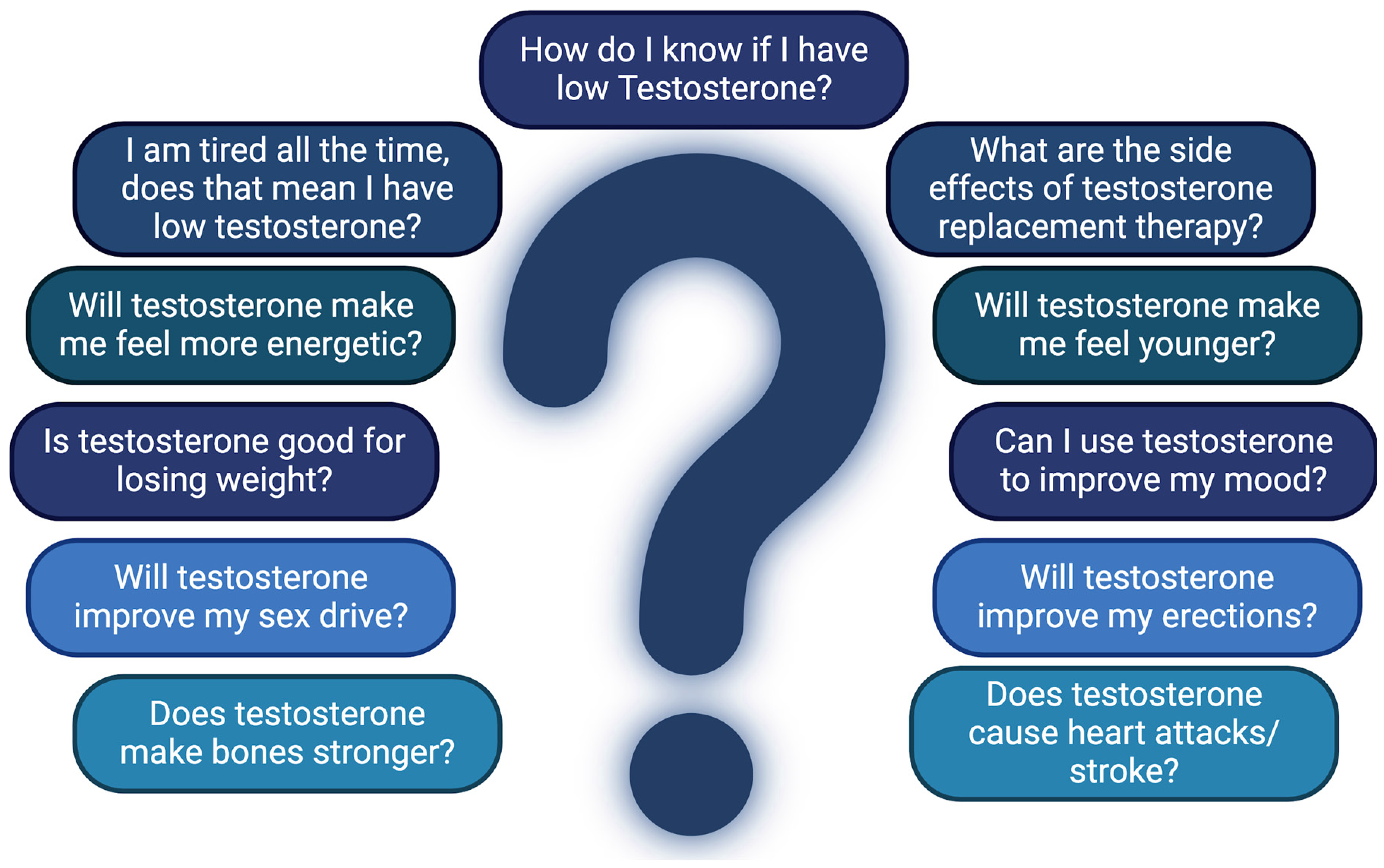

2.2. Question Source

2.3. Quality and Readability Analysis

2.4. Statistical Analysis

2.5. Ethical Clearance

3. Results

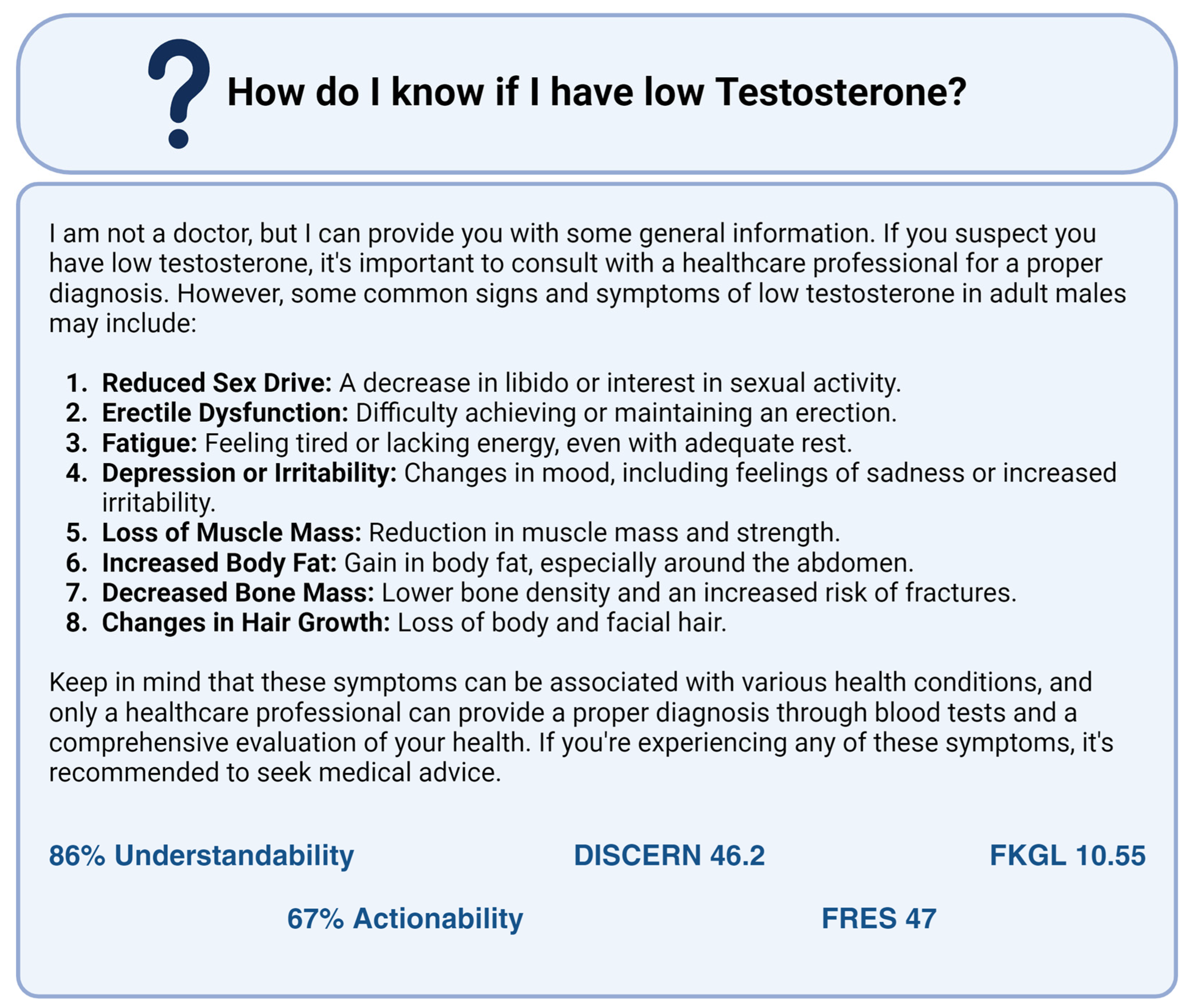

3.1. Quality of Information

3.2. Understandability and Actionability of Information

3.3. Readability

4. Discussion

4.1. Clinical Implications

4.2. Future Development

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hesse, B.W.; Nelson, D.E.; Kreps, G.L.; Croyle, R.T.; Arora, N.K.; Rimer, B.K.; Viswanath, K. Trust and sources of health information: The impact of the Internet and its implications for health care providers: Findings from the first Health Information National Trends Survey. Arch. Intern. Med. 2005, 165, 2618–2624. [Google Scholar] [CrossRef] [PubMed]

- Calixte, R.; Rivera, A.; Oridota, O.; Beauchamp, W.; Camacho-Rivera, M. Social and demographic patterns of health-related Internet use among adults in the United States: A secondary data analysis of the health information national trends survey. Int. J. Environ. Res. Public Health 2020, 17, 6856. [Google Scholar] [CrossRef] [PubMed]

- Fahy, E.; Hardikar, R.; Fox, A.; Mackay, S. Quality of patient health information on the Internet: Reviewing a complex and evolving landscape. Australas. Med. J. 2014, 7, 24–28. [Google Scholar] [CrossRef] [PubMed]

- Floridi, L.; Chiriatti, M. GPT-3: Its nature, scope, limits, and consequences. Minds Mach. 2020, 30, 681–694. [Google Scholar] [CrossRef]

- Caldarini, G.; Jaf, S.; McGarry, K. A literature survey of recent advances in chatbots. Information 2022, 13, 41. [Google Scholar] [CrossRef]

- Khanna, A.; Pandey, B.; Vashishta, K.; Kalia, K.; Pradeepkumar, B.; Das, T. A study of today’s AI through chatbots and rediscovery of machine intelligence. Int. J. U-E-Serv. Sci. Technol. 2015, 8, 277–284. [Google Scholar]

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006. [Google Scholar] [CrossRef]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.; Chen, D.; Dai, W.; et al. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38. [Google Scholar] [CrossRef]

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.-S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. Ethical and social risks of harm from language models. arXiv 2021, arXiv:2112.04359. [Google Scholar]

- Alkaissi, H.; McFarlane, S.I. Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus 2023, 15, e35179. [Google Scholar] [CrossRef]

- Gilbert, K.; Cimmino, C.B.; Beebe, L.C.; Mehta, A. Gaps in patient knowledge about risks and benefits of testosterone replacement therapy. Urology 2017, 103, 27–33. [Google Scholar] [CrossRef] [PubMed]

- Discern Online—The Discern Instrument. Available online: http://www.discern.org.uk (accessed on 25 January 2024).

- Agency for Healthcare Research and Quality. The Patient Education Materials Assessment Tool (PEMAT) and User’s Guide. October 2013. Updated November 2020. Available online: https://www.ahrq.gov/health-literacy/patient-education/pemat-p.html (accessed on 25 January 2024).

- Weil, A.G.; Bojanowski, M.W.; Jamart, J.; Gustin, T.; Lévêque, M. Evaluation of the quality of information on the Internet available to patients undergoing cervical spine surgery. World Neurosurg. 2014, 82, e31–e39. [Google Scholar] [CrossRef] [PubMed]

- Readability Formulas—Readability Scoring System. Available online: https://readabilityformulas.com/readability-scoring-system.php#formulaResults (accessed on 29 January 2024).

- Liu, J.; Wang, C.; Liu, S. Utility of ChatGPT in clinical practice. J. Med. Internet Res. 2023, 25, e48568. [Google Scholar] [CrossRef] [PubMed]

- Musheyev, D.; Pan, A.; Loeb, S.; Kabarriti, A.E. How well do artificial intelligence chatbots respond to the top search queries about urological malignancies? Eur. Urol. 2024, 85, 13–16. [Google Scholar] [CrossRef] [PubMed]

- Johnson, S.B.; King, A.J.; Warner, E.L.; Aneja, S.; Kann, B.H.; Bylund, C.L. Using ChatGPT to evaluate cancer myths and misconceptions: Artificial intelligence and cancer information. JNCI Cancer Spectr. 2023, 7, pkad015. [Google Scholar] [CrossRef]

- Seth, I.; Lim, B.; Xie, Y.; Cevik, J.; Rozen, W.M.; Ross, R.J.; Lee, M. Comparing the efficacy of large language models ChatGPT, BARD, and Bing AI in providing information on rhinoplasty: An observational study. Aesthetic Surg. J. Open Forum 2023, 5, ojad084. [Google Scholar] [CrossRef] [PubMed]

- Pan, A.; Musheyev, D.; Bockelman, D.; Loeb, S.; Kabarriti, A.E. Assessment of artificial intelligence chatbot responses to top searched queries about cancer. JAMA Oncol. 2023, 9, 1437–1440. [Google Scholar] [CrossRef] [PubMed]

- Weiss, B.D. Health literacy. Am. Med. Assoc. 2003, 253, 358. [Google Scholar]

- Pividori, M. Chatbots in Science: What Can ChatGPT Do for You? Available online: https://www.nature.com/articles/d41586-024-02630-z (accessed on 4 December 2024).

| TOOL | AI CHATBOT | |||

|---|---|---|---|---|

| Bing Chat | ChatGPT | Google Bard | Perplexity AI | |

| DISCERN 1 | 40 (38–44) | 46.2 (43–49) | 56.5 (54–58) | 48.5 (42–53) |

| PEMAT Understandability 2 | 57% (50–60%) | 86% (83–91%) | 96% (86–100%) | 74% (66–73%) |

| PEMAT Actionability 2 | 40% (20–60%) | 67% (60–80%) | 74% (60–83.3%) | 40% (40–40%) |

| FRES 3 | 39.3 (27–62) | 25.1 (11–47) | 32.1 (16–52) | 41.9 (28–69) |

| FKGL 4 | 12.3 (7–14.9) | 14.9 (10.5–17.6) | 12.7 (9.6–16.2) | 10.8 (5.6–14.7) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Published by MDPI on behalf of the Société Internationale d’Urologie. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pabla, H.; Lange, A.; Nadiminty, N.; Sindhwani, P. Responses of Artificial Intelligence Chatbots to Testosterone Replacement Therapy: Patients Beware! Soc. Int. Urol. J. 2025, 6, 13. https://doi.org/10.3390/siuj6010013

Pabla H, Lange A, Nadiminty N, Sindhwani P. Responses of Artificial Intelligence Chatbots to Testosterone Replacement Therapy: Patients Beware! Société Internationale d’Urologie Journal. 2025; 6(1):13. https://doi.org/10.3390/siuj6010013

Chicago/Turabian StylePabla, Herleen, Alyssa Lange, Nagalakshmi Nadiminty, and Puneet Sindhwani. 2025. "Responses of Artificial Intelligence Chatbots to Testosterone Replacement Therapy: Patients Beware!" Société Internationale d’Urologie Journal 6, no. 1: 13. https://doi.org/10.3390/siuj6010013

APA StylePabla, H., Lange, A., Nadiminty, N., & Sindhwani, P. (2025). Responses of Artificial Intelligence Chatbots to Testosterone Replacement Therapy: Patients Beware! Société Internationale d’Urologie Journal, 6(1), 13. https://doi.org/10.3390/siuj6010013