Abstract

The development of science and technology constantly injects new vitality into dance performance and creation. Among these technologies, three-dimensional (3D) vision technology provides novel ideas for innovation and artistry in dance performance. It expands the forms of dance performance and the ways dance works are presented, and it brings audiences a brand-new viewing experience. Today, 3D vision technology has been widely researched and applied in dance. This review presents the background of applying 3D vision technology in dance and analyzes the main types of 3D vision technology and their working principles. It then summarizes research and applications of 3D vision technology in dance creation, perception, enhancement, and teaching, and finally discusses the prospects for 3D vision technology in dance.

1. Introduction

Dance plays a vital role in human culture as a form of artistic expression. Traditional means of communicating dance and traditional performance forms are relatively limited, and efforts to enhance the performance experience are constrained by costly and complex stage design and layout. With the continuous development of science and technology, people have become more interested in diverse dance content and forms of expression. Numerous dance performances based on new technical means have recently emerged, bringing new audiovisual experiences to dancers and audiences.

Realizing 3D vision with the help of science and technology is an emerging design trend in the dance field. By simulating the 3D space of the real world, dance works can be presented with a more realistic, stereoscopic visual effect. Three-dimensional vision technology breaks through the limitations of the traditional stage and offers dancers more creative inspiration and means of expression. This helps them create more innovative and compelling works that convey emotions and stories more effectively. The technology can also give the audience a more realistic, striking, and immersive viewing experience. Audiences can feel the stereoscopic space and dynamic beauty of dance works, which deepens their appreciation and understanding of dance as an art. In dance teaching, 3D vision technology allows students to observe and learn the details and techniques of dance movements more intuitively. This improves the efficiency and quality of learning and stimulates students' interest and enthusiasm. Three-dimensional vision technology therefore has broad application prospects and practical value in dance creation, performance, teaching, and dissemination, and it offers new possibilities for the innovative development and popularization of dance art.

This review introduces the perception mechanisms of 3D vision and the main types of 3D vision technology. It then summarizes research on and applications of 3D vision and its extended technologies, virtual reality (VR) and augmented reality (AR), in dance, and finally discusses the prospects of 3D vision technology in dance.

2. Three-Dimensional Vision Technology

As a technique for presenting a scene or image in stereoscopic form before the viewer's eyes, 3D vision technology gives people a more realistic visual experience. It is widely applied in dance, singing, film, games, medical imaging, education, and other fields. Its development can be traced back to the 19th century, when people began trying to produce 3D effects using optical principles. In 1838, Charles Wheatstone invented the first stereoscopic picture viewer, the stereoscope, which realized 3D vision for the first time using the principle of binocular parallax [1]. In the late 20th century, 3D vision technology developed rapidly as computing and graphics processing capability continued to improve. In the 1980s, commercial computer graphics software such as Autodesk's AutoCAD appeared, laying the foundation for later 3D vision technology [2].

2.1. Three-Dimensional Vision

Three-dimensional imaging is based on visuospatial perception, which results from the joint action of the eyes and the brain. It is the perception of spatial relationships, such as relative position, direction, and distance, both between objects and between objects and the observer. Spatial perception mainly involves depth, height, and width. Of these, the ability to perceive the depth of a scene is what is meant by 3D vision. The visual system produces a stereoscopic sensation mainly through binocular parallax, motion parallax, visual accommodation, vergence, and psychological cues. It estimates the distance and depth of an object by comparing differences between images.

2.1.1. Binocular Parallax

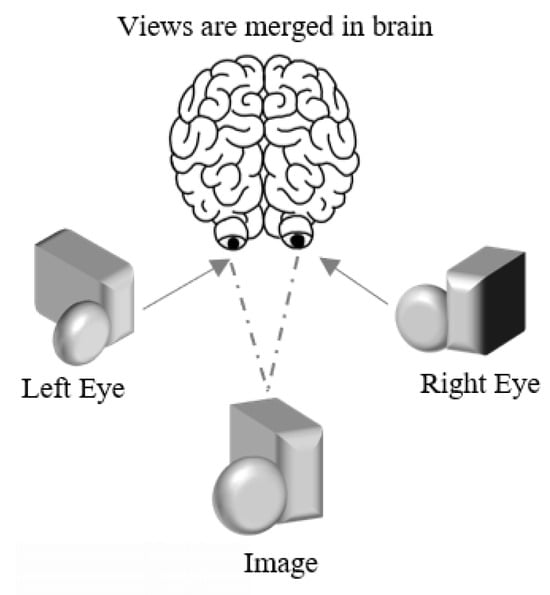

Binocular parallax is the most prominent depth perception mechanism. As shown in Figure 1, it arises because the two eyes are separated by a certain distance (about 65 mm). When we look at an object, light enters the left and right eyes from different angles, so the object forms a pair of slightly different images on the two retinas. These differences carry the object's depth information [3,4]. The images are transmitted to the brain as nerve impulses, and the brain compares them and estimates the object's distance from their differences, ultimately producing stereoscopic vision [5,6].

Figure 1.

Schematic diagram of parallax principle.
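The way image differences encode distance can be made concrete with the standard triangulation relation for a rectified stereo camera pair, Z = f·B/d. Machine stereo vision uses this relation as an analogue of binocular parallax. The following is a minimal sketch that assumes a pinhole model with focal length f in pixels, a baseline B set to the ~65 mm eye separation, and disparity d in pixels. The focal length and disparities are illustrative assumptions, not physiological measurements.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z (metres) of a point seen by a rectified stereo pair.

    Similar triangles give Z = f * B / d: the larger the disparity, the nearer the point.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


EYE_BASELINE_M = 0.065   # ~65 mm separation, as in the text
FOCAL_PX = 1000.0        # hypothetical focal length in pixels

for d in (130.0, 65.0, 13.0):
    z = depth_from_disparity(FOCAL_PX, EYE_BASELINE_M, d)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

Because Z is inversely proportional to d, a given disparity error matters far more for distant points than for near ones. This helps explain why binocular depth cues weaken with distance.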

2.1.2. Motion Parallax

Motion parallax is the mechanism by which 3D vision can be formed with only one eye. As shown in Figure 2, when the observer's eye moves relative to the background and objects, the relative positions of the objects in the retinal image change. From these changes, the brain perceives the relative depth of the objects. This process is equivalent to observing the target from multiple directions and angles and building a 3D spatial model in the brain to perceive depth.

Figure 2.

Schematic diagram of motion parallax.
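A single moving eye can recover relative depth because nearer objects sweep across the retina faster. Consider a stationary point directly abeam of an observer moving sideways at speed v. Its angular speed is ω = v/Z, where Z is its distance. The minimal sketch below uses hypothetical distances and walking speed. It shows that the ratio of two angular speeds gives the relative depth of two objects even when v is unknown.

```python
import math


def angular_speed(observer_speed: float, distance: float) -> float:
    """Angular speed (rad/s) of a stationary point directly abeam of an observer
    moving sideways at observer_speed (m/s): omega = v / Z."""
    return observer_speed / distance


def relative_depth(omega_near: float, omega_far: float) -> float:
    """Depth ratio Z_far / Z_near from the two angular speeds; the observer's speed cancels."""
    return omega_near / omega_far


v = 1.2  # walking pace in m/s (assumed)
objects = {"dancer": 3.0, "backdrop": 12.0}  # hypothetical distances in metres
speeds = {name: angular_speed(v, z) for name, z in objects.items()}
for name, w in speeds.items():
    print(f"{name}: {math.degrees(w):.1f} deg/s")
print("backdrop/dancer depth ratio:", relative_depth(speeds["dancer"], speeds["backdrop"]))
```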

2.1.3. Visual Accommodation

Visual accommodation refers to the active focusing of the eye. To view objects, or parts of objects, at different depths, the eye precisely adjusts the focus of its lens through the contraction and relaxation of the ciliary muscles that control it. The lens focuses light onto the retina, where it is converted into nerve impulses. These impulses are transmitted to the visual centers of the brain to form vision and images. During this process, information about the state of these muscles is fed back to the brain and contributes to the perception of depth, even when objects are viewed with one eye.
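The amount of focusing can be expressed in diopters (inverse metres). The following minimal sketch models the eye as a single thin lens with a fixed, air-equivalent lens-to-retina distance v of about 17 mm. This simplified value is assumed here, not taken from the text. Focusing on an object at distance u then needs total power P = 1/u + 1/v. The extra power beyond relaxed focus at infinity, 1/u, is the accommodation demand.

```python
import math

RETINA_DISTANCE_M = 0.017  # simplified thin-lens eye, air-equivalent image distance (assumed)


def required_power(object_distance_m: float) -> float:
    """Total optical power (diopters) that focuses an object onto the retina, thin-lens model."""
    return 1.0 / object_distance_m + 1.0 / RETINA_DISTANCE_M


def accommodation_demand(object_distance_m: float) -> float:
    """Extra power relative to relaxed focus at infinity (1 / math.inf == 0)."""
    return required_power(object_distance_m) - required_power(math.inf)


for d in (0.25, 1.0, 6.0):
    print(f"object at {d} m -> accommodate {accommodation_demand(d):.2f} D")
```

The change in lens state that the brain receives as feedback is therefore large for near objects and almost vanishes beyond a few metres.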

2.1.4. Vergence

Vergence is an eye movement in which the extraocular muscles rotate both eyes so that their visual axes converge on the same point in space. As with accommodation, information about the movement of the extraocular muscles is fed back to the brain, which uses it to perceive the depth of an object. In natural viewing, vergence and accommodation are coupled and directed at the same point. Stereoscopic displays, however, can decouple them, which produces the accommodation–vergence conflict. The eyes converge on a virtual image point that usually lies in front of or behind the screen, while they must accommodate on the screen itself. In 3D displays, this conflict readily leads to visual fatigue.
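The conflict can be quantified with two quantities. For a point straight ahead at distance d, with interpupillary distance IPD, the vergence angle is θ = 2·atan(IPD/(2d)). The accommodation demand is 1/d diopters. On a stereoscopic display, the eyes converge at the virtual point but focus on the screen. The mismatch |1/d_screen − 1/d_virtual| in diopters is a common way to express the conflict. The following is a minimal sketch with hypothetical viewing distances.

```python
import math

IPD_M = 0.065  # interpupillary distance, ~65 mm


def vergence_angle_deg(distance_m: float) -> float:
    """Angle between the two visual axes when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan(IPD_M / (2.0 * distance_m)))


def conflict_diopters(screen_m: float, virtual_m: float) -> float:
    """Accommodation-vergence mismatch: eyes focus on the screen but converge on the virtual point."""
    return abs(1.0 / screen_m - 1.0 / virtual_m)


screen = 2.0  # viewer-to-screen distance in metres (assumed)
for virtual in (1.0, 2.0, 4.0):  # virtual image in front of, on, and behind the screen
    print(f"virtual at {virtual} m: vergence {vergence_angle_deg(virtual):.2f} deg, "
          f"conflict {conflict_diopters(screen, virtual):.2f} D")
```

The mismatch vanishes only when the virtual point lies on the screen plane. Content that pops far out of or recedes deep behind the screen therefore tends to strain the coupling more.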

2.1.5. Psychological Cues

Through life experience, the brain can also be tricked into perceiving depth by pseudo-3D effects that contain no actual depth information. For example, shadows, textures, relative sizes, overlaps, blurring, and haze are 2D information. After processing by the human visual system, however, they allow the depth of a scene to be judged from experience.

2.2. Three-Dimensional Vision Technology Types

The development of display technology gives people more tools for observing objects. Technologies for realizing 3D vision are diverse. As illustrated in Figure 3, they can be divided into three levels according to how realistically and completely they reproduce 3D vision. The first level is pseudo-3D vision, based on traditional 2D display technology. It mainly exploits psychological cues, with the help of projection and special display systems, to make observers perceive depth; however, this is not true 3D vision. The second level is traditional 3D vision technology based on binocular parallax and vergence. It has been widely researched and applied and can produce strong 3D visual effects. However, because of the accommodation–vergence conflict, observers are prone to dizziness and fatigue during prolonged use. Moreover, it cannot provide motion parallax, so it still falls short of true 3D vision. The third level adds motion parallax and visual accommodation to binocular parallax. This allows more of the light field to be reconstructed and achieves more realistic 3D visual effects [7].

Figure 3.

Three levels of 3D vision technology.

2.2.1. Pseudo-Three-Dimensional Vision Technology

Real 3D vision technology offers multiple viewpoints, correct reproduction of physical occlusion, and depth perception, whereas pseudo-3D vision technology mainly simulates the 3D effect by optical means. A typical pseudo-3D vision technology is pseudo-holographic display. Unlike true holography, it does not record and reconstruct the light field of the object. Instead, it arranges multiple 2D images at different distances to create a 3D illusion. This type of pseudo-3D vision is typically produced using the Pepper's ghost technique combined with projection. As shown in Figure 4, pre-prepared content is projected onto a special optical medium (e.g., holographic projection film, water mist, or transparent glass). Because the medium both reflects and transmits light, the observer sees the virtual image and the real scene on stage at the same time. In addition, some shopping malls feature large naked-eye 3D screens. These rely mainly on specially designed video content to create the visual illusion of objects extending beyond the boundaries of the screen or building.

Figure 4.

Schematic diagram of pseudo-holographic technology application in stage.
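Geometrically, the Pepper's ghost arrangement is a plane-mirror reflection. The audience sees a virtual image of the hidden source at its mirror-symmetric position on the far side of the tilted glass. The following minimal sketch reflects a point across a plane. The stage coordinates are assumptions for illustration only: y points toward the stage, z points up, the glass passes through the origin at 45°, and the source is 1 m below the glass pivot.

```python
import math

Vec = tuple[float, float, float]


def reflect_point(p: Vec, plane_point: Vec, normal: Vec) -> Vec:
    """Mirror image of p across the plane through plane_point with the given normal."""
    length = math.sqrt(sum(c * c for c in normal))
    n = tuple(c / length for c in normal)  # unit normal
    # Signed distance from the plane, then step twice that distance back through it.
    dist = sum((pc - qc) * nc for pc, qc, nc in zip(p, plane_point, n))
    return tuple(pc - 2.0 * dist * nc for pc, nc in zip(p, n))


# Hypothetical stage: glass through the origin tilted 45 deg (plane y + z = 0),
# audience at negative y, projected source hidden 1 m below the glass pivot.
source = (0.0, 0.0, -1.0)
ghost = reflect_point(source, (0.0, 0.0, 0.0), (0.0, 1.0, 1.0))
print("virtual image appears at", ghost)  # at stage level, behind the glass
```

Because the ghost's position is fixed by this geometry, the illusion holds only from viewpoints where the glass actually lies between the observer and the virtual image. This is consistent with the restricted viewing positions noted below.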

Because pseudo-3D vision technology conveys only a limited amount of depth information, the observer must stand at a particular angle or position to perceive the 3D effect. Nevertheless, its mature technology and low cost have led to its wide use in dance, stage design, and commercial display.

2.2.2. Traditional Three-Dimensional Vision Technology

Traditional 3D vision technology is categorized into glasses-type 3D vision technology and naked-eye 3D vision technology according to whether specialized glasses are required [8].

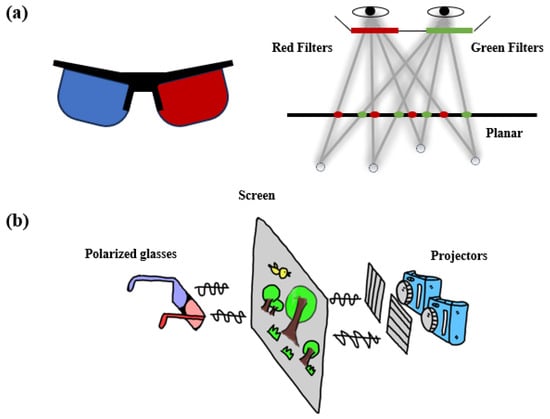

In glasses-type 3D technology, special glasses process the light so that the viewer's two eyes see different images, and the resulting parallax tricks the eyes into perceiving a 3D effect. Glasses-type 3D technology dominates the current market because it is simple and inexpensive. The most common types are color-multiplexed, time-multiplexed, and polarization-multiplexed stereo imaging.

In color-multiplexed technology (also known as the anaglyph technique), the image is specially processed so that the dominant colors of the left and right images are red and cyan, respectively. Viewers wear glasses with filters in nearly complementary colors, such as red and cyan (or blue). As shown in Figure 5a, colors are reproduced by combining the red component of one eye's view with the green and blue components of the other eye's view. Because of its simple imaging principle and low cost, color-multiplexed stereo imaging was widely used in the early days. However, each filter blocks light of the other colors, so each eye perceives only part of the color spectrum, which leads to substantial information loss. Moreover, the edges of the picture are prone to color fringing. Hence, compared with other stereo imaging technologies, it gives a poor 3D effect and cannot be applied on a large scale [9].

Figure 5.

(a) Two-color stereoscopic imaging glasses and the schematic diagram of its principle; (b) schematic diagram of polarized stereoscopic film.
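The channel mixing described for anaglyphs can be written directly. The output takes its red channel from the left-eye view and its green and blue (cyan) channels from the right-eye view. This is a minimal sketch of the simplest red–cyan anaglyph, assuming same-sized images stored as nested lists of (R, G, B) tuples. Production pipelines often use optimized mixing matrices to reduce rivalry and ghosting, and those are not shown.

```python
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def red_cyan_anaglyph(left: Image, right: Image) -> Image:
    """Combine a stereo pair for red (left eye) / cyan (right eye) glasses."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right views must have the same size")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(left_row, right_row)]  # R from left, G+B from right
        for left_row, right_row in zip(left, right)
    ]


left_view = [[(200, 10, 20), (0, 0, 0)]]
right_view = [[(5, 100, 150), (255, 255, 255)]]
print(red_cyan_anaglyph(left_view, right_view))  # [[(200, 100, 150), (0, 255, 255)]]
```

The sketch also makes the information loss visible. The left eye never receives the green and blue content of its own view, and the right eye never receives the red.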

Time-multiplexed stereo imaging technology relies on the ability of the human visual system to merge the two components of a stereo pair presented within about 50 ms. Time-multiplexed displays exploit this "memory effect" by alternating the left- and right-eye views rapidly. An LCD shutter, driven by a synchronization signal, opens for one eye while blocking the other. The shutters are typically integrated into a pair of glasses and controlled via an infrared link. Both images are reproduced by a single monitor or projector at full spatial resolution, which avoids geometric and color differences between them. Both shutters switch to transparent when the observer leaves the screen, and the time-multiplexed display is fully compatible with two-dimensional presentations. This technology gives good stereoscopic imaging without loss of color. However, the glasses are structurally more complex, the playback equipment is more costly, and the brain must continually fuse the alternating images, which tends to fatigue viewers. Also, because each eye sees only every other frame, the shutter operation can cause visible flicker [10].
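A quick timing check shows why frame-sequential stereo needs fast displays. When the left and right views alternate, each eye receives half the display's refresh rate, and the two halves of a stereo pair are one display frame apart. The following minimal sketch compares that lag with the roughly 50 ms fusion window cited above. The refresh rates are assumed examples.

```python
FUSION_WINDOW_S = 0.050  # ~50 ms stereo-fusion window cited in the text


def frame_sequence(n_frames: int) -> list[str]:
    """Eye whose shutter is open on each display frame: L, R, L, R, ..."""
    return ["L" if i % 2 == 0 else "R" for i in range(n_frames)]


def per_eye_rate_hz(display_hz: float) -> float:
    """Each shutter is open on every other frame."""
    return display_hz / 2.0


def pair_lag_s(display_hz: float) -> float:
    """Delay between the left and right images of one stereo pair."""
    return 1.0 / display_hz


for hz in (60.0, 120.0, 144.0):
    lag = pair_lag_s(hz)
    print(f"{hz:.0f} Hz panel: {per_eye_rate_hz(hz):.0f} Hz per eye, "
          f"pair lag {lag * 1000:.1f} ms, within fusion window: {lag < FUSION_WINDOW_S}")
```

The pair lag sits comfortably inside the fusion window even at 60 Hz. The per-eye rate, however, then drops to 30 Hz, which is low enough to flicker. This is why shutter systems generally rely on panels running at 120 Hz or faster.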

Polarized light stereo imaging technology uses polarized light to separate the left and right images. Because of its excellent stereoscopic effect and practicality, it is one of the most popular current 3D vision technologies. During production and screening, two devices mimic how people observe things with their left and right eyes, synchronously capturing and projecting different images with horizontal parallax. As shown in Figure 5b, the two projector lenses are fitted with polarizers in complementary polarization states (orthogonal linear, or opposite circular). Viewers wear polarized glasses whose filters match those of the lenses, so that each eye receives only its own image without interference from the other. After the brain processes the two images, a 3D stereoscopic effect is produced. Early polarized stereoscopic imaging used linear polarization, which requires the glasses to be kept level during viewing; a slight tilt causes ghosting and degrades the stereoscopic effect. Today, circular polarization is mainly used. Because the polarization direction rotates continuously, transmission and blocking are almost unaffected by head tilt, which makes viewing more comfortable. Polarized stereo imaging is suitable for most cinemas, TV projectors, and similar systems. It offers full-color reproduction at full resolution, extremely low crosstalk (less than 0.1% when using linear filters), and little 3D vertigo or fatigue. However, the playback equipment is costly, and picture brightness is significantly reduced because more than 60% of the emitted light is filtered out [11].
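The ghosting caused by tilting linear-polarizer glasses follows from Malus's law, I = I₀·cos²θ. If the glasses are rotated by θ relative to the projector's polarizers, each eye passes cos²θ of its intended image and sin²θ of the other eye's image. The following minimal sketch assumes ideal polarizers. Real filters leak slightly even when level, which is why a nonzero crosstalk floor is quoted above.

```python
import math


def malus(intensity: float, angle_deg: float) -> float:
    """Intensity transmitted by an ideal linear polarizer at angle_deg to the light's polarization."""
    return intensity * math.cos(math.radians(angle_deg)) ** 2


def crosstalk_from_tilt(tilt_deg: float) -> float:
    """Fraction of the wrong eye's image leaking through when the glasses are tilted.

    The wrong-eye image is polarized at 90 deg to the filter when the glasses are level,
    so a tilt of t leaves an angle of 90 - t.
    """
    return malus(1.0, 90.0 - tilt_deg)


for tilt in (0, 5, 10, 20):
    print(f"head tilt {tilt:2d} deg -> crosstalk {crosstalk_from_tilt(tilt):.1%}")
```

A 10° tilt already leaks about 3% of the other eye's image, which is far above the sub-0.1% figure for level glasses. Circular polarization avoids this angle dependence.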

Naked-eye 3D technology allows the left and right eyes to see two images with parallax on a screen without glasses or other equipment, and stereoscopic vision arises when the brain fuses them. Because the view changes as the viewer's position or motion changes, people experience a stronger sense of immersion with naked-eye 3D than with glasses-based 3D vision technology. Naked-eye 3D technology mainly includes parallax barrier technology, column lens array technology, directional backlight technology, integrated imaging technology, and plenoptic imaging technology [12].

Parallax barrier technology uses a special barrier structure on the screen so that the left and right eyes view the image from different angles [13,14]. As shown in Figure 6a, a barrier of fine vertical opaque stripes (slits) is placed in front of or behind the screen to separate the light. The odd and even pixel columns of the screen display the left- and right-eye images, respectively [14,15]. Because the image is divided into two parts according to viewing angle, the position and structure of the barrier let the left (right) eye see only the left (right) image. The brain then fuses the two into a stereoscopic effect. Parallax barrier technology offers high image clarity and a wide field of view, suits multiple viewers, and is compatible with established LCD manufacturing processes. However, parallax barrier 3D displays have low picture brightness, and resolution and the number of viewing angles constrain each other. Moreover, viewers must keep a fixed viewing distance and position; otherwise, the stereoscopic effect is weakened.
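For a two-view barrier placed in front of the pixels, the layout reduces to two similar-triangle relations. Let p be the pixel column pitch, e the eye separation, and D the viewing distance from eye to barrier. The barrier-to-pixel gap is then g = p·D/e, so adjacent columns reach different eyes through the same slit. The slit pitch is b = 2p·D/(D + g), slightly less than two columns so that slits line up with column pairs at the intended viewing distance. The following minimal sketch also interleaves the two views column by column. Assigning even-indexed columns to the left eye is an arbitrary choice here, and the panel dimensions are hypothetical.

```python
def barrier_geometry(pixel_pitch: float, eye_sep: float, view_dist: float) -> tuple[float, float]:
    """Return (gap, slit_pitch) for a two-view front parallax barrier; all lengths in one unit."""
    gap = pixel_pitch * view_dist / eye_sep                          # adjacent columns reach different eyes
    slit_pitch = 2.0 * pixel_pitch * view_dist / (view_dist + gap)   # slits align with column pairs
    return gap, slit_pitch


def interleave_columns(left: list[list[int]], right: list[list[int]]) -> list[list[int]]:
    """Even-indexed columns from the left view, odd-indexed columns from the right view."""
    return [
        [lv if x % 2 == 0 else rv for x, (lv, rv) in enumerate(zip(lrow, rrow))]
        for lrow, rrow in zip(left, right)
    ]


g, b = barrier_geometry(pixel_pitch=0.1, eye_sep=65.0, view_dist=650.0)  # mm, hypothetical panel
print(f"gap {g:.3f} mm, slit pitch {b:.4f} mm")
print(interleave_columns([[1, 1, 1, 1]], [[2, 2, 2, 2]]))  # [[1, 2, 1, 2]]
```

Both dimensions are tied to a single design distance D. This is consistent with the requirement that viewers keep a fixed viewing distance and position.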

Column lens array technology uses an array of column (cylindrical) lenses on the screen so that the viewer's two eyes see different images [16,17]. An array of microlenses with a specially designed surface profile is embedded on the display. Each lens focuses on a specific pixel area and projects the images intended for the left and right eyes in different directions. As shown in Figure 6b, the image is split into two parts according to viewing angle, and viewers looking at the screen with the naked eye receive a different image in each eye [18]. Lens array technology offers a wider range of viewing angles, a stronger parallax effect, and a greater sense of depth and comfort. However, it shares the same mutual constraint between resolution and the number of viewing angles, as well as the same strict viewing requirements [19,20].
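The resolution–view trade-off is simple arithmetic for a vertical lenticular sheet. If each cylindrical lens covers N pixel columns, a column's position under its lens determines which of the N views it feeds. Each view therefore keeps only 1/N of the native horizontal resolution. The following minimal sketch uses a hypothetical panel width and view counts. It ignores slanted lenticulars (which spread the loss over both axes) and the image inversion under each lens.

```python
def view_index(column: int, columns_per_lens: int) -> int:
    """Index of the view assigned to a pixel column under a vertical lenticular lens."""
    return column % columns_per_lens


def per_view_width(native_columns: int, views: int) -> int:
    """Horizontal resolution left for each view."""
    return native_columns // views


print([view_index(c, 4) for c in range(8)])  # [0, 1, 2, 3, 0, 1, 2, 3]
for n in (2, 4, 8):
    print(f"{n} views on a 3840-column panel -> {per_view_width(3840, n)} columns per view")
```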

Directional backlight technology adjusts the position or angle of the light source through hardware or software in the display device so that the two eyes see different images, producing a naked-eye 3D effect (Figure 6c) [21]. Directional backlight technology is less restricted by viewing distance and angle and causes no loss of resolution. It offers a natural and comfortable viewing experience and an excellent 3D effect [22]. However, the parallax effect is easily affected by the position of the light source and by ambient light, and implementation is complex, so products are not yet mature.

Figure 6.

(a) Multi-angle parallax barrier technology (Reprinted from Ref. [15]); (b) column lens array technology (Reprinted from Ref. [18]); (c) directional backlight technology (Reprinted from Ref. [21]).

Integral imaging (also called integrated imaging), proposed by G. Lippmann [23,24], is a true 3D autostereoscopic display technique. It records and reproduces a real 3D scene using an array of microlenses arranged periodically in a 2D plane [25,26,27]. The traditional technique consists of recording an elemental image array and then reproducing the 3D image [25,28]. During recording, the microlens array images the object-space scene, capturing its spatial information from different viewpoints to form the elemental image array. During reproduction, the elemental image array is placed behind a reproducing microlens array with the same parameters, in the position corresponding to the recording geometry. By the reversibility of light paths, the light passing through the reproducing microlens array converges to reconstruct the original object-space scene. The reconstructed scene can be viewed from any direction within a limited viewing angle.
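The record-and-reproduce cycle can be made explicit with a 2D slice (lateral x, depth z) of the pinhole-array model often used to simulate integral imaging. Each lens is treated as a pinhole at lateral position c, with its elemental image plane a gap g behind it. A point at (x, z) is then recorded at u = c − (x − c)·g/z. Reversing the rays from any two elemental images recovers the point. The pinhole approximation, lens pitch, and gap below are assumptions for illustration.

```python
def record(point_x: float, point_z: float, lens_x: list[float], gap: float) -> list[float]:
    """Pickup: where the point lands in each elemental image (pinhole model)."""
    return [c - (point_x - c) * gap / point_z for c in lens_x]


def reconstruct(u_a: float, c_a: float, u_b: float, c_b: float, gap: float) -> tuple[float, float]:
    """Reproduction: intersect the reversed rays from two elemental images."""
    d_a, d_b = u_a - c_a, u_b - c_b          # offsets within each elemental image
    z = gap * (c_a - c_b) / (d_a - d_b)      # depth where the two rays cross
    x = c_a - d_a * z / gap
    return x, z


lenses = [0.0, 1.0, 2.0]  # lens centres in mm, hypothetical 1 mm pitch
images = record(0.5, 10.0, lenses, gap=1.0)
print("elemental-image positions:", images)
print("reconstructed point:", reconstruct(images[0], lenses[0], images[2], lenses[2], gap=1.0))
```

In an optical display, the "intersection" happens physically as light converges through the reproducing lenses. The division by the offset difference also shows that points farther away produce ever smaller offset differences, so depth becomes harder to resolve.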

Plenoptic imaging is usually based on a plenoptic camera, which captures a sampled version of the light map (often called the light field) emitted by a 3D scene [29]. Such devices serve various purposes, such as synthesizing different sets of views of a 3D scene, removing occlusions, and changing the plane of focus after capture. Their images can also be projected onto an integral imaging display to show a 3D image with full parallax. However, plenoptic imaging faces problems similar to those of integrated imaging, such as a limited viewing angle.
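Changing the plane of focus after capture is commonly done by shift-and-add refocusing. Each sub-aperture view is shifted in proportion to its position in the aperture, and the views are averaged. Points at the chosen depth line up and stay sharp, while others blur. The following minimal 1D sketch uses integer shifts and a synthetic point with one pixel of disparity per view. Practical refocusing uses 2D apertures and sub-pixel interpolation, which are not shown.

```python
def refocus(views: list[list[float]], shift: int) -> list[float]:
    """Shift-and-add refocusing of 1D sub-aperture views.

    views[i] comes from aperture position u = i - centre; samples that fall
    outside a view contribute nothing.
    """
    centre = (len(views) - 1) // 2
    width = len(views[0])
    out = []
    for x in range(width):
        total = 0.0
        for i, view in enumerate(views):
            xs = x + shift * (i - centre)
            if 0 <= xs < width:
                total += view[xs]
        out.append(total / len(views))
    return out


def synthetic_views(width: int, x0: int, disparity: int, n_views: int) -> list[list[float]]:
    """A single bright point whose position moves by `disparity` pixels per view."""
    centre = (n_views - 1) // 2
    views = []
    for i in range(n_views):
        v = [0.0] * width
        v[x0 + disparity * (i - centre)] = 1.0
        views.append(v)
    return views


views = synthetic_views(width=9, x0=4, disparity=1, n_views=3)
print("focused on the point:", refocus(views, shift=1))
print("focused elsewhere:   ", refocus(views, shift=0))
```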

2.2.3. Real Three-Dimensional Vision Technology

Real 3D vision technology can provide nearly real 3D image information and physical depth of field, which has essential research value in many fields. Currently, holographic and volumetric 3D vision technologies are the most widely researched.

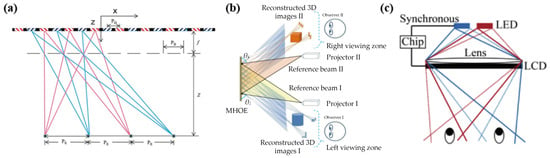

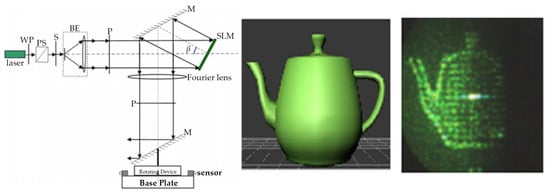

Holographic 3D vision technology records and reproduces the complete light field of an object, enabling viewers to see an image with a genuine stereoscopic sense under specific conditions [30,31,32]. As shown in Figure 7, producing a hologram requires two light beams: a reference beam and an object beam [31]. The two beams are superimposed on the recording medium, where they interfere. The phase and amplitude of the object wave at each point are thus converted into intensity variations and recorded, forming a hologram. When the hologram is illuminated with suitable light, the eyes again observe a true 3D image of the object. Holographic 3D vision technology offers a realistic 3D effect and a wide viewing angle. However, the recording and display equipment is costly and sensitive to ambient light.

Figure 7.

Holographic technology (Reprinted from Ref. [31] with permission from Elsevier 2024).
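The recording step relies on interference to preserve phase. A recording medium stores only intensity, yet interference with a known reference wave turns the object wave's phase into intensity: |R + O|² = |R|² + |O|² + 2|R||O|cos(φ_R − φ_O). The following minimal sketch checks this identity with complex amplitudes using Python's standard `cmath` module. The amplitudes and phases are arbitrary examples.

```python
import cmath
import math


def recorded_intensity(ref: complex, obj: complex) -> float:
    """Intensity stored on the medium where reference and object waves overlap."""
    return abs(ref + obj) ** 2


def fringe_formula(a_ref: float, a_obj: float, phase_diff: float) -> float:
    """The same intensity written out: the cosine term carries the object's phase."""
    return a_ref ** 2 + a_obj ** 2 + 2.0 * a_ref * a_obj * math.cos(phase_diff)


ref = cmath.rect(1.0, 0.0)  # unit-amplitude reference wave, phase 0
for obj_phase in (0.0, math.pi / 2, math.pi):
    obj = cmath.rect(0.5, obj_phase)  # weaker object wave with varying phase
    print(f"object phase {obj_phase:.2f} rad -> intensity {recorded_intensity(ref, obj):.3f}")
```

Object waves of equal amplitude but different phase leave different recorded intensities. Without the reference beam, all three cases would record the same intensity of 0.25.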

Volumetric 3D vision technology uses luminous volume elements (voxels) to present the object stereoscopically. It is currently divided mainly into scanning (swept-volume) 3D vision technology and solid-state 3D vision technology. In scanning 3D vision technology, a sequence of 2D images is projected onto a rotating or moving screen. The screen moves so fast that, owing to persistence of vision, the observer cannot perceive its motion and instead sees a 3D image [33]. This allows true 360° 3D vision. In such a system, a light deflector projects beams of different colors onto the display medium, which can reflect a wide range of colors. The beams produce discrete visible points in the medium, called voxels, each corresponding to a point in the 3D image. In solid-state 3D vision technology, an excitation source directs two laser beams into an imaging volume made of a special transparent material [34]. After refraction, the two beams intersect at a point. This point is a voxel, the smallest unit of the stereoscopic image with real physical depth. Each voxel corresponds to an actual point of the object being displayed. As the two laser beams move rapidly, countless intersections are formed in the imaging volume. Together, these voxels constitute a true 3D image with real physical depth of field.
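The persistence-of-vision requirement translates into a demanding projector frame rate for swept-volume displays. Suppose the screen completes r revolutions per second and the volume is built from N angular slices. The projector must then show N·r images per second, and each slice occupies an angular step of 360°/N. The following is a minimal sketch in which both the slice count and the rotation rate are assumed values.

```python
def slice_angles_deg(n_slices: int) -> list[float]:
    """Screen angle at which each 2D slice is flashed during one revolution."""
    return [i * 360.0 / n_slices for i in range(n_slices)]


def required_frame_rate(n_slices: int, revolutions_per_s: float) -> float:
    """Number of 2D images the projector must display per second."""
    return n_slices * revolutions_per_s


n, rps = 360, 20.0  # hypothetical: 1-degree slices, 20 revolutions per second
print("first angles:", slice_angles_deg(n)[:4])
print(f"projector must run at {required_frame_rate(n, rps):.0f} frames/s")
```

Even modest angular resolution pushes the rate into thousands of frames per second. This is one reason such systems are complex and costly.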

Volumetric 3D vision technology is mainly realized by projection, interference, or imaging methods. A projection display utilizes a high-speed laser beam to project an image into space, forming a stereoscopic display [35]. Interference display produces interference patterns by overlapping two laser beams, creating a stereoscopic projection. Imaging display utilizes transparent layers and lenses to split the image into multiple layers so that images with different depths can be presented, forming a stereoscopic effect. Volumetric 3D vision can provide a 3D vision effect in the whole observation space, but the complexity and cost of this technology are high [36].

2.3. Virtual Reality and Augmented Reality Based on 3D Vision Technology

VR and AR, as promising next-generation display technologies, offer an attractive new way to perceive the world [37,38,39,40,41]. They are closely related to 3D vision technology (Figure 8) [42].

VR technology is based on a computer-simulated 3D virtual scene whose content is usually modeled on or recreated from reality. It is widely applied in dance, entertainment, industrial manufacturing, the military and aerospace, and teaching. Users obtain an immersive and realistic experience of the virtual world through auditory, visual, and tactile sensations, free observation from multiple viewpoints, and human–computer interaction. VR combines many advanced technologies, such as digital image processing, artificial intelligence, sensing, and 3D vision technology. Among them, 3D vision technology is one of the key technologies that make users feel immersed and is a necessary condition for building a VR system [43,44]. Of the information people acquire from their surroundings, 80–90% comes from vision. Because the objects we normally observe are 3D, information acquisition in VR relies mainly on 3D information such as an object's shape, size, and distance. To make the virtual world more spatially convincing, it is important to construct high-quality scene models based on 3D vision technology [45].

AR technology is closely related to mixed reality and belongs to the broader family of extended reality. It uses computers to overlay virtual elements on the real world so that the two interact realistically and coherently in real time. AR is used in dance, entertainment, digital cities, medical care, the military, and other fields [46]. Unlike VR, which immerses the user in a virtual world, AR not only displays computer-generated virtual objects but also requires them to be well integrated with the elements of the real world. It superimposes information on the real scene and enhances the visual experience. AR technology relies mainly on 3D vision technology and on real-time tracking and localization technology. Among these, 3D vision technology is an essential platform for integrating virtual elements with real scenes [47]. In AR, after the real scene has been recognized and scanned, stereoscopic visual simulation and computation based on the scene and the virtual model begin. The resulting parallax images are delivered to the two eyes through glasses or similar devices. The viewer finally sees a stereoscopic view in which virtual objects are superimposed on the real scene in real time.

Most 3D vision in VR and AR is realized through head-mounted displays (HMDs) [48,49,50]. As shown in Figure 8c, an HMD contains two small displays connected directly to the computer's video output. These present two images with parallax to the left and right eyes, respectively, and the brain fuses them into stereoscopic vision [51]. Tracking sensors in the headset capture information such as head movement and position in real time and send it to the computer, which updates the displayed images accordingly. However, head-mounted displays have several limitations. For example, only the wearer can see the stereoscopic images, and wearing the device for long periods easily leads to fatigue.

Figure 8.

Schematic of (a) VR and (b) AR display technology (Reprinted from Ref. [42]); (c) Prototype of the compact off-axis HMD (Reprinted from Ref. [51]).
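In an HMD, producing the two parallax images amounts to rendering the scene from two virtual cameras. These are offset by half the interpupillary distance on either side of the tracked head position. The following minimal sketch handles head yaw only and assumes a right-handed frame with y up and −z forward, together with the ~65 mm eye separation used earlier. Real HMD runtimes supply full six-degree-of-freedom eye poses and per-eye projection matrices, and none of their APIs are modeled here.

```python
import math

Vec3 = tuple[float, float, float]


def eye_positions(head: Vec3, yaw_rad: float, ipd_m: float = 0.065) -> tuple[Vec3, Vec3]:
    """Left and right virtual-camera positions for a head turned by yaw_rad about the y (up) axis.

    At yaw 0 the head faces -z and its right-hand direction is +x.
    """
    right = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))  # +x rotated about +y
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye


# Tracked pose from the headset (hypothetical values): 1.6 m eye height, turned 30 degrees.
left, right = eye_positions((0.0, 1.6, 0.0), math.radians(30.0))
print("render left view from ", left)
print("render right view from", right)
```

Recomputing these two positions every frame from the tracked pose keeps the parallax consistent as the wearer moves their head.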

3. Application of 3D Vision Technology in the Dance Field

Dance has long been an art form that expresses emotions and stories through movement. Singing, theater, and other arts rely largely on sound to present content and express emotion. Dance, by contrast, uses the body alone to express feelings and present artistic content, relying mainly on body movement and technique to convey its artistic conception. The audience must understand and appreciate the artistic language of dance through visual perception. Fully appreciating the artistic charm and expressive power of dance works therefore requires a certain aesthetic ability, emotional comprehension, and professional knowledge. As a result, dance has a relatively high threshold for appreciation. Recently, with the continuous development of technology, the dance field has begun to explore various new technologies to enhance the visual effect of performances. Among them, 3D vision technology, with its diversity and many advantages, has attracted much attention in dance, stage design, and dance teaching.

3.1. Dance and Stage Design

Traditional dance performances rely on lighting and stage design to create scenes and visual effects and are therefore largely limited by budgets and by existing stage design techniques and equipment. With 3D vision technology, however, dancers can present more diverse and creative works on stage using virtual 3D elements, making stage performances more compelling. The technology also allows the audience to perceive the dancers' movements and expressions from different angles and viewpoints. Audiences then feel the dance and its emotional expression more vividly, which increases their sense of participation and interest and makes dance works more stereoscopic and visually striking.

3.1.1. Pseudo-Holographic 3D Vision Technology

At the Tencent WE annual conference, the creative team Blackbow created an opening dance show that intermingled the virtual and the real [52]. It combined digital visualization, underwater filming, high-speed slow-motion photography, holographic projection, and AR. The interplay between 3D vision technology and the dancers created an artistic and technological experience in which the real and the virtual were intertwined, giving the audience an immersive visual feast. Through 3D projection mapping, a multidimensional space was shaped within the limited space of the stage. Time, an abstract concept, was given material form and shown flowing in parallel, interlaced strands.

The British holographic creative team Musion 3D used holographic projection to stage a dance across time between the 52-year-old ballet dancer Alessandra Ferri and her 19-year-old self [53]. In the work, the image of the dancer at 19 is presented as a holographic projection, realized mainly through optical reflection, refraction, shadow, movement, contrast, and similar effects. This flexible use of 3D holographic projection technology brought a new and remarkable experience to both the dancer and the audience.

Dianne Buswell performed with virtual robot dancers on the well-known BBC dance reality show Strictly Come Dancing [54]. To realize this mixed reality performance, professional special effects staff created the virtual dancers and used Unreal Engine and the Xsens MVN motion capture system to record the dance in real time. As the music played, the virtual dancers joined the performance, creating a remarkable show for the audience.

Wang Zhigang brought his holographic dance artwork Dream Shadow Qin Island to the Qingdao Film Expo [55]. Multiple illusory and beautiful scenes are constructed by the holographic screen in the dance performance, and they change with the development of the dance content and emotion. The holographic projection technology can realize the rapid switching of the stage scenes, making the dance performance coherent and smooth so that the audience can better feel the profound emotions contained in the dance.

Moment Factory, a Canadian new media art team, designed a pseudo-holographic stage play, Keren, based on traditional Japanese elements [56]. The stage design uses a large projection screen that covers the stage and movable projection equipment to construct a magical world that is at once real and illusory. As the scenes and light projections switch, the performance stages a series of themed dances featuring ukiyo-e, ninja, shugenja, ghosts, and monsters. The story is told entirely through the dancers’ body language, and pseudo-holographic projection integrates the dance content with the scenery, creating an immersive and surprising stage for the audience.

Silk Road Flowers and Rain, a large-scale national stage drama based on the Dunhuang frescoes, has long been loved by audiences worldwide. Combining this classic drama with live filming, stage restoration, digital mapping, and holographic projection produced a dance work in which technology and art coexist. It lets audiences experience the prosperity of the ancient Silk Road immersively and encounter its warm and simple customs, while promoting the historical and cultural treasures of the Chinese nation [57].

The application of pseudo-holographic 3D vision technology in stage performance has matured. With it, stage backgrounds, props, and even dancers can be designed and presented virtually. Dancers can interact with these virtual elements and use them to convey their performance intentions and inner emotions more clearly, creating fascinating visual effects that give the audience a strong visual impact and artistic enjoyment. Although pseudo-3D dance performances restrict the audience’s viewing position and angle, they are popular with audiences because they significantly enhance the viewing experience at low cost.

3.1.2. Other 3D Vision Technology

Building stage scenarios that blend the imaginary and the real with AR and computer technology gives dance performers new inspiration and ideas.

Based on Claude Debussy’s La Mer, Alexis Clay joined forces with ballet dancers and AR engineers to create the ballet Debussy 3.0 [58]. The system consists of three main components: an Xsens MVN motion capture suit that captures the movements of individual dancers; Unity3D, running on a dedicated computer with a professional graphics card, which manages the virtual world; and two 8000 lm projectors with polarization filters and a special rear-projection screen for stereoscopic 3D projection. The two dancers are caught between the real and the virtual: the female dancer wears the motion capture equipment and performs in the virtual world, while the male dancer can dance only in the real scene. The work explored 3D vision technology for real-time interaction between AR and the stage, letting the dancers interact with virtual elements on an augmented stage in front of hundreds of people, and it was widely acclaimed by the audience.
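
Stereoscopic projection of this kind works by showing each eye a slightly shifted image, with the polarization filters separating the two. As a minimal sketch, assuming a viewer centered in front of a flat screen and an eye separation of about 65 mm (an assumed typical value), the horizontal screen parallax needed to place a virtual element at a chosen distance follows from similar triangles: p = e(Z − D)/Z, where e is the eye separation, D the viewing distance to the screen, and Z the intended distance of the virtual point. The seat distance below is hypothetical.

```python
def screen_parallax(point_distance_m, screen_distance_m, eye_separation_m=0.065):
    """Offset on the screen of the right-eye image relative to the left-eye image of a point.

    Positive (uncrossed) parallax places the point behind the screen, negative (crossed)
    parallax in front of it, and zero parallax on the screen plane.
    """
    if point_distance_m <= 0 or screen_distance_m <= 0:
        raise ValueError("distances must be positive")
    return eye_separation_m * (point_distance_m - screen_distance_m) / point_distance_m


def perceived_position(parallax_m, tolerance_m=1e-9):
    """Classify where a point with the given screen parallax appears to the viewer."""
    if parallax_m > tolerance_m:
        return "behind the screen"
    if parallax_m < -tolerance_m:
        return "in front of the screen"
    return "on the screen"


# Hypothetical seat 10 m from the rear-projection screen.
for depth in (5.0, 10.0, 20.0):
    p = screen_parallax(depth, 10.0)
    print(f"virtual point at {depth} m: parallax {p * 1000:.1f} mm, {perceived_position(p)}")
```

Positive parallax approaches but never exceeds the eye separation as the virtual point recedes to infinity; a larger on-screen offset would force the eyes to diverge, so stereoscopic content is normally authored within that bound.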

Traditionally, dance performances are watched live, which imposes many restrictions on both audiences and dancers. During the COVID-19 pandemic in particular, dance troupes could not tour and audiences were required to keep social distance, forcing many theaters to close temporarily and posing a huge challenge for the performing arts industry. In response, many forms of presenting dance through digital technology emerged [59]. Among them, dance works based on 3D vision technology give the audience a sense of immersion, largely meeting their expectations for watching a dance performance.

Wim Wenders directed Pina, the first 3D documentary about dance, in honor of Pina Bausch, one of the most influential figures in 20th-century dance [60]. He used 3D images to connect the audience in front of the screen with the theater in the film, creating an immersive illusion that extends the theater from the screen into reality. This change of perspective frees the audience from a fixed seat in the theater, allowing them to participate in the performance and experience the world as they perceive and imagine it.

The Australian Centre for Mobile Imaging and Sydney Dance Company produced Stuck in the Middle With You, a dance film that combines dance with VR technology [61]. Wearing a VR headset, viewers find themselves in a large auditorium watching a dance performance. Through scene transitions, they can move to different positions, enjoy the details of the dancers’ movements from various angles, and even go on stage to share the joy and passion of dancing alongside the dancers.

In terms of space, the creative team Blackbow used 3D vision technology to fuse realistic and sci-fi elements into its dance performance, moving between multiple scenes without physical scene changes and making the performance smoother and more attractive. In terms of time, Musion 3D used 3D vision technology to show the same ballet dancer dancing at two different ages, a novel and surprising design for both dancers and audiences that ordinary stage techniques cannot achieve. In addition, Dianne Buswell used 3D vision technology to dance on stage with lifelike virtual robots, whose flickering appearances brought new vitality to the performance and created a sci-fi dance show beyond the reach of conventional dance design. Overall, dance and stage designs once considered impossible or too bold can now be staged, because 3D vision and computer rendering technologies make such seemingly unrealistic elements achievable. These technologies therefore give dance and stage design more possibilities and a chance to realize fantastic ideas, driving innovation in the field of dance.

As mentioned above, the core of pseudo-3D vision technology is holographic projection media, whereas other 3D vision technologies require more specialized equipment. For example, holographic 3D vision technology must collect and reproduce light field information, while AR and VR require computer rendering and motion capture systems, as well as electronic glasses and interactive devices for the audience. Compared with pseudo-3D vision technology, these technologies therefore place higher demands on equipment, cost, and dancer skill, and their application in stage and dance design remains relatively limited. Designing and implementing the technical architecture of a 3D display system requires specialized technical staff and knowledge of areas such as 3D content development, graphics rendering, animation, and real-time data processing. A 3D visual stage also involves selecting appropriate hardware and software, configuring display devices, and ensuring system stability and compatibility. Currently, most dance performances rely on traditional non-naked-eye 3D vision technology, which requires the audience to wear special equipment and therefore has a high technical threshold and viewing cost. Because of the accommodation–vergence conflict, in which the eyes focus on the display surface while converging on virtual objects at other depths, prolonged use is likely to cause fatigue and dizziness. Naked-eye 3D vision technology does not rely on viewing devices and offers a better viewing experience, but it, and real 3D vision technology in particular, is still under development. Once naked-eye 3D vision technology becomes cheaper and easier to use, it is expected to bring more realistic dance viewing and a richer experience to more audiences and dance lovers.
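
The accommodation–vergence conflict can be quantified in diopters (inverse meters): the eyes focus at the display’s optical distance but converge at the distance of the virtual object, and the mismatch is the difference between the two reciprocals. The sketch below assumes a hypothetical headset whose fixed focal plane sits at 2 m and an interpupillary distance of 63 mm; both are illustrative values, not specifications of any device discussed here.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def va_conflict_diopters(focal_distance_m, vergence_distance_m):
    """Mismatch between where the eyes focus and where they converge, in diopters (1/m)."""
    return abs(1.0 / focal_distance_m - 1.0 / vergence_distance_m)


FOCAL_PLANE_M = 2.0  # assumed fixed focal distance of a hypothetical headset
for virtual_distance in (0.5, 1.0, 2.0, 10.0):
    print(f"virtual dancer at {virtual_distance} m: "
          f"vergence {vergence_angle_deg(virtual_distance):.2f} deg, "
          f"conflict {va_conflict_diopters(FOCAL_PLANE_M, virtual_distance):.2f} D")
```

With these assumptions, a virtual partner at 0.5 m produces a 1.5 D mismatch, while one at the 2 m focal plane produces none. Because diopters scale with inverse distance, the mismatch grows rapidly as virtual content comes close to the viewer.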

3.2. Dance Teaching

Traditional dance teaching and rehearsal take a relatively limited form, relying mainly on teachers’ explanations and demonstrations and on students’ listening and imitation. This approach is inefficient, because the teacher must correct each student’s posture and movements individually, and it is constrained by time and space. These limitations are even more pronounced in large-scale rehearsals. Some students learn by watching and imitating dance videos, but most find it difficult to reproduce movements accurately without professional guidance. Three-dimensional vision technology is transforming dance teaching, rehearsal, and the dissemination of teaching images [62,63,64].

Unlike the production of dance performances, films, and plays, dance teaching uses 3D vision technology mainly to give learners a free, all-around view of the teacher and of how each part of the body actually moves. In other words, the main observers are no longer the audience but the dance performers themselves. Therefore, whereas the first two applications mainly use pseudo-3D vision technology, dance teaching is more likely to use higher-level technologies such as real holography, AR, and VR, which provide binocular parallax and motion parallax. Binocular parallax helps learners grasp the spatial relationships and dynamic changes in dance movements and perceive the teacher’s body posture, movement depth, and spatial layout, so that they better understand the artistic expression of the movements. Motion parallax further deepens their understanding of the dynamics of the teacher’s movements: by observing how those movements look as their own viewpoint shifts, students perceive the direction, speed, and coherence of the movements. This visual perception of dynamics is crucial for understanding the fluidity and rhythm of dance movements, enabling learners to feel the rhythm and emotional expression of the dance more deeply.
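
The two depth cues named above can be compared with simple viewing geometry. For two points straight ahead at distances d1 < d2, the relative binocular disparity is approximately I(1/d1 − 1/d2), where I is the interpupillary distance; for a viewer stepping sideways at speed v, the relative angular speed of the same two points at the moment they are straight ahead is v(1/d1 − 1/d2). Motion parallax therefore carries the same depth signal as binocular disparity, with the eye baseline replaced by the viewer’s own movement. The distances, speed, and 63 mm interpupillary distance in the sketch are assumed, illustrative values.

```python
import math


def exact_disparity_rad(near_m, far_m, ipd_m=0.063):
    """Relative binocular disparity of two points straight ahead (difference of vergence angles)."""
    def vergence(d):
        return 2 * math.atan(ipd_m / (2 * d))
    return vergence(near_m) - vergence(far_m)


def small_angle_disparity_rad(near_m, far_m, ipd_m=0.063):
    """Small-angle approximation I * (1/d1 - 1/d2)."""
    return ipd_m * (1 / near_m - 1 / far_m)


def relative_motion_parallax_rad_s(near_m, far_m, lateral_speed_m_s):
    """Relative angular speed of two points straight ahead of a viewer moving sideways."""
    return lateral_speed_m_s * (1 / near_m - 1 / far_m)


# Hypothetical case: a learner 3 m from a holographic teacher whose hand is 0.5 m closer
# than the torso; the learner steps sideways at 0.3 m/s.
hand, torso = 2.5, 3.0
arcmin = 60 * 180 / math.pi
print(f"disparity: {exact_disparity_rad(hand, torso) * arcmin:.1f} arcmin")
print(f"motion parallax: {relative_motion_parallax_rad_s(hand, torso, 0.3) * arcmin:.1f} arcmin/s")
```

Moving sideways at the same speed as the interpupillary distance per second would produce, each second, the same relative angle that the two eyes see at once, which is why the two cues reinforce each other.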

The Whole-body Interaction Learning for Dance Education (WhoLoDancE) project, funded by the European Union’s Horizon 2020 program, conducted groundbreaking research on using digital technology to teach dance and choreography [65,66]. The project used motion capture and 3D vision technologies, such as Microsoft HoloLens mixed reality headsets, to capture dances (including ballet, modern, flamenco, and Greek folk dances) and preserve them for future dancers and choreographers to study. It also created various programs to annotate and process the captured recordings. Captured dances are stored in a movement database, from which choreographers can select and combine movements and then play back the new dances using holograms of the dancers. In addition, learners can watch life-size holographic dancers perform the dances they want to learn and dance along with them [67].
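
Combining captured movements from a database requires at least a way to join two clips without a visible jump. The sketch below is a hypothetical illustration, not WhoLoDancE’s actual tooling: it assumes each clip is already an array of frames by joint coordinates in a shared body-centered frame and blends the overlapping frames linearly. Production systems typically also align root position and heading and interpolate joint rotations (for example with quaternion slerp) rather than positions.

```python
import numpy as np


def combine_clips(clip_a, clip_b, blend_frames=10):
    """Join two motion clips (frames x features) with a linear crossfade over the overlap."""
    a = np.asarray(clip_a, dtype=float)
    b = np.asarray(clip_b, dtype=float)
    if a.ndim != 2 or b.ndim != 2 or a.shape[1] != b.shape[1]:
        raise ValueError("clips must be 2-D arrays with the same number of features")
    k = min(blend_frames, len(a), len(b))
    if k == 0:
        return np.vstack([a, b])
    # Blend weights strictly between 0 and 1, rising from clip A toward clip B.
    w = np.linspace(0.0, 1.0, k + 2)[1:-1, None]
    blended = (1 - w) * a[-k:] + w * b[:k]
    return np.vstack([a[:-k], blended, b[k:]])


# Hypothetical clips with 3 features per frame (e.g. one joint's x, y, z).
phrase_1 = np.zeros((20, 3))
phrase_2 = np.ones((15, 3))
sequence = combine_clips(phrase_1, phrase_2, blend_frames=5)
print(sequence.shape)  # (30, 3): 20 + 15 frames minus the 5 that overlap
```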

Chan et al. proposed a VR dance training system that uses motion capture to analyze the user’s whole body [68]. Learners simply put on a motion capture suit, follow the movements of a virtual teacher, and then receive feedback on how to improve. The system’s architecture consists of four main components: 3D graphics, motion matching, a motion database, and an optical motion capture system. The 3D graphics component visualizes the movements of both the learner and the virtual teacher so that details can be observed from multiple perspectives. The system also mirrors the virtual teacher on screen and marks correct and incorrect body parts in different colors, so students can learn the dance efficiently and immersively. At the end of the dance, it provides a comprehensive score report and a slow-motion replay so that students can quickly and accurately identify their mistakes and correct them.
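
The color-coded feedback described above can be illustrated with a simple per-joint comparison. The sketch below is hypothetical and does not reproduce Chan et al.’s motion-matching method: it assumes both poses are given as 3D joint positions with shared joint names, compares them relative to the pelvis so that standing in a different spot is not penalized, colors each joint using an assumed 10 cm tolerance, and reports the percentage of joints within tolerance as a score. A helper also shows the mirroring used for display, reflecting the teacher across the body’s midline and swapping left and right joint labels. It ignores differences in facing direction and body size, which a real system would need to normalize.

```python
import math


def mirror_for_display(pose):
    """Reflect a pose across the body's midline (x -> -x) and swap left/right joint labels."""
    def swap_side(name):
        if name.startswith("left_"):
            return "right_" + name[len("left_"):]
        if name.startswith("right_"):
            return "left_" + name[len("right_"):]
        return name
    return {swap_side(name): (-x, y, z) for name, (x, y, z) in pose.items()}


def root_relative(pose, root="pelvis"):
    """Express every joint relative to the root joint."""
    rx, ry, rz = pose[root]
    return {name: (x - rx, y - ry, z - rz) for name, (x, y, z) in pose.items()}


def grade_pose(student, teacher, tolerance_m=0.10, root="pelvis"):
    """Color each teacher joint green/red by the student's error and return a 0-100 score."""
    s = root_relative(student, root)
    t = root_relative(teacher, root)
    colors = {}
    for joint, target in t.items():
        error = math.dist(s[joint], target)
        colors[joint] = "green" if error <= tolerance_m else "red"
    score = 100.0 * sum(c == "green" for c in colors.values()) / len(colors)
    return colors, score


teacher = {"pelvis": (0.0, 1.0, 0.0), "left_wrist": (-0.5, 1.2, 0.0), "right_wrist": (0.5, 1.2, 0.0)}
# Hypothetical student standing 1 m to the side with the right wrist 0.3 m too low.
student = {"pelvis": (1.0, 1.0, 0.0), "left_wrist": (0.5, 1.2, 0.0), "right_wrist": (1.5, 0.9, 0.0)}
colors, score = grade_pose(student, teacher)
print(colors, round(score, 1))  # right_wrist is red; two of three joints are within tolerance
```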

Similarly, Kyan et al. developed a system that visualizes ballet training, capturing and evaluating students’ ballet movements in a VR environment in real time [69]. The framework is based on a Spherical Self-Organizing Map (SSOM), which parses ballet movements into structured poses without supervision, and it introduces a dedicated feature descriptor that better reflects the subtleties of ballet movements. Each movement is then represented as a gesture trajectory through the posture space on the SSOM. The system acquires movement data by tracking skeletal joints with a Kinect camera and compares them with the instructor’s movement database. Meanwhile, a CAVE virtual environment provides 3D visualized instruction and score-based feedback that quantifies the students’ performance, so that they can learn and practice dance in an immersive environment.
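
A self-organizing map whose nodes lie on a sphere can be sketched compactly. The version below is a minimal illustration under stated assumptions, not Kyan et al.’s framework: nodes are spread over the unit sphere with a Fibonacci lattice, neighborhoods use the great-circle angle between nodes, the learning rate and neighborhood width shrink linearly, and the inputs are generic pose feature vectors (here synthetic) rather than the paper’s ballet-specific descriptor. Mapping each frame of a movement to its best-matching node yields the kind of trajectory on the sphere that the text describes.

```python
import numpy as np


def fibonacci_sphere(n):
    """n roughly evenly spaced unit vectors, used as the spherical map lattice."""
    i = np.arange(n) + 0.5
    polar = np.arccos(1 - 2 * i / n)
    azimuth = np.pi * (3 - np.sqrt(5)) * i  # golden-angle increments
    return np.stack([np.cos(azimuth) * np.sin(polar),
                     np.sin(azimuth) * np.sin(polar),
                     np.cos(polar)], axis=1)


def train_spherical_som(data, n_nodes=162, epochs=20, lr0=0.5, sigma0=1.0, seed=0):
    """Online SOM training with a geodesic (great-circle) neighborhood on the sphere."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    lattice = fibonacci_sphere(n_nodes)
    geodesic = np.arccos(np.clip(lattice @ lattice.T, -1.0, 1.0))  # angles between nodes
    weights = data[rng.integers(0, len(data), n_nodes)].copy()  # initialize from samples
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / total_steps
            lr = lr0 * (1 - frac)
            sigma = sigma0 * (1 - frac) + 0.05
            bmu = np.argmin(np.linalg.norm(weights - x, axis=1))  # best-matching node
            influence = np.exp(-geodesic[bmu] ** 2 / (2 * sigma ** 2))
            weights += lr * influence[:, None] * (x - weights)
            step += 1
    return lattice, weights


def map_trajectory(weights, frames):
    """Best-matching node for each frame: the movement's path across the sphere."""
    frames = np.asarray(frames, dtype=float)
    dists = np.linalg.norm(frames[:, None, :] - weights[None, :, :], axis=2)
    return dists.argmin(axis=1)


# Synthetic stand-ins for two very different families of pose features.
rng = np.random.default_rng(1)
pose_a = rng.normal(0.0, 0.1, size=(100, 6))
pose_b = rng.normal(10.0, 0.1, size=(100, 6))
lattice, weights = train_spherical_som(np.vstack([pose_a, pose_b]), n_nodes=42, epochs=5)
path = map_trajectory(weights, np.vstack([pose_a[:5], pose_b[:5]]))
print(path)
```

Comparing a student’s trajectory with an instructor’s then becomes a comparison of two node sequences on the sphere; how that comparison is scored is a separate design choice not covered here.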

Saxena et al. proposed DisDans, a conceptual tool for simulated dancing that lets multiple dancers in different locations dance together and interact immersively through tactile, visual, and auditory channels [70]. DisDans mainly uses real-time motion capture with Kinect sensors and 3D holographic projection. Each dancer’s body movements are recorded and converted into a projection that the other dancers see synchronously through AR glasses. The tool serves several purposes. A single user can download pre-choreographed dance animations and dance with the projections, a new way to take high-quality private dance lessons. Multiple simultaneous users can use it for entertainment, for long-distance collaboration between dancers and choreographers, and for recording choreography. In teaching, a teacher can instruct multiple students in different places at once and can easily switch the display from a single monitor to multiple monitors (a virtual classroom) to see all the students and give them the necessary instructions.

Kirakosian et al. proposed a real-time dance training application based on near-contact interaction between a person and a 3D character, available in both VR and AR to suit different users’ preferences [71]. In use, a 3D-scanned character of the famous Latin dancer Alberto Rodriguez follows the user’s movements and responds to the user’s improvised dancing in real time. The character also dances in real time in the direction the user leads, which is implemented with inverse kinematics in Unity.
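
Inverse kinematics solves for joint angles that place an end effector, such as a hand, at a target position. Unity’s own IK tools are not reproduced here; instead, as an assumed illustration, the sketch below is the standard analytic solver for a planar two-bone limb (for example shoulder, elbow, and wrist) based on the law of cosines, together with a forward-kinematics check. The bone lengths and target are hypothetical.

```python
import math


def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Analytic planar IK for a two-bone limb rooted at the origin.

    Returns (root_angle, joint_bend) in radians; joint_bend is 0 for a straight limb.
    Targets out of reach are clamped to the nearest reachable distance in the same direction.
    """
    reach = math.hypot(target_x, target_y)
    reach = min(max(reach, abs(upper_len - lower_len), 1e-9), upper_len + lower_len)
    # Interior angle at the middle joint from the law of cosines.
    cos_inner = (upper_len ** 2 + lower_len ** 2 - reach ** 2) / (2 * upper_len * lower_len)
    joint_bend = math.pi - math.acos(max(-1.0, min(1.0, cos_inner)))
    # Angle between the upper bone and the root-to-target line.
    cos_offset = (upper_len ** 2 + reach ** 2 - lower_len ** 2) / (2 * upper_len * reach)
    offset = math.acos(max(-1.0, min(1.0, cos_offset)))
    root_angle = math.atan2(target_y, target_x) - offset
    return root_angle, joint_bend


def forward_kinematics(root_angle, joint_bend, upper_len, lower_len):
    """End-effector position for the given joint angles (used to check the IK result)."""
    mid_x = upper_len * math.cos(root_angle)
    mid_y = upper_len * math.sin(root_angle)
    end_angle = root_angle + joint_bend
    return mid_x + lower_len * math.cos(end_angle), mid_y + lower_len * math.sin(end_angle)


# Hypothetical arm: upper arm 0.30 m, forearm 0.25 m; bring the hand to (0.35, 0.20).
shoulder, elbow = two_bone_ik(0.35, 0.20, 0.30, 0.25)
hand = forward_kinematics(shoulder, elbow, 0.30, 0.25)
print(f"shoulder {math.degrees(shoulder):.1f} deg, elbow bend {math.degrees(elbow):.1f} deg, hand {hand}")
```

A two-bone limb has two mirror-image solutions (the elbow can bend either way); this sketch always picks one. Full-body characters that respond to a partner usually need more general multi-joint solvers.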

Three-dimensional vision technology presents images and videos in 3D through special equipment, helping each student independently gain a clearer and more intuitive understanding of the trajectories and spatial relationships of dance movements. This significantly improves the efficiency of dance teaching and learning. Combined with motion capture, it also lets students visualize their own progress and improve their dance skills quickly.

In summary, 3D vision is an important way of perceiving the world and an indispensable visual foundation for understanding dance movements. It helps learners construct spatial and dynamic images of dance movements, thereby improving the efficiency of dance teaching. However, most current dance teaching platforms based on 3D vision technology require users to wear equipment, which is physically burdensome and quickly causes fatigue. Moreover, 3D virtual databases remain incomplete: according to existing reports, few 3D visual databases are currently available for dance teaching, reflecting the fact that research on applying 3D vision technology to dance is still at an early stage. As a result, the available virtual characters, objects, and scenes cannot yet meet the diverse creative needs of future dance. It is therefore of great significance to further develop dance teaching platforms based on naked-eye real 3D vision technology and to enrich dance teaching databases, providing users with a better experience.

4. Summary and Prospect

The application of 3D vision technology in the dance field has received growing attention and exploration. It now covers many aspects, such as choreography, performance, and teaching, providing new possibilities for the development of dance art. With 3D vision technology, dance content can be presented more vividly and richly, dance effects can be more striking and eye-catching, and the emotions of a dance can resonate more deeply with people. Three-dimensional vision technology enhances the visual appeal, artistry, and expressive power of dance performances in many ways, bringing a brand-new audiovisual experience to dancers and audiences.

However, many obstacles still hinder the application and promotion of 3D vision technology in the dance field: (1) High cost. The technology can create many exquisite, advanced scenes and props without physical objects, which is very advantageous for controlling the cost of repeated performances. However, it requires substantial technical support and equipment investment in the early stage, as well as equipment maintenance costs later. (2) High technological barriers. Promoting and applying 3D vision technology in dance requires not only dancers with dance skills but also technical personnel to support each performance, since operating the related equipment requires professional training. (3) Few dance teaching databases are available, mainly because motion capture and 3D vision technology are relatively difficult to use. (4) The user experience needs improvement, as wearing helmets or glasses for a long time easily causes fatigue.

The future development of 3D vision technology in the dance field will mainly focus on the following aspects: (1) further developing and innovating 3D vision technology, cultivating professionals, and reducing equipment costs and the difficulty of use; (2) integrating artistic innovation, establishing and improving 3D virtual databases, injecting more technological elements into dance works, and expanding their forms of performance; (3) enhancing social interaction and creating virtual dance communities in which dancers and audiences can create, perform, and interact together, promoting the dissemination and exchange of dance culture.

Author Contributions

Conceptualization, Y.Z.; formal analysis, H.N.; investigation, X.F. and Z.L.; writing—original draft preparation, X.F. and Z.L.; writing—review and editing, H.N.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Guangzhou City Philosophy and Social Science Development “13th Five-Year Plan” Project (2019GZGJ17), Guangdong Provincial Department of Education 2019 Special Innovation Project for Ordinary Universities (Humanities and Social Sciences) (2019WTSCX001), and the Educational Commission of Guangdong Province (Grant No. 2022ZDZX1002).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhao, D. Research on 3D Display Technology and Its Educational Applications. Master’s Thesis, Shaanxi Normal University, Xi’an, China, 2011.

- Lv, G.; Hu, Y.; Zhang, T.; Feng, Q. The current situation, opportunities, and suggestions for stereoscopic display. Vac. Electron. Technol. 2011, 24, 22–27.

- Chen, H.; Wang, X.; Wang, R. The current status and prospects of the application of 3D display technology. Henan Sci. Technol. 2016, 13, 54–55.

- Yan, C. Immersive and unrestrained-free stereoscopic display technology. Mod. Phys. Knowl. 2010, 22, 35–39.

- Zhang, L.; Zhang, M. Optical applications in 3D imaging technology. Mod. Phys. Knowl. 2012, 24, 19–23.

- Zhang, D. Research on Visual Perception Characteristics of 3D Display. Master’s Thesis, Beijing University of Posts and Telecommunications, Beijing, China, 2017.

- Ma, Q.; Cao, L.; He, Z.; Zhang, S. Progress of three-dimensional light-field display [Invited]. Chin. Opt. Lett. 2019, 17, 111001.

- Geng, J. Three-dimensional display technologies. Adv. Opt. Photonics 2013, 5, 456–535.

- Li, C.; Kang, X.; Shi, D. Classification and progress of stereoscopic display technology. Intell. Build. Electr. Technol. 2017, 11, 47–52.

- Zhao, L. Analysis of pseudo 3d stereoscopic display technology based on binocular parallax. Light Sources Light. 2022, 6, 67–69.

- Hill, L.; Jacobs, A. 3-D liquid crystal displays and their applications. Proc. IEEE 2006, 94, 575–590.

- Sakamoto, K.; Kimura, R.; Takaki, M. Parallax polarizer barrier stereoscopic 3D display systems. In Proceedings of the International Conference on Active Media Technology, Kagawa, Japan, 19–21 May 2005.

- Kim, S.K.; Yoon, K.H.; Yoon, S.K.; Ju, H. Parallax barrier engineering for image quality improvement in an autostereoscopic 3D display. Opt. Express 2015, 23, 13230.

- Lv, G.-J.; Wang, Q.-H.; Zhao, W.-X.; Wang, J. 3D display based on parallax barrier with multiview zones. Appl. Opt. 2014, 53, 1339–1342.

- Huang, K.C.; Chou, Y.H.; Lin, L.C.; Lin, H.Y.; Chen, F.H.; Liao, C.C.; Chen, Y.H.; Lee, K.; Hsu, W.H. A study of optimal viewing distance in a parallax barrier 3D display. J. Soc. Inf. Disp. 2013, 21, 263–270.

- Chu, F.; Wang, D.; Liu, C.; Li, L.; Wang, Q.-H. Multi-view 2D/3D switchable display with cylindrical liquid crystal lens array. Crystals 2021, 11, 715.

- Ji, Q.; Deng, H.; Zhang, H.; Jiang, W.; Zhong, F.; Rao, F. Optical see-through 2D/3D compatible display using variable-focus lens and multiplexed holographic optical elements. Photonics 2021, 8, 297.

- Zhang, H.; Deng, H.; He, M.; Li, D.; Wang, Q. Dual-view integral imaging 3D display based on multiplexed lens-array holographic optical element. Appl. Sci. 2019, 9, 3852.

- Han, L. Research on Logic Algorithms for Multi Camera 3D Vision Sensors. Master’s Thesis, Nanchang University, Nanchang, China, 2024.

- Wang, X.; Shi, J. The principles and research progress of free stereoscopic display technology. Opt. Optoelectron. Technol. 2017, 15, 90–97.

- Li, X.; Ding, J.; Zhang, H.; Chen, M.; Liang, W.; Wang, S.; Fan, H.; Li, K.; Zhou, J. Adaptive glasses-free 3D display with extended continuous viewing volume by dynamically configured directional backlight. OSA Contin. 2020, 3, 1555–1567.

- Fattal, D.; Peng, Z.; Tran, T.; Vo, S.; Fiorentino, M.; Brug, J.; Beausoleil, R.G. A multi-directional backlight for a wide-angle, glasses-free three-dimensional display. Nature 2013, 495, 348–351.

- Lippmann, G. La photographie integrale. Comptes-Rendus 1908, 146, 446–451.

- Lippmann, G. Integral Photography a new discovery by Professor Lipmann. Sci. Am. 1911, 19, 191.

- Stern, A.; Javidi, B. Three-dimensional image sensing, visualization, and processing using integral imaging. Proc. IEEE 2006, 94, 591–607.

- Cho, M.; Daneshpanah, M.; Moon, I.; Javidi, B. Three-dimensional optical sensing and visualization using integral imaging. Proc. IEEE 2010, 99, 556–575.

- Dudnikov, Y.A. Autostereoscopy and integral photography. Opt. Technol. 1970, 37, 422–426.

- Hong, J.; Kim, Y.; Choi, H.-J.; Hahn, J.; Park, J.-H.; Kim, H.; Min, S.-W.; Chen, N.; Lee, B. Three-dimensional display technologies of recent interest: Principles, status, and issues. Appl. Opt. 2011, 50, H87–H115.

- Martínez-Corral, M.; Dorado, A.; Navarro, H.; Llavador, A.; Saavedra, G.; Javidi, B. From the plenoptic camera to the flat integral-imaging display. In Three-Dimensional Imaging, Visualization, and Display; SPIE: Bellingham, WA, USA, 2014; pp. 96–101.

- Elmorshidy, A. Holographic projection technology: The world is changing. Comput. Sci. 2010, 2, 104–112.

- Teng, D.; Liu, L.; Wang, Z.; Sun, B.; Wang, B. All-around holographic three-dimensional light field display. Opt. Commun. 2012, 285, 4235–4240.

- Paturzo, M.; Memmolo, P.; Finizio, A.; Näsänen, R.; Naughton, T.J.; Ferraro, P. Holographic display of synthetic 3D dynamic scene. 3D Res. 2011, 1, 31–35.

- Ding, Q. Research on the Scanning Display Technology of Rotating Body in True 3D Stereoscopic Display. Master’s Thesis, Nanjing University of Aeronautics and Astronautics, Nanjing, China, 2006.

- Li, Y. Research on True 3D Stereoscopic Display Technology. Master’s Thesis, Nanjing University of Aeronautics and Astronautics, Nanjing, China, 2007.

- Grossman, T.; Wigdor, D.; Balakrishnan, R. Multi-Finger Gestural Interaction with 3D Volumetric Displays; Association for Computing Machinery: New York, NY, USA, 2004.

- Guo, Y. New ideas for stereoscopic display: Exploration of bare eye 3D display technology based on rotating array LED method. Decoration 2019, 10, 128–129.

- Peng, H. Application research of AR holographic technology based on natural interaction in national culture. In Proceedings of the 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 20–22 December 2019; pp. 2220–2224.

- Ratcliff, J.; Supikov, A.; Alfaro, S.; Azuma, R. ThinVR: Heterogeneous microlens arrays for compact, 180 degree FOV VR near-eye displays. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1981–1990.

- Wang, H. Prospects for Stereoscopic Display Technology-Background Segmentation. Master’s Thesis, Guilin University of Electronic Technology, Guilin, China, 2023.

- Zeng, X.; Zhao, Y.; Guo, T.; Lin, Z.; Zhou, X.; Zhang, Y.; Yang, L.; Chen, E. The Application and Development of Stereoscopic Display in Virtual/Augmented Reality Technology. Telev. Technol. 2017, 41, 135–140.

- Beira, J.F. 3D (Embodied) Projection Mapping and Sensing Bodies: A Study in Interactive Dance Performance. Master’s Thesis, The University of Texas at Austin, Austin, TX, USA, 2016.

- Xiong, J.; Hsiang, E.L.; He, Z.; Zhan, T.; Wu, S.T. Augmented reality and virtual reality displays: Emerging technologies and future perspectives. Light Sci. Appl. 2021, 10, 1–30.

- Zhang, G.; Sun, J.; Zhang, J. The implementation principle and application of stereoscopic display in virtual reality. In Proceedings of the Symposium on Stereoscopic Image Technology and Its Applications, Tianjin, China, 3 June 2005; pp. 31–34. [Google Scholar]

- Xu, W.; Tan, Z. Development and research of three-dimensional stereoscopic display system. Chin. J. Image Graph. 1997, 2, 64–68. [Google Scholar]

- Jia, H. Research and Implementation of Stereoscopic Display Technology in Virtual Reality. Master’s Thesis, Daqing Petroleum Institute, Daqing, China, 2002. [Google Scholar]

- Liu, L. Research on Key Technologies of Bare Eye 3D Augmented Reality. Master’s Thesis, Zhejiang University, Hangzhou, China, 2012. [Google Scholar]

- Chang, H. Research on Stereoscopic Display and Localization Algorithms in Augmented Reality. Master’s Thesis, Xi’an University of Electronic Science and Technology, Xi’an, China, 2018. [Google Scholar]

- Kumar, V.; Raghuwanshi, S.K. Implementation of helmet mounted display system to control missile 3D movement and object detection. In Proceedings of the 2020 International Conference on Power Electronics & IoT Applications in Renewable Energy and its Control (PARC), Mathura, India, 28–29 February 2020; pp. 175–179. [Google Scholar]

- Micheal, M.; Clarence, E.; James, H. Introduction to helmet mounted displays. In Helmet-Mounted Displays: Sensation, Perception and Cognition Issues; SPIE: Bellingham, WA, USA, 2000; pp. 57–84. [Google Scholar]

- Hua, H. Research progress in head mounted optical field display technology. J. Opt. 2023, 43, 80–89. [Google Scholar]

- Wei, L.; Li, Y.; Jing, J.; Feng, L.; Zhou, J. Design and fabrication of a compact off-axis see-through head-mounted display using a freeform surface. Opt. Express 2018, 26, 8550–8565. [Google Scholar] [CrossRef] [PubMed]

- The Opening Show of the 2016 Tencent WE Conference Surprised Everyone. Available online: https://www.sohu.com/a/118800339_461655 (accessed on 29 June 2024).

- Yimou Zhang + Dancers + Programmers = A Perfect Holographic Art Show. Available online: https://baijiahao.baidu.com/s?id=1594186534383850687&wfr=spider&for=pc (accessed on 29 June 2024).

- Action Capture Empowers Mixed Reality Technology to Enhance Large-Scale Performance Shows, Upgrading the Immersive Experience! Available online: https://zhuanlan.zhihu.com/p/480541499?utm_id=0 (accessed on 29 June 2024).

- Let Me Introduce His New Work “Dream Shadow Qindao” @ Zhigang Wang. Available online: https://mp.weixin.qq.com/s?__biz=MjM5MjEyMDg2MA==&mid=2649879592&idx=1&sn=e84d0bc6ca56ba9c3b4dcaeb1804441a&chksm=beae7d5b89d9f44d621db6689dcc0ef3e4f86167101460e6091da8021b8a4c418b6d3b0b209d&scene=27 (accessed on 29 June 2024).

- It Is Said That This Is a Magical and Creative Stage Play that 99% of People Have Not Watched. Available online: https://www.sohu.com/a/327017670_174981 (accessed on 29 June 2024).

- Large Scale Holographic Light and Shadow Digital Exhibition of “Silk Road Flower Rain”, Reproducing History and Inheriting Culture! Available online: https://www.sohu.com/a/252086220_100224719 (accessed on 29 June 2024).

- Clay, A.; Domenger, G.; Conan, J.; Domenger, A.; Couture, N. Integrating augmented reality to enhance expression, interaction & collaboration in live performances: A ballet dance case study. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality-Media, Art, Social Science, Humanities and Design (ISMAR-MASH’D), Munich, Germany, 10–12 September 2014; pp. 21–29. [Google Scholar]

- Liu, C. Exploring the Application of Modern Technology in the Field of Television Dance. Master’s Thesis, Guangxi Academy of Arts, Nanning, China, 2018. [Google Scholar]

- Ting, Y.-W.; Lin, P.-H.; Lin, C.-L. The transformation and application of virtual and reality in creative teaching: A new interpretation of the triadic ballet. Educ. Sci. 2023, 13, 61. [Google Scholar] [CrossRef]

- Virtual Reality Dance Movies: Stage Authority Gives Audience an Immersive Interaction. Available online: http://art.ifeng.com/2016/0311/2780559.shtml (accessed on 29 June 2024).

- Raheb, K.E.; Stergiou, M.; Katifori, A.; Ioannidis, Y. Dance interactive learning systems: A study on interaction workflow and teaching approaches. ACM Comput. Surv. 2019, 52, 1–37. [Google Scholar] [CrossRef]

- Iqbal, J.; Sidhu, M.S. Augmented reality-based dance training system: A study of its acceptance. In Design, Operation and Evaluation of Mobile Communications: Second International Conference, MOBILE 2021, Held as Part of the 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021; Proceedings 23; Springer: Berlin/Heidelberg, Germany, 2021; pp. 219–228.

- Lee, H. 3D holographic technology and its educational potential. TechTrends 2013, 57, 34–39.

- Camurri, A.; Raheb, K.E.; Even-Zohar, O.; Ioannidis, Y.; Markatzi, A.; Matos, J.M.; Morley-Fletcher, E.; Palacio, P.; Romero, M.; Sarti, A. WhoLoDancE: Towards a methodology for selecting Motion Capture Data across different Dance Learning Practice. In Proceedings of the 3rd International Symposium on Movement and Computing, Thessaloniki, Greece, 5–6 July 2016; pp. 1–2.

- Wood, K.; Cisneros, R.E.; Whatley, S. Motion capturing emotions. Open Cult. Stud. 2017, 1, 504–513.

- Wholodance Newsletter | Issue 1. Available online: https://issuu.com/lynkeus-rome/docs/wholodance_newsletter_issue_1 (accessed on 27 August 2024).

- Chan, J.C.; Leung, H.; Tang, J.K.; Komura, T. A virtual reality dance training system using motion capture technology. IEEE Trans. Learn. Technol. 2010, 4, 187–195.

- Kyan, M.; Sun, G.; Li, H.; Zhong, L.; Muneesawang, P.; Dong, N.; Elder, B.; Guan, L. An approach to ballet dance training through MS Kinect and visualization in a CAVE virtual reality environment. ACM Trans. Intell. Syst. Technol. 2015, 6, 1–37.

- Saxena, V.V.; Feldt, T.; Goel, M. Augmented telepresence as a tool for immersive simulated dancing in experience and learning. In Proceedings of the 6th Indian Conference on Human-Computer Interaction, New Delhi, India, 7–9 December 2014; pp. 86–89.

- Kirakosian, S.; Daskalogrigorakis, G.; Maravelakis, E.; Mania, K. Near-contact person-to-3D character dance training: Comparing AR and VR for interactive entertainment. In Proceedings of the 2021 IEEE Conference on Games (CoG), Copenhagen, Denmark, 17–20 August 2021; pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).