Abstract

Deep learning models have demonstrated significant advantages over traditional algorithms in image processing tasks such as object detection. However, training such deep networks requires a large amount of data, which limits their application to tasks such as biometric recognition that require many training samples for each class (i.e., each individual). Researchers developing such complex systems rely on real biometric data, which raises privacy concerns and is restricted by the availability of extensive, varied datasets. This paper proposes a generative adversarial network (GAN)-based solution to produce training data (palm images) for improved biometric (palmprint-based) recognition systems. We investigate the performance of the most recent StyleGAN models in generating a thorough contactless palm image dataset for application in biometric research. Training on the publicly available H-PolyU and IIDT palmprint databases, a total of 4839 images were generated using StyleGAN models. SIFT (Scale-Invariant Feature Transform) was used to assess uniqueness through features detected at different scales and orientations, yielding an average similarity score of 16.12% for the most recent StyleGAN3-based model. For the regions of interest (ROIs) in both the palm and fingers, the average similarity score was 17.85%. The proposed model achieved a Fréchet Inception Distance (FID) of 16.1, indicating high-quality image generation. These results demonstrate that StyleGAN is effective in producing unique synthetic biometric images.

1. Introduction

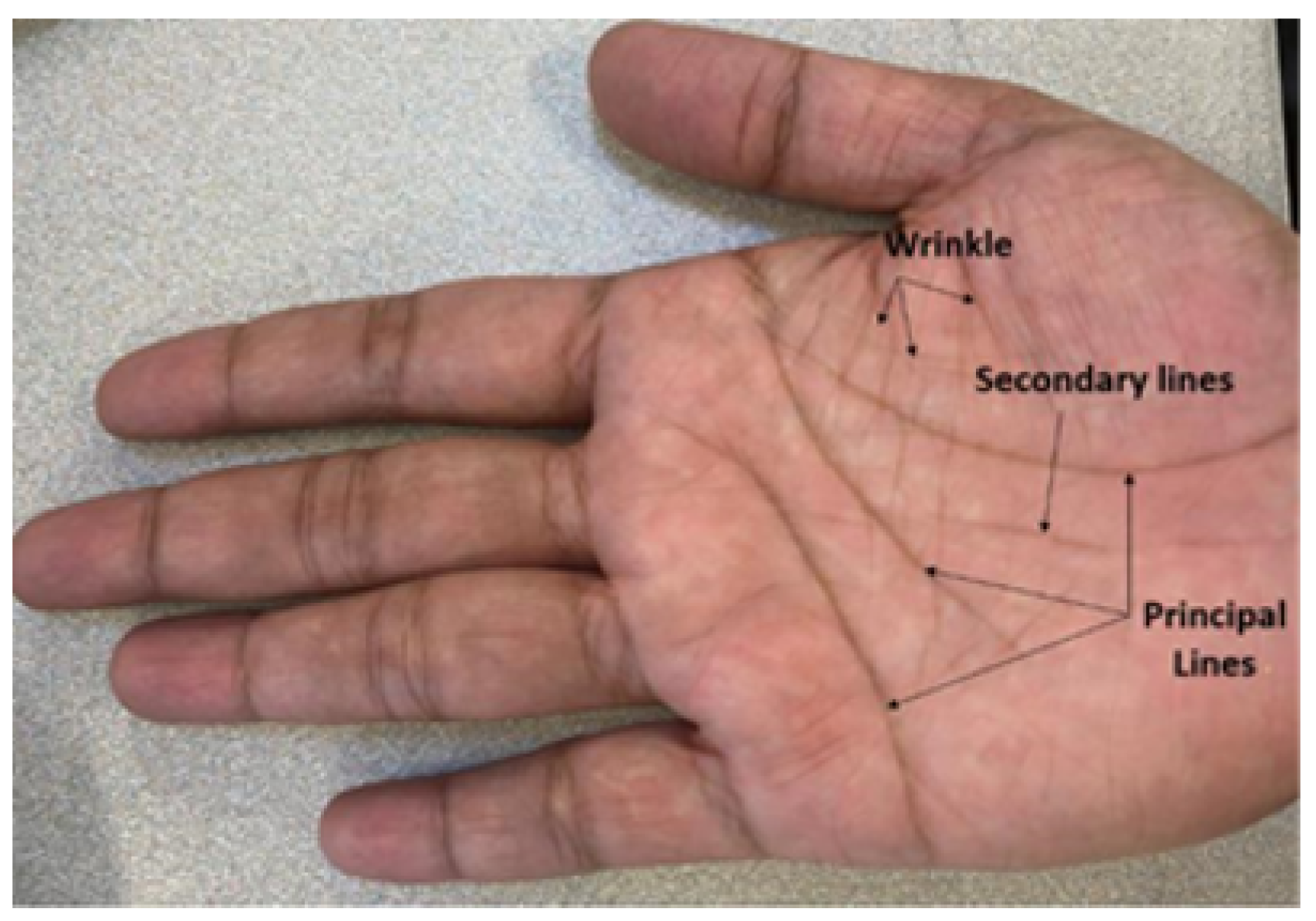

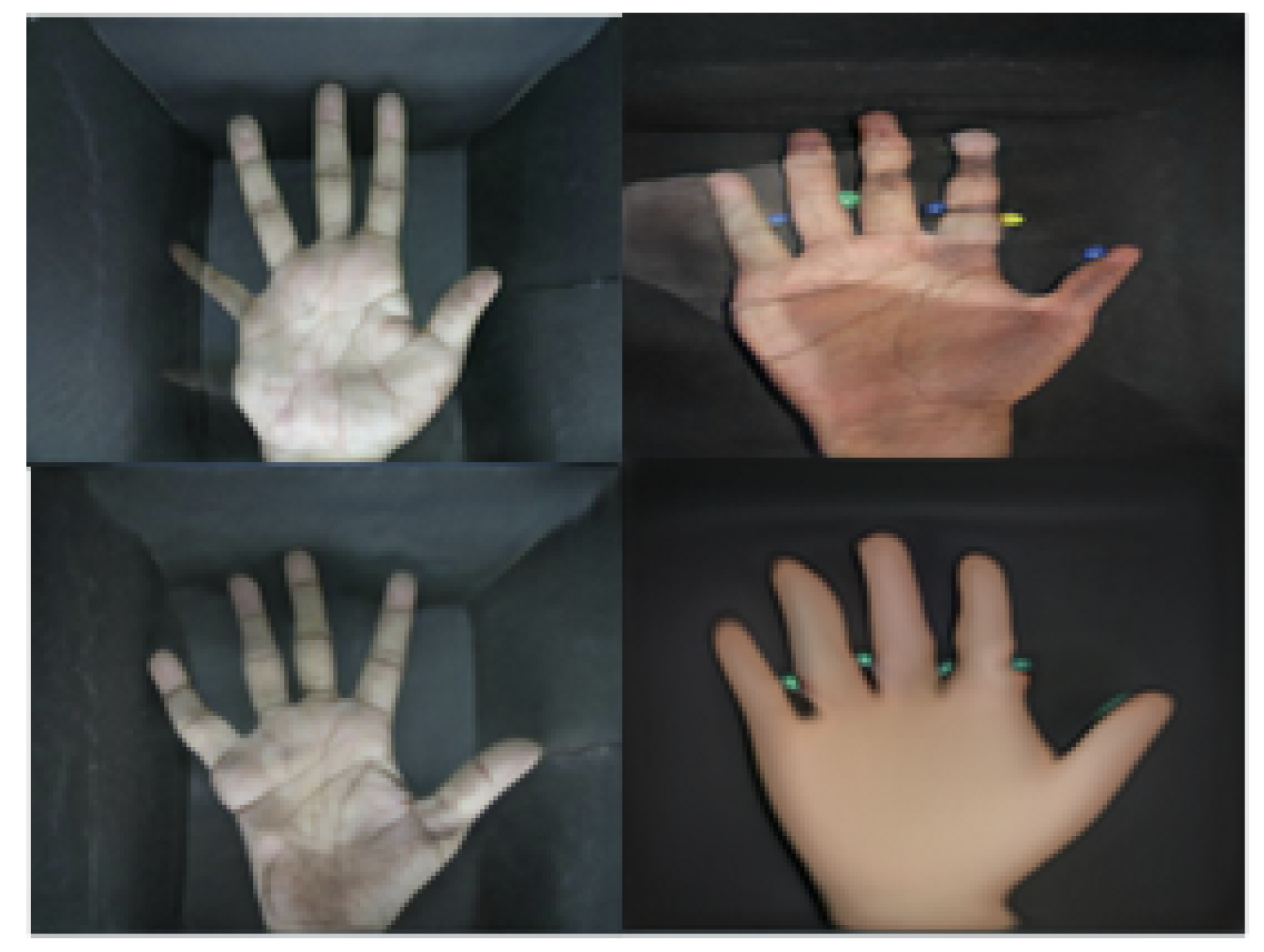

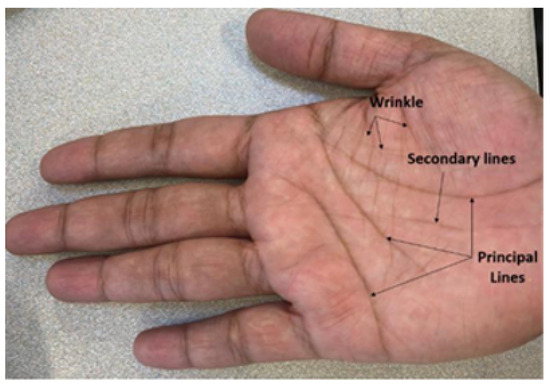

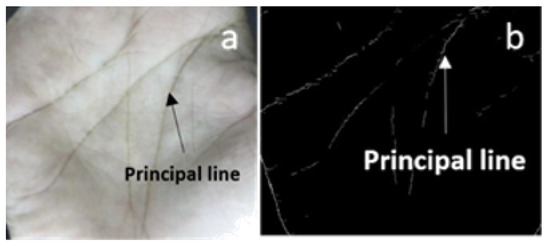

Biometric recognition technology helps ensure that only authorized users can access a system [1]. It captures and validates user identities through physiological or behavioral biometrics such as the face, iris, fingerprint, EEG, and voice [2,3]. The palmprint, a fast-growing and relatively new biometric, is taken from the inner surface of the hand between the wrist and the fingers [1]. A palmprint is an impression of the palm on a surface and contains rich intrinsic features, including the principal lines and wrinkles (Figure 1) [1], as well as abundant ridge- and minutia-based features similar to those of a fingerprint [4,5]. These features enable high accuracy and reliable performance in personal verification and identification [6,7,8].

Figure 1.

Palmprint feature definitions with principal lines and wrinkles [1].

Several techniques for palmprint recognition have been proposed, such as minutia-based, geometry-based, and transform-based features [7]. Various image processing methods exist to process these features, including encoding-based algorithms, structure-based methods, and statistics-based methods [9]. Many recent methods in the literature incorporate deep learning because of its high recognition accuracy and adaptability to various biometrics [10]. Training such deep learning models may require large datasets [10]. However, smaller datasets can also be used by employing effective data augmentation techniques.

The National Institute of Standards and Technology (NIST) recently discontinued several publicly available datasets from its catalog due to privacy issues [11,12]. To address the limited availability of data, synthetic palmprints have been generated, and the generation tool has been made available for public use. This approach was motivated by previous works like Palm-GAN [13], which aimed to generate palmprint images using generative adversarial networks (GANs).

The primary motivations for creating synthetic images are their affordability, effectiveness, and ability to provide increased privacy during testing. Moreover, the quality and resolution of GAN-generated images have improved significantly in recent years [7,8,9]. Standard GAN generators share a common architecture: coarse, low-resolution features are created first and progressively refined through upsampling layers, blended locally by convolution layers, and enriched with additional detail through nonlinearities [13]. However, despite this apparently hierarchical structure, existing GAN architectures do not generate images in a naturally hierarchical way. While coarse features largely control whether finer details appear, they do not precisely determine their locations [12]. Instead, much of the fine detail is tied to fixed pixel coordinates.

Synthetic data can be used in place of real-world data [13]. A generator model learns from training images to produce synthetic images. In this setting, synthetic data have an advantage over real data for enrollment, detection, and verification [14]. Large synthetic datasets can be produced at low cost and with little effort while posing no privacy risk [15]. A single synthetic image can also be modified in well-controlled ways to expand the dataset [13]. The traditional method of developing synthetic images involves changing image orientation and applying filters such as the Gabor filter, which alter the final structure of the image [5,10,16]. Other classical approaches change the orientation of a fingerprint or the skin color in facial biometrics [11,12]. There is no traditional approach to generating synthetic palmprints [14].
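The classical, non-generative augmentation described above (orientation changes plus Gabor filtering) can be sketched in NumPy as follows. This is an illustrative sketch, not the method of [5,10,16]: restricting rotations to multiples of 90° and the kernel parameters (15 × 15 kernel, sigma 3, wavelength 8, three orientations) are arbitrary assumptions.

```python
import numpy as np


def gabor_kernel(size, sigma, theta, wavelength, gamma=0.5, psi=0.0):
    """Real part of a 2D Gabor kernel: a Gaussian envelope times a cosine carrier."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame so the carrier oscillates along direction theta
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength + psi)
    return envelope * carrier


def filter2d(image, kernel):
    """Same-size 2D filtering (correlation) with zero padding; kernel sides must be odd."""
    kh, kw = kernel.shape
    padded = np.pad(image.astype(float), ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def classical_variants(image, thetas=(0.0, np.pi / 4, np.pi / 2)):
    """Rotated copies plus Gabor-filtered copies of a grayscale image."""
    variants = [np.rot90(image, k) for k in (1, 2, 3)]  # 90, 180, 270 degrees
    for theta in thetas:
        kernel = gabor_kernel(size=15, sigma=3.0, theta=theta, wavelength=8.0)
        variants.append(filter2d(image, kernel))
    return variants
```

Each variant changes the image structure only in a fixed, hand-designed way, which is why such transformations cannot produce new identities the way a generative model can.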

A framework for generating palm images using a style-based generator named StyleGAN2-ADA, a variant within the StyleGAN family, was previously introduced [1]. The current goal is to create synthetic images with different GANs, namely StyleGAN2-ADA and StyleGAN3, to demonstrate a more realistic transformation process in which the position of each detail in the image is determined entirely by the underlying features. To the best of our knowledge, this is the only StyleGAN-based approach that generates high-resolution palm images of up to 2048 × 2048 pixels. A previous study applied a TV-GAN-based framework to generate palmprints; however, it did not generate high-resolution images [13].

The contributions are as follows:

- Our model utilizes a high-resolution and progressive growth training approach, producing realistic shapes and hand–image boundaries without facing quality issues.

- New quality metrics are developed to assess the usability of generated images and their similarity to original palm images, ensuring that the synthetic palm images do not reveal real identities.

- The trained model is publicly available and is, to our knowledge, the first StyleGAN-based palm image synthesis model.

- The SIFT (Scale-Invariant Feature Transform)-based method to filter unwanted images from the generated synthetic images is open-sourced.

- A novel script to detect finger anomalies in the field of palmprint recognition is open-sourced.

The rest of the paper is organized as follows. Section 2 reviews the architectures of the StyleGAN models used in this work. Section 3 describes the datasets used in the experiments, and Section 4 presents the training and evaluation methodology. The implementation of model training is discussed in Section 5. The results and discussion are presented in Section 6, and the conclusions are provided in Section 7.

2. Architectural Background of StyleGANs

2.1. Overview of StyleGAN2-ADA

Previous work proposed the use of StyleGAN2-ADA, a GAN architecture from the StyleGAN family [17,18]. StyleGAN2-ADA is an improved version of StyleGAN that uses adaptive discriminator augmentation (ADA). StyleGAN, introduced in 2018, demonstrated the ability to produce synthetic faces indistinguishable from real ones [18]. However, StyleGAN needs tens of thousands of training images for optimal results and requires powerful graphics processing unit (GPU) resources [17]. StyleGAN2-ADA, introduced in 2020, enables new models to be trained by transfer learning from existing ones: by starting from a pretrained model and fine-tuning it on a custom dataset, the desired results can be achieved for that dataset.
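The adaptive part of ADA can be illustrated with the overfitting heuristic described for StyleGAN2-ADA: r_t = E[sign(D(x_real))] is compared with a target value, and the augmentation probability p is nudged up when the discriminator becomes overconfident on real images and down otherwise. The sketch below assumes a target of 0.6, an update every 4 minibatches, and a step size that lets p travel from 0 to 1 over about 500k images; these defaults reflect our reading of NVIDIA's reference implementation and should be checked against it. Discriminator outputs are passed in as a plain array.

```python
import numpy as np


def update_augment_probability(p, d_real_logits, batch_size, target=0.6,
                               interval=4, speed_kimg=500):
    """One ADA-style update of the augmentation probability p (assumed defaults).

    r_t = E[sign(D(x_real))] approaches 1 when the discriminator confidently
    labels every real training image as real, a sign of overfitting.
    """
    r_t = float(np.mean(np.sign(d_real_logits)))
    # Step size chosen so p can move from 0 to 1 in about speed_kimg thousand images.
    step = batch_size * interval / (speed_kimg * 1000)
    p = p + np.sign(r_t - target) * step
    return float(np.clip(p, 0.0, 1.0)), r_t
```

Because p only increases while r_t stays above the target, augmentation strength adapts to the dataset size instead of being tuned by hand, which is what makes fine-tuning on small datasets such as palm images practical.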

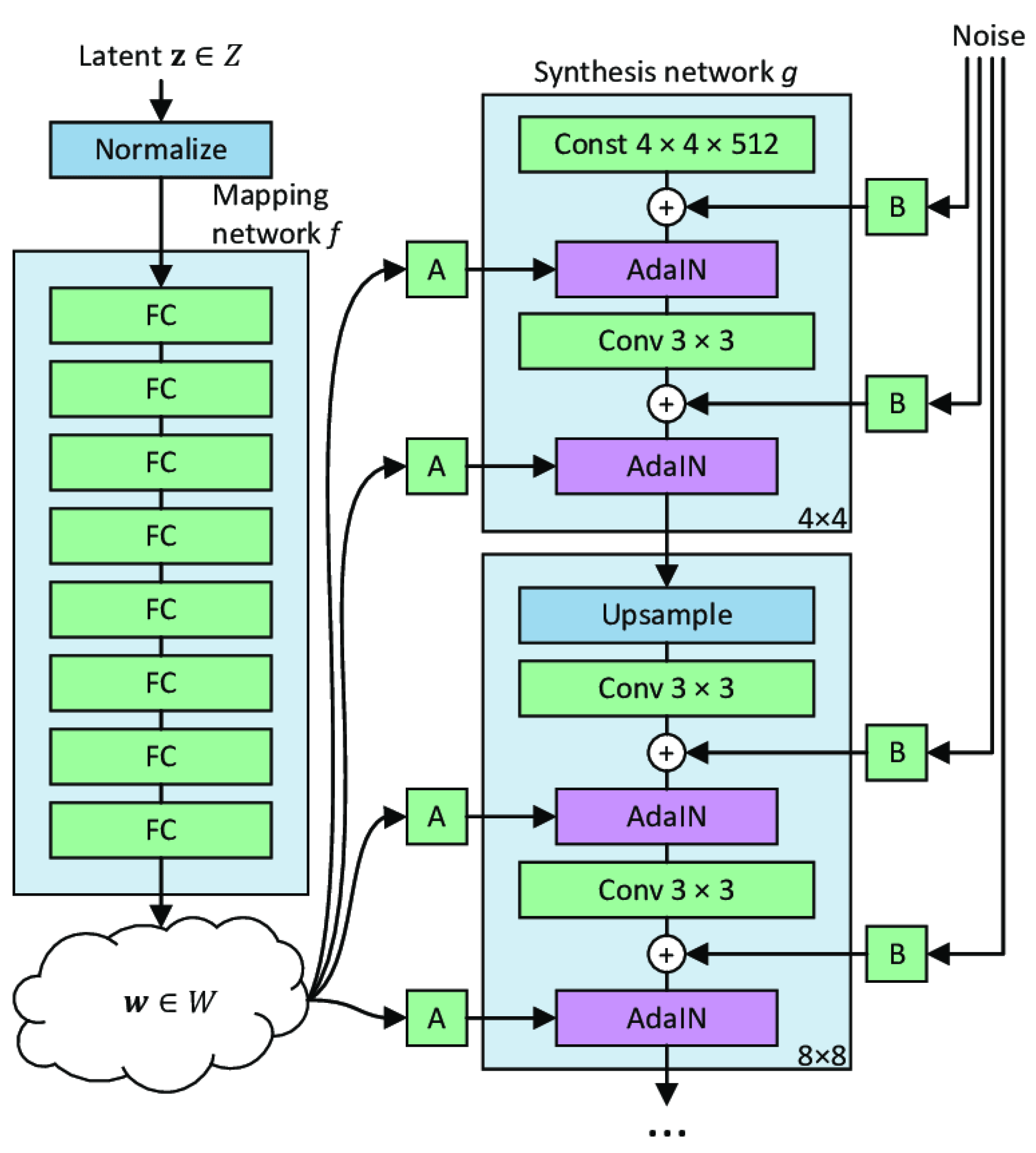

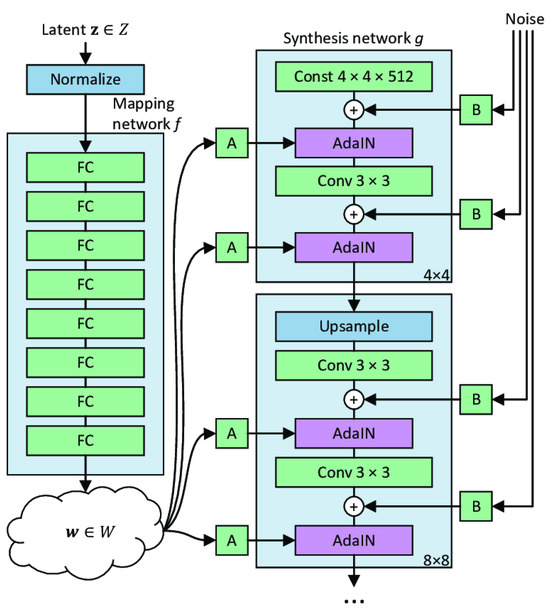

This approach leverages the pretrained model's existing capabilities and improves its performance on a particular task with fewer resources [18,19]. StyleGAN2-ADA [19] builds on two main features of the StyleGAN family: generating high-resolution images through progressive growing [18] and injecting image styles into each layer, originally through adaptive instance normalization (AdaIN) [19]. For instance, generation starts at 4 × 4 pixels, and higher-resolution generator and discriminator layers are added gradually up to 2048 × 2048 pixels. The general architecture is shown in Figure 2.
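AdaIN, the style-injection operation of the original StyleGAN, can be sketched as follows. This is a minimal NumPy illustration under our own assumptions: the learned affine layer that maps the intermediate latent w to per-channel scale and bias is omitted, and those values are passed in directly. Note that StyleGAN2, on which StyleGAN2-ADA is based, replaces AdaIN with weight modulation and demodulation, which serves the same style-injection role.

```python
import numpy as np


def adain(x, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization of a feature map x with shape (C, H, W).

    Each channel is normalized to zero mean and unit variance over its spatial
    positions, then scaled and shifted by per-channel style parameters
    (style_scale and style_bias, each of shape (C,)).
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]


rng = np.random.default_rng(0)
features = rng.random((4, 8, 8))
styled = adain(features, np.array([1.0, 2.0, 3.0, 4.0]), np.array([0.0, 1.0, -1.0, 5.0]))
# Each output channel now has (approximately) the requested mean and standard deviation.
```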

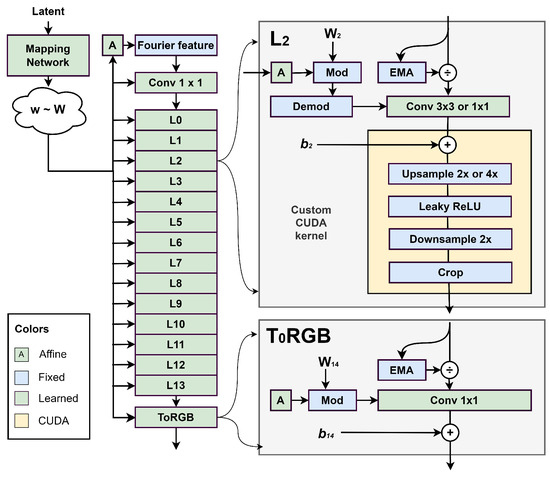

Figure 2.

General architecture of StyleGAN2-ADA.

2.2. Overview of StyleGAN3 Architecture

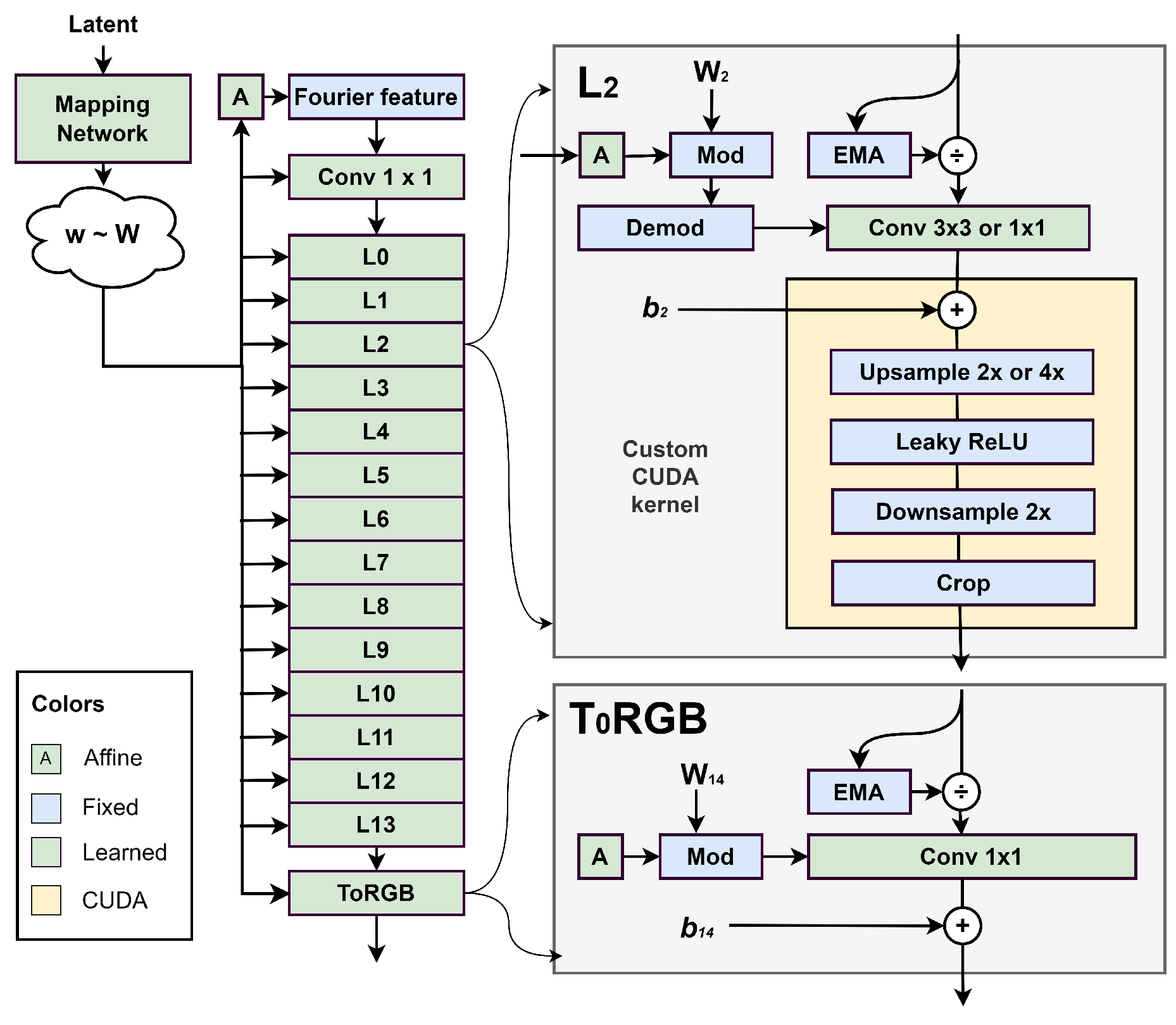

StyleGAN3 [20], the most recent advancement in NVIDIA's StyleGAN series, includes significant architectural improvements over the previous models, StyleGAN and StyleGAN2. One of its notable features is an alias-free design, which prevents aliasing artifacts in the generated images [20]; in earlier models, fine details such as textures tend to stick to fixed pixel coordinates instead of moving with the depicted object. StyleGAN3 also effectively addresses distorted images, which often appear improperly scaled or misshapen [21,22,23,24,25]. Each step of the generation process is designed to avoid such issues, so the generated images remain consistent even under transformations such as translation and rotation. The architecture of StyleGAN3 is shown in Figure 3.

Figure 3.

StyleGAN3 generator architecture [20].

StyleGAN3 also refines the training techniques used in StyleGAN2 and StyleGAN2-ADA, specifically targeting the elimination of visual distortions. This leads to a more reliable training process and higher-quality images. This combination of improved architecture and training methods makes StyleGAN3 an innovative tool in the field of generative adversarial networks.
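The aliasing that StyleGAN3's alias-free design targets can be seen in a toy 1D setting: resampling a signal without low-pass filtering folds frequencies above the new Nyquist limit back into lower frequencies. The sketch below is our own illustration, not StyleGAN3's code; the 31-tap Hamming-windowed sinc filter is an arbitrary assumption chosen for simplicity.

```python
import numpy as np


def naive_downsample(x, factor):
    """Keep every `factor`-th sample without any filtering (prone to aliasing)."""
    return x[::factor]


def lowpass_kernel(cutoff, taps=31):
    """Hamming-windowed sinc low-pass filter; cutoff in cycles per sample (< 0.5)."""
    n = np.arange(taps) - (taps - 1) / 2
    h = 2 * cutoff * np.sinc(2 * cutoff * n) * np.hamming(taps)
    return h / h.sum()  # unit gain at DC


def filtered_downsample(x, factor, taps=31):
    """Low-pass at the new Nyquist frequency, then decimate."""
    h = lowpass_kernel(0.5 / factor, taps)
    return np.convolve(x, h, mode="same")[::factor]


t = np.arange(256)
x = np.sin(2 * np.pi * 0.4 * t)      # 0.4 cycles/sample, above the post-decimation Nyquist (0.25)
naive = naive_downsample(x, 2)        # folds back to a 0.2 cycles/sample wave at near-full amplitude
filtered = filtered_downsample(x, 2)  # the component is strongly attenuated away from the edges
```

The naive result contains a spurious wave that was never in the intended low-resolution signal; StyleGAN3 applies the same filtering principle throughout its generator, including around its nonlinearities [20].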

3. Datasets

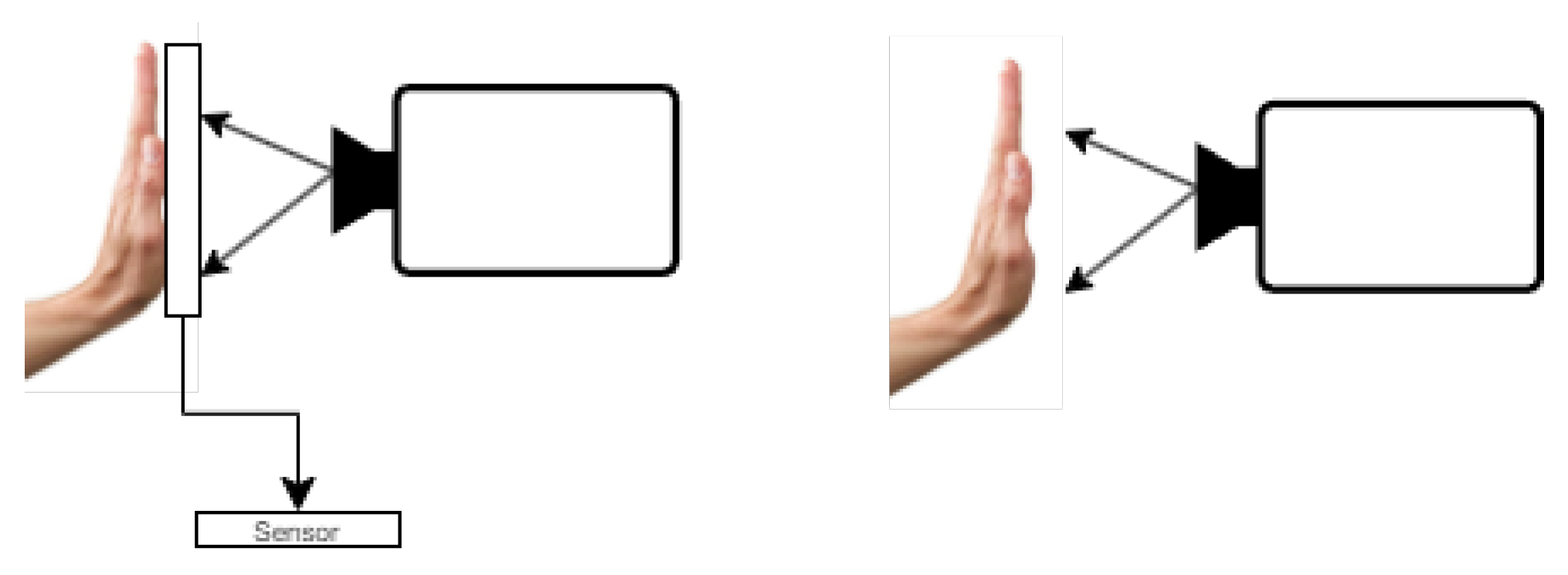

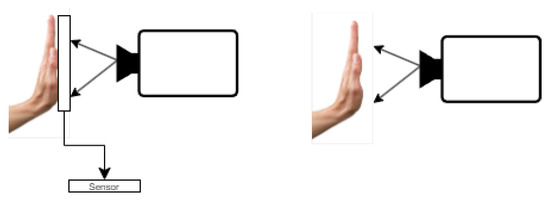

Palmprint images can be captured using either contact-based or contactless techniques. In contact-based capture, individuals must place their hands in close contact with a sensor, which ensures that the hands are properly positioned for capture, as shown in Figure 4a. In contrast, contactless capture can be performed with easily accessible commercial sensors, as shown in Figure 4b.

Figure 4.

Hand position during contact-based (a) and contactless (b) palmprint capture [26].

StyleGAN models can be trained on many public palmprint datasets. For this research, two publicly available datasets were chosen for the following reasons:

- Each dataset contains a large amount of image data with many skin color variations, which supports better model training.

- Images in both datasets are provided at high resolution, which allows more flexibility in resizing.

- They have been the most popular datasets in similar past research [17,19,22].

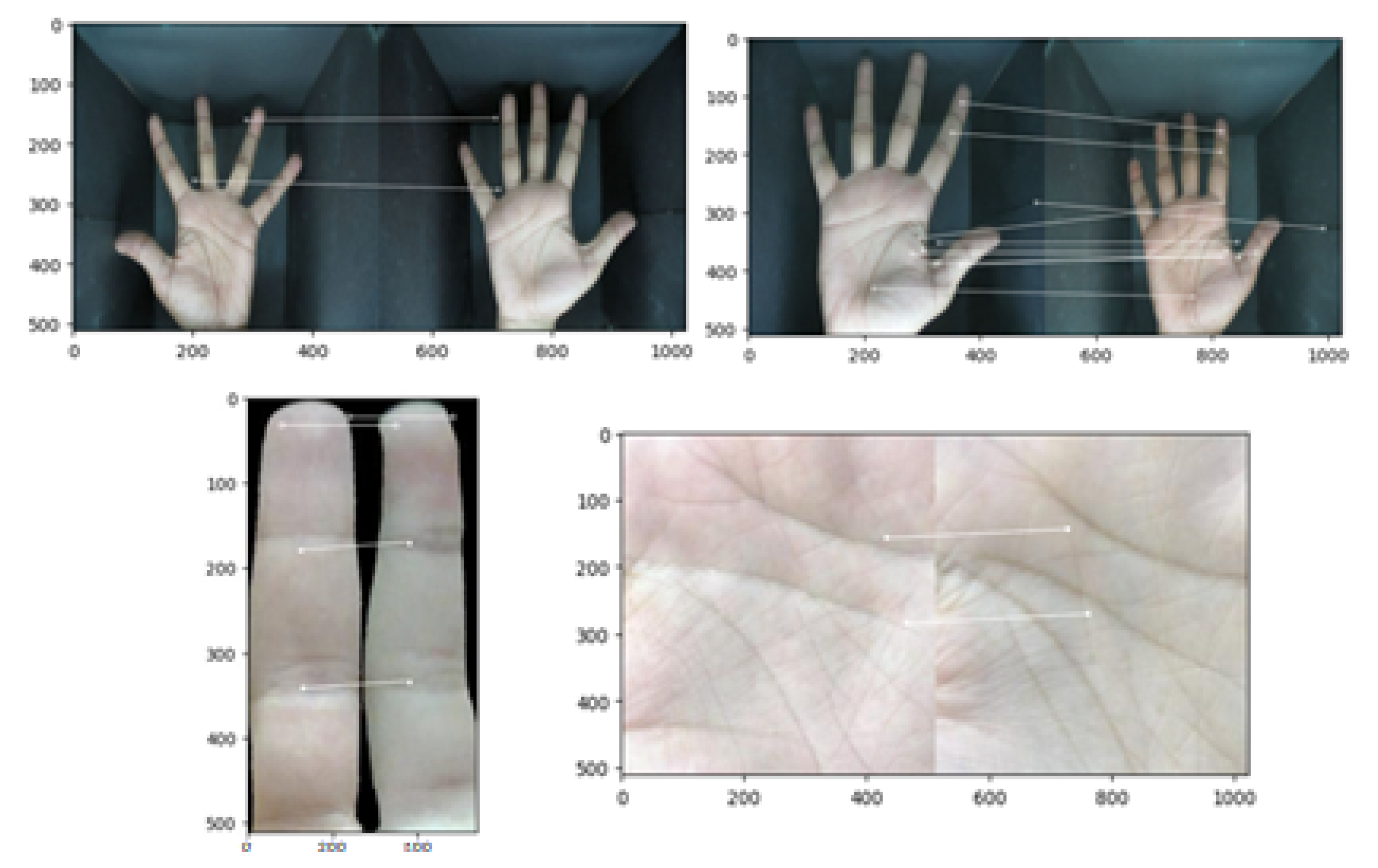

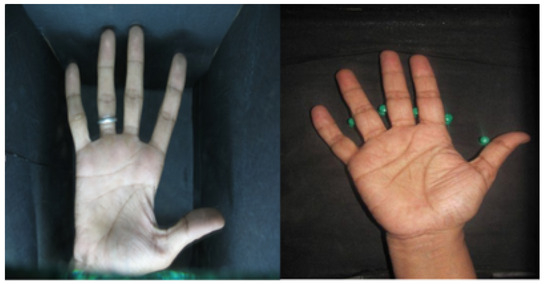

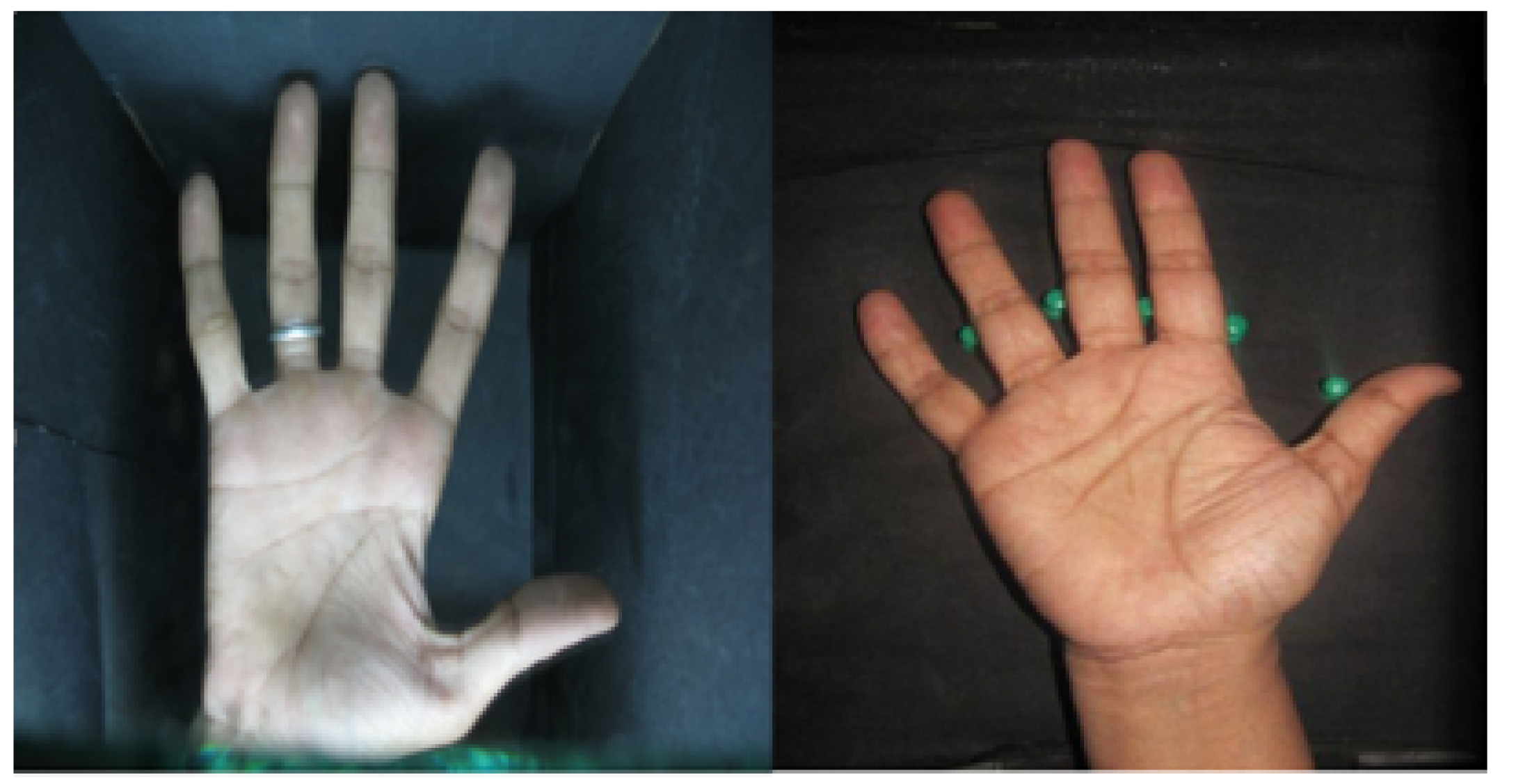

Figure 5 shows sample images from both datasets:

- Polytechnic U [27] (DB1) has 1610 images collected in an indoor environment with circular fluorescent illumination around the camera lens. The dataset contains both the left and right hands of 230 subjects aged 12–57 years, with approximately seven images captured of each hand. The image resolution is 800 × 600 pixels.

- Touchless palm image dataset [28] (DB2) consists of a total of 1344 images from 168 different people (8 images from each hand) taken with a digital camera at an image resolution of 1600 × 1200 pixels.

Figure 5.

Illustration of dataset images: (Left) Polytechnic U; (Right) IIT-Pune [23,28].

4. Methodology

In this work, we applied the StyleGAN2-ADA and StyleGAN3 models to palm images to generate synthetic images. We used multi-resolution images for palm image synthesis. Multi-resolution GAN models begin training the generator (G) and discriminator (D) at low spatial resolutions and progressively increase (grow) the spatial resolution throughout training. Progressive growing allows GANs to effectively capture the high-frequency components of the training data and produce high-fidelity, realistic images.
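The resolution schedule and the fade-in step of progressive growing can be sketched as follows. This illustrates the mechanism as originally proposed for progressive GANs; it is not code from either StyleGAN model, and the 4 to 512 pixel schedule simply mirrors the StyleGAN2-ADA training range reported in Section 5.2.

```python
import numpy as np


def resolution_schedule(start=4, final=512):
    """Resolutions visited by progressive growing, doubling at each stage."""
    resolutions = [start]
    while resolutions[-1] < final:
        resolutions.append(resolutions[-1] * 2)
    return resolutions


def upsample_nearest(img):
    """Double the spatial size of an (H, W, C) image by repeating pixels."""
    return img.repeat(2, axis=0).repeat(2, axis=1)


def faded_output(low_res_rgb, high_res_rgb, alpha):
    """Blend the previous stage's upsampled output with the new layer's output.

    alpha ramps from 0 to 1 while the new higher-resolution layer is faded in,
    so a freshly added, untrained layer does not disrupt the network.
    """
    return (1.0 - alpha) * upsample_nearest(low_res_rgb) + alpha * high_res_rgb


print(resolution_schedule(4, 512))  # [4, 8, 16, 32, 64, 128, 256, 512]
```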

4.1. Training Models

To be processed effectively, the dataset must first be converted into the .tfrecords format. For this analysis, we followed NVIDIA's StyleGAN training implementation [18]. We organized the datasets into various combinations; for instance, separate groups such as datasets A and B can be formed along with a combined dataset C, each containing different but relevant subsets of the data. This approach improves performance by tailoring each dataset to emphasize the key features relevant to the study. The number of epochs and iterations must be adjusted according to the datasets and scripts used. Furthermore, the training time of each model varies with the system's specifications, namely RAM and GPU. The synthetic images produced depend on the number of epochs run, and a subsequent quality evaluation determines the reliability of the models.
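A sketch of how such groups (A, B, and a combined C) might be assembled from a list of labeled images follows. The record format, the group names, and the use of the hand side as the grouping key are assumptions for illustration; the actual training sessions are those listed in Table 1.

```python
from collections import defaultdict


def build_groups(records):
    """Group image paths by (database, hand) and form combined training sets.

    records: iterable of (database, hand, path), e.g. ("DB1", "right", "img_001.png").
    Group names below are hypothetical.
    """
    by_key = defaultdict(list)
    for database, hand, path in records:
        by_key[(database, hand)].append(path)

    def union(keys):
        return sorted(path for key in keys for path in by_key.get(key, []))

    right = [key for key in by_key if key[1] == "right"]
    left = [key for key in by_key if key[1] == "left"]
    return {
        "A_right_only": union(right),
        "B_left_only": union(left),
        "C_combined": union(list(by_key)),
    }
```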

4.2. Quality Assessment

Almost all generated images should be of good quality once an optimal number of epochs is reached. However, some will inevitably be of poor quality. To eliminate poor-quality images, a two-stage assessment was used. First, manual visual inspection removed the most obvious unwanted images. Then, a SIFT-based image processing method was applied. The method is based on the following definitions:

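Each image is represented by its pixel values, X = {x(i, j)} and Y = {y(i, j)}, where x(i, j) and y(i, j) are the pixels at image position (i, j). The Euclidean distance between test sample X and training sample Y is denoted as d(X, Y):

$$d(X, Y) = \sqrt{\sum_{i=1}^{H} \sum_{j=1}^{W} \left( x(i, j) - y(i, j) \right)^{2}}$$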

where H and W are the height and width of the test images, respectively. The training samples must be the same size as the test sample. To focus on the region of interest (ROI), all images are resized to a standard dimension, which ensures that the relevant area is consistently and accurately represented across the entire dataset. This approach allows the principal lines in the palm images to be detected. SIFT works by computing the gradient magnitude and orientation of the image intensity at each pixel.
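The Euclidean distance between two equal-size images, summed over all H × W pixel positions, and the per-pixel gradient computation can be sketched in NumPy as follows. This is a simplified illustration: real SIFT computes gradients on Gaussian-smoothed images across a scale space, whereas `np.gradient` here uses plain central differences on the input.

```python
import numpy as np


def euclidean_distance(x, y):
    """d(X, Y) over all H x W pixel positions; both images must share a shape."""
    if x.shape != y.shape:
        raise ValueError("test and training samples must have the same size")
    diff = x.astype(float) - y.astype(float)
    return float(np.sqrt(np.sum(diff ** 2)))


def gradient_magnitude_orientation(img):
    """Per-pixel gradient magnitude and orientation (radians) via central differences."""
    gy, gx = np.gradient(img.astype(float))  # derivatives along rows (y), then columns (x)
    return np.hypot(gx, gy), np.arctan2(gy, gx)


print(euclidean_distance(np.array([[0.0, 0.0]]), np.array([[3.0, 4.0]])))  # 5.0
```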

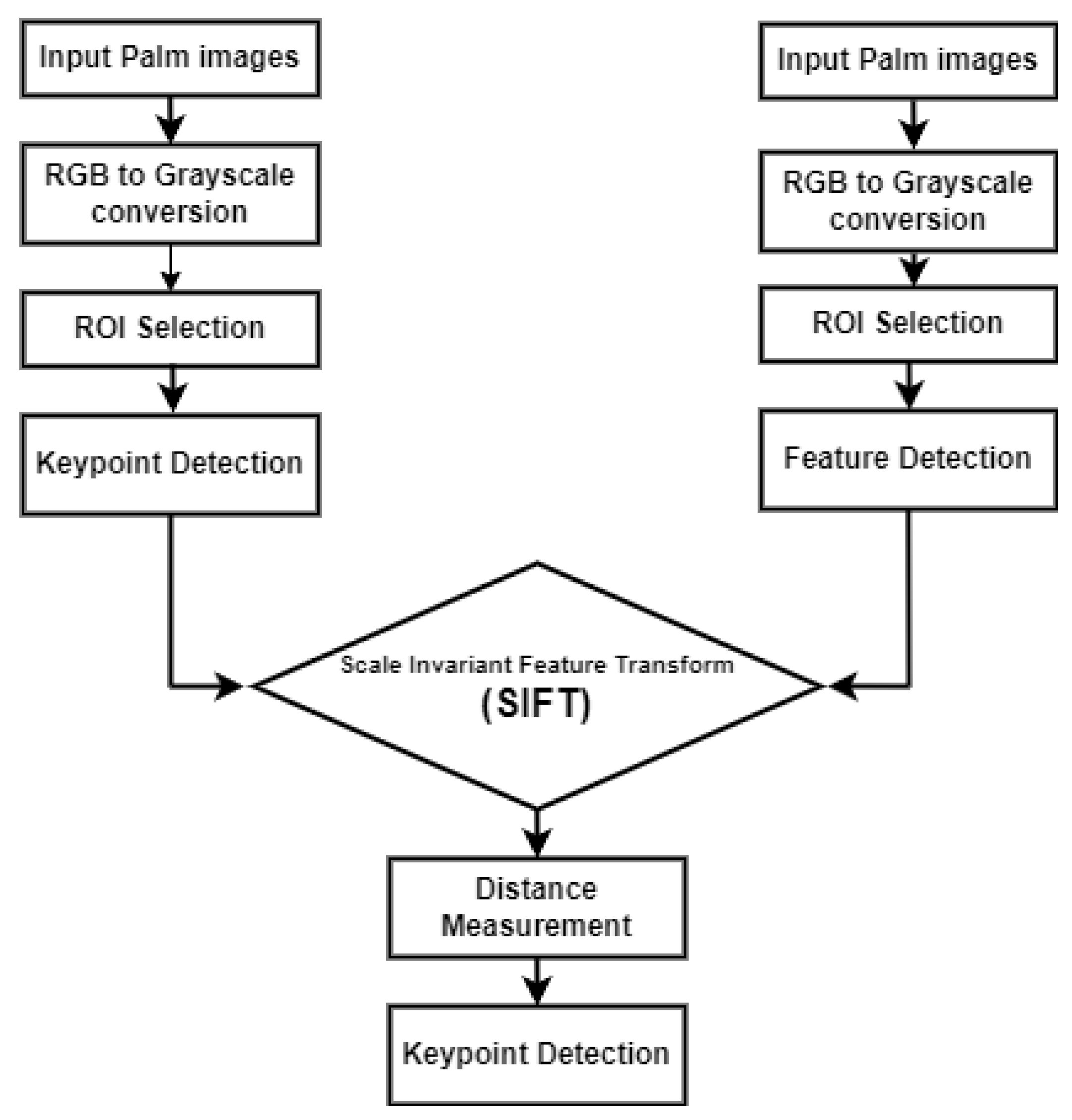

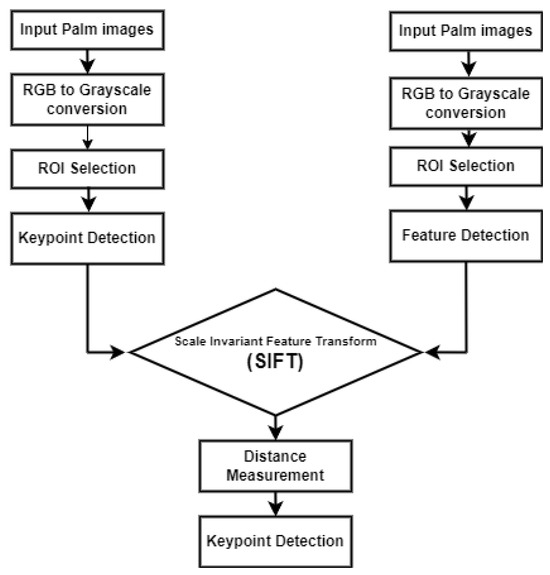

The flowchart in Figure 6 shows how the SIFT-based filter proceeds from input images to performance evaluation. Both the test and reference images are first converted from RGB to grayscale. After ROI extraction, the SIFT algorithm is applied, followed by a distance measurement; here, we used the Euclidean distance. For quality assessment, SIFT is thus used to measure the similarity between each generated image and its corresponding image from the dataset. A larger Euclidean distance means a lower similarity score, indicating that the generated image is non-ideal and unwanted.
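The grayscale conversion and descriptor-matching steps of the flowchart can be sketched with NumPy as follows. In practice, keypoints and 128-dimensional descriptors would come from OpenCV (`cv2.SIFT_create()` followed by `detectAndCompute`); here descriptors are plain arrays so that the sketch is self-contained. The text does not state which matcher or match criterion was used, so brute-force Euclidean matching with Lowe's ratio test at 0.75 is an assumption. OpenCV loads images in BGR order, whereas `rgb_to_gray` assumes RGB.

```python
import numpy as np


def rgb_to_gray(rgb):
    """Luma conversion with ITU-R BT.601 weights (the weights OpenCV uses)."""
    return rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])


def count_good_matches(desc_a, desc_b, ratio=0.75):
    """Count descriptors in desc_a whose nearest neighbour in desc_b passes Lowe's ratio test.

    desc_a: (Na, D) and desc_b: (Nb, D) descriptor arrays with Nb >= 2.
    Distances are Euclidean, as in a brute-force L2 matcher.
    """
    a = desc_a.astype(np.float64)
    b = desc_b.astype(np.float64)
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, avoiding an (Na, Nb, D) temporary array
    sq = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
    dists = np.sqrt(np.maximum(sq, 0.0))
    nearest_two = np.sort(dists, axis=1)[:, :2]
    good = nearest_two[:, 0] < ratio * nearest_two[:, 1]
    return int(np.count_nonzero(good))
```

The ratio test discards ambiguous matches, where the best and second-best candidates are almost equally close, which keeps repetitive palm texture from inflating the match count.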

Figure 6.

Flowchart for filtering unwanted images using the SIFT algorithm.

4.3. Performance Evaluation

For each model, after quality assessment, the generated images are divided into two categories: “good” for ideal images and “poor” for unwanted images. The performance of each model is then evaluated by measuring the uniqueness of the generated images. SIFT is again used to measure similarity scores, but here pairs of generated images are selected at random (unlike in the quality assessment, where each generated image is compared with its corresponding dataset image) and compared using SIFT feature extraction. The lower the score, the greater the uniqueness and thus the better the model's performance.

5. Implementation

5.1. Preparing Scripts and Datasets

The datasets were initially converted to the .tfrecords format because it requires less space than the original format. The StyleGAN2-ADA example script provided by NVIDIA [18] was modified to adjust its parameters to the datasets' requirements. To accommodate the different palm features of left and right hands, such as principal and secondary lines, the datasets were grouped into various combinations to achieve better output: right-hand images only, left-hand images only, DB1 right-hand images combined with the rest of the data, and DB2 left-hand images combined with the rest of the data. The training sessions were grouped as shown in Table 1.

Table 1.

Different training sessions with different groups of datasets.

5.2. Training Models

StyleGAN2-ADA: Training was conducted on Google Colaboratory Pro Plus with 52 GB of RAM and a P100 GPU. The StyleGAN2-ADA model was trained for up to 500 epochs in a Jupyter notebook environment. During training, each dataset from DB1 and DB2 produced a model snapshot (.pkl) file every 100 epochs. Starting at 4 × 4 pixels, as described in Section 2.1, the resolution was increased progressively, and training was concluded when it reached 512 × 512 pixels. The final .pkl file was kept as the trained model.

StyleGAN3: Training used a high-performance 4080 GPU and 64 GB of RAM in a Jupyter notebook environment. The training protocol consisted of multiple stages for monitoring and adjusting the model's performance, with a total of 1000 training epochs.

This research focused on ensuring the best quality of the generated images, particularly regarding their resolution, detail, and fidelity to the input data. We also monitored the training progress through periodic snapshots taken every fifty epochs. This approach allowed us to track the model’s evolution closely and make necessary adjustments as needed.

5.3. Quality Assessment

Two quality assessment stages were used: manual visual inspection and the Scale-Invariant Feature Transform (SIFT) algorithm [29]. Images of visibly low quality were eliminated during manual inspection and classified as “poor-quality images”. Images in which the principal lines could not be accurately detected were filtered out of the dataset using a test script. The script also checked whether the generated images had anomalies such as six fingers or palm marker issues.

The SIFT-based image processing method was applied to eliminate further unwanted images. The training samples were resized to match the test samples: all images were resized to 205 × 345 pixels to focus on the region of interest (ROI), specifically the palm.
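The ROI resizing step can be sketched as below. The text does not describe how the palm region is located, so the crop box is a hypothetical input, and reading 205 × 345 as width × height (giving a 345-row, 205-column array) is an assumption; nearest-neighbour interpolation is used only to keep the sketch dependency-free.

```python
import numpy as np


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array to out_h x out_w."""
    rows = (np.arange(out_h) * img.shape[0] / out_h).astype(int)
    cols = (np.arange(out_w) * img.shape[1] / out_w).astype(int)
    return img[rows][:, cols]


def palm_roi(gray, box, out_h=345, out_w=205):
    """Crop a hypothetical palm box (top, left, height, width) and resize it to the ROI size."""
    top, left, h, w = box
    return resize_nearest(gray[top:top + h, left:left + w], out_h, out_w)
```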

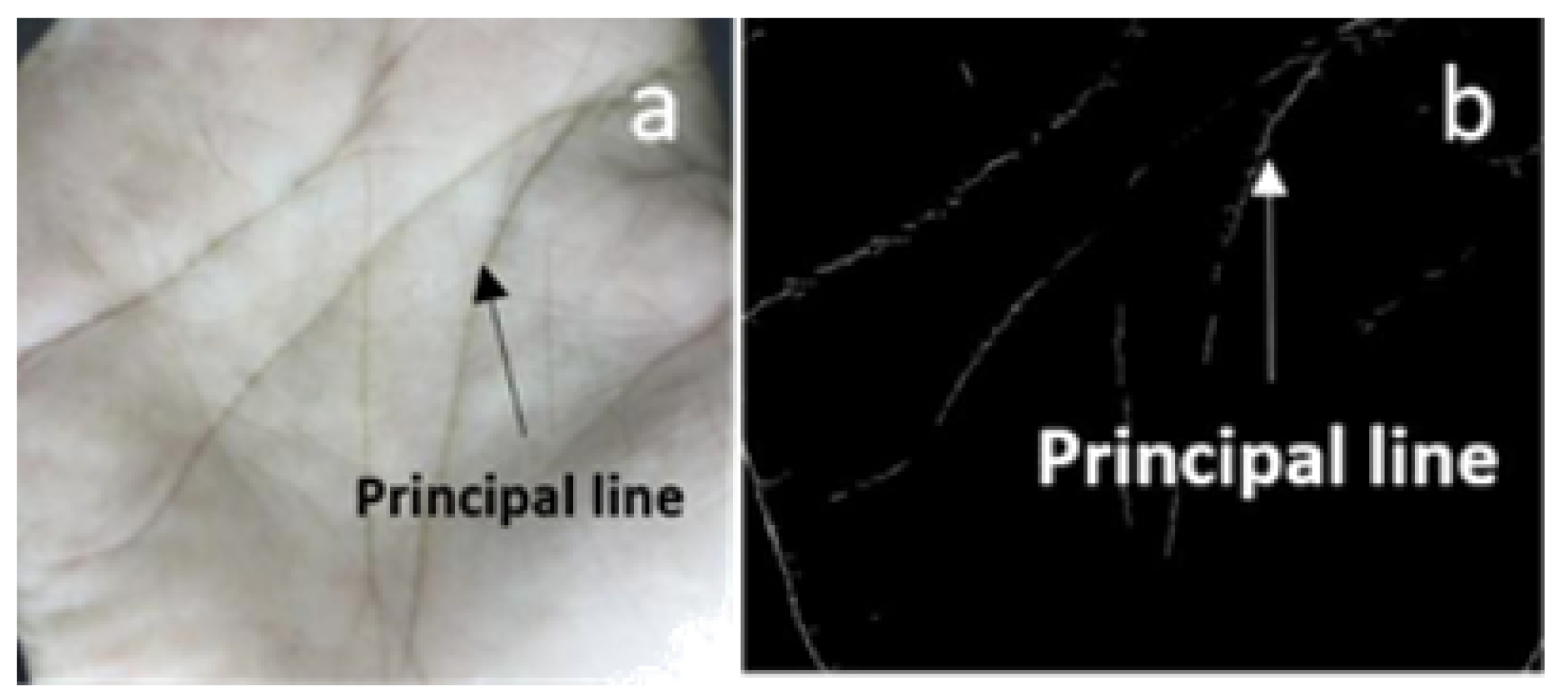

Pixel ratios were calculated for each image: two distances between specific pairs of points were measured, and the ratio of the first distance to the second was computed. Images in which the principal lines were not accurately identified were excluded from the dataset using a ‘score value’ threshold. The appropriate threshold depends on the algorithm and dataset being used; ratio values between 0.2 and 0.8 are typically used to determine a well-matched image. In this research, an image pair was considered well matched for SIFT features if the ratio value exceeded 0.5. Figure 7 shows the scaled and resized palm images (ROI).
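The ratio-based filter can be sketched as follows. Which points the two distances are measured between is not specified, so the point coordinates and image identifiers are hypothetical inputs; only the 0.5 threshold and the 0.2 to 0.8 range come from the text.

```python
import math


def distance_ratio(p1, p2, q1, q2):
    """Ratio of distance p1-p2 to distance q1-q2, with points given as (x, y) tuples."""
    return math.dist(p1, p2) / math.dist(q1, q2)


def filter_by_ratio(ratios, threshold=0.5):
    """Split {image_id: ratio} into kept and rejected ids using ratio > threshold."""
    if not 0.2 <= threshold <= 0.8:
        raise ValueError("threshold expected in the 0.2-0.8 range")
    kept = sorted(k for k, r in ratios.items() if r > threshold)
    rejected = sorted(k for k, r in ratios.items() if r <= threshold)
    return kept, rejected
```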

Figure 7.

(a) Resized ROI image of palm and (b) detected principal lines.

5.4. Performance Evaluation

For StyleGAN2-ADA, the images were separated into “good-” and “poor-quality” categories. To verify the uniqueness of each synthetic palm image, the SIFT algorithm was applied to the generated images for both StyleGAN2-ADA and StyleGAN3. Pairs of images were randomly selected and compared using SIFT feature extraction. Each pair was identified by the indices of its two images in the image list, and matches were computed from their respective keypoints and descriptors. The score was calculated as a matching percentage based on the number of matches and the number of keypoints in each image. This process was repeated eight hundred times, each time with a different randomly selected pair.
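The random-pair procedure can be sketched as follows. The exact formula behind the matching percentage is not given, so normalizing the number of good matches by the smaller of the two keypoint counts is an assumption, and `score_pair` is a hypothetical callable (for example, one built from SIFT detection and ratio-test matching).

```python
import random


def similarity_percentage(n_matches, n_kp_a, n_kp_b):
    """Matching percentage relative to the smaller keypoint set (assumed normalization)."""
    denom = min(n_kp_a, n_kp_b)
    return 100.0 * n_matches / denom if denom else 0.0


def average_pair_similarity(images, score_pair, n_pairs=800, seed=0):
    """Average score over n_pairs randomly drawn pairs of distinct images."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_pairs):
        i, j = rng.sample(range(len(images)), 2)  # two distinct indices in the image list
        scores.append(score_pair(images[i], images[j]))
    return sum(scores) / len(scores)
```

A lower average indicates that randomly chosen synthetic images share fewer SIFT features, that is, greater uniqueness.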

To apply the SIFT algorithm to the ROI images for StyleGAN2-ADA and StyleGAN3, a total of eight hundred ROI palm and finger images were created to thoroughly verify the uniqueness of each synthetic hand image. Pairs of hand images to which SIFT had previously been applied were used for comparison, and the output score represents the computed similarity between their keypoints and descriptors. This process was repeated ten times with different pairs.

For StyleGAN2-ADA, the 3439 synthetic images were divided into two classes, “good-quality” and “poor-quality”, as shown in Table 2. With 111 poor-quality images, 3328 images were classified as good. The images were tested to determine how many were of high quality. The Python script is provided in the shared repository: https://github.com/rizvee007/palmphoto (accessed on 9 September 2024).

Table 2.

Summary of anomalies detected through visual observation using 1560 images.

For StyleGAN3, the 1400 synthetic images were separated into two classes, “good-” and “poor-quality”. With 21 poor-quality images across five different categories, the remaining 1379 images were classified as good. The “good-” and “poor-quality” images were fed into a test script to determine the number of quality images. The Python script is provided in the shared repository: https://github.com/rizvee007/palmphoto (accessed on 9 September 2024).

To measure the quality and diversity of the generated images numerically, the Fréchet Inception Distance (FID) [25] was computed on the palmprint images generated by the model. FID was proposed as an improvement on the Inception Score (IS) [26] for assessing the quality of images generated by GANs and other generative models; it compares the statistics of generated samples with those of real samples.
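FID fits a Gaussian to the Inception-v3 features of the real and generated images and computes the Fréchet distance between the two Gaussians: ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). A minimal NumPy sketch of this closed form follows; Inception-v3 feature extraction is not shown, and the eigendecomposition-based matrix square root is our choice (common implementations use `scipy.linalg.sqrtm`).

```python
import numpy as np


def _sqrtm_psd(mat):
    """Matrix square root of a symmetric positive semi-definite matrix."""
    mat = (mat + mat.T) / 2.0  # remove tiny numerical asymmetry
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(mu_r, cov_r, mu_g, cov_g):
    """FID between Gaussians (mu_r, cov_r) and (mu_g, cov_g).

    Uses Tr((cov_r cov_g)^(1/2)) = Tr((A cov_g A)^(1/2)) with A = cov_r^(1/2),
    so every square root is taken of a symmetric PSD matrix.
    """
    a = _sqrtm_psd(cov_r)
    cross = _sqrtm_psd(a @ cov_g @ a)
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * np.trace(cross))


def feature_stats(features):
    """Mean and covariance of an (N, D) array of Inception features (D >= 2)."""
    return features.mean(axis=0), np.cov(features, rowvar=False)
```

Lower values mean the generated feature distribution is closer to the real one; identical statistics give an FID of 0.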

6. Results and Discussion

6.1. Model Training

Each training session took a different amount of time. For example, Training Session 1 (Table 1) took around 24 h, and the remaining sessions took between 24 and 96 h each.

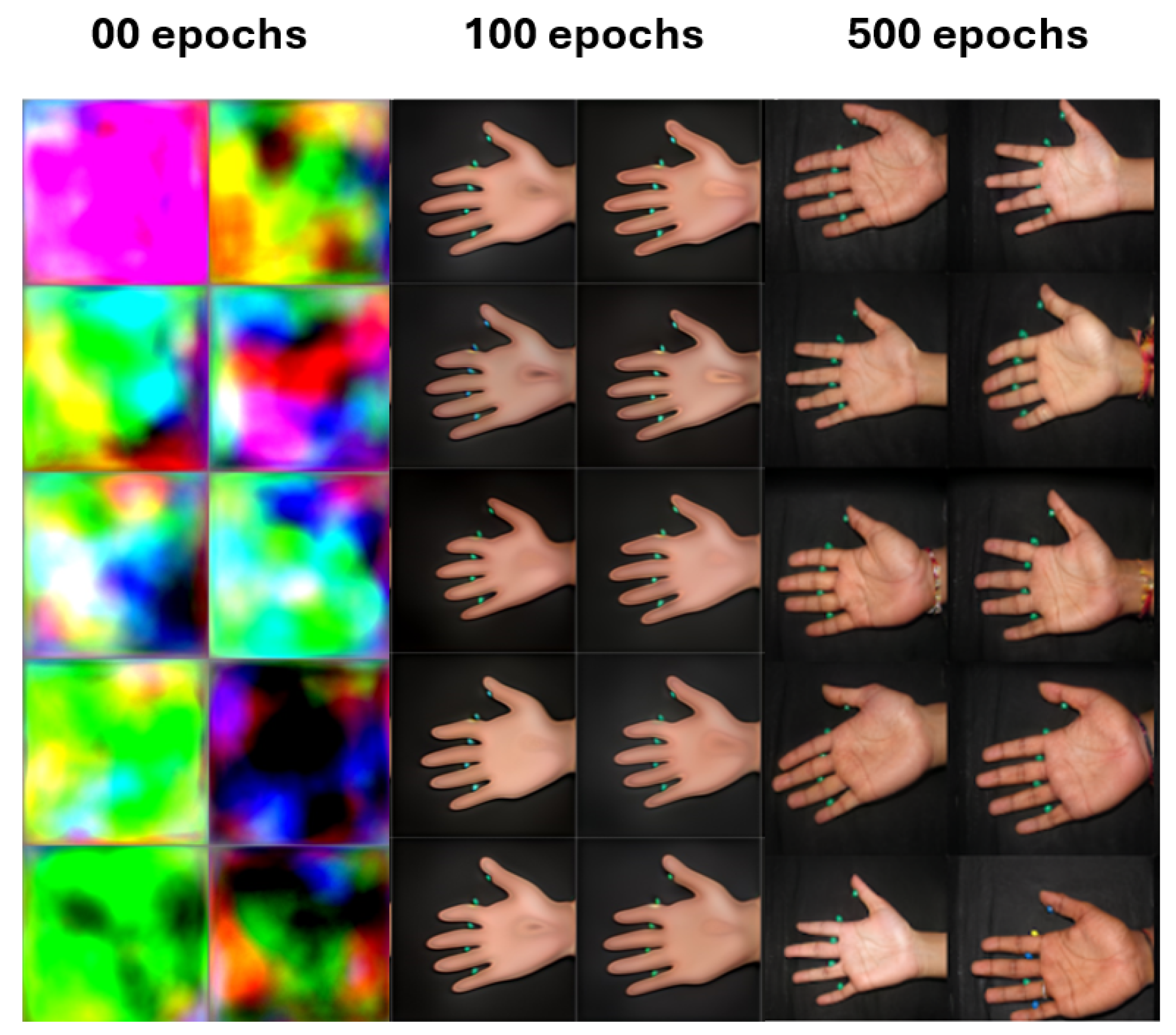

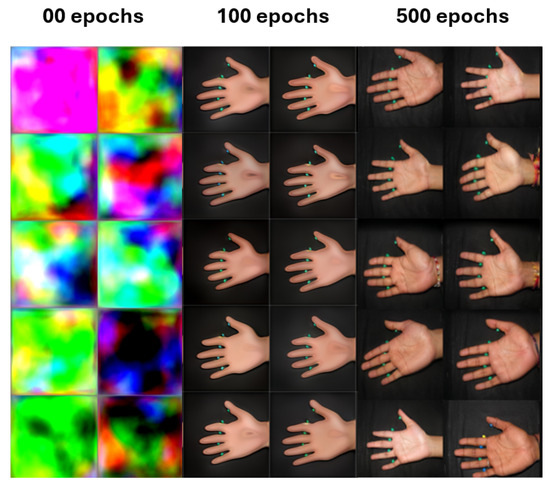

For StyleGAN2-ADA, the total training time was approximately three weeks. The images from the first training iterations appeared abnormal as the output transitioned from the original images to new ones. As training progressed, later iterations differed noticeably from earlier ones. For training sessions 1 and 2, which contained only right or only left hands, 200 epochs were used, whereas for sessions 3, 4, and 5 (mixed left and right hands), training was stopped after 500 epochs because further epochs showed no significant change. Figure 8 presents 30 generated palmprint images at different epochs (0th, 100th, and 500th) to illustrate the diversity among the generated images in terms of the position of the principal lines, the color of the palmprint, and the contrast.

Figure 8.

Training progression of the palm images (from 0 to 500 epochs).

The truncation parameter “trunc” (ψ) controls the variation in the generated images by scaling each latent vector's distance from the average latent. The default value of “trunc” is 0.5, which pulls each latent halfway toward the average. As the truncation value increased, the images became clearer and more realistic, with the best results at 1.0, where no truncation is applied. Beyond 1.0, increasing the truncation value further results in greater diversity, but the images no longer appear as realistic.
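The truncation trick behind the “trunc” setting can be written as w′ = w_avg + ψ(w − w_avg), where w_avg is the average intermediate latent and the `trunc` value plays the role of ψ. A minimal sketch follows; w_avg is passed in directly here, whereas StyleGAN tracks it during training.

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Truncation trick: move latent(s) w toward the average latent w_avg.

    psi = 1 leaves w unchanged, psi = 0 returns w_avg, 0 < psi < 1 trades
    diversity for typicality, and psi > 1 pushes w away from the average.
    """
    return w_avg + psi * (w - w_avg)


def mean_distance_to_average(ws, w_avg):
    """Average Euclidean distance of a batch of latents from w_avg (a diversity proxy)."""
    return float(np.linalg.norm(ws - w_avg, axis=1).mean())
```

Because the distance to w_avg scales linearly with ψ, halving ψ halves this diversity proxy exactly.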

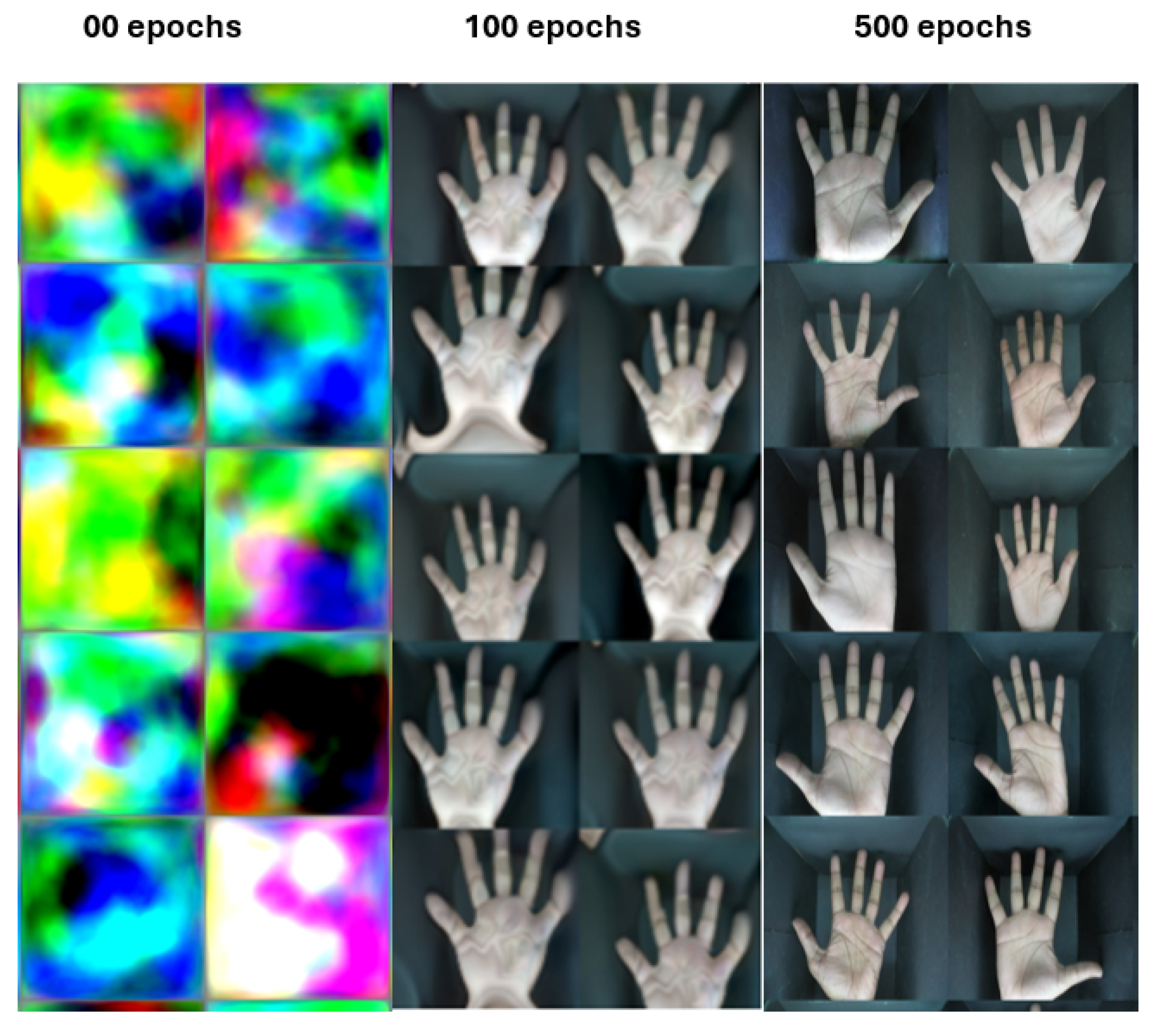

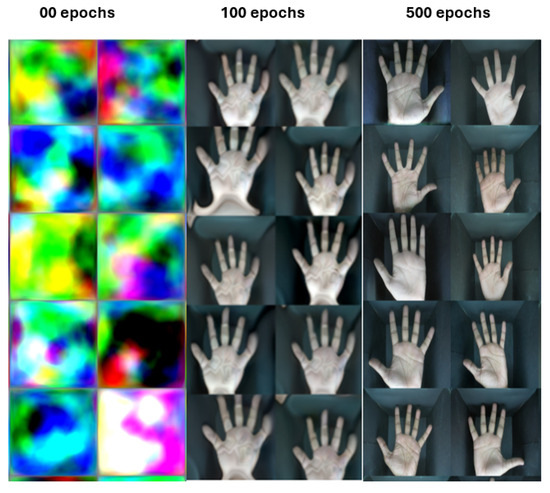

For StyleGAN3, as shown in Figure 9, considerable improvements in both image quality and model stability were observed during training. The final phase of training produced high-quality images, showing the effectiveness of the StyleGAN3 architecture and the implemented training strategy. The saved model (.pkl file) produced images with notably higher resolution and more realistic texture, reflecting the capabilities of the StyleGAN3 model and the tailored training approach.

Figure 9.

Training progression of the palm images (from 0 to 500 epochs).

The varying training times highlight the computational intensity of generating high-quality synthetic palm images, with the lengthy StyleGAN2-ADA training reflecting its complexity. Early training iterations revealed instability, emphasizing the importance of sufficient epochs to achieve stable and realistic outputs. The truncation parameter “trunc” effectively balanced image realism and diversity, showing its critical role in refining model performance. StyleGAN3's superior results, with its ability to produce high-resolution and realistic images, demonstrate the advanced capabilities of its architecture and tailored training approach. These findings underline the importance of optimizing training strategies and parameters to enhance synthetic image quality and variability, and they indicate the potential of StyleGAN3 to advance biometric authentication technologies by providing the high-quality, diverse datasets needed for robust palmprint recognition systems.

6.2. Quality Assessment

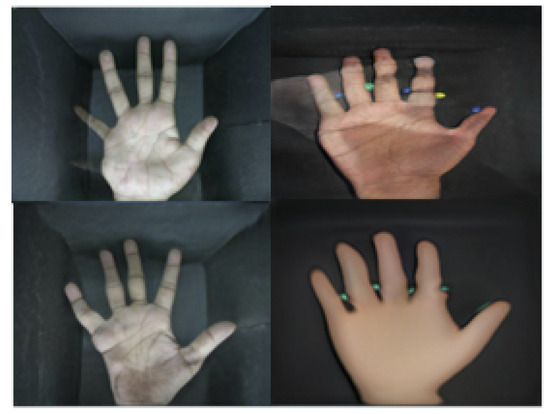

In the quality assessment of the generated images, various anomalies were considered when classifying images as “good-quality” or “poor-quality”. Observers used specific annotations to identify and categorize poor-quality images. “Shadow over palm” referred to instances where shadows obscured parts of the palm, reducing image clarity. “Image imbalance” referred to uneven lighting or color distribution. “Overlap with two palms” described cases where parts of two palms overlapped. “Finger issue” referred to incomplete or distorted fingers, a wrong number of fingers, and similar defects. “No palm marker” indicated the absence of the principal lines of the palm. Figure 10 shows examples of these anomaly types.

Figure 10.

Examples of irregular images: “total imbalance”, “finger issue”, “shadow over palm”, “overlapped with two palms”, and “no palm marker”.

Through manual visual inspection, observers sorted the poor images into these categories. A test script then validated these observations by detecting the same anomalies. Figure 11 shows finger anomalies detected by the contributed script.

Figure 11.

Detecting finger anomalies (six fingers).
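The authors' finger-anomaly script is not reproduced here. As a hypothetical illustration only, a simple heuristic counts the foreground runs along one row of a binary hand mask that crosses all the fingers; producing the mask (hand segmentation) and choosing the row are assumed to be handled elsewhere.

```python
import numpy as np


def count_fingers(mask, row):
    """Count foreground runs along one row of a binary hand mask (hypothetical heuristic)."""
    line = np.asarray(mask[row], dtype=bool).astype(np.int8)
    # A run starts wherever the row switches from background (0) to foreground (1).
    starts = np.diff(np.concatenate(([0], line))) == 1
    return int(np.count_nonzero(starts))


def has_finger_anomaly(mask, row, expected=5):
    """Flag a possible anomaly when the row does not cross exactly `expected` fingers."""
    return count_fingers(mask, row) != expected
```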

A total of 3439 images were successfully generated using StyleGAN2-ADA, while StyleGAN3 produced 1400 images over the course of 500 iterations. Because of limitations in time and resources, two observers visually inspected a randomly selected sample of 1560 images to identify abnormalities: 1000 images from the StyleGAN2-ADA output and 560 images from the StyleGAN3 output. Table 2 presents a concise overview of the anomalies identified by the observers.

Table 3 shows the percentage of poor images relative to the number of images inspected, allowing a fair comparison between the two models. The quality assessment revealed significant differences between StyleGAN2-ADA and StyleGAN3. StyleGAN3 performed better, producing only 21 poor-quality images, a substantial reduction in errors. StyleGAN3 also produced fewer types of poor-quality images (Table 2), indicating that it generates higher-quality synthetic palm images than StyleGAN2-ADA. This improvement signifies the enhanced reliability of StyleGAN3 for creating robust datasets for biometric authentication research.

Table 3.

Total number of generated images and eliminated images.
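The sampling and normalization behind this comparison can be sketched as follows. The text does not state how the inspected sample was drawn, so simple random sampling without replacement and the fixed seed are assumptions; the percentage simply divides the poor-image count by the number of images inspected for each model.

```python
import random


def sample_for_inspection(n_generated, n_sample, seed=0):
    """Indices of a simple random sample (without replacement) of generated images."""
    return sorted(random.Random(seed).sample(range(n_generated), n_sample))


def poor_rate(n_poor, n_inspected):
    """Percentage of inspected images flagged as poor quality."""
    return 100.0 * n_poor / n_inspected


stylegan2_ada_sample = sample_for_inspection(3439, 1000)
stylegan3_sample = sample_for_inspection(1400, 560)
```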

After the visual inspection, Observer 2 had flagged more poor images than Observer 1, so Observer 2's more conservative results were used for the second-stage quality assessment. In that stage, the ‘good images’ went through a SIFT threshold-based filtering process to remove further poor images, as described in the quality assessment part of the implementation section. Table 4 shows the final tally of ‘poor-quality’ images.

Table 4.

Randomly selected “hand pairs” and matched similarities.

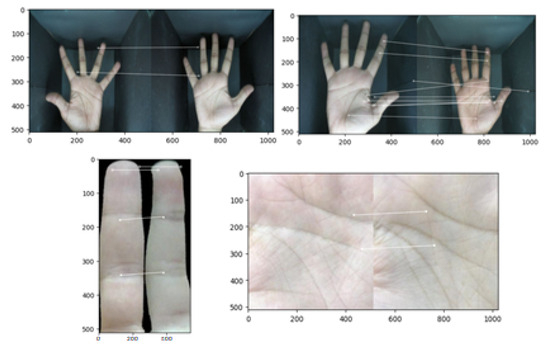

6.3. Performance Evaluation

The SIFT output, i.e., the similarity score, represents the similarity between images. Figure 12 shows example comparisons, and Table 5 shows the comparison results for 800 pairs.

Figure 12.

Using the SIFT feature extractor to compare random original images with generated images from StyleGAN3.

Table 5.

Randomly selected “ROI pairs” and matched similarities.

The average similarity score of StyleGAN3 was 16.1%, whereas that of StyleGAN2-ADA was 19.18%. These scores (range between 0.15 and 0.45) indicate that all images are unique, verifying the distinctness of the synthetic palm and finger ROIs in our dataset. Table 5 shows the comparison results of 800 random pairs of images, and Table 6 shows the FID scores of our proposed StyleGAN models. The models achieve relatively low FID scores on public image datasets compared with similar models, e.g., Palm-GAN [14].

Table 6.

FID score comparison.

The performance evaluation involved separating the synthetic images generated by StyleGAN2-ADA and StyleGAN3 into “good-” and “poor-quality” categories. For StyleGAN2-ADA, out of 3439 images, 3328 were classified as good- and 111 as poor-quality. For StyleGAN3, out of 1400 images, 1379 were good- and 21 were poor-quality across five categories. Both sets of images were assessed using a test script available on GitHub. The output similarity scores were 19.55% for StyleGAN2-ADA and 16.1% for StyleGAN3, indicating distinct and unique images. These results, detailed in Table 4 and Table 5, demonstrate StyleGAN3’s superior performance in producing high-quality synthetic palm images, enhancing the dataset’s reliability for biometric authentication research.

7. Conclusions

This study demonstrates the capability of StyleGAN models to generate synthetic palm images. This approach not only enhances the reliability of the generated images but also addresses a significant issue in biometric authentication: the creation of unique and distinguishable palmprint images. The SIFT algorithm was integrated into the evaluation framework to confirm the uniqueness of these synthetic images; this widely used feature extraction method was central to the analysis. In repeated tests, pairs of images from the generated dataset were randomly selected and compared for similarity, with matches based on the keypoints and descriptors identified by SIFT. The results consistently showed low similarity scores across image pairs. An average similarity score of 16.12% for hand pairs and 12.89% for ROI pairs indicates the distinctness of each image produced by the StyleGAN3 model. This low average score signifies high variability within the dataset, indicating that each synthetic palmprint is unique rather than a duplicate. Such findings demonstrate StyleGAN3's effectiveness in generating diverse images and serve as a benchmark for future developments in this field. The ability to produce a wide range of unique palm images is valuable for enhancing datasets for palmprint recognition, thus contributing to the advancement of biometric authentication technologies. Increasing the size of the dataset and making it publicly available would therefore promote broader research in the field and enable more thorough testing and improvement of recognition algorithms. It would also encourage collaboration and innovation in the community, improving the accuracy and security of biometric systems.

Author Contributions

Authors A.M.M.C. and M.J.A.K. contributed equally, and M.H.I. supervised the work. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Center for Identification Technology Research and the National Science Foundation under Grant No. 1650503.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chowdhury, A.; Hossain, S.; Sarker, M.; Imtiaz, M. Automatic Generation of Synthetic Palm Images. In Proceedings of the Interdisciplinary Conference on Mechanics, Computers and Electrics, Barcelona, Spain, 6–7 October 2022; pp. 6–7.

2. Joshi, D.G.; Rao, Y.V.; Kar, S.; Kumar, V.; Kumar, R. Computer-vision-based approach to personal identification using finger crease pattern. Pattern Recognit. 1998, 31, 15–22.

3. Chowdhury, A.M.; Imtiaz, M.H. A machine learning approach for person authentication from EEG signals. In Proceedings of the 2023 IEEE 32nd Microelectronics Design & Test Symposium (MDTS), Albany, NY, USA, 8–10 May 2023.

4. Bhanu, B.; Kumar, A. Deep Learning for Biometrics; Springer: Berlin/Heidelberg, Germany, 2017; Volume 7.

5. Jain, A.; Ross, A.; Prabhakar, S. Fingerprint matching using minutiae and texture features. In Proceedings of the 2001 International Conference on Image Processing (Cat. No. 01CH37205), Thessaloniki, Greece, 7–10 October 2001; Volume 3.

6. Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

7. You, J.; Li, W.; Zhang, D. Hierarchical palmprint identification via multiple feature extraction. Pattern Recognit. 2002, 35, 847–859.

8. Kong, A.; Zhang, D.; Kamel, M. Palmprint identification using feature-level fusion. Pattern Recognit. 2006, 39, 478–487.

9. Zhong, D.; Du, X.; Zhong, K. Decade progress of palmprint recognition: A brief survey. Neurocomputing 2019, 328, 16–28.

10. Sundararajan, K.; Woodard, D.L. Deep learning for biometrics: A survey. ACM Comput. Surv. (CSUR) 2018, 51, 1–34.

11. Podio, F.L. Biometrics—Technologies for Highly Secure Personal Authentication. 2001. Available online: https://www.nist.gov/publications/biometrics-technologies-highly-secure-personal-authentication (accessed on 1 July 2024).

12. Grother, P. Biometrics standards. In Handbook of Biometrics; Springer: New York, NY, USA, 2008; pp. 509–527.

13. Minaee, S.; Minaei, M.; Abdolrashidi, A. Palm-GAN: Generating realistic palmprint images using total-variation regularized GAN. arXiv 2020, arXiv:2003.10834.

14. Bahmani, K.; Plesh, R.; Johnson, P.; Schuckers, S.; Swyka, T. High fidelity fingerprint generation: Quality, uniqueness, and privacy. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 3018–3022.

15. Zhang, L.; Gonzalez-Garcia, A.; Van De Weijer, J.; Danelljan, M.; Khan, F.S. Synthetic data generation for end-to-end thermal infrared tracking. IEEE Trans. Image Process. 2018, 28, 1837–1850.

16. Jain, A.K.; Kumar, A. Biometric recognition: An overview. In Second Generation Biometrics: The Ethical, Legal and Social Context; Springer: Dordrecht, The Netherlands, 2012; pp. 49–79.

17. Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

18. Woodland, M.; Wood, J.; Anderson, B.M.; Kundu, S.; Lin, E.; Koay, E.; Odisio, B.; Chung, C.; Kang, H.C.; Venkatesan, A.M.; et al. Evaluating the performance of StyleGAN2-ADA on medical images. In Proceedings of the International Workshop on Simulation and Synthesis in Medical Imaging, Singapore, 18 September 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 1–34.

19. Kim, J.; Hong, S.A.; Kim, H. A StyleGAN image detection model based on convolutional neural network. J. Korea Multimed. Soc. 2019, 22, 1447–1456.

20. Sauer, A.; Schwarz, K.; Geiger, A. StyleGAN-XL: Scaling StyleGAN to large diverse datasets. In Proceedings of the ACM SIGGRAPH 2022 Conference Proceedings, Vancouver, BC, Canada, 7–11 August 2022.

21. Doran, S.J.; Charles-Edwards, L.; Reinsberg, S.A.; Leach, M.O. A complete distortion correction for MR images: I. Gradient warp correction. Phys. Med. Biol. 2005, 50, 1343.

22. Makrushin, A.; Uhl, A.; Dittmann, J. A survey on synthetic biometrics: Fingerprint, face, iris and vascular patterns. IEEE Access 2023, 11, 33887–33899.

23. Sevastopolskiy, A.; Malkov, Y.; Durasov, N.; Verdoliva, L.; Nießner, M. How to boost face recognition with StyleGAN? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023.

24. Marriott, R.T.; Madiouni, S.; Romdhani, S.; Gentric, S.; Chen, L. An assessment of GANs for identity-related applications. In Proceedings of the 2020 IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 28 September–1 October 2020; pp. 1–10.

25. Sarkar, E.; Korshunov, P.; Colbois, L.; Marcel, S. Are GAN-based morphs threatening face recognition? In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022.

26. Izadpanahkakhk, M.; Razavi, S.M.; Taghipour-Gorjikolaie, M.; Zahiri, S.H.; Uncini, A. Novel mobile palmprint databases for biometric authentication. Int. J. Grid Util. Comput. 2019, 10, 465–474.

27. Qin, Y.; Zhang, B. Privacy-Preserving Biometrics Image Encryption and Digital Signature Technique Using Arnold and ElGamal. Appl. Sci. 2023, 13, 8117.

28. Raghavendra, R.; Busch, C. Texture based features for robust palmprint recognition: A comparative study. EURASIP J. Inf. Secur. 2015, 2015, 5.

29. Cruz-Mota, J.; Bogdanova, I.; Paquier, B.; Bierlaire, M.; Thiran, J.P. Scale invariant feature transform on the sphere: Theory and applications. Int. J. Comput. Vis. 2012, 98, 217–241.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).