1. State-of-the-Art

Due to recent developments in quantum computing, the search for quantum-resistant cryptographic algorithms is taking classical cryptography to the next level [1]. On a sufficiently large quantum computer, many of today’s most popular cryptosystems can be broken by Shor’s algorithm [2]. This algorithm uses quantum period finding to factor large integers and to solve the discrete logarithm problem, the two problems on which many current cryptographic algorithms are based [3,4,5]. Quantum computation is still in its infancy and is limited to a handful of mathematical operations that can be carried out reliably [6]. Fully breaking cryptographic codes still requires building a sufficient number of logical qubits (a logical qubit is stable over time and can be made up of hundreds or thousands of today’s physical qubits) [7]. Alongside these continuing advances, quantum-resistant cryptographic algorithms need to be rigorously tested against old and current data formats and sources to make them compatible with all platforms [8].
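The reduction from factoring to period finding that underlies Shor’s algorithm can be illustrated classically. The sketch below is not a quantum implementation: the order of `a` modulo `n` is found by brute force, which is exactly the step a quantum computer would replace with period finding. The function names are illustrative and do not come from the cited works.

```python
from math import gcd

def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, assuming gcd(a, n) == 1.

    Brute force: this is the step Shor's algorithm performs with
    quantum period finding.
    """
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_via_order(n: int, a: int):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None if this choice of a fails
    and another a should be tried.
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g      # a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None           # odd order: no square root to exploit
    y = pow(a, r // 2, n)     # y*y == 1 (mod n) and y != 1
    if y == n - 1:
        return None           # trivial square root of 1
    p = gcd(y - 1, n)         # non-trivial because y != +-1 (mod n)
    return p, n // p

print(factor_via_order(15, 7))   # (3, 5)
```

For `n = 15` and `a = 7` the order is 4, so `y = 7**2 % 15 = 4` and `gcd(3, 15) = 3` exposes a factor. The choice `a = 14` fails because 14 is congruent to -1 modulo 15, in which case another base must be tried.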

State-of-the-art public key algorithms are predominantly based on three related problems [9]: the discrete logarithm problem, the integer factorization problem, and the more recent elliptic-curve discrete logarithm problem [10]. All three can be solved by Shor’s algorithm on a quantum computer. This is concerning, because these problems underpin the protected sharing of confidential information across the Internet, the creation of digital signatures, and the securing of other links over unsafe networks [11].

In view of the inherited shortcomings and major disadvantages involved in the implementation of an effective and smooth Quantum Key Distribution (QKD) [

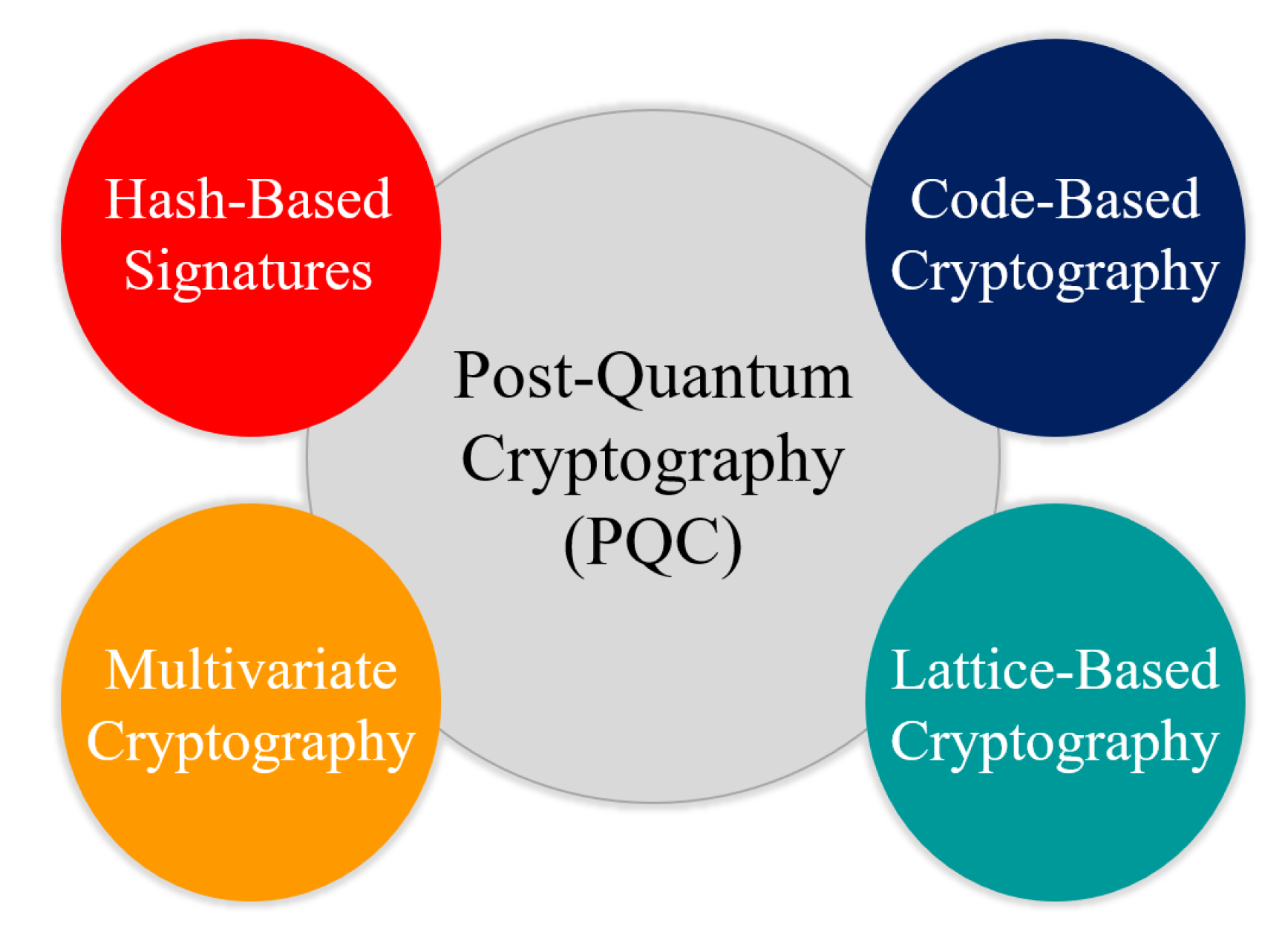

12], the quest for a classic, non-quantum cryptography algorithm that will operate in current real-time infrastructures is an increasingly growing field of study. These quantum robust algorithms are called Post-Quantum Cryptography (PQC) algorithms and are assumed to remain stable after the availability of functional large-scale quantum computing machines [

13], as depicted in

Figure 1. Every modern cryptography must be combined with existing protocols, such as transport layer security. The latest cryptosystem has to weigh:

The size of the encryption keys and the signature.

The time taken to encrypt and decrypt at either end of a communication channel, or to sign messages and validate signatures.

For each proposed alternative, the amount of traffic sent over the wire needed to complete encryption or decryption or to transmit a signature.

Many proposals submitted to NIST (National Institute of Standards and Technology) are still under review. Others have been broken or excluded from the process; some are more conservative, or demonstrate how far classical cryptography can be advanced so that a quantum computer cannot break it at a reasonable cost [14]. Most of these cryptographic schemes fall into the following families: lattice-based, multivariate, hash-based (signatures only), and code-based. These categories are discussed in Section 2. For certain algorithms, though, there is a concern that they might be too unwieldy for Internet-of-Things (IoT) networks [1]. New cryptographic schemes must also integrate with current protocols, such as Secure Shell (SSH) or Transport Layer Security (TLS). To make this possible for IoT use cases, designers of post-quantum cryptosystems need to take the following attributes into account:

Latency induced by encryption and decryption at both ends of the communication line, across a range of devices from large, fast servers to slow, memory-limited IoT devices.

Small public keys and signatures, to support ultra-low latency.

A clear network architecture that facilitates cryptanalysis and the detection of vulnerabilities that could be exploited in a dense IoT network.

Seamless integration with the existing infrastructure.

Post-quantum protocols include a rich collection of primitives that can be used to address the problems of implementation across different computing platforms (e.g., cloud versus IoT ecosystems) and for various use cases [15,16,17]. This includes the ability to compute on encrypted data, using protocols based on asymmetric-key cryptography (now more broadly defined than before) that are resilient against powerful attackers equipped with quantum machines and algorithms, and to provide security beyond the scope of classical cryptography [18]. Indeed, PQ cryptosystems aim to strengthen the protection [19] of mission-critical infrastructures, especially in energy, medicine, surveillance, and space exploration. Owing to their flexibility and scalability, PQ cryptosystems are also being implemented in next-generation 5G/NB-IoT networks, as well as for secure communications in electric vehicle charging infrastructure [20,21,22].

This survey makes the following contributions. In Section 1, we discuss the state-of-the-art of Lattice-Based Cryptography (LBC), including the review papers to date. Section 2 elaborates on the wider landscape of post-quantum cryptography (PQC), including Hash-Based Signatures, Code-Based Signatures, Multivariate Cryptography, and Lattice-Based Cryptography. In Section 3, we look at the fundamental mathematics and security proofs of LBC, and discuss the Ajtai-Dwork, Learning with Errors (LWE), and N-th degree Truncated polynomial Ring Units (NTRU) cryptosystems in detail. The extended security proofs of LBC against quantum attacks are discussed in Section 4, whereas Section 5 deals with the implementation challenges of LBC, in both the software and hardware domains, for authentication, key sharing, and digital signatures. In addition, these studies are extended to the application of LBC in power-restricted IoT applications, i.e., Lightweight Lattice-Based Cryptography (LW-LBC). To conclude the survey, we review the implementation of LBC at the FPGA level for real-time experimentation with post-quantum cryptography. The key motivation of this survey is to provide comprehensive information on the future challenges of quantum-robust cryptography for IoT devices through LW-LBC.

4. Lattice Cryptography Against Quantum Attacks

In this section, we summarize the evidence that the LWC algorithm is secure against the known quantum attacks, i.e.,

is

-hard [

108,

109]. We shall show that the problems of approximating the shortest and closest vector in a lattice to within a factor of $\sqrt{n}$ lie in $\mathrm{NP} \cap \mathrm{coNP}$ [

110]. Different information is available in the literature to test the security standard of LWC post-quantum cryptographic primitives [

110,

111,

112]. Consider factoring the

-Hard and the language to describe factoring is

C=

, where

n has a factor

. Now,

and the factoring is highly dependent on

P [

113], since

, so that there would be a polynomial time algorithm for deciding whether a string is

or not [

114]. If, under some applied conditions, we assume that

C is

complete, but, in cryptography theory, to date, there is no proof available for

, it stays

[

115,

116].

Extended Security Proof

Lattices have been investigated extensively in mathematics, and many different problems are closely related to them, such as integer programming [117], factoring polynomials with rational coefficients [118], integer relation finding [119], integer factoring, and Diophantine approximation [120,121]. More recently, the study of lattices has gained a lot of attention in the computer science community because lattice problems were shown by Ajtai [43] to possess a particularly desirable property for cryptography: worst-case to average-case reducibility. As discussed previously in Section 2, the Shortest Vector Problem (SVP) and the Closest Vector Problem (CVP) have been widely studied [122,123,124]. In SVP, given a basis $\mathbf{B}$ of a lattice, the task is to find the shortest non-zero lattice point in the Euclidean norm, whereas in CVP, given a basis $\mathbf{B}$ of a lattice and a target vector $\mathbf{v}$, the task is to find the lattice point closest to $\mathbf{v}$ in the Euclidean norm. The most important parameter of interest here is the approximation factor $\gamma$. The problem $\mathrm{GapSVP}_\gamma$ consists of distinguishing between instances of SVP in which the length of the shortest vector is at most 1 or larger than $\gamma$, where $\gamma$ can be a constant or a fixed function of the lattice dimension $n$. For $\mathrm{GapCVP}_\gamma$, given a basis and an extra vector $\mathbf{v}$, the task is to decide whether the distance of $\mathbf{v}$ from the lattice is at most 1 or larger than $\gamma$. The unlikelihood of the NP-hardness of approximating SVP and CVP within polynomial factors has also been evaluated in [125]. Here, we formulate the approximation problems associated with SVP and CVP as promise problems (a promise problem is a generalization of a decision problem in which the input is promised to belong to a particular subset of all possible inputs):

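Definition 1. (approximate SVP): The promise problem $\mathrm{GapSVP}_\gamma$ (where $\gamma \geq 1$ is a function of the dimension) is defined as follows. Instances are pairs $(\mathbf{B}, d)$, where $\mathbf{B} \in \mathbb{Z}^{n \times k}$ is a lattice basis and $d$ is a positive number, such that:

$(\mathbf{B}, d)$ is a YES instance if $\lambda_1(\mathbf{B}) \leq d$, i.e., $\|\mathbf{B}\mathbf{z}\| \leq d$ for some $\mathbf{z} \in \mathbb{Z}^k \setminus \{\mathbf{0}\}$,

$(\mathbf{B}, d)$ is a NO instance if $\lambda_1(\mathbf{B}) > \gamma \cdot d$, i.e., $\|\mathbf{B}\mathbf{z}\| > \gamma \cdot d$ for all $\mathbf{z} \in \mathbb{Z}^k \setminus \{\mathbf{0}\}$.

Definition 2. (approximate CVP): The promise problem $\mathrm{GapCVP}_\gamma$ (where $\gamma \geq 1$ is a function of the dimension) is defined as follows. Instances are triples $(\mathbf{B}, \mathbf{v}, d)$, where $\mathbf{B} \in \mathbb{Z}^{n \times k}$ is a lattice basis, $\mathbf{v} \in \mathbb{Z}^n$ is a vector, and $d$ is a positive number, such that:

$(\mathbf{B}, \mathbf{v}, d)$ is a YES instance if $\mathrm{dist}(\mathbf{v}, \mathcal{L}(\mathbf{B})) \leq d$, i.e., $\|\mathbf{B}\mathbf{z} - \mathbf{v}\| \leq d$ for some $\mathbf{z} \in \mathbb{Z}^k$,

$(\mathbf{B}, \mathbf{v}, d)$ is a NO instance if $\mathrm{dist}(\mathbf{v}, \mathcal{L}(\mathbf{B})) > \gamma \cdot d$, i.e., $\|\mathbf{B}\mathbf{z} - \mathbf{v}\| > \gamma \cdot d$ for all $\mathbf{z} \in \mathbb{Z}^k$.

Definition 3. (approximate CVP’): The promise problem $\mathrm{GapCVP}'_\gamma$ (where $\gamma \geq 1$ is a function of the dimension) is defined as follows. Instances are triples $(\mathbf{B}, \mathbf{v}, d)$, where $\mathbf{B} \in \mathbb{Z}^{n \times k}$ is a full rank matrix, $\mathbf{v} \in \mathbb{Z}^n$ is a vector, and $d$ is a positive number, such that:

$(\mathbf{B}, \mathbf{v}, d)$ is a YES instance if $\|\mathbf{B}\mathbf{z} - \mathbf{v}\| \leq d$ for some $\mathbf{z} \in \{0, 1\}^k$,

$(\mathbf{B}, \mathbf{v}, d)$ is a NO instance if $\|\mathbf{B}\mathbf{z} - w\mathbf{v}\| > \gamma \cdot d$ for all $\mathbf{z} \in \mathbb{Z}^k$ and all $w \in \mathbb{Z} \setminus \{0\}$.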

Therefore, it can be shown [125] that $\mathrm{GapSVP}_\gamma$, $\mathrm{GapCVP}_\gamma$, and $\mathrm{GapCVP}'_\gamma$ are NP-hard for any constant factor $\gamma \geq 1$. For implementations of LWC cryptographic primitives, it is well documented that the security level relies on the hardness of the above-mentioned lattice problems [83,126]. By contrast, in cryptographic constructions based on factoring, the assumption is only that it is hard to factor numbers chosen from a certain distribution, i.e., an average-case assumption. The worst-case hardness underlying lattice problems is why LWC is considered a quantum-secure algorithm.
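The promise-problem formulation of approximate SVP in Definition 1 can be made concrete on a toy lattice. The sketch below is an illustration only: it finds the shortest vector by exhaustive search over small integer coefficients, which is feasible only in very low dimension, and the names `gap_svp`, `gamma`, and `bound` are assumptions introduced here rather than notation from the cited works. Basis vectors are given as rows.

```python
from itertools import product
from math import sqrt

def shortest_vector_length(basis, bound=3):
    """Brute-force length of the shortest non-zero lattice vector.

    `basis` is a list of basis vectors (rows). Coefficients are searched
    in [-bound, bound], so the result is exact only when the shortest
    vector lies inside that box (an assumption that holds for toy inputs).
    """
    dim = len(basis[0])
    best = None
    for coeffs in product(range(-bound, bound + 1), repeat=len(basis)):
        if not any(coeffs):
            continue  # skip the zero vector
        vec = [sum(c * b[i] for c, b in zip(coeffs, basis)) for i in range(dim)]
        length = sqrt(sum(x * x for x in vec))
        if best is None or length < best:
            best = length
    return best

def gap_svp(basis, d, gamma, bound=3):
    """Classify an instance (basis, d) of the approximate SVP promise problem."""
    lam = shortest_vector_length(basis, bound)
    if lam <= d:
        return "YES"
    if lam > gamma * d:
        return "NO"
    return "PROMISE VIOLATED"  # instance falls in the gap between d and gamma*d

basis = [[2, 0], [1, 3]]          # shortest non-zero vector is (2, 0)
print(gap_svp(basis, d=2, gamma=1.5))    # YES
print(gap_svp(basis, d=1, gamma=1.5))    # NO
print(gap_svp(basis, d=1.5, gamma=1.5))  # PROMISE VIOLATED
```

The third call shows why this is a promise problem rather than a plain decision problem: when the shortest length lies strictly between the two thresholds, neither answer is required to be correct.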

5. Lightweight Lattice-Based Cryptography for IoT Devices

The emergence of new computing platforms, such as cloud computing, software-defined networks, and the Internet-of-Things (IoT), calls for the adoption of an increasing number of security frameworks, which in turn require a variety of cryptographic primitives. However, security is just one dimension in the IoT world [127]. It is also necessary that these security frameworks consume little on-board processing, memory, and power [128]. This makes it very difficult to design and implement new cryptographic primitives in a single embodiment, because the computing platforms have diverging priorities and constraints. It calls for programmable IoT hardware capable of efficiently executing not only individual cryptographic algorithms [129] but complete protocols, with the accompanying challenge of designing for agility, e.g., developing computing devices that achieve the performance of Application-Specific Integrated Circuits (ASICs) while retaining some level of programmability [130,131].

Recently, many researchers have been investigating Lightweight Lattice-Based Cryptography (LW-LBC) [128,132], where schemes are evaluated and benchmarked in terms of low power footprint, small area, low bandwidth requirements, and good performance. The main characteristics of post-quantum LBC that make it well suited to the IoT world are: (a) these schemes offer security proofs based on NP-hard problems with worst-case to average-case hardness; (b) LBC implementations are notable for their efficiency, in addition to being quantum-resistant, largely due to their inherent linear algebra-based matrix/vector operations on integers; and (c) for specialized security services, LBC constructions offer extended features beyond the basic classical cryptographic primitives (encryption, signatures, and key exchange) required in a quantum era, such as identity-based encryption (IBE) [133], attribute-based encryption (ABE) [11], and fully homomorphic encryption (FHE) [134].

Figure 4 depicts the communication bandwidth, in data bytes, of various LBC algorithms for their secret key (sk), public key (pk), and signature, as comprehensively analyzed in Reference [

128]; the number at the end of each algorithm name is the security level achieved according to the NIST standards. These security levels are defined as: (a) Level 1: at least as hard to break as AES-128 (exhaustive key search), (b) Level 2: at least as hard to break as SHA-256 (collision search), (c) Level 3: at least as hard to break as AES-192 (exhaustive key search), (d) Level 4: at least as hard to break as SHA-384 (collision search), and (e) Level 5: at least as hard to break as AES-256 (exhaustive key search). It is also worth mentioning that the achievable security level is highly dependent on the hardware/computational resources of the IoT-Edge nodes in the network. The analysis shows that the Dilithium algorithms consume high bandwidth without achieving a high level of security, whereas the Falcon algorithms consume less bandwidth while achieving a high level of security. The Falcon algorithms are therefore well suited to lightweight implementation of LBC in IoT devices.

Figure 5 depicts the communication bandwidths of LBC algorithms implemented as public key encryption (PKE) or key encapsulation mechanism (KEM) schemes [128,135,136]. The results show that the Saber and ThreeBears variants both consume less bandwidth across the NIST security levels and can be considered suitable candidates for lightweight implementation of LBC in IoT networks.

6. Hardware Implementation of Lightweight Lattice-Based Cryptography

In this section, we discuss the hardware implementation of LW-LBC on different computational platforms [137]. Many lattice-based schemes originally require large matrices over integer rings to be stored and are very inefficient in both run-time and storage space. Replacing matrices with polynomials in integer rings modulo ideals allows both to be reduced. Therefore, the most efficient constructions replace general lattices with ideal lattices [136,137]. IoT devices (based on communication technologies such as IEEE 802.11ah, 802.15.4, low-power Wi-Fi, BLE, LoRaWAN, Sigfox, NB-IoT, etc.) inherently have reduced computational resources, limited on-board memory, and small form-factor battery banks (on hardware platforms such as Raspberry Pi, BeagleBone, etc.). For such devices, it is recommended that, instead of storing huge matrices of size $n \times n$, where $n$ is larger than 128, just $n$ elements are stored. In addition, the Fast Fourier Transform (FFT) can be used to multiply the elements of ideal lattices efficiently, in time $O(n \log n)$ for a serial architecture and $O(\log n)$ for a parallel architecture, rather than the more expensive $O(n^2)$ computation. This way, the available hardware resources can be used to implement LW-LBC cost-effectively in an IoT network.
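The storage saving from replacing a matrix with a polynomial can be illustrated with a toy example. The sketch below assumes the ring $\mathbb{Z}_q[x]/(x^n + 1)$, a common choice for ideal lattices that is not fixed by the text above, and uses tiny illustrative parameters ($n = 4$, $q = 17$). It shows that multiplying by a polynomial with $n$ coefficients gives the same result as multiplying by the $n \times n$ matrix those coefficients generate, so only the $n$ coefficients need to be stored.

```python
def negacyclic_matrix(a, q):
    """Expand n coefficients into the n x n matrix of 'multiply by a(x)'
    in Z_q[x]/(x^n + 1). Entry (i, j) is the coefficient of x^i in a(x) * x^j."""
    n = len(a)
    rows = []
    for i in range(n):
        row = []
        for j in range(n):
            k = i - j
            # x^n = -1, so coefficients that wrap around pick up a minus sign
            row.append(a[k] % q if k >= 0 else (-a[k + n]) % q)
        rows.append(row)
    return rows

def mat_vec(m, v, q):
    """Matrix-vector product modulo q."""
    return [sum(x * y for x, y in zip(row, v)) % q for row in m]

def poly_mul_negacyclic(a, b, q):
    """Schoolbook product of a(x) and b(x) in Z_q[x]/(x^n + 1)."""
    n = len(a)
    out = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < n:
                out[k] = (out[k] + ai * bj) % q
            else:
                out[k - n] = (out[k - n] - ai * bj) % q
    return out

q = 17
a = [1, 2, 3, 4]          # n stored elements
b = [5, 6, 7, 8]
m = negacyclic_matrix(a, q)  # n * n elements if stored explicitly
print(len(a), len(m) * len(m[0]))       # 4 16
print(mat_vec(m, b, q))                 # [12, 15, 2, 9]
print(poly_mul_negacyclic(a, b, q))     # [12, 15, 2, 9]
```

Both products agree, which is the structural fact that lets ring-based schemes keep a single polynomial in memory instead of a full matrix.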

The fundamental modules of a lattice-based cryptosystem that guide the actual hardware implementation are the multipliers and samplers. The primary performance bottlenecks are polynomial multiplication for ideal lattices and matrix multiplication for standard lattices, whereas discrete Gaussian sampling is used to sample noise and hide secret information. The literature offers different algorithms for the sampler and the multiplier, allowing researchers to choose according to the particular end-user application [138]. For lightweight arithmetic implementations of LBC, matrix multiplication algorithms are adopted for standard LWE schemes, while the number-theoretic transform (NTT) is a better alternative for polynomial multiplication in Ring-LWE [139]. On the other hand, the dynamics of large-scale deployment on IoT hardware are different. Standard LWE-based systems exhibit a comparatively high memory footprint when deployed, due to their large key size (hundreds of kilobytes per public key), which makes them impractical to deploy quickly [140]. The adoption of specific ring structures, as in Ring-LWE, reduces the size by a factor of n compared to standard LWE, making Ring-LWE an outstanding candidate for resource-restricted IoT devices.
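A minimal sketch of NTT-based polynomial multiplication, as used in Ring-LWE style schemes, is given below. It assumes the ring $\mathbb{Z}_q[x]/(x^n + 1)$ with toy parameters $n = 8$, $q = 17$, and $\psi = 3$ (a primitive $2n$-th root of unity modulo $q$); these values are chosen here for illustration and are far smaller than any practical parameter set. The transform is a textbook recursive radix-2 version rather than an optimized in-place implementation, and the modular inverses via `pow(x, -1, q)` require Python 3.8 or later.

```python
def ntt(a, omega, q):
    """Recursive radix-2 NTT of a (length a power of two) with root omega mod q."""
    n = len(a)
    if n == 1:
        return a[:]
    omega_sq = omega * omega % q
    even = ntt(a[0::2], omega_sq, q)
    odd = ntt(a[1::2], omega_sq, q)
    out = [0] * n
    w = 1
    for k in range(n // 2):
        t = w * odd[k] % q
        out[k] = (even[k] + t) % q
        out[k + n // 2] = (even[k] - t) % q
        w = w * omega % q
    return out

def ntt_multiply(a, b, psi, q):
    """Multiply a(x) and b(x) in Z_q[x]/(x^n + 1) using the NTT."""
    n = len(a)
    omega = psi * psi % q
    # Pre-scale by powers of psi so a cyclic convolution becomes negacyclic
    a_t = [x * pow(psi, i, q) % q for i, x in enumerate(a)]
    b_t = [x * pow(psi, i, q) % q for i, x in enumerate(b)]
    c_hat = [x * y % q for x, y in zip(ntt(a_t, omega, q), ntt(b_t, omega, q))]
    # Inverse transform: use omega^-1, then scale by n^-1 and psi^-i
    c_t = ntt(c_hat, pow(omega, -1, q), q)
    n_inv, psi_inv = pow(n, -1, q), pow(psi, -1, q)
    return [x * n_inv * pow(psi_inv, i, q) % q for i, x in enumerate(c_t)]

def schoolbook_multiply(a, b, q):
    """O(n^2) reference multiplication in Z_q[x]/(x^n + 1)."""
    n = len(a)
    out = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            sign = 1 if i + j < n else -1
            out[(i + j) % n] = (out[(i + j) % n] + sign * ai * bj) % q
    return out

q, psi = 17, 3
a = [1, 2, 3, 4, 5, 6, 7, 8]
b = [8, 7, 6, 5, 4, 3, 2, 1]
print(ntt_multiply(a, b, psi, q) == schoolbook_multiply(a, b, q))  # True
```

The pointwise product in the transform domain replaces the double loop of the schoolbook method, which is where the $O(n \log n)$ versus $O(n^2)$ difference comes from.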

As discussed in more depth below, high-performance Intel/AMD processors, which are notably equipped with Advanced Vector Extensions (AVX), and ARM/AVR micro-controllers are common software execution platforms [140]. Recently, practical software implementations of standard-lattice encryption schemes and key exchanges have been reported [141]. Other hardware platforms, such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs), have also been used to implement LBC. FPGAs provide flexibility and customization but not agility [142], whereas ASICs are less power hungry, while offering compactness and design flexibility.

In this section, we summarize the practical hardware implementation of LBC by comparing the memory usage (bytes), computational time (ms), and clock cycle counts on an ARM Cortex-M platform at 168 MHz [128].

Table 3 depicts the hardware complexity of implementing LBC based on KEMs [143]. The statistics show that Saber stands out both for its small memory footprint, suited to resource-constrained devices, and for its throughput, while also achieving level-5 security according to the NIST guidelines. Therefore, Saber is recommended as a lightweight LBC algorithm well suited to post-quantum IoT networks.

Table 4 depicts the hardware complexity of implementing LBC via signature schemes [144,145]. The data analyzed in Reference [128] show that signature schemes are computationally more demanding than KEM schemes. Nevertheless, Dilithium performs well compared to Falcon and qTesla. We can conclude that, for signature implementation, Dilithium can be used in post-quantum IoT networks where level-5 security is not the prime focus and a security level between 1 and 3 is acceptable.

An ideal post-quantum cryptosystem, built from primitives such as pseudorandom generators, pseudorandom functions, and digital signatures, makes it possible to identify the best parameters. As discussed in this section, the performance of the various PQ algorithms depends on the acceptable security level. Compromising on the security level can lead to side-channel attacks in IoT networks. Computational cycles, time, and stack usage (bytes) are the key parameters that researchers have to take into account while designing dense IoT networks. In lattice schemes, storage (memory) becomes a problem when large matrices over an integer ring are used. It is, therefore, appropriate to replace matrix multiplication with polynomial multiplication using the Fast Fourier Transform (FFT). Although LW-LBC is much faster than classical LBC algorithms, these algorithms still need extensive research in machine-to-machine (M2M) and industrial IoT environments with dense sensor deployments in operational technology.