Securing Big Data Integrity for Industrial IoT in Smart Manufacturing Based on the Trusted Consortium Blockchain (TCB)

Abstract

1. Introduction

2. Background

2.1. Smart Manufacturing Ecosystem

2.2. Big Data Integrity

2.3. IIoT Trust Styles

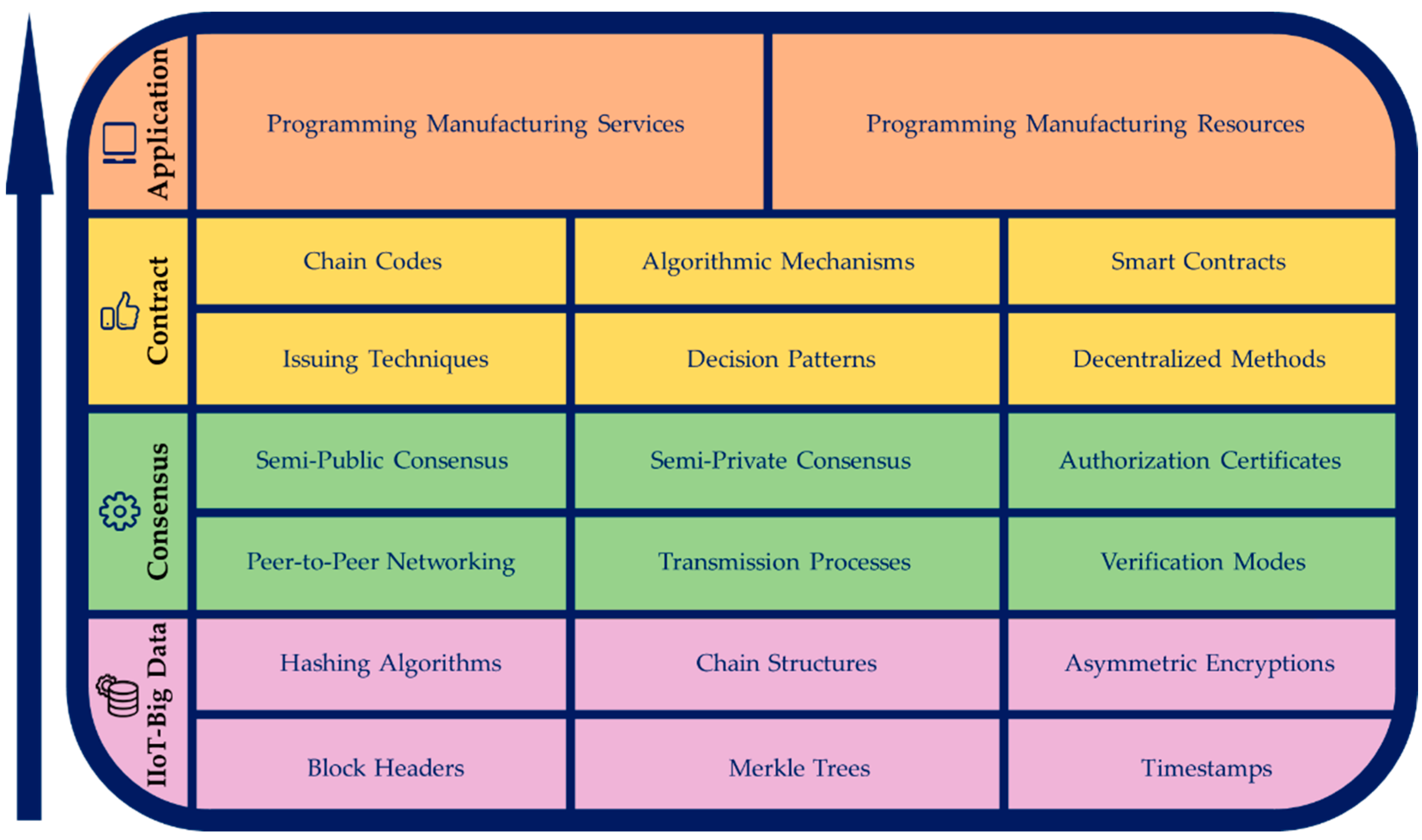

2.4. Consortium Blockchain Stack

3. The Research Gap

4. Related Works

5. The Proposed Solution

5.1. TCB Framework Design and Development

5.1.1. Industrial IoT Layer

- (1) IIoT Equipment Connector: it establishes connections for industrial equipment, such as robots and remote actuators, and populates them with the required information. The associated facility sensors link the targeted equipment to specific access points via fieldbus protocols. Once access is granted, the industrial data collected from several pieces of equipment are metered and synchronized with pre-configured parameters.

- (2) IIoT Device Controller: it controls all IIoT big data produced by industrial equipment and manufacturing modules, together with the data flows of various devices such as servo meters, embedded chips, PLCs/PIDs, DCS, and CNC machines. Moreover, the control bus monitors the real-time data using unique source-based identification, so that each part of the industrial data can be observed individually within a decentralized environment.

- (3) IIoT Unit Communicator: it provides an intermediary, high-throughput transmission path that joins physical controllers to computational edge transformers through 5G base stations and gateway nodes. The remote terminal units (RTUs) bridge the generation and broadcasting of industrial data and handle communication over the M2M bus among decentralized devices spread across large manufacturing areas, efficiently performing small amounts of real-time data processing before transmitting the data to central IIoT hubs.

- (4) IIoT Edge Transformer: it leverages the I/O sensing of smart edge devices attached to industrial equipment, achieving rapid response times because big data are captured and handled locally, with low latency, across IIoT hubs. The AMQP/MQTT servers acquire real-time data streams from various smart edges and standardize them to optimize the analysis of different security risks. Additionally, the OPC DA/UA servers monitor the industrial data geographically to secure its transmission, relying on computation placed close to the smart edges that produce the big data.

- (5) IIoT Big Data Accumulator: it delivers industrial data from storage nodes to the big data lake for the initial pre-processing stages. The storage node module simultaneously supports multiple assemblies within the IIoT space, converts semi-structured big data into fully structured data through secure extraction techniques, and forwards the processed big data in distinct chunks. The big data lake performs filtering, metadata reasoning, and fusion in a location- and time-sensitive manner.

- (6) IIoT Big Data Abstractor: it supports the local aggregation of big data acquired from heterogeneous manufacturing sources and delivers efficient real-time data with minimal delay across the substantial number of interoperability events within smart edges. It also manages the quality of raw industrial data by trimming faulty, incomplete, and duplicate records, which minimizes the required resources and makes the best use of the limited processing and transmission capabilities.

- (7) IIoT Big Data Loader: it places the structured industrial data in historian repositories to improve big data computation and enhance decentralized loading capabilities. Afterward, the MES/MOM servers increase the readiness of the abstracted IIoT big data by indexing, transferring, and storing the queries and responses exchanged among interconnected smart edges, so that the data can be loaded directly into the consortium blockchain store in the middle layer of the TCB framework.
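The quality-management step of the IIoT Big Data Abstractor, which trims faulty, incomplete, and duplicate records, can be sketched in Python as follows. The record fields (`device_id`, `timestamp`, `value`), the per-device valid ranges, and the `trim_records` function are hypothetical; the framework does not specify its filtering rules, so here an out-of-range value stands in for "faulty" and a repeated device/timestamp pair for "duplicate".

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]

# Hypothetical schema: every usable record must carry these fields.
REQUIRED_FIELDS = ("device_id", "timestamp", "value")


def trim_records(records: Iterable[Record],
                 valid_ranges: Dict[str, Tuple[float, float]]) -> List[Record]:
    """Drop incomplete, faulty, and duplicate records (illustrative rules only)."""
    seen = set()
    kept: List[Record] = []
    for rec in records:
        # Incomplete: a required field is missing or None.
        if any(rec.get(f) is None for f in REQUIRED_FIELDS):
            continue
        device = rec["device_id"]
        value = rec["value"]
        # Faulty: value outside the device's configured range; devices with
        # no configured range are treated as faulty in this sketch.
        bounds = valid_ranges.get(device)
        if bounds is None or not (bounds[0] <= value <= bounds[1]):
            continue
        # Duplicate: the same device reporting at the same timestamp again.
        key = (device, rec["timestamp"])
        if key in seen:
            continue
        seen.add(key)
        kept.append(rec)
    return kept
```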

5.1.2. Consortium Blockchain Layer

- (1) CB Store: it stores designated big data fetched from historian repositories in decentralized storage and manages trusted access to them through account authority rules. Moreover, it organizes the clean, complete, and error-free industrial data into small, well-structured blocks carrying contextual metadata, such as space, time, and location, so that big data integrity faults can be detected early.

- (2) CB Provider: it encapsulates designated big data into the Hyperledger Fabric modular (HFM) using testing and trans-chain interfaces. The testing interface receives the main big data blocks from decentralized storage, constructs the metadata mapping, and composes the block structure in agreed formats. At the same time, the trans-chain interface detects and analyzes dual transactions to discover malicious blocks. Both interfaces work under the standardized policies of the contract governor to identify correlations among the chained big data blocks and to control them.

- (3) CB Encoder: it provides the fundamental requirements for formulating an encryption consensus and customizes the standard contracts that run the consortium blockchain entry functions for the given industrial data. Contract unifiers support these functions by normalizing the peer-to-peer overlay networks. The contract testers also assess the encrypted blocks during peer consensus to identify errors and avoid vulnerabilities that could lead to severe exploits.

- (4) CB Adapter: it encompasses diverse consortium blockchain interactions and builds cohesive capabilities for chain-to-chain interoperability, including a registration chain, a relay chain, and a trans-gateway chain, all maintained by the trans-backbone chain. The standard API and the task engine work together on the consortium blockchain to provide the necessary adaptation for cross-peer chains across manufacturing environments.

- (5) CB Controller: it employs an identity manager to characterize the chains of industrial data blocks and discard out-of-context ones. Likewise, the transaction manager ensures fast transmission by measuring the time series and geolocations of peer chains. The data composers order the assorted chains of big data blocks according to the volume, speed, and period of chain creation, so that they are ready for presentation through big data interfaces.

- (6) CB Wrapper: it provides a shared multi-chain governor for the registration, relay, and trans-gateway chains that covers resource management from beginning to end, together with permission management and pass-through task management. These three critical mechanisms act as concurrent focal points for administering the encryption peer consensus and big data integrity.

- (7) CB Verifier: it is a verification triad that jointly covers peer, credential, and record verification. Peer verification checks the peering structure, which acts as the linking status. Credential verification confirms the consortium consensus model. Finally, record verification proves the core consistency of the on-chain and off-chain big data blocks before they are subjected to access control in the upper layer of the TCB framework.
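The CB Store and CB Verifier descriptions can be made more concrete with a minimal Python sketch, assuming a simplified block layout: each block carries contextual metadata (timestamp and location), a SHA-256 digest of its off-chain payload, and a SHA-256 link to the previous block. `append_block`, `verify_records`, and the `DataBlock` layout are hypothetical and are not Hyperledger Fabric's actual block format; the verification pass only mirrors the idea of record verification by recomputing each hash and checking off-chain payloads against their on-chain digests.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict, List

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass(frozen=True)
class DataBlock:
    index: int
    timestamp: float        # contextual metadata: time
    location: str           # contextual metadata: location
    payload_digest: str     # SHA-256 of the off-chain payload
    prev_hash: str
    block_hash: str


def _digest(obj: Any) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def _header(block: DataBlock) -> Dict[str, Any]:
    return {"index": block.index, "timestamp": block.timestamp,
            "location": block.location, "payload_digest": block.payload_digest,
            "prev_hash": block.prev_hash}


def append_block(chain: List[DataBlock], payload: Dict[str, Any],
                 timestamp: float, location: str) -> DataBlock:
    """Wrap a cleaned record in a small block linked to its predecessor."""
    header = {"index": len(chain), "timestamp": timestamp, "location": location,
              "payload_digest": _digest(payload),
              "prev_hash": chain[-1].block_hash if chain else GENESIS_PREV}
    block = DataBlock(block_hash=_digest(header), **header)
    chain.append(block)
    return block


def verify_records(chain: List[DataBlock],
                   off_chain_payloads: List[Dict[str, Any]]) -> List[int]:
    """Return the indices of blocks whose link, hash, or payload fails to verify."""
    bad: List[int] = []
    expected_prev = GENESIS_PREV
    for block in chain:
        ok = (block.prev_hash == expected_prev
              and block.block_hash == _digest(_header(block))
              and _digest(off_chain_payloads[block.index]) == block.payload_digest)
        if not ok:
            bad.append(block.index)
        expected_prev = block.block_hash
    return bad
```

Because each header includes the previous block's hash, an attacker who edits a header and recomputes its hash still causes the next block's link check to fail, while altering only an off-chain payload is caught at that block's payload-digest comparison.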

5.1.3. Big Data Layer

- (1) BD Access Control Enforcer: it is responsible for establishing authenticity and authority between the big data owners and the consortium peers. The authority creator enforces access control policies on all big data requests based on the privileges granted to consortium peers. The big data owners request and apply the authorization rules provided by the authenticity provider. Afterward, the big data integrity audit logs are recorded on the Hyperledger Fabric modular (HFM), and the big data blocks are loaded into the consortium blockchain.

- (2) BD Retriever: it retrieves the processed, trained big data sets related to the requested big data blocks from the consortium blockchain through retrieval processing. The feature extractor merges and processes the integrity attributes of these blocks. The retrieved blocks then undergo a visualization stage that prepares them for the mechanisms of the big data integrity detector.

- (3) BD Integrity Detector: it analyzes the big data blocks to recognize industrial data integrity aspects according to defined rules. Depending on the determined integrity level, the detection engine inspects big data blocks before labeling them accordingly. Big data blocks are also classified before being placed into the big data source. The integrity metadata generated during the detection analysis are stored on the consortium blockchain to enable integrity capabilities.

- (4) BD Distributor: it assigns the big data destinations and maps them to the big data balancer. Beforehand, it creates scripting components aligned with the big data structure, based on the integrity preferences stated previously. These components then send copies of the big data blocks from the destinations to two distinct tracks simultaneously. The first track is the big data set mapper, which leads to the big data splitter; the second is the block reporter, which passes through the big data reconstructor, with the results then stored on the Hyperledger Fabric modular (HFM).

- (5) BD Splitter: it provides segmentation techniques as an additional layer of protection for big data integrity. Using a class selector, these techniques split big data blocks into integral and non-integral data sets based on specified integrity requirements. Next, the leaf calculator computes SHA-512 checksums of the original big data blocks to ensure their integrity; SHA-512 is a cryptographic hash function, so these checksums detect modification rather than encrypt the data. Then, after performance clustering, the class estimator compares the hash results with the initial reference values.

- (6) BD Reconstructor: it returns the big data blocks to their original form using the integrity metadata saved by the block reporter on the Hyperledger Fabric modular (HFM). The big data node master handles the segmentation and decryption needed to reconstruct the original blocks retrieved from big data nodes. The heartbeat component (heart beater) hardens the segmentation processing for low-integrity big data and decrypts the high-integrity portions of the big data blocks to avoid the significant overhead measured by the performance cluster.

- (7) BD Integrity Tracker: it traces the streams of big data blocks delivered directly from the refiner cluster to the delivery cluster in response to verified transaction queries from consortium peers or big data owners. Additionally, it provides event-monitoring traceability based on defined thresholds and specific conditions supplied by the Hyperledger Fabric modular (HFM). As a result, the real-time execution of active transaction queries yields continuous results throughout the industrial data integrity tracking process.
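The checksum and reconstruction steps of the BD Splitter and BD Reconstructor can be illustrated with the following minimal Python sketch. It assumes fixed-size segments, one SHA-512 digest per segment standing in for the leaf calculator's output, and a block-level reference digest computed over the concatenated leaf digests (a flat hash list, not a full Merkle tree). The segment size and all function names are hypothetical, and the encryption and decryption steps are omitted.

```python
import hashlib
from typing import List, Tuple


def split_block(data: bytes, segment_size: int = 1024) -> List[bytes]:
    """Cut a big data block into fixed-size segments (the last may be shorter)."""
    return [data[i:i + segment_size] for i in range(0, len(data), segment_size)]


def leaf_digests(segments: List[bytes]) -> List[bytes]:
    """Hypothetical leaf calculator: one SHA-512 digest per segment."""
    return [hashlib.sha512(seg).digest() for seg in segments]


def reference_digest(leaves: List[bytes]) -> str:
    """Block-level reference: SHA-512 over the concatenated leaf digests."""
    return hashlib.sha512(b"".join(leaves)).hexdigest()


def classify_segments(segments: List[bytes],
                      reference_leaves: List[bytes]) -> Tuple[List[int], List[int]]:
    """Split segment indices into integral (digest matches) and non-integral ones."""
    if len(segments) != len(reference_leaves):
        raise ValueError("segment count differs from the stored reference")
    integral: List[int] = []
    non_integral: List[int] = []
    for i, seg in enumerate(segments):
        matches = hashlib.sha512(seg).digest() == reference_leaves[i]
        (integral if matches else non_integral).append(i)
    return integral, non_integral


def reconstruct(segments: List[bytes], stored_reference: str) -> bytes:
    """Reassemble the block, refusing to return it if any segment changed."""
    if reference_digest(leaf_digests(segments)) != stored_reference:
        raise ValueError("reconstructed block does not match its reference digest")
    return b"".join(segments)
```

Keeping one digest per segment lets a verifier locate which segment changed rather than only learning that the block as a whole differs; a smaller segment size gives finer localization at the cost of storing more digests.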

5.2. TCB Framework Implementation and Deployment

5.2.1. Real-Time Transaction Monitoring

| Algorithm 1. Real-time Transaction Monitoring. |
|---|
| function RealtimeTransactionMonitoring(blockchain) |
| // Initialize a list to store suspicious transactions |
| suspiciousTransactions = [] |
| // Loop through all transactions in the blockchain |
| for each block in blockchain |
| for each transaction in block.transactions |
| // Check if the transaction is suspicious |
| if (isSuspiciousTransaction(transaction, blockchain)) |
| // Add the transaction to the list of suspicious transactions |
| suspiciousTransactions.append(transaction) |
| // Notify the relevant authorities |
| notifyAuthorities(transaction) |
| end if |
| end for |
| end for |
| // Continuously monitor for new transactions |
| while (true) |
| newTransaction = getNewTransaction() |
| // Check if the new transaction is suspicious |
| if (isSuspiciousTransaction(newTransaction, blockchain)) |
| // Add the transaction to the list of suspicious transactions |
| suspiciousTransactions.append(newTransaction) |
| // Notify the relevant authorities |
| notifyAuthorities(newTransaction) |
| end if |
| end while |
| end function |
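For readers who want to experiment with Algorithm 1, the following is a minimal runnable Python sketch of the same two-phase logic: scan the transactions already on the chain, then watch a feed of new ones. It is not the authors' implementation. The `Transaction` and `Block` types, the `unregistered_sender_rule` predicate, and the callback-based notifier are hypothetical stand-ins for `isSuspiciousTransaction`, `getNewTransaction`, and `notifyAuthorities`, whose internals the algorithm leaves unspecified.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Set


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    sender: str


@dataclass
class Block:
    transactions: List[Transaction] = field(default_factory=list)


Predicate = Callable[[Transaction, List[Block]], bool]


def unregistered_sender_rule(registered: Set[str]) -> Predicate:
    """Hypothetical rule: flag transactions from senders outside the consortium."""
    def is_suspicious(tx: Transaction, blockchain: List[Block]) -> bool:
        return tx.sender not in registered
    return is_suspicious


def realtime_transaction_monitoring(
    blockchain: List[Block],
    new_transactions: Iterable[Transaction],
    is_suspicious: Predicate,
    notify_authorities: Callable[[Transaction], None],
) -> List[Transaction]:
    suspicious: List[Transaction] = []

    def check(tx: Transaction) -> None:
        if is_suspicious(tx, blockchain):
            suspicious.append(tx)
            notify_authorities(tx)

    # Phase 1: audit every transaction already recorded on the chain.
    for block in blockchain:
        for tx in block.transactions:
            check(tx)

    # Phase 2: the pseudocode's `while (true)` loop over getNewTransaction()
    # becomes iteration over a (possibly endless) iterable, such as a
    # generator reading from a message queue; it returns when the feed ends.
    for tx in new_transactions:
        check(tx)
    return suspicious
```

Passing the detection rule in as a function keeps the monitoring loop independent of any particular heuristic, so the hypothetical sender check could be replaced by, for instance, rate or value thresholds without changing the loop itself.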

5.2.2. Peer Validation

| Algorithm 2. Peer Validation. |
|---|
| function PeerValidation(transaction, blockchain) |
| isValid = True |
| // Check if the transaction is already in the blockchain |
| for each block in blockchain |
| if (transaction in block.transactions) |
| isValid = False |
| break |
| end if |
| end for |
| if (isValid) |
| // Verify the transaction's digital signature |
| if (verifyTransaction(transaction)) |
| // Check if the transaction is valid by comparing it to the current state of the network |
| if (isValidTransaction(transaction, blockchain)) |
| // Broadcast the transaction to the peer network |
| broadcast(transaction) |
| // Add the transaction to the local blockchain |
| addTransactionToBlockchain(transaction, blockchain) |
| // Notify peers of the new transaction |
| notifyPeers(transaction) |
| else |
| // Discard the transaction if it is invalid |
| discardTransaction(transaction) |
| end if |
| else |
| // Discard the transaction if its digital signature is invalid |
| discardTransaction(transaction) |
| end if |
| else |
| // Discard the transaction if it already exists in the blockchain |
| discardTransaction(transaction) |
| end if |
| end function |
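Algorithm 2 can likewise be sketched in runnable Python. This sketch rests on several hypothetical choices: HMAC-SHA256 tags from the standard library stand in for digital signatures (a deployed consortium would use a public-key scheme such as ECDSA), `is_valid_transaction` applies an illustrative rule that each sender's nonce must increase, broadcasting and peer notification are folded into one callback, and the function returns a status string instead of calling `discardTransaction`.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    sender: str
    nonce: int
    payload: bytes
    tag: bytes  # HMAC-SHA256 tag standing in for a digital signature


@dataclass
class Block:
    transactions: List[Transaction] = field(default_factory=list)


def _message(tx_id: str, sender: str, nonce: int, payload: bytes) -> bytes:
    # Simplified byte string covered by the tag; a real system would use an
    # unambiguous serialization format.
    return f"{tx_id}|{sender}|{nonce}|".encode() + payload


def make_tx(key: bytes, tx_id: str, sender: str, nonce: int, payload: bytes) -> Transaction:
    tag = hmac.new(key, _message(tx_id, sender, nonce, payload), hashlib.sha256).digest()
    return Transaction(tx_id, sender, nonce, payload, tag)


def verify_transaction(tx: Transaction, keys: Dict[str, bytes]) -> bool:
    key = keys.get(tx.sender)
    if key is None:  # unknown sender: cannot be verified
        return False
    expected = hmac.new(key, _message(tx.tx_id, tx.sender, tx.nonce, tx.payload),
                        hashlib.sha256).digest()
    return hmac.compare_digest(expected, tx.tag)


def is_valid_transaction(tx: Transaction, blockchain: List[Block]) -> bool:
    # Hypothetical state rule: the nonce must exceed every nonce the sender committed.
    last = max((t.nonce for b in blockchain for t in b.transactions
                if t.sender == tx.sender), default=-1)
    return tx.nonce > last


def peer_validation(tx: Transaction, blockchain: List[Block], keys: Dict[str, bytes],
                    broadcast: Callable[[Transaction], None]) -> str:
    # Reject transactions whose ID is already recorded on the chain.
    if any(t.tx_id == tx.tx_id for b in blockchain for t in b.transactions):
        return "duplicate"
    if not verify_transaction(tx, keys):
        return "bad_signature"
    if not is_valid_transaction(tx, blockchain):
        return "invalid_state"
    broadcast(tx)                   # covers broadcast and notifyPeers
    blockchain.append(Block([tx]))  # simplified: one transaction per block
    return "accepted"
```

Checking for duplicates before verifying the signature, as Algorithm 2 does, lets a peer reject replayed transactions cheaply; swapping the order of the signature and state checks would not change which transactions are accepted.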

5.3. TCB Framework Evaluation Metrics and Testbeds

6. Results and Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Comparative Solutions | Average Throughput | Signed Peer Transfers | Ledger Auditing |
|---|---|---|---|
| IA-CCF | 302,231 | 115,936 | 139,159 |
| Pompe | 487,644 | 204,775 | 330,459 |
| HotStuff | 313,697 | 128,897 | 162,711 |
| TCB | 508,444 | 217,661 | 367,119 |

| Comparative Solutions | Average Latency | 99th Percentile Latency | Round-Trip Latency |
|---|---|---|---|
| IA-CCF | 188 | 198 | 215 |
| Pompe | 391 | 437 | 509 |
| HotStuff | 346 | 398 | 445 |
| TCB | 290 | 254 | 326 |

| Comparative Solutions | Key Value Storing | Functionality Overhead | Checkpoint Intervals |
|---|---|---|---|
| IA-CCF | 57,579 | 11,118 | 53,209 |
| Pompe | 46,845 | 10,763 | 41,018 |
| HotStuff | 60,986 | 12,799 | 55,415 |
| TCB | 61,100 | 13,102 | 56,219 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Juma, M.; Alattar, F.; Touqan, B. Securing Big Data Integrity for Industrial IoT in Smart Manufacturing Based on the Trusted Consortium Blockchain (TCB). IoT 2023, 4, 27-55. https://doi.org/10.3390/iot4010002
