Mixed Reality for Pediatric Brain Tumors: A Pilot Study from a Singapore Children’s Hospital

Abstract

1. Introduction

2. Materials and Methods

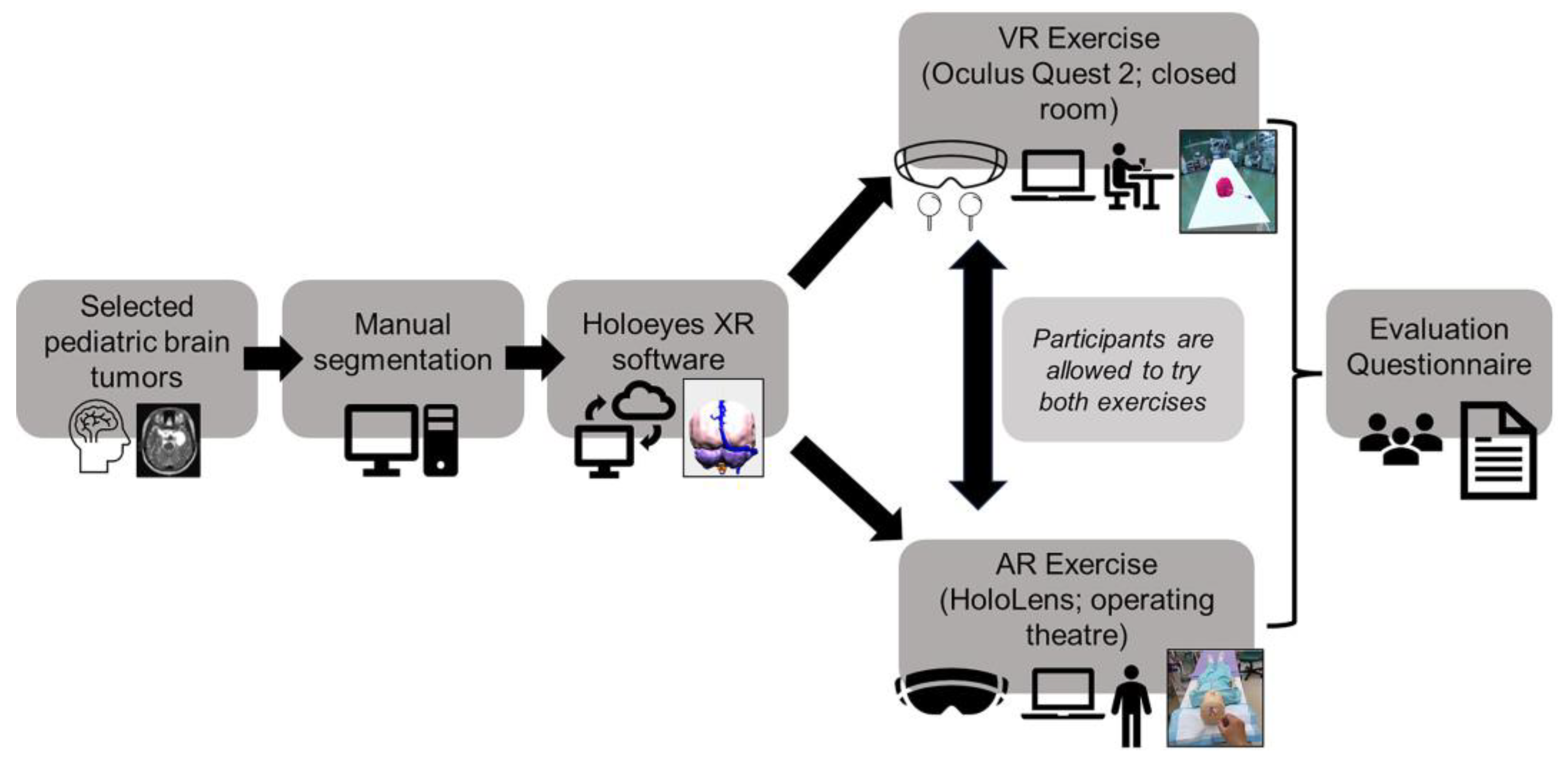

2.1. Overview of Study Design

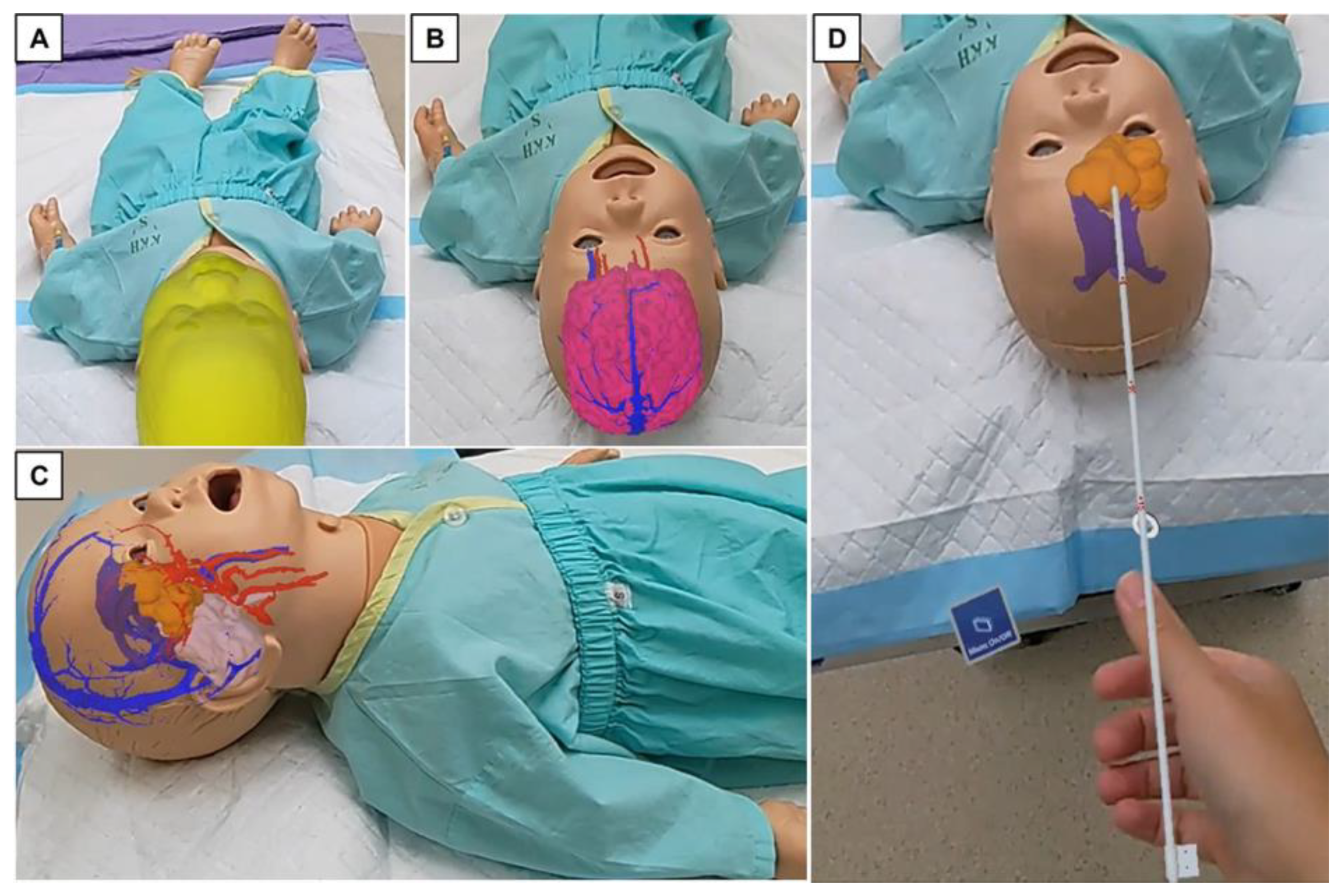

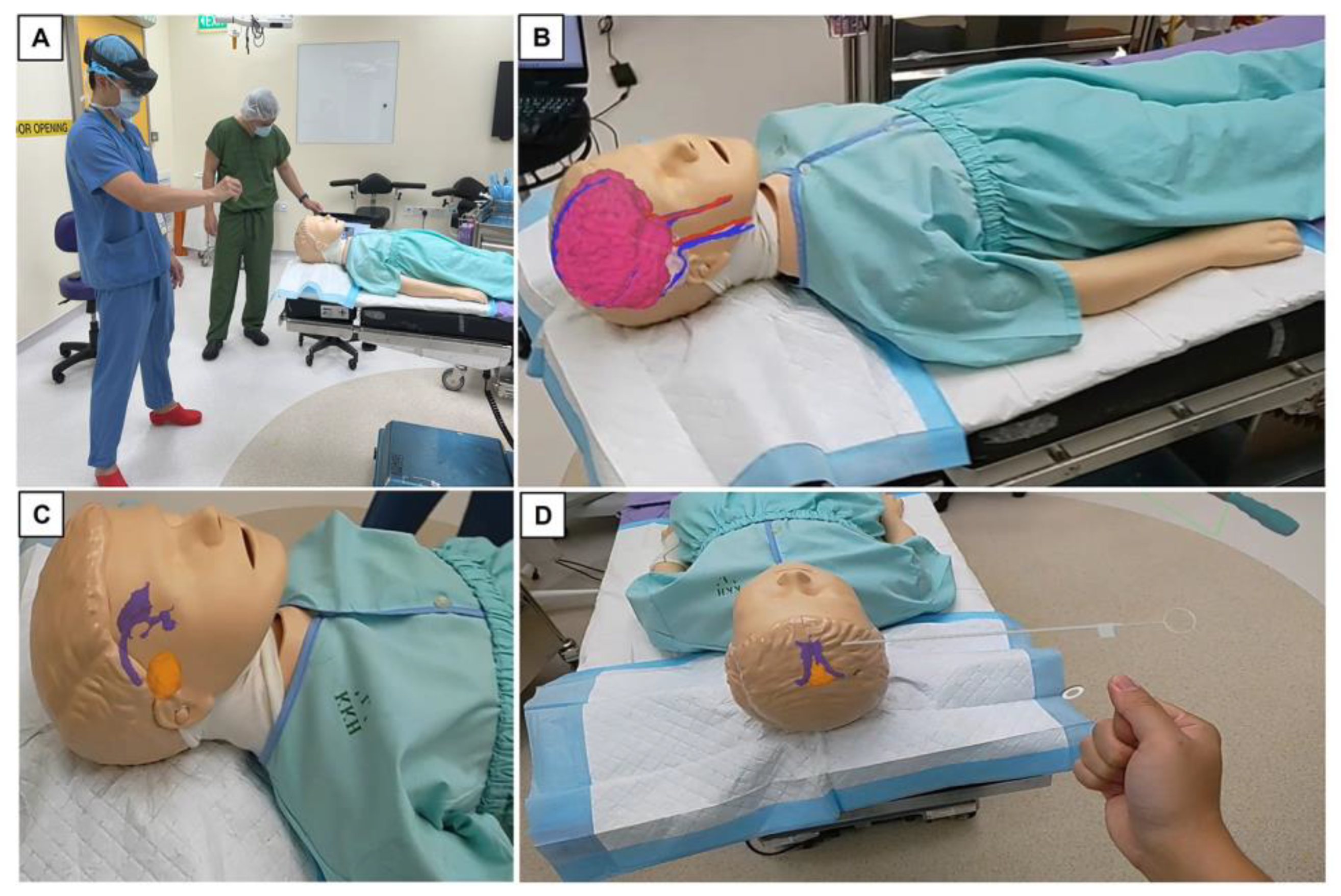

2.2. Outline of Trial with Mixed Reality Models

2.3. Data Analysis

3. Results

3.1. Participant Demographics

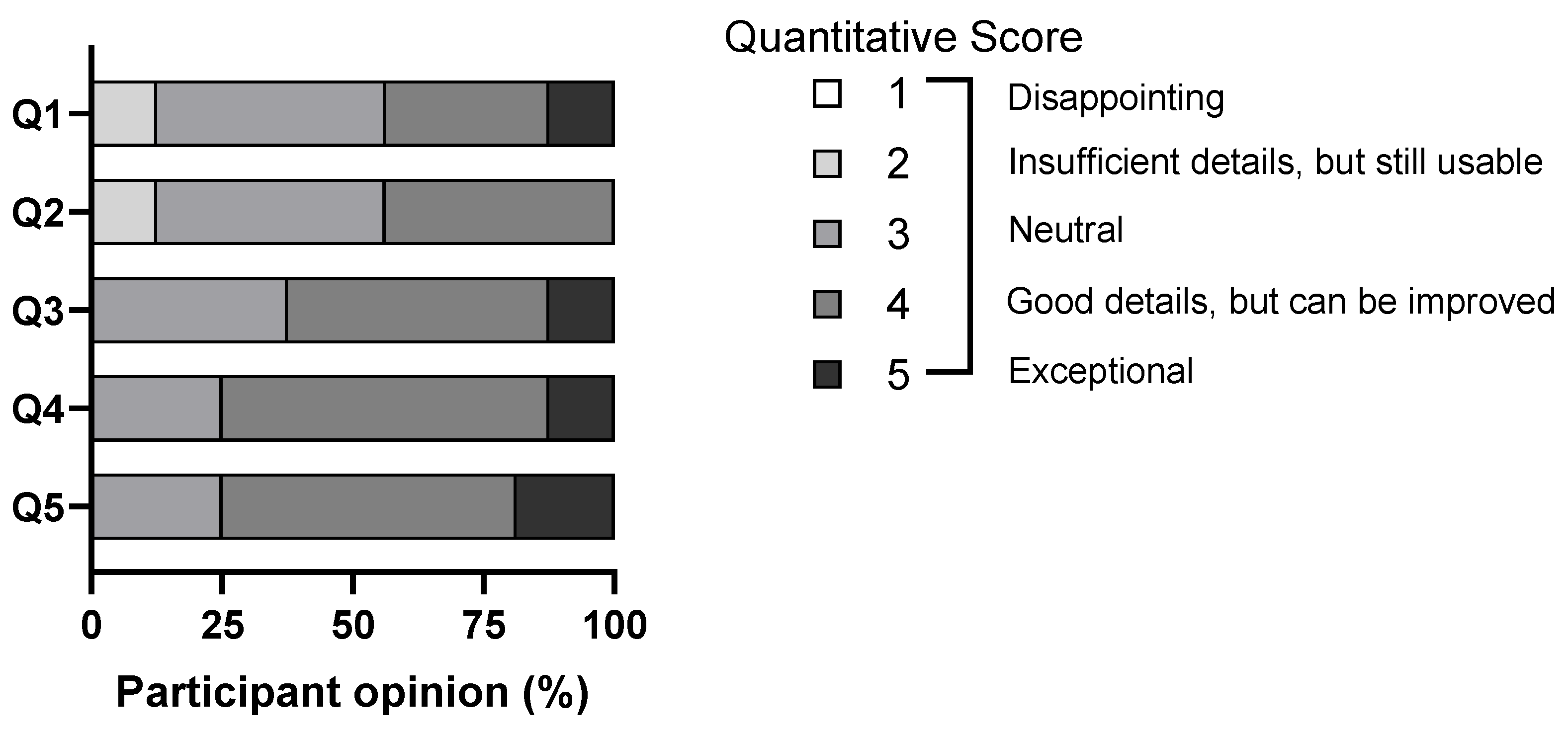

3.2. Evaluation of Visual Quality of Project Models

3.3. Other Relevant Feedback from Study Participants

4. Discussion

4.1. Current Visual–Spatial Limitations in Pediatric Brain Tumor Surgery

4.2. The Reality of Learning Neuroanatomy and Neurosurgery in the Present Day

4.3. Study Reflections and Practical Limitations Encountered

4.4. Future Work and Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Question | Quantitative Score (Based on Likert Scale) |
|---|---|
| Q1: Visual quality of brain tumor examples | 1 |
| | 2 |
| | 3 |
| | 4 |
| | 5 |
| Q2: Visual quality of normal brain structures in relation to brain tumors | 1 |
| | 2 |
| | 3 |
| | 4 |
| | 5 |
| Q3: Visual quality of intracranial blood vessels in relation to brain tumors | 1 |
| | 2 |
| | 3 |
| | 4 |
| | 5 |
| Q4: Visual quality of ventricular system in relation to brain tumors | 1 |
| | 2 |
| | 3 |
| | 4 |
| | 5 |
| Q5: Overall usefulness in understanding brain tumor spatial anatomy using the MR platform | 1 |
| | 2 |
| | 3 |
| | 4 |
| | 5 |

| Participant Group * | Representative Free-Text Comments |
|---|---|
| Medical students/Junior doctors | “Useful to see normal brain models to compare with brain tumor models”; “Able to have name of anatomical structure when tapped on” |
| Neurosurgical residents/Consultants | “Haptic feedback for catheter placement will make simulation more realistic”; “Addition of rest of the spine structures can help with visualizing where the brain tumor is in relation to the patient’s body during head positioning for surgery”; “Finer details of individual anatomical structures around the tumor will be useful for preoperative planning” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liang, S.; Teo, J.C.; Coyuco, B.C.; Cheong, T.M.; Lee, N.K.; Low, S.Y.Y. Mixed Reality for Pediatric Brain Tumors: A Pilot Study from a Singapore Children’s Hospital. Surgeries 2023, 4, 354-366. https://doi.org/10.3390/surgeries4030036
