1. Introduction

Immersive technology bridges the gap between the physical and virtual worlds, enabling users to experience a deep sense of immersion [1]. This term broadly includes virtual reality (VR), augmented reality (AR), mixed reality (MR), and extended reality (XR) technologies. The integration of immersive technologies into the healthcare sector has led to significant advances in areas such as medical education, surgical planning, and diagnosis. These technologies allow for the creation of flexible and interactive environments where complex medical data can be visualised and manipulated in real time. As a result, medical professionals are able to enhance their skills, collaborate more efficiently, and achieve better clinical outcomes.

Additionally, healthcare professionals are rapidly gaining proficiency in the use of advanced technological devices within their field of practice. Robotic surgery enables surgeons to conduct highly accurate and minimally invasive procedures, thus reducing wound trauma, shortening post-operative hospital stays, and lowering the risk of post-operative wound complications [2]. The da Vinci system, developed by Intuitive Surgical Inc of Sunnyvale, CA, was the first surgical robot to receive FDA (U.S. Food and Drug Administration) approval and has since been used to assist surgeons in performing laparoscopic surgeries [3]. The console of the robot enables the user to visualise the surgical site in 3D while the robotic arms and tools are managed by haptic devices and buttons. Initially, this method was applied in specialty medical interventions. However, the use of robotic surgery in emergency general surgery increased significantly year-over-year between 2013 and 2021, as shown in the study conducted by Lunardi et al. [4]. The study reported notable increases in the uptake of robot-assisted surgery: 0.7% for cholecystectomy, 0.9% for colectomy, 1.9% for inguinal hernia repair, and 1.1% for ventral hernia repair.

Immersive environments refer to digital spaces that create a sense of presence and engagement by stimulating multiple senses, thereby enhancing user interaction with virtual content. These environments range from fully virtual experiences, such as those provided by virtual reality (VR) headsets, to augmented reality (AR) and mixed reality (MR) applications that overlay digital elements onto the real world [1]. The implementation of immersive environments in the medical field can facilitate interaction among various roles within the healthcare sector. In the field of education, there are proposals for online teaching using mixed reality devices [5] and investigations that report enhanced student engagement through gamified proposals in surgical simulators [6]. In the surgical field, there are immersive surgical planning systems that enable more precise segmental border identification than two-dimensional medical imaging [7]. However, many studies that mention the potential for interaction among multiple users provide insufficient detail on the interaction and communication systems.

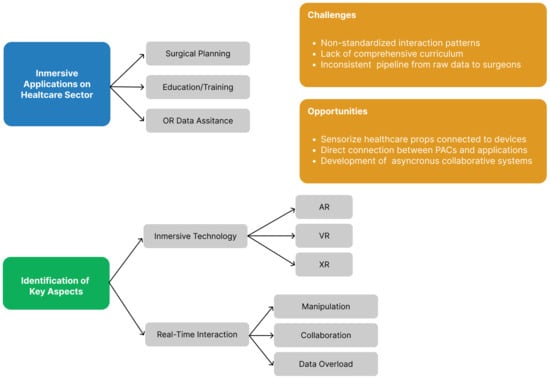

This review highlights the applications of these technologies in clinical practice and medical education, explores the challenges and limitations that remain, and points to opportunities for future research and development. These are particularly relevant as the healthcare industry continues to adopt more personalised and data-driven approaches.

3. Medical Imaging and Computer Graphics

Medical imaging techniques such as X-rays, Magnetic Resonance Imaging (MRI), and Computed Tomography (CT) are fundamental tools in modern healthcare. These modalities generate large volumes of complex data, which can be difficult to interpret in traditional 2D formats.

The standardisation and normalisation of medical imaging have facilitated the development and utilisation of various medical image viewers that allow direct access to data on a computer via the PACS (Picture Archiving and Communication System) of the hospital in question. The DICOM (Digital Imaging and Communication in Medicine) standard provides access not only to image information but also to metadata about the process performed or patient information. This feature enables the use of 3D reconstruction tools from DICOM files. The DICOM file typically presents an image in a 2D format simply by depicting the values stored in the pixel matrix. Nevertheless, the included metadata, like the distance between images or their position in space, enable the construction of an image stack in 3D space in a manner that respects the specific time of acquisition. This also facilitates interpolation techniques for filling in the gaps between images. This set of images can be resized while maintaining the relationships within the study. These techniques can be of great value for generating sample volumes compatible with artificial intelligence algorithms [36].
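The stacking and interpolation steps described above can be illustrated with a minimal sketch. It assumes that the pixel matrix and the position of each slice along the scan axis have already been read from the DICOM files (in practice, from attributes such as Image Position (Patient)). The dictionary keys `pixels` and `z`, and the function names, are hypothetical and chosen only for illustration; linear interpolation is one possible choice among several.

```python
import numpy as np


def build_volume(slices):
    """Stack 2D slices into a 3D volume ordered by position along the scan axis.

    Each slice is a dict with two hypothetical keys:
      'pixels': 2D array with the pixel matrix
      'z': slice position along the scan axis, in mm
    """
    ordered = sorted(slices, key=lambda s: s["z"])
    volume = np.stack([s["pixels"] for s in ordered], axis=0).astype(float)
    z_positions = np.array([s["z"] for s in ordered], dtype=float)
    return volume, z_positions


def resample_along_z(volume, z_positions, new_spacing):
    """Fill the gaps between slices by linear interpolation on a regular grid.

    Requires at least two slices at distinct positions.
    """
    new_z = np.arange(z_positions[0], z_positions[-1] + 1e-9, new_spacing)
    # Index of the first original slice strictly above each new position.
    upper = np.searchsorted(z_positions, new_z, side="right")
    upper = np.clip(upper, 1, len(z_positions) - 1)
    lower = upper - 1
    weight = (new_z - z_positions[lower]) / (z_positions[upper] - z_positions[lower])
    weight = weight[:, None, None]  # broadcast over rows and columns
    resampled = (1.0 - weight) * volume[lower] + weight * volume[upper]
    return resampled, new_z
```

Sorting by position rather than by file order reflects the point made above: the spatial metadata, not the order in which files are stored, determines where each image sits in the 3D stack.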

The medical field tends to integrate patient-specific 3D models in various areas such as education, planning, or surgical simulation. Advances in the areas of medical engineering and image engineering, together with a reduction in the cost of the necessary hardware and software, have led to an increase in the printing of 3D models generated from medical images. These models cannot be obtained directly from the medical image, but they can be obtained from the masks used for their segmentation. A mask makes it possible to delimit all regions belonging to the same element or tissue in the entire medical image study. These masks can be stored in DICOM format along with the rest of the files that make up the case study. In these cases, the metadata stored in the DICOM standard, such as slice thickness or the region of interest of the study, are of great importance in order to obtain more accurate 3D models [37].
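To illustrate why spacing metadata matters when deriving a model from a mask, the following sketch converts a binary mask into physical measurements. It assumes the mask is available as a 3D array ordered (slice, row, column) and that the voxel spacing in millimetres has been read from the study metadata; the function names and the argument `spacing_mm` are hypothetical.

```python
import numpy as np


def mask_volume_ml(mask, spacing_mm):
    """Physical volume of a binary mask in millilitres.

    spacing_mm: (slice spacing, row spacing, column spacing) in mm,
    assumed to come from the study metadata.
    """
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(mask.astype(bool).sum()) * voxel_mm3 / 1000.0


def mask_extent_mm(mask, spacing_mm):
    """Size in mm of the bounding box that encloses the mask (non-empty mask assumed)."""
    idx = np.argwhere(mask)
    extent_voxels = idx.max(axis=0) - idx.min(axis=0) + 1
    return extent_voxels * np.asarray(spacing_mm, dtype=float)
```

With a 5 mm slice spacing and 1 mm in-plane spacing, a mask that spans two voxels in every direction measures 10 mm along the scan axis but only 2 mm in-plane, so treating voxels as cubes would distort the resulting 3D model.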

Building on this, segmentations of the anatomical structures of the human body can be obtained automatically using artificial intelligence. The TotalSegmentator tool stands out, as it allows the robust segmentation of 104 anatomical structures in computed tomography studies. This deep learning model is capable of segmenting different types of tissues such as organs, bones, muscles, and veins [38]. These new techniques facilitate the segmentation process, reducing the time and cost to obtain realistic 3D models of individual clinical cases. These tools could be included as software in healthcare centres and integrated into the PACS network thanks to their compatibility with the DICOM standard.

All the processes described above —such as medical image acquisition, segmentation, and 3D reconstruction from DICOM data— enable the creation of patient-specific models and image volumes. These elements ensure that the clinical detail and validity of the data are preserved when integrated into immersive applications, allowing them to reliably reflect patient-specific information within virtual environments.

4. Design Principles and Technical Requirements for Immersive Applications

Throughout history, there has been a search for new ways to express knowledge in a multi-sensory manner. Today, the convergence of technology and human perception has led to the creation of innovative systems that blend the real and virtual worlds. These experiences enable users to immerse themselves in imaginary environments or integrate virtual elements into their physical surroundings, among other possibilities.

In 1994, an article was published that aimed to define the virtuality continuum [39]. This concept enabled the comprehension and contextualisation of novel technologies being developed autonomously by various firms and laboratories at that time. To grasp this model, envision a line connecting the physical world to the digital world. Near the ends of this line lie the previously coined terms augmented reality (AR) and virtual reality (VR). On the side nearest to the real world lies AR, a technology permitting the incorporation of virtual information into the physical realm without compromising the user’s viewpoint. On the opposite side, VR is designed to transfer the user to a wholly virtual world, where the experience could be realistic or not, but the user’s absorption is achieved without any references to the real world. In addition, the publication introduces a new term, mixed reality (MR), which integrates all the experiences or technologies that use characteristic aspects of any of the previous terms. The recent literature shows a significant rise in the use of the term extended reality (XR). This term encompasses all interactive environments that integrate real and virtual elements, coordinating both human and computer-generated inputs [40]. As illustrated in Figure 1, the virtuality continuum concept demonstrates the interconnectedness and synergy between these technologies.

4.1. Virtual Reality

The term virtual reality refers to any experience in which the user feels immersed in an interactive virtual world [41]. For the correct generation of these experiences, some technologies are of great importance, such as the visual system used, which must block the stimuli coming from the real world, the graphic rendering system, the tracking system, or the use of directional audio. In this kind of experience, devices typically obscure the user’s view of the real world and substitute it with stereo projections of the virtual world, producing a 3D representation of the environment and boosting spatial perception.

Casas-Yrurzum et al. [8] proposed a teaching system for robot-assisted surgery, enabling students to watch surgeries in stereoscopic 3D on their smartphones via MorganVR-type lenses, shown in Figure 2. The system’s video playback feature is easily accessible to students, allowing for a more comprehensive understanding of surgical procedures. The introduction of stereoscopy enhances the depth perception of the video, allowing for a more realistic view of robot-assisted surgery. Furthermore, the web-based tool includes an application for the teacher, where they can upload and edit videos of surgeries, create viewing sections, add visual annotations to the video, and create rooms for live broadcasting. This low-cost system allows students to access the content easily while being able to understand the position and orientation of the surgical material in relation to the anatomical elements thanks to the 3D visualisation.

In contrast, some devices possess their own rendering system that utilises stereoscopic screens or lenses; these are known as Head-Mounted Displays (HMDs). These devices consist of helmet-shaped core hardware equipped with adjustable binocular lenses, typically accompanied by a pair of controllers that facilitate interaction with the digital environment. They are designed to give users six degrees of freedom in virtual environments by capturing their movements. This enhances the user’s immersive experience, as they can directly interact with the elements within the environment.

Frame rate is a crucial element in achieving a satisfactory VR experience. It refers to the number of frames per second that the device renders and displays. Low frame rates can induce user discomfort because the virtual stimuli generated do not match what the central nervous system expects from the natural world. This parameter is closely related to latency, which is the time that elapses between the stimulus and the response that it generates. Low frame rates lead to high latencies, resulting in a delay between the user’s interaction with the system and its response. This discrepancy underpins the sensory conflict theory, which is currently one of the most applicable hypotheses for comprehending the discomfort caused by virtual reality. This theory posits an additional trigger due to the lack of virtual stimuli in certain sensory systems of the human body, namely, the vestibular and proprioceptive systems [42]. There could be a transitional phase where the disruptions identified by the body do not result in overall discomfort, but might lead to fatigue. Forced fusion is a phenomenon by which the user’s perceptual systems must work harder to establish the links between real and virtual stimuli. This mechanism is part of the user’s adaptive process to virtual worlds and may improve with repeated exposure over time.

The choice of HMD type has a major impact on the user’s experience, as it defines the user’s interaction with the virtual world and their sensory experience. As previously stated, ensuring the accuracy and integrity of medical data is of paramount importance in the healthcare sector. Thus, it is important to strike a balance between the resources required to complete the task and those available to the device. Maintaining a consistent frame rate throughout the experience helps to reduce uncomfortable sensations such as simulation sickness and to improve user experience and performance. Wang et al. [43] compared the most common frame rates for VR HMDs (60, 90, 120, and 180 fps), and the results indicated that frame rates above 120 fps tend to enhance user performance and reduce simulation sickness symptoms without significantly impacting the user experience.
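The relationship between frame rate and the time available to produce each frame can be made concrete with a short sketch. The per-frame budget is simply the inverse of the frame rate; the function names and the idea of counting frames that overrun the budget are illustrative assumptions, not part of the cited study.

```python
def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def overrun_frames(render_times_ms, fps):
    """Number of frames whose render time exceeds the per-frame budget."""
    budget = frame_budget_ms(fps)
    return sum(1 for t in render_times_ms if t > budget)


if __name__ == "__main__":
    for fps in (60, 90, 120, 180):
        print(f"{fps} fps: {frame_budget_ms(fps):.2f} ms per frame")
```

At 60, 90, 120, and 180 fps the budget is approximately 16.67, 11.11, 8.33, and 5.56 ms, respectively, which shows how quickly the available rendering time shrinks as the target frame rate rises and why the complexity of the medical data shown must be balanced against the resources of the device.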

4.2. Augmented Reality

The concept of augmented reality was initially introduced in the academic literature in 1992. In the article presented by Caudell and Mizell [44], the authors defined the term as the utilisation of technology to broaden a user’s visual field for the purpose of accomplishing a specific task. Augmented reality has the ability to incorporate diverse digital information, such as 3D models, videos, text, and images, into the actual physical environment. The extent of immersion perceived by a user through this technology is limited, as there is no isolation from the real environment, but it does allow the user to expand their information about the real world.

There are multiple types of devices that allow augmented reality content to be displayed. They should be selected according to the type of digital information to be added and the type of interaction that the user needs to have with it. It is possible to classify the devices according to the format used for the representation of the real image. Azuma’s survey [45] identifies three types:

Video see-through: The user does not view the real world directly, but is shown the real environment through a screen in front of their eyes. In this scenario, the colour intensity of the virtual information can be absolute, as it replaces the real-world information provided by a real-time video capture system. The opacity of the virtual elements can be set across the full range, up to completely occluding the pixels from the real environment. If the device malfunctions, the user loses sight of the real world. Devices in this group include Apple Vision Pro, Meta Quest Pro, VIVE XR Elite, and Pico 4;

Optical see-through: A mirror system projects the virtual elements, enabling the user to view the real environment simultaneously with the virtual objects. This modality is more intricate than the previous one, leading to a smaller number of available devices on the market. Microsoft Hololens 2 and Magic Leap 2 are good examples of this rendering technology;

Projection-based: This group includes all systems in which the augmented information is displayed directly on the associated real object. It is common to find projection systems designed to adjust their projection on the associated real-world area.
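The difference in occlusion behaviour between the first two display types can be sketched with a simplified per-pixel model. In the sketch below, video see-through is modelled as alpha blending over the camera image, while optical see-through is approximated as purely additive light that cannot darken the real scene. Both functions, their names, and the additive approximation are assumptions made for illustration; real devices involve optics and calibration that this model ignores.

```python
import numpy as np


def video_see_through(real, virtual, alpha):
    """Alpha-blend a virtual pixel over the camera image.

    real, virtual: colour values in [0, 1].
    alpha: opacity in [0, 1]; alpha = 1 fully occludes the camera pixel.
    """
    return alpha * virtual + (1.0 - alpha) * real


def optical_see_through(real, virtual):
    """Simplified additive model: display light is added to real-world light."""
    return np.clip(real + virtual, 0.0, 1.0)


if __name__ == "__main__":
    real_pixel = np.array([0.8, 0.8, 0.8])   # bright real background
    black_label = np.array([0.0, 0.0, 0.0])  # black virtual element
    print(video_see_through(real_pixel, black_label, alpha=1.0))
    print(optical_see_through(real_pixel, black_label))
```

Under these assumptions, a fully opaque black virtual element replaces the bright background in the video see-through case, whereas in the additive model it leaves the background unchanged, which illustrates why full occlusion is attributed above to video see-through devices.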

In turn, the devices can be classified according to the distance between the user and the point where the final image is reproduced with the mixture of the image coming from the real world and the virtual elements. Bimber and Raskar [46] established three independent groups: devices that the user must carry on their head (head-attached), devices that the user must carry or hold with their hands (hand-held), and finally those devices that are not required to be carried by the user, but maintain a position in real space (spatial).

The first group is called head-mounted devices. Such devices have a close relationship with the user because they have to be positioned on the user’s head. This classification encompasses instruments presented as helmets, spectacles, or contact lenses. This technology empowers the user to have more autonomy, as it negates the requirement for the use of the hands. Nevertheless, the devices need to be secured to the user’s head, which could instigate discomfort due to the weight of the devices or the need for wiring to operate them. Alternative interaction methods are necessary for these systems, as direct interaction with the display system is impossible due to its proximity to the user’s eyes. Consequently, various input techniques such as voice control, button panels, hand tracking, and external controllers have been employed to facilitate user interaction. Head-mounted display devices are currently predominant, with a wider variety of systems using optical see-through or video see-through to generate the virtual image.

The augmented reality surgical navigation technique provides critical surgical site information to assist the surgeon during complex procedures by enhancing spatial awareness. Unlike conventional visualisation systems, which require the practitioner to alternate their gaze between the operative field and external monitors (often extending procedure time and limiting navigational precision), AR integrates preoperative models directly into the intraoperative scene. This seamless fusion enables real-time instrument tracking and continuous visual guidance within the surgeon’s field of view [47]. The study presented by Wei et al. [9] compares the effectiveness of using HMD augmented reality technology with traditional C-arm fluoroscopy in treating osteoporotic vertebral compression fracture (OVCF) with intravertebral vacuum cleft (IVC) during percutaneous kyphoplasty (PKP). Results indicate that augmented reality technology is an efficient and effective method for navigation and positioning, leading to the precise placement of the IVC in OVCF and improved clinical outcomes. The HMD Hololens (Microsoft, Redmond, WA, USA) viewer is used, which employs the optical see-through technique by positioning virtual elements in the surgeon’s field of view, but without totally occluding the surgeon’s view of the real world. Figure 3 shows several views of the surgeons during the intervention, illustrating the virtual information within the actual environment.

Chen et al. [10] designed an example of an AR system in the operating theatre using video see-through. Their augmented reality framework allows one to reconstruct the intra-operative blood vessel in 3D space using the preoperative 3D model data on top of the da Vinci surgical robot stereoscopic console view. The result shows that this technique can help the users to avoid the dangerous collision between the instruments and the delicate blood vessel while not introducing an extra load during robot-assisted lymphadenectomy.

The second group includes hand-held devices (HHDs). These devices must be held in the user’s hands. This restricts the user’s ability to directly interact with the environment, and the available field of vision is limited to the dimensions of the device being used. Nevertheless, in numerous scenarios, these systems operate on smartphones, which are highly prevalent in today’s society, thus enhancing the accessibility to augmented reality experiences. Furthermore, this technology enables direct user interaction with the display screen, a common preference for most users.

Gavaghan et al. [11] explained a hand-held solution for projection-based augmented reality surgical navigation. The device is carried by the surgeon or the operating room staff to attain a projection point that is not occluded by other elements in the operating room. This facilitates the accurate visualisation of virtual information in the real environment. However, its use becomes more complex due to the absence of a hands-free carrying system as seen in HMD devices.

Finally, there is the spatial group, encompassing systems that hold a stationary position and are not attached to the user. Consequently, it affords users complete freedom, as they need not wear any external element on their bodies. The goal of this device type is to augment the information about a target in the real world, with the benefit that several people can view the installation simultaneously. Within this category are spatial optical see-through displays that generate virtual images aligned with the physical environment through means such as transparent screens or optical holograms. These systems do not allow direct interaction with the objects used in the system, and the optical effect can be broken if the user adopts an incorrect position. In the case of projection-based displays, users can interact with the object that serves as the projection surface.

The work presented by Sugimoto et al. [12] is a good example. Their fixed projection system enabled surgeons to visually access virtual information about anatomical structures in the abdominal region that were obscured by the patient’s skin. This system proved beneficial in cholecystectomies, gastrectomies, and colectomies. However, interaction with the projection surface may create shadows and impede the visualisation of information, as shown in Figure 4. These settings necessitate complex calibrations and sometimes tracking systems in order to reliably match the virtual representation to the real environment. Meier et al. [13] presented a novel projection-based AR system that reduces the complexity of the initial setup by using a projector capable of automatic calibration through the use of real-time algorithms. The study demonstrates the beneficial use of AR in Deep Inferior Epigastric Artery Perforator (DIEP) flap breast reconstruction. The system has been developed to project thermal information onto the patient’s abdomen in real time, facilitating the marking of the Dynamic Infrared Thermography (DIRT) hotspots. This technique achieves a high resemblance to Doppler and Computed Tomography Angiography (CTA) data.

To avoid the disadvantages detected in the previous case, Okamoto et al. [14] opted for a monitor inside the operating theatre to which the surgeon can easily direct their gaze to consult the information. This system uses the real-time image from the laparoscopic camera as a basis for correctly positioning the virtual information. The resulting combined image is displayed on the monitor, emphasising depth and spatial relationships by means of 3D visualisation and polarised 3D glasses for the operating theatre staff. This method requires the surgeon to look at a separate information area, since the information is not displayed directly over the area of interest. Nevertheless, the possibility of occlusions on the virtual information during the entire surgery is reduced.

Table 2 summarises immersive applications designed for the healthcare sector, taking into account the technical details explained in Section 4.

5. Real-Time Interaction

As previously discussed, both augmented reality and virtual reality require the design of user-interactive content. Typically, the user gives an instruction or command to the programme and the programme changes its appearance to show the response.

There is no ideal interaction system that establishes a pattern, but each experience must have a specific interaction system between the user and the software in order to satisfy the user’s needs in a simple and intuitive way. It is possible to find multiple external devices such as controllers with button panels, as well as sensors included in the augmented reality or virtual reality devices themselves such as voice recognition or eye-tracking systems. By employing several methods, it is possible to create a multimodal interaction system that provides greater flexibility and efficiency to the experience. In any case, the ability to interpret and generate a response in real time must be paramount. Overly complicated systems can cause latency to increase, creating confusion and discomfort for users. Furthermore, these systems may exacerbate user fatigue by imposing a high cognitive burden. Ensuring that tools designed for use in the operating room do not overload surgeons or ward staff with unnecessary complexity is of the utmost importance.

The manipulation of virtual elements is a basic form of user interaction with the environment. This interaction system makes it possible to modify the representative parameters of each element displayed. Additionally, integrating communication and collaboration between users is crucial for interaction in virtual environments. The following subsections discuss these fundamental forms of interaction, using works related to the healthcare sector.

5.1. Manipulation

Manipulation in virtual environments allows the user to modify the computer-generated elements in their environment. Virtual objects can be positioned differently and their visual features, such as colour or transparency, can be modified. This aspect is more present in virtual reality than in augmented reality, as all of its elements are artificially generated.

In the healthcare sector’s bibliography on XR, the use of direct user interaction systems for applications intended for the operating theatre is noteworthy. The majority of the works use the Hololens device, which includes hand tracking, eye tracking, and voice control. These interaction techniques empower the surgeon to manage virtual information seamlessly, without physical controllers that may inhibit sterilisation tasks or delay the operation process. Sánchez-Margallo et al. [15] designed a specific technology for minimally invasive surgeries. The authors proposed an interaction system that allows users to select virtual elements through their gaze and manipulate them using hand gestures or predefined voice commands. This technique illustrates how users can control virtual information without the aid of external elements. However, these systems can involve a steep learning curve for some users, requiring prior familiarisation with the system. Furthermore, the study highlights that the language used for voice command recognition can be an added burden for non-native speakers. In contrast, Zeng et al. [16] presented a pilot study that uses exclusively eye gaze interaction to control virtual loupes in plastic surgery. The project uses video see-through augmented reality, whereby the user is able to see the physical environment in real time and zoom into the viewing area. The study evaluates two interaction systems based on eye gaze for adjusting the virtual loupe: blinking for the continuous adjustment of the zoom level, or selecting predefined zoom levels by fixing the gaze on the corresponding button area. Participants performed anastomotic suturing tasks with progressively finer sutures using the eye gaze interaction methods.
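Selection by fixing the gaze on a button area is commonly implemented as a dwell-time check. The following sketch shows one way such a check could work; it is not the implementation used in the cited study. The sample format, the one-second threshold, and the function name `dwell_select` are all hypothetical.

```python
def dwell_select(gaze_samples, dwell_s=1.0):
    """Return the first target gazed at continuously for at least dwell_s seconds.

    gaze_samples: iterable of (timestamp_s, target) pairs in time order, where
    target is the identifier of the button under the gaze, or None when the
    gaze is not on any button.
    """
    current, start = None, None
    for timestamp, target in gaze_samples:
        if target != current:
            # Gaze moved to a different area: restart the dwell timer.
            current, start = target, timestamp
        if current is not None and timestamp - start >= dwell_s:
            return current
    return None


if __name__ == "__main__":
    samples = [(0.0, "2x"), (0.4, "2x"), (0.6, "4x"), (1.0, "4x"), (1.7, "4x")]
    print(dwell_select(samples))
```

The threshold trades speed against accidental activation: a short dwell time selects quickly but risks triggering whenever the gaze merely passes over a button, which matters when the user is simultaneously performing a manual task such as suturing.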

Despite ongoing advancements in technology, the accuracy of gaze in virtual environments is inferior to that achieved during the same tasks in the real world [48]. An alternative approach to the previous design involves the direct manipulation of virtual elements using hand tracking, as demonstrated in the work presented by Gregory et al. [17]. Through this method, the surgeon can position virtual content as if it were a physical object and move it around in real space.

In the case of work focused on the areas of training and simulation, the use of physical controls predominates for user interaction with the virtual elements. The use of physical controllers provides a haptic response to the user’s interactions with the environment. Haptic and auditory responses can increase the effectiveness of immersive environments for training medical personnel [49]. Two categories of physical controllers for haptic response generation are prominent in the research literature: those that belong to the display device and haptic robotic arms. In the case of the HMD’s own controllers, it is possible to transmit vibrations homogeneously throughout the controller as an alert. In such instances, the buttons on the controllers are usually mapped so that the movements are as similar as possible to the real world. The research designed by Lohre et al. [18] shows that simulators equipped with controllers are more effective than traditional learning in helping senior orthopaedic residents acquire translational technical and nontechnical skills.

Table 3 details the manipulation methods used in each work cited above.

5.2. Collaboration

Thus far, we have examined the interaction between a single user and the virtual environment that provides the experience. Nonetheless, it is conceivable to develop frameworks that enable numerous users to interact with each other and with the virtual information contained within. These frameworks are known as Collaborative Virtual Environments, where the participants are depicted by graphical representations, referred to as avatars. The identity, presence, location, and activity of the local user are conveyed to other users via these entities. The origins of Collaborative Virtual Environments lie in the convergence of the development communities interested in mixed reality and in Computer-Supported Cooperative Work (CSCW) [50]. To categorise the different modalities according to the users’ spatio-temporal relation within the environment, we employ the space-time matrix (Figure 5) designed by Ellis et al. [51]. The varieties of interaction include the following:

Face-to-face. In this mode, all users must remain in the same physical space and time. It is unnecessary to establish a communication channel between users, as they are co-located and experiencing the same temporal conditions;

Asynchronous interaction. Participants may access the experience at various time intervals but are required to share the same physical space;

Synchronous distributed interaction. This type prioritises time over space, as users must remain interconnected simultaneously, but can remain in different spaces;

Asynchronous distributed interaction. In this system, users do not have to maintain any relationship while consulting the virtual environment, although they can see the modifications previously made by the rest of the users.
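The four modalities above follow directly from two yes/no questions: whether users share the same place and whether they share the same time. A minimal sketch of this classification is given below; the enumeration and function names are illustrative choices rather than terminology from the cited matrix.

```python
from enum import Enum


class InteractionMode(Enum):
    FACE_TO_FACE = "face-to-face"
    ASYNCHRONOUS = "asynchronous interaction"
    SYNCHRONOUS_DISTRIBUTED = "synchronous distributed interaction"
    ASYNCHRONOUS_DISTRIBUTED = "asynchronous distributed interaction"


def classify_interaction(same_place: bool, same_time: bool) -> InteractionMode:
    """Map the two axes of the space-time matrix to one of its four cells."""
    if same_place and same_time:
        return InteractionMode.FACE_TO_FACE
    if same_place:
        return InteractionMode.ASYNCHRONOUS
    if same_time:
        return InteractionMode.SYNCHRONOUS_DISTRIBUTED
    return InteractionMode.ASYNCHRONOUS_DISTRIBUTED


if __name__ == "__main__":
    # Remote specialists connected live to an operating theatre.
    print(classify_interaction(same_place=False, same_time=True).value)
```

For instance, specialists in different locations who are connected live to an ongoing procedure fall into the synchronous distributed cell, whereas staff standing in the same operating theatre during surgery interact face-to-face.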

We consider the generation of collaborative immersive environments in the healthcare sector to be of great importance, as this can favour communication between specialists in complex cases or facilitate assistance to non-specialised personnel in extreme cases, among other cases. These communication environments facilitate the interpretation of space and spatial relationships between tissues or instruments since, thanks to immersive devices, users can freely inspect the collaborative environment, transmitting their presence to the rest of the users through their avatar.

In the healthcare XR literature, the study presented by Sánchez-Margallo et al. [15], focused on minimally invasive surgery, describes a mixed reality device worn by the surgeon that transmits real-time images to a screen placed in the operating theatre. This collaborative approach enables the other staff present in the operating theatre to access the virtual information and offer their opinions to the surgeon. However, the latter group is unable to alter the virtual content or generate their own queries. In the work designed for standard reverse shoulder arthroplasty by Gregory et al. [17], the surgeon utilises an equivalent device to the one used in the previous case, but the transmission is performed via Skype software, allowing real-time communication between surgeons from different regions. This project exemplifies a synchronous distributed interaction that necessitates communication channels that are more intricate than those proposed for conventional face-to-face interactions. Furthermore, this method promotes greater collaboration among all users, as they have the ability to contribute to the surgeon’s mixed reality glasses display window. The integration of this technology did not compromise patient safety, but instead enhanced it because the primary surgeon had direct access to the anatomical structures during the surgery and could receive guidance and advice from other specialists.

In the collaborative works presented above, stream-connected users are limited in their interaction, as they cannot freely move or select elements, as previously discussed. Depending on the scale chosen for the virtual world, there are two main modalities: static systems, which uphold an equivalent scalar relationship for all users, and multi-scale virtual environments, where different types of users can acquire distinct perspectives of the virtual world.

Virtual environments with a fixed scale between users enable the maintenance of an equivalent spatial relationship among individuals connected to the experience. This approach is particularly relevant in systems where users need to interact with objects within the real environment, which cannot be manipulated computationally. One healthcare sector scenario could be telementoring, as maintaining reference to the real world at all points of the connection is crucial for the accurate rendering of virtual information. ARTEMIS [

19] is a platform that merges augmented and virtual reality to enable emergency assistance when no expert qualified to conduct the surgery is present. The emergency surgeon in the operating theatre wears a HoloLens headset, which displays the annotations and virtual information provided by the remote expert. The expert surgeon uses a virtual reality headset to visualise a reconstruction of the patient’s body and interact with it using hand tracking. All technical information added by the expert is accurately displayed in the surgeon’s real-world environment thanks to the calibration and tracking systems on both sides of the connection.

Figure 6 shows the on-site and remote user environments and the technological devices needed.

In multi-scale collaborative virtual environments, users maintain disparate spatial relationships. This allows for multiple viewpoints and facilitates a comprehensive understanding of the data being analysed. In these systems, virtual reality typically serves as a platform for users whose scale has been altered with respect to the real world, as the use of immersive helmets with tracking allows for the easy 360-degree visualisation of the virtual environment, completely occluding the surrounding reality. The initial type of interaction encountered is referred to as God-like, as one user possesses an aerial view of the virtual space and interacts directly with their hand from the sky, simulating a deity. In the work introduced by Stafford et al. [

52], known as the first implementation of this technique, a synchronous collaborative navigation aid system is proposed. One user operates a desktop projection system with virtual elements, within an indoor environment. This user can manipulate the virtual elements by pointing or placing them within a virtual rendition of the external world. The second user, situated within the external environment, utilises a mobile augmented reality device to perceive both real and virtual elements, including those produced by the first user. A voice channel within the system promotes network communication between the two users.

Focused on the healthcare sector, the paper presented by Rojas-Muñoz et al. [

20] proposed a collaborative system for synchronous and distributed interaction. This interactive system can be viewed as the amalgamation of two former proposals. The research demonstrates the system’s efficacy in teaching surgical concepts remotely, in particular, the leg fasciotomy procedure. The touchscreen control enables the instructor to view the intervention area through a fixed top-down camera. This allows for direct interaction with the screen to position the instruments correctly or make real-time annotations. The surgeon, in turn, carries an HMD device that employs augmented reality. With the help of depth sensors, it accurately positions all virtual information fed by the instructor in the surgeon’s field of vision. This allows for a high level of interaction between the two parties.

The Giant–Miniature Collaboration interaction mode is presented by Piumsomboon et al. [

53], in which a system utilising augmented and virtual reality in a synchronous collaboration environment is demonstrated. The collaboration involves a participant referred to as “the giant”, who employs an augmented reality HMD device in the real world. This user also carries a 360-degree camera with 6DOF tracking, which allows for unrestricted movement in real space. The 360-degree camera serves as the representation of the second user, “the miniature”, in the real world. The miniature user views the real-time 360-degree video captured by the giant’s camera through a virtual reality HMD device. This setup allows the miniature user to observe in detail the area selected by the giant and the actions performed in the real world.

Although we did not identify any work in the healthcare sector using the Giant–Miniature Collaboration method in our literature search, the interactive basis of this technique can be found in surgical procedures. The da Vinci surgical robot incorporates a stereoscopic camera, which offers a detailed visualisation of the region of concern. The primary console of the system enables the lead surgeon to manoeuvre within the human body like a miniature, to precisely direct the surgical instruments via robotic arms. On occasion, surgical procedures necessitate the use of more instruments than what the surgical robot can support simultaneously. The da Vinci surgical system’s robot-assisted laparoscopic myomectomy technique exemplifies such a case. To execute this procedure, uterine manipulators are utilised to aid the robot-assisted myomectomy [

54]. This instrument must be controlled by an external surgeon who employs the miniature view of the lead surgeon within the area of interest and applies his or her indications. Due to the similarities presented between the interaction of multiple surgeons in this type of process and the Giant–Miniature Collaboration method, we believe that this technique can be a good starting point for the design of collaborative virtual environments focused on areas such as surgical skills training, surgical planning, and telementoring.

As previously observed, users can interact through various viewpoints and perspectives in virtual environments. Communication between users can occur through different means, such as audio channels, environmental annotations, or signage. It is crucial to take into account the users’ point of view when devising the signals that facilitate user communication as their effectiveness may differ. When users share the same perspective during visual communication, hand tracking is the most effective method for comprehending visual cues and gestures. Nevertheless, in situations where users have different viewpoints, implementing hand tracking impedes the understanding of the task at hand by remote users. In this instance, utilising pointers for element pointing or gesturing enhances co-presence levels in remote users while also reducing task execution time [

55].

Finally, annotation techniques are a complementary factor for enhancing communication and interaction between users in virtual environments. They excel in providing spatial information and disseminating instructions, thereby alleviating the cognitive load associated with comprehending them. Magnoramas is a technique that facilitates the creation of hand-drawn annotations in virtual reality. This approach enhances the precision of annotations by enlarging the region of interest in relation to the standard scale throughout the annotation process. Upon the completion of the annotation, the mechanism reverts to the original size of the focal point alongside the annotations implemented in that region. This tool preserves comparable levels of usability and perceived presence when compared to other reference methods [

21]. Cooperative manipulation techniques are a significant factor in reducing task completion time, as multiple users can manipulate objects. Additionally, the use of collaborative virtual environments enhances situational, social, and task performance awareness, which results in increased productivity during the information-seeking and problem-prediction phases of collaborative discussions [

56].
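The magnify-annotate-revert cycle described for Magnoramas can be illustrated with a simple coordinate mapping. This is a minimal sketch that assumes a uniform scaling about the centre of the region of interest; the class `Magnifier` and its methods are hypothetical and are not taken from the cited implementation.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Magnifier:
    centre: Vec3   # centre of the region of interest, in world coordinates
    factor: float  # magnification applied while annotating (assumed > 1)

    def to_magnified(self, point: Vec3) -> Vec3:
        """Scale a world-space point away from the centre for annotation."""
        return tuple(c + self.factor * (p - c) for p, c in zip(point, self.centre))

    def to_world(self, point: Vec3) -> Vec3:
        """Map a point drawn in the magnified view back to the original scale."""
        return tuple(c + (p - c) / self.factor for p, c in zip(point, self.centre))
```

Under this assumption, a stroke drawn in the enlarged view is passed through `to_world` once annotation ends, so a hand deviation of 4 mm at a magnification factor of 4 corresponds to 1 mm at the original scale.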

Table 4 details the collaboration methods used in each work cited above.

6. Case Studies: Immersive Technology Advancements in Surgery

Technological advancements have enabled computers to play a pivotal role in the healthcare sector. They are utilised in numerous areas, including medical image processing and review, data management, treatment, consultation tools, and interconnection systems with various healthcare departments and institutions.

Computer graphics is a specialised field of computer science concerned with the computational generation of visual content and the manipulation of images. This area has become very important in healthcare for the inspection of medical imaging studies from various modalities. This analysis is performed at multiple stages of a healthcare career, from education to professional practice, where it supports the detection and treatment of diseases. The Nextmed solution is based on a similar idea to the Slicer3D viewer, but with a more clinically oriented approach, aiming to be a tool for surgeons. The proprietary software proposes a secure, multi-device system that allows healthcare professionals to store case data on a server, which can then be accessed from multiple locations. This tool allows the automatic visualisation and segmentation of tissues and anatomical structures for each specific case, based on the associated medical image. This expedites the process of obtaining segmented 3D models without requiring the intervention of medical personnel. The acquired models may be viewed through conventional computers, virtual reality devices, or augmented reality devices, unlocking the full potential of the three dimensions [

22].

Figure 7 illustrates the inspection of medical data by health workers using augmented reality.

A noteworthy contribution in this field is presented by Seth et al. [

23], who introduced an augmented reality system designed to support preoperative planning for deep inferior epigastric artery perforator (DIEP) flap surgery in breast reconstruction. The system integrates patient-specific computed tomographic angiography (CTA) data with the real-world surgical environment, enabling surgeons to identify perforators and trace their intramuscular routes with greater accuracy. Preoperative vascular visualisation through such methods has the potential to enhance surgical precision and reduce operative time. This work exemplifies the increasing adoption of augmented reality technologies in surgical workflows, particularly in procedures requiring detailed anatomical insight.

In the field of healthcare education, HoloAnatomy is a notable mixed reality application. This application is centred on facilitating the study of human anatomy for healthcare students via visualisation and examination of anatomical models. Additionally, the platform enables teachers to incorporate hypermedia content on the models, including annotations, labels, and representative images, for students’ comprehension. Ruthberg et al. [

5] compared classical cadaveric dissection, commonly used in healthcare education, with the HoloAnatomy mixed reality app. The findings demonstrate that the mixed reality application effectively decreases the duration of anatomy instruction without compromising the students’ understanding. This platform also facilitates remote teaching via collaborative sessions using a simple infrastructure. This alternative approach enabled the teaching of anatomy to students at Case Western Reserve University (CWRU) during the COVID-19 pandemic, with positive outcomes reported in the study conducted [

24].

With regard to the utilisation of VR technology, the work presented by Ulbrich et al. [

25] analysed the learning curve in oral and maxillofacial surgery novices. The study presents a case of surgical planning using a VR tool versus a desktop tool. Despite the absence of any qualitative difference between the two modalities, VR participants achieved a segmentation speed almost double that of the desktop tool. The VR environment was perceived by participants as more intuitive and less exhausting than the desktop environment. Furthermore, experiences that increase students’ knowledge and confidence in carrying out interventions can be found in the relevant literature. Virtual reality is a viable platform for generating such environments, as it immerses users in the proposed task, allowing them to focus their attention exclusively on it without distractions from the real world. The VR application designed by Pulijala et al. [

26] offers a Le Fort I osteotomy surgery training experience where students can interact with the environment in the first person using virtual reality headsets and hand tracking. The project aims to teach non-technical skills such as cognition or decision-making to students in complex surgeries where resident surgeons’ steps are challenging to assist or visualise in detail. The experience utilises a 360-degree video of the operating theatre to develop a feeling of presence for the student. Furthermore, it integrates 3D models of patient data, instrumentation, and anatomical features, with which the student can directly interact. In addition, an assessment scene is included so that the user can receive real-time feedback on their task performance. The results of the study show higher levels of confidence in facing surgery among users of the VR app, especially first-year students. The app could therefore be an important auxiliary tool in the educational process, bringing students closer to real situations from the early stages of their careers.

By leveraging virtual reality technology, these proposals present cost-effective simulators with superior accessibility and realistic surgical outcomes. This technology is applicable to acquiring surgical skills at all levels, including medical students, residents, and staff surgeons. Employing immersive virtual environments to acquire skills demonstrates higher levels of proficiency than traditional techniques. The results are reflected in reduced task completion time, improved scores, and greater accuracy in implant positioning. Furthermore, the immersive simulation models have garnered positive evaluations from users [

49].

One of the healthcare areas in which the use of immersive simulators is becoming increasingly popular is robotic surgery. The use of virtual reality simulators in the acquisition of robot-assisted surgery skills has many possibilities, as both systems use a native stereoscopic rendering system to provide a greater sense of depth. These platforms provide training programs and exercises designed to assess users’ skills in robotic surgery. Furthermore, the skills acquired through using these systems are comparable to those obtained from dry lab simulation [

49]. Nevertheless, the presence of these simulators in the market is currently low and no base curriculum has been established for developing content on this platform.

In the surgical theatre, an interdisciplinary team of specialised staff from different areas must work together to perform the operation correctly. It is therefore worthwhile to create virtual simulators that facilitate collective training in the theatre, which in turn fosters communication and mutual comprehension among the various profiles. The work introduced by Chheang et al. [

27] outlines a laparoscopic surgery scenario, which replicates the operation phases, encouraging communication and action protocols within the operating theatre’s distinct roles. This proposal has the potential to generate elevated levels of exhilaration and social presence amongst users, illustrating a promising tool for the future. As illustrated in

Figure 8, there is a clear visual distinction between the virtual environment and the real world. The use of 3D models enables users to recognise the positioning of their peers in the virtual environment, while the VR HMD completely isolates them from the real world.

Following on from previous research, Chheang et al. [

28] presented an immersive collaborative environment designed for use in laparoscopic surgeries. This system introduces Simball joysticks as controllers to provide a more realistic experience in line with the instruments used in these surgeries. This platform facilitates cooperation between the main surgeon and the camera assistant whilst simulating the operation on a 3D model generated from the medical imaging of the case.

So far we have examined augmented and virtual reality works that enhance the skills and knowledge of healthcare professionals. Nevertheless, these technologies can also be used for everyday hospital tasks, such as preoperative planning. The preoperative phase of surgery involves an analysis of the patient’s test findings to identify the optimal course of action, which is critical in intricate cases. As discussed previously, there are presently software tools capable of conducting quantitative analyses of medical images and producing segmented 3D models of a particular study. Digital 3D models provide significant visualisation options, including the capability to modify their dimensions or rendering modes (such as colours, transparency, and visibility) through computer graphics, thereby aiding in the examination of the relevant anatomical structures. The study conducted by Huettl et al. [

29], which focused on liver reconstruction surgeries, reveals the preferences of various healthcare professionals such as students, residents, and specialist doctors towards virtual reality applications compared to 3D printing or PDF for examining 3D reconstructions before surgery.

Furthermore, the stereoscopic rendering ability of virtual and augmented reality devices plays a crucial role in enhancing users’ perception of spatial relationships, as well as the depth and volume of the structures. This feature is essential in tumour detection and study as it aids in defining safety margins and tumour resection. Fick et al. [

30] presented a study that analysed the spatial understanding of brain tumours using various methods: the traditional magnetic resonance imaging study, 3D models presented on conventional screens, and 3D models in mixed reality. To this end, each participant was asked to place a virtual tumour inside a virtual head at the same location as the original tumour. Mixed reality yielded lower levels of deviation in position, volume, and rotation. Additionally, the ease of familiarisation with and learning of this technology is noteworthy, as none of the participants had previous experience with it. In contrast, those using magnetic resonance imaging demonstrated a high cognitive load during the tasks.

These tools may be utilised to enable effective communication among healthcare professionals in complex cases, following the guidelines of collaborative systems. Such systems provide versatile options that can be tailored to suit specific needs. Kumar et al. [

31] introduced a face-to-face collaborative system using mixed reality devices, in which several surgeons can jointly inspect a 3D model of the case for laparoscopic liver and heart surgery. This application includes tools for model transformation, marker positioning, 2D medical image viewing, and cutting planes. The surgeons then used this application during surgery as a road map, relying on the annotations previously applied to the 3D model. In this case, all users connected to the same session can visualise the virtual 3D model in the same real-world location, as illustrated in

Figure 9. Javaheri et al. [

32] introduced a wearable AR assistance system for pancreatic surgery. The system combines the HoloLens device, worn by surgeons, with AR markers placed in the surgical area. Multiple surgeons can independently view virtual information in real time. Its effectiveness in preoperative planning and intraoperative vascular identification positions it as a valuable tool for pancreatic surgery and a potential educational resource for future surgical residents.

Chheang et al. [

33] presented a collaborative surgical planner specialised in the approach to hepatic tumours. This system is designed for resection planning between specialist surgeons in-person or remotely. Its tools allow for the virtual definition and adjustment of resections, using both 3D models and patient medical imaging. In addition, the platform integrates a real-time visual risk map for confidence margins around the tumour. In this system, all users use virtual reality HMD devices, permitting the virtual representation of the transformation of each user’s head and hands in real-time.

The work mentioned above facilitates surgeon-to-surgeon interaction in collaborative virtual environments through the use of simple avatars that employ representative geometries, including the geometry of the HMD employed. In contrast, the use of human-like avatars in virtual environments generally increases the level of social presence in users, especially when they are controlled directly by the user rather than by agents implemented by scripts [

57]. Bashkanov et al. [

34] presented an immersive collaborative system focused on liver surgery planning where realistic human-like avatars are used for the users. In this instance, inverse kinematics is applied to depict the movements of the lifelike avatar using data collected from the HMD sensors and virtual reality device controllers. Each controller reflects the user’s actual hand placement as they hold the device, with wrist, elbow, and shoulder joints automatically adjusted using inverse kinematics. The design of the virtual environment replicates a meeting room in which surgeons are seated at the area of interest, in which there is a 2D medical image of the case and a 3D model reconstructed from the previous study. Users can interact with the virtual information elements and with their peers. Additionally, a voice channel enables communication between them. The findings of the study reveal that the participants experienced high levels of immersion. However, there were occasional anomalous avatar positions caused by inverse kinematics technique errors. Finally, the work by Palma et al. [

35] is of particular interest. In this study, the authors proposed a collaborative virtual environment for robot-assisted surgery planning, introducing a cross-platform system. This collaborative environment allows for a connection to the inspection room with other surgeons via PC, tablet, VR, and XR, generating a specific interaction system for each platform to obtain its maximum potential. The authors sought to make access to the tool more flexible, adapting it to the surgeons’ technological skills and resources in each case. It is also noteworthy to mention the trocar position tool (

Figure 10), in which surgeons can simulate the view and range of the trocar settings, including trocar configuration and patient position.
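The inverse kinematics step mentioned for the human-like avatars of Bashkanov et al. can be illustrated with the textbook two-bone solution. The sketch below is a planar simplification under stated assumptions (shoulder at the origin, a known wrist target from the controller, fixed bone lengths); it is not the authors’ implementation, and the function names are hypothetical.

```python
import math


def solve_arm_ik(target_x, target_y, upper_len, fore_len):
    """Return (shoulder_angle, elbow_bend) in radians for a planar two-bone arm.

    elbow_bend is 0 when the arm is fully extended.
    """
    dist = math.hypot(target_x, target_y)
    # Clamp unreachable targets to the nearest reachable distance.
    dist = max(abs(upper_len - fore_len), min(upper_len + fore_len, dist))
    # Law of cosines gives the elbow bend from the shoulder-to-wrist distance.
    cos_bend = (dist**2 - upper_len**2 - fore_len**2) / (2 * upper_len * fore_len)
    elbow_bend = math.acos(max(-1.0, min(1.0, cos_bend)))
    # Rotate the shoulder so the bent arm points at the target.
    shoulder = math.atan2(target_y, target_x) - math.atan2(
        fore_len * math.sin(elbow_bend),
        upper_len + fore_len * math.cos(elbow_bend),
    )
    return shoulder, elbow_bend


def wrist_position(shoulder, elbow_bend, upper_len, fore_len):
    """Forward kinematics, used here only to check the solution."""
    elbow_x = upper_len * math.cos(shoulder)
    elbow_y = upper_len * math.sin(shoulder)
    wrist_x = elbow_x + fore_len * math.cos(shoulder + elbow_bend)
    wrist_y = elbow_y + fore_len * math.sin(shoulder + elbow_bend)
    return wrist_x, wrist_y
```

Note that the same wrist position is also reachable with the elbow bent in the opposite direction (negating `elbow_bend` and adjusting the shoulder accordingly). Choosing between such solutions from hand and head data alone is one plausible source of implausible avatar poses, although the source does not state the cause of the anomalies it reports.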

The analysis of these case studies reveals several overarching trends and insights:

Immersive technologies are applied across various surgical contexts, from planning and training to intraoperative assistance;

VR is especially prominent in education and simulation, while AR and MR dominate in real-time surgical guidance and preoperative visualisation;

Several systems are designed to support collaborative and remote interactions, aligning with the rising demand for telementoring and distributed surgical planning workflows;

Collaborative environments are increasingly used to foster communication among surgical teams and across medical specialties;

Results across pilot studies are promising; however, as most of the evidence comes from pilot studies, broader clinical integration still requires further validation, with standardisation emerging as a key step toward widespread adoption.

7. Conclusions and Future Work

In this review, we evaluated healthcare applications in the virtuality continuum. To design and implement such projects, we must consider the requirements of all involved areas. Processing data according to the DICOM standard is a crucial aspect. Its use is essential for accurately processing medical images in immersive visualisation systems. The implementation of this technology will allow for the creation of systems that can establish communication channels with healthcare centre PACS services. This will be especially crucial for systems that utilise 3D models segmented from medical images, as it will simplify data transformation within the software pipeline.
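As an illustration of why DICOM metadata matters for immersive visualisation, the sketch below maps a pixel index to patient coordinates in millimetres using the Image Position (Patient), Image Orientation (Patient), and Pixel Spacing attributes of the DICOM Image Plane Module. The function name and the plain-tuple inputs are assumptions made for this example; in practice the values would be read from the image header with a DICOM library.

```python
def pixel_to_patient(col, row, image_position, image_orientation, pixel_spacing):
    """Map a (column, row) pixel index to patient-space coordinates in mm.

    image_position:    (x, y, z) of the centre of the first pixel, tag (0020,0032)
    image_orientation: six direction cosines, tag (0020,0037)
    pixel_spacing:     (row spacing, column spacing) in mm, tag (0028,0030)
    """
    row_dir = image_orientation[:3]  # direction of increasing column index
    col_dir = image_orientation[3:]  # direction of increasing row index
    row_spacing, col_spacing = pixel_spacing
    return tuple(
        image_position[k]
        + row_dir[k] * col_spacing * col
        + col_dir[k] * row_spacing * row
        for k in range(3)
    )
```

Applying this mapping to every slice of a study places the segmented 3D model in a consistent patient coordinate frame, which is what allows it to be exchanged between the imaging archive and an immersive viewer without manual realignment.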

Furthermore, it is imperative to thoroughly comprehend the advantages and drawbacks of every immersive technology and available device to opt for the most suitable platform that aligns with the set goal. As previously demonstrated, the assortment of devices and projections systems is extensive, encompassing varying economic and computational capacities. This diversity of devices presents a broad range of possibilities concerning developing novel systems and modifying them to encourage accessibility. It is deemed that the adoption of systems that are accessible and easy to maintain in medical settings can promote the integration of such tools in the daily operations of healthcare personnel.

The genesis of immersive technologies dates back to the 1960s; nonetheless, the general public has not embraced them fully, and the interaction patterns associated with these technologies remain non-standardised. It is therefore important that future designs apply established interaction techniques and thoroughly evaluate the usability and cognitive load of the interactive system. Such evaluations will promote the standardisation of interactive methods and metaphors that aid user familiarisation with these technologies, thereby reducing the cognitive load associated with learning such systems.

After an analysis of the relevant literature, we have identified three main areas of focus for future work.

Surgical planning tools. These tools have been the subject of numerous recent publications, which evince their efficacy in facilitating surgical case preparation. Presently, a variety of planners are available, differing in the type of data presented (2D medical images or segmented 3D models of the case), the tools offered, the type of surgery they specialise in, and their collaborative features. All of the proposals demonstrate encouraging outcomes; nevertheless, their routine use or integration in healthcare facilities still appears remote. We contend that the introduction of a flexible platform capable of aligning various surgical applications, such as those proposed by Kumar et al. [31], Javaheri et al. [32], and Chheang et al. [33], will encourage the adoption of immersive technology in healthcare centres. The integration of a reliable and scalable system with the hospital’s PACS is of utmost importance to facilitate its use by healthcare staff. The data flow between both platforms could then be automated, as is already the case in traditional 2D medical image visualisation programmes.

Robot-assisted surgery simulator. In recent years, there have been significant advancements in this technology, particularly immersive virtual reality simulators that are specifically designed for robotic surgery, which have proven to be particularly effective in improving procedure times as well as task completion and accuracy. These low-cost systems also have the added benefit of generating user feedback, thereby providing an objective assessment of the user’s proficiency in completing complex surgical procedures and potentially serving as a valuable tool for certification exams. Nevertheless, the lack of a comprehensive curriculum for these platforms presents a notable challenge. The closed-source Intuitive Learning platform provides a series of exercises for developing competencies necessary for the da Vinci assisted-surgery robot. This solution allows for the tracking of trainee progress, the evaluation of metrics for individual exercises, and the facilitation of adjustments to robotic training plans based on data [

58]. This tool serves as a feasibility test for a curriculum to develop robot-assisted surgery skills using immersive virtual reality simulators. Additionally, these platforms can introduce new techniques to capture surgical residents’ attention and enhance their interest in the topic. In the study designed by Kerfoot and Kissane [

59], the authors highlight how incorporating game mechanics into simulator education significantly increased the usage of their da Vinci Skills Simulator.

Enhancing collaboration among healthcare professionals. Collaborative systems can enhance communication and promote a sense of co-presence among healthcare workers, as demonstrated by previous research. Establishing collaborative environments has significant implications in various sectors, including education, surgical planning, and telementoring. Such environments aid knowledge transfer between surgeons. Immersive systems can serve as a substitute for video call systems. They enable 3D data analysis and provide greater flexibility in data transformation and visualisation. This point is relevant for future work, as it complements the two points discussed earlier. The development of systems that enable both individual and collaborative virtual rooms can facilitate technology adoption, as the collaborative modality does not require learning a new interaction system. Furthermore, we consider it important to focus future work on the development of collaborative systems with distributed asynchronous interaction. Unlike synchronous collaboration, where all participants must be present at the same time, asynchronous interaction allows healthcare professionals to contribute, review, and modify information at their convenience. This flexibility removes constraints related to time zones and availability, making it especially valuable in global surgical collaboration, remote case reviews, and continuous medical training. A key advantage of asynchronous collaboration is its potential for data unification through integration with PACS and other healthcare data systems. Physicians will be able to examine general patient data within immersive collaborative applications while also accessing their own annotations and those made by other users across different platforms. 
This cross-platform accessibility enables professionals to take advantage of the unique benefits of each system—such as the detailed imaging capabilities of PACS, the real-time interactivity of immersive applications, and the annotation tools of external platforms—while ensuring that all relevant data remain centralised and easily accessible. However, developing such systems presents several technical challenges, including ensuring data persistence and version control to maintain a structured history of annotations and modifications, enabling seamless interoperability and integration with existing healthcare data systems, and implementing strong security and access control mechanisms to protect sensitive patient information while allowing controlled collaboration among authorised users.
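The persistence, version control, and access control challenges listed above can be made concrete with a small data-structure sketch. It assumes an append-only revision history per annotation with an optimistic version check; the classes `Revision` and `AnnotationHistory` are hypothetical and do not describe any existing platform.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Revision:
    version: int
    author: str
    text: str
    timestamp: float


@dataclass
class AnnotationHistory:
    authorised: frozenset            # users allowed to edit this annotation
    revisions: list = field(default_factory=list)

    def current_version(self) -> int:
        return self.revisions[-1].version if self.revisions else 0

    def commit(self, author, text, timestamp, base_version) -> Revision:
        """Append a revision; reject unauthorised users and stale edits."""
        if author not in self.authorised:
            raise PermissionError(f"{author} may not edit this annotation")
        if base_version != self.current_version():
            raise ValueError("stale edit: annotation changed since it was read")
        revision = Revision(base_version + 1, author, text, timestamp)
        self.revisions.append(revision)
        return revision

    def changes_since(self, version: int) -> list:
        """Revisions a returning user has not yet seen."""
        return [r for r in self.revisions if r.version > version]
```

Because earlier revisions are never overwritten, a professional who reconnects hours later can call `changes_since` with the last version they saw and review what colleagues modified in the meantime, which is the core of the asynchronous workflow discussed here.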