Validation of a Saliency Map for Assessing Image Quality in Nuclear Medicine: Experimental Study Outcomes


Simple Summary

Abstract

1. Introduction

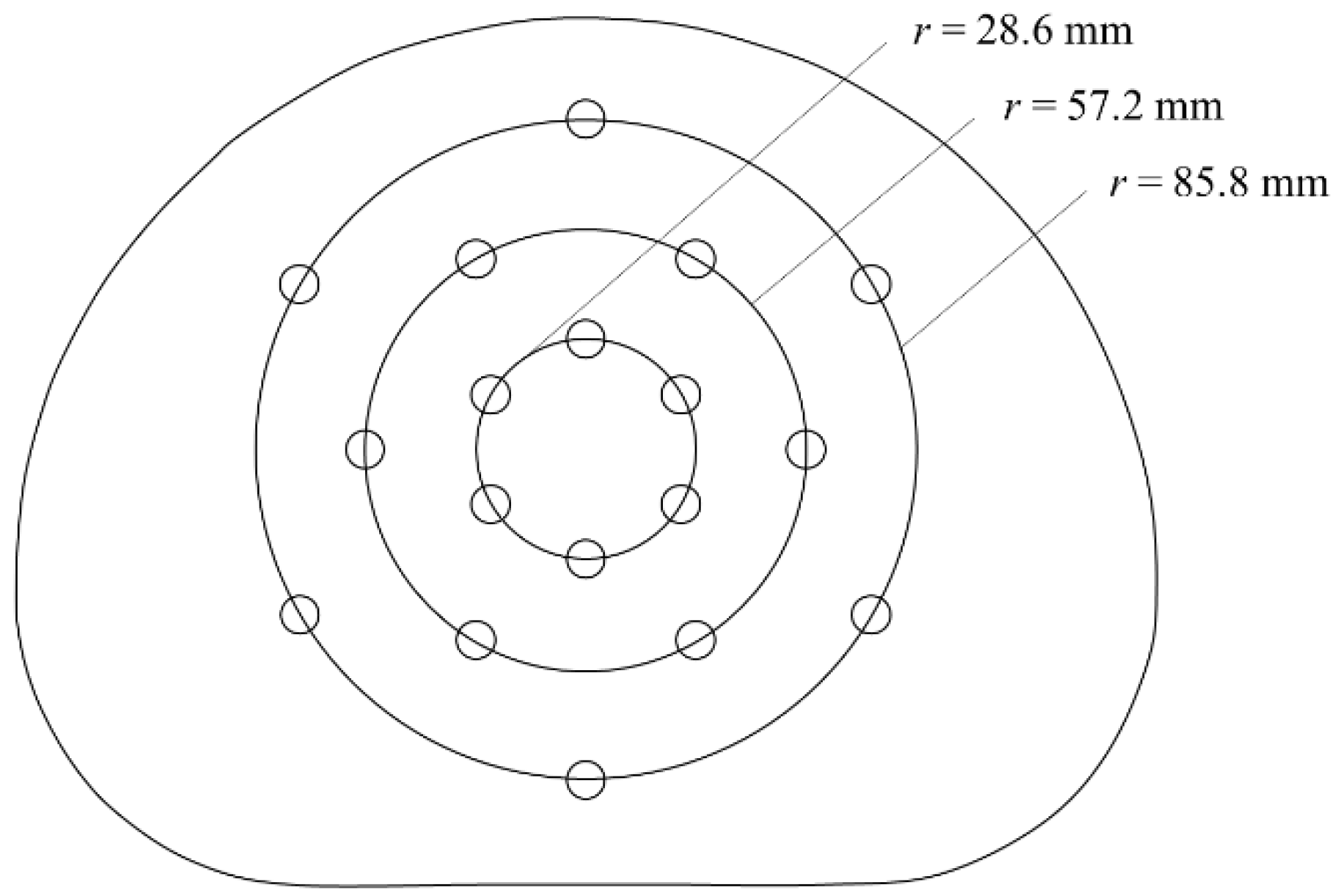

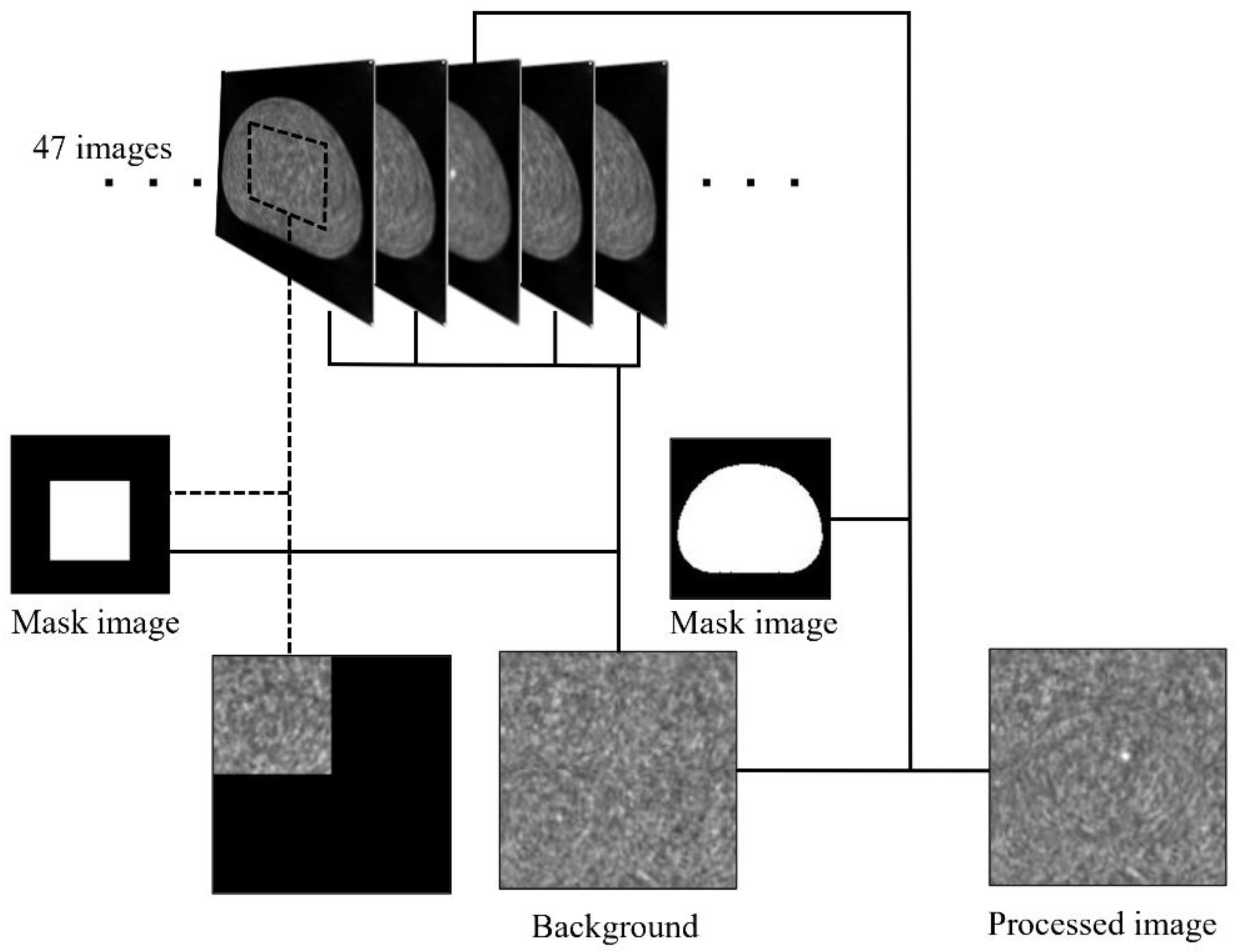

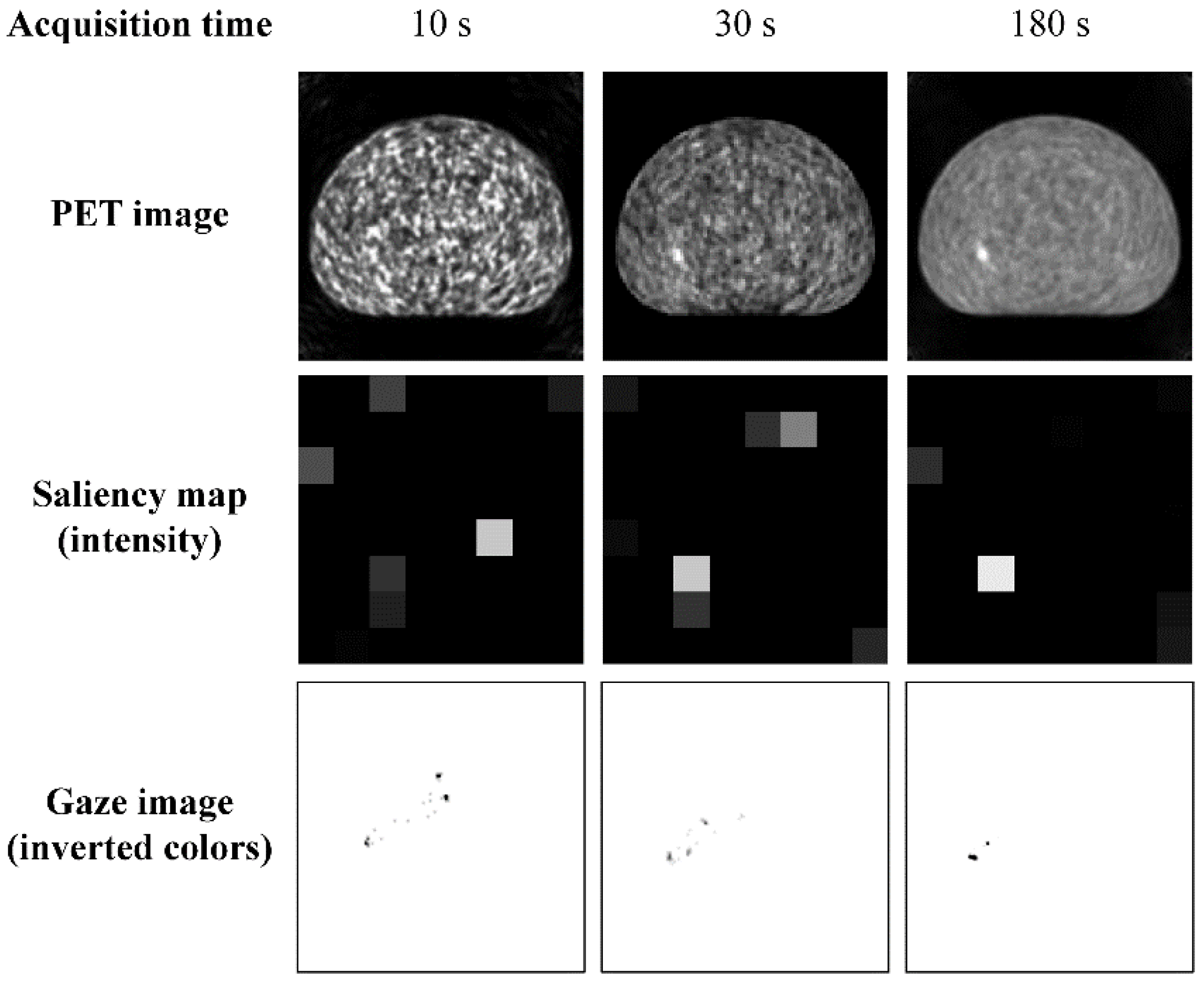

2. Materials and Methods

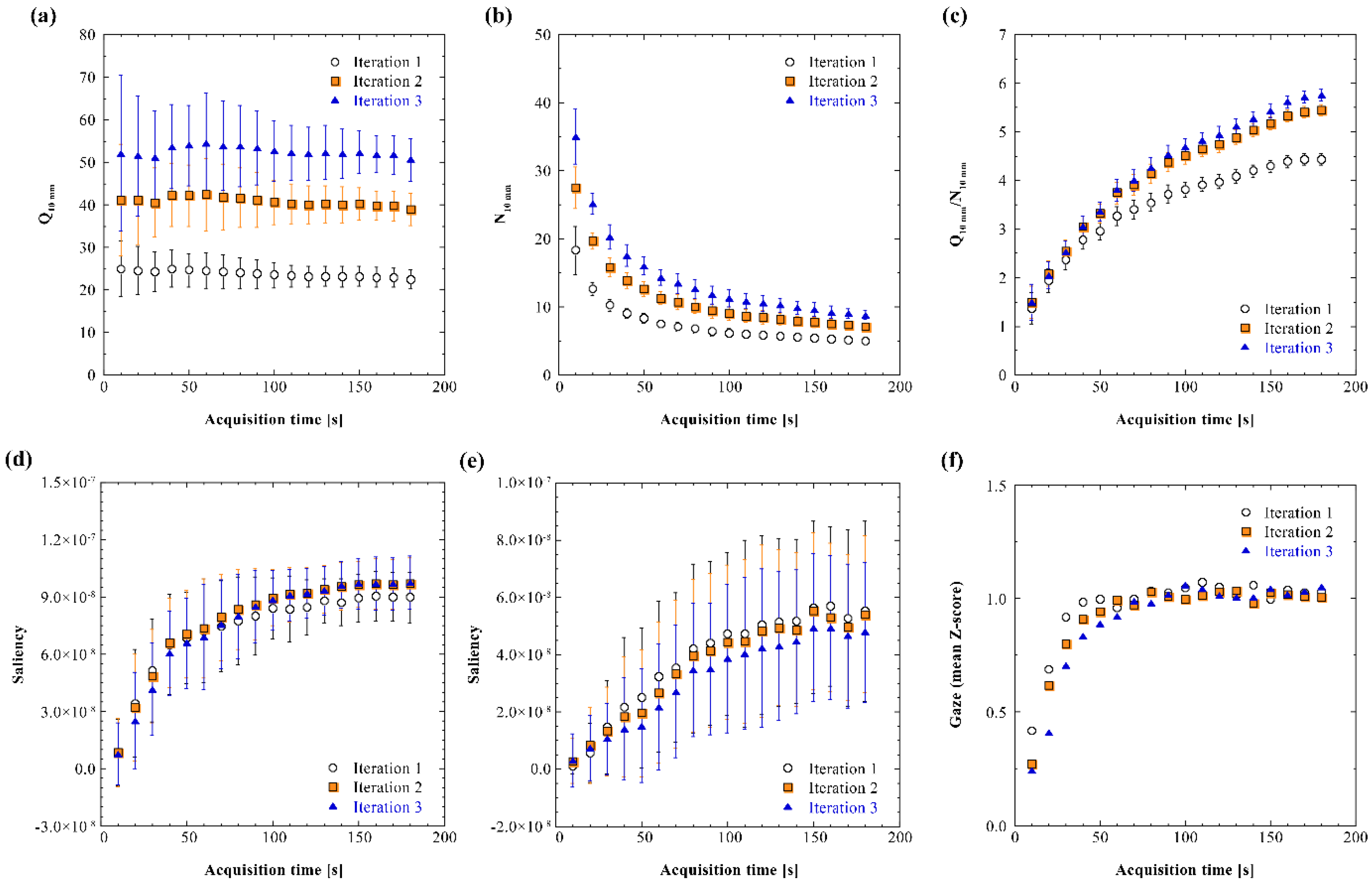

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correlation coefficients (95% confidence intervals) between indicators, by iteration; n.s., not significant.

| Iteration | Indicator | Saliency (Intensity) | Saliency (Flicker) | Q10 mm | N10 mm | Q10 mm/N10 mm |
|---|---|---|---|---|---|---|
| 1 | Gaze | 0.942 (0.849, 0.979) | 0.802 (0.537, 0.923) | −0.599 (−0.833, −0.184) | −0.959 (−0.985, −0.890) | 0.837 (0.608, 0.938) |
| | Saliency (Intensity) | | 0.947 (0.860, 0.980) | −0.773 (−0.911, −0.478) | −0.994 (−0.998, −0.982) | 0.967 (0.912, 0.988) |
| | Saliency (Flicker) | | | −0.888 (−0.958, −0.719) | −0.920 (−0.970, −0.795) | 0.991 (0.976, 0.997) |
| | Q10 mm | | | | 0.757 (0.449, 0.904) | −0.883 (−0.956, −0.708) |
| | N10 mm | | | | | −0.947 (−0.981, −0.861) |
| 2 | Gaze | 0.942 (0.847, 0.978) | 0.790 (0.511, 0.918) | −0.195 (n.s.) (−0.607, 0.299) | −0.967 (−0.988, −0.912) | 0.835 (0.603, 0.937) |
| | Saliency (Intensity) | | 0.940 (0.844, 0.978) | −0.436 (n.s.) (−0.750, 0.039) | −0.991 (−0.997, −0.976) | 0.966 (0.909, 0.987) |
| | Saliency (Flicker) | | | −0.645 (−0.855, −0.255) | −0.908 (−0.966, −0.766) | 0.985 (0.959, 0.994) |
| | Q10 mm | | | | 0.410 (n.s.) (−0.071, 0.736) | −0.609 (−0.838, −0.199) |
| | N10 mm | | | | | −0.943 (−0.979, −0.849) |
| 3 | Gaze | 0.964 (0.905, 0.987) | 0.835 (0.603, 0.937) | 0.157 (n.s.) (−0.334, 0.582) | −0.978 (−0.992, −0.940) | 0.890 (0.725, 0.959) |
| | Saliency (Intensity) | | 0.942 (0.848, 0.979) | −0.010 (n.s.) (−0.474, 0.459) | −0.982 (−0.993, −0.950) | 0.974 (0.929, 0.990) |
| | Saliency (Flicker) | | | −0.244 (n.s.) (−0.638, 0.252) | −0.888 (−0.958, −0.720) | 0.983 (0.955, 0.994) |
| | Q10 mm | | | | −0.022 (n.s.) (−0.484, 0.449) | −0.173 (n.s.) (−0.592, 0.320) |
| | N10 mm | | | | | −0.936 (−0.976, −0.834) |
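Each cell in the correlation table pairs a coefficient with a 95% confidence interval and is flagged n.s. when the interval is not clear of zero. The statistical procedure is not spelled out here, so the following is only a minimal sketch, assuming Pearson's r with a Fisher z-transform interval (the approach R's `cor.test` uses for Pearson correlation). The function names `pearson_r`, `fisher_ci`, and `table_cell` are hypothetical, and the example data are invented, not taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two paired samples."""
    n = len(x)
    if n != len(y) or n < 4:
        raise ValueError("need two paired samples of equal length, n >= 4")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, z_crit=1.959963984540054):
    """Approximate 95% CI for r: symmetric on the z = atanh(r) scale, SE = 1/sqrt(n - 3)."""
    if abs(r) >= 1.0:
        return r, r  # atanh is infinite at |r| = 1, so the interval degenerates
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


def table_cell(x, y):
    """Format r and its CI in the table's style; mark n.s. when the CI spans zero."""
    r = pearson_r(x, y)
    lo, hi = fisher_ci(r, len(x))
    ns = " (n.s.)" if lo <= 0.0 <= hi else ""
    return f"{r:.3f}{ns} ({lo:.3f}, {hi:.3f})"


if __name__ == "__main__":
    # Hypothetical paired scores for illustration only, not data from the study
    gaze = [1.0, 2.0, 3.0, 4.0, 5.0]
    quality = [2.0, 4.0, 5.0, 4.0, 5.0]
    print(table_cell(gaze, quality))
```

Because the interval is symmetric on the z scale, it is asymmetric once mapped back to r, which matches the pattern in the table (for example 0.942 (0.849, 0.979)). Using "CI spans zero" as the n.s. criterion is only approximately equivalent to a p ≥ 0.05 test; R reports its p-value from a t statistic, so the two can disagree for coefficients right at the boundary.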

Correlation coefficients (95% confidence intervals) between indicators; n.s., not significant.

| Indicator | Saliency (Intensity) | Q10 mm | N10 mm | Q10 mm/N10 mm |
|---|---|---|---|---|
| Gaze | 0.848 (0.630, 0.942) | 0.516 (0.065, 0.792) | −0.801 (−0.923, −0.534) | 0.832 (0.597, 0.935) |
| Saliency (Intensity) | | 0.352 (n.s.) (−0.137, 0.703) | −0.710 (−0.884, −0.364) | 0.910 (0.771, 0.966) |
| Q10 mm | | | −0.822 (−0.932, −0.577) | 0.517 (0.066, 0.793) |
| N10 mm | | | | −0.870 (−0.951, −0.679) |
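The tables correlate the saliency and gaze indicators with Q10 mm, N10 mm, and their ratio. The sketch below assumes, without confirmation from the text here, that Q10 mm is the percent hot-sphere contrast and N10 mm the percent background variability for the 10 mm sphere, following the NEMA NU 2-style definitions used in the Japanese FDG-PET/CT phantom guideline. The helper names and ROI values are hypothetical, and the study's actual ROI placement and averaging may differ.

```python
import statistics


def hot_sphere_contrast(c_hot, c_bkg, activity_ratio):
    """Percent contrast of a hot sphere (assumed NEMA NU 2-style definition).

    c_hot: mean count in the sphere ROI; c_bkg: mean background count;
    activity_ratio: true sphere-to-background activity ratio (a_H / a_B).
    """
    return (c_hot / c_bkg - 1.0) / (activity_ratio - 1.0) * 100.0


def background_variability(bkg_roi_means):
    """Percent background variability: sample SD of background ROI means over their mean."""
    return statistics.stdev(bkg_roi_means) / statistics.fmean(bkg_roi_means) * 100.0


def contrast_to_noise(c_hot, bkg_roi_means, activity_ratio):
    """Hypothetical helper returning (Q, N, Q/N) for one sphere size."""
    c_bkg = statistics.fmean(bkg_roi_means)
    q = hot_sphere_contrast(c_hot, c_bkg, activity_ratio)
    n = background_variability(bkg_roi_means)
    return q, n, q / n


if __name__ == "__main__":
    # Hypothetical ROI values for a 10 mm sphere, not measurements from the study
    q, n, ratio = contrast_to_noise(30.0, [9.0, 10.0, 11.0, 10.0], 4.0)
    print(f"Q = {q:.1f}%, N = {n:.1f}%, Q/N = {ratio:.2f}")
```

Under these assumptions a higher Q/N means a sphere that stands out more clearly from background noise, which is consistent with the positive correlations between Q10 mm/N10 mm and the gaze and saliency indicators in the tables.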

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hosokawa, S.; Takahashi, Y.; Inoue, K.; Nagasawa, C.; Watanabe, Y.; Yamamoto, H.; Fukushi, M. Validation of a Saliency Map for Assessing Image Quality in Nuclear Medicine: Experimental Study Outcomes. Radiation 2022, 2, 248-258. https://doi.org/10.3390/radiation2030018
