1. Introduction

Research has shown that the majority of caries lesions are concentrated in socioeconomically disadvantaged populations [1]. As a dentist working in a community dental clinic in the United States of America, a high-income nation lacking universal dental care coverage [2], the author often observes this oral health disparity. Although the clinic is located only an hour’s drive from a large city center, there are few local providers for this subgroup. As a result, the author treats a disproportionate number of patients with rampant caries. A critical part of their dental care is the quick and accurate radiographic diagnosis of caries by the dentist. In practice, this does not always happen, because accurately diagnosing such large numbers of lesions on radiographs can be slow. With advances in artificial intelligence (AI), the computer-assisted detection of caries on bitewing radiographs (BWRs) could help the dentist with this crucial task.

The caries lesion, which results from the net demineralization of tooth structure, is the most common sign of dental caries disease [3]. A 2017 study estimated that 2.3 billion people worldwide had caries of permanent teeth and 532 million had caries of deciduous teeth [4]. In the USA, an estimated one in five adults has untreated caries, and this burden falls disproportionately on patients of lower socioeconomic status [5]. In children, dental caries is the most common chronic disease, more common than asthma and hay fever [6]. If left untreated in children, dental caries can cause pain and infection, leading to problems with eating, speaking, and learning [7]. In advanced stages, severe dental caries can cause an abscess, potentially resulting in chronic systemic infection or adverse growth patterns [8]. Therefore, the diagnosis, prevention, and treatment of caries are important to the health of dental patients, especially in high-risk subgroups.

The BWR is currently the gold standard for detecting proximal caries lesions [9,10]. However, the interpretation of BWRs can still be subjective for many reasons [11]. Focal spot size, film composition, movement, and target-to-object and object-to-receptor distances and alignment can all affect density and contrast, as well as sharpness, magnification, and distortion [12]. Uneven exposure, sensor variability, and natural variations in the density and thickness of normal dentition can create areas of confusion by producing dark regions, even in healthy teeth [13]. Other factors, such as radiographic quality, viewing conditions, the interpreter’s experience and expectations, and examination time, can cause further discrepancies in caries detection [14]. One study of the accuracy of digital bitewings in detecting proximal caries in extracted teeth found that two oral and maxillofacial radiologists with six years of clinical experience had detection sensitivities of 71.9–74.1% [15]. Thus, even among specialists working under controlled conditions, the gold standard can be inexact.

One way to improve caries detection among dentists is to use AI to pre-identify areas of concern. Indeed, multiple studies have shown that AI can detect caries on BWRs with high precision and sensitivity, in some cases surpassing experts [16,17,18,19,20]. Companies such as Pearl AI, Dentrix (VideaHealth), and Carestream already offer software or services that help dentists diagnose radiographic caries using AI. However, the adoption of these proprietary tools is limited by many factors, including their novelty. This novelty leaves room for the author to envision a tool suited to diagnosing caries in a community clinic setting, where the dentist’s caries diagnosis can be demanding. Such a diagnostic aid would need to be readily accessible, easy to use, and fast. The author reasoned that an AI app running on a mobile phone would satisfy these requirements; however, no such app currently exists.

To address this gap, the aim of this pilot study was to implement and assess the performance of an experimental AI mobile phone app in the real-time detection of caries lesions on BWRs with the use of the back-facing mobile phone video camera. By doing so, the author hopes to put the vast potential of AI for improving patient care quite literally into dentists’ hands.

3. Materials and Methods

3.1. Ethics

The BRANY IRB (File #23-12-406-1490) determined that this study was exempt from IRB review.

3.2. Study Design

The author obtained 190 radiographic images from the Internet to train and validate an EfficientDet-Lite1 (EDL1, Google, Mountain View, CA, USA) artificial neural network (ANN). The trained model was deployed on a Google Pixel 6 mobile phone (GP6) to detect caries on BWRs displayed on a laptop monitor. To measure the performance of the AI mobile phone app, the author used 10 additional Internet BWRs containing 40 caries lesions.

Figure 1 summarizes the overall study design.

3.3. Data Acquisition and Annotation

The author used the Google, Bing, and Yahoo search engines to obtain 190 radiographic images from the Internet, collectively containing 620 caries lesions, for training and validation. After entering terms such as “bitewing and caries” or “dental X-rays and caries” into the search bar, the author selected the “Image” button to view the results graphically. To be included in the study, an image had to contain at least one clinically treatable and distinctly visible caries lesion. If there was doubt, the lesion was not classified as caries; exclusion was thus based primarily on whether a lesion was “clinically treatable and distinctly visible”. For example, applying these two criteria excluded incipient caries lesions from the training dataset. In addition, because the AI model downscaled all images to 384 × 384 pixels before making an inference, the author judged that the resulting reduction in the size of incipient lesions would hinder training.

The selected images were primarily BWRs but also included some periapical radiographs and magnified sections of panoramic X-rays. All images were converted to JPEG, irrespective of source. Image file sizes ranged from 7 KB to 735 KB (mean 79 KB). Some images were partial radiographs, and some contained artifacts such as text, lines, circles, and arrows. These artifacts were not annotated, so they were treated as background rather than as caries during training.

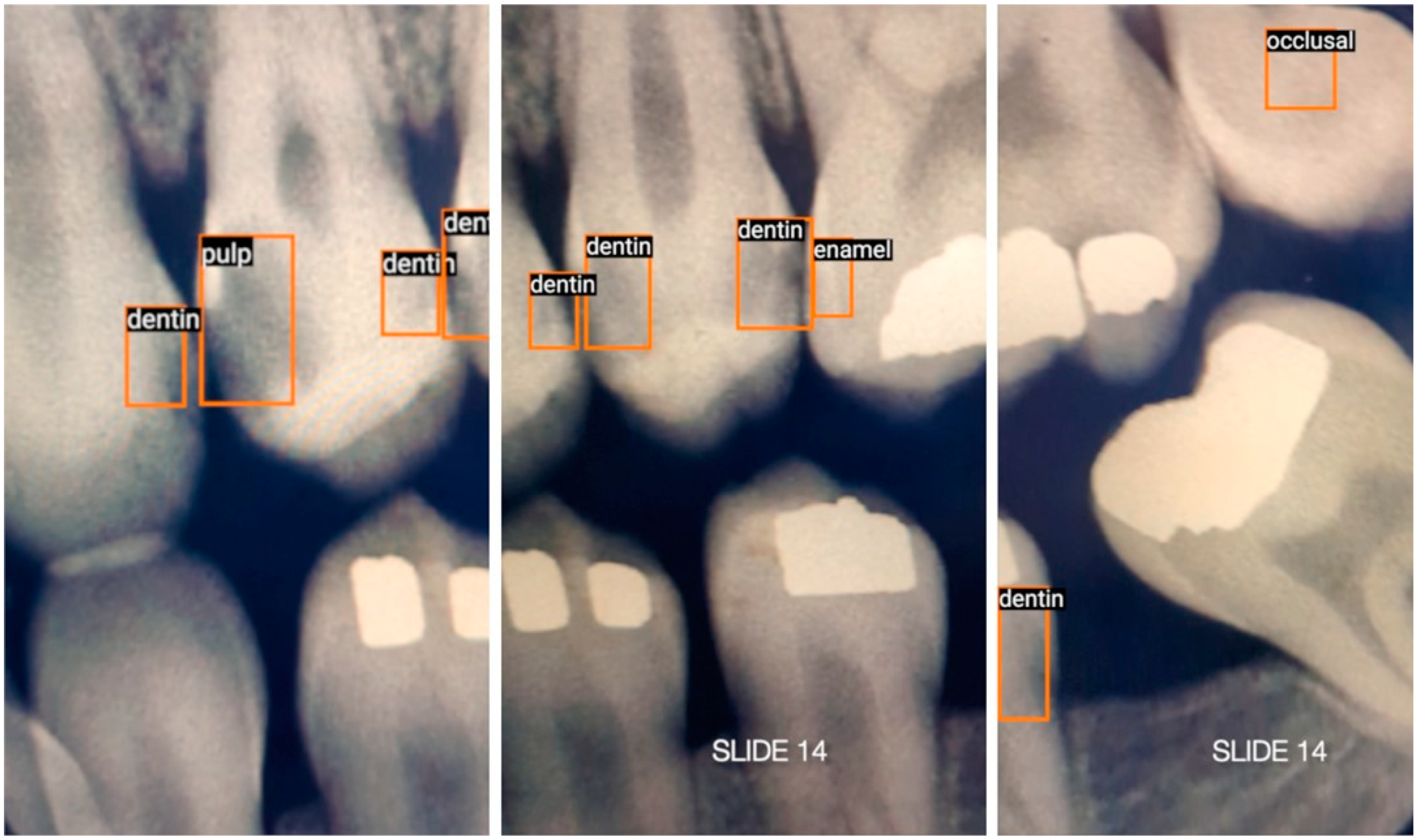

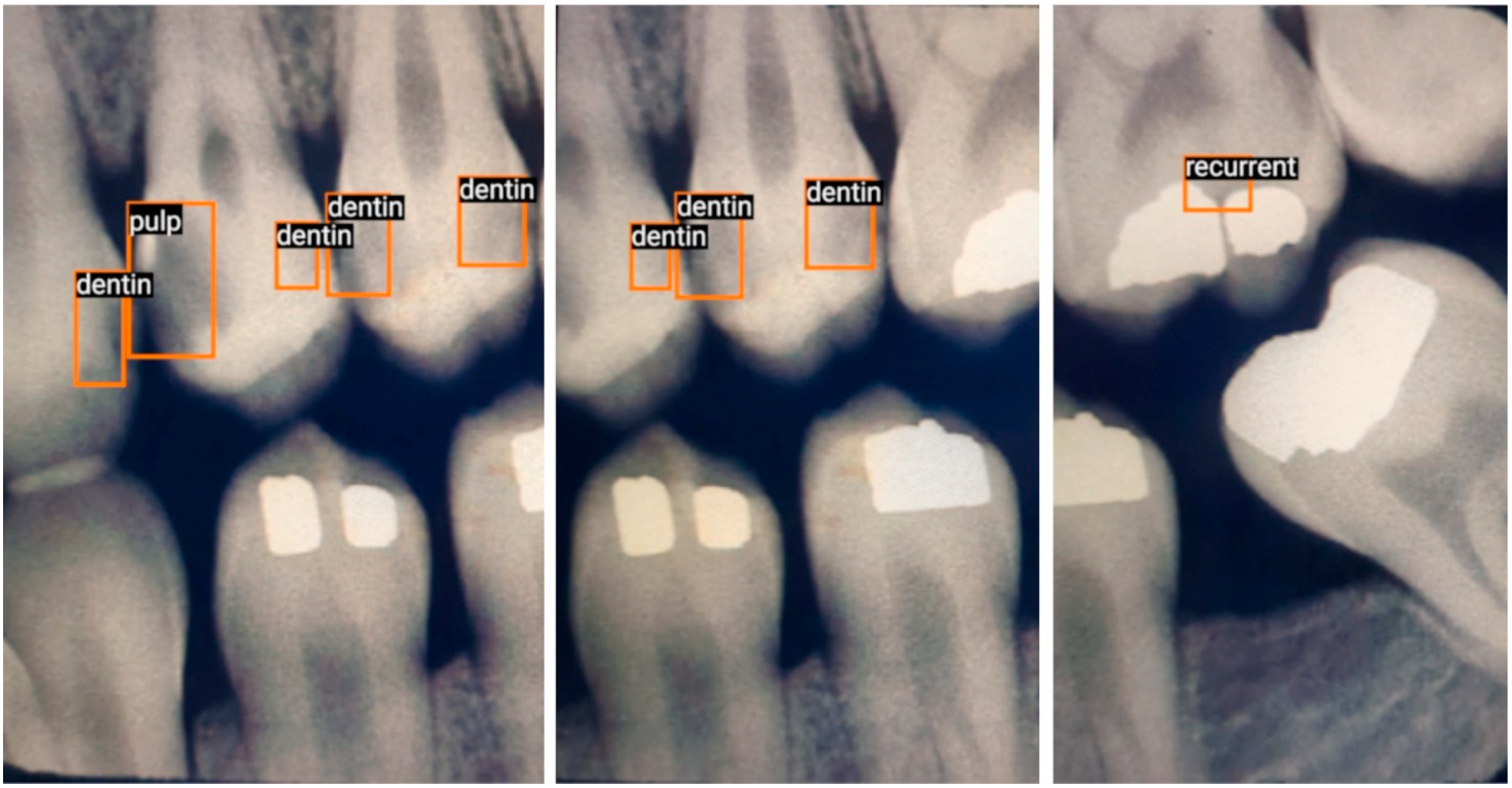

With over 23 years of experience diagnosing caries, the author used LabelImg (Heartex, San Francisco, CA, USA) to annotate (label and draw bounding boxes around) caries lesions in all radiographs. The author identified and classified caries lesions into five ad hoc classes:

Enamel: Interproximal caries visible only within enamel;

Dentin: Interproximal caries visible within dentin. Caries within overlapping contacts were ignored if they did not extend to the dentinoenamel junction;

Pulp: Caries near or extending to the pulp chamber;

Recurrent: Caries under existing restorations;

Occlusal: Caries under the occlusal surface, including superimposed buccal or lingual/palatal caries.
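The text does not state the annotation file format. LabelImg saves Pascal VOC XML by default (it can also write YOLO and CreateML formats), so the sketch below assumes Pascal VOC files and shows how per-class lesion counts could be tallied from them. The lowercase class strings and the sample annotation are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical label strings for the five ad hoc classes listed above.
CLASSES = ("enamel", "dentin", "pulp", "recurrent", "occlusal")


def read_voc_boxes(xml_text: str) -> list[tuple[str, int, int, int, int]]:
    """Return (label, xmin, ymin, xmax, ymax) for each <object> in a Pascal VOC file."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        label = (obj.findtext("name") or "").strip().lower()
        bb = obj.find("bndbox")
        coords = [int(float(bb.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((label, *coords))
    return boxes


def count_classes(xml_docs: list[str]) -> Counter:
    """Tally annotated lesions per class, rejecting labels outside CLASSES."""
    counts = Counter()
    for text in xml_docs:
        for label, *_ in read_voc_boxes(text):
            if label not in CLASSES:
                raise ValueError(f"unexpected label: {label}")
            counts[label] += 1
    return counts


# Hypothetical annotation for one image with two lesions.
sample = """<annotation>
  <filename>bw_001.jpg</filename>
  <object><name>dentin</name>
    <bndbox><xmin>120</xmin><ymin>85</ymin><xmax>148</xmax><ymax>117</ymax></bndbox>
  </object>
  <object><name>recurrent</name>
    <bndbox><xmin>300</xmin><ymin>90</ymin><xmax>330</xmax><ymax>125</ymax></bndbox>
  </object>
</annotation>"""
print(count_classes([sample]))  # Counter({'dentin': 1, 'recurrent': 1})
```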

3.4. Training and Augmentation

The training and validation dataset consisted of 31% enamel (n = 195), 24% dentin (n = 150), 16% pulp (n = 99), 15% recurrent (n = 92), and 14% occlusal caries lesions (n = 84).

After annotation, data augmentation created 4960 different presentations of caries for training and validation. Using a 94–6% split, 4640 and 320 annotations were used for training and validation, respectively.
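The reported figures are internally consistent, and the sketch below checks the arithmetic. The eightfold augmentation factor is inferred from 4960/620 and is not stated in the text, nor are the specific augmentations used. Dividing the augmented training and validation totals by that factor recovers the 580 training lesions cited in the Limitations, and the split works out to roughly 93.5:6.5, which the text rounds to 94–6%.

```python
# Lesion counts per class, as reported above.
counts = {"enamel": 195, "dentin": 150, "pulp": 99, "recurrent": 92, "occlusal": 84}
total = sum(counts.values())  # lesions before augmentation
for name, n in counts.items():
    print(f"{name:9s} {n:4d} {100 * n / total:5.1f}%")

augmented_total = 4960
factor = augmented_total // total  # inferred presentations per original lesion
train_aug, val_aug = 4640, 320
print(total, factor)                            # 620 8
print(train_aug // factor, val_aug // factor)   # 580 40
print(f"{100 * train_aug / augmented_total:.1f}% / {100 * val_aug / augmented_total:.1f}%")  # 93.5% / 6.5%
```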

3.5. Testing

For testing, this study used ten BWRs containing 40 caries lesions from the test section of Dr. Joen Iannucci’s Radiographic Caries Identification (RCI) website [24]. Eight radiographs were randomly selected; the other two were one with rampant caries and one with an excessive cant in the occlusal plane. The site had been publicly accessible for many years, and the author considered all caries identified on it to be expert-identified. The author agreed with all 38 pre-identified caries lesions in the ten test BWRs and added two more lesions, for a total of 40.

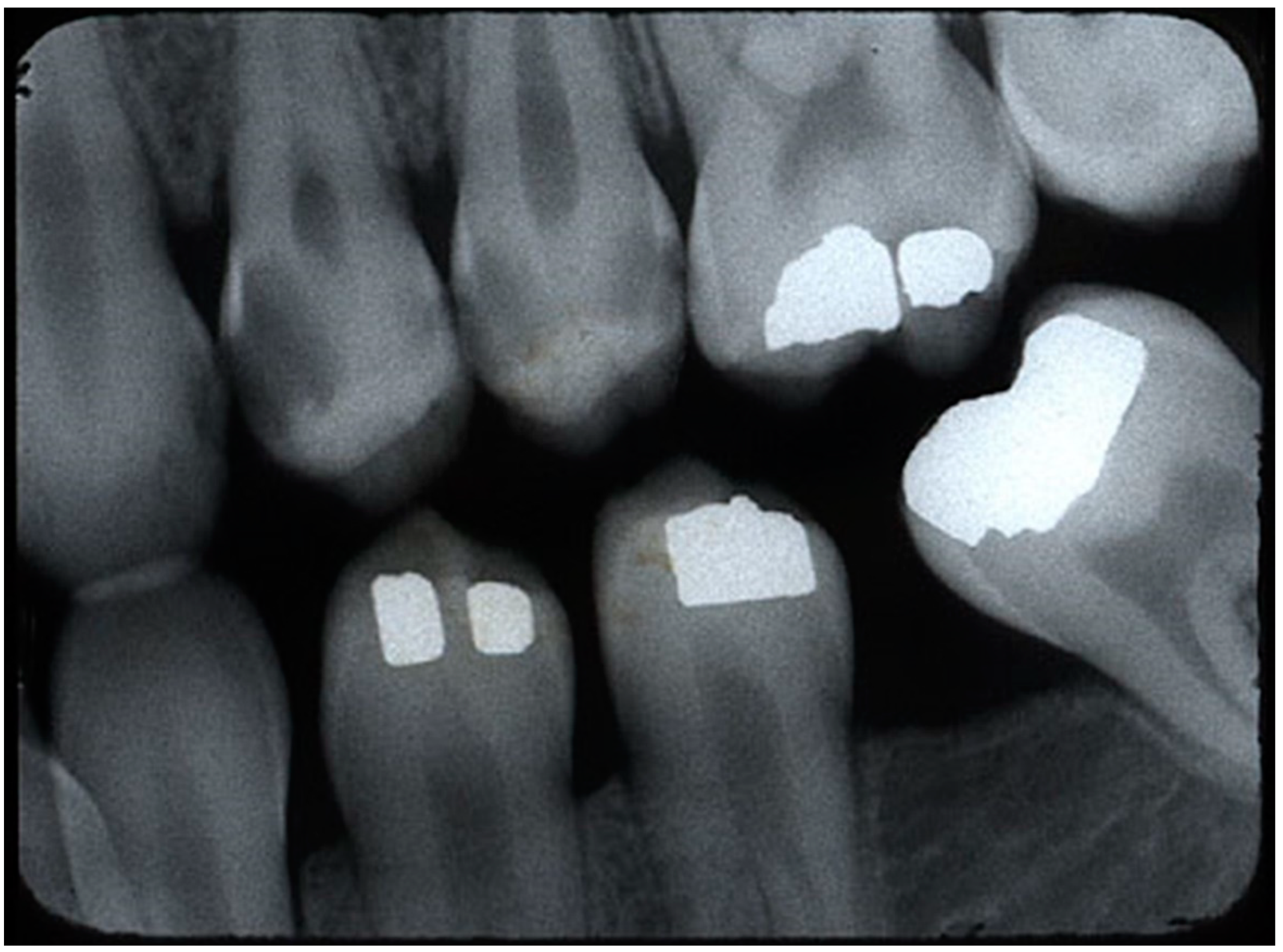

Figure 2 shows a sample of the original BWR from the RCI website.

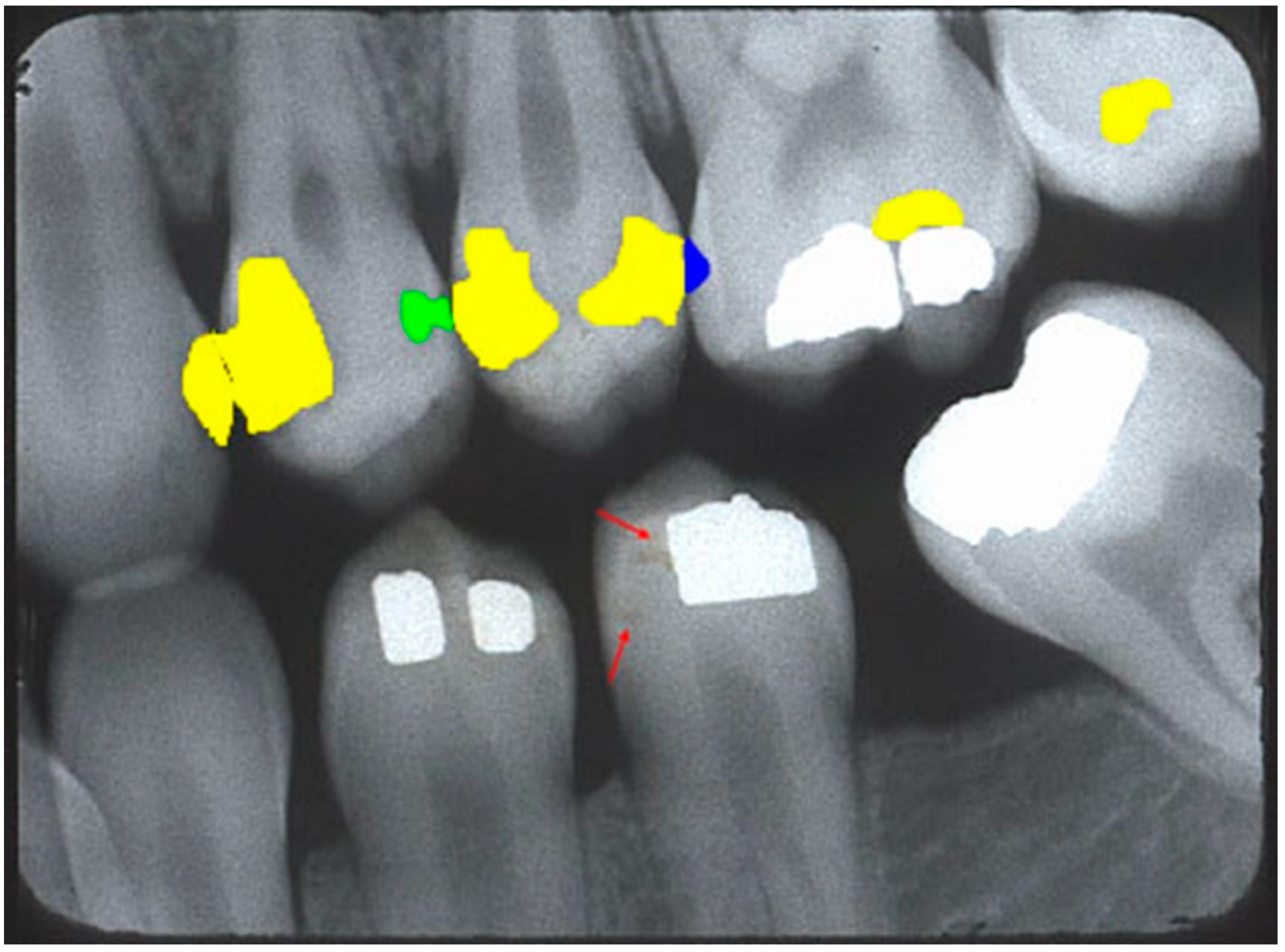

Figure 3 shows a sample BWR containing segmented caries lesions from the website.

3.6. Technical Methodology

All software used in this study began as open-source code and was modified by the author for the desired functionality. The author used the TensorFlow ML platform (TF, Google, Mountain View, CA, USA) to create a custom caries detector. For mobile phone deployment, the author used TF Lite (TFL, Google, Mountain View, CA, USA) because it produces smaller, more efficient versions of TF models. To optimize performance on the GP6, the author chose the lightweight EDL1 ANN model, which is based on the EfficientDet neural network architecture. It is open source, requires no modification of the core code, and uses a weighted bi-directional feature pyramid network [25,26]. A Dell T140 desktop computer (Dell Inc., Round Rock, Texas, USA) running a virtual Ubuntu OS (Canonical Ltd., London, UK) and Jupyter Notebook provided the environment for running the TFL Model Maker libraries (Google, Mountain View, CA, USA) for training and validation. The model was trained for 25, 50, and 75 epochs with a batch size of eight. The run with the highest average precision at an Intersection over Union (IoU) threshold of 0.50 (AP50), the 50-epoch run, was selected as the best trained model.
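AP50 counts a predicted box as correct only when its IoU with a ground-truth box is at least 0.50. The minimal sketch below illustrates just that matching criterion, not the full AP computation (which also ranks detections by confidence and integrates precision over recall, as the TFL Model Maker evaluation does). Boxes are assumed to be (xmin, ymin, xmax, ymax) in continuous pixel coordinates, and the example boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match_at_50(pred, truth):
    """A prediction can count toward AP50 only if IoU with the truth box is >= 0.50."""
    return iou(pred, truth) >= 0.5


truth = (100, 100, 140, 140)    # hypothetical 40 x 40 ground-truth lesion box
shifted = (110, 100, 150, 140)  # same size, shifted 10 px to the right
print(round(iou(truth, shifted), 3), is_match_at_50(shifted, truth))  # 0.6 True
```

A 10-pixel shift of a 40-pixel box still passes the 0.50 threshold, whereas a 20-pixel shift (IoU of about 0.33) would not, so AP50 tolerates moderate localization error.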

Post-training quantization was used to reduce both the memory footprint and the latency of the model on the mobile phone [27]. Quantization converts floating-point representations into lower-precision integer values (typically 8-bit), reducing model size. The quantized model was then transferred to Android Studio Electric Eel (ASEE, Google, Mountain View, CA, USA), where the author compiled an object detection starter app that implemented the trained model and deployed it on the GP6 for testing.
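The idea behind integer quantization can be sketched with a generic affine (asymmetric) 8-bit scheme: a scale and a zero point map a real-valued range onto the integers −128 to 127, and dequantization recovers each value to within half a quantization step. This is an illustration only; TensorFlow Lite’s own post-training quantization differs in its details (for example, symmetric per-channel weights and calibrated activation ranges), the study does not state which TFL quantization mode was used, and the weight values below are hypothetical.

```python
def quantize_params(x_min: float, x_max: float, qmin: int = -128, qmax: int = 127):
    """Scale and zero point mapping the real range [x_min, x_max] onto [qmin, qmax]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must include 0.0 exactly
    if x_max == x_min:
        return 1.0, 0
    scale = (x_max - x_min) / (qmax - qmin)
    zero_point = round(qmin - x_min / scale)
    return scale, int(max(qmin, min(qmax, zero_point)))


def quantize(x: float, scale: float, zero_point: int, qmin: int = -128, qmax: int = 127) -> int:
    """Round to the nearest integer step and clamp to the int8 range."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    return (q - zero_point) * scale


weights = [-0.51, 0.0, 0.27, 1.02]  # hypothetical float32 weights
scale, zp = quantize_params(min(weights), max(weights))
qs = [quantize(w, scale, zp) for w in weights]
errors = [abs(dequantize(q, scale, zp) - w) for q, w in zip(qs, weights)]
print(qs, f"max error {max(errors):.2e} (step {scale:.4f})")
```

Each 32-bit float becomes a single byte, roughly a fourfold reduction in storage, at the cost of a rounding error bounded by half the step size.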

Because the mobile phone scanned in portrait mode, only part of a BWR could be captured at a given time. To scan an entire BWR, either the phone or the BWR had to be moved from end to end, which resulted in two testing methods: handheld scanning (for real-world testing) and stationary scanning of a video (to standardize motion). The results of the two methods were averaged. A MacBook Pro with a 15-inch Retina display (Apple Inc., Cupertino, CA, USA) displayed both the images and the video. During handheld testing, scanning each displayed image took between 11.5 and 22.7 s (mean 15.5 s). To standardize the movement of each radiograph during real-time detection, the author used iMovie (Apple Inc., Cupertino, CA, USA) to create a video in which each test radiograph moved across the screen from left to right over 40 s. In this manner, the GP6 could be held stationary while detecting moving images.

The GP6 detected caries through its back-facing video camera, with the detection (confidence score) threshold set to 0.4. Although the app displayed class-labeled bounding boxes over predicted caries lesions, any detection of a lesion was counted as a detection regardless of its predicted class, so the ad hoc classes assigned during annotation played no role in scoring. As the images changed, whether during handheld scanning of static radiographs or in the video, bounding boxes appeared and disappeared as the detections changed in real time, increasing the cumulative number of detections. The native video recorder on the GP6 captured the on-screen real-time detections for later analysis. This recording was transferred to the MacBook and imported into iMovie for frame-by-frame counting. Duplicate detections (the same caries lesion detected in multiple frames) were counted only once. Likewise, detections of the same lesion under different classes (e.g., detected as “dentin” in one frame and “pulp” in another) were counted only once.
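The counting rules above (score threshold, class-agnostic matching, and de-duplication across frames) can be sketched as set operations. In the study, the author matched boxes to lesions by reviewing the recording frame by frame; here, a reviewer-assigned `region_id` stands in for that manual step. The `FrameDetection` record, the region IDs, and the sample detections are all hypothetical, and the sketch assumes false-positive regions were de-duplicated the same way as true lesions, which the text does not state explicitly.

```python
from dataclasses import dataclass

SCORE_THRESHOLD = 0.4  # detection threshold reported in the text


@dataclass(frozen=True)
class FrameDetection:
    frame: int
    region_id: str  # hypothetical: reviewer-assigned ID of the lesion/region under the box
    label: str      # predicted class; ignored when scoring
    score: float


def cumulative_regions(detections) -> set[str]:
    """Distinct regions detected at least once at or above the threshold.

    Repeat detections across frames and class changes for the same region
    collapse to a single entry, so each region is counted once.
    """
    return {d.region_id for d in detections if d.score >= SCORE_THRESHOLD}


def score_video(detections, true_lesions: set[str]) -> tuple[int, int, int]:
    """Return (TP, FP, FN) for one scanned radiograph or video."""
    found = cumulative_regions(detections)
    tp = len(found & true_lesions)
    fp = len(found - true_lesions)
    fn = len(true_lesions - found)
    return tp, fp, fn


dets = [
    FrameDetection(1, "L1", "dentin", 0.62),
    FrameDetection(2, "L1", "pulp", 0.55),      # same lesion, different class: not recounted
    FrameDetection(2, "X1", "enamel", 0.45),    # box over sound tooth structure
    FrameDetection(3, "L2", "occlusal", 0.31),  # below threshold: not counted
]
truth = {"L1", "L2", "L3"}
print(score_video(dets, truth))  # (1, 1, 2)
```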

3.7. Metrics

For real-time video testing on the GP6, the following detection definitions were used [28]:

True positive (TP): The number of correct detections in the presence of true caries.

False positive (FP): The number of incorrect detections in the absence of true caries.

False negative (FN): The number of missed detections in the presence of true caries.

Additionally, the following performance metrics were reported:

Sensitivity (Recall, True Positive Rate (TPR)) = TP/(TP + FN).

Precision (Positive Predictive Value (PPV)) = TP/(TP + FP).

F1 Score = 2TP/(2TP + FP + FN).
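The three formulas above translate directly into code. The sketch below computes them from TP, FP, and FN counts and averages each metric across runs, which is one reading of how the handheld and stationary-video results were combined. The counts in the example are hypothetical and are not the study’s results.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Sensitivity, precision, and F1 from detection counts (0.0 when undefined)."""
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return {"sensitivity": sensitivity, "precision": precision, "f1": f1}


def average_runs(*runs: dict[str, float]) -> dict[str, float]:
    """Mean of each metric across runs (e.g., handheld and stationary-video tests)."""
    return {k: sum(r[k] for r in runs) / len(runs) for k in runs[0]}


# Hypothetical counts for one run over the 40 test lesions (TP + FN = 40).
m = detection_metrics(tp=25, fp=10, fn=15)
print({k: round(v, 3) for k, v in m.items()})
# {'sensitivity': 0.625, 'precision': 0.714, 'f1': 0.667}
```

The form 2TP/(2TP + FP + FN) is algebraically the harmonic mean of sensitivity and precision, but it stays defined when TP is zero.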

5. Discussion

5.1. Subjective Goals

Part of this study aimed to implement a diagnostic aid for dentists that was readily accessible, easy to use, and fast. Using a mobile phone satisfied the first requirement. With 5.44 billion people, or 68% of the global population, using mobile phones in early 2023 [29], this nearly ubiquitous device is likely used by most dentists. A dentist could convert a mobile phone into a caries detector through a simple download, making an app-based caries detector potentially deployable worldwide. As implemented, the caries detector is also easy to use, satisfying the second requirement. Once opened, the app functions like the face-tracking feature commonly found in smartphone cameras, except that it tracks caries. Because detection occurs on real-time video, the user does not have to take individual photos of the BWRs, which removes the need to repeatedly tap the screen to capture frames for inference. The experimental app, running on the GP6, made real-time inferences at an estimated 14 FPS (approximately 71 ms per inferred frame), satisfying the third requirement of speed. Newer mobile phones are likely to increase inference speed further. In its current form, the app performs all inference on the device and neither transmits data to the cloud nor records it. Recording on-screen video on the GP6 requires several steps to activate the native function, making accidental recording unlikely. Thus, by implementing an experimental AI mobile phone app for the real-time detection of caries lesions on BWRs, the author felt that the pilot study achieved its subjective goals.

5.2. Comparing the Results

After implementation, the other focus of this pilot study was to assess the performance of the AI app. Compared with the stationary video test (SVT), the handheld test (HT) detected 17% more true caries at the cost of 33% more incorrect detections, resulting in higher sensitivity and lower precision. Because the HT involved greater variation in movement than the SVT, and because video is a sequential collection of images, these results suggest that scanning video could increase caries detections by existing static-image models without retraining. The author also noticed this effect in another pilot study [30]. However, a larger study will be needed to generalize this preliminary observation. Although it was not quantified, the author observed that the model detected some lesions consistently (in almost every video frame) and others inconsistently (in only one video frame), suggesting that some lesions were harder to detect than others. This mirrors the author’s own diagnostic experience, in which lesions are judged probable, indeterminate, or unlikely, and suggests that near-perfect accuracy may not be attainable. Instead, detections could be expressed as probabilities, reinforcing the concept that the AI app is a diagnostic aid.
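The study did not quantify detection consistency, but one way a future version might express it as a probability-like value is the fraction of inferred frames in which a lesion was detected while it was in view. The sketch below assumes per-frame hit records and per-lesion visibility counts that would have to come from manual review; the function, lesion IDs, and numbers are hypothetical.

```python
from collections import defaultdict


def detection_persistence(hits, visible_frames):
    """Fraction of in-view inferred frames in which each lesion was detected.

    hits: iterable of (frame_index, lesion_id) pairs, one per detection.
    visible_frames: {lesion_id: number of inferred frames with the lesion in view}.
    Both inputs are hypothetical and would require manual frame review.
    """
    hit_frames = defaultdict(set)
    for frame, lesion_id in hits:
        hit_frames[lesion_id].add(frame)  # a set ignores repeat hits in one frame
    return {lid: len(hit_frames[lid]) / n for lid, n in visible_frames.items() if n > 0}


# Hypothetical: lesion L1 detected in 19 of 20 frames, L2 in only 1 of 20.
hits = [(f, "L1") for f in range(1, 20)] + [(7, "L2")]
print(detection_persistence(hits, {"L1": 20, "L2": 20}))
```

A consistently detected lesion scores near 1, while a lesion seen in a single frame scores near 0, roughly matching the probable-to-unlikely gradation described above.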

5.3. Comparison with Other Studies

A mid-2023 search for prior investigations of real-time video caries detection on BWRs using DL, ML, or ANNs found none that used a mobile phone. The few AI studies using mobile phones were limited to static detection on photographs; none detected caries on BWRs, and none used real-time video [31,32,33,34]. The author found only one study reporting real-time caries detection on BWRs, and it used a tablet computer. Consequently, the author is unaware of any mobile phone being used as a diagnostic aid for the real-time detection of caries on BWRs.

Among non-mobile-phone static-detection studies, Srivastava et al. reported sensitivity/precision/F1 scores of 0.805/0.615/0.7 [20]. Lee et al. claimed “quite accurate performance” with scores of 0.65/0.633/0.641 [14]. The AI detection model of Bayrakdar et al. “showed superiority to assistant specialists” with scores of 0.84/0.84/0.84 [17]. Geetha et al. reported near-perfect scores of 0.962/0.963/0.962 using adaptive threshold segmentation with a backpropagation neural network model on 105 images [19]. By comparison, the real-time video detections on the GP6 averaged 0.625/0.706/0.661 in aggregate.
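Because F1 is the harmonic mean of sensitivity and precision, each reported triple can be checked for internal consistency. The sketch below recomputes F1 for the cited static-detection studies from the values quoted above; small differences reflect rounding in the published figures. The GP6 aggregate is left out, because as the mean of two test runs its F1 need not equal the harmonic mean of its averaged sensitivity and precision.

```python
def f1_from(sensitivity: float, precision: float) -> float:
    """Harmonic mean of sensitivity and precision."""
    if sensitivity + precision == 0:
        return 0.0
    return 2 * sensitivity * precision / (sensitivity + precision)


# (sensitivity, precision, reported F1) as quoted in the text.
reported = {
    "Srivastava et al.": (0.805, 0.615, 0.70),
    "Lee et al.": (0.65, 0.633, 0.641),
    "Bayrakdar et al.": (0.84, 0.84, 0.84),
    "Geetha et al.": (0.962, 0.963, 0.962),
}
for study, (sens, prec, f1) in reported.items():
    print(f"{study:18s} reported F1 {f1:.3f}  recomputed {f1_from(sens, prec):.3f}")
```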

Table 2 summarizes these selected studies alongside the aggregate average of this study; its F1 score is similar to Lee et al.’s static result, and both reflect relatively low performance.

To the best of the author’s knowledge, only one published study has investigated real-time video caries detection on BWRs [30]. However, that pilot study used a Microsoft Surface Pro 3 tablet computer as the detection device instead of a mobile phone. That tablet study (TS) detected radiographic caries with average aggregate sensitivity, precision, and F1 scores of 68, 88, and 77%, respectively. The corresponding averages for this mobile phone study (MPS) were 67.5, 69.2, and 68.4%. Unsurprisingly, the results are somewhat similar, because the two studies had much in common, including the use of Internet training data and the EfficientDet AI model. However, there were some key differences. The MPS used more Internet training data, and its results averaged handheld detection and stationary detection of moving BWRs, whereas the TS compared handheld detection with non-video static detection. The MPS also used the quantized, lightweight EDL1 (384 × 384) object detection model, whereas the TS used the EfficientDet-D0 (512 × 512) model. This difference could explain the higher precision of the TS, since its model was trained and tested at a higher input resolution. Finally, the MPS used Internet radiographs for testing, whereas the TS used patient (non-Internet) radiographs. Based on the results of the pilot TS and the clinical origins of the MPS’s Internet test BWRs, the author believes that this mobile phone AI app could detect lesions in a clinical setting. However, another study will be needed to test this hypothesis.

5.4. Ethics of Using Internet Images

The ethics of using anonymous dental X-rays acquired from the Internet for AI research warrant review, as this issue is still evolving. Ethical parameters to consider include patient privacy, consent, and data ownership, among others [35]. These issues raise the questions of whether patients need to consent to the secondary use of their anonymous data and who truly owns the images.

Recently, Larson et al. proposed an ethical framework for sharing and using clinical images for AI that, in the author’s opinion, appears to balance individual and societal rights [36]. The premise of the framework is that once the primary purpose of the clinical data (here, the radiographic image) has been fulfilled, the “secondary use of clinical data should be treated as a form of public good, to be used for the benefit of future patients, and not to be sold for profit or under exclusive arrangements” [36]. Essentially, all participants who benefit from the healthcare system have a moral obligation to contribute towards improving it [37].

Following some of these recommendations, the author obtained an exemption from ethics board review (BRANY IRB File #23-12-406-1490), did not commercialize the data, made no attempt to deanonymize the data, and acted as an ethical data steward, among other steps, when using Internet data for this study.

5.5. Potential Benefits of Using Internet Images

The author chose Internet radiographs over other dataset curation methods primarily because they were easy to acquire. Acquisition was quick and consisted of queries on commonly used search engines. An added benefit was the images’ high variability. As little is known about the data quality relevant to clinical AI use [38], the use of highly variable Internet images could benefit the clinical application of a mobile phone caries detector. For example, Adeoye et al. found that although ML models for predicting various oral cancer outcomes reported performance ranging from 64 to 100%, such models lacked clinical application, partly because of the prevalent use of small, single-center datasets; the authors concluded that integrating data from different treatment centers was imperative [39]. Using Internet images from many sources to obtain such “diverse” data produced greater variation than in studies that sourced BWRs from a single center, potentially benefiting the training of the model.

5.6. Limitations

Since this was a preliminary investigation using Internet data, there were many limitations, especially with dataset acquisition, the choice of ANN model, and bias in the ground truth training data.

The Internet images were typically of low resolution and contained artifacts such as annotations, lines, and other defects. Because the model resized all images to 384 × 384 pixels before making an inference, the low resolution of these images likely did not affect its ability to detect caries. Another drawback was the comparatively small number of usable radiographs with caries, so the training dataset is small compared with those of other studies. However, the 190 images contained 620 lesions before augmentation. With a 94–6% split for training and validation, the model was trained on 580 distinct caries lesions (augmented to 4640). This atypical split was used to maximize the amount of training data. A larger number of training lesions would have been preferable, but that will require a larger study.

Because research on real-time caries detection with mobile phones is limited and model selection is both technically challenging and an art, the EDL1 ANN model was chosen over others for its deployability. Although better-performing models exist, very few can be deployed on a mobile phone. The better-performing EfficientDet-Lite4 model was also available, but the lower-performing EDL1 was chosen to minimize latency. Although the experimental AI mobile phone app achieved an aggregate average sensitivity of 62.5% and an average precision of 70.6%, the clinical implementation of such a diagnostic aid would require much higher sensitivity and precision. The comparatively low sensitivity reflects a high number of FNs, and the low precision reflects a high number of FPs. Before mobile phone deployment, the trained model achieved an AP50 of 0.516 on the ten static test images using the TFL Model Maker evaluation function, confirming its low performance. Given the compromises made to create a functional caries detector for a mobile phone, the author considers it likely that performance was limited more by model selection than by the quality or quantity of the training dataset.

During testing, even with quantization and the selection of the lower-performing but faster EDL1 model, the GP6 made inferences at an estimated 14 FPS (approximately 71 ms per inferred frame). This lagged behind the video frame rate of 30 FPS (a frame interval of approximately 33 ms). Because the camera frames refreshed faster than the model could produce inferences, the displayed detection boxes lagged behind the moving image. If the phone moved quickly or jerked, the boxes were displayed off-center, which was especially noticeable during handheld detection. However, as successive generations of mobile phones gain computing power, this latency should diminish, allowing larger, better-performing models to be used in future studies.
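The timing figures above can be related with a few lines of arithmetic. The sketch below converts the reported frame rates into per-frame intervals, estimates how many camera frames pass per inference, and approximates how far a box drifts from its lesion while the image moves. It models lag as inference time alone, ignoring camera capture and display pipeline delays (an assumption), and the 300 px/s pan speed is hypothetical. The last line applies the estimated rate to one 40 s stationary-video pass.

```python
CAMERA_FPS = 30.0      # video frame rate reported above
INFERENCE_FPS = 14.0   # estimated on-device inference rate reported above
VIDEO_PASS_S = 40.0    # duration of one radiograph's pass in the stationary video

frame_interval_ms = 1000 / CAMERA_FPS              # time between camera frames
inference_ms = 1000 / INFERENCE_FPS                # time per inference
frames_per_inference = CAMERA_FPS / INFERENCE_FPS  # camera frames per displayed result


def box_lag_px(pan_speed_px_per_s: float, latency_ms: float = inference_ms) -> float:
    """Approximate on-screen offset of a box drawn `latency_ms` after its frame.

    Assumption: lag equals inference time only; other pipeline delays are ignored.
    """
    return pan_speed_px_per_s * latency_ms / 1000


print(f"{frame_interval_ms:.1f} ms, {inference_ms:.1f} ms, {frames_per_inference:.1f} frames")
# 33.3 ms, 71.4 ms, 2.1 frames
print(f"{box_lag_px(300):.1f} px")  # 21.4 px at a hypothetical 300 px/s pan
print(f"{VIDEO_PASS_S * INFERENCE_FPS:.0f} inferences per 40 s pass")  # 560
```

Halving the inference time would halve the drift at any given pan speed, which is why faster phones are expected to reduce the off-center boxes.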

Finally, having one author identify and classify all training and validation caries lesions is a major limitation since it introduces bias into the trained model. The trained model’s ability to detect caries was dependent on the author’s detection abilities, which likely differed from those of other practitioners. However, the impact of bias was reduced during testing since another expert pre-identified 38 of the 40 test lesions. Although no calibration was carried out, the close agreement in the ground truth test dataset between the author and an expert suggests that both identified caries similarly.