Human Factors Requirements for Human-AI Teaming in Aviation

Abstract

1. Artificial Intelligence in Aviation

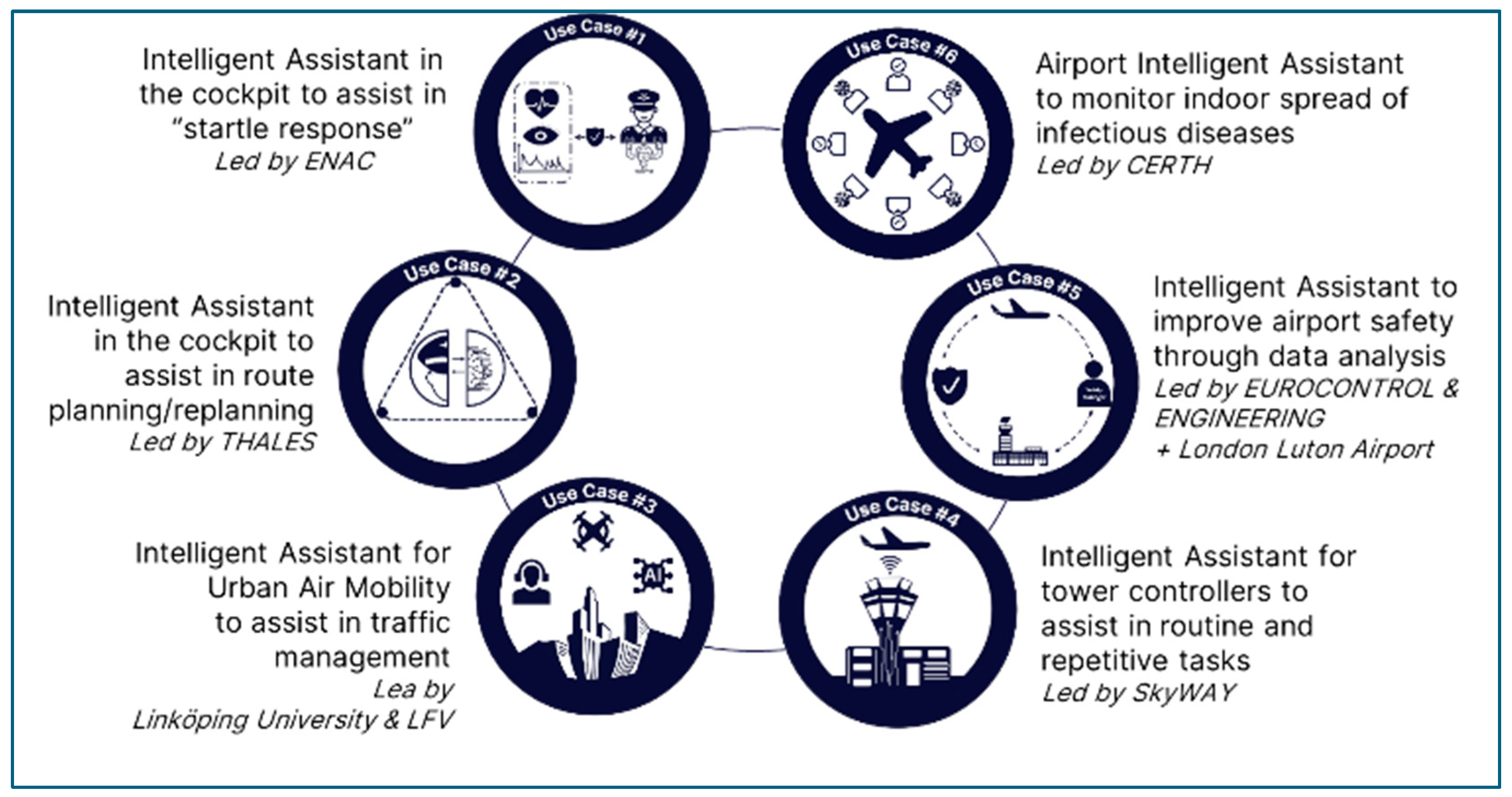

- UC1—a cockpit AI to help a single pilot recover from a sudden event that induces a ‘startle response’. A startle response occurs when a pilot is startled by a sudden, unexpected event inside or outside the cockpit, leading to a temporary disruption of cognitive functioning that usually lasts approximately 20 s [10]. The AI directs the pilot to the instruments to focus on in order to resolve the emergency situation. Although the AI supports and directs the pilot, the pilot remains in charge throughout.

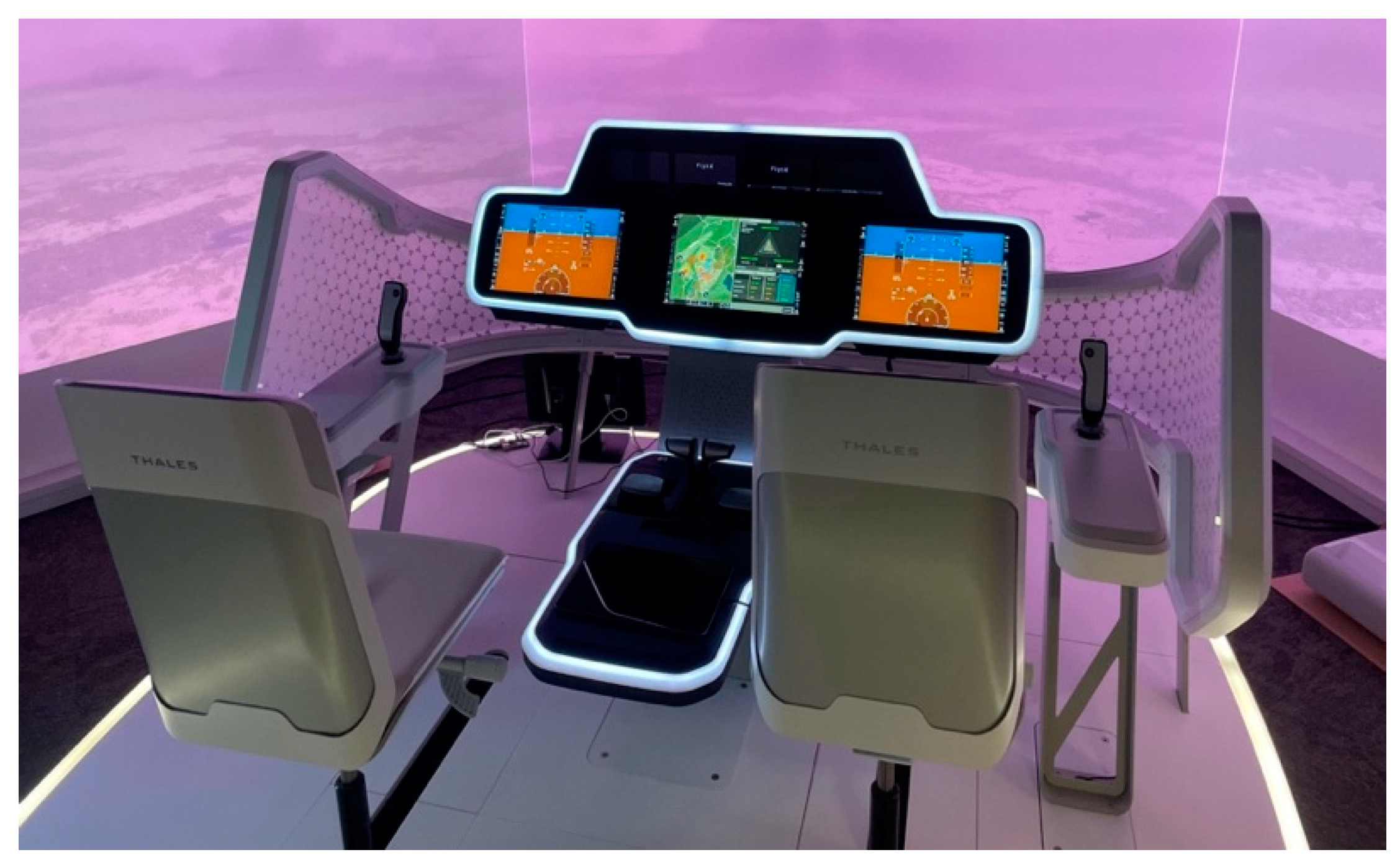

- UC2—a cockpit AI to help flight crew re-route an aircraft to a new airport destination due to deteriorating weather or airport closure, for example, taking into account a large number of factors (e.g., category of aircraft and runway length; fuel available and distance to airport; connections for passengers, etc.). The flight crew remain in charge, but communicate/negotiate with the AI to derive the optimal solution.

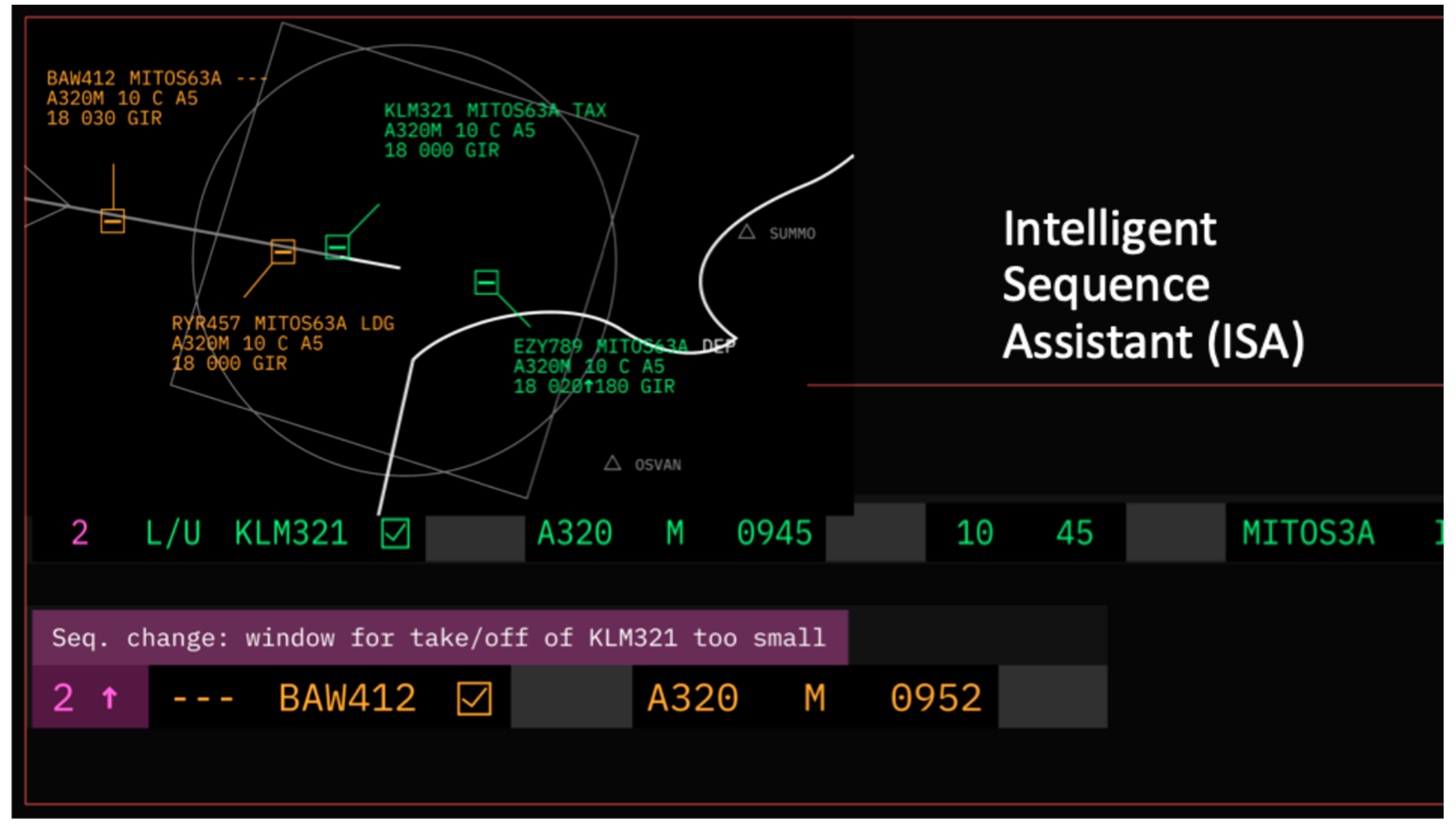

- UC4—a digital assistant for remote tower operations, to alleviate the tower controller’s workload by carrying out repetitive tasks. The tower controller monitors the situation and intervenes if there is a deviation from normal (e.g., a go-around situation, or an aircraft that fails to vacate the runway). The controller is in charge, but the AI can take certain actions unless the controller vetoes them.

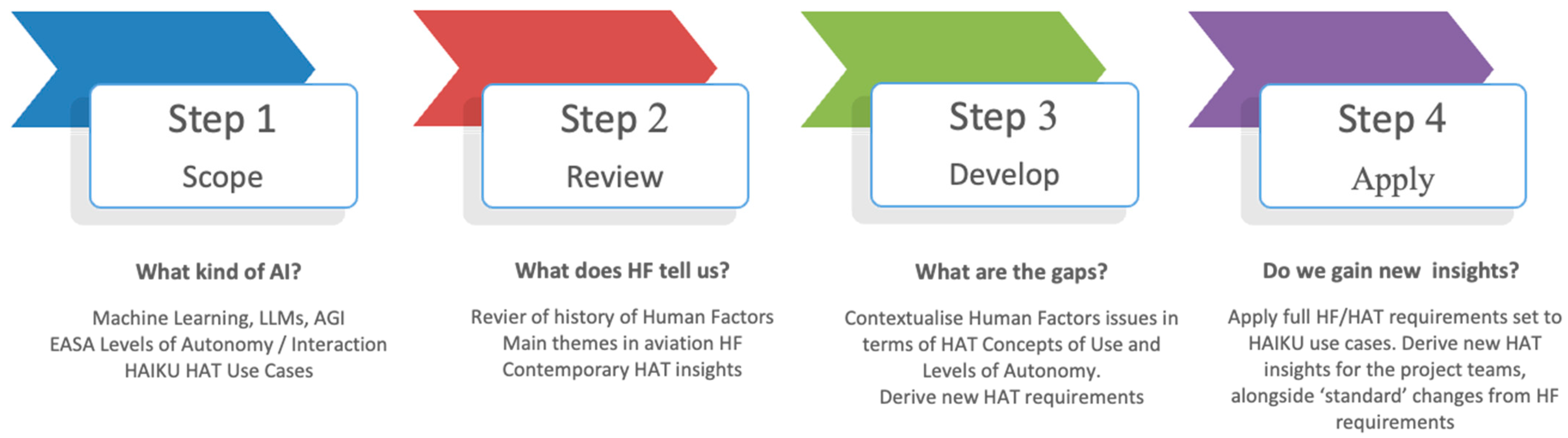

2. Research Questions and Approach

- What type of AI and Human-AI Teaming characteristics are likely in future aviation concepts?

- What does the existing body of Human Factors knowledge suggest we should focus on for HAT systems?

- What are the Human-AI Teaming requirements arising from the gap between what we have currently in HF requirements systems, and the challenges of future HAT concepts of operation?

- Are the new HAT requirements fit for purpose, i.e., can they be used by project teams to identify new system design insights to safeguard and optimise human-AI team performance?

3. Step 1: Scoping the HAT Requirements

3.1. What Kind of AI?

“…the broad suite of technologies that can match or surpass human capabilities, particularly those involving cognition.”

3.2. AI Levels of Autonomy—the European Aviation Regulatory Perspective

- 1A—Machine learning support (already existing today)

- 1B—cognitive assistant (equivalent to advanced automation support)

- 2A—cooperative agent, able to complete tasks as demanded by the operator

- 2B—collaborative agent: an autonomous agent that works with human colleagues, but which can take initiative and execute tasks, as well as being capable of negotiating with its human counterparts

- 3A—AI executive agent: the AI is basically running the show, but there is human oversight, and the human can intervene (sometimes called management by exception)

- 3B—the AI is running everything, and the human cannot intervene.

- Cooperation Level 2A: cooperation is a process in which the AI-based system works to help the end user accomplish his or her own goal. The AI-based system works according to a predefined task-allocation pattern with informative feedback to the end user on the decisions and/or actions implementation. The cooperation process follows a directive approach. Cooperation does not imply a shared situation awareness between the end user and the AI-based system. Communication is not a paramount capability for cooperation.

- Collaboration Level 2B: collaboration is a process in which the end user and the AI-based system work together and jointly to achieve a predefined shared goal and solve a problem through a co-constructive approach. Collaboration implies the capability to share situation awareness and to readjust strategies and task allocation in real time. Communication is paramount to share valuable information needed to achieve the goal.
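The level scheme listed above can be captured in a small lookup structure. The following is a minimal sketch only: the class name `EasaAiLevel`, the helper `human_can_intervene`, and the short value strings are illustrative assumptions that paraphrase the list, not an EASA artefact.

```python
from enum import Enum


class EasaAiLevel(Enum):
    """AI levels as listed above; the values are short paraphrases (assumed wording)."""
    L1A = "machine learning support"
    L1B = "cognitive assistant"
    L2A = "cooperative agent"
    L2B = "collaborative agent"
    L3A = "AI executive agent, human oversight retained"
    L3B = "AI runs everything, human cannot intervene"


def human_can_intervene(level: EasaAiLevel) -> bool:
    """Per the list above, only level 3B removes the human's ability to intervene."""
    return level is not EasaAiLevel.L3B


if __name__ == "__main__":
    for level in EasaAiLevel:
        print(level.name, "-", level.value, "- human can intervene:", human_can_intervene(level))
```

A project team could use such a structure to tag each use case with its intended level before selecting which requirements apply.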

3.3. Example Intelligent Agent Use Cases

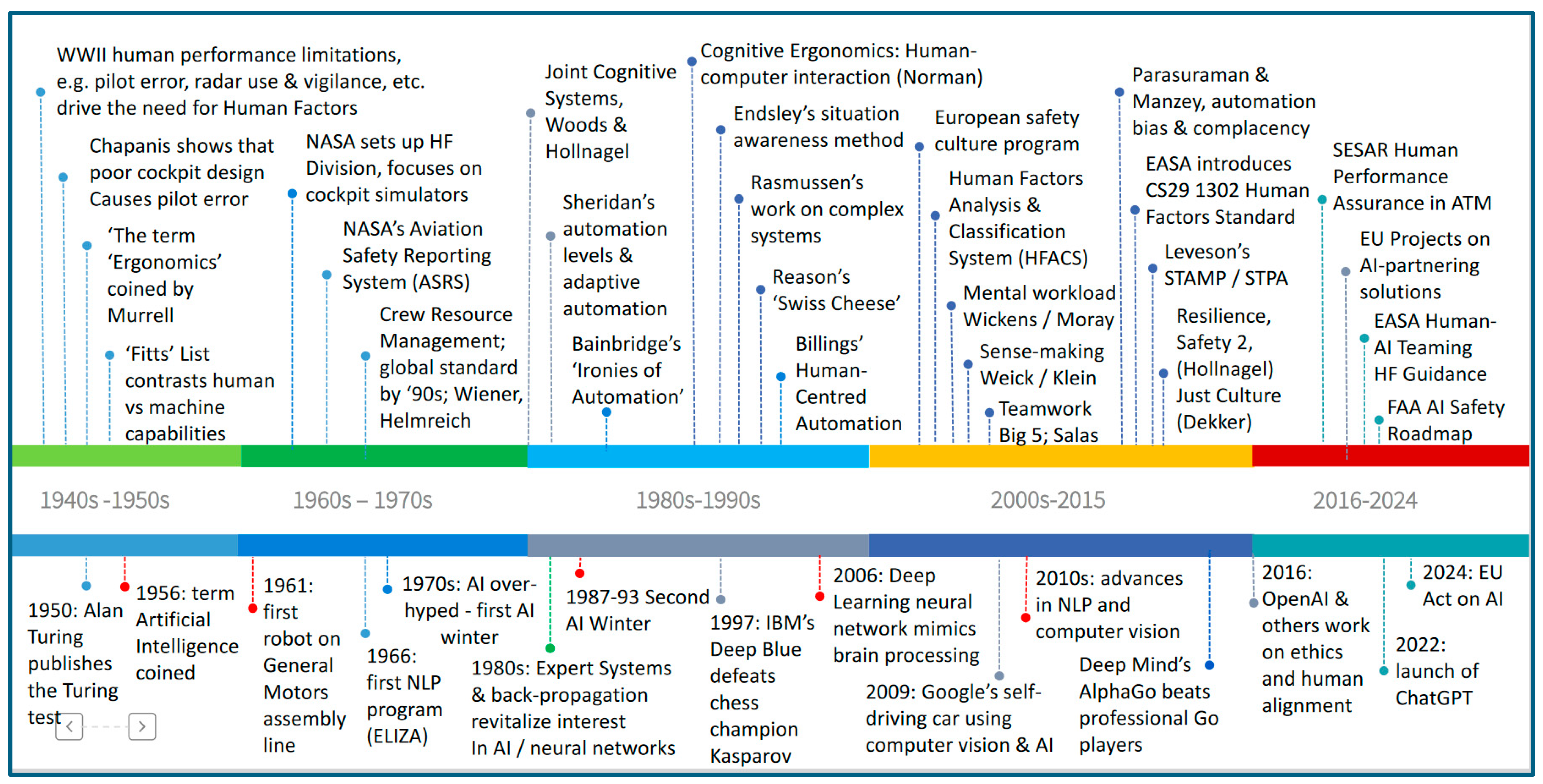

4. Review of Human Factors

4.1. Key Waypoints in Human Factors

4.1.1. Fitts’ List

4.1.2. Aviation Safety Reporting System

4.1.3. Crew Resource Management

4.1.4. Human-Centred Design and Human Computer Interaction

4.1.5. Joint Cognitive Systems

4.1.6. Ironies of Automation

4.1.7. Levels of Automation and Adaptive Automation

- The computer offers no assistance, human must take all decisions and actions

- The computer offers a complete set of decision/action alternatives, or

- Narrows the selection down to a few, or

- Suggests one alternative, and

- Executes that suggestion if the human approves, or

- Allows the human a restricted veto time before automatic execution

- Executes automatically, then necessarily informs the human, and

- Informs the human only if asked, or

- Informs the human only if it, the computer, decides to

- The computer decides everything, acts autonomously, ignores the human
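The ten levels above form an ordered scale, which makes them straightforward to encode. The sketch below assumes the levels are numbered 1 to 10 in the order listed (the list itself is unnumbered here); the member names and the two helper functions are hypothetical and serve only to illustrate how the scale might be queried.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Ten levels of automation, numbered in list order (numbering is an assumption)."""
    NO_ASSISTANCE = 1
    OFFERS_ALL_ALTERNATIVES = 2
    NARROWS_ALTERNATIVES = 3
    SUGGESTS_ONE = 4
    EXECUTES_IF_APPROVED = 5
    VETO_WINDOW_THEN_EXECUTES = 6
    EXECUTES_THEN_INFORMS = 7
    INFORMS_IF_ASKED = 8
    INFORMS_IF_IT_DECIDES = 9
    FULLY_AUTONOMOUS = 10


def acts_without_explicit_approval(level: AutomationLevel) -> bool:
    """From the veto-window level upwards, execution no longer needs human approval."""
    return level >= AutomationLevel.VETO_WINDOW_THEN_EXECUTES


def human_always_informed(level: AutomationLevel) -> bool:
    """Up to 'executes, then necessarily informs', the human is always told what happened."""
    return level <= AutomationLevel.EXECUTES_THEN_INFORMS


if __name__ == "__main__":
    for level in AutomationLevel:
        print(int(level), level.name,
              acts_without_explicit_approval(level),
              human_always_informed(level))
```

As a reading of the use cases rather than a claim made in the text, the UC4 behaviour described earlier (the AI acts unless the controller vetoes) resembles `VETO_WINDOW_THEN_EXECUTES`.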

4.1.8. Situation Awareness, Mental Workload, and Sense-Making

4.1.9. Rasmussen and Reason–Complex Systems, Swiss Cheese, and Accident Aetiology

4.1.10. Human-Centred Automation

- The human must be in command

- To command effectively, the human must be involved

- To be involved, the human must be informed

- The human must be able to monitor the automated system

- Automated systems must be predictable

- Automated systems must be able to monitor the human

- Each element of the system must have knowledge of the others’ intent

- Functions should be automated only if there is good reason to do so

- Automation should be designed to be simple to train, learn, and operate

4.1.11. HFACS (and NASA–HFACS and SHIELD)

4.1.12. Safety Culture

4.1.13. Teamwork and the Big Five

4.1.14. Bias and Complacency

4.1.15. SHELL, STAMP/STPA, HAZOP, and FRAM

4.1.16. Just Culture and AI

4.1.17. HF Requirements Systems–EASA CS25.1302, SESAR HP, SAFEMODE, FAA

4.2. Contemporary Human Factors and AI Perspectives

4.2.1. HACO—A Human-AI Teaming Taxonomy

- Directing attention to critical features, suggestions and warnings during an emergency or complex work situation. This could be of particular benefit in flight upset conditions in aircraft suffering major disturbances, as in UC1.

- Calibrated Trust wherein the humans learn when to trust and when to ignore the suggestions or override the decisions of the AI, an over-riding concern in UC2.

- Adaptability to the tempo and state of the team functioning, as in the controllers’ approach to using ISA in UC4.

4.2.2. AI Anthropomorphism and Emotional AI

5. Human Factors Requirements for Human-AI Systems

- It must capture the key Human Factors areas of concern with Human-AI systems.

- It must specify these requirements in ways that are answerable and justifiable via evidence.

- It must accommodate the various forms of AI that humans may need to interact with in safety-critical systems (note—this currently excludes LLMs) both now and in the medium future, including ML and Intelligent Agents or Assistants (EASA’s Categories 1A through to 3A).

- It must be capable of working at different stages of design maturity of the Human-AI system, from early concept through to deployment into operations.
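The second criterion (requirements that are answerable and justifiable via evidence) suggests a simple record per requirement. The sketch below is one possible shape, assuming the response options Y, N, N/A and TBD and the Justification column used in the assessment table of this paper; the names `Answer`, `RequirementAssessment`, `tally` and `missing_justification` are hypothetical, and the example justifications are abbreviated.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Answer(Enum):
    YES = "Y"
    NO = "N"
    NOT_APPLICABLE = "N/A"
    TO_BE_DETERMINED = "TBD"


@dataclass(frozen=True)
class RequirementAssessment:
    area: str                 # Human Factors area, e.g. "Human-Centred Design"
    question: str             # the requirement phrased as an answerable question
    answer: Answer
    justification: str = ""   # evidence supporting the answer


def tally(assessments):
    """Count how many requirements received each answer."""
    return Counter(a.answer for a in assessments)


def missing_justification(assessments):
    """Return applicable requirements whose answer has no supporting evidence."""
    return [a for a in assessments
            if a.answer is not Answer.NOT_APPLICABLE and not a.justification.strip()]


if __name__ == "__main__":
    rows = [
        RequirementAssessment(
            "Human-Centred Design",
            "Are end users involved in any hazard identification exercises?",
            Answer.YES,
            "HAZOPs undertaken with end users in all three use cases."),
        RequirementAssessment(
            "Roles and Responsibilities",
            "Does this level of autonomy change dynamically?",
            Answer.TO_BE_DETERMINED,
            "Trigger conditions differ per use case."),
    ]
    print(tally(rows))
    print(missing_justification(rows))
```

Keeping the justification next to the answer makes it easy to flag answers that still lack evidence as a design matures.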

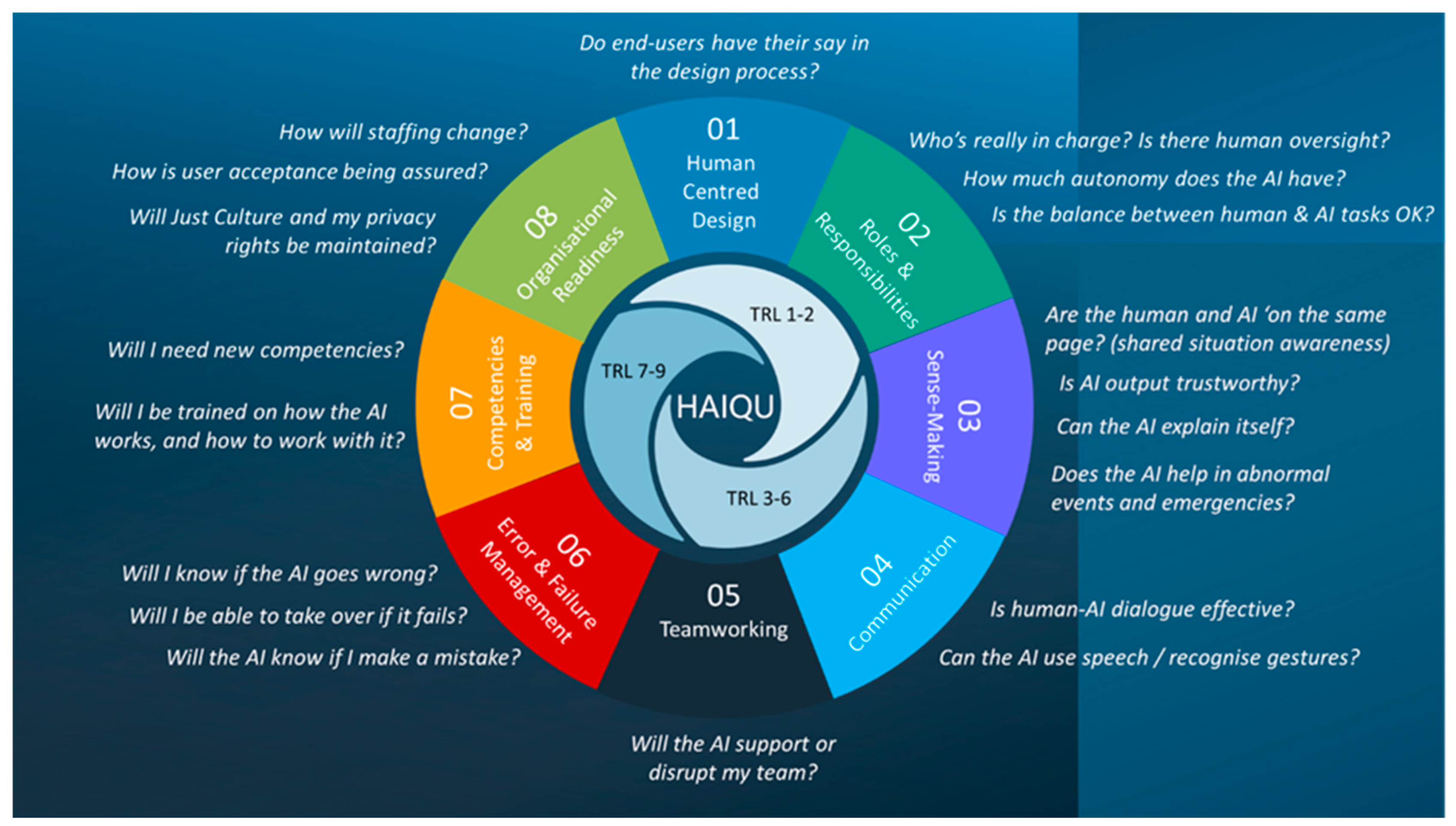

5.1. HAT Requirements and a Design Life-Cycle Framework

5.2. The Human Factors HAT Requirements Set Architecture

- Human-Centred Design—this is an over-arching Human Factors area, aimed at ensuring the HAT is developed with the human end-user in mind, seeking their involvement in every design stage.

- Roles and responsibilities—this area is crucial if the intent is to have a powerful productive human-AI partnership, and helps ensure the human retains both agency and ‘final authority’ of the HAT system’s output. It is also a reminder that only humans can have responsibility—an AI, no matter how sophisticated, is computer code. It also aims to ensure the end user still has a viable and rewarding role.

- Sense-Making—this is where shared situation awareness, operational explainability, and human-AI interaction sit, and as such has the largest number of requirements. Arguably, this area could be entitled (shared) situation awareness, but sense-making includes not only what is happening and going to happen, but why it is happening, and why the AI makes certain assessments and predictions.

- Communication—this area will no doubt evolve as HATs incorporate natural language processing (NLP), whether using pilot/ATCO ‘procedural’ phraseology or natural language.

- Teamworking—this is possibly the area in most urgent need of research for HAT, in terms of how such teamworking should function in the future. For now, the requirements are largely based on existing knowledge and practices.

- Errors and Failure Management—the requirements here focus on identification of AI ‘aberrant behaviour’ and the subsequent ability of human end users to detect, correct, or ‘step in’ to recover the system safely.

- Competencies and Training—these requirements are typically applied once the design is fully formalised, tested, and stable (TRL 7 onwards). The requirements for preparing end users to work with and manage AI-based systems will not be ‘business as usual’; new training approaches and practices will almost certainly be required (e.g., pilots and controllers who participated in UC1, 2 and 4 simulations stated they would want specialised training).

- Organisational Readiness—the final phase of integration into an operational system is critical if the system is to be accepted and used by its intended user population. In design integration, it is easy to fall at this last fence. Impacts on staffing levels and levels of pay, concerns of staff and unions, as well as ethical and well-being issues are key considerations at this stage to ensure a smooth HAT-system integration. This is therefore where socio-technical considerations come to the fore.

- Human-Centred Design (5)

- Roles and Responsibilities

  - Human and AI Autonomy (7)

  - Balance of Human and AI Tasks (13)

  - Human Oversight (10)

- Sense-Making

  - (a) Shared Situation Awareness (12)

  - (b) Trustworthy Information (12)

  - (c) Explainability (13)

  - (d) Abnormal Events, Degraded Modes and Emergencies (7)

- Communication

  - Human-AI Dialogue (10)

  - Speech and Gestures (11)

- Teamworking (11)

- Errors and Failure Management (13)

- Competencies and Training

  - New Competencies (9)

  - New Training Needs (9)

- Organisational Readiness

  - Staffing (8)

  - User Acceptance (9)

  - Ethics and Wellbeing (8)
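The counts in the list above can be tallied per area with a few lines of code. This is an illustrative sketch: the dictionary simply transcribes the bracketed numbers as printed, and the names `REQUIREMENT_COUNTS`, `area_totals` and `largest_area` are hypothetical.

```python
# Requirement counts per sub-area, transcribed from the bracketed numbers in the list above.
REQUIREMENT_COUNTS = {
    "Human-Centred Design": {"Human-Centred Design": 5},
    "Roles and Responsibilities": {
        "Human and AI Autonomy": 7,
        "Balance of Human and AI Tasks": 13,
        "Human Oversight": 10,
    },
    "Sense-Making": {
        "Shared Situation Awareness": 12,
        "Trustworthy Information": 12,
        "Explainability": 13,
        "Abnormal Events, Degraded Modes and Emergencies": 7,
    },
    "Communication": {"Human-AI Dialogue": 10, "Speech and Gestures": 11},
    "Teamworking": {"Teamworking": 11},
    "Errors and Failure Management": {"Errors and Failure Management": 13},
    "Competencies and Training": {"New Competencies": 9, "New Training Needs": 9},
    "Organisational Readiness": {
        "Staffing": 8,
        "User Acceptance": 9,
        "Ethics and Wellbeing": 8,
    },
}


def area_totals(counts):
    """Sum the sub-area counts for each top-level Human Factors area."""
    return {area: sum(sub.values()) for area, sub in counts.items()}


def largest_area(counts):
    """Return the area with the most requirements."""
    totals = area_totals(counts)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    for area, total in area_totals(REQUIREMENT_COUNTS).items():
        print(f"{area}: {total}")
    print("Largest area:", largest_area(REQUIREMENT_COUNTS))
```

On these figures Sense-Making is the largest area, consistent with the description of that area given earlier in this section.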

5.3. Detailed HAT Requirements

6. Application of Human Factors Requirements to Three HAT Prototypes

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Burkov, A. The Hundred-Page Machine Learning Book; Andriy Burkov: Quebec City, QC, Canada, 2019; ISBN 199957950X.

2. Morgan, G.; Grabowski, M. Human machine teaming in Mobile Miniaturized Aviation Logistics systems in safety-critical settings. J. Saf. Sustain. 2025, in press.

3. Dalmau-Codina, R.; Gawinowski, G. Learning with Confidence the Likelihood of Flight Diversion Due to Adverse Weather at Destination. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5615–5624.

4. European Commission. CORDIS Results Pack on AI in Air Traffic Management: A Thematic Collection of Innovative EU-Funded Research Results; European Commission: Luxembourg, 2022; Available online: https://www.sesarju.eu/node/4254 (accessed on 20 March 2025).

5. OpenAI. GPT-4 Technical Report. arXiv preprint arXiv:2303.08774. 2023. Available online: https://arxiv.org/abs/2303.08774 (accessed on 20 March 2025).

6. European Parliament. EU AI Act: First Regulation on Artificial Intelligence. 2023. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 20 March 2025).

7. Weinberg, J.; Goldhardt, J.; Patterson, S.; Kepros, J. Assessment of accuracy of an early artificial intelligence large language model at summarizing medical literature: ChatGPT 3.5 vs. ChatGPT 4.0. J. Med. Artif. Intell. 2024, 7, 33.

8. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, J.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2024, 43, 1–55.

9. Bjurling, O.; Müller, H.; Burgén, J.; Bouvet, C.J.; Berberian, B. Enabling Human-Autonomy Teaming in Aviation: A Framework to Address Human Factors in Digital Assistants Design. J. Phys. Conf. Ser. 2024, 2716, 012076.

10. Duchevet, A.; Dong-Bach, V.; Peyruqueou, V.; De-La-Hogue, T.; Garcia, J.; Causse, M.; Imbert, J.-P. FOCUS: An Intelligent Startle Management Assistant for Maximizing Pilot Resilience. In Proceedings of the ICCAS 2024, Toulouse, France, 16–17 May 2024.

11. SAFETEAM EU Project. 2023. Available online: https://safeteamproject.eu/1186 (accessed on 20 March 2025).

12. Duchevet, A.; Imbert, J.-P.; De La Hogue, T.; Ferreira, A.; Moens, L.; Colomer, A.; Cantero, J.; Bejarano, C.; Rodríguez Vázquez, A.L. HARVIS: A digital assistant based on cognitive computing for non-stabilized approaches in Single Pilot Operations. In Proceedings of the 34th Conference of the European Association for Aviation Psychology, Athens, Transportation Research Procedia, Gibraltar, UK, 26–30 September 2022; Volume 66, pp. 253–261.

13. Minaskan, N.; Alban-Dormoy, C.; Pagani, A.; Andre, J.-M.; Stricker, D. Human Intelligent Machine Teaming in Single Pilot Operation: A Case Study. In Augmented Cognition; Vol. 13310; Springer International Publishing: Cham, Switzerland, 2022; pp. 348–360.

14. Kirwan, B.; Venditti, R.; Giampaolo, N.; Villegas Sanchez, M. A Human Centric Design Approach for Future Human-AI Teams in Aviation. In Human Interactions and Emerging Technologies, Proceedings of the IHIET 2024, Venice, Italy, 26–28 August 2024; Ahram, T., Casarotto, L., Costa, P., Eds.; AHFE International: Orlando, FL, USA, 2024.

15. Billings, C.E. Human-Centred Aviation Automation: Principles and guidelines (Report No. NASA-TM-110381); National Aeronautics and Space Administration: Washington, DC, USA, 1996. Available online: https://ntrs.nasa.gov/citations/19960016374 (accessed on 20 March 2025).

16. Bergh, L.I.; Teigen, K.S. AI safety: A regulatory perspective. In Proceedings of the 2024 4th International Conference on Applied Artificial Intelligence (ICAPAI), Halden, Norway, 16 April 2024.

17. EASA. EASA Concept Paper: First Usable Guidance for level 1 & 2 Machine Learning Applications. February 2023. Available online: https://www.easa.europa.eu/en/newsroom-and-events/news/easa-artificial-intelligence-roadmap-20-published (accessed on 20 March 2025).

18. EASA. Artificial Intelligence Concept Paper Issue 2. Guidance for Level 1 & 2 Machine-Learning Applications. 2024. Available online: https://www.easa.europa.eu/en/document-library/general-publications/easa-artificial-intelligence-concept-paper-issue-2 (accessed on 20 March 2025).

19. Naikar, N.; Brady, A.; Moy, G.M.; Kwok, H.W. Designing human-AI systems for complex settings: Ideas from distributed, joint and self-organising perspectives of sociotechnical systems and cognitive work analysis. Ergonomics 2023, 66, 1669–1694.

20. Macey-Dare, R. How Soon is Now? Predicting the Expected Arrival Date of AGI- Artificial General Intelligence. 1204. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4496418 (accessed on 20 March 2025).

21. Lamb, H.; Levy, J.; Quigley, C. Simply Artificial Intelligence. In DK Simply Books; Penguin: London, UK, 2023.

22. Turing, A.M.; Copeland, B.J. The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, and Artificial Life Plus The Secrets of Enigma; Oxford University Press: Oxford, UK, 2004.

23. Turing, A.M. Computing machinery and intelligence. Mind 1950, 59, 433–460.

24. Dasgupta, S. It Began with Babbage: The Genesis of Computer Science; Oxford University Press: Oxford, UK, 2014; p. 22. ISBN 978-0-19-930943-6.

25. Mueller, J.P.; Massaron, L.; Diamond, S. Artificial Intelligence for Dummies, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2024.

26. Lighthill, J. Artificial Intelligence: A General Survey. In Artificial Intelligence—A Paper Symposium; UK Science Research Council: Swindon, UK, 1973; Available online: http://www.chilton-computing.org.uk/inf/literature/reports/lighthill_report/p001.htm (accessed on 20 March 2025).

27. Available online: https://www.perplexity.ai/page/a-historical-overview-of-ai-wi-A8daV1D9Qr2STQ6tgLEOtg (accessed on 20 March 2025).

28. DeCanio, S. Robots and Humans—Complements or substitutes? J. Macroecon. 2016, 49, 280–291.

29. Dafoe, A. AI Governance: A Research Agenda; Future of Humanity Institute: Oxford, UK, 2017; Available online: https://www.fhi.ox.ac.uk/wp-content/uploads/GovAI-Agenda.pdf (accessed on 20 March 2025).

30. Dubey, P.; Dubey, P.; Hitesh, G. Enhancing sentiment analysis through deep layer integration with long short-term memory networks. Int. J. Electr. Comput. Eng. 2025, 15, 949–957.

31. Luan, H.; Yang, K.; Hu, T.; Hu, J.; Liu, S.; Li, R.; He, J.; Yan, R.; Guo, X.; Qian, X.; et al. Review of deep learning-based pathological image classification: From task-specific models to foundation models. Future Gener. Comput. Syst. 2025, 164, 107578.

32. Elazab, A.; Wang, C.; Abdelaziz, M.; Zhang, J.; Gu, J.; Gorriz, J.M.; Zhang, Y.; Chang, C. Alzheimer’s disease diagnosis from single and multimodal data using machine and deep learning models: Achievements and future directions. Expert Syst. Appl. 2024, 255, 124780.

33. Lopes, N.M.; Aparicio, M.; Neves, F.T. Challenges and Prospects of Artificial Intelligence in Aviation: Bibliometric Study, Data Science and Management. J. Clean Prod. 2016, 112, 521–531.

34. O’Neill, T.; McNeese, N.J.; Barron, A.; Schelble, B.G. Human-autonomy teaming: A review and analysis of the empirical literature. Hum. Factors 2022, 64, 904–938.

35. Berretta, S.; Tausch, A.; Ontrup, G.; Gilles, B.; Peifer, C.; Kluge, A. Defining human-AI teaming the human-centered way: A scoping review and network analysis. Front. Artif. Intell. 2023, 6, 1250725.

36. Hicks, M.T.; Humphries, J.; Slater, J. ChatGPT is bullshit. Ethics Inf. Technol. 2024, 26, 38.

37. Kaliardos, W. Enough Fluff: Returning to Meaningful Perspectives on Automation; FAA, US Department of Transportation: Washington, DC, USA, 2023. Available online: https://rosap.ntl.bts.gov/view/dot/64829 (accessed on 20 March 2025).

38. FAA. Roadmap for Artificial Intelligence Safety Assurance. 2024. Available online: https://www.faa.gov/aircraft/air_cert/step/roadmap_for_AI_safety_assurance (accessed on 20 March 2025).

39. Kirwan, B. 2B or not 2B? The AI Challenge to Civil Aviation Human Factors. In Contemporary Ergonomics and Human Factors 2024; Golightly, D., Balfe, N., Charles, R., Eds.; Chartered Institute of Ergonomics & Human Factors: Kenilworth, UK, 2024; pp. 36–44. ISBN 978-1-9996527-6-0.

40. Kilner, A.; Pelchen-Medwed, R.; Soudain, G.; Labatut, M.; Denis, C. Exploring Cooperation and Collaboration in Human AI Teaming (HAT)—EASA AI Concept paper V2.0. In Proceedings of the 14th European Association for Aviation Psychology Conference EAAP 35, Thessaloniki, Greece, 8–11 October 2024.

41. Fitts, P.M. Human Engineering for an Effective Air-Navigation and Traffic-Control System; Ohio State University: Columbus, OH, USA, 1951; Available online: https://psycnet.apa.org/record/1952-01751-000 (accessed on 20 March 2025).

42. Chapanis, A. On the allocation of functions between men and machines. Occup. Psychol. 1965, 39, 1–11.

43. De Winter, J.C.F.; Hancock, P.A. Reflections on the 1951 Fitts list: Do humans believe now that machines surpass them? Paper presented at 6th International Conference on Applied Human Factors and Ergonomics (AHFE 2015) and the Affiliated Conferences, AHFE 2015. Procedia Manuf. 2015, 3, 5334–5341.

44. Helmreich, R.L.; Merritt, A.C.; Wilhelm, J.A. The Evolution of Crew Resource Management Training in Commercial Aviation. Int. J. Aviat. Psychol. 1999, 9, 19–32.

45. Available online: https://en.wikipedia.org/wiki/Crew_resource_management (accessed on 20 March 2025).

46. Available online: https://skybrary.aero/articles/team-resource-management-trm (accessed on 20 March 2025).

47. Available online: https://en.wikipedia.org/wiki/Maritime_resource_management (accessed on 20 March 2025).

48. Norman, D.A. The Design of Everyday Things, Revised and Expanded ed.; The MIT Press: Cambridge, MA, USA; London, UK, 2013; ISBN 978-0-262-52567-1.

49. Norman, D.A. User Centered System Design: New Perspectives on Human-Computer Interaction; CRC: Boca Raton, FL, USA, 1986; ISBN 978-0-89859-872-8.

50. Shneiderman, B.; Plaisant, C. Designing the User Interface: Strategies for Effective Human-Computer Interaction; Addison-Wesley: Boston, MA, USA, 2010; ISBN 9780321537355.

51. Shneiderman, B. Human-Centred AI; Oxford University Press: Oxford, UK, 2022.

52. Gunning, D.; Aha, D. DARPA’s Explainable Artificial Intelligence (XAI) Program. AI Mag. 2019, 40, 44–58.

53. Hollnagel, E.; Woods, D.D. Cognitive Systems Engineering: New wine in new bottles. Int. J. Man-Mach. Stud. 1983, 18, 583–600.

54. Hollnagel, E.; Woods, D.D. Joint Cognitive Systems: Foundations of Cognitive Systems Engineering; CRC Press: Boca Raton, FL, USA, 2005.

55. Hutchins, E. How a Cockpit Remembers Its Speeds. Cogn. Sci. 1995, 19, 265–288.

56. Bainbridge, L. Ironies of automation. Automatica 1983, 19, 775–779.

57. Endsley, M.R. Ironies of artificial intelligence. Ergonomics 2023, 66, 11.

58. Sheridan, T.B.; Verplank, W.L. Human and Computer Control of Undersea Teleoperators; Department of Mechanical Engineering, MIT: Cambridge, MA, USA, 1978.

59. Sheridan, T.B. Automation, authority and angst—Revisited. In Human Factors Society, Proceedings of the Human Factors Society 35th Annual Meeting, San Francisco, CA, USA, 2–6 September 1991; Human Factors & Ergonomics Society Press: New York, NY, USA, 1991; pp. 18–26.

60. Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A Model for Types and Levels of Human Interaction with Automation. IEEE Trans. Syst. Man Cybern.—Part A Syst. Hum. 2000, 30, 286–297.

61. Wickens, C.; Hollands, J.G.; Banbury, S.; Parasuraman, R. Engineering Psychology and Human Performance; Taylor and Francis: London, UK, 2012.

62. Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors 1995, 37, 32–64.

63. Moray, N. (Ed.) Mental workload—Its theory and measurement. In NATO Conference Series III on Human Factors, Greece, 1979; Springer: New York, NY, USA, 2013; ISBN 9781475708851.

64. Klein, G.; Moon, B.; Hoffman, R. Making Sense of Sensemaking 1: Alternative Perspectives. IEEE Intell. Syst. 2006, 21, 70–73.

65. Rasmussen, J. Skills, rules, knowledge; signals, signs, and symbols; and other distinctions in human performance models. IEEE Trans. Syst. Man Cybern. 1983, 13, 257–266.

66. Vicente, K.J.; Rasmussen, J. Ecological interface design: Theoretical foundations. IEEE Trans. Syst. Man Cybern. 1992, 22, 589–606.

67. Reason, J.T. Human Error; Cambridge University Press: Cambridge, UK, 1990.

68. Shappell, S.A.; Wiegmann, D.A. The Human Factors Analysis and Classification System—HFACS; DOT/FAA/AM-00/7; U.S. Department of Transportation: Washington, DC, USA, 2000.

69. Dillinger, T.; Kiriokos, N. NASA Office of Safety and Mission Assurance Human Factors Handbook: Procedural Guidance and Tools; NASA/SP-2019-220204; National Aeronautics and Space Administration (NASA): Washington, DC, USA, 2019.

70. Stroeve, S.; Kirwan, B.; Turan, O.; Kurt, R.E.; van Doorn, B.; Save, L.; Jonk, P.; Navas de Maya, B.; Kilner, A.; Verhoeven, R.; et al. SHIELD Human Factors Taxonomy and Database for Learning from Aviation and Maritime Safety Occurrences. Safety 2023, 9, 14.

71. IAEA. Safety Culture; Safety Series No. 75-INSAG-4; International Atomic Energy Agency: Vienna, Austria, 1991.

72. Kirwan, B.; Shorrock, S.T. A view from elsewhere: Safety culture in European air traffic management. In Patient Safety Culture; Waterson, P., Ed.; Ashgate: Aldershot, UK, 2015; pp. 349–370.

73. Kirwan, B. The Impact of Artificial Intelligence on Future Aviation Safety Culture. Future Transp. 2024, 4, 349–379.

74. Salas, E.; Sims, D.E.; Burke, C.S. Is There a “Big Five” in Teamwork? Small Group Res. 2005, 36, 555–599.

75. Parasuraman, R. Humans and Automation: Use, Misuse, Disuse, and Abuse. Hum. Factors 1997, 39, 230–253.

76. Parasuraman, R.; Manzey, D.H. Complacency and bias in human use of automation: An attentional integration. Hum. Factors 2012, 52, 3.

77. Tversky, A.; Kahneman, D. Judgment under Uncertainty: Heuristics and Biases. Science 1974, 185, 1124–1131.

78. Klayman, J. Varieties of Confirmation Bias. Psychol. Learn. Motiv. 1995, 32, 385–418.

79. Hawkins, F.H. Human Factors in Flight, 2nd ed.; Orlady, H.W., Ed.; Routledge: London, UK, 1993.

80. Leveson, N.G. Safety Analysis in Early Concept Development and Requirements Generation. In Paper Presented at the 28th Annual INCOSE International Symposium; Wiley: Hoboken, NJ, USA, 2018; Available online: https://hdl.handle.net/1721.1/126541 (accessed on 20 March 2025).

81. Kletz, T. HAZOP and HAZAN: Identifying and Assessing Process Industry Hazards, 4th ed.; Taylor and Francis: Oxfordshire, UK, 1999.

82. Single, J.J.; Schmidt, J.; Denecke, J. Computer-Aided Hazop: Ontologies and Ai for Hazard Identification and Propagation. Comput. Aided Chem. Eng. 2020, 48, 1783–1788.

83. Hollnagel, E. FRAM: The Functional Resonance Analysis Method. Modelling Complex Socio-Technical Systems; CRC Press: Boca Raton, FL, USA, 2012.

84. Hollnagel, E. A Tale of Two Safeties. Int. J. Nucl. Saf. Simul. 2013, 4, 1–9. Available online: https://www.erikhollnagel.com/A_tale_of_two_safeties.pdf (accessed on 20 March 2025).

85. Kumar, R.S.S.; Snover, J.; O’Brien, D.; Albert, K.; Viljoen, S. Failure Modes in Machine Learning; Microsoft Corporation & Berkman Klein Center for Internet and Society at Harvard University: Cambridge, MA, USA, 2019.

86. Available online: https://skybrary.aero/articles/just-culture (accessed on 20 March 2025).

87. Franchina, F. Artificial Intelligence and the Just Culture Principle. Hindsight 35, November, pp. 39–42. EUROCONTROL, rue de la Fusée 96, B-1130, Brussels. 2023. Available online: https://skybrary.aero/articles/hindsight-35 (accessed on 20 March 2025).

88. Baumgartner, M.; Malakis, S. Just Culture and Artificial Intelligence: Do We Need to Expand the Just Culture Playbook? Hindsight 35, November, pp. 43–45. EUROCONTROL, rue de la Fusée 96, B-1130, Brussels. 2023. Available online: https://skybrary.aero/articles/hindsight-35 (accessed on 20 March 2025).

89. Dubey, A.; Kumar, A.; Jain, S.; Arora, V.; Puttaveerana, A. HACO: A Framework for Developing Human-AI Teaming. In Proceedings of the 13th Innovations in Software Engineering Conference on Formerly Known as India Software Engineering Conference, Kurukshetra, India, 20–22 February 2025; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–9.

90. Rochlin, G.I. Reliable Organizations: Present Research and Future Directions. J. Contingencies Crisis Manag. 1996, 4, 55–59, ISSN 1468-5973.

91. Schecter, A.; Hohenstein, J.; Larson, L.; Harris, A.; Hou, T.; Lee, W.; Lauharatanahirun, N.; DeChurch, L.; Contractor, N.; Jung, M. Vero: An accessible method for studying human-AI teamwork. Comput. Hum. Behav. 2023, 141, 107606.

92. Zhang, G.; Chong, L.; Kotovsky, K.; Cagan, J. Trust in an AI versus a Human teammate: The effects of teammate identity and performance on Human-AI cooperation. Comput. Hum. Behav. 2023, 139, 107536.

93. Ho, M.-T.; Mantello, P. An analytical framework for studying attitude towards emotional AI: The three-pronged approach. MethodsX 2023, 10, 102149.

94. Lees, M.J.; Johnstone, M.C. Implementing safety features of Industry 4.0 without compromising safety culture. Int. Fed. Autom. Control (IFAC) Pap. Online 2021, 54, 680–685.

95. HAIKU EU Project (2023-25). Available online: https://cordis.europa.eu/project/id/101075332 (accessed on 20 March 2025).

| New HAT Requirement | HUMAN FACTORS AREA | Origin HF Area |
|---|---|---|
| | HUMAN-CENTRED DESIGN | Joint Cognitive Systems / Cognitive Systems Engineering / Human-Centred Automation (HCA) |
| | | SHELL, STAMP, HAZOP & FRAM |
| | ROLES and RESPONSIBILITIES | Levels of automation / adaptive automation; HCA; HCD |
| | | Situation Awareness; HCA; Ironies of Automation/AI |
| | | Joint Cognitive Systems / Cognitive Systems Engineering / Situation Awareness; HACO: goal awareness and predictability |
| | | HCA; Fitts List; HACO goal awareness |
| | | HCA, Complex Systems, SA, Sense-Making, Fitts List; HACO goal alignment |
| | SENSE-MAKING: Shared Situation Awareness | Sense-Making; JCS, HCD, HCA; Situation Awareness (SA); Ecological Interface Design; HACO |
| | | Complex Systems (KBB); SA (current/predictive); Ironies of Automation/AI |
| | | HCD, HCA; SA & SM |
| | Trustworthy Information | JCS/CSE, HCA; HCD |
| | | Sense-Making, HCA; Bias and Complacency |
| | | JCS/CSE, UCD, HCA, HACO; Sense-Making; Complex Systems/KBB |
| | | Ironies of Automation/AI, HCA |
| | | Sense-Making, JCS/CSE; Situation Awareness, HCA |
| | | SA and SM; Bias & Complacency |
| | Explainability | Sense-Making |
| | | HRO theory: sensitivity to operations, JCS, Sense-Making, SA |
| | | Complex Systems/KBB; HRO (reluctance to simplify explanations); Big 5 (shared mental models); Sense-Making, SA |
| | | Sense-Making; Big 5 shared mental model |
| | | HCD/HCA; Ironies of Automation/AI |
| | Abnormal Events and Emergencies | Mental Workload; Ironies of Automation/AI |
| | | Complex Systems/KBB; Ironies of Automation/AI |
| | | Safety Culture |
| | COMMUNICATIONS | Big 5 (closed-loop comms; shared mental models); HCA, HCD, UCD; HACO; Emotional Mimicry; Non-Personification of AI |
| | TEAMWORK | CRM/TRM/BRM; The Big 5; SA and Sense-Making; Complex Systems/KBB |
| | ERROR and FAILURE MANAGEMENT | HAZOP/STPA/FRAM; Bias and Complacency; SA and SM; Complex Systems/KBB; JCS/CSE; ASRS, HFACS, SHIELD; Swiss Cheese; Accident Aetiology |

| Human Factors Requirement Question | Y/N N/A TBD | Justification |

|---|---|---|

| Human-Centred Design | ||

| Are licensed end users participating in design exercises such as focus groups, scenario-based testing, prototyping, and simulation (e.g., ranging from desk-top simulation to full scope simulation)? | Y | For UC1, ENAC pilots and commercial airline pilots are involved in Val1 (five pilots) and Val2 (12 pilots) real-time simulation exercises in a static A320 cockpit simulator. For UC2, 12 pilots participated in simulations. For UC4 a number of controllers have participated in design activities and real-time simulations in Skyway’s Tower simulator in Madrid. |

| Are end user opinions helping to inform and validate the design concept, as part of an integrated project team including product owner, data scientists, safety, security, Human Factors, and operational expertise? | Y | Pilots are involved in UC1, and the product owner is a pilot. Additionally, there are Data Science and Human Factors experts in the design team. Security is outside the scope of HAIKU, and UC1 is TRL4-5. For UC2, a Thales test pilot is involved with the design team. For UC4, the product owner is a Tower air traffic supervisor. |

| Are end users involved in any hazard identification exercises (e.g., HAZOP, STPA, FRAM etc.)? | Y | All three use cases have undertaken HAZOPs in which end users (pilots and controllers) who had experienced the AI in simulations took part. |

| Roles and Responsibilities | | |

| Are there any new roles, or suppressed roles? | Y | For UC1 this is a Single Pilot Operation (SPO) concept study, so one flight crew member is no longer in the cockpit. There are no staffing or role impacts on UC2 or UC4. |

| What is the level of autonomy—is the human still in charge? | Y | The end user remains in charge in all three use cases. |

| Does this level of autonomy change dynamically? Who/what determines when it changes? | TBD | In UC1 the IA is triggered by detection of pilot startle; the pilot can activate/deactivate the IA at any time. In UC2 the IA is triggered by certain circumstances, and the pilots can ignore it if they choose to do so. For UC4 the IA suggests changes when required, and if the ATCO does nothing the change of landing/take-off sequence will be automatically implemented. The ATCO can also switch the IA on and off. |

| Are the new/residual human roles consistent, and seen as meaningful by the intended users? | Y | Yes. For pilots in UC1 the AI is like a clever flight director or attention director, but the pilot remains in control. For UC2 the IA’s advice on three airports is very quick, with the supervised training still being fine-tuned to ensure the recommendations fit with pilots’ expertise and preferences. For UC4 the advice is seen as useful, giving controllers forewarning of arrival/departure pressures. |

| Sense-Making | | |

| Is the interaction medium appropriate for the task, e.g., keyboard, touchscreen, voice, and even gesture recognition? | Y/TBD | The colour-coding used for startle and SA support was appreciated in Val1, although red was seen as too strong. Supporting displays on the Electronic Flight Bag (EFB) were not used due to the emergency nature of the event. Voice was suggested to back up the visual direction of SA (this has since been implemented). These changes have been tested in the Val2 experiment (still under analysis). For UC2 the touchscreen display is seen as appropriate, and for UC4 display elements have been integrated into the controllers’ normal radar displays. |

| Does the AI build its own situation representation? | Y | Yes. For UC1 it is built from the aircraft data bus and from the pilot’s attentional behaviour (eye-tracking); context also comes from the SOPs (Standard Operating Procedures) for the events. For UC2 extensive details of all European airports, dynamic weather information, aircraft characteristics, passenger manifests, remaining fuel, altitude, etc., are used to compute optimum alternates. For UC4 it computes times to land and separation distances for a specified single-runway airport (Alicante in Spain), using a database of tens of thousands of landings in varied conditions to make its predictions accurate. |

| Is the AI’s situation representation made accessible to the end user, via visualisation and/or dialogue? | Y | For UC1, the EFB (electronic flight bag) to the captain’s left summarises the AI’s situation assessment. For UC2 the results of the three airports selected are shown on the moving map display and in an icon-based display, with a further explainability layer accessible to the flight crew. For UC4 only the output is shown, with a single line of explainability (usually, this is enough), due to the short timescale for accepting or rejecting the advice. |

| Does the AI-human interface reinforce the end user’s situation awareness, so that human and AI can remain ‘on the same page’? | Y | Pilots in UC1 felt it helped their SA and speed of regaining a situational picture. In UC2 the icon display and explainability layer unpack the AI’s computation, showing which factors were prioritized. This helps when the pilots are unfamiliar with the airports available. For UC4 the display is clear and sharpens the controllers’ own SA and ‘look-ahead’ time (Level 3 SA). |

| Can the human modify the AI’s parameters to explore alternative courses of action? | N/TBD | In UC1, no. The pilot can follow an alternative course of action, but does not ‘interact’ with the AI about it, as it is an emergency. In UC2 the flight crew can modify the goal priorities of the IA. In UC4 the ATCOs cannot modify the parameters. |

| Is at least some operational explainability possible, rather than the AI being a ‘black box’? | Y | In UC1, explainability is via the EFB. However, due to the very short response times in a loss-of-control-in-flight scenario, pilots had little time for explainability in the two simulations. This could differ in a scenario where the event was less clear-cut, e.g., electronics failures, bus-bar failures, automation malfunction, etc. For UC2, there is a high degree of explainability. In UC4, the explainability needs are basic (aircraft on approach coming in too fast/too slow, etc.) and the explanation provided is deemed sufficient. |

| Does the AI possess the ability to detect human errors or misjudgement and notify them or directly correct them? | Y | In UC1, the AI is intended to detect temporary performance decrement due to startle, and to guide the pilot, but does not go as far as correcting his/her action. However, the dynamic highlighting of key instruments, along with callouts (e.g., “vertical speed!”) could be considered a form of error correction. UC2 has the potential to aid error detection, e.g., failing to consider one of the variables in airport selection, since all the key parameters are used and displayed by the IA. UC4 has the potential to correct errors of judgment and memory failures/omissions or vigilance failures, or at the least alert controllers to something they have overlooked or misjudged. |

| Does the level of human workload enable the human to remain proactive rather than reactive, except for short periods? | Y | For the types of sudden scenarios in UC1, the pilot is in reactive mode. The answer ‘yes’ is given as it is a short period, and the aim is to reduce cognitive stress, giving them more ‘headspace’ to deal with the event. For UC2, it is a very clear ‘yes’, since it will save them at least 10 min and considerable work for the PNF (pilot not flying/first officer). For UC4, the ATCOs suggested its primary benefit may be when workload increases. |

| Can the human detect errors by the AI and intercede accordingly? | Y | In all three simulations, pilots noticed if something was incorrect, usually due to an error in the IA’s database. Such errors should largely be eradicated by TRL 9. No edge cases, hallucinations, or alignment errors have arisen so far. |

| Does the human trust the AI, but not over-trust it? Is the human taught how to recognise AI malfunction or bad judgement? | Y | Pilots and controllers did not over-trust the IAs, knowing they were prototypes. They were not taught how to recognize aberrant IA behaviour. A general comment, though, from many end users, is that if such tools are to be implemented in the cockpit or ATC tower, they must be trustworthy and highly reliable; one serious mistake would irrevocably break trust and lead to non-use of the IA. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kirwan, B. Human Factors Requirements for Human-AI Teaming in Aviation. Future Transp. 2025, 5, 42. https://doi.org/10.3390/futuretransp5020042
