Evaluation of the Omni-Secure Firewall System in a Private Cloud Environment

Abstract

1. Introduction

2. Literature Review

2.1. Cloud Security

- Sensitive Data Protection in Healthcare: Protecting sensitive data is especially important in the healthcare sector. Ahmad et al. [10] proposed a secure architecture designed for healthcare applications in the cloud. Their framework addresses data security, mobility, scalability, low latency, and real-time processing, reflecting the critical need for secure healthcare-data management in cloud environments.

- Threats and Defense Strategies in Cloud Computing: Hong et al. [7] conducted a systematic survey of threats and defense strategies in cloud computing. By categorizing threats and outlining defense mechanisms, their study maps the evolving threat landscape within cloud environments and underscores the importance of proactive security measures in today’s cloud-based systems.

- Four-Step Security Model for Cloud Data: Adee and Mouratidis [11] introduced a four-step security model for securing cloud data, using a mix of cryptography and steganography techniques. Their model offers a robust security approach, acknowledging the critical role that cryptographic methodologies play in securing data stored and processed in the cloud.

- Integration of Quantum Key Distribution with Cloud Computing: Li et al. [8] explored the integration of quantum key distribution (QKD) with cloud computing, emphasizing its potential to enhance the security of smart grid networks. As cloud services expand, their work highlights the opportunities and challenges presented by emerging quantum technologies.

- Continuous Growth in Cloud Computing: Wang et al. [12] discuss the continuing proliferation of cloud services and applications and stress the critical need for robust security measures across various domains.

2.1.1. Firewalls

- Best Practices for Securing Healthcare Environments: Anwar et al. [13] conducted a review of best practices for securing healthcare environments. Their study not only focuses on the importance of firewall systems but also suggests detailed security policies specific to the healthcare domain.

- Multi-Layered Firewall Model for DDoS Protection: The multi-layered firewall model presented by Pandeeswari & Kumar [14] adds an extra layer of defense against distributed denial of service (DDoS) attacks. This approach is particularly pertinent in cloud environments, where the risk of DDoS attacks is a constant concern.

- Dynamic Application-Aware Firewalls in SDNs: Alghofaili et al. [15] emphasize the significance of dynamic application-aware firewalls in software-defined networks (SDNs). With network virtualization in view, their study highlights how firewall systems must adapt to keep evolving network architectures secure.

2.1.2. Integration of Machine Learning with Firewalls

- Markov and Semi-Markov Models for Cloud Security: Ref. [17] proposes a method for assessing cloud availability and security, offering a new perspective on understanding and enhancing security in cloud environments.

- Secure Authentication Scheme for E-Healthcare Cloud Systems: Ref. [18] presents a secure authentication scheme tailored to e-healthcare cloud systems, acknowledging the importance of secure authentication mechanisms for emerging telemedicine platforms and digital health records.

- Multi-Layered Security Designs for Cloud-Based Applications: Ref. [19] evaluates multi-layered security designs for cloud-based web applications, emphasizing the multifaceted nature of security in cloud environments through a case study of a human-resource-management system.

- Performance Modeling for Firewalls and VPNs: Ref. [20] highlights performance modeling as a crucial approach to understanding firewall efficiency. This work proposes optimized algorithms for traffic analysis, supporting the creation of stronger firewall policies. Ref. [9] emphasizes the vital role of VPNs in enhancing cloud security and the quality of data transmission.

2.1.3. Anomaly-Based Network-Intrusion Detection for IoT Attacks Using Deep Learning

2.1.4. Cyber Threat Intelligence in Cloud Environments

2.1.5. Machine Learning and Deep Learning for Cloud Security

2.1.6. APT Detection and Mitigation in Cloud Environments

2.2. Key Findings and Future Directions

| Key Findings and Contributions | Gaps and Limitations | Solutions by the Omni-Secure Firewall |
|---|---|---|
| Proposal of a secure architecture for healthcare applications in the cloud, emphasizing mobility, scalability, and low latency [10]. | Lack of integrated security frameworks | An integrated architecture securing the entire private cloud fabric |
| Exploration of the integration of quantum key distribution (QKD) with cloud computing for enhanced smart grid network security [8]. | Limited adoption of machine learning and AI | Advanced machine learning models for adaptive threat detection |
| Discussion of the growth in cloud computing and the imperative need for robust security measures [12]. | Lack of automation in threat response | Unified policy management and automation |
| Presentation of a multi-layered firewall model to counter distributed denial of service (DDoS) attacks [14]. | Insufficient incorporation of high availability | Resilience-focused availability design |

3. Methodology

3.1. Research Design

3.2. Data Collection

3.2.1. Network Logs

- Key Variables: timestamp, source IP, destination IP, protocol, source port, destination port, bytes sent, and bytes received.

3.2.2. Web Access Logs

- Key Variables: timestamp, user IP, URL, HTTP status code, and request method.

3.2.3. Firewall Logs

- Key Variables: timestamp, source IP, destination IP, and action.

3.2.4. Syslog Logs

- Key Variables: timestamp, device IP, facility, severity, and message.

3.2.5. Security Event Logs

- Key Variables: timestamp, source IP, destination IP, protocol, and security event.

3.3. Data Preprocessing

3.3.1. Data Cleaning

- Handling Missing Data: Missing data in logs was handled using listwise deletion.

- Duplicate Entry Removal: Duplicate entries were identified and removed to ensure data integrity.
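The two cleaning steps can be illustrated with a minimal Python sketch. The field names follow the firewall-log variables listed in Section 3.2.3; the function name `clean_logs`, the sample records, and the choice to treat records with identical required fields as duplicates are assumptions made for illustration, not details given in the study.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]

# Assumed field names, mirroring the firewall-log key variables.
FIREWALL_FIELDS = ["timestamp", "source_ip", "destination_ip", "action"]


def clean_logs(records: List[Record], required: List[str]) -> List[Record]:
    """Apply listwise deletion, then drop duplicates (first occurrence kept)."""
    seen = set()
    cleaned = []
    for rec in records:
        # Listwise deletion: discard the whole record if any required field is missing.
        if any(rec.get(name) in (None, "") for name in required):
            continue
        # Duplicate removal: identical values in all required fields count as a duplicate.
        key = tuple(rec[name] for name in required)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned


# Hypothetical sample: one complete record, its duplicate, one incomplete record,
# and a second complete record.
sample = [
    {"timestamp": "2023-11-01T10:00:00", "source_ip": "192.0.2.10",
     "destination_ip": "198.51.100.5", "action": "allow"},
    {"timestamp": "2023-11-01T10:00:00", "source_ip": "192.0.2.10",
     "destination_ip": "198.51.100.5", "action": "allow"},
    {"timestamp": "2023-11-01T10:00:02", "source_ip": None,
     "destination_ip": "198.51.100.5", "action": "deny"},
    {"timestamp": "2023-11-01T10:00:03", "source_ip": "192.0.2.11",
     "destination_ip": "198.51.100.5", "action": "deny"},
]
cleaned_sample = clean_logs(sample, FIREWALL_FIELDS)
```

Listwise deletion is simple but discards every record with a gap, so the share of dropped records is worth reporting alongside the cleaned data.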

3.3.2. Data Transformation

- Normalization: Numerical variables such as packet size were normalized to a common scale for consistency.

- Encoding of Categorical Variables: Categorical variables such as log types and protocols were one-hot encoded: each category is represented as a binary vector in which every element is zero except the one at the index corresponding to the category, which is set to one.

- Anonymization: Sensitive information, such as IP addresses, was anonymized to protect user privacy.
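A short Python sketch of the three transformations follows. The study does not state which normalization or anonymization method was used, so min-max scaling and a keyed hash (HMAC-SHA256) are assumptions chosen for illustration; the function names and the sample key are likewise hypothetical.

```python
import hashlib
import hmac
from typing import List


def min_max_normalize(values: List[float]) -> List[float]:
    """Scale values to [0, 1]; min-max scaling is an assumed choice."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values equal: map everything to 0.0 to avoid division by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(value: str, categories: List[str]) -> List[int]:
    """Binary vector with a single 1 at the index of the matching category."""
    return [1 if value == category else 0 for category in categories]


def anonymize_ip(ip: str, key: bytes) -> str:
    """Replace an IP address with a keyed-hash pseudonym (assumed method).

    The same IP always maps to the same token, so flows can still be
    correlated, but the address is not recoverable without the key.
    """
    return hmac.new(key, ip.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


packet_sizes = min_max_normalize([64.0, 512.0, 1500.0])
protocol_vector = one_hot("UDP", ["TCP", "UDP", "ICMP"])
token = anonymize_ip("192.0.2.10", key=b"demo-key")  # demo key, not for real use
```

A keyed hash keeps pseudonyms consistent across log types, which matters when network, firewall, and security event logs are joined on IP address.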

3.3.3. Feature Engineering

- URL Extraction: From web access logs, domain names were extracted from URLs for further analysis.
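Domain extraction of this kind can be sketched with Python's standard `urllib.parse` module. The helper name `extract_domain` and the example URLs are hypothetical, and the sketch assumes that logged URLs are absolute; path-only entries yield an empty string.

```python
from urllib.parse import urlsplit


def extract_domain(url: str) -> str:
    """Return the lower-cased host part of an absolute URL, or "" if absent."""
    host = urlsplit(url).hostname  # strips port and credentials, lower-cases the host
    return host or ""


domain = extract_domain("https://Shop.Example.com:8443/cart?id=1")
```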

3.4. Ethical Considerations

3.4.1. Protection of User Privacy

- Data Anonymization: During the log analysis and threat-detection processes, any collected data related to network activities should undergo anonymization. Personally identifiable information (PII) should be stripped or encrypted, preventing the identification of specific users involved in network traffic.

- Limited Data Collection: The framework adheres to the principle of limited data collection. Only data necessary for effective threat detection and firewall rule management should be collected. Avoiding the gathering of excessive, irrelevant information ensures that the framework focuses solely on elements directly relevant to the study.

- Secure Storage and Handling: All components, including the threat detection API, the firewall API, and the availability API, implement secure storage measures. Collected data are encrypted, and access controls must be in place to prevent unauthorized access. This requirement applies to both real-time data processing and the storage of historical data for analysis.

- Data Retention Policies: Clear data retention policies need to be established, especially within the threat detection API and the firewall API. These policies dictate the duration for which data are retained for analysis. Once data are no longer needed for threat detection or rule management, they are deleted or anonymized, minimizing the risk of potential misuse.

3.4.2. Informed Consent and Responsible Tool Use

- Voluntary Participation: Participation in vulnerability testing is entirely voluntary. In the experimental setup, it should be explicitly stated that participants, including system owners or administrators, have the right to withdraw from the study at any point without facing negative consequences.

- Legal and Authorized Access: Ensure that the threat detection algorithm and related tools operate within legal and authorized parameters. Unauthorized access to systems for testing can lead to legal consequences.

- Disclosure of Findings: If any vulnerabilities are discovered during threat detection, notify the affected parties or system owners promptly, allowing them an opportunity to address the issues before public disclosure. This procedure ensures responsible use of the tools and mitigates potential harm.

- Avoiding Harm: Take precautions within the threat detection API to avoid causing harm to systems, networks, or individuals during vulnerability testing. Implement safeguards to prevent unintended damage, aligning with the principle of avoiding harm during the testing process.

- Continuous Monitoring and Review: Regularly review and update ethical guidelines within the experimental setup based on emerging standards, legal requirements, and advancements in technology. Ethical considerations should be an ongoing part of the research process, ensuring that the framework adapts to evolving ethical standards.

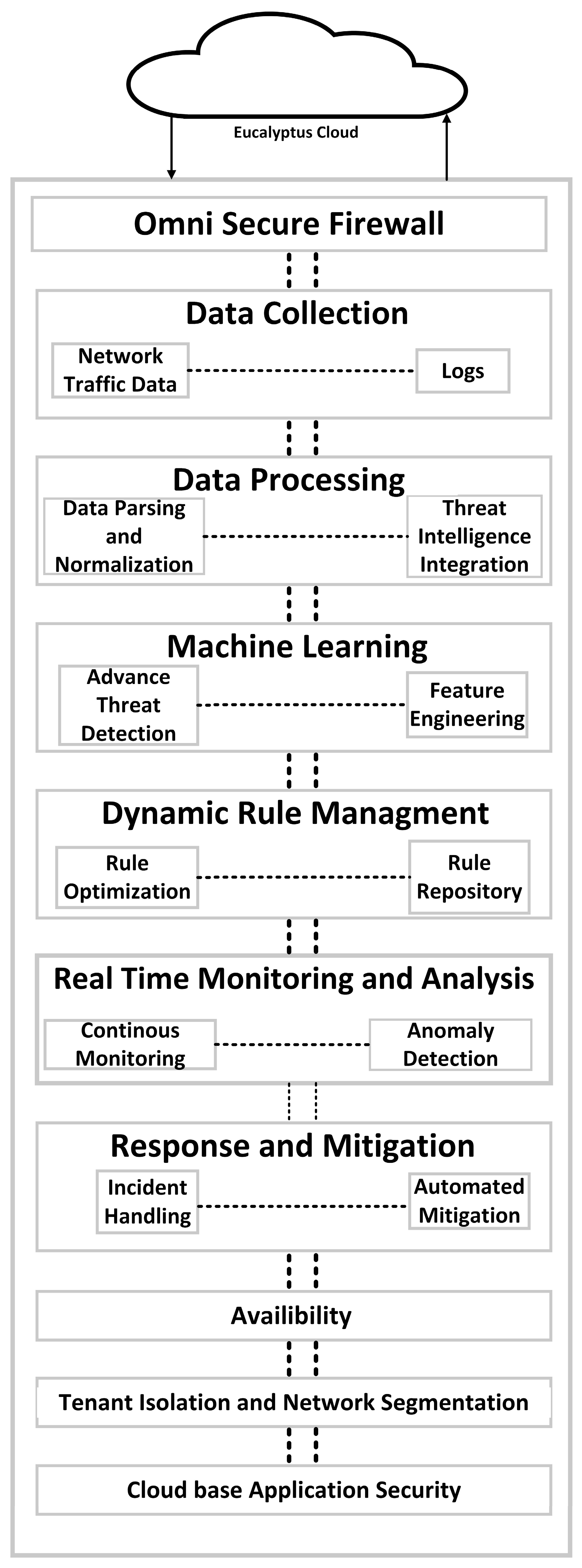

4. Omni-Secure Firewall Framework

4.1. Proposed Framework

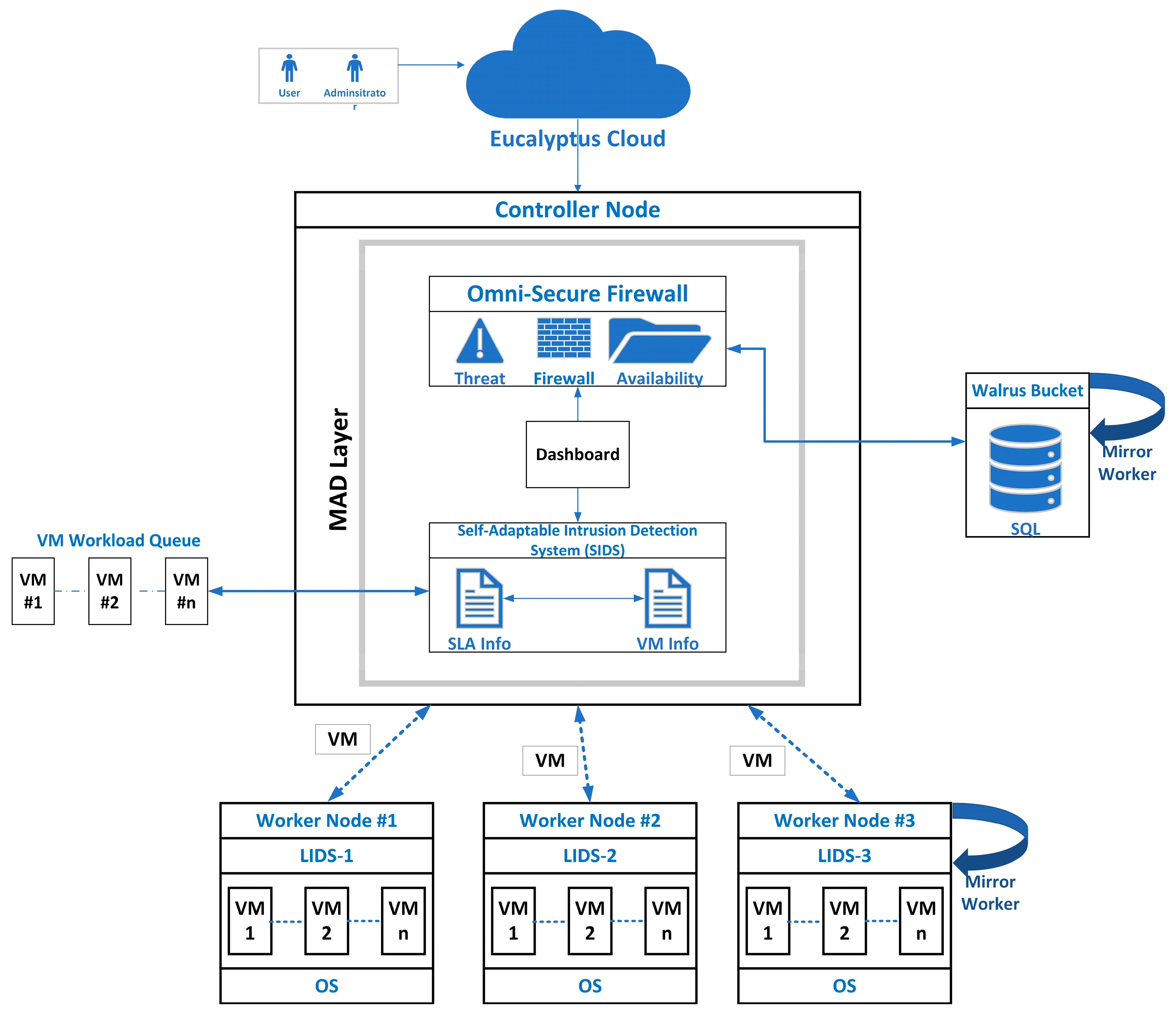

4.1.1. IaaS Cloud Environment

4.1.2. Monitoring through SLA and API

4.2. Modular API Development

- Threat detection API: Analyzes network logs to identify suspicious patterns. It uses a signature-based approach to detect known attacks and predefined threat patterns to flag anomalies in network traffic.

- Firewall API: Categorizes and prioritizes incoming network traffic and provides dynamic rule management capabilities for optimizing firewall rules.

- Availability API: Monitors critical network resources for uptime optimization and simulates high-stress scenarios to actively reduce network downtime.
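As an illustration of the signature-based approach attributed to the threat detection API, the following Python sketch matches request payloads against a small set of regular-expression signatures. The signatures, their names, and the function `match_signatures` are hypothetical and deliberately simplistic; they are not the signature set used in the Omni-Secure Firewall System.

```python
import re
from typing import Dict, List, Pattern

# Hypothetical signatures for two of the attack classes in the evaluation.
SIGNATURES: Dict[str, Pattern[str]] = {
    "sql_injection": re.compile(
        r"\bunion\b.+\bselect\b|'\s*or\s+'?1'?\s*=\s*'?1", re.IGNORECASE
    ),
    "xss": re.compile(r"<\s*script\b|javascript:", re.IGNORECASE),
}


def match_signatures(payload: str) -> List[str]:
    """Return the names of all signatures found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern.search(payload)]


hits = match_signatures("id=1 UNION SELECT password FROM users")
```

Pattern matching of this kind only catches attacks whose signatures are already known, which is why the framework pairs it with rule adaptation in the firewall API.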

4.3. Framework Design

Design Principles

- Modularity: The adoption of a modular structure is a cornerstone of the Omni-Secure Firewall System, enhancing flexibility and scalability. Each module functions independently, allowing for seamless updates or additions without disruption to the entire system. This design principle ensures that the firewall can be tailored to specific organizational needs and that new features can be incorporated with minimal impact on existing functionalities. Modularity simplifies maintenance, troubleshooting, and future expansions, making the framework adaptable to evolving security requirements.

- Adaptability: The Omni-Secure Firewall System is designed for adaptability, responding dynamically to changing network conditions. The dynamic rule-management capability enables the firewall to adjust its rule set in real time based on emerging threats or alterations in network behavior. Real-time threat-response mechanisms ensure swift reactions to potential security incidents, minimizing response times and reducing the need for manual intervention. This adaptability is crucial in addressing the evolving nature of cyber threats, providing a proactive defense mechanism that evolves with the network environment.

- Collaborative Synergy: Seamless collaboration among components forms the backbone of the framework, significantly enhancing overall network security and performance. The collaborative synergy ensures that threat intelligence gathered by the threat detection API informs rule adjustments in the firewall API. The availability API, in turn, is informed about potential stress scenarios identified by both the threat detection and firewall APIs. This cohesive collaboration optimizes the response mechanism, creating a unified defense strategy that surpasses the sum of its parts. The collaborative approach enhances the system’s ability to detect, respond, and adapt collectively, thereby fortifying network security.

4.4. Architecture

4.5. Implementation

4.5.1. Threat-Detection Algorithm

- Start Network: The system initiates the network components.

- Check Network Connectivity: The system ensures that the network is operational before proceeding.

- Initialize Firewall Rule: The firewall rule (R) is set to allow FTP and HTTP traffic.

- Receive the Packet: The system receives a network packet for inspection.

- Network Self-Test: The MAC address (mac) is retrieved from the packet header (H) for inspection.

- Check MAC Address: If the MAC address is all zeros, indicating an invalid address, the packet is dropped.

- Inspect State Table: The state table (ST) is checked for existing traffic-flow records.

- Check Existing Flow: If the packet matches an existing flow in the state table, it is sent to the server (Sr).

- No Existing Flow: If the state table on the network device contains no matching flow, the packet is matched against the firewall rule table (RT).

- Apply Firewall Filtering: If the rule allows the packet, the packet is sent to the state table (ST) for further inspection or tracking.

- Drop Packet: If the packet does not match any rule or is not allowed, the packet is dropped.

- End
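The steps above can be sketched as a small stateful packet filter in Python. The class and field names are hypothetical, the FTP/HTTP rule is assumed to correspond to TCP destination ports 21 and 80, and an allowed packet is assumed to be both recorded in the state table and forwarded; the text leaves that last point open.

```python
from dataclasses import dataclass
from typing import Set, Tuple

ZERO_MAC = "00:00:00:00:00:00"
# Assumption: rule R (allow FTP and HTTP) expressed as TCP destination ports.
RULE_TABLE = {("TCP", 21), ("TCP", 80)}

FlowKey = Tuple[str, int, str, int, str]


@dataclass(frozen=True)
class Packet:
    src_mac: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str

    def flow_key(self) -> FlowKey:
        return (self.src_ip, self.src_port, self.dst_ip, self.dst_port, self.protocol)


class StatefulFilter:
    def __init__(self) -> None:
        self.state_table: Set[FlowKey] = set()  # ST: flows already admitted

    def process(self, pkt: Packet) -> str:
        """Return "forward" or "drop" for one packet."""
        # Check MAC address: an all-zero MAC is treated as invalid.
        if pkt.src_mac == ZERO_MAC:
            return "drop"
        # Check existing flow: known flows go straight to the server.
        if pkt.flow_key() in self.state_table:
            return "forward"
        # No existing flow: consult the rule table and track allowed flows.
        if (pkt.protocol, pkt.dst_port) in RULE_TABLE:
            self.state_table.add(pkt.flow_key())
            return "forward"
        # No matching rule: drop.
        return "drop"


fw = StatefulFilter()
http = Packet("aa:bb:cc:dd:ee:01", "10.0.0.5", 40000, "10.0.1.10", 80, "TCP")
ssh = Packet("aa:bb:cc:dd:ee:02", "10.0.0.6", 40001, "10.0.1.10", 22, "TCP")
spoofed = Packet(ZERO_MAC, "10.0.0.7", 40002, "10.0.1.10", 80, "TCP")
decisions = [fw.process(p) for p in (http, http, ssh, spoofed)]
```

The second HTTP packet is forwarded from the state table without a rule lookup, which is the main saving of the stateful design.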

4.5.2. Firewall Rule Management Algorithm

- Start Network: Initialization of network components.

- Check Network Connectivity: Ensuring network operability.

- Initialize Firewall Rules: Definition and initialization of firewall rules based on security policies.

- Receive Packet: Receipt of a network packet by the system for analysis.

- Inspect Network Traffic: Analysis of the incoming network traffic using predefined rules.

- Dynamic Rule Optimization: Dynamic optimization of firewall rules based on network conditions.

- Real-time Adaptation: Adaptation of the firewall rules in real time based on detected threats.

- End
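The text does not specify how rules are optimized or adapted, so the following Python sketch shows only one plausible reading: rules are reordered by hit count so that frequently matched rules are evaluated first, and a deny rule is inserted when the threat detection API flags a source address. All names are hypothetical. Deny rules are kept ahead of allow rules, so reordering never changes a decision.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    name: str
    action: str  # "allow" or "deny"
    src_ip: Optional[str] = None  # None matches any source
    dst_port: Optional[int] = None  # None matches any port
    hits: int = 0

    def matches(self, src_ip: str, dst_port: int) -> bool:
        return self.src_ip in (None, src_ip) and self.dst_port in (None, dst_port)


class RuleManager:
    def __init__(self, rules: List[Rule], default_action: str = "deny") -> None:
        self.rules = list(rules)
        self.default_action = default_action
        self.optimize()

    def decide(self, src_ip: str, dst_port: int) -> str:
        """Return the action of the first matching rule and count the hit."""
        for rule in self.rules:
            if rule.matches(src_ip, dst_port):
                rule.hits += 1
                return rule.action
        return self.default_action

    def optimize(self) -> None:
        """Dynamic rule optimization: deny rules first, then most-hit rules first."""
        self.rules.sort(key=lambda r: (r.action != "deny", -r.hits))

    def block_source(self, src_ip: str) -> None:
        """Real-time adaptation: deny all traffic from a flagged source."""
        self.rules.insert(0, Rule(f"block-{src_ip}", "deny", src_ip=src_ip))


manager = RuleManager([
    Rule("allow-ftp", "allow", dst_port=21),
    Rule("allow-http", "allow", dst_port=80),
])
for _ in range(3):
    manager.decide("10.0.0.5", 80)
manager.decide("10.0.0.5", 21)
manager.optimize()
manager.block_source("10.0.0.9")
```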

4.5.3. Availability-Optimization Algorithm

- Start Network: Initialization of network components.

- Check Network Connectivity: Verification of network availability.

- Continuous Monitoring: Monitoring of critical network resources for uptime optimization.

- Implement Redundancy: Introduction of redundancy mechanisms to enhance availability.

- Failover Mechanisms: Implementation of failover mechanisms for seamless transition during network disruptions.

- Stress Testing: Simulation of high-stress scenarios to actively reduce network downtime.

- End
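The monitoring, redundancy, and failover steps can be illustrated with a minimal Python sketch. The class `FailoverPool`, the node names, and the dictionary-based health probe are assumptions made for illustration; stress testing is not covered here.

```python
from typing import Callable, List, Optional


class FailoverPool:
    """Keeps one active node and promotes a healthy standby when it fails."""

    def __init__(self, nodes: List[str], is_healthy: Callable[[str], bool]) -> None:
        if not nodes:
            raise ValueError("at least one node is required")
        self.nodes = list(nodes)  # redundancy: more than one node can serve
        self.is_healthy = is_healthy
        self.active = self.nodes[0]
        self.checks = 0
        self.successful_checks = 0

    def check(self) -> Optional[str]:
        """Run one monitoring cycle; return the serving node, or None if all are down."""
        self.checks += 1
        if not self.is_healthy(self.active):
            # Failover: promote the first healthy standby, if any.
            standby = next(
                (n for n in self.nodes if n != self.active and self.is_healthy(n)),
                None,
            )
            if standby is None:
                return None
            self.active = standby
        self.successful_checks += 1
        return self.active

    def availability(self) -> float:
        """Fraction of monitoring cycles in which some node was serving."""
        return self.successful_checks / self.checks if self.checks else 1.0


# Hypothetical health state, standing in for a real probe.
health = {"fw-primary": True, "fw-standby": True}
pool = FailoverPool(list(health), lambda node: health[node])
first = pool.check()
health["fw-primary"] = False  # simulate a failure of the primary
second = pool.check()
```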

4.6. Experimental Setup

4.6.1. Network Configuration

- Physical Segments: Eucalyptus facilitates the deployment of physical servers, load balancers, and routers within its infrastructure. These components can emulate the physical servers in a real-world datacenter, hosting critical databases, payment gateways, and inventory-management systems.

- Virtual Segments: The network configuration used Eucalyptus virtual machines (VMs) for web servers, application servers, and caching layers, with Eucalyptus network overlays securing communication between VMs, mirroring the complexity of a dynamic e-commerce network.

4.6.2. Traffic Generation Tools

4.6.3. Attack Scenarios

- SQL Injection Attacks: Malicious SQL queries targeting the e-commerce database were injected. The ability of the Omni-Secure Firewall System to detect and block such attacks was evaluated.

- Cross-Site Scripting (XSS): Malicious scripts were injected into e-commerce web pages. The firewall’s effectiveness in preventing script execution was assessed.

- Brute Force Login Attempts: The firewall’s ability to detect and respond to excessive login failures in the e-commerce platform was tested.
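For the brute-force scenario, detection of excessive login failures is commonly done with a sliding time window per source address. The Python sketch below shows that general technique; the threshold of five failures in 60 seconds and all names are hypothetical, since the text does not state the values used in the experiments.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class BruteForceDetector:
    """Flags a source once it exceeds max_failures within window_seconds."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, src_ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if the source should be blocked."""
        window = self._failures[src_ip]
        window.append(timestamp)
        # Discard failures that have fallen out of the time window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_failures


detector = BruteForceDetector()
# Six failures one second apart from the same (documentation-range) address.
flags = [detector.record_failure("203.0.113.7", float(t)) for t in range(6)]
```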

4.6.4. Performance Metrics

- Throughput: The number of e-commerce transactions processed per second was measured.

- Latency: The response time for user interactions on the e-commerce platform was evaluated.

- Resource Utilization: CPU, memory, and network usage specific to e-commerce workloads were monitored.

- False Positives/Negatives: The accuracy of threat detection within the e-commerce context was assessed.
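Detection accuracy of this kind is usually summarized from confusion-matrix counts. The Python sketch below uses the standard definitions of precision, recall, F1 score, and false-positive and false-negative rates; the counts in the example are invented for illustration and are not results from the study.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard detection metrics from true/false positive/negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # Share of benign events wrongly flagged as threats.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Share of real threats that were missed.
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Hypothetical counts: 90 attacks caught, 10 false alarms, 30 misses, 870 benign.
metrics = detection_metrics(tp=90, fp=10, fn=30, tn=870)
```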

5. Results and Discussion

5.1. Exploratory Data Analysis (EDA)

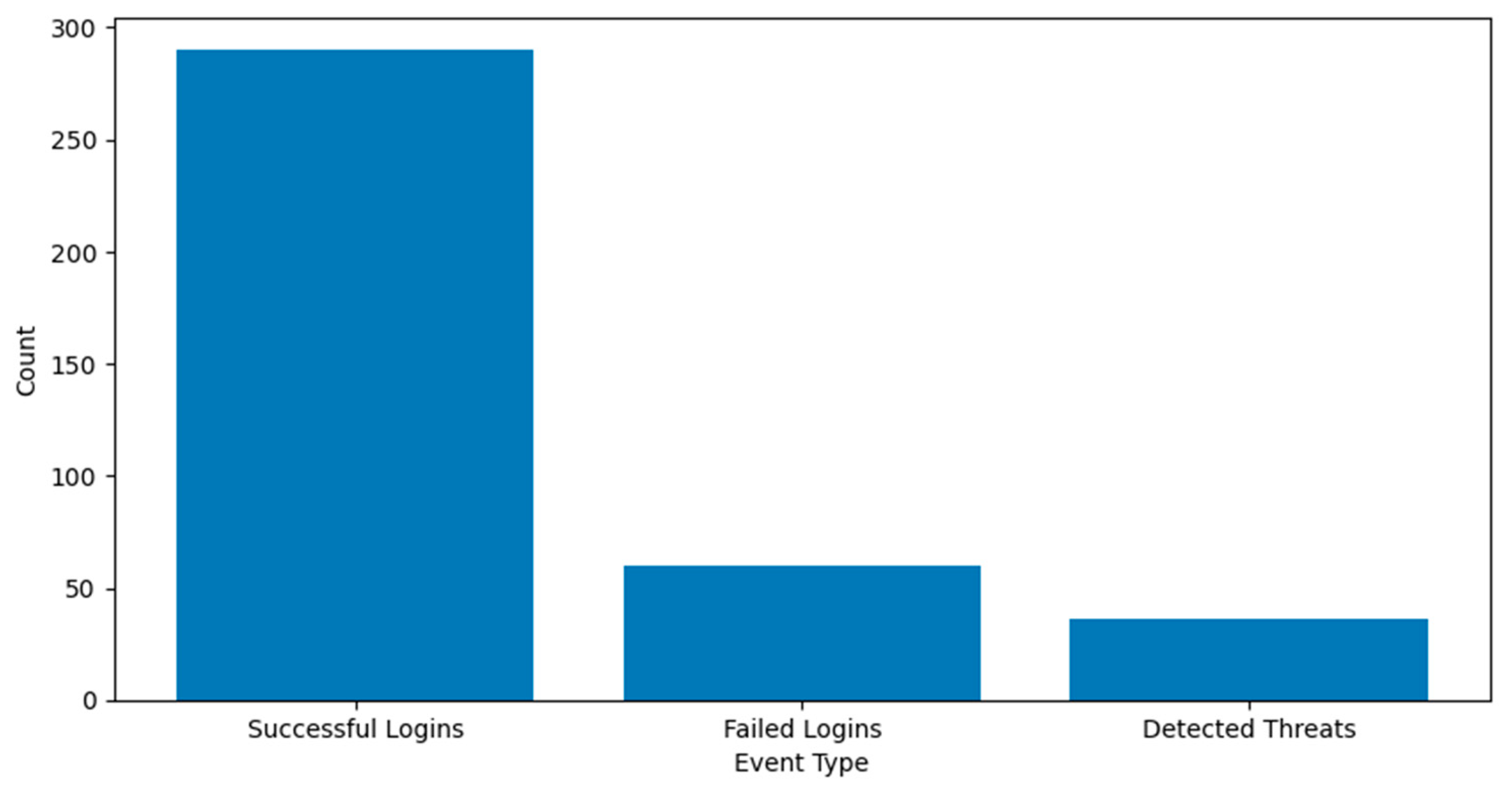

5.1.1. Analysis of Counts of Security Events

5.1.2. Analyzing SLA Performance Trends through Line Charts

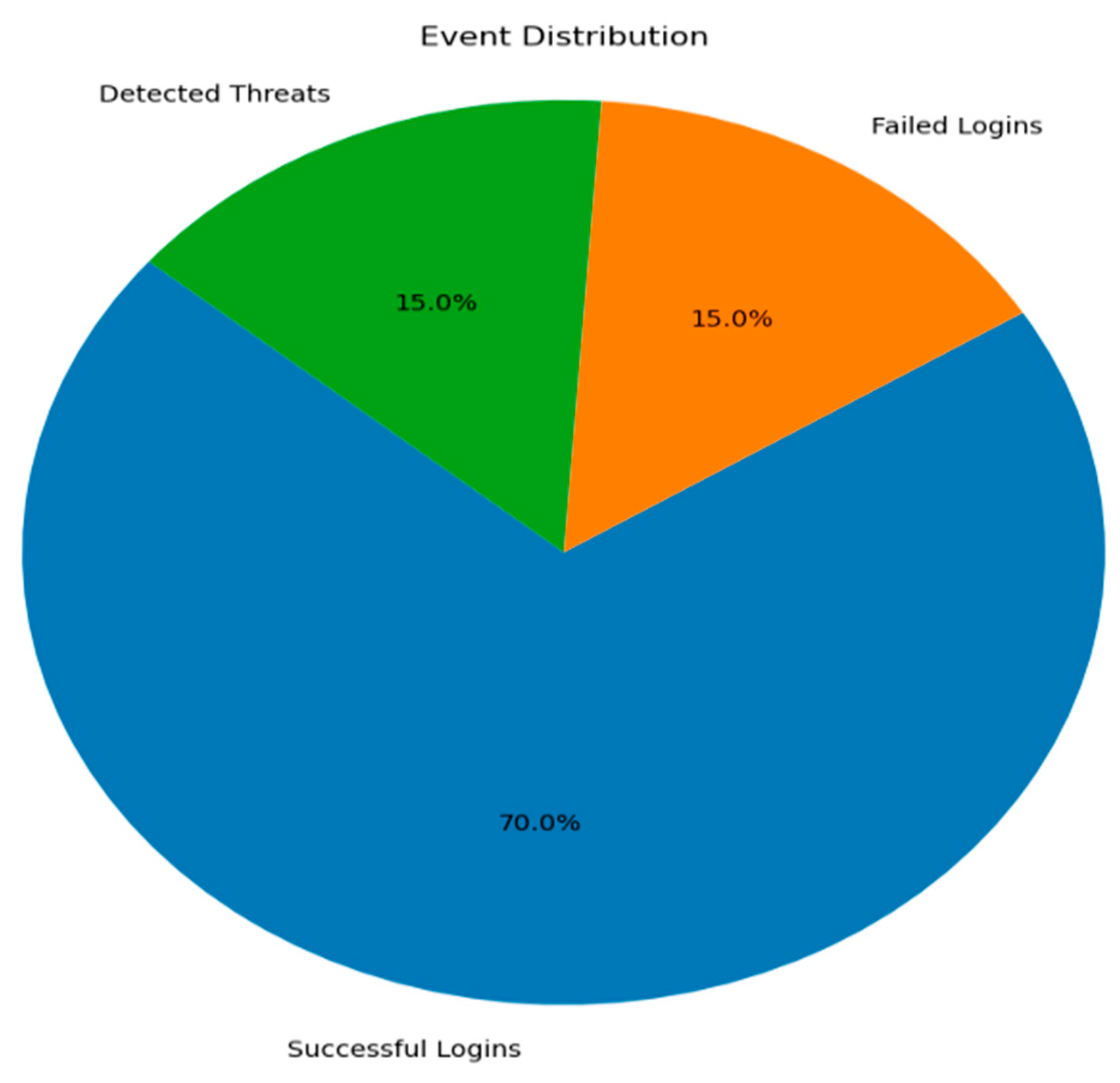

5.1.3. Visualizing Security Event Distribution with Pie Charts

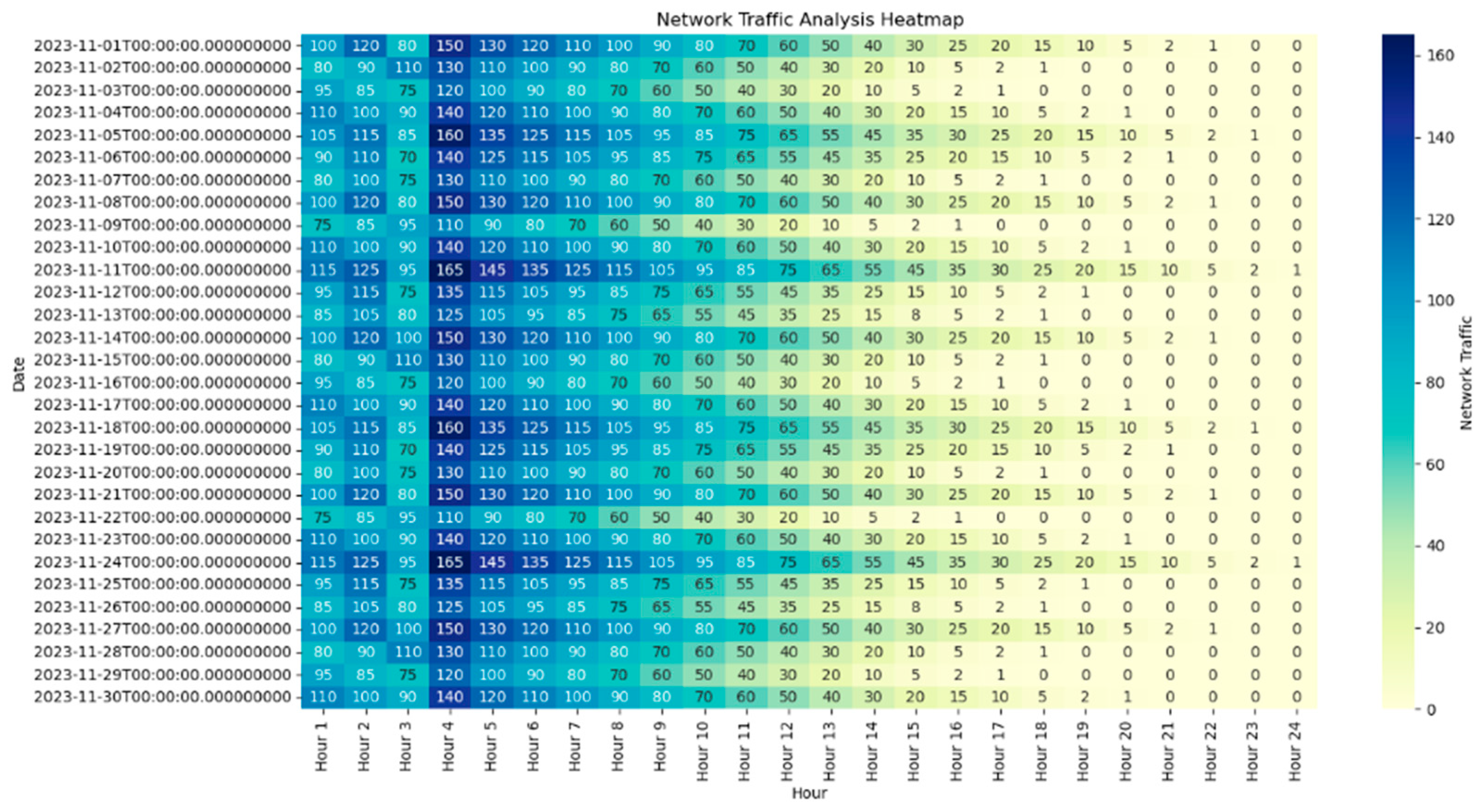

5.1.4. Analyzing Network Traffic Patterns with Heatmaps

5.1.5. Exploring Signature-Based Detection with Histograms

5.1.6. Mapping Threat Origins with Geospatial Maps

5.1.7. Analyzing Event Trends with Stacked Area Charts

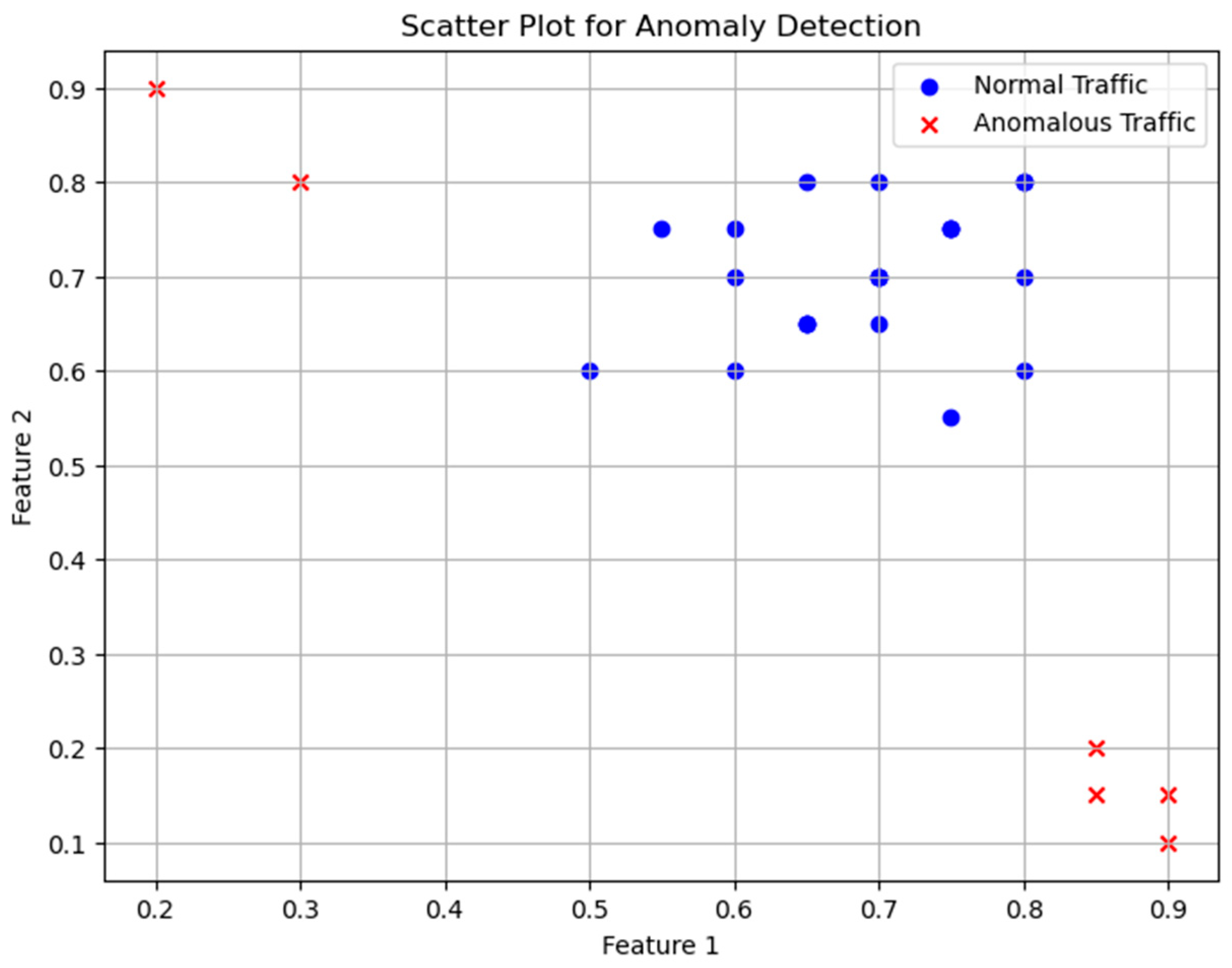

5.1.8. Detecting Anomalies with Scatterplots

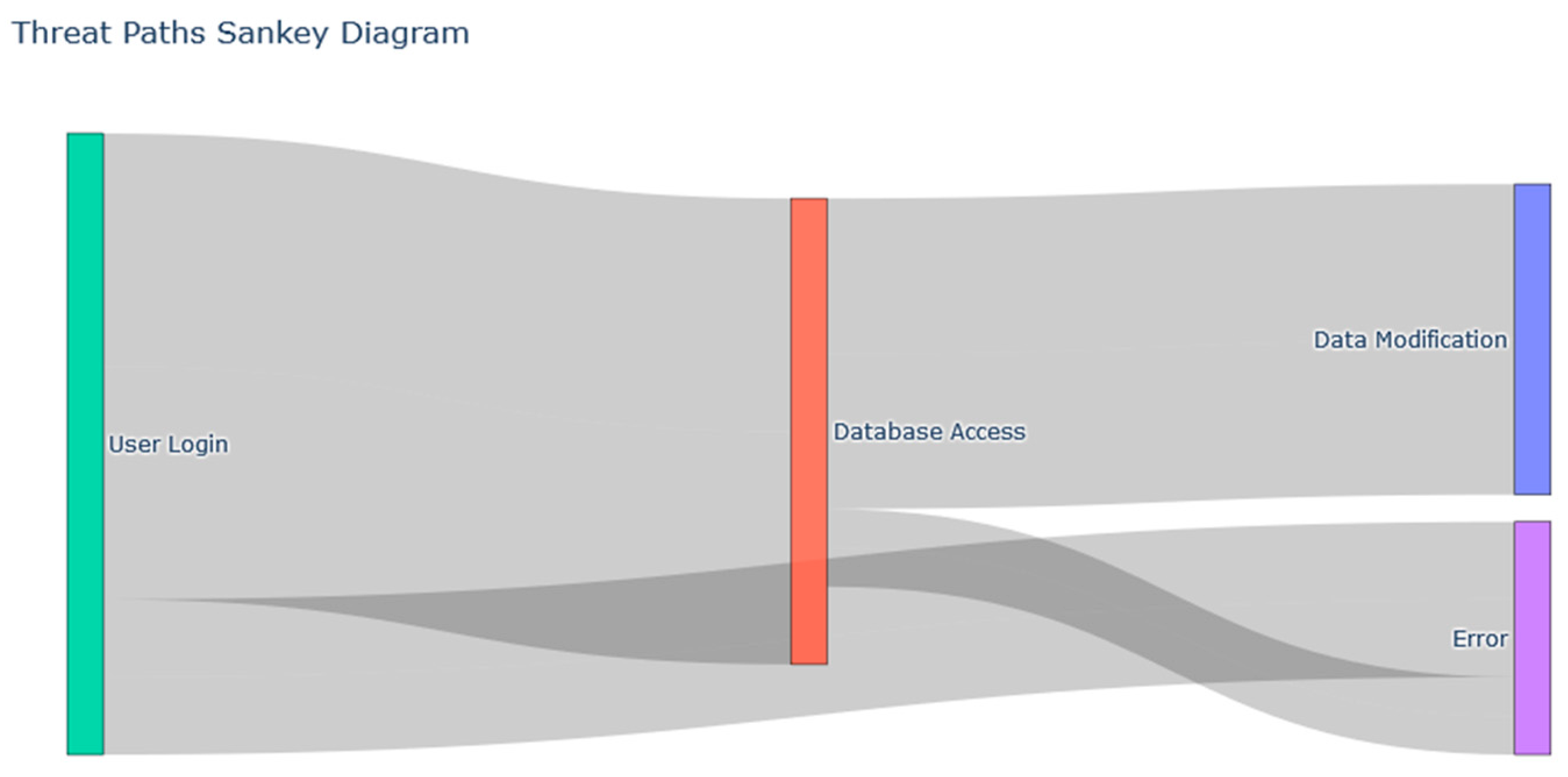

5.1.9. Visualizing Threat Paths with Sankey Diagrams

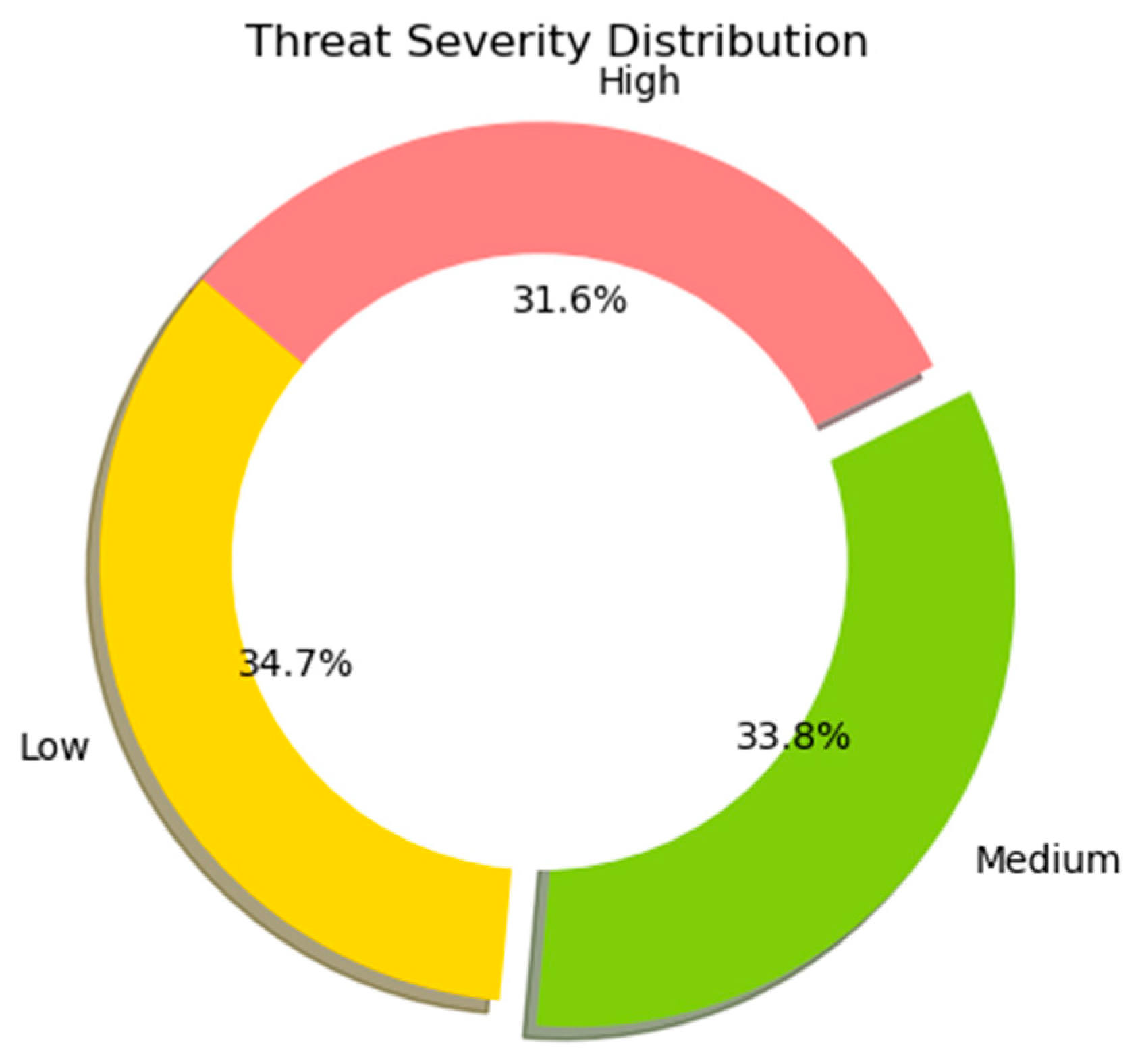

5.1.10. Prioritizing Threat Response with Doughnut Charts

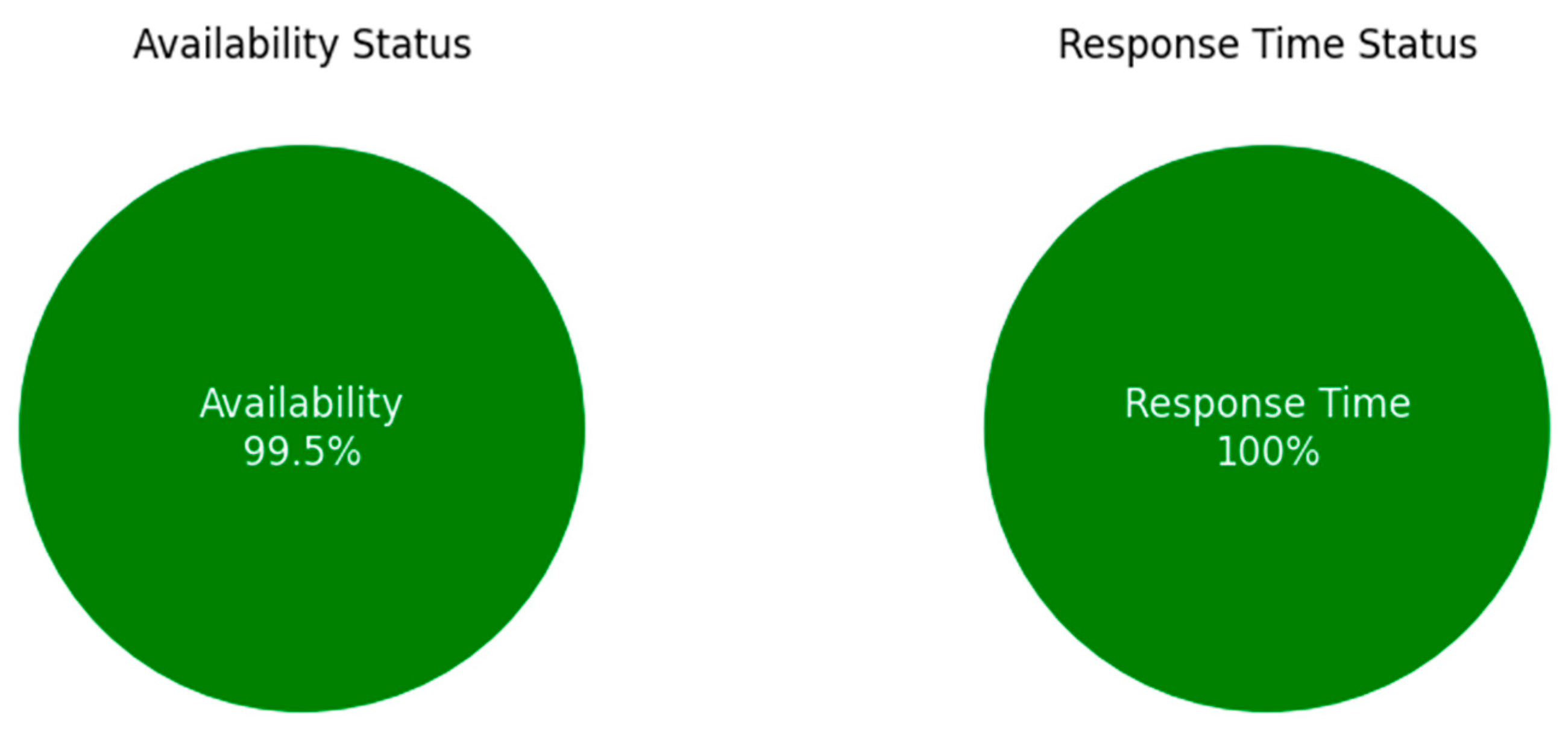

5.1.11. Displaying Critical SLA Metrics with Status Indicators

5.2. Benchmarking

5.3. Performance Metrics

5.3.1. Prediction Latency

5.3.2. CPU Usage

5.3.3. Memory Consumption

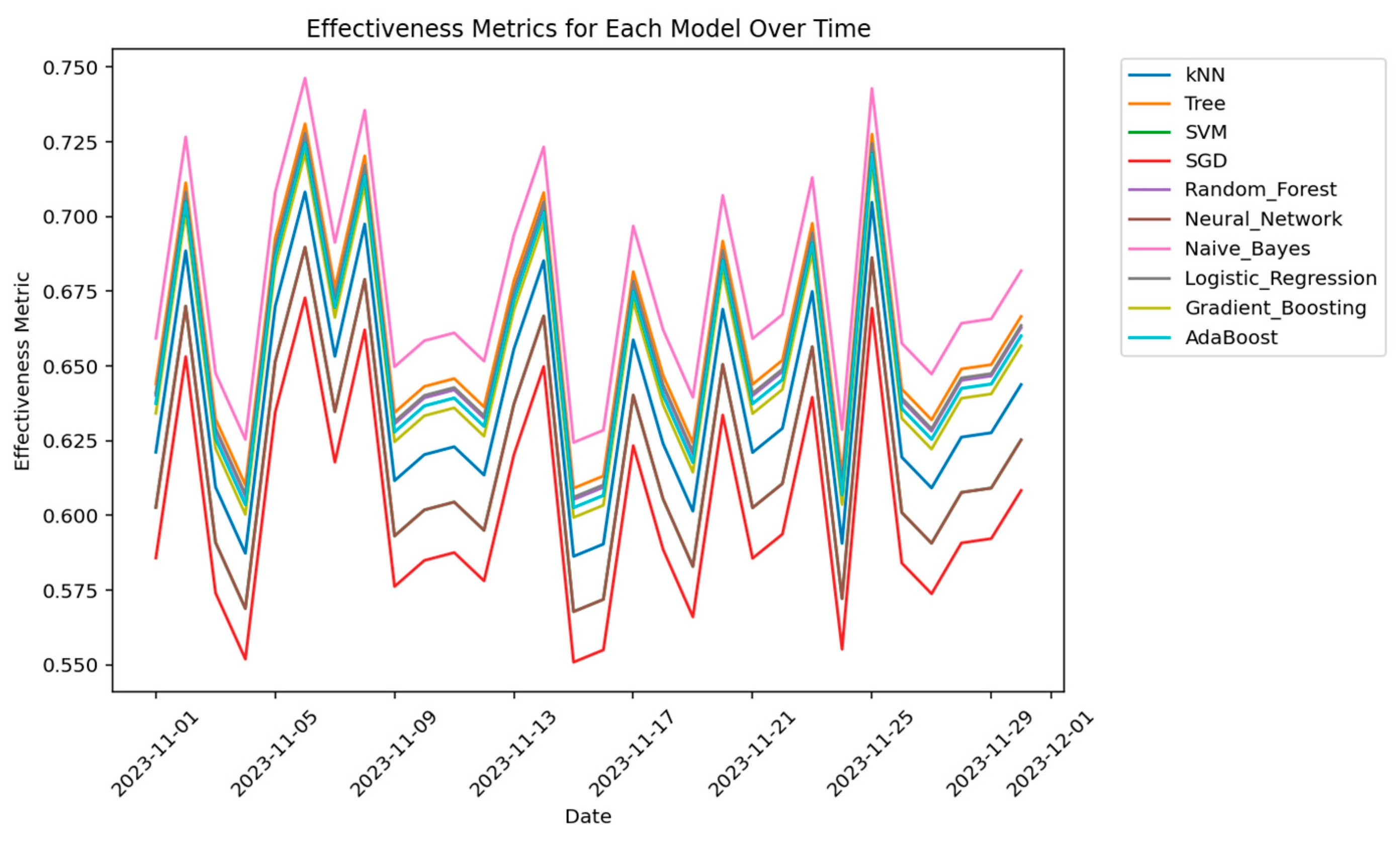

5.4. Effectiveness Metric (E)

5.4.1. Precision (Weight: 0.3)

5.4.2. Recall (Weight: 0.2)

5.4.3. F1 Score (Weight: 0.2)

5.4.4. Throughput (Weight: 0.1)

5.4.5. Mitigation Time (Weight: 0.05)

5.4.6. Rule Latency (Weight: 0.05)

5.4.7. Redundancy (Weight: 0.1)

- Step 1: Define the Weights (w1, w2, w3, w4, w5, w6, w7)

- Step 2: Calculate the Effectiveness Metric (E) for Each Model

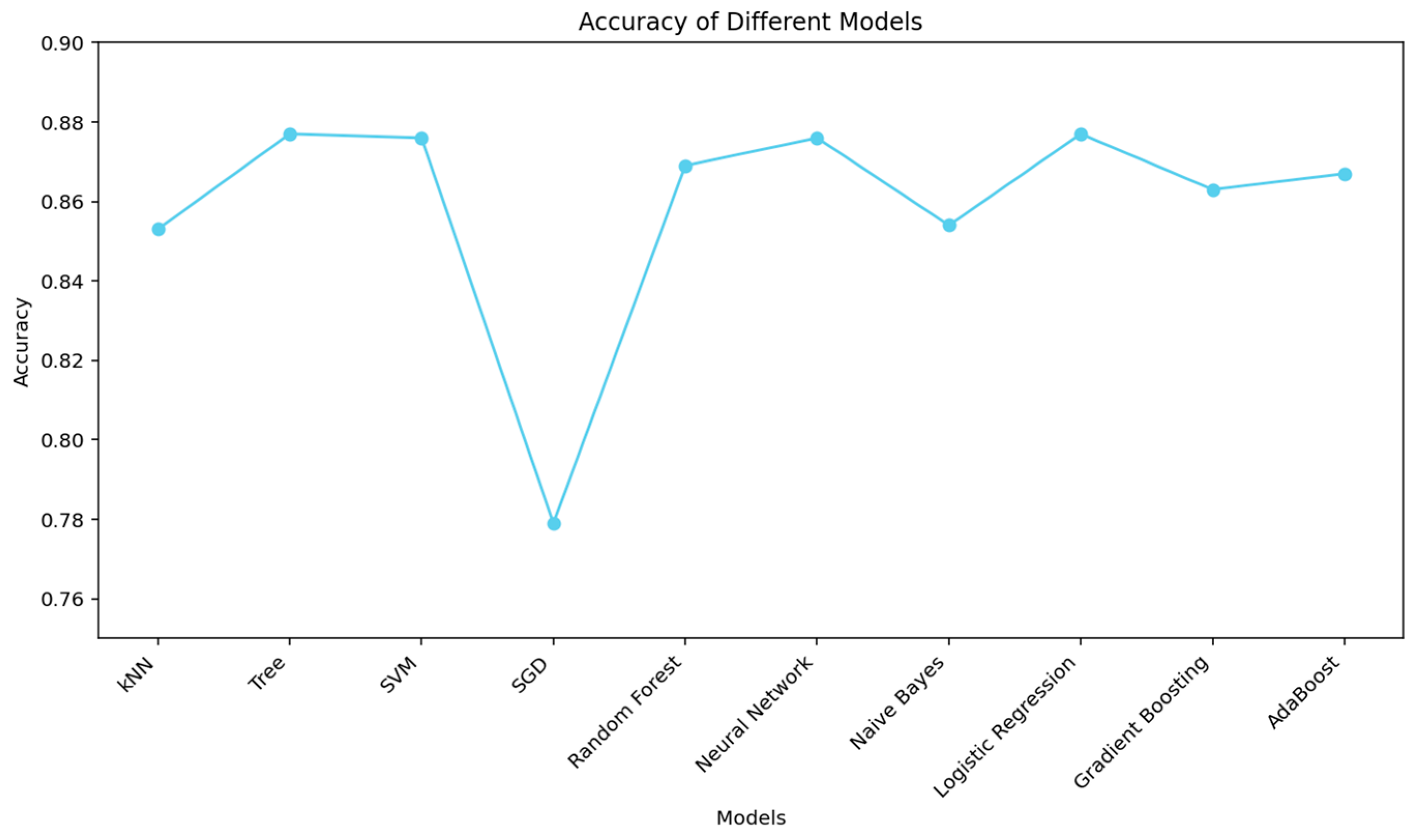
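A weighted sum over the seven component metrics, using the weights given in Sections 5.4.1 to 5.4.7, can be sketched in Python as follows. The exact formula is not spelled out in the text, so the form used here is an inference from the tabulated daily values rather than a stated definition: mitigation time and rule latency appear to enter as (1 − value), since lower is better. With that inversion, subtracting the four operational terms from the kNN effectiveness values leaves the same residual (0.4874) on both 1 and 2 November 2023, consistent with a fixed classification contribution per model. Function names are hypothetical.

```python
WEIGHTS = {
    "precision": 0.3,
    "recall": 0.2,
    "f1": 0.2,
    "throughput": 0.1,
    "mitigation_time": 0.05,
    "rule_latency": 0.05,
    "redundancy": 0.1,
}


def classification_score(precision: float, recall: float, f1: float) -> float:
    """Weighted contribution of the three classification metrics."""
    return (
        WEIGHTS["precision"] * precision
        + WEIGHTS["recall"] * recall
        + WEIGHTS["f1"] * f1
    )


def operational_score(
    throughput: float, mitigation_time: float, rule_latency: float, redundancy: float
) -> float:
    """Weighted contribution of the four operational metrics.

    Assumption inferred from the tabulated data: the two time-based metrics
    are inverted so that shorter times raise the score.
    """
    return (
        WEIGHTS["throughput"] * throughput
        + WEIGHTS["mitigation_time"] * (1.0 - mitigation_time)
        + WEIGHTS["rule_latency"] * (1.0 - rule_latency)
        + WEIGHTS["redundancy"] * redundancy
    )


def effectiveness(precision, recall, f1, throughput, mitigation_time,
                  rule_latency, redundancy) -> float:
    """Effectiveness metric E as the sum of both contributions."""
    return classification_score(precision, recall, f1) + operational_score(
        throughput, mitigation_time, rule_latency, redundancy
    )


# Operational values for 1 and 2 November 2023, taken from the daily table.
op_nov1 = operational_score(0.28929595, 0.8917, 0.7962881, 0.8917)
op_nov2 = operational_score(0.98387161, 0.0875, 0.0341269, 0.0875)
# Residual attributed to kNN's classification metrics on each day.
knn_residual_nov1 = 0.62110019 - op_nov1
knn_residual_nov2 = 0.688455816 - op_nov2
```

Because the weights sum to 1 and all inputs lie in [0, 1], E also lies in [0, 1], which makes scores comparable across models and days.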

5.5. Machine Learning Model Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jabbar, A.A.; Bhaya, W.S. Security of Private Cloud Using Machine Learning and Cryptography. Bull. Electr. Eng. Inform. 2023, 12, 561–569.

- Qureshi, A.; Dashti, W.; Jahangeer, A.; Zafar, A. Security Challenges over Cloud Environment from Service Provider Prospective. Cloud Comput. Data Sci. 2020, 12, 12–20.

- Kumar, K.D.; Umamaheswari, E. An Authenticated, Secure Virtualization Management System in Cloud Computing. Asian J. Pharm. Clin. Res. 2017, 10, 45.

- Ahmadi, S.; Salehfar, M. Privacy-Preserving Cloud Computing: Ecosystem, Life Cycle, Layered Architecture and Future Roadmap. arXiv 2022, arXiv:2204.11120.

- Khaleel, T.A. Analysis and Implementation of Kerberos Protocol in Hybrid Cloud Computing Environments. Eng. Technol. J. 2021, 39, 41–52.

- Borse, Y.; Gokhale, S. Cloud Computing Platform for Education System: A Review. Int. J. Comput. Appl. 2019, 177, 41–45.

- Hong, J.B.; Nhlabatsi, A.; Kim, D.S.; Hussein, A.; Fetais, N.; Khan, K.M. Systematic Identification of Threats in the Cloud: A Survey. Comput. Netw. 2019, 150, 46–69.

- Li, Z.; Jin, H.; Zou, D.; Yuan, B. Exploring New Opportunities to Defeat Low-Rate DDoS Attack in Container-Based Cloud Environment. IEEE Trans. Parallel Distrib. Syst. 2020, 31, 695–706.

- Shah, H.; ud Din, A.; Khan, A.; Din, S. Enhancing the Quality of Service of Cloud Computing in Big Data Using Virtual Private Network and Firewall in Dense Mode. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 10351.

- Ahmad, W.; Rasool, A.; Javed, A.R.; Baker, T.; Jalil, Z. Cyber Security in IoT-Based Cloud Computing: A Comprehensive Survey. Electronics 2021, 11, 16.

- Adee, R.; Mouratidis, H. A Dynamic Four-Step Data Security Model for Data in Cloud Computing Based on Cryptography and Steganography. Sensors 2022, 22, 1109.

- Wang, Q.; Tai, W.; Tang, Y.; Zhu, H.; Zhang, M.; Zhou, D. Coordinated Defense of Distributed Denial of Service Attacks against the Multi-Area Load Frequency Control Services. Energies 2019, 12, 2493.

- Anwar, R.W.; Abdullah, T.; Pastore, F. Firewall Best Practices for Securing Smart Healthcare Environment: A Review. Appl. Sci. 2021, 11, 9183.

- Pandeeswari, N.; Kumar, G. Anomaly Detection System in Cloud Environment Using Fuzzy Clustering Based ANN. Mob. Netw. Appl. 2016, 21, 494–505.

- Alghofaili, Y.; Albattah, A.; Alrajeh, N.; Rassam, M.A.; Al-rimy, B.A.S. Secure Cloud Infrastructure: A Survey on Issues, Current Solutions, and Open Challenges. Appl. Sci. 2021, 11, 9005.

- Abu Al-Haija, Q.; Ishtaiwi, A. Machine Learning Based Model to Identify Firewall Decisions to Improve Cyber-Defense. Int. J. Adv. Sci. Eng. Inf. Technol. 2021, 11, 1688.

- Kharchenko, V.; Ponochovnyi, Y.; Ivanchenko, O.; Fesenko, H.; Illiashenko, O. Combining Markov and Semi-Markov Modelling for Assessing Availability and Cybersecurity of Cloud and IoT Systems. Cryptography 2022, 6, 44.

- Lin, H.-Y. A Secure Heterogeneous Mobile Authentication and Key Agreement Scheme for E-Healthcare Cloud Systems. PLoS ONE 2018, 13, e0208397.

- Wijaya, G.; Surantha, N. Multi-Layered Security Design and Evaluation for Cloud-Based Web Application: Case Study of Human Resource Management System. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 674–679.

- Shahsavari, Y.; Shahhoseini, H.; Zhang, K.; Elbiaze, H. A Theoretical Model for Analysis of Firewalls Under Bursty Traffic Flows. IEEE Access 2019, 7, 183311–183321.

- Sharma, B.; Sharma, L.; Lal, C.; Roy, S. Anomaly Based Network Intrusion Detection for IoT Attacks Using Deep Learning Technique. Comput. Electr. Eng. 2023, 107, 108626.

- Mozo, A.; Karamchandani, A.; de la Cal, L.; Gómez-Canaval, S.; Pastor, A.; Gifre, L. A Machine-Learning-Based Cyberattack Detector for a Cloud-Based SDN Controller. Appl. Sci. 2023, 13, 4914.

- Tiwari, G.; Jain, R. Detecting and Classifying Incoming Traffic in a Secure Cloud Computing Environment Using Machine Learning and Deep Learning System. In Proceedings of the 2022 IEEE 7th International Conference on Smart Cloud (SmartCloud), Shanghai, China, 8–10 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 16–21.

- Alshaikh, A.; Alanesi, M.; Yang, D.; Alshaikh, A. Advanced Techniques for Cyber Threat Intelligence-Based APT Detection and Mitigation in Cloud Environments. In Proceedings of the International Conference on Cyber Security, Artificial Intelligence, and Digital Economy (CSAIDE 2023), Nanjing, China, 1 June 2023; Loskot, P., Niu, S., Eds.; SPIE: Bellingham, WA, USA, 2023; p. 65.

- Alshaer, H. An Overview of Network Virtualization and Cloud Network as a Service. Int. J. Netw. Manag. 2015, 25, 1–30.

- Mahmood, S.; Yahaya, N.A. Exploring Virtual Machine Scheduling Algorithms: A Meta-Analysis. Sir Syed Univ. Res. J. Eng. Technol. 2023, 13, 89–100.

- Mahmood, S.; Yahaya, N.A.; Hasan, R.; Hussain, S.; Malik, M.H.; Sarker, K.U. Self-Adapting Security Monitoring in Eucalyptus Cloud Environment. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 140310.

- Panker, T.; Nissim, N. Leveraging Malicious Behavior Traces from Volatile Memory Using Machine Learning Methods for Trusted Unknown Malware Detection in Linux Cloud Environments. Knowl. Based Syst. 2021, 226, 107095.

- Kim, H.; Kim, J.; Kim, Y.; Kim, I.; Kim, K.J. Design of Network Threat Detection and Classification Based on Machine Learning on Cloud Computing. Clust. Comput. 2019, 22, 2341–2350.

- Sharma, V.; Verma, V.; Sharma, A. Detection of DDoS Attacks Using Machine Learning in Cloud Computing. In Advanced Informatics for Computing Research: Third International Conference, ICAICR 2019, Shimla, India, 15–16 June 2019; Springer: Singapore, 2019; pp. 260–273.

- Gao, X.; Hu, C.; Shan, C.; Liu, B.; Niu, Z.; Xie, H. Malware Classification for the Cloud via Semi-Supervised Transfer Learning. J. Inf. Secur. Appl. 2020, 55, 102661.

- Landman, T.; Nissim, N. Deep-Hook: A Trusted Deep Learning-Based Framework for Unknown Malware Detection and Classification in Linux Cloud Environments. Neural Netw. 2021, 144, 648–685.

- Nadeem, M.; Arshad, A.; Riaz, S.; Zahra, S.; Rashid, M.; Band, S.S.; Mosavi, A. Preventing Cloud Network from Spamming Attacks Using Cloudflare and KNN. Comput. Mater. Contin. 2023, 74, 2641–2659.

- Muthulakshmi, K.; Valarmathi, K. Attaining Cloud Security Solution Over Machine Learning Techniques. Smart Intell. Comput. Communication Technol. 2021, 38, 246.

- Agafonov, A.; Yumaganov, A. Performance Comparison of Machine Learning Methods in the Bus Arrival Time Prediction Problem. In Proceedings of the V International Conference Information Technology and Nanotechnology 2019, Samara, Russia, 21–24 May 2019; CEUR-WS: Aachen, Germany, 2020; pp. 57–62.

- Liu, L.; Su, J.; Zhao, B.; Wang, Q.; Chen, J.; Luo, Y. Towards an Efficient Privacy-Preserving Decision Tree Evaluation Service in the Internet of Things. Symmetry 2020, 12, 103.

- Gonzales, D.; Kaplan, J.M.; Saltzman, E.; Winkelman, Z.; Woods, D. Cloud-Trust-a Security Assessment Model for Infrastructure as a Service (IaaS) Clouds. IEEE Trans. Cloud Comput. 2017, 5, 523–536.

- Bhamare, D.; Salman, T.; Samaka, M.; Erbad, A.; Jain, R. Feasibility of Supervised Machine Learning for Cloud Security. In Proceedings of the 2016 International Conference on Information Science and Security (ICISS), Jaipur, India, 19–22 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–5.

- Zhang, T. Solving Large Scale Linear Prediction Problems Using Stochastic Gradient Descent Algorithms. In Proceedings of the Twenty-First International Conference on Machine Learning (ICML’04), New York, NY, USA, 4 July 2004; ACM Press: New York, NY, USA, 2004; p. 116.

- Galanti, T.; Siegel, Z.S.; Gupte, A.; Poggio, T. Characterizing the Implicit Bias of Regularized SGD in Rank Minimization. arXiv 2022, arXiv:2206.05794.

- Amjad, A.; Alyas, T.; Farooq, U.; Tariq, M. Detection and Mitigation of DDoS Attack in Cloud Computing Using Machine Learning Algorithm. ICST Trans. Scalable Inf. Syst. 2018, 11, 159834.

- Yu, X.; Zhao, W.; Huang, Y.; Ren, J.; Tang, D. Privacy-Preserving Outsourced Logistic Regression on Encrypted Data from Homomorphic Encryption. Secur. Commun. Netw. 2022, 2022, 1321198.

- Mishra, N.; Singh, R.K.; Yadav, S.K. Detection of DDoS Vulnerability in Cloud Computing Using the Perplexed Bayes Classifier. Comput. Intell. Neurosci. 2022, 2022, 9151847.

- Mahmood, H.A. Network Intrusion Detection System (NIDS) in Cloud Environment Based on Hidden Naïve Bayes Multiclass Classifier. Al-Mustansiriyah J. Sci. 2018, 28, 134–142.

- Edemacu, K.; Kim, J.W. Scalable Multi-Party Privacy-Preserving Gradient Tree Boosting over Vertically Partitioned Dataset with Outsourced Computations. Mathematics 2022, 10, 2185.

- Guo, W.; Luo, Z.; Chen, H.; Hang, F.; Zhang, J.; Al Bayatti, H. AdaBoost Algorithm in Trustworthy Network for Anomaly Intrusion Detection. Appl. Math. Nonlinear Sci. 2023, 8, 1819–1830.

- Akter, M.S.; Shahriar, H.; Bhuiya, Z.A. Automated Vulnerability Detection in Source Code Using Quantum Natural Language Processing; Springer Nature Singapore: Singapore, 2023; pp. 83–102.

- Bhamare, D.; Zolanvari, M.; Erbad, A.; Jain, R.; Khan, K.; Meskin, N. Cybersecurity for Industrial Control Systems: A Survey. Comput. Secur. 2020, 89, 101677.

| Metric | Predefined Target | Actual Performance |
|---|---|---|
| Availability | 99.90% | 99.50% |
| Response time | <250 ms | 270 ms |
| Incident resolution | <1 h | 45 min |
| Event-detection rate | >95% | 97% |
| False-positive rate | <2% | 1.50% |
| Data-loss prevention | Zero incidents | Zero incidents |
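Comparing each actual value with its target can be automated, as in the following Python sketch. The values are copied from the table above; the function name `sla_breaches` is hypothetical, and reading the availability target as "at least 99.90%" is an assumption, since the table gives no comparison operator for that row.

```python
from typing import Callable, List, Tuple

# (metric, actual value, predicate encoding the target)
SLA_ROWS: List[Tuple[str, float, Callable[[float], bool]]] = [
    ("Availability", 99.50, lambda pct: pct >= 99.90),  # assumed "at least"
    ("Response time", 270.0, lambda ms: ms < 250.0),
    ("Incident resolution", 45.0, lambda minutes: minutes < 60.0),  # 1 h = 60 min
    ("Event-detection rate", 97.0, lambda pct: pct > 95.0),
    ("False-positive rate", 1.50, lambda pct: pct < 2.0),
    ("Data-loss prevention", 0.0, lambda incidents: incidents == 0),
]


def sla_breaches(rows: List[Tuple[str, float, Callable[[float], bool]]]) -> List[str]:
    """Return the names of the metrics whose actual value misses the target."""
    return [name for name, actual, meets_target in rows if not meets_target(actual)]


breaches = sla_breaches(SLA_ROWS)
print(breaches)  # ['Availability', 'Response time']
```

On these figures, availability and response time fall short of their targets, while the remaining four metrics meet them.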

| Date | Throughput | Mitigation Time | Rule Latency | Redundancy |
|---|---|---|---|---|
| 1 November 2023 | 0.28929595 | 0.8917 | 0.7962881 | 0.8917 |
| 2 November 2023 | 0.98387161 | 0.0875 | 0.0341269 | 0.0875 |
| 3 November 2023 | 0.38698068 | 0.6517 | 0.9855579 | 0.6517 |
| 4 November 2023 | 0.34434674 | 0.038 | 0.7293986 | 0.038 |
| 5 November 2023 | 0.5245148 | 0.7073 | 0.1063354 | 0.7073 |
| 6 November 2023 | 0.91092528 | 0.9599 | 0.3668571 | 0.9599 |
| 7 November 2023 | 0.68082267 | 0.4252 | 0.4716305 | 0.4252 |
| 8 November 2023 | 0.8535908 | 0.8651 | 0.3720456 | 0.8651 |
| 9 November 2023 | 0.24218537 | 0.6883 | 0.6896314 | 0.6883 |
| 10 November 2023 | 0.36671421 | 0.0795 | 0.1554277 | 0.0795 |
| 11 November 2023 | 0.51110631 | 0.1712 | 0.4832821 | 0.1712 |
| 12 November 2023 | 0.2342037 | 0.7822 | 0.7297936 | 0.7822 |
| 13 November 2023 | 0.60699445 | 0.3307 | 0.1803124 | 0.3307 |
| 14 November 2023 | 0.79729603 | 0.7759 | 0.4157502 | 0.7759 |
| 15 November 2023 | 0.1906621 | 0.48 | 0.8846323 | 0.48 |
| 16 November 2023 | 0.1467283 | 0.5816 | 0.8164887 | 0.5816 |
| 17 November 2023 | 0.87989661 | 0.3408 | 0.6752265 | 0.3408 |
| 18 November 2023 | 0.33379969 | 0.3796 | 0.3160406 | 0.3796 |
| 19 November 2023 | 0.14054365 | 0.0665 | 0.0681799 | 0.0665 |
| 20 November 2023 | 0.94991187 | 0.2171 | 0.4864879 | 0.2171 |
| 21 November 2023 | 0.65476431 | 0.1774 | 0.8152713 | 0.1774 |
| 22 November 2023 | 0.51084315 | 0.5485 | 0.737099 | 0.5485 |
| 23 November 2023 | 0.65674763 | 0.9641 | 0.5284131 | 0.9641 |
| 24 November 2023 | 0.25529718 | 0.4955 | 0.9427088 | 0.4955 |
| 25 November 2023 | 0.99447671 | 0.4862 | 0.1296516 | 0.4862 |
| 26 November 2023 | 0.10539093 | 0.7968 | 0.3671343 | 0.7968 |
| 27 November 2023 | 0.14675979 | 0.9389 | 0.7981871 | 0.9389 |
| 28 November 2023 | 0.43394038 | 0.3534 | 0.4468312 | 0.3534 |
| 29 November 2023 | 0.65324843 | 0.1333 | 0.6362162 | 0.1333 |
| 30 November 2023 | 0.86412882 | 0.0975 | 0.6999324 | 0.0975 |

| Date | kNN | Tree | SVM | SGD | Random Forest | Neural Network | Naive Bayes | Logistic Regression | Gradient Boosting | AdaBoost |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 November 2023 | 0.62110019 | 0.64390019 | 0.60260019 | 0.58570019 | 0.64010019 | 0.60260019 | 0.65920019 | 0.64090019 | 0.63410019 | 0.63740019 |
| 2 November 2023 | 0.688455816 | 0.711255816 | 0.669955816 | 0.653055816 | 0.707455816 | 0.669955816 | 0.726555816 | 0.708255816 | 0.701455816 | 0.704755816 |
| 3 November 2023 | 0.609405173 | 0.632205173 | 0.590905173 | 0.574005173 | 0.628405173 | 0.590905173 | 0.647505173 | 0.629205173 | 0.622405173 | 0.625705173 |
| 4 November 2023 | 0.587264744 | 0.610064744 | 0.568764744 | 0.551864744 | 0.606264744 | 0.568764744 | 0.625364744 | 0.607064744 | 0.600264744 | 0.603564744 |
| 5 November 2023 | 0.66989971 | 0.69269971 | 0.65139971 | 0.63449971 | 0.68889971 | 0.65139971 | 0.70799971 | 0.68969971 | 0.68289971 | 0.68619971 |
| 6 November 2023 | 0.708144673 | 0.730944673 | 0.689644673 | 0.672744673 | 0.727144673 | 0.689644673 | 0.746244673 | 0.727944673 | 0.721144673 | 0.724444673 |
| 7 November 2023 | 0.653160742 | 0.675960742 | 0.634660742 | 0.617760742 | 0.672160742 | 0.634660742 | 0.691260742 | 0.672960742 | 0.666160742 | 0.669460742 |
| 8 November 2023 | 0.6974118 | 0.7202118 | 0.6789118 | 0.6620118 | 0.7164118 | 0.6789118 | 0.7355118 | 0.7172118 | 0.7104118 | 0.7137118 |
| 9 November 2023 | 0.611551967 | 0.634351967 | 0.593051967 | 0.576151967 | 0.630551967 | 0.593051967 | 0.649651967 | 0.631351967 | 0.624551967 | 0.627851967 |
| 10 November 2023 | 0.620275036 | 0.643075036 | 0.601775036 | 0.584875036 | 0.639275036 | 0.601775036 | 0.658375036 | 0.640075036 | 0.633275036 | 0.636575036 |
| 11 November 2023 | 0.622906526 | 0.645706526 | 0.604406526 | 0.587506526 | 0.641906526 | 0.604406526 | 0.661006526 | 0.642706526 | 0.635906526 | 0.639206526 |
| 12 November 2023 | 0.61344069 | 0.63624069 | 0.59494069 | 0.57804069 | 0.63244069 | 0.59494069 | 0.65154069 | 0.63324069 | 0.62644069 | 0.62974069 |
| 13 November 2023 | 0.655618825 | 0.678418825 | 0.637118825 | 0.620218825 | 0.674618825 | 0.637118825 | 0.693718825 | 0.675418825 | 0.668618825 | 0.671918825 |
| 14 November 2023 | 0.685137093 | 0.707937093 | 0.666637093 | 0.649737093 | 0.704137093 | 0.666637093 | 0.723237093 | 0.704937093 | 0.698137093 | 0.701437093 |
| 15 November 2023 | 0.586234595 | 0.609034595 | 0.567734595 | 0.550834595 | 0.605234595 | 0.567734595 | 0.624334595 | 0.606034595 | 0.599234595 | 0.602534595 |
| 16 November 2023 | 0.590328395 | 0.613128395 | 0.571828395 | 0.554928395 | 0.609328395 | 0.571828395 | 0.628428395 | 0.610128395 | 0.603328395 | 0.606628395 |
| 17 November 2023 | 0.658668336 | 0.681468336 | 0.640168336 | 0.623268336 | 0.677668336 | 0.640168336 | 0.696768336 | 0.678468336 | 0.671668336 | 0.674968336 |
| 18 November 2023 | 0.623957939 | 0.646757939 | 0.605457939 | 0.588557939 | 0.642957939 | 0.605457939 | 0.662057939 | 0.643757939 | 0.636957939 | 0.640257939 |
| 19 November 2023 | 0.60137037 | 0.62417037 | 0.58287037 | 0.56597037 | 0.62037037 | 0.58287037 | 0.63947037 | 0.62117037 | 0.61437037 | 0.61767037 |
| 20 November 2023 | 0.668921792 | 0.691721792 | 0.650421792 | 0.633521792 | 0.687921792 | 0.650421792 | 0.707021792 | 0.688721792 | 0.681921792 | 0.685221792 |
| 21 November 2023 | 0.620982866 | 0.643782866 | 0.602482866 | 0.585582866 | 0.639982866 | 0.602482866 | 0.659082866 | 0.640782866 | 0.633982866 | 0.637282866 |
| 22 November 2023 | 0.629054365 | 0.651854365 | 0.610554365 | 0.593654365 | 0.648054365 | 0.610554365 | 0.667154365 | 0.648854365 | 0.642054365 | 0.645354365 |
| 23 November 2023 | 0.674859108 | 0.697659108 | 0.656359108 | 0.639459108 | 0.693859108 | 0.656359108 | 0.712959108 | 0.694659108 | 0.687859108 | 0.691159108 |
| 24 November 2023 | 0.590569278 | 0.613369278 | 0.572069278 | 0.555169278 | 0.609569278 | 0.572069278 | 0.628669278 | 0.610369278 | 0.603569278 | 0.606869278 |
| 25 November 2023 | 0.704675091 | 0.727475091 | 0.686175091 | 0.669275091 | 0.723675091 | 0.686175091 | 0.742775091 | 0.724475091 | 0.717675091 | 0.720975091 |
| 26 November 2023 | 0.619422378 | 0.642222378 | 0.600922378 | 0.584022378 | 0.638422378 | 0.600922378 | 0.657522378 | 0.639222378 | 0.632422378 | 0.635722378 |
| 27 November 2023 | 0.609111624 | 0.631911624 | 0.590611624 | 0.573711624 | 0.628111624 | 0.590611624 | 0.647211624 | 0.628911624 | 0.622111624 | 0.625411624 |
| 28 November 2023 | 0.626122478 | 0.648922478 | 0.607622478 | 0.590722478 | 0.645122478 | 0.607622478 | 0.664222478 | 0.645922478 | 0.639122478 | 0.642422478 |
| 29 November 2023 | 0.627579033 | 0.650379033 | 0.609079033 | 0.592179033 | 0.646579033 | 0.609079033 | 0.665679033 | 0.647379033 | 0.640579033 | 0.643879033 |
| 30 November 2023 | 0.643691262 | 0.666491262 | 0.625191262 | 0.608291262 | 0.662691262 | 0.625191262 | 0.681791262 | 0.663491262 | 0.656691262 | 0.659991262 |

| Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| kNN | 0.853 | 0.830 | 0.853 | 0.839 |
| Tree | 0.877 | 0.868 | 0.877 | 0.872 |
| SVM | 0.876 | 0.767 | 0.876 | 0.818 |
| SGD | 0.779 | 0.796 | 0.779 | 0.787 |
| Random forest | 0.869 | 0.864 | 0.869 | 0.867 |
| Neural network | 0.876 | 0.767 | 0.876 | 0.818 |
| Naive Bayes | 0.854 | 0.933 | 0.854 | 0.874 |
| Logistic regression | 0.877 | 0.892 | 0.877 | 0.821 |
| Gradient boosting | 0.863 | 0.854 | 0.863 | 0.858 |
| AdaBoost | 0.867 | 0.859 | 0.867 | 0.863 |
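
In the model comparison table above, the Recall column equals the Accuracy column for every model. This is the pattern that support-weighted averaging of per-class metrics produces, because weighted recall reduces algebraically to overall accuracy. The Python sketch below illustrates the relationship. It rests on assumptions that are not stated in the table: that the metrics are support-weighted averages, that the task is binary, and that the counts in the hypothetical confusion matrix are representative. The function name `weighted_metrics` and the sample size of 1000 are illustrative only.

```python
from typing import Dict, List


def weighted_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """Support-weighted precision, recall and F1, plus overall accuracy.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[c][c] for c in range(n_classes)) / total

    precision = recall = f1 = 0.0
    for c in range(n_classes):
        tp = confusion[c][c]
        support = sum(confusion[c])                   # true samples of class c
        predicted = sum(row[c] for row in confusion)  # samples predicted as c
        p = tp / predicted if predicted else 0.0      # undefined precision -> 0
        r = tp / support if support else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = support / total                      # class share of the data
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts (an assumption, not data from the paper): every one of
# 1000 samples is assigned to a majority class that holds 876 of them.
majority_only = [[876, 0],
                 [124, 0]]
result = weighted_metrics(majority_only)
print({name: round(value, 3) for name, value in result.items()})
# -> {'accuracy': 0.876, 'precision': 0.767, 'recall': 0.876, 'f1': 0.818}
```

Under these assumptions, a classifier that never predicts the minority class yields the same four values as the SVM and Neural network rows. This is a consistency check on how the columns relate, not a claim about how those models actually behaved. It also shows why the weighted F1 need not be the harmonic mean of the weighted precision and recall reported in the same row.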

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mahmood, S.; Hasan, R.; Yahaya, N.A.; Hussain, S.; Hussain, M. Evaluation of the Omni-Secure Firewall System in a Private Cloud Environment. Knowledge 2024, 4, 141-170. https://doi.org/10.3390/knowledge4020008
