Three-Dimensional Particle-Discrete Datasets: Enabling Multidimensional Particle System Characterization Using X-Ray Tomography

Abstract

1. Introduction

1.1. The Need for 3D Particle-Discrete Data

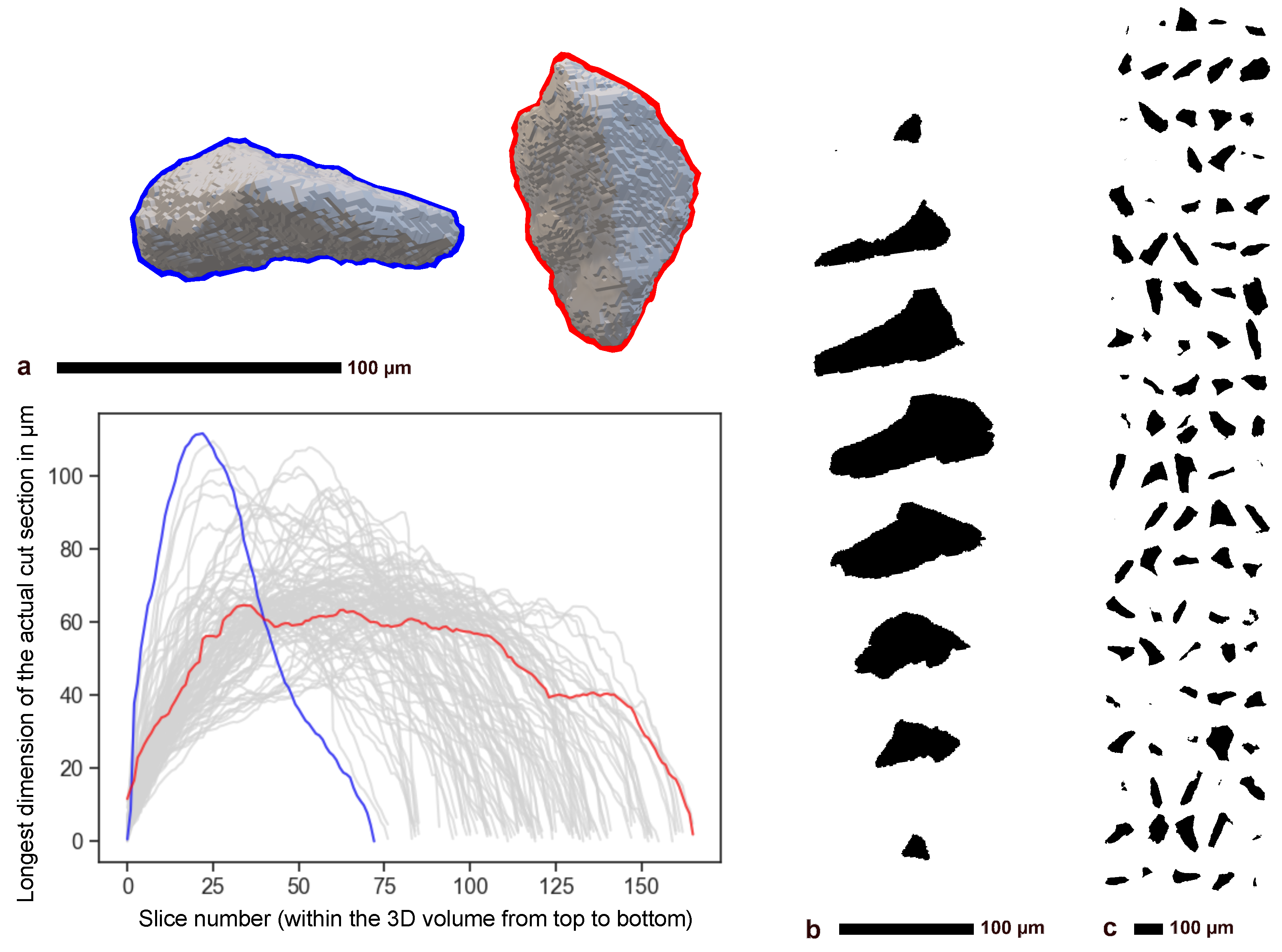

1.2. Discretization of Particle Data

1.2.1. The Particle Interface to Multidimensional Analysis Methods

1.2.2. From 2D to 3D Description via Direct Imaging

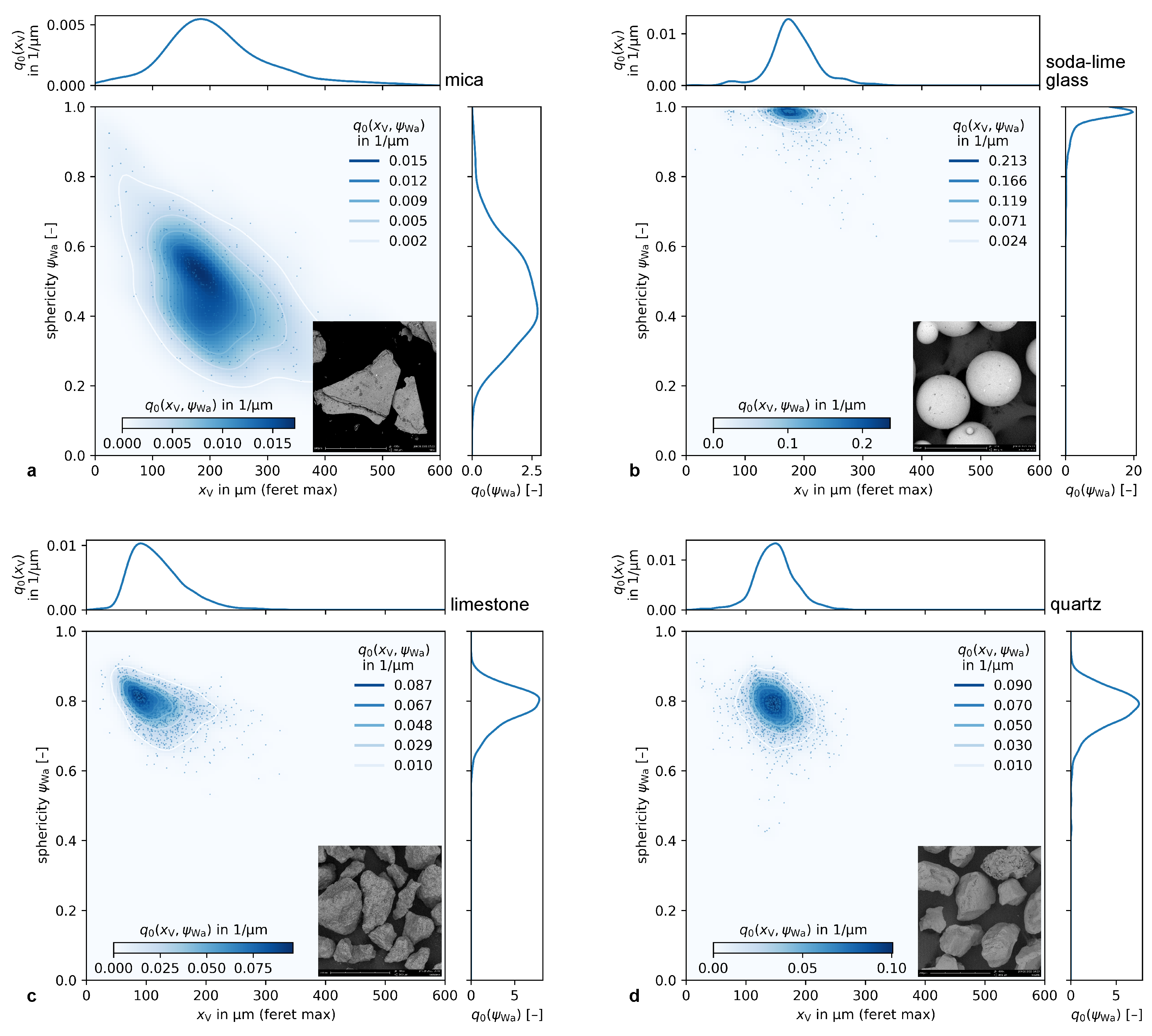

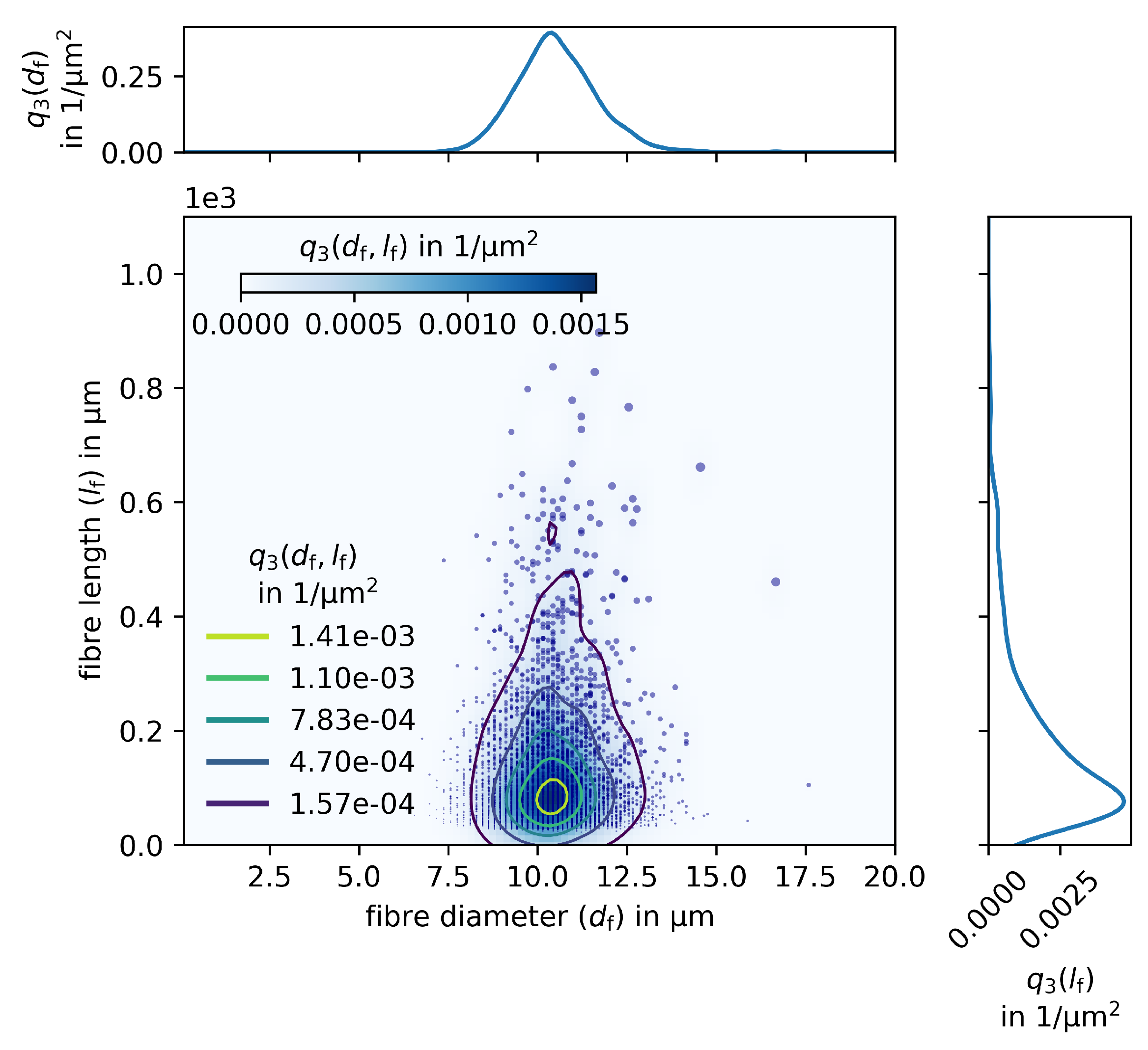

1.3. Multidimensional Characterization

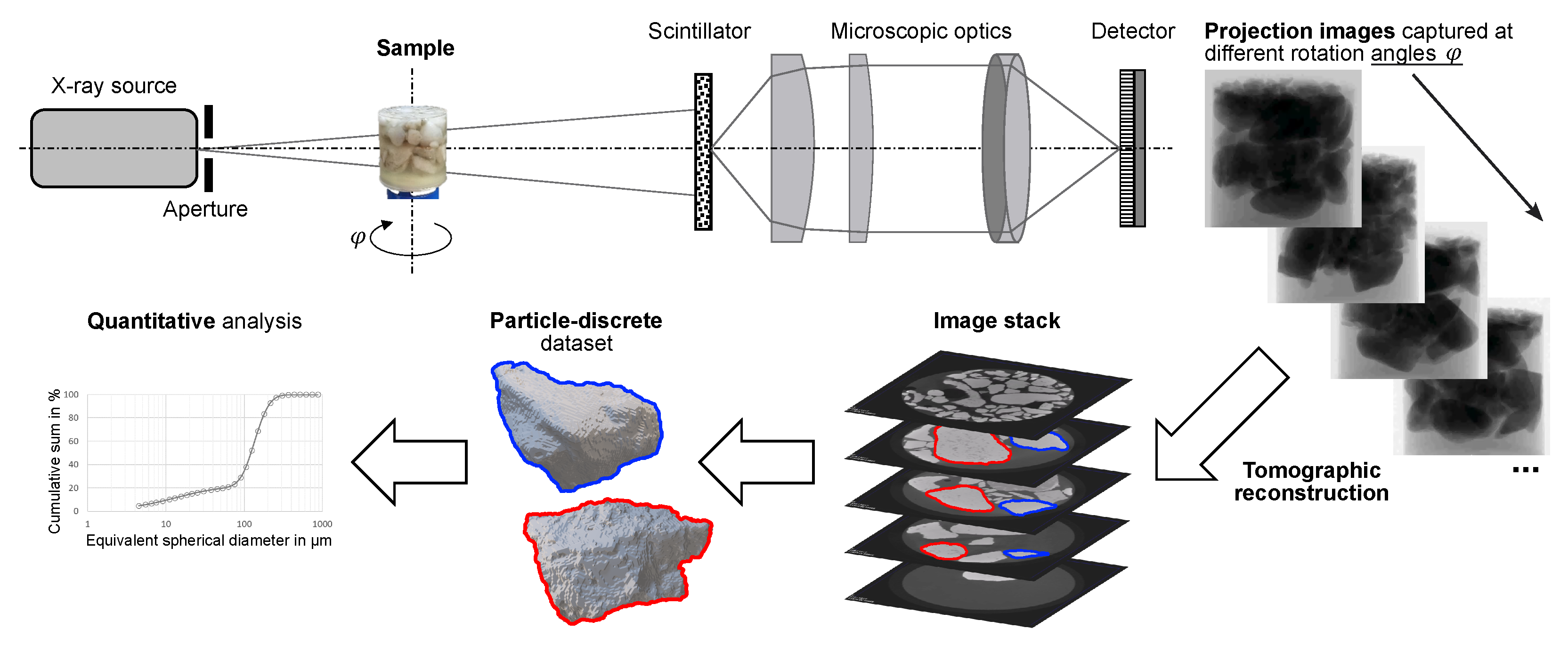

2. X-Ray Tomography as a Key Technique for 3D Particle-Discrete Analysis

2.1. X-Ray Microscopy

2.2. Main Factors Influencing Quantitative Particle Analysis

2.2.1. Particle Sample

2.2.2. Measurement Setup

2.2.3. Artifacts

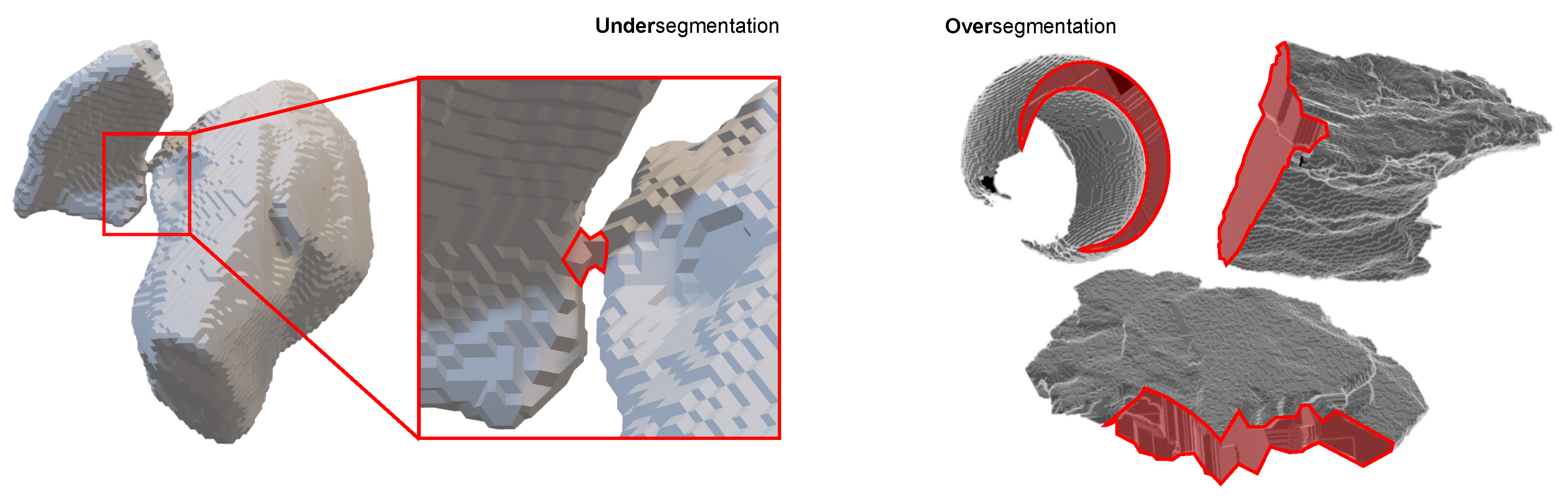

2.2.4. Image Processing

2.2.5. Summary

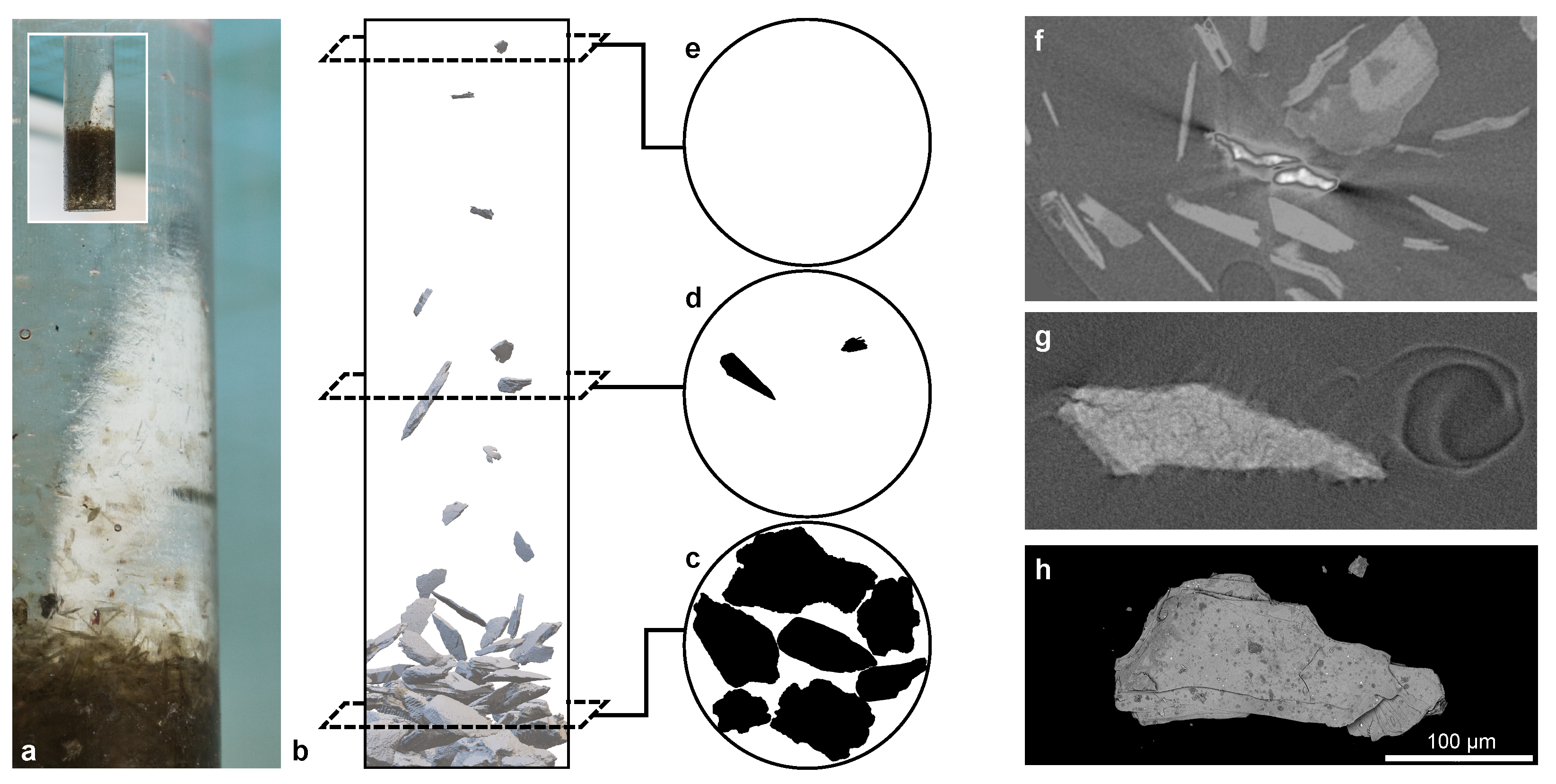

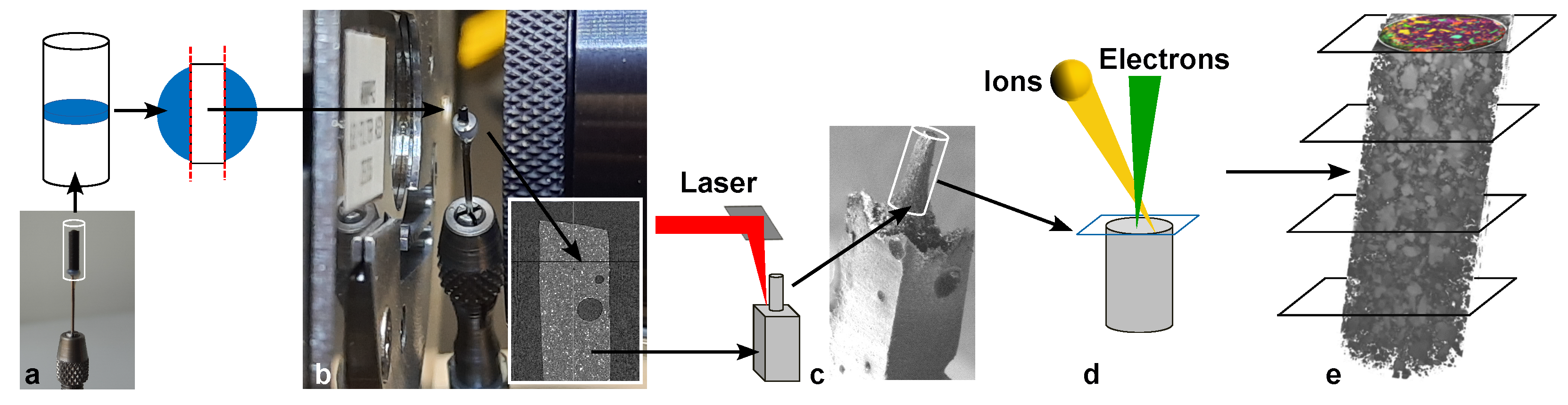

3. Supporting Particle Sample Preparation Techniques

3.1. General Aspects

3.2. Particle Sample Requirements

3.2.1. Geometry

3.2.2. Dispersity and Homogeneity

3.2.3. Statistics

3.2.4. Validation

3.3. Shock Freezing of a Highly Viscous Matrix

3.4. Epoxy Embedding Adding Low-X-Ray-Attenuating Spacer Particles

4. Application

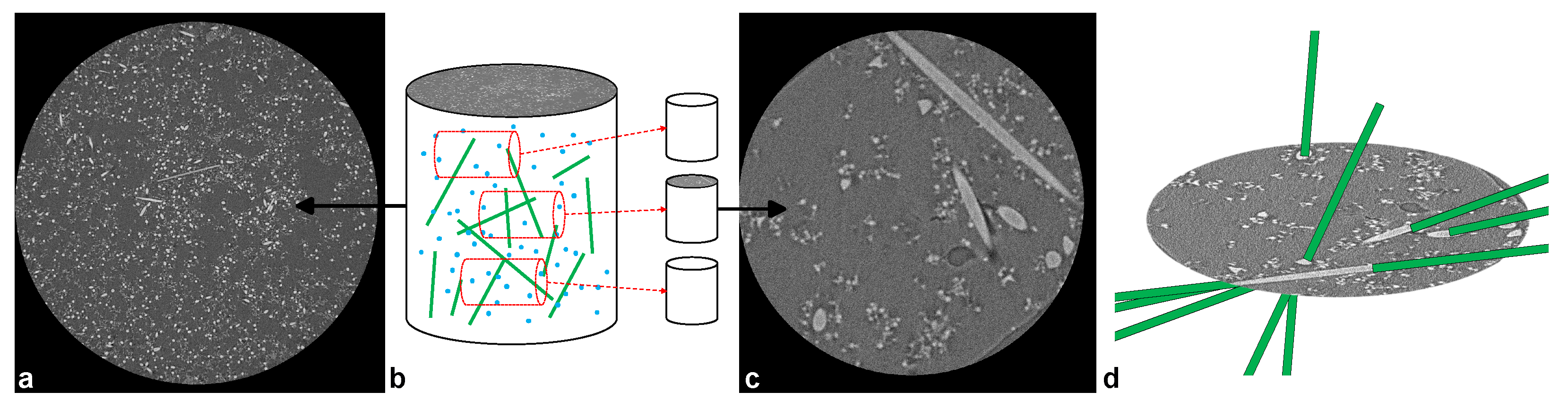

4.1. Multi-Scale Analysis

4.2. Correlative Analysis

5. Challenges and Future Directions

5.1. The FAIR Principle Applied to Particle Data

5.2. 3D Multi-Phase Particle-Discrete Datasets

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| BH | Beam Hardening |
| CT | Computed Tomography |
| DFG | German Research Foundation |
| DIA | Dynamic Image Analysis |
| DOI | Digital Object Identifier |
| EDS | Energy Dispersive Spectroscopy |
| FAIR | Findable, Accessible, Interoperable, and Reusable |
| FBP | Filtered Back-Projection |
| FIB | Focused Ion Beam |
| FOV | Field of View |
| KDE | Kernel Density Estimation |
| PARROT | Open Access Archive for Particle Discrete Tomographic Datasets |
| PVE | Partial Volume Effect |
| ROI | Region of Interest |
| SEM | Scanning Electron Microscopy |
| SNR | Signal-to-Noise Ratio |
| TEM | Transmission Electron Microscopy |
| Voxel | Volumetric Pixel (isometric in the case of X-ray tomography) |
| XRM | X-ray Microscope/X-ray Microscopy |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ditscherlein, R.; Peuker, U.A. Three-Dimensional Particle-Discrete Datasets: Enabling Multidimensional Particle System Characterization Using X-Ray Tomography. Powders 2025, 4, 12. https://doi.org/10.3390/powders4020012
