Methodological Rigor in Laboratory Education Research

Abstract

1. Introduction

2. Contextualizing Rigor

2.1. Theory-Informed Research Questions

2.2. Researcher and Participant Biases

2.3. Iteration and Triangulation

3. Illustrative Case

3.1. Context

3.2. Methods

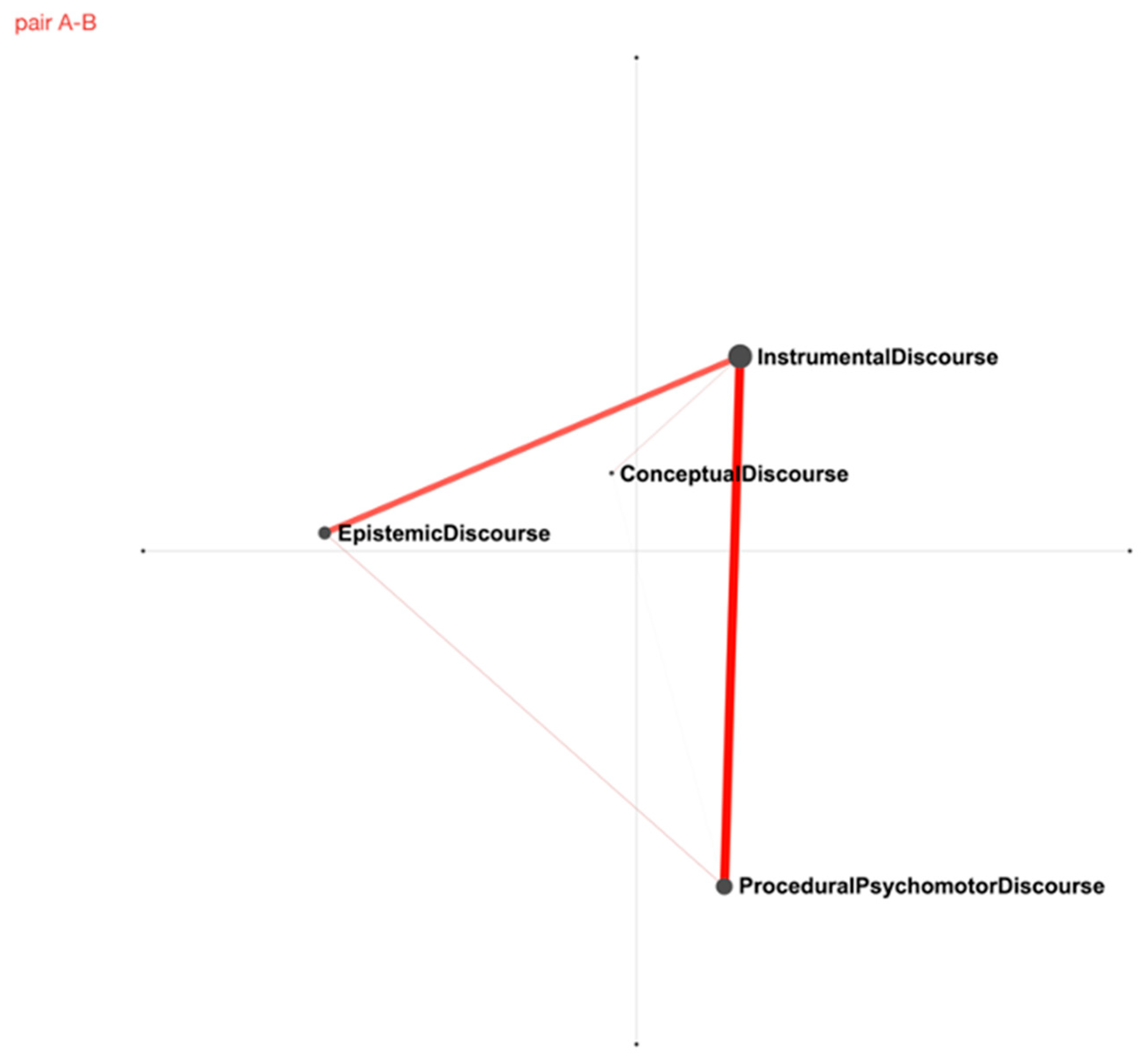

4. Results and Discussion

4.1. Multiperspectivity on Laboratory Learning across the Work Packages

4.2. Triangulation and the Comprehensive Assessment

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Speakers | Utterances | Non-Verbal Cues and Action |
|---|---|---|
| Eliana | And then there is the tailing factor? | Eliana invokes a concept specific to chromatography. |
| Felix | The tailing factor… | Felix intends to type, but discusses the concept instead. |
| Eliana | We have… | Eliana looks at Felix. |
| Felix | This is the extent to which it is lopsided. | Felix looks at Eliana, explains the concept using hand gestures. |
| Felix | i.e. one of them runs down, and the other it is like that where there is a tail, is it not like that? | Felix looks at Eliana, explains the concept using hand gestures. |


© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Agustian, H.Y. Methodological Rigor in Laboratory Education Research. Laboratories 2024, 1, 74-86. https://doi.org/10.3390/laboratories1010006
