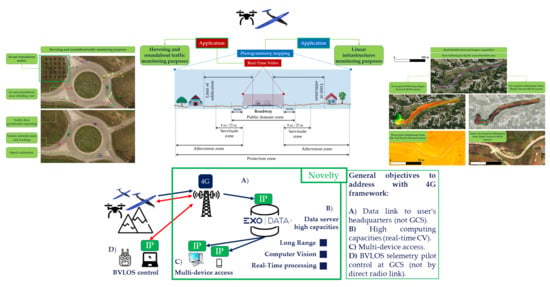

StratoTrans: Unmanned Aerial System (UAS) 4G Communication Framework Applied on the Monitoring of Road Traffic and Linear Infrastructure

Abstract

1. Introduction

2. Materials and Methods

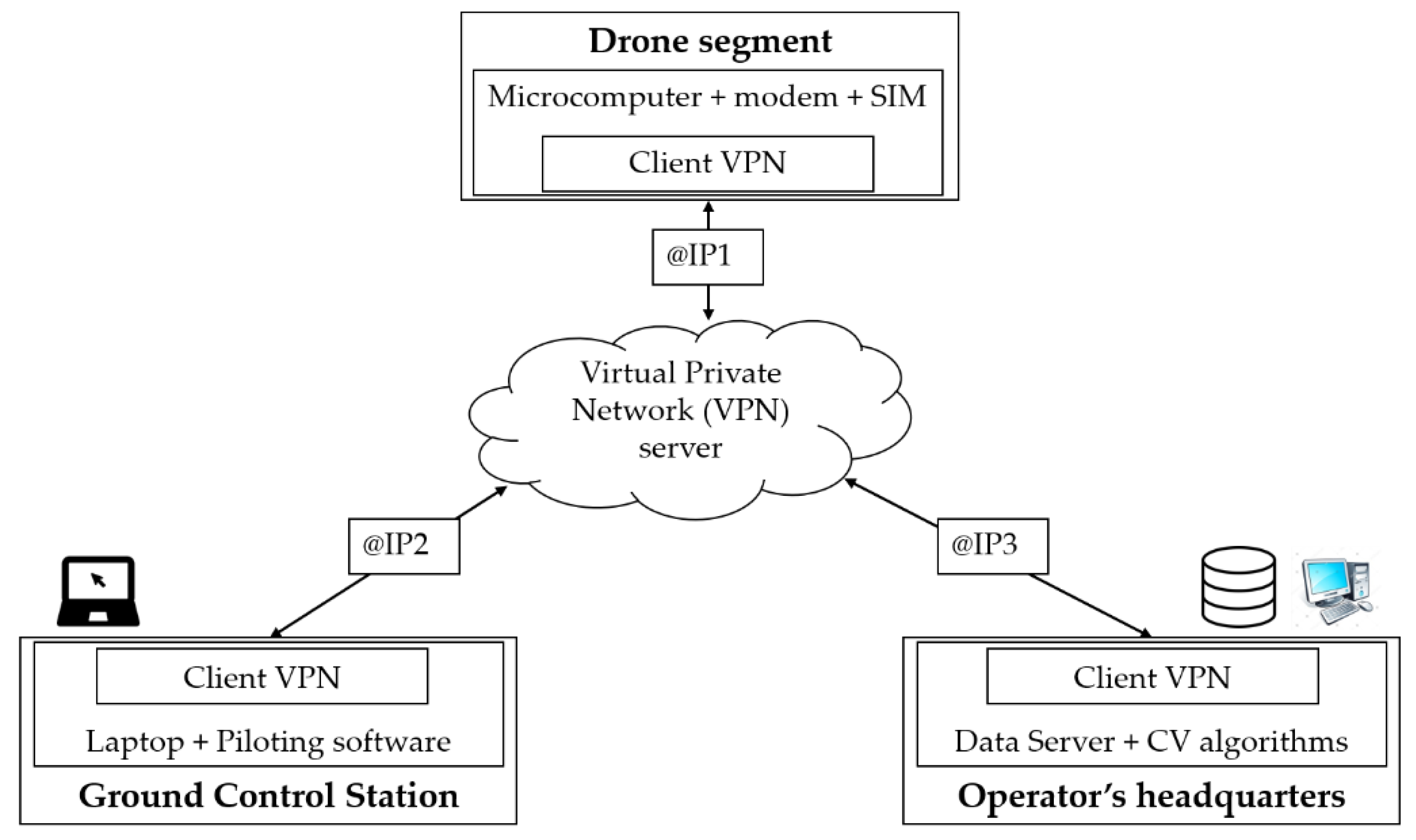

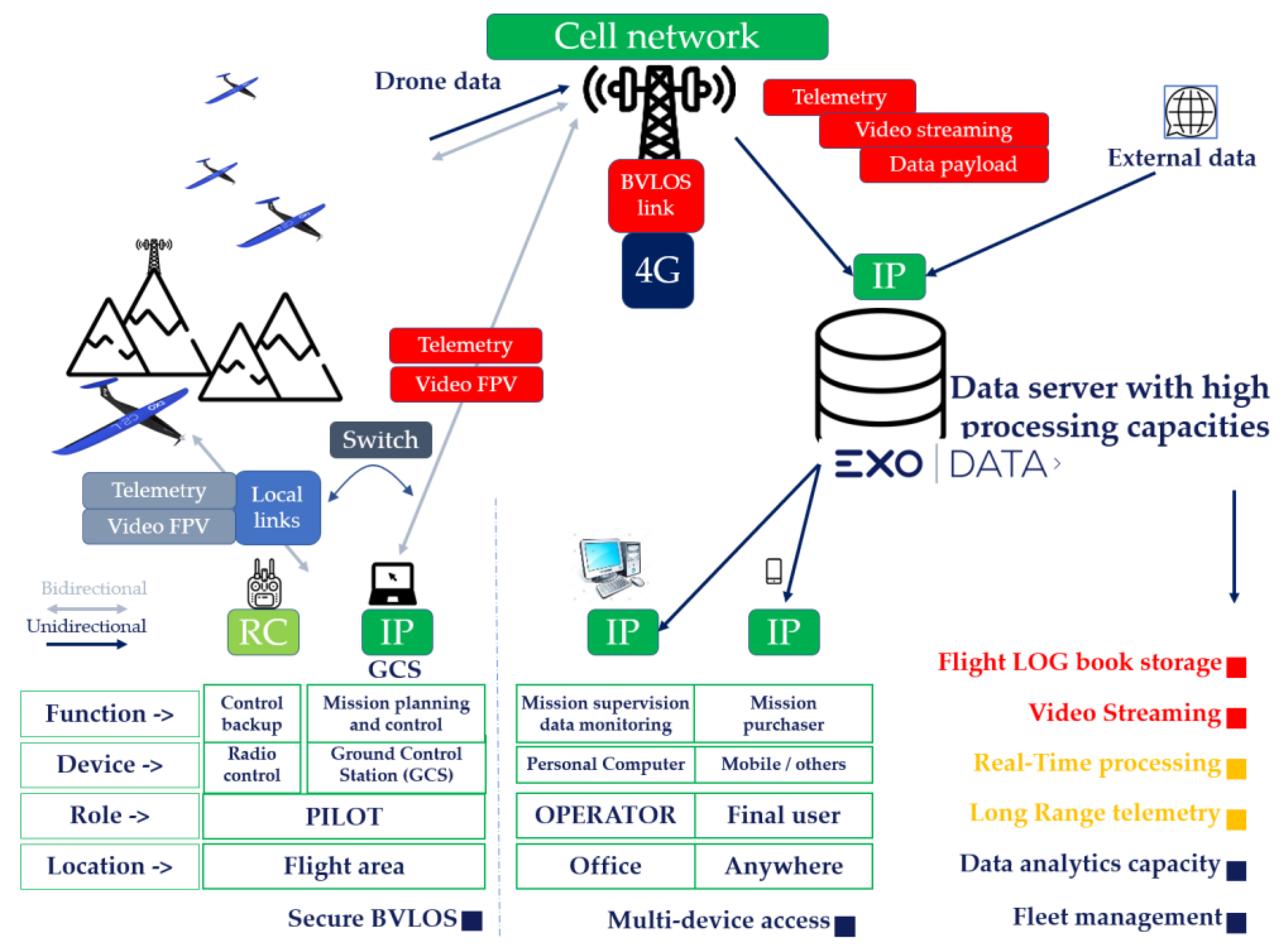

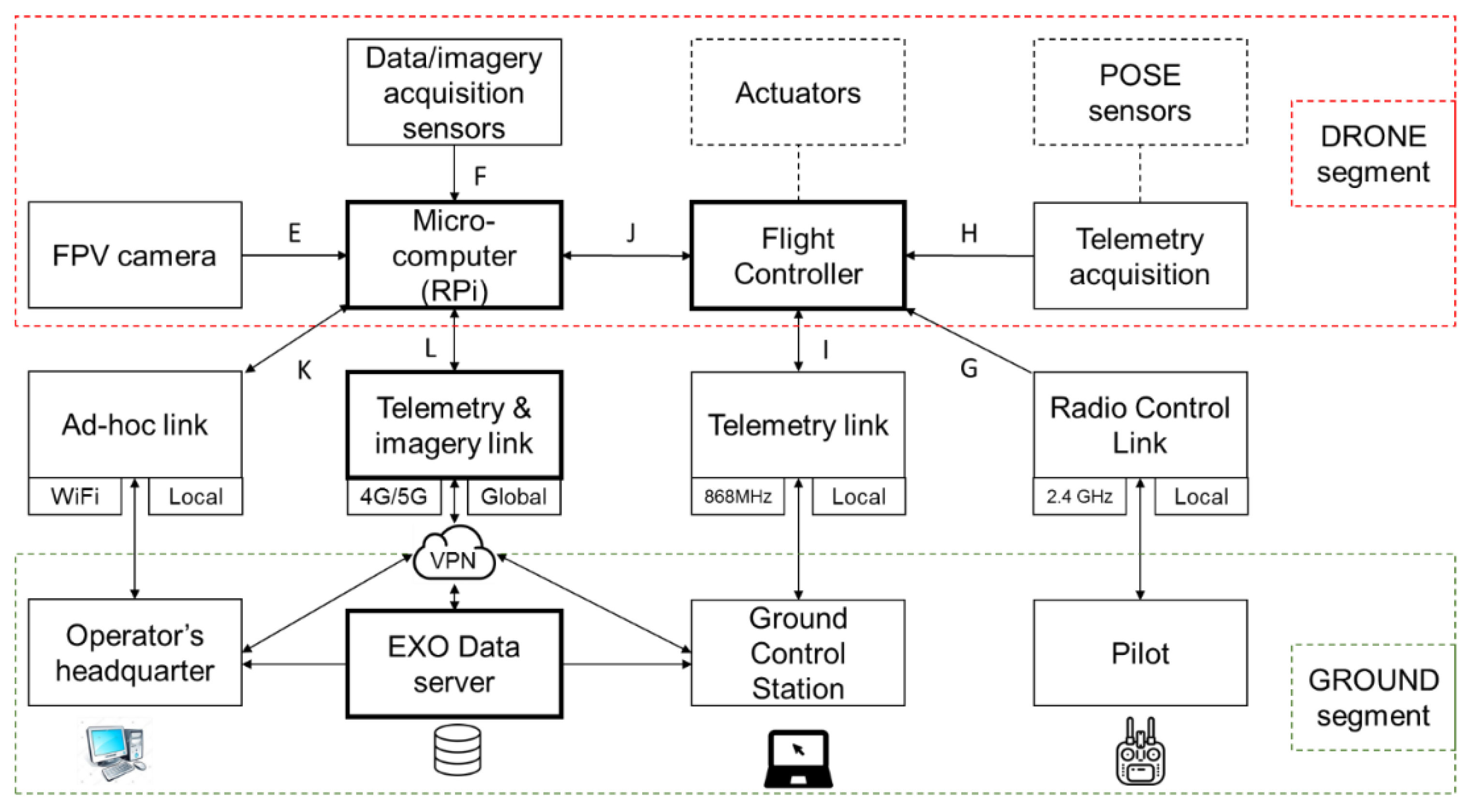

2.1. Methodological Framework for UAS—4G Communications

2.2. Hardware, Software, and Methodology under Use Case

3. Results and Discussion

3.1. Telecommunications Analysis and Applied Results

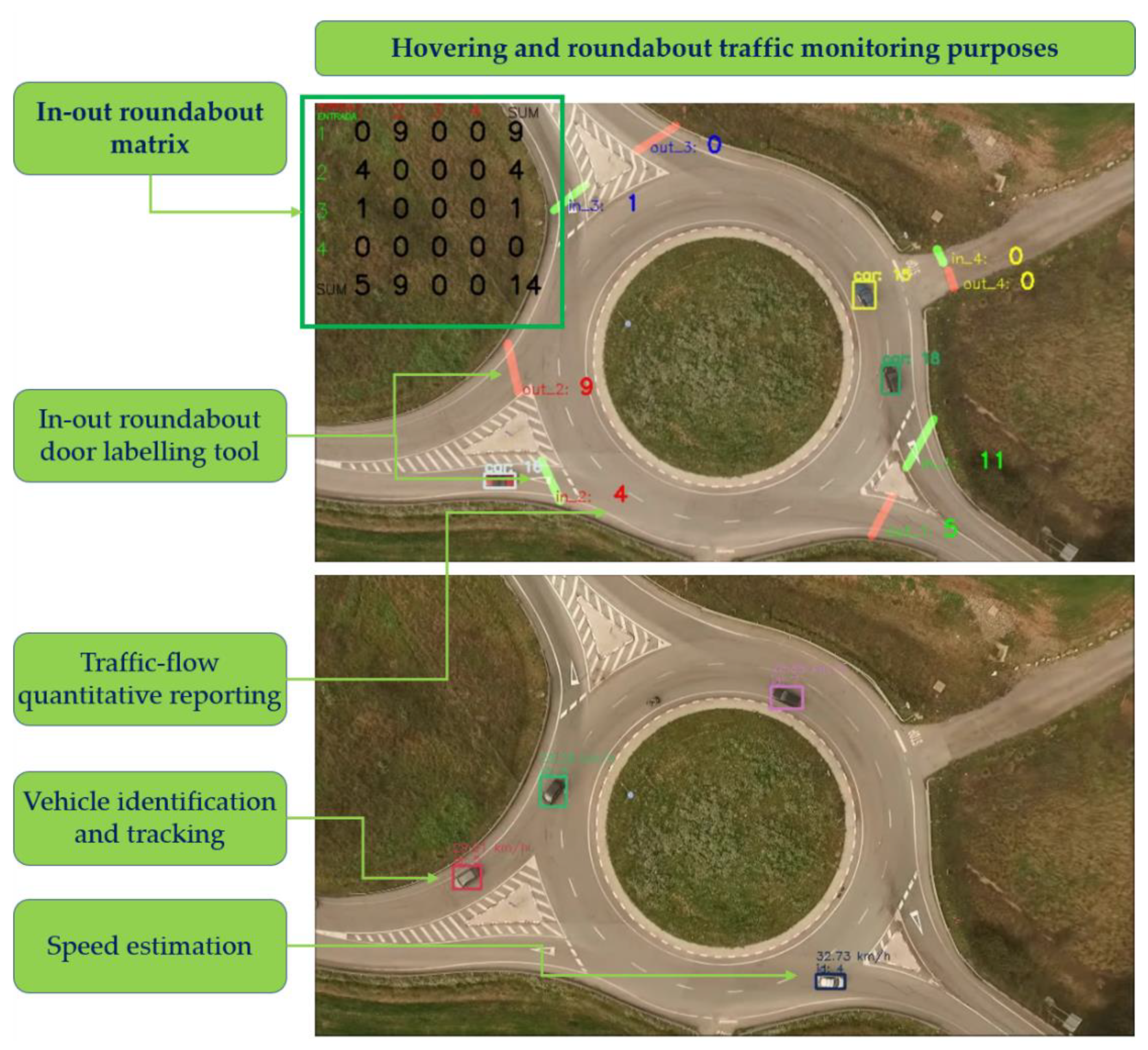

3.2. Traffic Monitoring Applied Results

- (a) Generation of in-out matrices in roundabouts per vehicle type.

- (b) Estimation of vehicle velocities and trajectories.

- (c) Heatmap generation for each vehicle class.
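
The three products listed above can be illustrated with a minimal Python sketch. It is not the authors' implementation, which this section does not describe. It assumes that a detector and tracker have already produced one track per vehicle, and that the image scale (metres per pixel) is constant across the frame, as in a nadir view. The track layout (`cls`, `entry`, `exit`, `points`) and all function names are hypothetical.

```python
from collections import Counter, defaultdict
from math import hypot


def in_out_matrices(tracks):
    """Count (entry, exit) pairs separately for each vehicle class."""
    matrices = defaultdict(Counter)
    for track in tracks:
        matrices[track["cls"]][(track["entry"], track["exit"])] += 1
    return matrices


def mean_speed_kmh(points, metres_per_pixel):
    """Mean speed along a track of (time_s, x_px, y_px) samples sorted by time."""
    elapsed = points[-1][0] - points[0][0]
    if elapsed <= 0:
        raise ValueError("track must span a positive time interval")
    # Sum the pixel length of each segment between consecutive samples.
    dist_px = sum(
        hypot(x2 - x1, y2 - y1)
        for (_, x1, y1), (_, x2, y2) in zip(points, points[1:])
    )
    # Pixels -> metres -> m/s -> km/h.
    return dist_px * metres_per_pixel / elapsed * 3.6


def heatmap(tracks, cls, cell_px):
    """Count track samples of one vehicle class per square grid cell."""
    cells = Counter()
    for track in tracks:
        if track["cls"] != cls:
            continue
        for _, x, y in track["points"]:
            cells[(int(x // cell_px), int(y // cell_px))] += 1
    return cells


# Hypothetical tracks: roundabout arms are labelled N, E, S.
tracks = [
    {"cls": "car", "entry": "N", "exit": "S",
     "points": [(0.0, 0, 0), (1.0, 30, 40), (2.0, 60, 80)]},
    {"cls": "car", "entry": "N", "exit": "S",
     "points": [(0.0, 0, 0), (1.0, 0, 50)]},
    {"cls": "truck", "entry": "E", "exit": "N",
     "points": [(0.0, 10, 10), (2.0, 10, 70)]},
]

matrices = in_out_matrices(tracks)
speed = mean_speed_kmh(tracks[0]["points"], metres_per_pixel=0.1)
car_cells = heatmap(tracks, "car", cell_px=50)
```

Keeping one `Counter` per class makes each in-out matrix sparse: only observed entry-exit pairs are stored, and unseen pairs read as zero. With an oblique camera, the constant-scale assumption no longer holds, and pixel coordinates would first need to be projected to ground coordinates.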

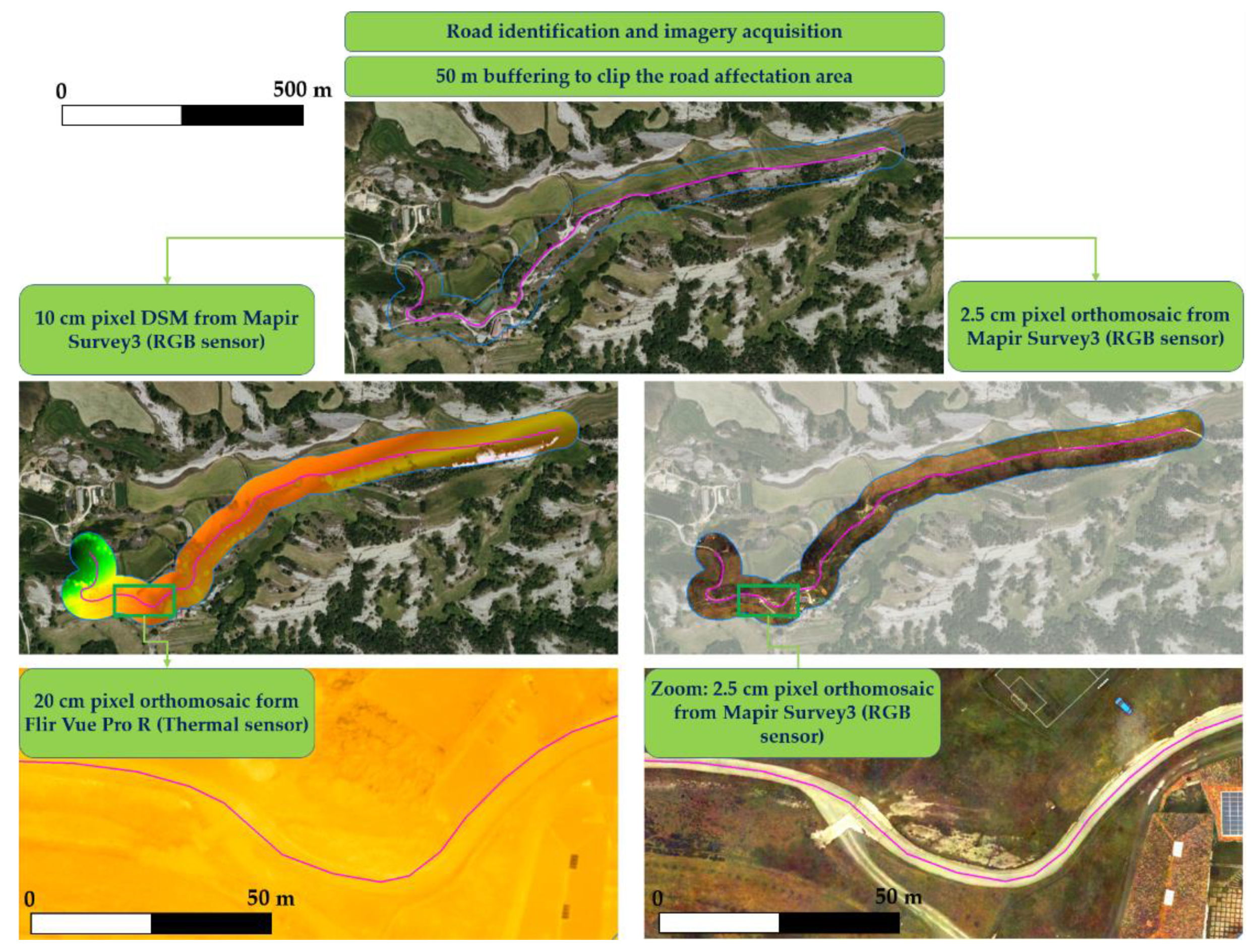

3.3. Linear Infrastructure Monitoring Applied Results

- (a) Map update of constructed elements surrounding the linear infrastructure.

- (b) Detection of horizontal signing and concrete degradation.

- (c) Monitoring of conservation/maintenance works.

- (d) Location of wildlife paths or other thermal indicators of fauna activity.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guirado, R.; Padró, J.-C.; Zoroa, A.; Olivert, J.; Bukva, A.; Cavestany, P. StratoTrans: Unmanned Aerial System (UAS) 4G Communication Framework Applied on the Monitoring of Road Traffic and Linear Infrastructure. Drones 2021, 5, 10. https://doi.org/10.3390/drones5010010
