1. Introduction

Breast cancer is one of the most invasive and deadly cancers in women around the world. According to the World Health Organization (WHO), 2.3 million women were diagnosed with breast cancer in 2020, and there were 685,000 deaths from breast cancer worldwide [1]. At the end of 2020, 7.8 million women were alive who had been diagnosed with breast cancer in the past five years, making breast cancer the most prevalent cancer in the world [2]. Breast cancer occurs in women of any age after puberty in every country of the world, but its incidence increases later in life [3]. Breast cancer is the most common cancer among Saudi women, with a prevalence of 21.8% [4], and a cancer survey has shown that it is one of the nine leading causes of death among Saudi women [2]. In China, breast cancer is becoming the most commonly diagnosed cancer in women; China accounts for 12.2% of newly diagnosed breast cancer cases and 9.6% of breast cancer deaths worldwide.

Mammography plays an essential role in the early detection of breast cancer and helps reduce the death rate. However, many factors affect the reading of mammograms and make it difficult for radiologists to reach a correct diagnosis [5]. These factors include breast tissue density and mammogram quality: the effectiveness of mammography ranges from 60 to 90%, and radiologists miss an estimated 10 to 30% of mammographic lesions [6,7,8].

BI-RADS (Breast Imaging Reporting and Data System) is the lexicon for mammographic standardization and quality assurance published by the American College of Radiology (ACR). The main objective of BI-RADS is to standardize mammography reporting among radiologists and make reports consistent for clinicians [9]. The BI-RADS mammography lexicon describes the qualitative characteristics of a breast mass, namely its shape, margin, and density. Radiologists assign a mass to a BI-RADS category according to these defined features, as explained in Table 1.
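The final BI-RADS assessment is an ordered category, which makes it convenient to handle as an ordered enumeration when filtering or stratifying a mammogram dataset by category. The sketch below uses the standard ACR mammography assessment categories 0 to 6; it does not reproduce the feature columns of Table 1, and the member names and the helper `needs_tissue_diagnosis` are hypothetical labels chosen for illustration.

```python
from enum import IntEnum


class BIRADS(IntEnum):
    """ACR BI-RADS mammography assessment categories (member names are illustrative)."""
    INCOMPLETE = 0                       # additional imaging evaluation needed
    NEGATIVE = 1
    BENIGN = 2
    PROBABLY_BENIGN = 3
    SUSPICIOUS = 4
    HIGHLY_SUGGESTIVE_OF_MALIGNANCY = 5
    KNOWN_BIOPSY_PROVEN_MALIGNANCY = 6


def needs_tissue_diagnosis(category: BIRADS) -> bool:
    """Hypothetical helper: categories 4 and 5 carry a tissue-diagnosis (biopsy) recommendation."""
    return category in (BIRADS.SUSPICIOUS, BIRADS.HIGHLY_SUGGESTIVE_OF_MALIGNANCY)


# Because IntEnum members compare as integers, a range such as
# "BI-RADS 1 to 5" can be selected with ordinary comparisons.
evaluated = [c for c in BIRADS if BIRADS.NEGATIVE <= c <= BIRADS.HIGHLY_SUGGESTIVE_OF_MALIGNANCY]
```

Using `IntEnum` keeps the ordinal meaning of the categories while giving each one a readable name.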

Because of the biological structure of the breast, cancer cells can spread to the lymph nodes and from there to other parts of the body, such as the lungs. Many researchers have also found that hormonal, lifestyle, and environmental changes contribute to the increase in breast cancer [10]. Mammography obtains images of the internal structures of the breast using a low-dose X-ray. It is one of the most important methods for detecting breast cancer because it delivers a much lower radiation dose than devices used previously, and it has recently been shown to be the safest modality for breast cancer screening [5].

Early detection of breast cancer depends on two factors: first, a proper acquisition process to obtain mammogram images and, second, accurate analysis of those images for diagnosis. Manual analysis is time-consuming and can delay diagnosis, and reading mammograms accurately is always difficult, especially for large databases. This challenge can be addressed with computational methods such as image processing techniques or breast cancer analysis algorithms, which speed up analysis and reduce the workload of medical experts. However, it is essential to study the nature of mammogram images before applying image-processing-based algorithms to detect breast cancer [11].

Computerized analysis of mammography is also challenging because one of the most important challenges is observing the pectoral muscles. The geometric shape of the pectoral muscles and their location depend on the mammographic images’ specific view [

12,

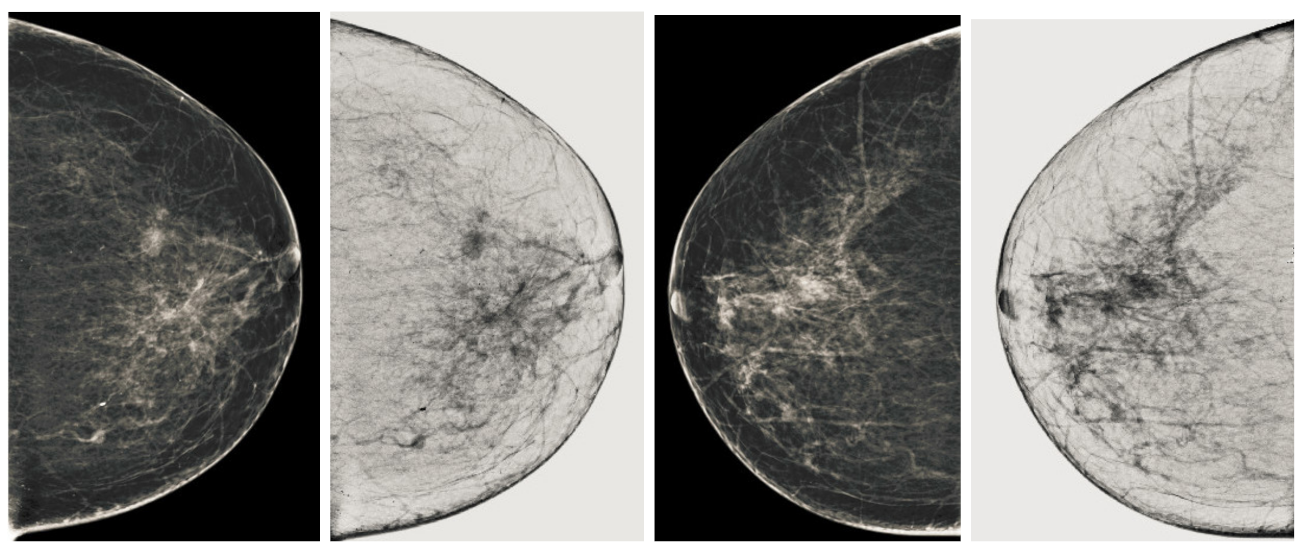

13]. There are two types of mammographic image views: the carnio-caudal (CC) view and the mediolateral oblique (MLO) view, and these views are shown in the

Figure 1. The pectoral muscle on the CC view is semi-elliptical along the breast wall. In contrast, the MLO view covers most of the upper mammogram coverage and roughly corresponds to the overlapping right-angled triangle, as shown in

Figure 1. Due to their appearance, both views suffered from low contrast, which made it difficult to see cancerous areas in some cases. Image enhancement requires correct observation and helps segment abnormal regions for disease classification. The quality of mammography images in terms of noise reduction and contrast enhancement is improved by using the image enhancement technique. The main purpose of implementing the image enhancement technique is to help the computerized breast cancer detection system to detect mammographic lesions with poor visibility and improve low contrast. Low contrast regions with small abnormalities are mostly hidden in the tissue of mammogram images, which makes it challenging to analyze the abnormal region, and also provides false detection.
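The right-angled-triangle appearance of the pectoral muscle in the MLO view can serve as a rough geometric prior. The sketch below assumes two things that are not stated in the text: that the chest wall lies on the image side carrying more total intensity, and that the muscle sits in the top corner on that side. The functions `chest_wall_side` and `pectoral_triangle_mask`, and the `base`/`height` parameters, are hypothetical.

```python
import numpy as np


def chest_wall_side(image: np.ndarray) -> str:
    """Heuristic guess of the chest-wall side of an MLO mammogram.

    Assumption: the breast (and so the pectoral muscle) lies against the
    border whose half of the image holds more total intensity.
    """
    w = image.shape[1]
    left = image[:, : w // 2].sum()
    right = image[:, w - w // 2:].sum()   # same width as the left half
    return "left" if left >= right else "right"


def pectoral_triangle_mask(shape, base: float, height: float, side: str) -> np.ndarray:
    """Boolean mask of a right-angled triangle in the top corner on `side`.

    `base` is the triangle's extent along the top edge (columns) and
    `height` its extent along the chest-wall edge (rows); the hypotenuse
    approximates the pectoral muscle boundary.
    """
    rows, cols = np.indices(shape)
    if side == "right":
        cols = shape[1] - 1 - cols        # mirror so the corner is at column 0
    # Inside the triangle: below the line col/base + row/height = 1.
    return cols / base + rows / height < 1.0
```

Such a mask only gives an initial estimate; methods reviewed in the related work refine the boundary with line fitting, region growing, or active contours.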

Image enhancement techniques are generally divided into three categories: spatial-domain techniques, frequency-domain techniques, and techniques that combine the two [14]. Depending on their use on medical images and the nature of the image, these techniques are further characterized into four types: conventional, region-based, feature-based, and fuzzy-based image enhancement [15]. Conventional techniques are widely used because they can be adapted for both local and global enhancement of mammograms, and they can reduce noise while enhancing the image. Region-based methods are best suited for enhancing the contrast of a specified region of varying shape and size, but they sometimes introduce artifacts that lead to misclassification. Feature-based enhancement techniques are used to enhance calcifications as well as masses on mammograms. Transform-based techniques are widely used to enhance mammograms, but high-frequency components remain and introduce noise. Fuzzy enhancement is also used to normalize the image and produce good contrast, but it loses detail in several cases.
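Global histogram equalization (HE) is the textbook example of a conventional spatial-domain technique, and it is also one of the baselines used for comparison in this work. A minimal sketch for 8-bit grayscale input, using the classic CDF-stretching mapping (not the proposed method):

```python
import numpy as np


def histogram_equalization(image: np.ndarray, levels: int = 256) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image."""
    hist = np.bincount(image.ravel(), minlength=levels)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]   # CDF value at the darkest occupied level
    if cdf[-1] == cdf_min:                 # constant image: nothing to stretch
        return image.copy()
    # Map each level through the normalized CDF so that the occupied
    # range is spread over [0, levels - 1].
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * (levels - 1))
    lut = np.clip(lut, 0, levels - 1).astype(np.uint8)
    return lut[image]
```

Because the mapping is derived from the whole image, large uniform regions such as the background dominate the histogram, which is one reason global HE can over-enhance noise in mammograms.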

In this research work, we propose an image enhancement technique for mammogram images for the early detection of breast cancer. The proposed technique has four steps. In the first two steps, we pre-process the image and split it into three channels, because mammograms are stored in JPEG format in almost all databases, and we remove uneven illumination using morphological techniques to obtain a uniform background. The third step obtains a well-contrasted grayscale image that gives a clear view of the breast and improves the visibility of the abnormal region. The fourth step removes the pectoral muscle from the mammogram, because mammograms contain a large amount of background. For this step, we use a technique based on seed region points chosen according to the orientation of the muscle. We call this four-step module the Breast Cancer Image Enhancement Technique; it provides a computerized process for the early detection of breast cancer. However, the main challenge is the impact of the image enhancement technique on the segmentation of the abnormal region. We therefore propose a post-processing module based on second-order Gaussian filtering, diffusion filtering, and K-means for segmenting cancer regions to detect breast cancer. The main contributions of this research work are:
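The illumination-correction step can be illustrated with a common morphological approach. The sketch below assumes the background is estimated by a grayscale opening with a square structuring element larger than the lesions of interest and then subtracted (a white top-hat); the paper does not specify its exact operator or element size here, so `remove_uneven_illumination` and its default `size=15` are hypothetical.

```python
import numpy as np


def _rank_filter(image: np.ndarray, size: int, reducer) -> np.ndarray:
    """Min or max over a size x size square window with edge-replicated borders.

    Written for clarity; scipy.ndimage.grey_opening is much faster on
    full-resolution mammograms.
    """
    r = size // 2
    padded = np.pad(image, r, mode="edge")
    h, w = image.shape
    # Each shifted view of the padded image corresponds to one window offset.
    shifts = [padded[dy:dy + h, dx:dx + w] for dy in range(size) for dx in range(size)]
    return reducer(np.stack(shifts), axis=0)


def remove_uneven_illumination(image: np.ndarray, size: int = 15) -> np.ndarray:
    """White top-hat: the image minus its grayscale opening.

    The opening (erosion followed by dilation) estimates the slowly varying
    background; subtracting it flattens the illumination while keeping
    bright structures smaller than the structuring element.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    img = image.astype(np.float64)
    eroded = _rank_filter(img, size, np.min)
    background = _rank_filter(eroded, size, np.max)
    return img - background   # the opening never exceeds the image, so this is >= 0
```

The structuring-element size controls the trade-off: too small and lesions are absorbed into the background estimate, too large and residual illumination gradients survive.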

Implementation of a novel image enhancement technique for mammogram images that improves their overall quality of appearance.

This image enhancement technique can be used with post-processing steps for the early detection of breast cancer.

The contrast analysis for observing abnormal lesions improves segmentation and helps diagnose the progression of breast cancer.

These pre-processing steps improve the performance of existing machine learning-based methods.

The image enhancement may improve the training process on extensive databases for supervised breast cancer detection methods.

The article is organized as follows. Section 2 summarizes the related work. Section 3 explains the proposed method and each of its steps in detail. Section 4 deals with the databases and measurement parameters. Section 5 reports the experimental results. Finally, the conclusions and future directions are presented in Section 6.

2. Related Work

Mendez et al. [16] implemented a method based on filtering techniques to detect breast cancer in original mammogram images. They used a spatial averaging filter to smooth the image, applied histogram-based thresholding, and then applied a gradient to the filtered image to track the abnormal region. Abdel et al. [17] proposed a technique based on different threshold values. Mario et al. [18] implemented a method based on wavelet decomposition and depth reduction of the mammogram background to obtain the cancerous area, achieving 85% accuracy. Karssemeijer and Brake [19] implemented a multiresolution scheme based on the Hough transform to predict the pectoral muscle accurately. Camillus et al. [20] developed a graph-cut-based breast segmentation method to identify the pectoral muscle; it produced ragged lines, which were corrected using a Bezier curve. Ferrari et al. [21] modified the method proposed by Karssemeijer and Brake to detect the pectoral muscle. Kwok et al. [22] implemented a pectoral muscle detection method using iterative thresholding. Wirth and Stapinski [23] implemented an active-contour-based method for breast region segmentation in mammographic images. Kwok et al. [24] implemented computerized segmentation of the pectoral muscle in mediolateral oblique mammograms. They used basic geometry: the pectoral edge was estimated by a straight line, which was validated based on its location and orientation in the image. They removed noise using a median filter, but their method still produced false detections. Ferrari et al. [25] implemented a breast boundary identification method for mammograms. The method modified the image contrast, binarized the image, and used a chain-code algorithm to extract an approximate contour, which was then used as input to an active contour model to obtain the breast boundary for breast cancer analysis.
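Iterative threshold selection, as used by Kwok et al. [22], can be sketched with the classic Ridler–Calvard (isodata) scheme: start from the global mean, split the pixels, and move the threshold to the midpoint of the two class means until it stabilizes. The exact variant used in [22] is not described here, so this is an assumption, and `iterative_threshold` with its `tol` and `max_iter` parameters is hypothetical.

```python
import numpy as np


def iterative_threshold(image: np.ndarray, tol: float = 0.5, max_iter: int = 100) -> float:
    """Ridler-Calvard (isodata) iterative threshold selection."""
    pixels = image.astype(np.float64).ravel()
    t = pixels.mean()                      # initial guess: global mean intensity
    for _ in range(max_iter):
        low, high = pixels[pixels <= t], pixels[pixels > t]
        if low.size == 0 or high.size == 0:
            break                          # degenerate split: keep the current threshold
        new_t = 0.5 * (low.mean() + high.mean())
        if abs(new_t - t) < tol:
            return new_t
        t = new_t
    return t
```

For pectoral muscle detection, such a threshold is typically computed inside a region of interest near the chest-wall corner rather than over the whole mammogram.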

Raba et al. [26] proposed a mammogram segmentation technique that separates the breast region from the background and removes the pectoral muscle. They used an adaptive histogram approach to separate the breast from the background and then a region growing algorithm to remove the pectoral muscle. They tested 320 images and achieved around 98% accuracy but only around 86% pectoral muscle suppression, and their method over-segmented dense images. Mirzaalian et al. [27] implemented a non-linear diffusion algorithm to remove the pectoral muscle. Martin et al. [28] implemented a method to obtain the breast skin line in mammographic images. The breast edge provides important information about the shape and deformation of the breast, which image processing techniques generally use for image registration and anomaly detection. Kinoshita et al. [29] implemented a method using the Radon transform to estimate pectoral muscle boundaries. Wang et al. [30] implemented automated pectoral muscle detection based on a discrete-time Markov chain and an active contour model. Hu et al. [31] implemented a method for suspicious lesion segmentation in mammograms based on adaptive thresholding within a multiresolution analysis. Their method combined global and adaptive local thresholding and was tested on 170 mammograms; its output was noisy, and even after a morphological filter was applied to remove the noise, it lost details and produced false detections. Chakraborty et al. [32] implemented a shape-based function with an average gradient to detect the position of the pectoral muscle boundary as a straight line. Chen and Zwiggelaar [33] implemented another histogram-based thresholding method to separate the breast area from the background. Connected-component labeling was applied to the segmented binary image, and a region-based technique was then used to remove the pectoral muscle, starting from a seed point close to the pectoral muscle boundary.
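Seeded region growing recurs in these pectoral muscle methods and in the fourth step of the proposed technique. The sketch below grows a 4-connected region from a seed and accepts neighbors whose intensity is within a fixed tolerance of the seed intensity. The seed placement (top corner on the chest-wall side), the connectivity, the tolerance of 20 grey levels, and the names `region_grow` and `remove_pectoral` are assumptions for illustration, not the exact procedure of any cited work.

```python
from collections import deque

import numpy as np


def region_grow(image: np.ndarray, seed: tuple, tolerance: float) -> np.ndarray:
    """4-connected region growing from `seed` with a fixed intensity tolerance."""
    h, w = image.shape
    seed_value = float(image[seed])
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:                                   # breadth-first flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr, nc]
                    and abs(float(image[nr, nc]) - seed_value) <= tolerance):
                mask[nr, nc] = True
                queue.append((nr, nc))
    return mask


def remove_pectoral(image: np.ndarray, chest_wall_on_left: bool, tolerance: float = 20):
    """Hypothetical helper: seed in the top chest-wall corner, grow, and blank the region."""
    seed = (0, 0) if chest_wall_on_left else (0, image.shape[1] - 1)
    muscle = region_grow(image, seed, tolerance)
    out = image.copy()
    out[muscle] = 0
    return out, muscle
```

A fixed tolerance is the simplest acceptance rule; it also explains why region growing struggles when the muscle and dense fibroglandular tissue have similar intensities, as noted for dense images above.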

Maitra et al. [34] implemented a method that isolates the pectoral muscle from the rest of the tissue using a triangular region and then removes it with a region growing technique. All these methods rely on thresholding, region growing, or seed point techniques, which do not perform well because they require a uniform image. Image contrast enhancement techniques, on the other hand, have been used for decades on videos and images to make image details more observable. Peng et al. [35] implemented a mammogram processing pipeline that estimates the skin–air boundary using a gradient weight map, detects the breast–pectoral boundary using unsupervised pixel labeling, and finally detects the breast region using a texture filter. Bena et al. [36] implemented a mammogram segmentation method based on watershed segmentation and classification with a K-NN classifier, using Haralick texture features computed from gray-level co-occurrence matrices of 60 mammograms. Kaitouni et al. [37] implemented breast tumor segmentation with pectoral muscle removal based on hidden Markov models and region growing. The purpose of the method was to separate the pectoral muscle from the mammogram and extract the breast tumor. The method contains two phases: Otsu thresholding and k-means-based image classification. Podgornova et al. [38] carried out a comparative study of the watershed, mean shift, and k-means methods for segmenting microcalcifications on mammograms; the detection performance of k-means was comparable to that of the watershed and mean shift methods, but it yielded about 57.2% false detections.
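K-means appears in [37], [38], and in the proposed post-processing module. A minimal sketch of Lloyd's k-means applied to pixel intensities alone is shown below; the quantile-based initialization, the default `k=3` (for example background, normal tissue, and bright candidate regions), and the function name `kmeans_intensity` are assumptions, and real pipelines often add spatial or texture features.

```python
import numpy as np


def kmeans_intensity(image: np.ndarray, k: int = 3, iters: int = 100):
    """Lloyd's k-means on pixel intensities only (no spatial features).

    Returns a label image in which 0 is the darkest cluster and k - 1 the
    brightest, together with the sorted cluster centres.
    """
    x = image.astype(np.float64).ravel()
    # Deterministic initialization: centres at evenly spaced quantiles.
    centres = np.quantile(x, (np.arange(k) + 0.5) / k)
    for _ in range(iters):
        labels = np.argmin(np.abs(x[:, None] - centres[None, :]), axis=1)
        # Update step; an empty cluster keeps its previous centre.
        new = np.array([x[labels == j].mean() if np.any(labels == j) else centres[j]
                        for j in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    labels = np.argmin(np.abs(x[:, None] - centres[None, :]), axis=1)
    order = np.argsort(centres)
    rank = np.empty(k, dtype=int)
    rank[order] = np.arange(k)            # rank[j] = position of centre j after sorting
    return rank[labels].reshape(image.shape), centres[order]
```

Taking the brightest cluster as the candidate lesion mask is a common heuristic, and it is also a source of the false detections reported in [38] when dense tissue is as bright as the lesion.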

A few contrast enhancement techniques are useful for improving the performance of segmentation methods on mammograms, but most conventional enhancement techniques are not effective for enhancing mammogram contrast. Over the last 21 years, many techniques have been implemented to enhance the low and varying contrast of mammograms, and many detailed reviews of contrast enhancement techniques for mammograms have been published. Cheng et al. [39] discussed contrast enhancement techniques ranging from conventional to feature-based methods, along with their advantages and disadvantages. Stojic et al. [40] enhanced mammograms using local contrast enhancement and background noise removal. Ming et al. [41] implemented an improved histogram-based contrast enhancement technique for X-ray images. Jianmin et al. [42] implemented an approach based on the structure tensor and fuzzy enhancement operators for contrast enhancement of mammograms. After reviewing these methods for the early detection of breast cancer, we observed that a new enhancement technique is required as a pre-processing module for breast cancer segmentation. In this research work, we propose an image enhancement technique based on contrast sensitivity techniques for the early detection of breast cancer.
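Fuzzy enhancement operators of the kind mentioned for [42] usually build on the classic fuzzy intensification (INT) operator: intensities are mapped to a membership value, memberships below 0.5 are pushed down and those above 0.5 pushed up, and the result is mapped back. The sketch below is a simplified variant with min–max membership; it is not the structure-tensor method of Jianmin et al., and `fuzzy_intensify` and its `passes` parameter are hypothetical.

```python
import numpy as np


def fuzzy_intensify(image: np.ndarray, passes: int = 1) -> np.ndarray:
    """Fuzzy contrast intensification (INT operator) with min-max membership."""
    lo, hi = float(image.min()), float(image.max())
    if hi == lo:                           # flat image: no contrast to stretch
        return image.copy()
    # Fuzzification: membership of each pixel in the "bright" set.
    mu = (image.astype(np.float64) - lo) / (hi - lo)
    for _ in range(passes):
        # INT operator: darken memberships <= 0.5, brighten those > 0.5.
        mu = np.where(mu <= 0.5, 2 * mu ** 2, 1 - 2 * (1 - mu) ** 2)
    # Defuzzification back to the original intensity range.
    return np.round(lo + mu * (hi - lo)).astype(image.dtype)
```

Each extra pass sharpens the contrast around the mid-level further, which also illustrates how fuzzy enhancement can lose detail in regions whose intensities fall far from 0.5 membership.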

6. Conclusions and Future Direction

Based on the measurement parameters, as well as on visual observation, the output image is considered enhanced compared to the original image. In this research work, a new image enhancement method is proposed and tested on all BI-RADS categories for the detection of breast cancer. The performance of the proposed method is analyzed on the basis of visual perception and quantitative measurements. The proposed method produced the best enhanced images, and its performance is compared with state-of-the-art contrast enhancement techniques such as HE, CLAHE, and BBHE. The proposed image enhancement technique is validated on a large database that contains approximately 11,000 images, of which approximately 700 images were used for validation. Previous state-of-the-art methods for breast cancer, whether based on enhancement, segmentation, or classification, did not use all BI-RADS categories, whereas we have validated our technique on images ranging from negative (BI-RADS-1) to malignant (BI-RADS-5).
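Of the baselines, BBHE differs from plain HE by splitting the histogram at the mean brightness and equalizing the two halves separately, so that dark pixels stay below the mean and bright pixels stay above it. A minimal sketch following Kim's 1997 formulation for 8-bit images; flooring the mean to an integer split level is an assumption, and `bbhe` is a hypothetical name.

```python
import numpy as np


def bbhe(image: np.ndarray, levels: int = 256) -> np.ndarray:
    """Brightness-preserving bi-histogram equalization of an 8-bit image."""
    m = int(image.mean())                  # split level: floored mean brightness
    hist = np.bincount(image.ravel(), minlength=levels).astype(np.float64)
    lut = np.zeros(levels)
    low, high = hist[: m + 1], hist[m + 1:]
    if low.sum() > 0:
        # Lower sub-histogram is equalized onto [0, m].
        lut[: m + 1] = np.cumsum(low) / low.sum() * m
    if high.sum() > 0:
        # Upper sub-histogram is equalized onto [m + 1, levels - 1].
        lut[m + 1:] = (m + 1) + np.cumsum(high) / high.sum() * (levels - 1 - (m + 1))
    return np.round(lut).astype(np.uint8)[image]
```

Keeping each sub-histogram on its own side of the mean is what limits the brightness shift that global HE introduces on mostly dark mammograms.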

Our proposed image enhancement technique contains several steps that improve the image in terms of visual perception, noise reduction, and contrast enhancement. We used new imaging techniques to obtain a well-contrasted image, as well as coherence filtering to obtain a well-normalized image. The main objective of the image enhancement technique is to aid the post-processing steps of any image processing or machine learning method. There is still room for improvement: the technique improves performance on all categories, although its performance decreases for the higher BI-RADS categories. Therefore, filters with tunable parameters, such as scale-normalized Gaussian filtering, can be used to set parameters according to the properties of each image in order to obtain a well-contrasted image. This image enhancement technique can also be used to improve the performance of deep learning-based methods for breast cancer as an input pre-processing step for training, because good training on the database yields the best segmentation output; this is one of the contributions that we propose. The technique can improve the training process of machine learning methods, and it also improves the performance of traditional methods when used as a pre-processing module.
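Scale-normalized Gaussian filtering lets a filter parameter be tuned to the image: a second-order Gaussian derivative multiplied by the squared scale, here the scale-normalized Laplacian of Gaussian, gives responses that are comparable across scales, so the scale with the strongest response can be chosen per image. The sketch below assumes Lindeberg's normalization with exponent 1 and separable, truncated kernels; it is not the filter used in this work, and `normalized_laplacian` is a hypothetical name.

```python
import numpy as np


def gaussian_kernels(sigma: float):
    """Sampled 1-D Gaussian and its second derivative over +/- 3 sigma."""
    r = int(np.ceil(3 * sigma))
    x = np.arange(-r, r + 1, dtype=np.float64)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    d2 = (x ** 2 / sigma ** 4 - 1 / sigma ** 2) * g   # second derivative of the Gaussian
    return g, d2


def _convolve_rows(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Convolve every row with the symmetric kernel k (reflected borders)."""
    r = len(k) // 2
    padded = np.pad(img, ((0, 0), (r, r)), mode="reflect")
    return np.stack([np.convolve(row, k, mode="valid") for row in padded])


def normalized_laplacian(image: np.ndarray, sigma: float) -> np.ndarray:
    """Scale-normalized Laplacian of Gaussian: sigma^2 * (Lxx + Lyy)."""
    img = image.astype(np.float64)
    g, d2 = gaussian_kernels(sigma)
    lxx = _convolve_rows(_convolve_rows(img.T, g).T, d2)   # smooth along y, differentiate along x
    lyy = _convolve_rows(_convolve_rows(img, g).T, d2).T   # smooth along x, differentiate along y
    return sigma ** 2 * (lxx + lyy)
```

For a bright Gaussian blob of width s, the magnitude of the normalized response at its center peaks when sigma is close to s, which is the property that allows the scale to be chosen from the image content instead of being fixed in advance.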