Abstract

Freezing of gait (FOG) is a serious gait disturbance, common in mid- and late-stage Parkinson’s disease, that affects mobility and increases fall risk. Wearable sensors have been used to detect and predict FOG with the ultimate aim of preventing freezes or reducing their effect using gait monitoring and assistive devices. This review presents and assesses the state of the art of FOG detection and prediction using wearable sensors, with the intention of providing guidance on current knowledge, and identifying knowledge gaps that need to be filled and challenges to be considered in future studies. This review searched the Scopus, PubMed, and Web of Science databases to identify studies that used wearable sensors to detect or predict FOG episodes in Parkinson’s disease. Following screening, 74 publications were included, comprising 68 publications detecting FOG, seven predicting FOG, and one in both categories. Details were extracted regarding participants, walking task, sensor type and body location, detection or prediction approach, feature extraction and selection, classification method, and detection and prediction performance. The results showed that increasingly complex machine-learning algorithms combined with diverse feature sets improved FOG detection. The lack of large FOG datasets and highly person-specific FOG manifestation were common challenges. Transfer learning and semi-supervised learning were promising for FOG detection and prediction since they provided person-specific tuning while preserving model generalization.

1. Introduction

Parkinson’s disease (PD) is a progressive neurodegenerative condition that presents numerous life-altering symptoms, including the characteristic upper-limb trembling [1]. In moderate to advanced PD, locomotion can deteriorate into a flexed upper body posture with small shuffling steps, an anteriorly-shifted centre of mass, decreased walking speed, poor balance, increased gait variability, and freezing of gait (FOG) [2,3,4,5,6].

A FOG episode is a complex and highly-variable phenomenon defined as a “brief, episodic absence or marked reduction of forward progression of the feet despite the intention to walk” [7]. Freezing is often described as the sensation of having one’s feet glued to the floor with an inability to initiate the next step, and becomes increasingly common as PD progresses [2,8]. Although typically lasting only a few seconds [9], freezes can lead to falls [10,11,12]. Since FOG can occur multiple times a day, most commonly between doses when medication is wearing off [11,13], FOG-related fall risk is an ever-present concern. Fall-related injury, reduced mobility, fear of future falls, and decreased independence are all linked to FOG and can contribute to a reduced quality of life [14,15,16,17,18].

FOG occurrences are difficult to anticipate and may not manifest during clinic visits [3]. Therefore, assessing and adjusting FOG treatments can be challenging for medical professionals. In-home monitoring and automatic freeze-detection systems have been developed [19,20] and used to objectively track freezes over extended periods; however, these systems do not prevent or reduce freezing occurrences. Cueing devices that provide an external stimulus have emerged to prevent imminent FOG episodes or to help overcome episodes that are already occurring [21,22]. Continuous cues can be auditory (e.g., a rhythmic tone), visual (e.g., lines projected on the floor in front of the person), or tactile (e.g., a vibrating device on the skin). As an alternative to continuous cueing, some cueing systems detect an occurring freeze and provide a cue to help the person resume normal walking [23,24,25,26]. If FOG could be predicted, a cue could be provided before the event to prevent the freeze from occurring [27,28].

Accurate and automatic FOG detection and prediction are essential for long-term symptom monitoring or preemptive mitigation via cueing. Wearable sensors are vital for FOG detection and prediction systems, to ensure unrestricted daily use in a person’s chosen environment. The complexity of FOG symptoms and their highly-variable manifestations have led to the creation of systems with numerous sensors on various body parts and a wide array of FOG detection methods, ranging from simple thresholds to machine-learning approaches. While good results have been reported, automatic and reliable FOG detection and prediction are far from resolved. A previous review of the state-of-the-art technology in this field has shown the widespread use and effectiveness of wearable sensors in FOG detection [26]. To help guide further research, the current paper presents an up-to-date review of the state of the art of FOG prediction, and provides further details on the study populations, classification methods, and features used. The current state of the art is also assessed to highlight current challenges and limitations in FOG detection and prediction using wearable sensors. The outcomes from this review provide guidance on current knowledge and identify knowledge gaps that need to be filled to advance wearable-sensor-based assistive technologies that can improve the lives of people with PD.

2. Materials and Methods

A literature review was performed by searching the Scopus, PubMed, and Web of Science databases. Keywords included “sensor” or “device” or “wearable”, “Parkinson”, “freeze” or “freezing”, and “detect” or “predict”. The final search was performed on April 16, 2019. Results were curated using the Mendeley Desktop software (v. 1.19.4) (Elsevier, Amsterdam, the Netherlands).

Duplicates were removed and the results were pooled for screening, using article title, abstract, and keywords to determine relevance. Following screening, the remaining documents were reviewed in full.

Eligibility for analysis was based on:

- Use wearable sensor data as input (direct from sensor or wearable sensor datasets).

- Involve people with PD, or data from people with PD, who experience FOG.

- Primary goal of detecting or predicting FOG. Articles were not included if they examined cueing using a FOG detection method developed in previous research and reported in another article, or if they only classified individuals as freezers or non-freezers, rather than detecting freezing episodes.

Articles were excluded if they were not published in English, if they were not full texts (abstract only publications were excluded), or if they lacked adequate descriptions and explanations of the detection or prediction methods (i.e., training and testing methods not described, important variables not defined, results not presented).

Eligible articles were used to extract, where available, the following characteristics: population, data collection location and summary, sensor type and location, FOG detection and prediction method (i.e., classifier or machine-learning algorithm), features, whether feature extraction and selection were used, classification performance, and evaluation in real-time.

Article characteristics included:

- Population: The number of participants in the study, i.e.: healthy controls (HC), people with FOG symptoms (FOG-PD), people with no FOG symptoms (NFOG-PD), and FOG symptom status unknown or not reported (UFOG-PD); the number of PD participants who froze during data collection, medication state during data collection (ON or OFF), number of FOG episodes.

- Data collection location and summary: Whether data collection was performed in a laboratory setting or in the participant’s home. Summary of walking tasks performed.

- Sensor type and location: The type and number of sensors used, sensor location on the body.

- FOG detection method: Methods used to detect and predict FOG, i.e., general approach (e.g., machine-learning model), model training method (person-specific: trained using data from a single person; or person-independent: trained using data from multiple people and not customized for an individual), whether the data was windowed, window length, and extent of detection (i.e., detection performed on each data point, window, or FOG event, etc.). Where multiple methods were attempted, the method with the best performance or research focus was reported.

- Feature extraction and feature selection: Features are variables calculated from sensor data. Feature selection uses feature ranking, filtering, or other techniques to produce an appropriate feature subset with fewer redundant features. Reporting features that performed best in FOG detection or comparing detection performance of different features after model testing was not considered as feature selection.

- Classifier performance: Sensitivity, specificity, other performance metrics reported.

- Real-time: Reporting the detection of a FOG episode as it occurs. In this review, real-time refers to detection using a live wearable-sensor data stream.

Feature analysis included:

- Feature Name: Feature name or a short description if not named in the cited article.

- Sensor Type: The type of sensor used to calculate the feature: accelerometer (Acc), gyroscope (Gyro), force sensitive resistor (FSR), electromyography (EMG), electroencephalogram (EEG), galvanic skin response (GSR), goniometer, telemeter, or camera-based motion capture (CBMC) (included if used with a wearable sensor).

- Sensor Location: Body location where the sensor was placed.

- Feature Description: Brief explanation of the feature.

- Source: Articles that used the feature as input for FOG detection or prediction.

3. Results

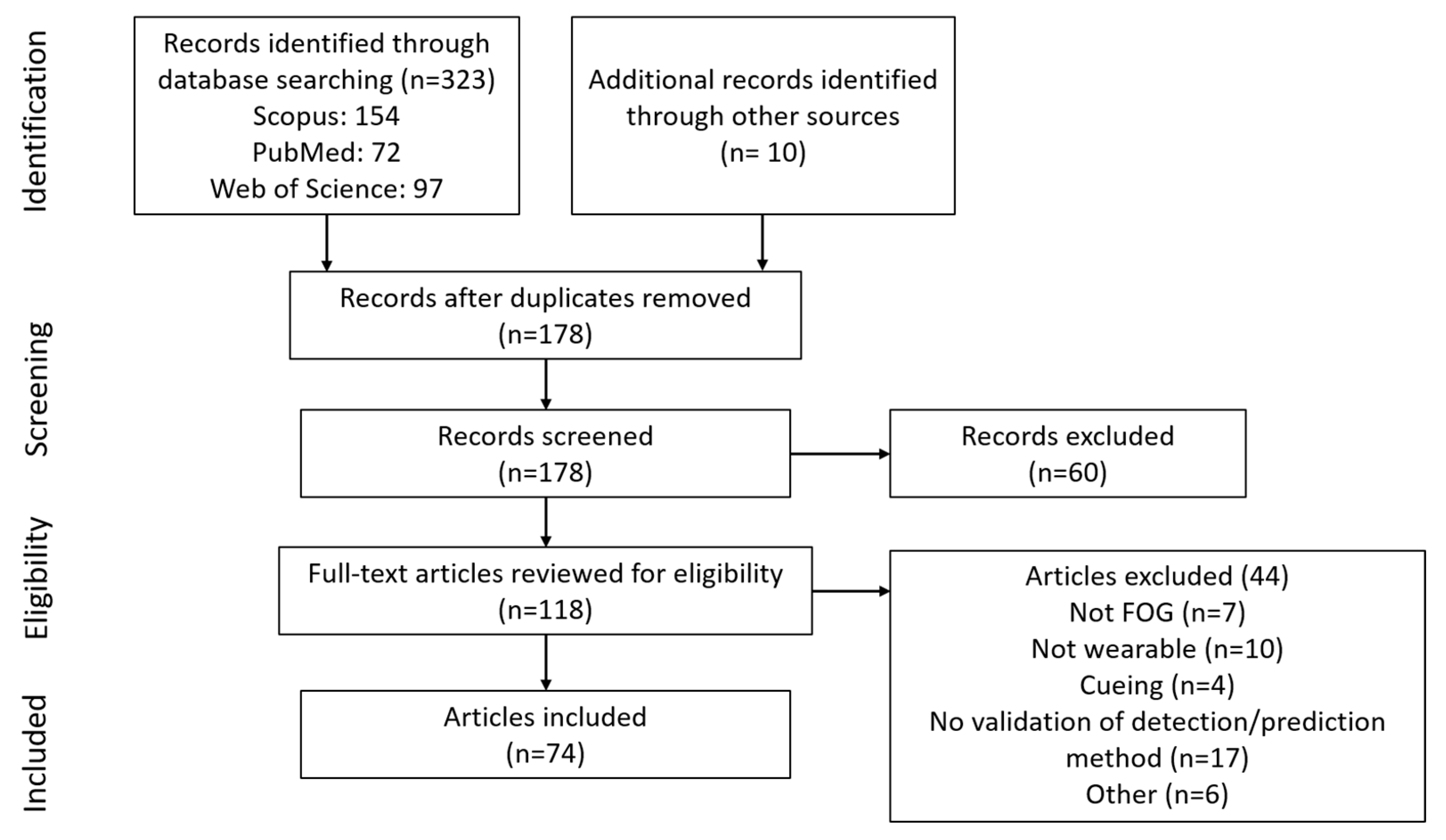

The initial search provided 323 documents. An additional 10 articles that did not appear in the search but were referenced by other articles were included, resulting in 333 articles. After removing duplicates, 178 documents were available (Figure 1). Following screening and eligibility assessment, 74 articles were included in the review: 68 on FOG detection, seven on FOG prediction, and one article in both categories.

Figure 1. Diagram of article selection process.

Study characteristics related to population, data collection location and summary, sensor type and location, FOG detection method, feature extraction and selection, classifier performance, and whether analysis was performed in real time are presented in Table 1. Features extracted from wearable sensor data are presented in Table 2. Table 3 presents a summary of the top machine-learning methods from studies that compared different machine-learning classifiers for FOG detection using wearable sensors.

Table 1. Characteristics of FOG detection studies and FOG prediction studies, using wearable sensors.

Table 2. Features extracted from wearable-sensor data and used for freezing of gait detection or prediction.

Table 3. Top machine-learning methods from studies that compared different machine-learning classifiers for FOG detection using wearable sensors.

4. Discussion

4.1. FOG Detection

FOG detection methods vary in complexity, with the simplest models directly comparing wearable sensor variables to thresholds [29,41,44,46,53,63,73,123]. Threshold methods tended to have poorer detection performance but faster processing time, making them potentially useful in real-time systems [24,70,77,79,92,93]. To improve classification performance, features that can better differentiate between FOG and typical PD gait have been used, such as Fourier transforms [29,34,35,41,44,53,65,69,78], wavelet transforms [51,56,63,71,79,83,91,92,93,96], k-index [59,60,61,62,72,73], freezing of gait criterion (FOGC) [46], freezing of gait detection on glasses (FOGDOG) [70], R-index [94], and the widely-used freeze index [29].
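To make the threshold approach concrete, the following minimal sketch computes a freeze-index-style ratio for one window of acceleration data and compares it with a threshold. The freeze index is generally described as the ratio of signal power in a "freeze" band to power in a "locomotor" band; the band limits (0.5–3 Hz and 3–8 Hz), the 64 Hz sampling rate, the threshold of 2.0, and all function names are assumptions chosen for illustration and are not taken from any specific reviewed study.

```python
import cmath
import math

def band_power(signal, fs, f_lo, f_hi):
    """Sum of squared DFT magnitudes for bins with f_lo <= f < f_hi (Hz)."""
    n = len(signal)
    mean = sum(signal) / n
    centred = [x - mean for x in signal]  # remove the constant offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq < f_hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(centred))
            power += abs(coeff) ** 2
    return power

def freeze_index(signal, fs, loco_band=(0.5, 3.0), freeze_band=(3.0, 8.0)):
    """Ratio of freeze-band power to locomotor-band power (assumed bands)."""
    loco = band_power(signal, fs, *loco_band)
    freeze = band_power(signal, fs, *freeze_band)
    return freeze / loco if loco > 0 else float("inf")

def is_fog(signal, fs, threshold=2.0):
    """Flag the window as FOG when the ratio exceeds a hypothetical threshold."""
    return freeze_index(signal, fs) > threshold

# Synthetic 4 s windows: walking is dominated by 1 Hz, trembling by 6 Hz.
FS = 64
walking = [math.sin(2 * math.pi * 1.0 * i / FS)
           + 0.1 * math.sin(2 * math.pi * 6.0 * i / FS) for i in range(4 * FS)]
trembling = [0.1 * math.sin(2 * math.pi * 1.0 * i / FS)
             + math.sin(2 * math.pi * 6.0 * i / FS) for i in range(4 * FS)]
```

In practice, the threshold would need to be tuned, possibly per person, and a fast Fourier transform would replace the naive transform used here for self-containment.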

To further improve FOG detection performance, multiple features and machine-learning (ML) techniques have been used, such as neural networks [36,38,55,66,76,80,85,86,88,89,91], decision trees [25,39,42,45,52,54,58,85], random forests [39,42,43], naïve Bayes [42,43], nearest neighbor [42], and support vector machines [64,71,74,75,81,83,86]. In addition, anomaly detection [20] and unsupervised machine learning [87] have been attempted, but not extensively explored.

The best performing classifiers for FOG detection were convolutional neural networks, support vector machines, random forest, and AdaBoosted decision trees (Table 3).

4.1.1. Decision Trees

Decision trees are a series of binary selections that form branches resembling a tree structure. More complex decision trees can improve performance. For example, random forest classifiers use multiple decision trees, where the final decision is the majority vote of the individual trees. Boosting can also improve performance. AdaBoosting (adaptive boosting) repeatedly retrains the classifier, placing increasing importance on incorrectly classified training examples [124,125]. LogitBoosting (logistic boosting) [126], RUSBoosting [127], and RobustBoosting [128] are extensions of AdaBoosting that can further improve performance [85]. Decision trees for FOG detection included ensembles of trees and boosting techniques [42,43,85], with performance results ranging from 66.25% to 98.35% for sensitivity and 66.00% to 99.72% for specificity [25,39,42,43,45,52,54,58,85].
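The two ensemble ideas described above can be sketched in a few lines. The first function shows the majority vote used by a random forest, and the second shows one round of the standard AdaBoost reweighting step, in which misclassified examples gain weight. The function names, the tie-breaking rule, and the toy labels are illustrative assumptions, not details from the reviewed studies.

```python
import math

def majority_vote(tree_predictions):
    """Random-forest-style decision: each tree votes 0 (no FOG) or 1 (FOG).

    Ties are resolved as 0 here; this is an arbitrary choice for the sketch.
    """
    return 1 if 2 * sum(tree_predictions) > len(tree_predictions) else 0

def adaboost_reweight(weights, y_true, y_pred):
    """One AdaBoost round: return (alpha, new_weights).

    alpha is the vote weight of the current weak classifier; the example
    weights are increased for misclassified items and then renormalised.
    """
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
    alpha = 0.5 * math.log((1 - err) / err)
    new = [w * math.exp(alpha if t != p else -alpha)
           for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(new)
    return alpha, [w / total for w in new]

# Four training windows with equal initial weight; the second is misclassified.
alpha, new_weights = adaboost_reweight([0.25] * 4, [1, 1, 0, 0], [1, 0, 0, 0])
```

After this update, the single misclassified window carries half of the total weight, so the next weak classifier is pushed to get it right.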

4.1.2. Support Vector Machines (SVM)

Support vector machines are binary (two-class) classifiers that fit a hyperplane to separate the data points of each class. New data points are then classified according to the side of the hyperplane on which they fall. If the data points are not linearly separable, a kernel can map the data into a higher-dimensional space in which they are linearly separable [125]. SVM for FOG detection achieved 74.7%–99.73% sensitivity and 79.0%–100% specificity [64,71,74,75,81,83,86].
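The effect of mapping data into a higher dimension can be illustrated with a toy example. In the sketch below, one-dimensional points cannot be split by a single threshold, but after the explicit map x → (x, x²) a straight line separates them. The weights are chosen by hand for illustration; an actual SVM would learn a maximum-margin separator and would typically apply the kernel implicitly rather than computing the mapped features. All names and values are hypothetical.

```python
def feature_map(x):
    """Explicit quadratic map from 1-D to 2-D: x -> (x, x^2)."""
    return (x, x * x)

def linear_classify(point, weights, bias):
    """Return 1 if the point lies on the positive side of the line, else 0."""
    score = sum(w * p for w, p in zip(weights, point)) + bias
    return 1 if score > 0 else 0

# Class 0 sits between the two groups of class 1, so no single threshold
# on x separates the classes in the original 1-D space.
xs = [-3.0, -1.0, 0.0, 1.0, 3.0]
labels = [1, 0, 0, 0, 1]

# Hand-picked separator in the mapped space: x^2 - 4 > 0.
WEIGHTS = (0.0, 1.0)
BIAS = -4.0
predictions = [linear_classify(feature_map(x), WEIGHTS, BIAS) for x in xs]
```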

4.1.3. Neural Networks

Neural networks (NN) are made up of interconnected layers of nodes inspired by the structure of neurons in the brain [129]. NN have been frequently used in FOG detection and prediction studies. For FOG detection, model performance achieved 72.2%–99.83% sensitivity and 48.4%–99.96% specificity [36,38,55,66,76,80,85,86,88,89,91]. Neural networks for FOG prediction tended to perform slightly worse, up to 86% sensitivity, 80.25% specificity, and 89% precision [96,97,99].

Different NN subtypes have been used in FOG detection and prediction, such as convolutional [85,90] and recurrent [97,100] NN. Convolutional neural networks (CNN) have become popular in numerous applications, including medical image analysis, in part due to their ability to recognize local patterns within images and because feature selection prior to implementation is not required [130,131]. CNN performed well for FOG detection [85], achieving 91.9% sensitivity and 89.5% specificity. Recurrent NN have recently been used for FOG prediction due to their applicability to time-series data [97,100]. Recurrent neural networks (RNN) utilize previous data in addition to current inputs during classification [132], thus giving the network “memory” to help recognize sequences [133]. A long short-term memory network (LSTM), a type of RNN, was used for FOG prediction [100], achieving over 90% accuracy when predicting FOG 5 s in advance.

4.1.4. Unsupervised and Semi-Supervised Models

Since freezing manifests differently for each person, person-specific models outperformed person-independent models [42,58,74,86] (with some exceptions as in [53]). However, in practice, it is difficult to obtain enough data to develop a model for an individual. To address this small dataset problem, unsupervised learning has been attempted. These methods do not rely on experts labelling FOG episodes. Instead, clustering techniques are used to define the classes [87], or an anomaly detection approach is used to define the normal class and then identify abnormalities (such as FOG) that do not conform to that class [45,90]. Unsupervised FOG detection approaches are appealing since they do not require data labelling; however, few studies have used unsupervised FOG detection, and unsupervised model performance has been worse than that of supervised models [90].
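The anomaly detection idea can be sketched as follows: a model of the normal class is fitted from unlabelled walking data, and any new window that deviates too far from it is flagged. This minimal version uses a single feature and a univariate Gaussian with a z-score cut-off of 3; the feature values, the cut-off, and the function names are assumptions for illustration and do not reproduce the models in the cited studies.

```python
import statistics

def fit_normal_model(normal_values):
    """Estimate the mean and standard deviation of the normal-walking class."""
    return statistics.mean(normal_values), statistics.stdev(normal_values)

def is_anomaly(value, model, z_threshold=3.0):
    """Flag a value whose z-score relative to the normal class is too large."""
    mean, std = model
    return abs(value - mean) / std > z_threshold

# Hypothetical feature values (e.g., a per-window gait feature) from
# ordinary walking; no FOG labels are needed to fit the model.
normal_walking = [1.0, 1.1, 0.9, 1.05, 0.95]
model = fit_normal_model(normal_walking)
```

A multivariate version would replace the z-score with a distance that accounts for the covariance between features.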

Recently, transfer learning, which uses a previously-trained network as a base and adapts the model to a new task [100], and semi-supervised learning, which uses both labeled and unlabeled data during training [69,88,89], have been used to create partly personalized FOG detection methods without large amounts of data. In [100], transfer learning trained a neural network using group data before adding an additional network layer that was trained using an individual’s data. Semi-supervised learning methods [69,88,89] use labeled data to train a base classifier before updating it in an unsupervised manner. This reduces the need for labeled data and preserves the generalization ability gained from a multi-person dataset, while allowing person-specific tuning. Semi-supervised learning theoretically combines the advantages of both supervised and unsupervised learning. When applied to FOG detection, performance achieved 89.2%–95.9% sensitivity and 93.1%–95.6% specificity [69,88,89]. Although the methods are promising, due to a current shortage of studies, the value of these methods for FOG detection remains unclear.
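One simple way to picture the semi-supervised update is self-training: a base classifier is fitted on labeled group data, then refined with its own predictions on a new person's unlabeled data. The sketch below uses a one-feature nearest-centroid classifier purely for illustration; the classifier, the data values, the number of rounds, and the function names are assumptions and are not the methods used in [69,88,89].

```python
def centroid(values):
    return sum(values) / len(values)

def base_centroids(labeled):
    """Fit the base classifier: one centroid per class from labeled data."""
    return {label: centroid(values) for label, values in labeled.items()}

def predict(x, centroids):
    """Assign x to the class with the nearest centroid."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def self_train(labeled, unlabeled, rounds=3):
    """Refine the centroids using pseudo-labels for the unlabeled data."""
    centroids = base_centroids(labeled)
    for _ in range(rounds):
        pools = {label: list(values) for label, values in labeled.items()}
        for x in unlabeled:
            pools[predict(x, centroids)].append(x)  # pseudo-label
        centroids = {label: centroid(values) for label, values in pools.items()}
    return centroids

# Labeled group data and unlabeled data from a new person whose feature
# values are shifted upwards relative to the group.
group = {"walk": [1.0, 1.2], "fog": [3.0, 3.2]}
new_person = [1.8, 1.9, 2.0, 4.0, 4.1, 4.2]
adapted = self_train(group, new_person)
```

With these toy values, a window with feature value 2.5 is labelled "fog" by the base classifier but "walk" after adaptation, showing how the decision boundary shifts towards the individual.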

4.1.5. Limitations and Challenges of FOG Detection

FOG detection and prediction is affected by the participant’s medication state (ON and OFF), with substantial effects on motor control, gait patterns, and physical abilities. Freezing occurs more frequently in the OFF state than the ON state. In the OFF state, smaller shuffling steps are common, whereas in the ON state, many people can walk fairly normally. A machine-learning model trained during a person’s optimal medication state may perform worse if the medication wears off and their unassisted gait changes. Given that medication is needed in PD management, medication state is crucial contextual information for FOG detection and prediction research.

With machine-learning algorithms becoming more prevalent, larger FOG detection and prediction datasets are needed for model development. FOG studies ranged from 1 to 32 participants, with most studies having more than 10 participants. Studies involving few participants may not adequately validate a FOG detection method, especially when machine-learning algorithms are involved. Data augmentation techniques [85] or additional testing with more participants are required. On the other hand, large participant pools may not guarantee unbiased datasets since some participants freeze many times during data collection, while others may not freeze at all. For example, in [48], only 6 of 20 participants froze during data collection, which may lead to person-biased models that over-represent the few individuals with FOG data. Difficulty in participant recruitment and FOG unpredictability are therefore challenges that may limit the availability and quality of training data.

Following data collection, FOG episodes are typically visually identified and labelled. Visual FOG identification is currently the gold standard. These labels are ground truth for detection method validation. Even though FOG is a well-defined clinical phenomenon [7], the criteria for defining the beginning and end of FOG episodes [24,25,98] were not defined in some articles. Differing FOG definitions make comparison between studies problematic. Published datasets can provide consistent ground truth FOG labelling. The Daphnet [24] (10 participants) and CuPiD [101] (18 participants) datasets provide consistent input but fewer than 250 FOG episodes; thus, dataset size may be an issue for machine learning, especially if deep learning is used [85].

When evaluating a classification system, ideally, different data are used for training and testing, as in [25,38,51,55,56,64,66,67,85,96,97,99,100], in order to prevent model performance overestimation that can occur when the model is evaluated using data used in model training. Cross-validation is often used when the dataset size is limited, as done in [24,31,32,33,39,42,43,45,52,54,58,74,75,81,86,87,88,89,90,98]. For FOG research, leave-one-person-out cross-validation was the most common. In this method, model training used data from all but one participant, model testing used data from the remaining participant, the process was repeated for each participant, and the performance results were averaged. Other studies, often more preliminary in nature, used ad hoc optimization to tune parameters and set thresholds [34,44,48,59,60,61,62,63,95]. This approach, although useful for initial system assessment, is not a good indicator of classifier performance, and should be followed by a more robust evaluation scheme, such as cross-validation.
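The leave-one-person-out procedure described above can be sketched as follows. Each sample carries a participant identifier so that no person contributes to both training and testing within a fold. The threshold classifier, the accuracy score, and the toy data are placeholders chosen for illustration; any model and metric could be substituted.

```python
def leave_one_person_out(samples):
    """Yield (held_out_id, train, test) for each participant.

    samples: list of (person_id, feature_value, label) tuples.
    """
    person_ids = sorted({pid for pid, _, _ in samples})
    for held_out in person_ids:
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        yield held_out, train, test

def cross_validate(samples, fit, score):
    """Average the test score over all held-out participants."""
    scores = [score(fit(train), test)
              for _, train, test in leave_one_person_out(samples)]
    return sum(scores) / len(scores)

def fit_threshold(train):
    """Toy model: threshold halfway between the two class means."""
    walk = [x for _, x, y in train if y == 0]
    fog = [x for _, x, y in train if y == 1]
    return (sum(walk) / len(walk) + sum(fog) / len(fog)) / 2

def accuracy(threshold, test):
    correct = sum(1 for _, x, y in test if (x > threshold) == (y == 1))
    return correct / len(test)

# Hypothetical data: three participants, label 1 = FOG, 0 = walking.
samples = [("P1", 0.5, 0), ("P1", 3.0, 1),
           ("P2", 0.7, 0), ("P2", 2.8, 1),
           ("P3", 0.6, 0), ("P3", 3.2, 1)]
mean_accuracy = cross_validate(samples, fit_threshold, accuracy)
```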

Feature calculation from wearable sensor data is typically done using data windows. Window lengths ranged from 0.2 to 32 s [36,48,92,93], with the most common window length being 1 s. Long windows with many sample points are desirable for calculating frequency-based features involving the discrete Fourier transform, since the number of sample points in the input signal will determine the output frequency bin resolution. However, long windows decrease the temporal resolution and do not permit distinguishing short events within the window. In addition, long windows with many data points may be slower to process and may introduce unwanted lags between data acquisition and classification for detection or prediction. Studies comparing multiple window lengths found that, in general, 1–4 s windows are preferable [42,44,48,57,63,64].
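The trade-off between window length and frequency resolution follows from the discrete Fourier transform: for a window of N samples at sampling rate fs, the bin spacing is fs/N, which equals the reciprocal of the window duration. The short sketch below computes this spacing and the sliding-window boundaries; the 64 Hz sampling rate and the function names are assumptions for illustration.

```python
def frequency_resolution(fs, window_s):
    """DFT bin spacing in Hz for a window of window_s seconds at fs Hz."""
    n_samples = int(round(fs * window_s))
    return fs / n_samples

def sliding_windows(n_samples, window_len, step):
    """Return (start, end) sample indices (end exclusive) of each window."""
    return [(start, start + window_len)
            for start in range(0, n_samples - window_len + 1, step)]

# A 1 s window gives 1 Hz bins; a 4 s window gives 0.25 Hz bins, but
# each feature value then summarises four seconds of movement.
res_1s = frequency_resolution(64, 1.0)
res_4s = frequency_resolution(64, 4.0)
windows = sliding_windows(10, 4, 2)
```

The step size of the sliding window determines how often a new feature value, and therefore a new classification, becomes available.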

FOG detection studies used different performance metrics. For example, a FOG detection system used to trigger a real-time cue during walking might emphasize freeze onset detection. This detection system might attempt to classify every data point or window as FOG or no freeze, and be evaluated using the number of correctly classified instances [24,31,32,33]. In contrast, a long-term monitoring system may treat each freeze occurrence as a binary event and evaluate whether the FOG event was successfully detected [74,75]. Experimental procedures and underlying definitions, such as ignoring FOG shorter than 3 s [43] or calculating specificity with data from participants without FOG [64], also varied between studies. Differences in evaluation metrics and procedures make FOG detection method comparisons more difficult.
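The difference between window-based and event-based evaluation can be seen by scoring the same predictions both ways. In the sketch below, sensitivity and specificity are computed per window, while event sensitivity counts a FOG episode as detected if any window within it is flagged. The event-detection rule, the labels, and the function names are illustrative assumptions; studies differ in how they define a detected event.

```python
def sensitivity_specificity(y_true, y_pred):
    """Per-window sensitivity and specificity (1 = FOG, 0 = no freeze)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    return sens, spec

def events(labels):
    """Return (start, end) index pairs (end exclusive) for each run of 1s."""
    runs, start = [], None
    for i, value in enumerate(labels):
        if value == 1 and start is None:
            start = i
        elif value != 1 and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(labels)))
    return runs

def event_sensitivity(y_true, y_pred):
    """Fraction of true FOG episodes containing at least one flagged window."""
    true_events = events(y_true)
    if not true_events:
        return float("nan")
    detected = sum(1 for s, e in true_events if any(y_pred[s:e]))
    return detected / len(true_events)

y_true = [0, 0, 1, 1, 1, 0, 0, 1, 1, 0]
y_pred = [0, 0, 0, 1, 0, 0, 1, 0, 1, 0]
window_sens, window_spec = sensitivity_specificity(y_true, y_pred)
event_sens = event_sensitivity(y_true, y_pred)
```

Here the same classifier output yields a window-based sensitivity of 0.4 but an event-based sensitivity of 1.0, which illustrates why results reported on different bases are not directly comparable.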

To help compare future FOG detection and prediction studies, researchers should include study population details, including sex, PD severity, number of participants, the number and duration of FOG episodes (ideally for each person), and medication state during testing. Methodologically, the FOG labelling criterion, detailed detection method, validation method, and basis upon which the performance evaluation metrics are calculated should be clearly stated.

4.2. FOG Prediction

The FOG prediction studies varied in approach and performance, with most being somewhat preliminary and focusing less on performance and more on understanding the intricacies of FOG prediction. In addition to FOG detection study considerations (e.g., dataset size, medication state, FOG definitions, contextual or study-specific performance metric definitions), FOG prediction studies must define the pre-FOG class using data before freeze onset. FOG prediction is typically done by training a machine-learning model to recognize data from the pre-FOG class. Six of the seven FOG prediction studies selected a pre-FOG segment duration that ranged from 1 s [45,100] to 6 s [45]. Since the transition from walking to FOG is subtle, labelling the start of pre-FOG from visual observation is difficult. Instead, a FOG episode is visually identified, and data prior to the FOG are selected using a single fixed duration. Three studies used a 5 s period [96,97,99]; one study used a 2 s period [98]; one used 1, 3, and 5 s periods [100]; and one used 1–6 s periods, in 1 s increments [45]. The seventh study [95] used an assumed 3 s period before FOG for feature selection; then, a person-specific, multivariate Gaussian-distribution-based anomaly-detection model was created and manually tuned for each participant.
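The fixed-duration labelling step described above can be sketched as follows: each visually identified FOG onset is located, and the walking samples in the preceding fixed period are relabelled as pre-FOG. The label strings, the sampling rate, and the function name are assumptions for illustration; samples already labelled as FOG are left unchanged when a pre-FOG period overlaps an earlier episode.

```python
def label_pre_fog(labels, fs, pre_fog_s):
    """Relabel up to pre_fog_s seconds of 'walk' before each FOG onset.

    labels: per-sample list containing 'walk' or 'fog'.
    Returns a new list in which the selected samples are 'pre-fog'.
    """
    out = list(labels)
    n_pre = int(round(pre_fog_s * fs))
    for i, label in enumerate(labels):
        is_onset = label == "fog" and (i == 0 or labels[i - 1] != "fog")
        if is_onset:
            for j in range(max(0, i - n_pre), i):
                if out[j] == "walk":
                    out[j] = "pre-fog"
    return out

# Toy recording at 2 Hz with two FOG episodes and a 1 s pre-FOG period.
raw = ["walk"] * 4 + ["fog"] * 2 + ["walk"] * 3 + ["fog"]
labelled = label_pre_fog(raw, fs=2, pre_fog_s=1.0)
```

Changing `pre_fog_s` changes how much walking data is moved into the pre-FOG class, which is the duration trade-off discussed in the following paragraphs.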

Optimal pre-FOG segment duration is difficult to determine. If the pre-FOG segment is assumed to be a linear degradation of gait leading to FOG (threshold theory [134]), data closest to the freeze would resemble FOG, and data farther from the freeze would resemble typical PD walking. For a two-class classifier (pre-FOG, typical PD walking), short pre-FOG segments are preferred, since data are closer to FOG onset and likely more distinct from typical walking [100].

A short pre-FOG segment may not be ideal when using a three-class classifier consisting of typical PD walking, pre-FOG, and FOG classes as in [45], which found that very short pre-FOG segments made it difficult to distinguish between the pre-FOG and FOG classes. Longer pre-FOG segments improved pre-FOG classification but greatly reduced FOG and typical walking classification accuracy. The best performing pre-FOG segment duration differed across participants, and likely between individual FOG episodes for the same person [45]. The observation that a single pre-FOG duration is inadequate is also supported by [95,98]. For this reason, a person-specific or episode-specific pre-FOG duration may help to reduce overlap with the walking class and increase class purity (contain only pre-FOG data), thus improving pre-FOG detection performance.

4.3. Features Used in FOG Detection and Prediction

A variety of features have been used in FOG detection and prediction. While most FOG detection and prediction features were previously established in non-PD applications [135,136,137,138], custom features were created to detect FOG, namely, freezing of gait criterion (FOGC) [46,47], freezing of gait detection on glasses (FOGDOG) [70], k value [59,60,61,62,72,73], R value [94], freeze index [29], K freeze index [67], and multichannel freeze index [67]. Time domain features, such as maximum acceleration amplitude within a window [40,41] or rotation about a single axis [98], are relatively simple and fast to compute. Gait-based features such as cadence [49], stride duration [35,50,71], and step length [35,71,77], as well as statistical features including mean [42,45,52,54,57,58,71,74,75,77,80,81,95], standard deviation [42,45,52,54,57,58,71,74,75,77,80,81,88,89,95,98], and root mean square [45,48,54,78,86] are also calculated from time domain data. Frequency domain features include freeze index (FI) [29], which was the most widely-used frequency domain feature [23,24,25,29,31,32,33,34,42,44,49,53,54,64,65,69,71,77,78,80,86,88,89,98], peak amplitude and corresponding frequency [40,41,64], standard deviation in frequency domain [50,57,64,74], spectral density centre of mass [50,57,66,74,80,81,86,96], and power of the signal in specific frequency bands [24,25,31,32,33,34,40,42,52,54,58,75,80]. While Fourier transforms are typically used to convert signals from the time domain to frequency domain, Fourier transform limitations have led to increased usage of wavelet approaches [51,56,63,71,79,83,91,92,93,96].

A set of multiple features can be more representative of the wide range of FOG manifestations than any single feature. Studies that combined time- and frequency-domain features [96] had better performance than with either type of feature individually. Time domain features can account for gait parameters such as step length [35,71,77], cadence [49], asymmetry [45], and peak limb angular velocity [88,89], whereas frequency domain features can capture more subtle patterns characteristic of FOG, such as trembling in specific frequency bands [29]. The best performance is typically achieved with multiple features.

The choice of features is very important, especially for real-time systems, where, in addition to classification performance considerations, classification speed is critical. For example, the calculation of stride duration at the end of the stride (approximately 1 s) could result in the delayed detection of a FOG event. Other features such as step length, cadence, cadence variation, stride peaks, and FOGC may share this limitation, depending on the feature calculation method. Features extracted from appropriately-sized windowed data do not have this problem, since the features can be calculated as soon as the data window is available. The feature availability to the classifier is determined by the step size of the sliding window and the calculation delay. All of the window-based features in Table 2 could be used in a real-time application, given sufficient processing power. However, an excessive number of features or complex features requiring many calculation stages may induce unacceptable delays when computing power is limited, as in many wearable systems. Using a minimal number of easily-calculated features is desirable; however, too few or overly-simple features may adversely impact classification performance. To address the delicate balance of classification performance versus classification speed, feature selection algorithms can be used to determine the best features from a larger set, as implemented in [45,51,55,56,58,66,67,76,80,83,86,95,96,98,99]. Algorithms such as Relief-F or correlation-based approaches can be used to rank features according to their relevance so that the least relevant can be eliminated [139]. The most used feature selection methods in this review were paired t-tests [86,98], mutual information [45,58,67,95], and the Wilcoxon rank-sum test [55,66,76,96]. The topic of feature selection is broad and encompasses numerous methods that can be used to improve classifier models.

Given the diversity of features in the literature for detecting and predicting FOG, the best feature or feature set has yet to be determined. For future studies, it is generally suggested to begin with multiple features that can then be tuned or eliminated using feature selection methods to produce a set of optimal features.
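As a minimal illustration of filter-style feature ranking, the sketch below scores each feature by the absolute value of a Welch two-sample t-statistic between FOG and walking windows and sorts the features from most to least discriminative. This statistic is used here only as a simple stand-in for the ranking methods named above; the feature names, values, and function names are hypothetical, and the score is undefined if a feature has zero variance in both classes.

```python
import math
import statistics

def welch_t(a, b):
    """Welch two-sample t-statistic for two lists of feature values."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    standard_error = math.sqrt(var_a / len(a) + var_b / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / standard_error

def rank_features(fog_features, walk_features):
    """Return feature names sorted by decreasing |t| between the classes.

    Both arguments map a feature name to its per-window values.
    """
    scores = {name: abs(welch_t(fog_features[name], walk_features[name]))
              for name in fog_features}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical per-window feature values for each class.
fog = {"freeze_index": [2.9, 3.1, 3.0], "mean_acc": [1.0, 1.3, 0.8]}
walk = {"freeze_index": [0.9, 1.1, 1.0], "mean_acc": [1.1, 0.9, 1.2]}
ranking = rank_features(fog, walk)
```

The lowest-ranked features would be candidates for removal, after which classifier performance should be re-evaluated on held-out data.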

5. Conclusions

Based on 74 freezing of gait detection and prediction articles, this review reported details of the participants, walking task, sensors, features extracted, detection and prediction methods, and performance. The continued development of high-performing FOG detection methods is important for long-term monitoring and real-time cueing, and together with development of FOG prediction systems, is important for implementation in gait-assist systems. While FOG detection methods have been steadily increasing in performance, important challenges remain. Small FOG datasets may limit the machine-learning models that can be used, especially for deep learning. Sets of diverse features in both the time and frequency domains have helped to represent the inconsistent nature of FOG. The adoption of transfer learning and semi-supervised learning models, built upon the established FOG detection methods, could add an element of personalization while preserving the robust generalization of person-independent models, thus making them promising approaches for future FOG detection and prediction research.

Author Contributions

Conceptualization, S.P., J.K., J.N. and E.D.L.; Methodology, S.P., J.K., J.N. and E.D.L.; Investigation, S.P.; Data curation, S.P.; Writing—original draft preparation, S.P.; Writing—review and editing, S.P., J.K., J.N. and E.D.L.; Supervision, J.K., J.N. and E.D.L.; Project administration, J.K.

Funding

This research was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC) and University of Waterloo.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jankovic, J. Parkinson’s disease: Clinical features and diagnosis. J. Neurol. Neurosurg. Psychiatry 2008, 79, 368–376.

- Macht, M.; Kaussner, Y.; Möller, J.C.; Stiasny-Kolster, K.; Eggert, K.M.; Krüger, H.-P.; Ellgring, H. Predictors of freezing in Parkinson’s disease: A survey of 6620 patients. Mov. Disord. 2007, 22, 953–956.

- Hausdorff, J.M.; Cudkowicz, M.E.; Firtion, R.; Wei, J.Y.; Goldberger, A.L. Gait variability and basal ganglia disorders: Stride-to-stride variations of gait cycle timing in Parkinson’s disease and Huntington’s disease. Mov. Disord. 1998, 13, 428–437.

- Baltadjieva, R.; Giladi, N.; Gruendlinger, L.; Peretz, C.; Hausdorff, J.M. Marked alterations in the gait timing and rhythmicity of patients with de novo Parkinson’s disease. Eur. J. Neurosci. 2006, 24, 1815–1820.

- Hausdorff, J.M. Gait dynamics in Parkinson’s disease: Common and distinct behavior among stride length, gait variability, and fractal-like scaling. Chaos Interdiscip. J. Nonlinear Sci. 2009, 19, 026113:1–026113:14.

- Sofuwa, O.; Nieuwboer, A.; Desloovere, K.; Willems, A.-M.; Chavret, F.; Jonkers, I. Quantitative gait analysis in Parkinson’s disease: Comparison with a healthy control group. Arch. Phys. Med. Rehabil. 2005, 86, 1007–1013.

- Nutt, J.G.; Bloem, B.R.; Giladi, N.; Hallett, M.; Horak, F.B.; Nieuwboer, A. Freezing of gait: Moving forward on a mysterious clinical phenomenon. Lancet Neurol. 2011, 10, 734–744.

- Snijders, A.H.; Nijkrake, M.J.; Bakker, M.; Munneke, M.; Wind, C.; Bloem, B.R. Clinimetrics of freezing of gait. Mov. Disord. 2008, 23, 468–474.

- Schaafsma, J.D.; Balash, Y.; Gurevich, T.; Bartels, A.L.; Hausdorff, J.M.; Giladi, N. Characterization of freezing of gait subtypes and the response of each to levodopa in Parkinson’s disease. Eur. J. Neurol. 2003, 10, 391–398.

- Okuma, Y. Freezing of gait and falls in Parkinson’s disease. J. Park. Dis. 2014, 4, 255–260.

- Okuma, Y.; Silva de Lima, A.L.; Fukae, J.; Bloem, B.R.; Snijders, A.H. A prospective study of falls in relation to freezing of gait and response fluctuations in Parkinson’s disease. Park. Relat. Disord. 2018, 46, 30–35.

- Bloem, B.R.; Hausdorff, J.M.; Visser, J.E.; Giladi, N. Falls and freezing of gait in Parkinson’s disease: A review of two interconnected, episodic phenomena. Mov. Disord. 2004, 19, 871–884.

- Nantel, J.; Bronte-Stewart, H. The effect of medication and the role of postural instability in different components of freezing of gait (FOG). Park. Relat. Disord. 2014, 20, 447–451. [Google Scholar] [CrossRef] [PubMed]

- Masud, T.; Morris, R.O. Epidemiology of falls. Age Ageing 2001, 30, 3–7. [Google Scholar] [CrossRef]

- Young, W.R.; Mark Williams, A. How fear of falling can increase fall-risk in older adults: Applying psychological theory to practical observations. Gait Posture 2015, 41, 7–12. [Google Scholar] [CrossRef]

- Ward-Griffin, C.; Hobson, S.; Melles, P.; Kloseck, M.; Vandervoort, A.; Crilly, R. Falls and fear of falling among community-dwelling seniors: The dynamic tension between exercising precaution and striving for independence. Can. J. Aging 2004, 23, 307–318. [Google Scholar] [CrossRef]

- Adkin, A.L.; Frank, J.S.; Jog, M.S. Fear of falling and postural control in Parkinson’s disease. Mov. Disord. 2003, 18, 496–502. [Google Scholar] [CrossRef]

- Bloem, B.R.; Grimbergen, Y.A.M.; Cramer, M.; Willemsen, M.; Zwinderman, A.H. Prospective assessment of falls in Parkinson’s disease. J. Neurol. 2001, 248, 950–958. [Google Scholar] [CrossRef]

- Kim, H.B.; Lee, H.J.; Lee, W.W.; Kim, S.K.; Jeon, H.S.; Park, H.Y.; Shin, C.W.; Yi, W.J.; Jeon, B.; Park, K.S. Validation of freezing-of-gait monitoring using smartphone. Telemed. e-Health 2018, 24, 899–907. [Google Scholar] [CrossRef]

- Pham, T.T.; Nguyen, D.N.; Dutkiewicz, E.; McEwan, A.L.; Leong, P.H.W. An anomaly detection technique in wearable wireless monitoring systems for studies of gait freezing in Parkinson’s disease. In Proceedings of the 2017 International Conference on Information Networking (ICOIN), Da Nang, Vietnam, 11–13 January 2017; pp. 41–45. [Google Scholar] [CrossRef]

- Ginis, P.; Nackaerts, E.; Nieuwboer, A.; Heremans, E. Cueing for people with Parkinson’s disease with freezing of gait: A narrative review of the state-of-the-art and novel perspectives. Ann. Phys. Rehabil. Med. 2018, 61, 407–413. [Google Scholar] [CrossRef]

- Nieuwboer, A. Cueing for freezing of gait in patients with Parkinson’s disease: A rehabilitation perspective. Mov. Disord. 2008, 23, 475–481. [Google Scholar] [CrossRef]

- Jovanov, E.; Wang, E.; Verhagen, L.; Fredrickson, M.; Fratangelo, R. deFOG—A real time system for detection and unfreezing of gait of Parkinson’s patients. In Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society: Engineering the Future of Biomedicine, Minneapolis, MN, USA, 3–6 September 2009; pp. 5151–5154. [Google Scholar] [CrossRef]

- Bachlin, M.; Plotnik, M.; Roggen, D.; Maidan, I.; Hausdorff, J.M.; Giladi, N.; Troster, G. Wearable assistant for Parkinson’s disease patients with the freezing of gait symptom. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 436–446. [Google Scholar] [CrossRef] [PubMed]

- Mazilu, S.; Blanke, U.; Hardegger, M.; Troster, G.; Gazit, E.; Dorfman, M.; Hausdorff, J.M. GaitAssist: A wearable assistant for gait training and rehabilitation in Parkinson’s disease. In Proceedings of the 2014 IEEE International Conference on Pervasive Computing and Communication Workshops (PERCOM WORKSHOPS), Budapest, Hungary, 24–28 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 135–137. [Google Scholar] [CrossRef]

- Silva de Lima, A.L.; Evers, L.J.W.; Hahn, T.; Bataille, L.; Hamilton, J.L.; Little, M.A.; Okuma, Y.; Bloem, B.R.; Faber, M.J. Freezing of gait and fall detection in Parkinson’s disease using wearable sensors: A systematic review. J. Neurol. 2017, 264, 1642–1654. [Google Scholar] [CrossRef] [PubMed]

- Nieuwboer, A.; Dom, R.; De Weerdt, W.; Desloovere, K.; Janssens, L.; Stijn, V. Electromyographic profiles of gait prior to onset of freezing episodes in patients with Parkinson’s disease. Brain 2004, 127, 1650–1660. [Google Scholar] [CrossRef] [PubMed]

- Ferster, M.L.; Mazilu, S.; Tröster, G. Gait parameters change prior to freezing in Parkinson’s disease: A data-driven study with wearable inertial units. In Proceedings of the 10th EAI International Conference on Body Area Networks, Sydney, Australia, 28–30 September 2015; EAI: Ghent, Belgium, 2015; pp. 159–166. [Google Scholar] [CrossRef]

- Moore, S.T.; MacDougall, H.G.; Ondo, W.G. Ambulatory monitoring of freezing of gait in Parkinson’s disease. J. Neurosci. Methods 2008, 167, 340–348. [Google Scholar] [CrossRef] [PubMed]

- Zabaleta, H.; Keller, T.; MartÍ Massó, J.F. Power spectral distribution analysis for detection of freezing of gait in patients with Parkinson’s disease. In Proceedings of the 4th European Conference of the International Federation for Medical and Biological Engineering, IFMBE, Antwerp, Belgium, 23–27 November 2008; Vander SLoten, J., Verdonck, P., Nyssen, M., Haueisen, J., Eds.; Springer: Berlin, Germany, 2008; pp. 2089–2092. [Google Scholar] [CrossRef]

- Bachlin, M.; Roggen, D.; Troster, G.; Plotnik, M.; Inbar, N.; Meidan, I.; Herman, T.; Brozgol, M.; Shaviv, E.; Giladi, N.; et al. Potentials of Enhanced Context Awareness in Wearable Assistants for Parkinson’s Disease Patients with the Freezing of Gait Syndrome. In Proceedings of the 2009 International Symposium on Wearable Computers, Linz, Austria, 4–7 September 2009; pp. 123–130. [Google Scholar] [CrossRef]

- Bächlin, M.; Plotnik, M.; Roggen, D.; Inbar, N.; Giladi, N.; Hausdorff, J.; Tröster, G. Parkinson’s disease patients’ perspective on context aware wearable technology for auditive assistance. In Proceedings of the 3rd International Conference on Pervasive Computing Technologies for Healthcare—Pervasive Health 2009, London, UK, 1–3 April 2009; Troester, G., Connelly, K., Arnrich, B., Eds.; IEEE: Piscataway, NJ, USA, 2009. [Google Scholar] [CrossRef]

- Bächlin, M.; Plotnik, M.; Roggen, D.; Giladi, N.; Hausdorff, J.M.; Tröster, G. A wearable system to assist walking of parkinsońs disease patients benefits and challenges of context-triggered acoustic cueing. Methods Inf. Med. 2010, 49, 88–95. [Google Scholar] [CrossRef]

- Bächlin, M.; Roggen, D.; Plotnik, M.; Hausdorff, J.M.; Giladi, N.; Tröster, G. Online detection of freezing of gait in Parkinson’s disease patients: A performance characterization. In Proceedings of the BODYNETS 2009—4th International ICST Conference on Body Area Networks, Los Angeles, CA, USA, 1–3 April 2009; Kaiser, W., Lu, C., Eds.; ICST: Brussels, Belgium, 2009. [Google Scholar] [CrossRef]

- Delval, A.; Snijders, A.H.; Weerdesteyn, V.; Duysens, J.E.; Defebvre, L.; Giladi, N.; Bloem, B.R. Objective detection of subtle freezing of gait episodes in Parkinson’s disease. Mov. Disord. 2010, 25, 1684–1693. [Google Scholar] [CrossRef]

- Djuric-Jovicic, M.; Jovicic, N.S.; Milovanovic, I.; Radovanovic, S.; Kresojevic, N.; Popovic, M.B. Classification of walking patterns in Parkinson’s disease patients based on inertial sensor data. In Proceedings of the 10th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 23–25 September 2010; pp. 3–6. [Google Scholar] [CrossRef]

- Popovic, M.B.; Djuric-Jovicic, M.; Radovanovic, S.; Petrovic, I.; Kostic, V. A simple method to assess freezing of gait in Parkinson’s disease patients. Braz. J. Med. Res. 2010, 43, 883–889. [Google Scholar] [CrossRef]

- Cole, B.T.; Roy, S.H.; Nawab, S.H. Detecting freezing-of-gait during unscripted and unconstrained activity. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMB, Boston, MA, USA, 30 August–3 September 2011; pp. 5649–5652. [Google Scholar] [CrossRef]

- Tsipouras, M.G.; Tzallas, A.T.; Tripoliti, E.; Rigas, G.; Bougia, P.; Fotiadis, D.I.; Tsouli, S.; Konitsiotis, S. On assessing motor disorders in Parkinson’s disease. In Proceedings of the Second International ICST Conference, MobiHealth 2010, Ayia Napa, Cyprus, 18–20 October 2010; Lin, J., Nikita, K.S., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 35–38. [Google Scholar] [CrossRef]

- Niazmand, K.; Tonn, K.; Zhao, Y.; Fietzek, U.M.; Schroeteler, F.; Ziegler, K.; Ceballos-Baumann, A.O.; Lueth, T.C. Freezing of Gait detection in Parkinson’s disease using accelerometer based smart clothes. In Proceedings of the 2011 IEEE Biomedical Circuits and Systems Conference (BioCAS), San Diego, CA, USA, 10–12 November 2011; pp. 201–204. [Google Scholar] [CrossRef]

- Zhao, Y.; Tonn, K.; Niazmand, K.; Fietzek, U.M.; D’Angelo, L.T.; Ceballos-Baumann, A.; Lueth, T.C. Online FOG identification in Parkinson’s disease with a time-frequency combined algorithm. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics, Hong Kong and Shenzhen, China, 2–7 January 2012; pp. 192–195. [Google Scholar] [CrossRef]

- Mazilu, S.; Hardegger, M.; Zhu, Z.; Roggen, D.; Troester, G.; Plotnik, M.; Hausdorff, J. Online detection of freezing of gait with smartphones and machine learning techniques. In Proceedings of the 6th International Conference on Pervasive Computing Technologies for Healthcare, San Diego, CA, USA, 21–24 May 2012; Arriaga, R., Matic, A., Eds.; IEEE: Piscataway, NJ, USA, 2012. [Google Scholar] [CrossRef]

- Tripoliti, E.E.; Tzallas, A.T.; Tsipouras, M.G.; Rigas, G.; Bougia, P.; Leontiou, M.; Konitsiotis, S.; Chondrogiorgi, M.; Tsouli, S.; Fotiadis, D.I. Automatic detection of freezing of gait events in patients with Parkinson’s disease. Comput. Methods Programs Biomed. 2013, 110, 12–26. [Google Scholar] [CrossRef]

- Moore, S.T.; Yungher, D.A.; Morris, T.R.; Dilda, V.; MacDougall, H.G.; Shine, J.M.; Naismith, S.L.; Lewis, S.J. Autonomous identification of freezing of gait in Parkinson’s disease from lower-body segmental accelerometry. J. Neuroeng. Rehabil. 2013, 10, 19:1–19:10. [Google Scholar] [CrossRef]

- Mazilu, S.; Calatroni, A.; Gazit, E.; Roggen, D.; Hausdorff, J.M.; Tröster, G. Feature learning for detection and prediction of freezing of gait in Parkinson’s disease. In Machine Learning and Data Mining in Pattern Recognition; Perner, P., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7988, pp. 144–158. [Google Scholar]

- Coste, C.A.; Sijobert, B.; Pissard-Gibollet, R.; Pasquier, M.; Espiau, B.; Geny, C. Detection of freezing of gait in Parkinson disease: Preliminary results. Sensors 2014, 14, 6819–6827. [Google Scholar] [CrossRef] [PubMed]

- Sijobert, B.; Denys, J.; Coste, C.A.; Geny, C. IMU based detection of freezing of gait and festination in Parkinson’s disease. In Proceedings of the 2014 IEEE 19th International Functional Electrical Stimulation Society Annual Conference, IFESS, Kuala Lumpur, Malaysia, 17–19 September 2014. [Google Scholar] [CrossRef]

- Kwon, Y.; Park, S.H.; Kim, J.W.; Ho, Y.; Jeon, H.M.; Bang, M.J.; Jung, G.I.; Lee, S.M.; Eom, G.M.; Koh, S.B.; et al. A practical method for the detection of freezing of gait in patients with Parkinson’s disease. Clin. Interv. Aging 2014, 9, 1709–1719. [Google Scholar] [CrossRef]

- Pepa, L.; Ciabattoni, L.; Verdini, F.; Capecci, M.; Ceravolo, M.G.G. Smartphone based fuzzy logic freezing of gait detection in Parkinson’s Disease. In Proceedings of the 10th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications, Senigallia, Italy, 10–12 September 2014. [Google Scholar] [CrossRef]

- Djuric-Jovicic, M.D.; Jovicic, N.S.; Radovanovic, S.M.; Stankovic, I.D.; Popovic, M.B.; Kostic, V.S. Automatic Identification and Classification of Freezing of Gait Episodes in Parkinson’s Disease Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 685–694. [Google Scholar] [CrossRef] [PubMed]

- Assam, R.; Seidl, T. Prediction of freezing of gait from parkinson’s disease movement time series using conditional random fields. In Proceedings of the 3rd ACM SIGSPATIAL International Workshop on the Use of GIS in Public Health, HealthGIS 2014—In Conjuncture with the 22nd ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, ACM GIS 2014, Dallas, TX, USA, 4 November 2014; Kanza, Y., Gudes, O., Goldberg, D.W., Eds.; ACM: New York, NY, USA, 2014; pp. 11–20. [Google Scholar] [CrossRef]

- Mazilu, S.; Blanke, U.; Troster, G. Gait, wrist, and sensors: Detecting freezing of gait in Parkinson’s disease from wrist movement. In Proceedings of the IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), St. Louis, MI, USA, 23–27 March 2015; pp. 579–584. [Google Scholar] [CrossRef]

- Zach, H.; Janssen, A.M.; Snijders, A.H.; Delval, A.; Ferraye, M.U.; Auff, E.; Weerdesteyn, V.; Bloem, B.R.; Nonnekes, J. Identifying freezing of gait in Parkinson’s disease during freezing provoking tasks using waist-mounted accelerometry. Park. Relat. Disord. 2015, 21, 1362–1366. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.; Lee, H.J.; Lee, W.; Kwon, S.; Kim, S.K.; Jeon, H.S.; Park, H.; Shin, C.W.; Yi, W.J.; Jeon, B.S.; et al. Unconstrained detection of freezing of Gait in Parkinson’s disease patients using smartphone. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3751–3754. [Google Scholar] [CrossRef]

- Handojoseno, A.M.A.; Gilat, M.; Quynh Tran Ly, Q.T.; Chamtie, H.; Shine, J.M.; Nguyen, T.N.; Tran, Y.; Lewis, S.J.G.; Nguyen, H.T. An EEG study of turning freeze in Parkinson’s disease patients: The alteration of brain dynamic on the motor and visual cortex. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6618–6621. [Google Scholar] [CrossRef]

- Venu, N.; C, L.; Student, P.G. Prediction of freezing of gait (FOG) episodes in Parkinson’s disease patients by gait analysis. Int. J. Innov. Res. Sci. Eng. Technol. 2016, 5, 13679–13684. [Google Scholar] [CrossRef]

- Rodriguez-Martín, D.; Sama, A.; Pérez-López, C.; Catala, A.; Mestre, B.; Alcaine, S.; Bayes, À. Comparison of features, window sizes and classifiers in detecting freezing of gait in patients with Parkinson’s disease through a waist-worn accelerometer. In Proceedings of the 19th International Conference of the Catalan Association for Artificial Intelligence, Barcelona, Spain, 19–21 October 2016; Nebot, À., Binefa, X., de Mántaras, R.L., Eds.; IOS Press: Amsterdam, The Netherlands, 2016; pp. 127–136. [Google Scholar] [CrossRef]

- Mazilu, S.; Blanke, U.; Calatroni, A.; Gazit, E.; Hausdorff, J.M.; Tröster, G. The role of wrist-mounted inertial sensors in detecting gait freeze episodes in Parkinson’s disease. Pervasive Mob. Comput. 2016, 33, 1–16. [Google Scholar] [CrossRef]

- Lorenzi, P.; Rao, R.; Romano, G.; Kita, A.; Irrera, F. Mobile devices for the real-time detection of specific human motion disorders. IEEE Sens. J. 2016, 16, 8220–8227. [Google Scholar] [CrossRef]

- Lorenzi, P.; Rao, R.; Romano, G.; Kita, A.; Serpa, M.; Filesi, F.; Parisi, R.; Suppa, A.; Bologna, M.; Berardelli, A.; et al. Smart sensing systems for the detection of human motion disorders. Procedia Eng. 2015, 120, 324–327. [Google Scholar] [CrossRef]

- Lorenzi, P.; Rao, R.; Romano, G.; Kita, A.; Serpa, M.; Filesi, F.; Irrera, F.; Bologna, M.; Suppa, A.; Berardelli, A. Smart sensors for the recognition of specific human motion disorders in Parkinson’s disease. In Proceedings of the 2015 6th International Workshop on Advances in Sensors and Interfaces (IWASI), Gallipoli, Italy, 18–19 June 2015; pp. 131–136. [Google Scholar] [CrossRef]

- Kita, A.; Lorenzi, P.; Romano, G.; Rao, R.; Parisi, R.; Suppa, A.; Bologna, M.; Berardelli, A.; Irrera, F. Smart Sensing System for the Detection of Specific Human Motion Symptoms of the Parkinson’s Disease. In Proceedings of the 9th International Joint Conference on Biomedical Engineering Systems and Technologies, BIODEVICES, Rome, Italy, 21–23 February 2016; SCITEPRESS—Science and Technology Publications: Setúbal, Portugal, 2016; pp. 152–159. [Google Scholar] [CrossRef]

- Rezvanian, S.; Lockhart, T.E. Towards real-time detection of freezing of gait using wavelet transform on wireless accelerometer data. Sensors 2016, 16, 475. [Google Scholar] [CrossRef]

- Ahlrichs, C.; Samà, A.; Lawo, M.; Cabestany, J.; Rodríguez-Martín, D.; Pérez-López, C.; Sweeney, D.; Quinlan, L.R.; Laighin, G.Ò.; Counihan, T.; et al. Detecting freezing of gait with a tri-axial accelerometer in Parkinson’s disease patients. Med. Biol. Eng. Comput. 2016, 54, 223–233. [Google Scholar] [CrossRef]

- Capecci, M.; Pepa, L.; Verdini, F.; Ceravolo, M.G. A smartphone-based architecture to detect and quantify freezing of gait in Parkinson’s disease. Gait Posture 2016, 50, 28–33. [Google Scholar] [CrossRef] [PubMed]

- Ly, Q.T.; Handojoseno, A.M.A.; Gilat, M.; Nguyen, N.; Chai, R.; Tran, Y.; Lewis, S.J.G.; Nguyen, H.T. Identifying montages that best detect the electroencephalogram power spectrum alteration during freezing of gait in Parkinson’s disease patients. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Orlando, FL, USA, 16–20 August 2016; pp. 6094–6097. [Google Scholar] [CrossRef]

- Pham, T.T.; Moore, S.T.; Lewis, S.J.G.; Nguyen, D.N.; Dutkiewicz, E.; Fuglevand, A.J.; McEwan, A.L.; Leong, P.H.W. Freezing of gait detection in Parkinson’s disease: A subject-independent detector using anomaly scores. IEEE Trans. Biomed. Eng. 2017, 64, 2719–2728. [Google Scholar] [CrossRef] [PubMed]

- Shine, J.M.; Moore, S.T.; Bolitho, S.J.; Morris, T.R.; Dilda, V.; Naismith, S.L.; Lewis, S.J.G. Assessing the utility of Freezing of gait questionnaires in Parkinson’s disease. Park. Relat. Disord. 2012, 18, 25–29. [Google Scholar] [CrossRef] [PubMed]

- Pham, T.T.; Nguyen, D.N.; Dutkiewicz, E.; McEwan, A.L.; Leong, P.H.W. Wearable healthcare systems: A single channel accelerometer based anomaly detector for studies of gait freezing in Parkinson’s disease. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–5. [Google Scholar] [CrossRef]

- Ahn, D.; Chung, H.; Lee, H.-W.; Kang, K.; Ko, P.-W.; Kim, N.S.; Park, T. Smart gait-aid glasses for Parkinson’s disease patients. IEEE Trans. Biomed. Eng. 2017, 64, 2394–2402. [Google Scholar] [CrossRef]

- Tahafchi, P.; Molina, R.; Roper, J.A.; Sowalsky, K.; Hass, C.J.; Gunduz, A.; Okun, M.S.; Judy, J.W. Freezing-of-Gait detection using temporal, spatial, and physiological features with a support-vector-machine classifier. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Seogwipo, Korea, 11–15 July 2017; pp. 2867–2870. [Google Scholar] [CrossRef]

- Suppa, A.; Kita, A.; Leodori, G.; Zampogna, A.; Nicolini, E.; Lorenzi, P.; Rao, R.; Irrera, F. L-DOPA and freezing of gait in Parkinson’s disease: Objective assessment through a wearable wireless system. Front. Neurol. 2017, 8, 406:1–406:14. [Google Scholar] [CrossRef]

- Kita, A.; Lorenzi, P.; Rao, R.; Irrera, F. Reliable and robust detection of freezing of gait episodes with wearable electronic devices. IEEE Sens. J. 2017, 17, 1899–1908. [Google Scholar] [CrossRef]

- Rodríguez-Martín, D.; Samà, A.; Pérez-López, C.; Català, A.; Moreno Arostegui, J.M.; Cabestany, J.; Bayés, À.; Alcaine, S.; Mestre, B.; Prats, A.; et al. Home detection of freezing of gait using support vector machines through a single waist-worn triaxial accelerometer. PLoS ONE 2017, 12, e0171764:1–e0171764:26. [Google Scholar] [CrossRef]

- Rodríguez-Martín, D.; Pérez-López, C.; Samà, A.; Català, A.; Arostegui, J.M.M.; Cabestany, J.; Mestre, B.; Alcaine, S.; Prats, A.; De La Cruz Crespo, M.; et al. A waist-worn inertial measurement unit for long-term monitoring of Parkinson’s disease patients. Sensors 2017, 17, 827. [Google Scholar] [CrossRef]

- Ly, Q.T.; Handojoseno, A.M.A.; Gilat, M.; Chai, R.; Martens, K.A.E.; Georgiades, M.; Naik, G.R.; Tran, Y.; Lewis, S.J.G.; Nguyen, H.T. Detection of turning freeze in Parkinson’s disease based on S-transform decomposition of EEG signals. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Seogwipo, Korea, 11–15 July 2017; pp. 3044–3047. [Google Scholar] [CrossRef]

- Pepa, L.; Capecci, M.; Ciabattoni, L.; Spalazzi, L.; Ceravolo, M.G. An unobtrusive expert system to detect freezing of gait during daily living in people with Parkinson’s disease. In Proceedings of the 2017 2nd International Multidisciplinary Conference on Computer and Energy Science, Split, Croatia, 12–14 July 2017; Rodrigues, J.J.P.C., Nižetić, S., Patrono, L., Milanović, Z., Šolić, P., Vukojević, K., Perković, T., Eds.; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar]

- Wang, K.; Li, B.; Gu, D.; Dai, K.; Zhou, L. A smartphone based system for freezing of gait monitoring for Parkinson’s disease patients. In Proceedings of the 9th IEEE International Conference on Communication Software and Networks, ICCSN 2017, Guangzhou, China, 6–8 May 2017; pp. 1529–1533. [Google Scholar] [CrossRef]

- Punin, C.; Barzallo, B.; Huerta, M.; Bermeo, A.; Bravo, M.; Llumiguano, C. Wireless devices to restart walking during an episode of FOG on patients with Parkinson’s disease. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Saad, A.; Zaarour, I.; Guerin, F.; Bejjani, P.; Ayache, M.; Lefebvre, D. Detection of freezing of gait for Parkinson’s disease patients with multi-sensor device and Gaussian neural networks. Int. J. Mach. Learn. Cybern. 2017, 8, 941–954. [Google Scholar] [CrossRef]

- Samà, A.; Rodríguez-Martín, D.; Pérez-López, C.; Català, A.; Alcaine, S.; Mestre, B.; Prats, A.; Crespo, M.C.; Bayés, À. Determining the optimal features in freezing of gait detection through a single waist accelerometer in home environments. Pattern Recognit. Lett. 2018, 105, 135–143. [Google Scholar] [CrossRef]

- Prateek, G.V.; Skog, I.; McNeely, M.E.; Duncan, R.P.; Earhart, G.M.; Nehorai, A. Modeling, detecting, and tracking freezing of gait in Parkinson disease using inertial sensors. IEEE Trans. Biomed. Eng. 2018, 65, 2152–2161. [Google Scholar] [CrossRef] [PubMed]

- Ashour, A.S.; El-Attar, A.; Dey, N.; El-Naby, M.M.A.; El-Kader, H.A. Patient-dependent freezing of gait detection using signals from multi-accelerometer sensors in Parkinson’s disease. In Proceedings of the 9th Cairo International Biomedical Engineering Conference, CIBEC 2018, Cairo, Egypt, 20–22 December 2018; Yassine, I., Basha, T., Eldeib, .A., Eds.; IEEE: Piscataway, NJ, USA, 2019; pp. 171–174. [Google Scholar] [CrossRef]

- Roffo, G.; Melzi, S.; Cristani, M. Infinite Feature Selection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4202–4210. [Google Scholar] [CrossRef]

- Camps, J.; Samà, A.; Martín, M.; Rodríguez-Martín, D.; Pérez-López, C.; Moreno Arostegui, J.M.; Cabestany, J.; Català, A.; Alcaine, S.; Mestre, B.; et al. Deep learning for freezing of gait detection in Parkinson’s disease patients in their homes using a waist-worn inertial measurement unit. Knowl.-Based Syst. 2018, 139, 119–131. [Google Scholar] [CrossRef]

- Oung, Q.W.; Basah, S.N.; Muthusamy, H.; Vijean, V.; Lee, H.; Khairunizam, W.; Bakar, S.A.; Razlan, Z.M.; Ibrahim, Z. Objective evaluation of freezing of gait in patients with Parkinson’s disease through machine learning approaches. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications, ICASSDA, Kuching, Malaysia, 15–17 August 2018. [Google Scholar] [CrossRef]

- Li, B.; Zhang, Y.; Tang, L.; Gao, C.; Gu, D. Automatic detection system for freezing of gait in Parkinson’s disease based on the clustering algorithm. In Proceedings of the 2nd IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference, IMCEC, Xi’an, China, 25–27 May 2018; Xu, B., Ed.; IEEE: Piscataway, NJ, USA, 2018; pp. 1640–1644. [Google Scholar] [CrossRef]

- Mikos, V.; Heng, C.-H.; Tay, A.; Chia, N.S.Y.; Koh, K.M.L.; Tan, D.M.L.; Au, W.L. Real-time patient adaptivity for freezing of gait classification through semi-supervised neural networks. In Proceedings of the 16th IEEE International Conference on Machine Learning and Applications, ICMLA 2017, Cancun, Mexico, 18–21 December 2017; Chen, X., Luo, B., Luo, F., Palade, V., Wani, M.A., Eds.; IEEE: Piscataway, NJ, USA, 2018; Volume 1, pp. 871–876. [Google Scholar] [CrossRef]

- Mikos, V.; Heng, C.-H.; Tay, A.; Yen, S.-C.; Chia, N.S.Y.; Koh, K.M.L.; Tan, D.M.L.; Au, W.L. A neural network accelerator with integrated feature extraction processor for a freezing of gait detection system. In Proceedings of the IEEE Asian Solid-State Circuits Conference, Tainan, Taiwan, 5–7 November 2018; pp. 59–62. [Google Scholar] [CrossRef]

- Mohammadian Rad, N.; van Laarhoven, T.; Furlanello, C.; Marchiori, E. Novelty detection using deep normative modeling for imu-based abnormal movement monitoring in parkinson’s disease and autism spectrum disorders. Sensors 2018, 18, 3533. [Google Scholar] [CrossRef] [PubMed]

- El-Attar, A.; Ashour, A.S.; Dey, N.; El-Kader, H.A.; Abd El-Naby, M.M.; Shi, F. Hybrid DWT-FFT features for detecting freezing of gait in Parkinson’s disease. Front. Artif. Intell. Appl. 2019, 314, 117–126. [Google Scholar] [CrossRef]

- Punin, C.; Barzallo, B.; Clotet, R.; Bermeo, A.; Bravo, M.; Bermeo, J.P.; Llumiguano, C. A non-invasive medical device for parkinson’s patients with episodes of freezing of gait. Sensors 2019, 19, 737. [Google Scholar] [CrossRef]

- Barzallo, B.; Punin, C.; Llumiguano, C.; Huerta, M. Wireless assistance system during episodes of freezing of gait by means superficial electrical stimulation. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering 2018, IFMBE, Prague, Czech Republic, 3–8 June 2018; Lhotska, L., Ed.; Springer: Singapore, 2019; Volume 68, pp. 865–870. [Google Scholar] [CrossRef]

- Mazzetta, I.; Zampogna, A.; Suppa, A.; Gumiero, A.; Pessione, M.; Irrera, F. Wearable sensors system for an improved analysis of freezing of gait in Parkinson’s disease using electromyography and inertial signals. Sensors 2019, 19, 948. [Google Scholar] [CrossRef]

- Mazilu, S.; Calatroni, A.; Gazit, E.; Mirelman, A.; Hausdorff, J.M.; Troster, G. Prediction of freezing of gait in Parkinson’s from physiological wearables: An exploratory study. IEEE J. Biomed. Heal. Informatics 2015, 19, 1843–1854. [Google Scholar] [CrossRef]

- Ardi Handojoseno, A.M.; Shine, J.M.; Nguyen, T.N.; Tran, Y.; Lewis, S.J.G.; Nguyen, H.T. Analysis and prediction of the freezing of gait using EEG brain dynamics. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 23, 887–896. [Google Scholar] [CrossRef]

- Zia, J.; Tadayon, A.; McDaniel, T.; Panchanathan, S. Utilizing neural networks to predict freezing of gait in Parkinson’s patients. In Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility, ASSETS 2016, Reno, NV, USA, 23–26 October 2016; ACM: New York, NY, USA, 2016; pp. 333–334. [Google Scholar] [CrossRef]

- Palmerini, L.; Rocchi, L.; Mazilu, S.; Gazit, E.; Hausdorff, J.M.J.M.; Chiari, L. Identification of characteristic motor patterns preceding freezing of gait in Parkinson’s disease using wearable sensors. Front. Neurol. 2017, 8, 394:1–394:12. [Google Scholar] [CrossRef]

- Handojoseno, A.M.A.; Naik, G.R.; Gilat, M.; Shine, J.M.; Nguyen, T.N.; Quynh, T.L.Y.; Lewis, S.J.G.; Nguyen, H.T. Prediction of freezing of gait in patients with Parkinson’s disease using EEG signals. In Telehealth for our Ageing Society; van den Berg, M.E.L., Maeder, A.L., Eds.; IOS Press: Amsterdam, The Netherlands, 2018; Volume 246, pp. 124–131. ISBN 9781614998440. [Google Scholar]

- Torvi, V.G.; Bhattacharya, A.; Chakraborty, S. Deep domain adaptation to predict freezing of gait in patients with Parkinson’s disease. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications, ICMLA 2018, Orlando, FL, USA, 17–18 December 2018; Wani, M.A., Sayed-Mouchaweh, M., Kantardzic, M., Gama, J., Lughofer, E., Eds.; IEEE: Piscataway, NJ, USA, 2019; pp. 1001–1006. [Google Scholar] [CrossRef]

- Mazilu, S.; Blanke, U.; Roggen, D.; Tröster, G.; Gazit, E.; Hausdorff, J.M. Engineers meet clinicians. In Proceedings of the 4th Augmented Human International Conference, Stuttgart, Germany, 7–8 March 2013; ACM: New York, NY, USA, 2013; pp. 124–127. [Google Scholar] [CrossRef]

- REMPARK—Personal Health Device for the Remote and Autonomous Management of Parkinson’s Disease. Available online: https://rempark.cetpd.upc.edu/project (accessed on 2 October 2019).

- Verleysen, M.; Pérez López, C.; Rodríguez Martín, D.M.; Cabestany Moncusí, J.; Moreno Aróstegui, J.M.; Rodríguez Molinero, A. A heterogeneous database for movement knowledge extraction in Parkinson’s Disease. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning: ESANN 2013, Bruges, Belgium, 24–26 April 2013; pp. 413–418. [Google Scholar]

- MASPARK. Available online: https://www.aicos.fraunhofer.pt/en/our_work/projects/maspark.html (accessed on 10 May 2019).

- Niazmand, K.; Tonn, K.; Kalaras, A.; Fietzek, U.M.; Mehrkens, J.H.; Lueth, T.C. Quantitative evaluation of Parkinson’s disease using sensor based smart glove. In Proceedings of the 24th International Symposium on Computer-Based Medical Systems (CBMS), Bristol, UK, 27–30 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–8. [Google Scholar] [CrossRef]

- Nilsson, J.-O.; Gupta, A.K.; Handel, P. Foot-mounted inertial navigation made easy. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Busan, Korea, 27–30 October 2014; IEEE: Piscataway, NJ, USA, 2015; pp. 24–29. [Google Scholar] [CrossRef]

- Zijlstra, W.; Hof, A.L. Assessment of spatio-temporal gait parameters from trunk accelerations during human walking. Gait Posture 2003, 18, 1–10. [Google Scholar] [CrossRef]

- Laudanski, A.; Yang, S.; Li, Q. A concurrent comparison of inertia sensor-based walking speed estimation methods. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 3484–3487.

- Madgwick, S.O.H.; Harrison, A.J.L.; Vaidyanathan, R. Estimation of IMU and MARG orientation using a gradient descent algorithm. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, Zurich, Switzerland, 29 June–1 July 2011; pp. 1–7.

- Cole, B.T.; Roy, S.H.; De Luca, C.J.; Nawab, S.H. Dynamic neural network detection of tremor and dyskinesia from wearable sensor data. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 6062–6065.

- Moore, S.T.; MacDougall, H.G.; Gracies, J.M.; Cohen, H.S.; Ondo, W.G. Long-term monitoring of gait in Parkinson’s disease. Gait Posture 2007, 26, 200–207.

- Khan, A.M.; Lee, Y.K.; Kim, T.-S. Accelerometer signal-based human activity recognition using augmented autoregressive model coefficients and artificial neural nets. In Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 5172–5175.

- Kaminski, M.J.; Blinowska, K.J. A new method of the description of the information flow sources. Biol. Cybern. 1991, 65, 203–210.

- Koopman, B.O. Hamiltonian Systems and Transformation in Hilbert Space. Proc. Natl. Acad. Sci. USA 1931, 17, 315–318.

- Hua, J.C.; Roy, S.; McCauley, J.L.; Gunaratne, G.H. Using dynamic mode decomposition to extract cyclic behavior in the stock market. Phys. A Stat. Mech. Appl. 2016, 448, 172–180.

- Phinyomark, A.; Limsakul, C.; Phukpattaranont, P. A Novel Feature Extraction for Robust EMG Pattern Recognition. J. Comput. 2009, 1, 71–80.

- Phinyomark, A.; Nuidod, A.; Phukpattaranont, P.; Limsakul, C. Feature extraction and reduction of wavelet transform coefficients for EMG pattern classification. Electron. Electr. Eng. 2012, 122, 27–32.

- Challis, R.E.; Kitney, R.I. Biomedical signal processing (in four parts) Part 3 The power spectrum and coherence function. Med. Biol. Eng. 1991, 29, 225–241.

- Tzallas, A.T.; Tsipouras, M.G.; Rigas, G.; Tsalikakis, D.G.; Karvounis, E.C.; Chondrogiorgi, M.; Psomadellis, F.; Cancela, J.; Pastorino, M.; Waldmeyer, M.T.A.; et al. PERFORM: A system for monitoring, assessment and management of patients with Parkinson’s disease. Sensors 2014, 14, 21329–21357.

- Shiavi, R. Introduction to Applied Statistical Signal Analysis: Guide to Biomedical and Electrical Engineering Applications, 3rd ed.; Academic Press: Burlington, MA, USA, 2007.

- Vinck, M.; Oostenveld, R.; Van Wingerden, M.; Battaglia, F.; Pennartz, C.M.A. An improved index of phase-synchronization for electrophysiological data in the presence of volume-conduction, noise and sample-size bias. Neuroimage 2011, 55, 1548–1565.

- Lachaux, J.-P.; Rodriguez, E.; Martinerie, J.; Varela, F.J. Measuring phase synchrony in brain signals. Hum. Brain Mapp. 1999, 8, 194–208.

- Tay, A.; Yen, S.C.; Lee, P.Y.; Wang, C.Y.; Neo, A.; Phan, S.W.; Yogaprakash, K.; Liew, S.; Au, W.L. Freezing of gait (FoG) detection for Parkinson disease. In Proceedings of the 10th Asian Control Conference (ASCC), Kota Kinabalu, Malaysia, 31 May–3 June 2015; pp. 1–6.

- Wu, X.; Kumar, V.; Quinlan, J.R.; Ghosh, J.; Yang, Q.; Motoda, H.; McLachlan, G.J.; Ng, A.; Liu, B.; Yu, P.S.; et al. Top 10 algorithms in data mining. Knowl. Inf. Syst. 2008, 14, 1–37.

- Bishop, C.M. Pattern Recognition and Machine Learning, 1st ed.; Springer-Verlag: New York, NY, USA, 2006; ISBN 9780387310732.

- Friedman, J.; Hastie, T.; Tibshirani, R. Additive logistic regression: A statistical view of boosting. Ann. Stat. 2000, 28, 337–407.

- Seiffert, C.; Khoshgoftaar, T.M.; Van Hulse, J.; Napolitano, A. RUSBoost: Improving classification performance when training data is skewed. In Proceedings of the 19th International Conference on Pattern Recognition, Tampa Bay, FL, USA, 8–11 December 2008; pp. 1–4.

- Freund, Y. A More Robust Boosting Algorithm. arXiv 2009, arXiv:0905.2138.

- Egmont-Petersen, M.; De Ridder, D.; Handels, H. Image processing with neural networks—A review. Patt. Recognit. 2002, 35, 2279–2301.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Anwar, S.M.; Majid, M.; Qayyum, A.; Awais, M.; Alnowami, M.; Khan, M.K. Medical Image Analysis using Convolutional Neural Networks: A Review. J. Med. Syst. 2018, 42, 1–13.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Sak, H.; Senior, A.; Beaufays, F. Long Short-Term Memory Based Recurrent Neural Network Architectures for Large Vocabulary Speech Recognition. arXiv 2014, arXiv:1402.1128.

- Heremans, E.; Nieuwboer, A.; Vercruysse, S. Freezing of gait in Parkinson’s disease: Where are we now? Curr. Neurol. Neurosci. Rep. 2013, 13, 350:1–350:9.

- Howcroft, J.; Kofman, J.; Lemaire, E.D. Prospective fall-risk prediction models for older adults based on wearable sensors. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1812–1820.

- Howcroft, J.; Lemaire, E.D.; Kofman, J. Wearable-sensor-based classification models of faller status in older adults. PLoS ONE 2016, 11, e0153240:1–e0153240:16.

- Caby, B.; Kieffer, S.; de Saint Hubert, M.; Cremer, G.; Macq, B. Feature extraction and selection for objective gait analysis and fall risk assessment by accelerometry. Biomed. Eng. Online 2011, 10, 1–19.

- Shany, T.; Redmond, S.J.; Narayanan, M.R.; Lovell, N.H. Sensors-based wearable systems for monitoring of human movement and falls. IEEE Sens. J. 2012, 12, 658–670.

- Saeys, Y.; Inza, I.; Larranaga, P. A review of feature selection techniques in bioinformatics. Bioinformatics 2007, 23, 2507–2517.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).