1. Introduction

Partial discharge (PD) detection plays an important role in the evaluation of insulation condition [1]. Different PD types may cause different kinds of damage to equipment insulation [2]. Therefore, the ability to distinguish between PD types is valuable for the repair and maintenance of electrical equipment [3,4].

Feature extraction is of great importance during PD pattern recognition. It directly affects the recognition results [

5,

6,

7,

8,

9]. Chu et al. employed statistical distribution parameters method for PD recognition. Different types of PD have been identified [

5]. Ma et al. used the fractal theory for motor single-source PD classification [

6]. Cui et al. adopted the image moments characteristic parameter of PD to analyze the surface discharge development process [

7]. However, the data size of these methods is very large and the speed of data processing is slow, which is not suitable for online monitoring. Alvarez et al. extracted the waveform feature parameters to discriminate the PD sources [

8]. However, the electromagnetic wave radiated by the PD pulse will decay and can be negatively influenced by the electromagnetic interference. Tang et al. used wavelet decomposition method for PD recognition in gas-insulated switchgear (GIS) [

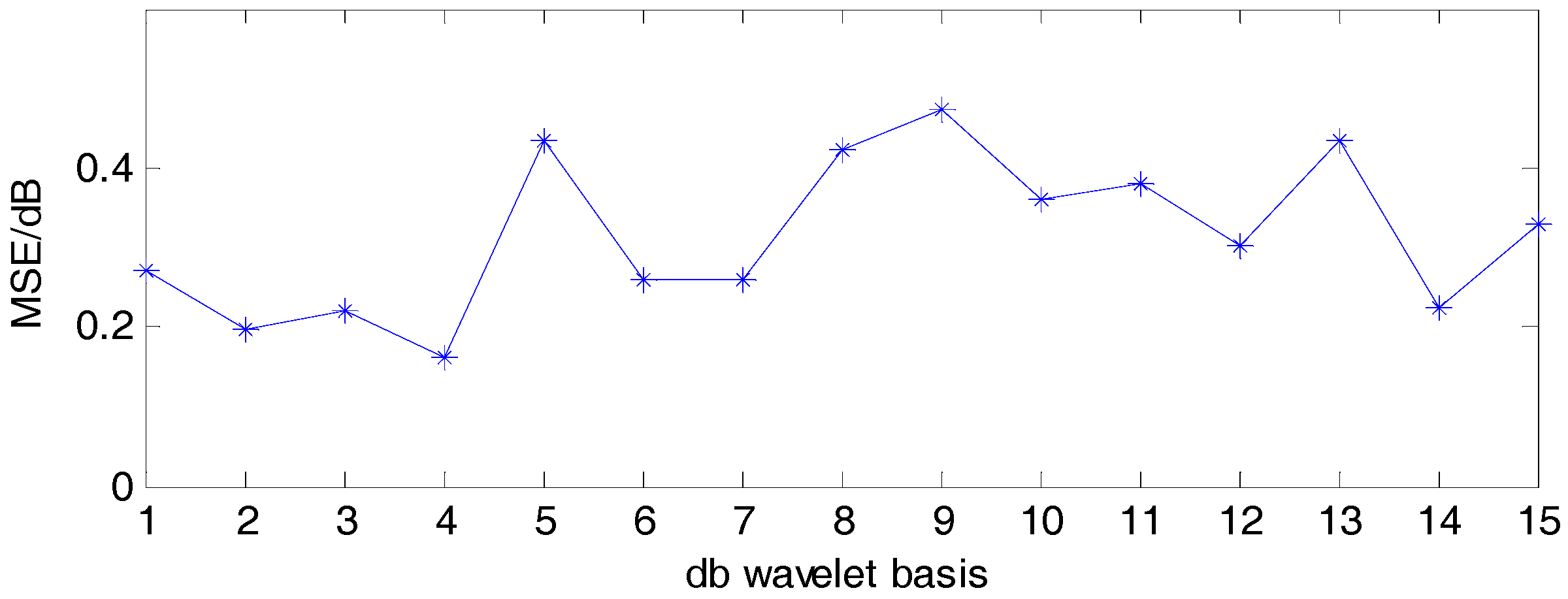

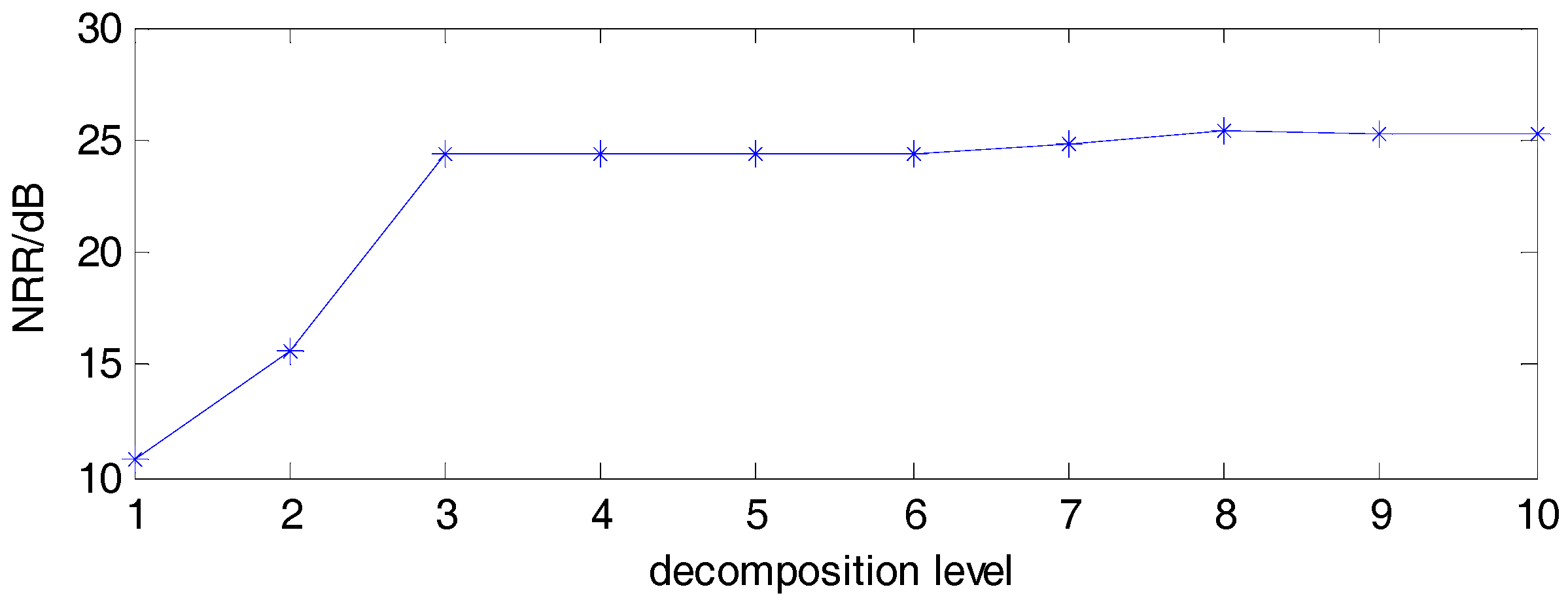

9]. However, his method has some inherent limitation, such as the difficulty of the selection of wavelet basis, wavelet thresholds, decomposition levels, and so on.

Empirical Mode Decomposition (EMD), proposed by Huang et al. in 1998, is a self-adapting method for signal decomposition [

10]. It is a data-driven approach that is suitable for analyzing non-linear and non-stationary problems. However, it is restricted by its inherent mode-mixing phenomenon. Boudraa et al. put forward a signal filtering method based on EMD [

11]. It is limited to signals that were corrupted by additive white Gaussian noise. To solve the mode-mixing problem in EMD, Ensemble Empirical Mode Decomposition (EEMD) was proposed by Wu and Huang [

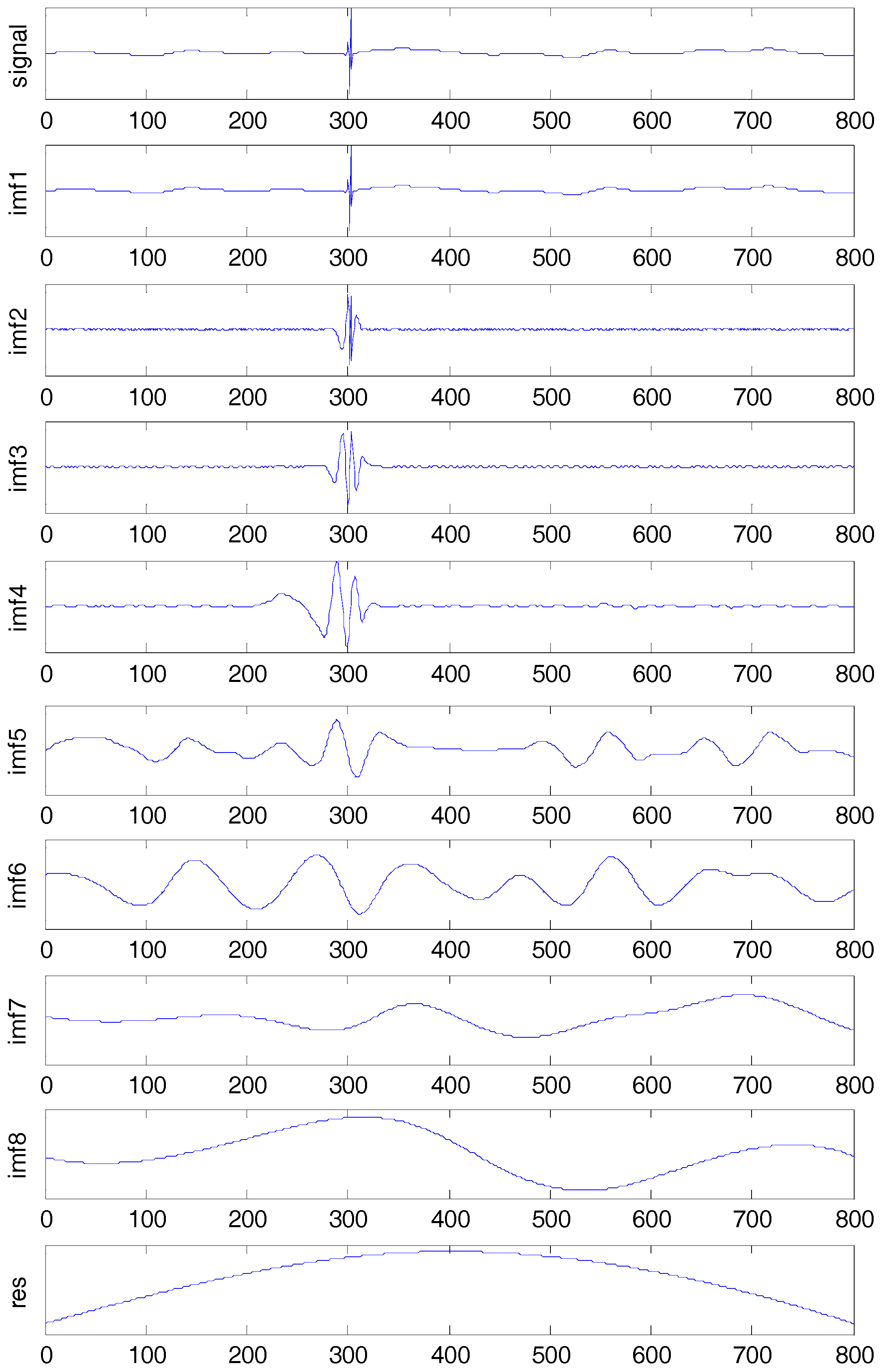

12]. White noise components are added artificially in EEMD and eliminated through repetitive averaging. EEMD decomposes signals into Intrinsic Mode Functions (IMFs) containing signals’ local features. It could effectively apply the uniform distribution character to make up for the absence of signal scales. It is also suitable for non-linear and non-stationary signals. Furthermore, EEMD has been widely adopted in fault feature extraction [

13,

14,

15,

16]. Fu et al. proposed a novel approach based on fast EEMD to extract the fault feature of bearing vibration signals [

13]. The test results from both the simulation signal and the experiment data demonstrated its effectiveness. The heart phonocardiogram is analyzed in [

14] by employing EEMD combined with kurtosis features. Its practicality was proven through the experimental dataset obtained from 43 heart sound recordings in a real clinical environment. Kong et al. proposed an envelope extraction method based on EEMD for the double-impulse extraction of faulty hybrid ceramic ball bearings [

15]. The pre-whitened signals were de-noised using EEMD, and the Hilbert Envelope Extraction Method was employed to extract the double impulse. Simulation results verified the validity of this method. Patel et al. presented a novel approach by combining template matching with EEMD [

16]. EEMD was applied to decompose the noisy data into IMFs. However, the data size of IMFs is always large. To reduce the calculation, some steps should be taken to extract the IMFs that represent prominent features.
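The EEMD procedure described above (add white noise, decompose, average the IMFs over the ensemble) can be illustrated with a minimal sketch. It assumes a simplified EMD: cubic-spline envelopes through the local extrema, both envelopes pinned to the end samples, and a fixed number of sifting iterations instead of a formal IMF stopping criterion. The function names `emd`, `eemd`, and `_envelope_mean` and the parameter values (`noise_std=0.2` times the signal's standard deviation, `n_trials`, `max_imfs`, `n_sift`) are illustrative assumptions, not the settings used in this paper.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def _envelope_mean(x):
    """Mean of cubic-spline envelopes through local maxima/minima (None if too few extrema)."""
    d = np.diff(x)
    prev_step = np.r_[0.0, d]   # x[i] - x[i - 1] (0 at the first sample)
    next_step = np.r_[d, 0.0]   # x[i + 1] - x[i] (0 at the last sample)
    maxima = np.flatnonzero((prev_step > 0) & (next_step < 0))
    minima = np.flatnonzero((prev_step < 0) & (next_step > 0))
    if len(maxima) < 2 or len(minima) < 2:
        return None
    n = len(x)
    t = np.arange(n)
    # Simplified boundary handling (assumption): pin both envelopes to the end samples.
    upper = CubicSpline(np.r_[0, maxima, n - 1], np.r_[x[0], x[maxima], x[-1]])(t)
    lower = CubicSpline(np.r_[0, minima, n - 1], np.r_[x[0], x[minima], x[-1]])(t)
    return 0.5 * (upper + lower)


def emd(x, max_imfs=6, n_sift=10):
    """Plain EMD; a fixed sifting count replaces a formal IMF stopping criterion."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        if _envelope_mean(residue) is None:   # residue is (nearly) monotonic: stop
            break
        h = residue.copy()
        for _ in range(n_sift):
            m = _envelope_mean(h)
            if m is None:
                break
            h = h - m                         # one sifting step: remove the local mean
        imfs.append(h)
        residue = residue - h
    imf_array = np.array(imfs) if imfs else np.empty((0, len(residue)))
    return imf_array, residue


def eemd(x, n_trials=50, noise_std=0.2, max_imfs=6, seed=0):
    """EEMD: decompose noise-added copies of x and average the IMFs over the ensemble."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    imf_sum = np.zeros((max_imfs, len(x)))
    res_sum = np.zeros(len(x))
    for _ in range(n_trials):
        noisy = x + noise_std * np.std(x) * rng.standard_normal(len(x))
        imfs, res = emd(noisy, max_imfs=max_imfs)
        imf_sum[: len(imfs)] += imfs          # trials with fewer IMFs contribute zeros
        res_sum += res
    return imf_sum / n_trials, res_sum / n_trials
```

Because each trial reconstructs its own noisy copy exactly (IMFs plus residue), the ensemble-averaged IMFs and residue reconstruct the original signal up to the averaged noise, whose amplitude shrinks roughly as one over the square root of `n_trials`. A production implementation would also need better end-effect handling and a proper sifting stop criterion.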

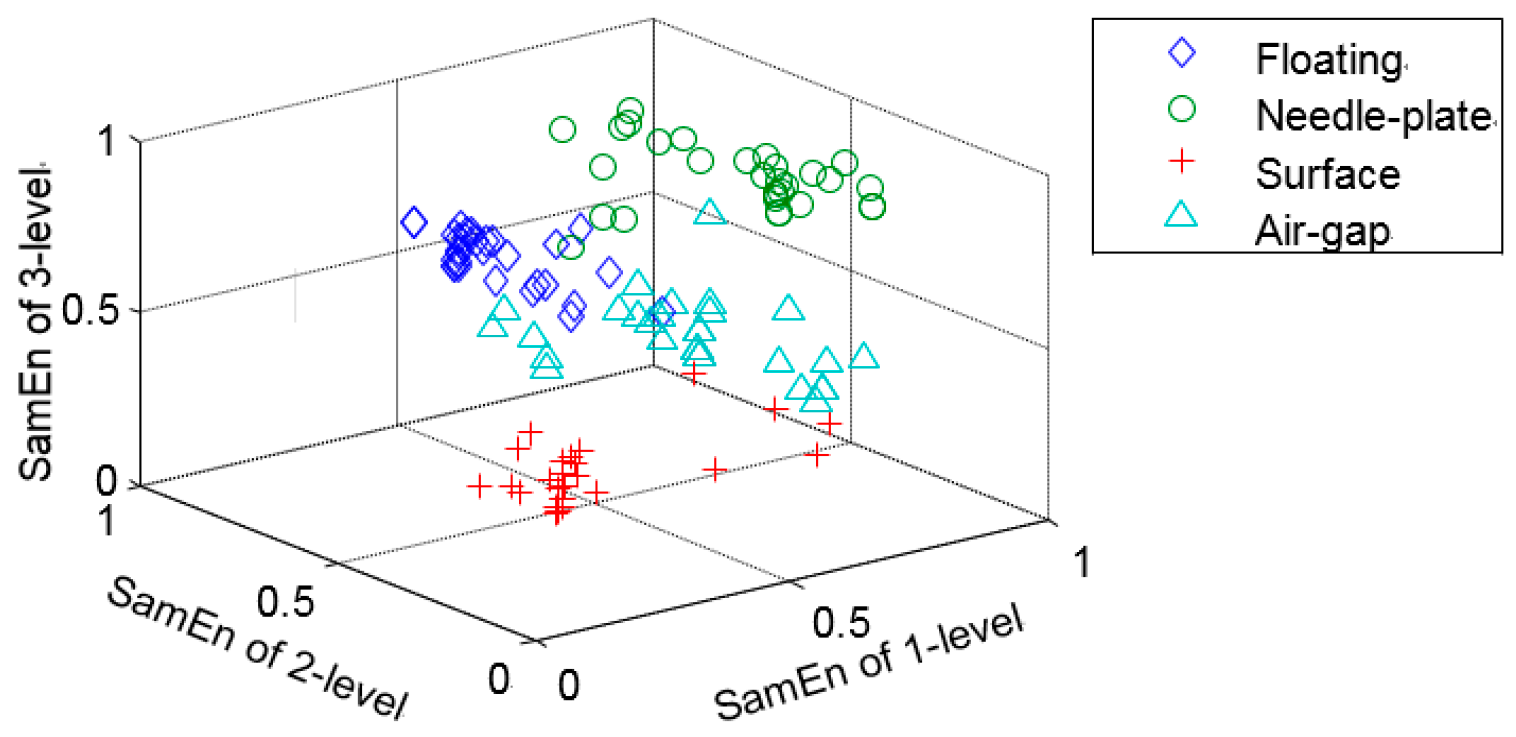

Sample Entropy (SamEn) is the negative natural logarithm of the conditional probability that two subsequences that are similar for m consecutive points remain similar at the next point [17]. A lower SamEn value indicates more self-similarity in a time series. SamEn has many favorable characteristics, such as good robustness to noise interference and closer agreement between theory and data sets with known probabilistic content. Widodo et al. presented intelligent prognostics for battery health based on sample entropy [18] and showed that SamEn features could represent the health condition of a battery. Mei et al. used sample entropy to quantify parameters of four foot types, showing that it can quantify the regularity and complexity of a data series [19]. Because SamEn is relatively insensitive to noise when exploring a time series, it is an effective tool for evaluating complex non-linear time series. Moreover, SamEn maintains relative consistency in situations where approximate entropy does not. These characteristics make SamEn suitable for PD signal analysis. In this study, SamEn is adopted to extract representative features from the IMFs obtained by EEMD.
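The definition above can be made concrete with a minimal SampEn(m, r) sketch following Richman and Moorman's formulation: B counts pairs of length-m templates whose Chebyshev distance is at most r (self-matches excluded), A counts the same for length m + 1, and SampEn = -ln(A/B). The defaults m = 2 and r = 0.2 times the standard deviation are common choices assumed here, not necessarily the settings used in this paper, and `sample_entropy` is a hypothetical helper name. Applied to each IMF, such a function would give one SamEn value per IMF.

```python
import numpy as np


def sample_entropy(x, m=2, r=0.2):
    """SampEn(m, r) = -ln(A / B); r is a fraction of the standard deviation of x."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    tol = r * np.std(x)

    def count_matches(length):
        # The same N - m starting points are used for lengths m and m + 1.
        templates = np.array([x[i:i + length] for i in range(n - m)])
        count = 0
        for i in range(len(templates) - 1):
            # Chebyshev distance to every later template (self-matches excluded).
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            count += int(np.sum(dist <= tol))
        return count

    b = count_matches(m)        # matching pairs of length m
    a = count_matches(m + 1)    # matching pairs of length m + 1
    if a == 0 or b == 0:
        return np.inf           # undefined when no matches are found
    return -np.log(a / b)


rng = np.random.default_rng(1)
regular = np.sin(2 * np.pi * np.arange(1000) / 50)
irregular = rng.standard_normal(1000)
print(sample_entropy(regular), sample_entropy(irregular))
```

The periodic signal yields a much lower value than white noise, consistent with the statement that lower SamEn indicates more self-similarity. This pairwise loop is O(N²), which is acceptable for IMF segments of moderate length.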

In recent years, various pattern recognition approaches have been applied to PD pattern recognition [20,21]. Majidi et al. created seventeen samples in the laboratory for classifying internal, surface, and corona partial discharges [20]. Different PD types were identified with an artificial neural network (ANN) and the sparse method. However, an ANN suffers from slow convergence and a tendency to become trapped in local minima. As a kernel-based learning machine, the Support Vector Machine (SVM) classifier can effectively overcome such problems. In Reference [21], PD-related and noise-related coefficients are identified by SVM, and the performance was evaluated with PD signals measured in air and in solid dielectrics. However, the practical application of SVM is restricted because its kernel functions must satisfy Mercer's condition and its regularization parameters are difficult to choose [22].

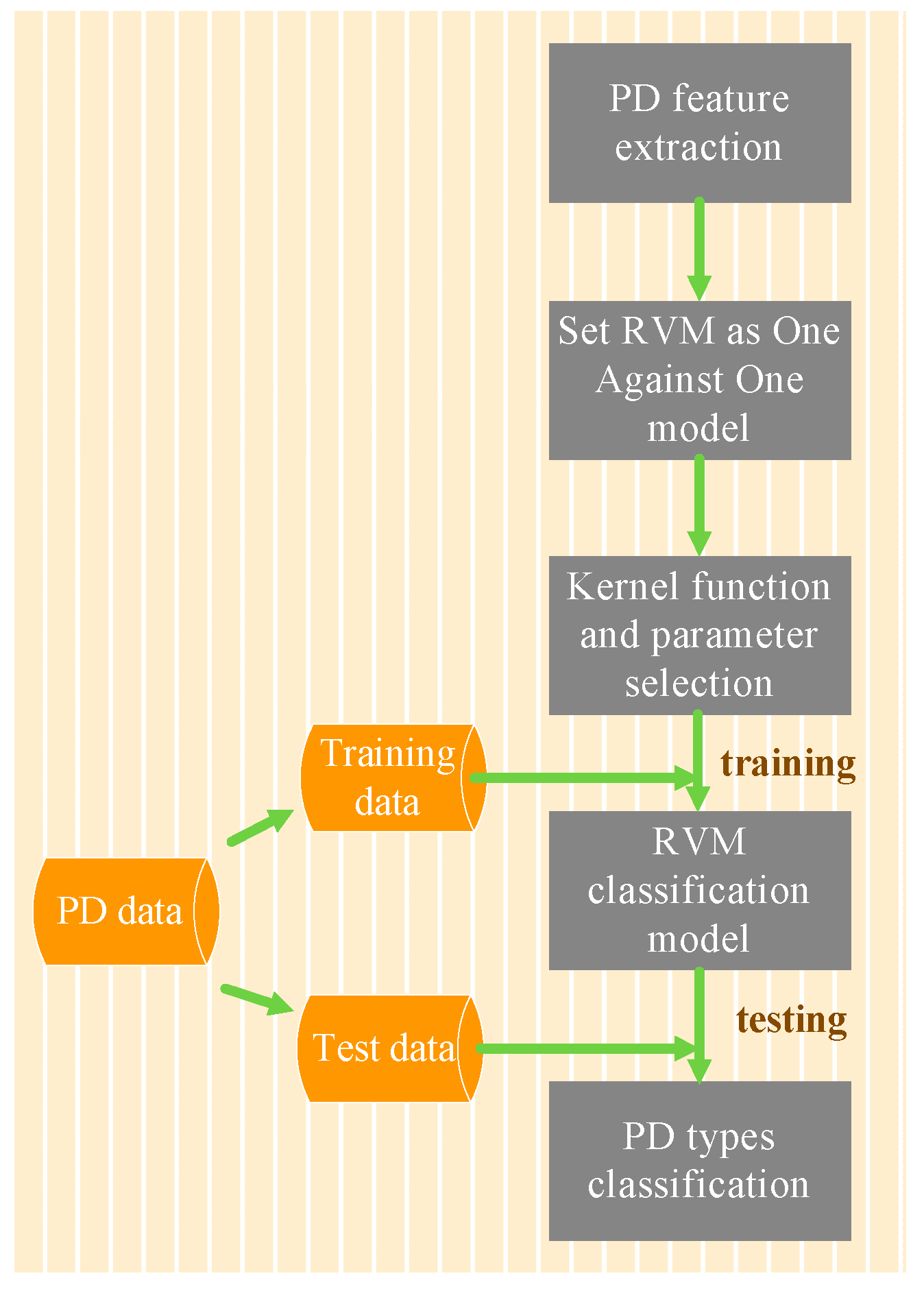

The Relevance Vector Machine (RVM), proposed by Tipping, is a novel kernel-based pattern recognition method [23]. The model is learned within a Bayesian framework, and its kernel functions are not restricted by Mercer's condition. Moreover, the regularization coefficient is adjusted automatically during the estimation of the hyperparameters. As an extension of SVM, RVM has become a research focus in recent years [24,25,26]. Nguyen employed RVM for Kinect gesture recognition and compared it with SVM [24]. The results showed that RVM could achieve state-of-the-art predictive performance and run much faster than SVM. Compared with SVM, RVM needs fewer vectors and avoids both the choice of a regularization coefficient and the restriction of Mercer's condition. Liu et al. proposed an intelligent multi-sensor data fusion method using RVM for gearbox fault detection [25]. Experimental results demonstrated that RVM not only achieves higher detection accuracy but also has better real-time performance. It has been shown in the literature that RVM can be very sensitive to outliers far from the decision boundary between two classes. To solve this problem, Hwang proposed a robust RVM based on a weighting scheme that is insensitive to outliers [26]. Experimental results on synthetic and real data sets verified its effectiveness. In this paper, RVM is used to recognize different PD types from the extracted features, and the resulting recognition accuracy is encouraging.
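To illustrate how the Bayesian framework sets regularization automatically, the following is a minimal sketch of a two-class RVM in the spirit of Tipping's sparse Bayesian classification. It assumes a Gaussian (RBF) kernel, a Laplace approximation of the weight posterior found by Newton (IRLS) iterations, and the re-estimation rule α_i = γ_i / w_i² with γ_i = 1 - α_i Σ_ii; basis functions whose α_i exceeds a large threshold are pruned, and the survivors are the relevance vectors. `BinaryRVM`, `rbf_kernel`, the kernel width, the iteration counts, and the pruning threshold are hypothetical choices, not the implementation used in this paper. Recognizing several PD types would additionally need a multi-class extension such as one-vs-rest, which is not shown.

```python
import numpy as np


def rbf_kernel(X1, X2, gamma=0.5):
    """Gaussian kernel matrix; RVM does not require it to satisfy Mercer's condition."""
    sq = np.sum(X1**2, axis=1)[:, None] + np.sum(X2**2, axis=1)[None, :] - 2 * X1 @ X2.T
    return np.exp(-gamma * sq)


def _sigmoid(a):
    return 1.0 / (1.0 + np.exp(-np.clip(a, -500, 500)))


class BinaryRVM:
    """Minimal two-class RVM sketch (hypothetical helper)."""

    def __init__(self, kernel_gamma=0.5, n_iter=100, alpha_max=1e9):
        self.kernel_gamma, self.n_iter, self.alpha_max = kernel_gamma, n_iter, alpha_max

    def _design(self, X):
        # Bias column followed by one kernel basis function per training sample.
        K = rbf_kernel(np.asarray(X, float), self.X_, self.kernel_gamma)
        return np.hstack([np.ones((len(K), 1)), K])

    @staticmethod
    def _laplace(P, t, w, alpha, n_newton=25):
        """Newton (IRLS) search for the posterior mode, then its Gaussian covariance."""
        for _ in range(n_newton):
            y = _sigmoid(P @ w)
            grad = P.T @ (t - y) - alpha * w
            H = (P.T * (y * (1 - y))) @ P + np.diag(alpha)
            w = w + np.linalg.solve(H, grad)
        y = _sigmoid(P @ w)
        H = (P.T * (y * (1 - y))) @ P + np.diag(alpha)
        return w, np.linalg.inv(H)

    def fit(self, X, t):
        self.X_ = np.asarray(X, float)
        t = np.asarray(t, float)
        Phi = self._design(self.X_)
        keep = np.arange(Phi.shape[1])      # indices of active basis functions
        alpha = np.ones(len(keep))          # one precision hyperparameter per weight
        w = np.zeros(len(keep))
        for _ in range(self.n_iter):
            w, Sigma = self._laplace(Phi[:, keep], t, w, alpha)
            gamma_i = 1.0 - alpha * np.diag(Sigma)  # how well the data determine each weight
            # gamma_i <= 0 only arises from round-off for an already irrelevant weight.
            alpha = np.where(gamma_i > 0, gamma_i / (w**2 + 1e-12), 2 * self.alpha_max)
            active = alpha < self.alpha_max         # huge alpha: weight pinned at zero
            active[np.argmin(alpha)] = True         # always keep at least one basis function
            keep, alpha, w = keep[active], alpha[active], w[active]
        self.w_, _ = self._laplace(Phi[:, keep], t, w, alpha)
        self.keep_ = keep                           # 0 is the bias; j >= 1 is sample j - 1
        return self

    def predict_proba(self, X):
        return _sigmoid(self._design(X)[:, self.keep_] @ self.w_)

    def predict(self, X):
        return (self.predict_proba(X) >= 0.5).astype(int)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.5, 1.0, (40, 2)), rng.normal(1.5, 1.0, (40, 2))])
labels = np.r_[np.zeros(40), np.ones(40)]
model = BinaryRVM().fit(X, labels)
print(len(model.keep_), np.mean(model.predict(X) == labels))
```

No regularization constant is tuned by hand here: each α_i is re-estimated from the data, which is the property the text contrasts with the SVM regularization parameter.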

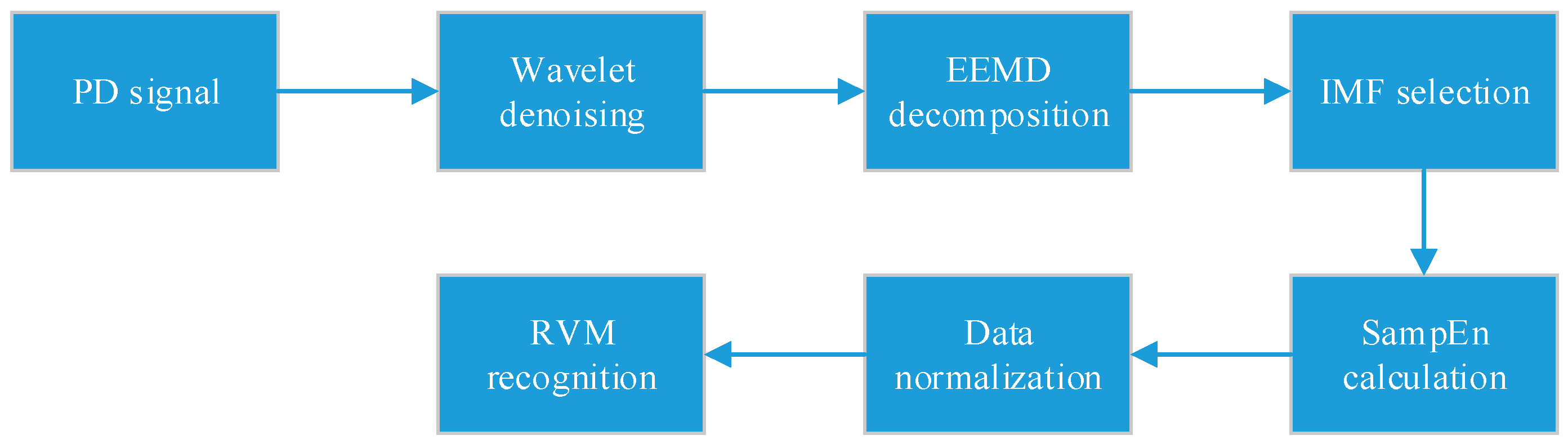

The rest of this paper is organized as follows:

Section 2 introduces the conception of EMD, EEMD, Sample Entropy and RVM, and also presents the feature extraction approach based on EEMD-SamEn.

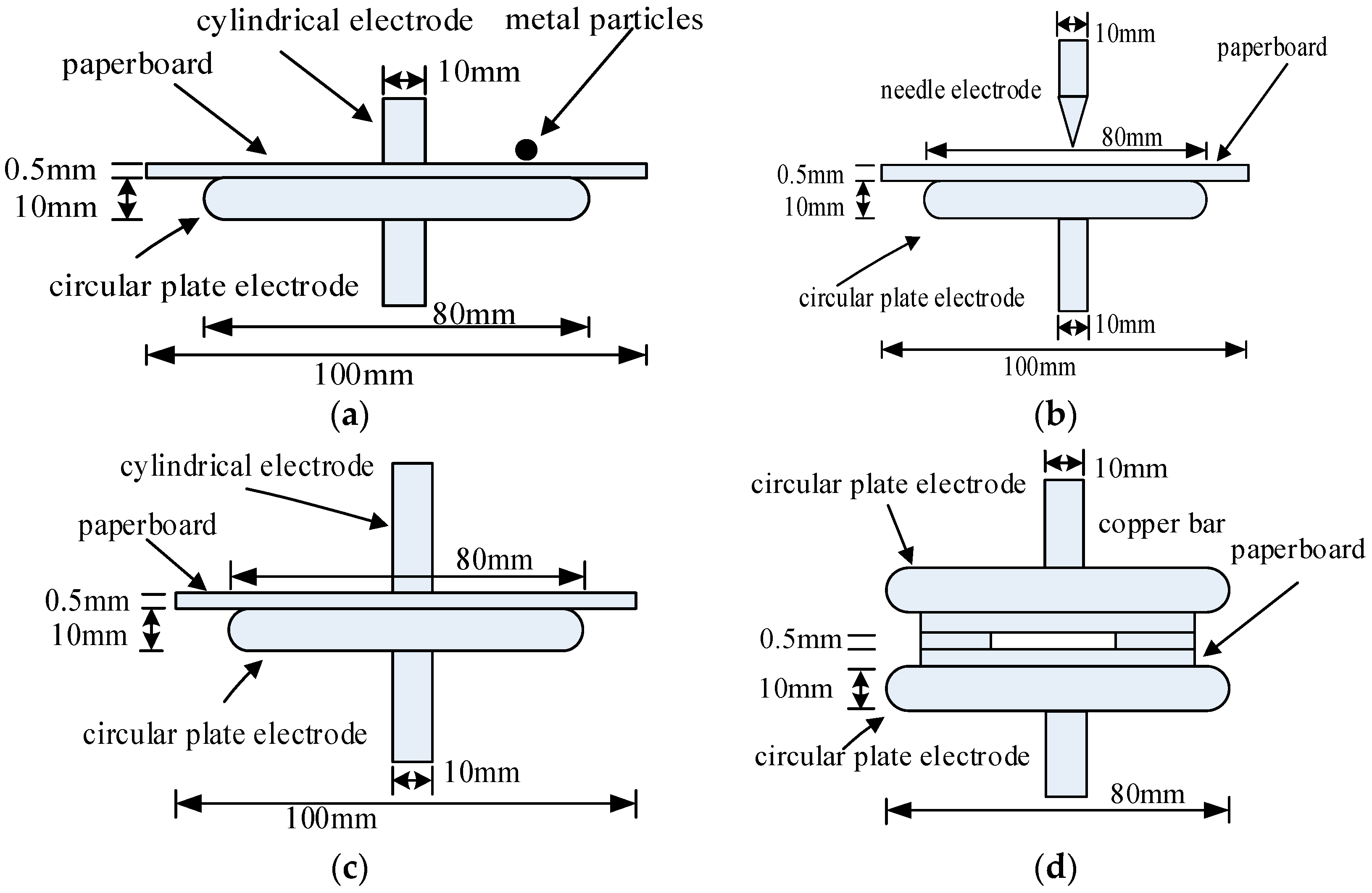

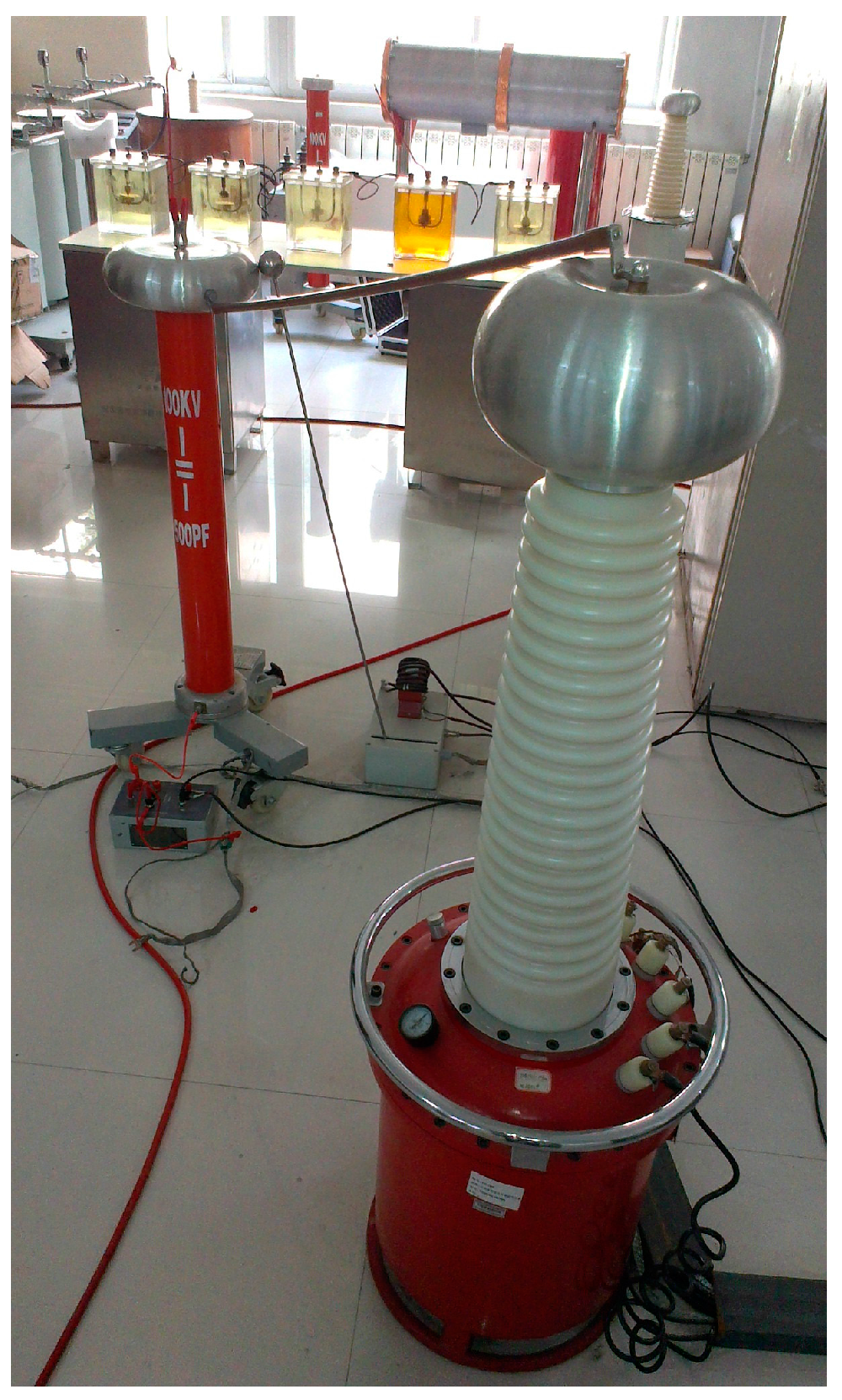

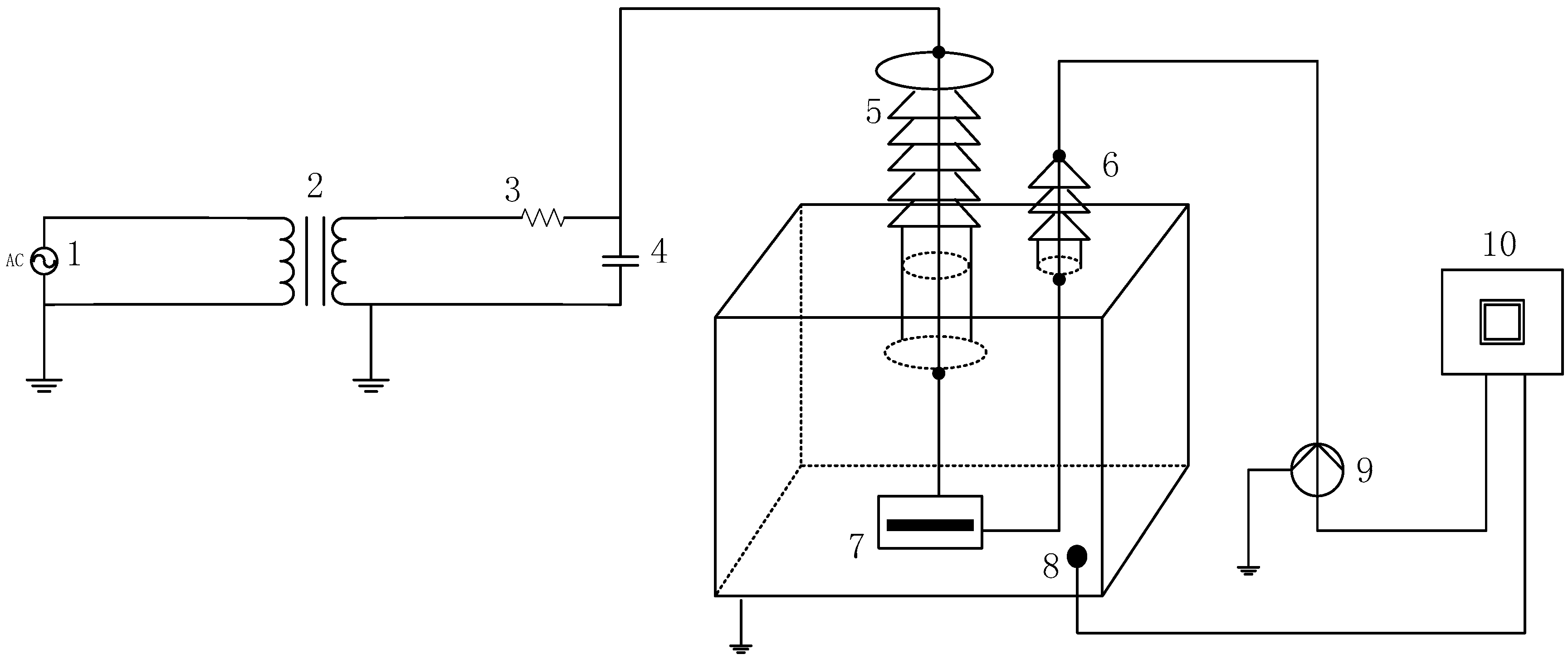

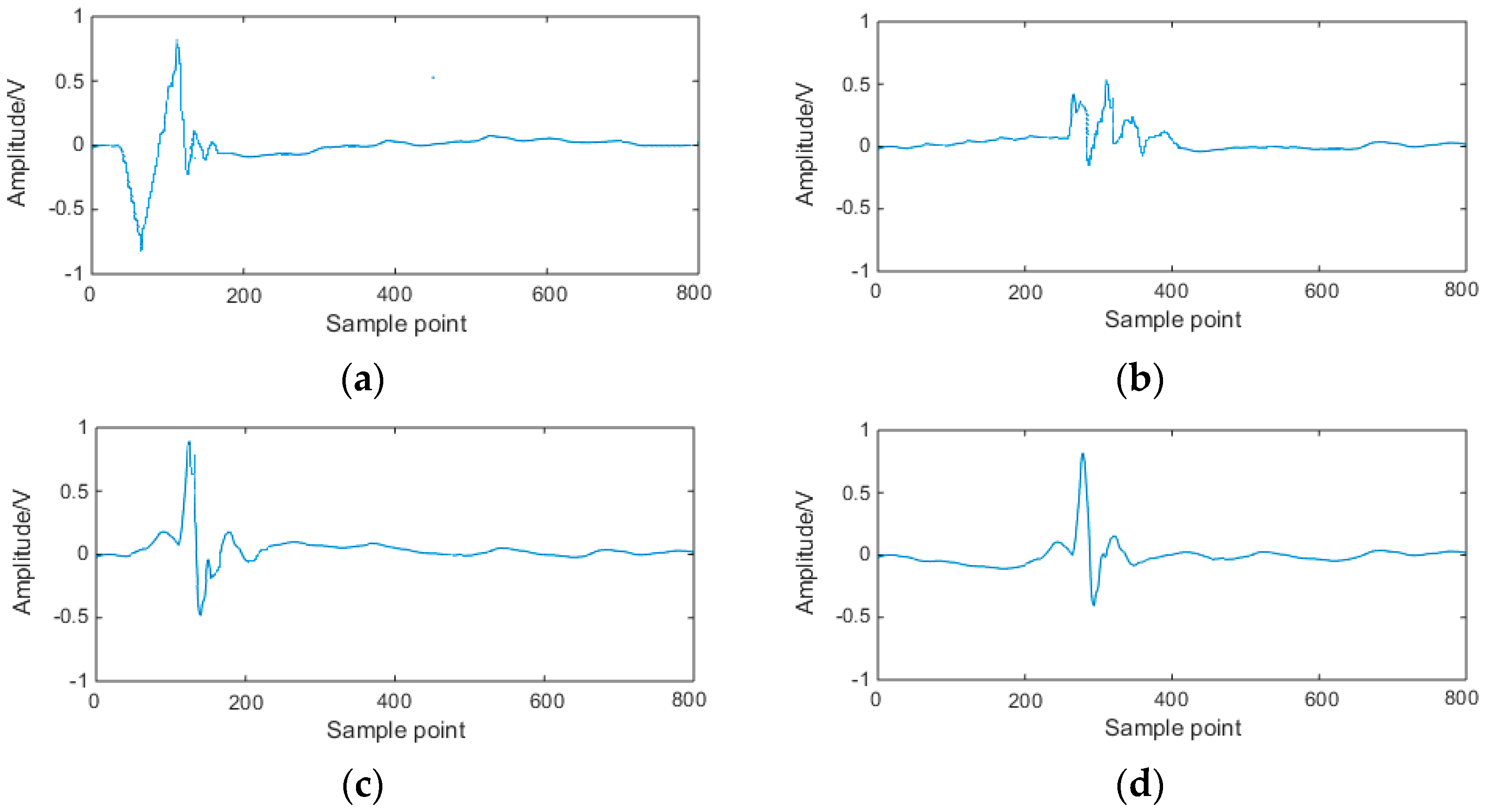

Section 3 describes the PD experiments and calculates the PD parameters.

Section 4 evaluates the performance of the proposed method and compares it with different feature extraction methods. Finally,

Section 5 concludes this paper.

5. Conclusions

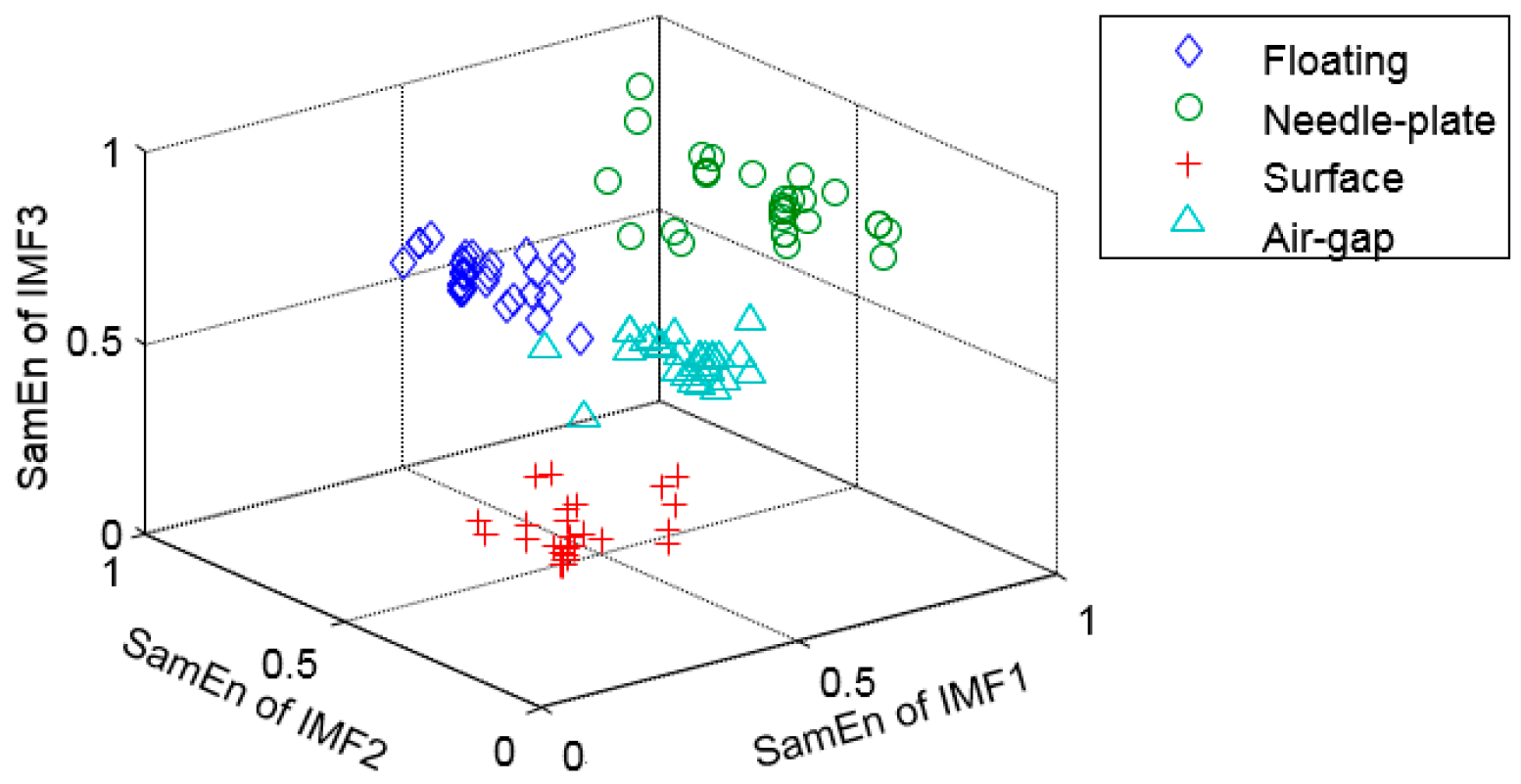

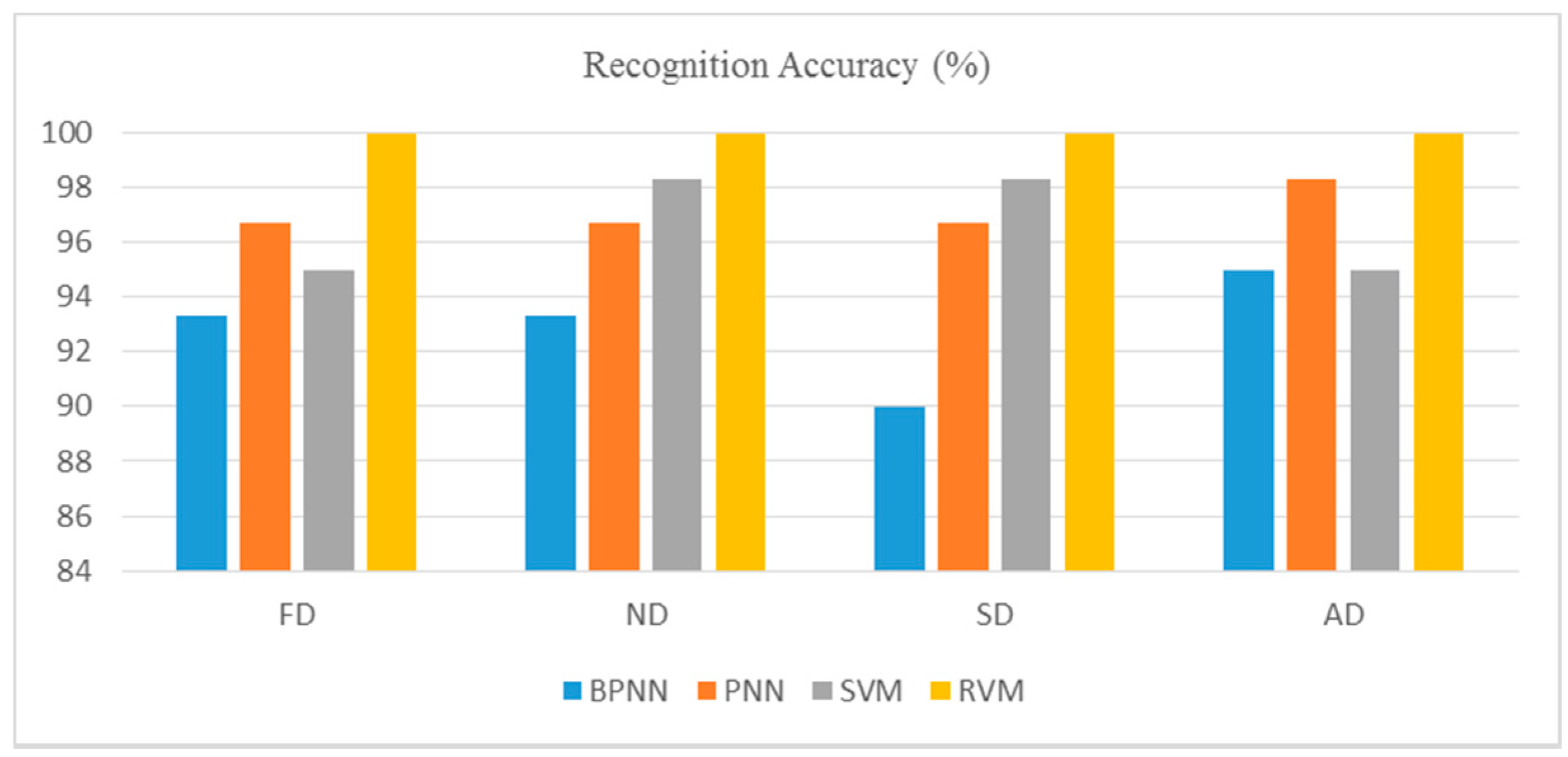

Partial discharge fault recognition plays an important role in the insulation diagnosis of electrical equipment. In this study, Ensemble Empirical Mode Decomposition (EEMD) and Sample Entropy (SamEn) are combined for PD feature extraction. EEMD is employed to decompose PD signals without mode mixing or spurious components. Sample Entropy, which is sensitive to the properties of PD signals, is then calculated from the IMFs of EEMD. The results demonstrate that the proposed feature extraction method, which combines the strengths of EEMD and Sample Entropy, can recognize different PD types effectively. According to the results, the EEMD-SamEn method has a clear advantage over the Waveform Features, Statistical Parameters, W-SamEn and EMD-SamEn methods, as it solves the problems of high-dimensional calculation and signal attenuation in traditional feature extraction methods. Thus, EEMD-SamEn is a practical tool for PD pattern recognition.

In this paper, different classifiers, namely BPNN, PNN, SVM and RVM, are employed for PD type recognition. Owing to its model structure, RVM avoids the choice of a regularization coefficient and the restriction of Mercer's condition. Among these classifiers, RVM demonstrated the best performance, reaching an encouraging average accuracy.

It is worth noting that the PD experiments in this paper address a single PD defect. However, multiple defects commonly occur simultaneously in PD detection; therefore, future work will focus on PD signals from multiple defects. Because different measurement circuits and sensors may produce different PD features, the effectiveness of the proposed method should also be verified with signals acquired under different measurement conditions. Moreover, this work was carried out in a laboratory environment, which differs considerably from a field environment, so the features of on-site PD signals may differ from those of experimental signals. Additionally, in real-world PD condition maintenance, there is often insufficient time and a lack of experts to process the PD data. These are important limitations of this research. In future work, large amounts of field PD data could be collected and analyzed.