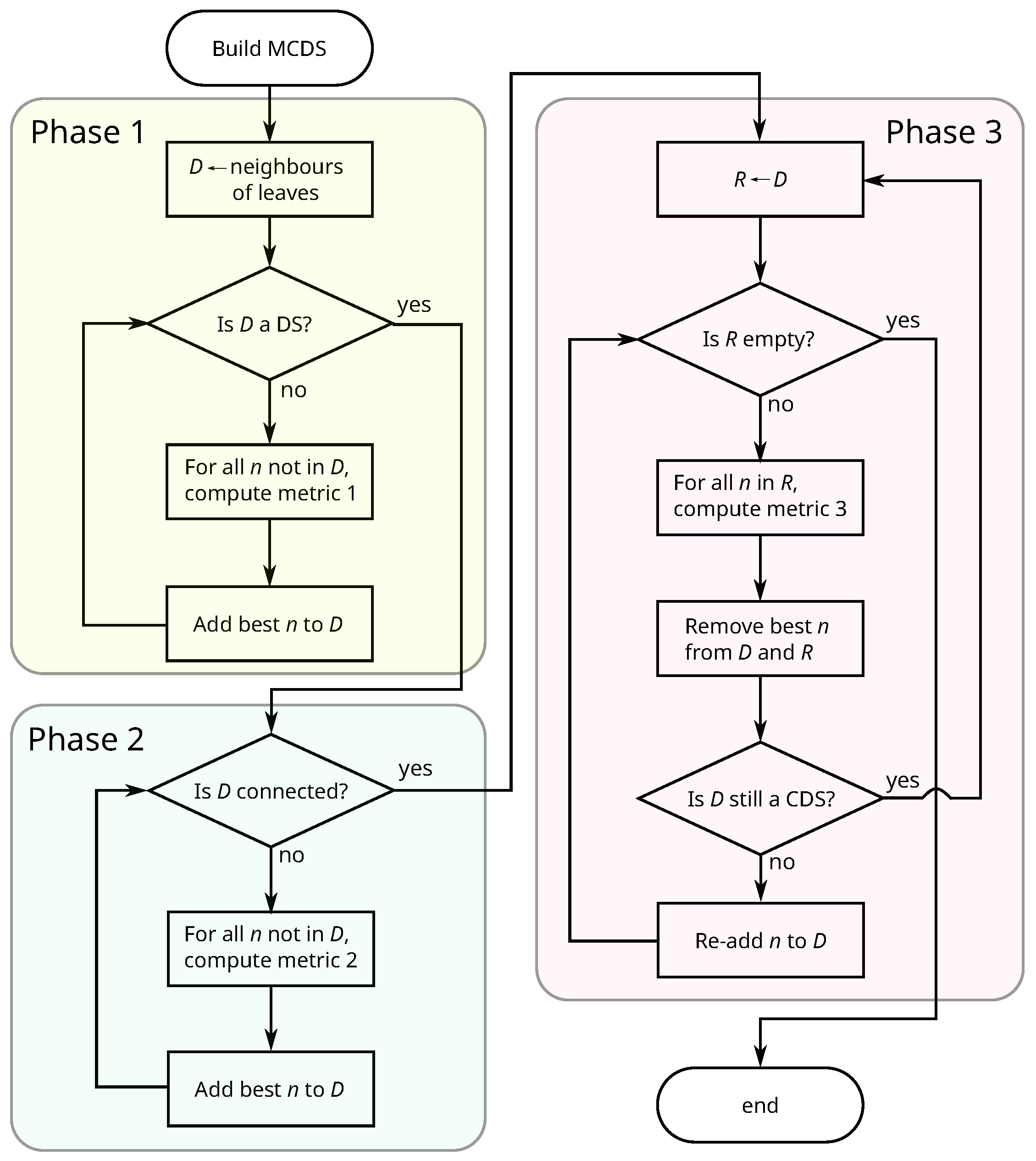

In this section, we present several simulations to verify that the proposed algorithm computes a CDS with a reduced number of vertices that also performs well in terms of maximizing the importance functions.

In the literature, several theoretical graph models have been proposed to construct graphs that display properties frequently observed in empirical graphs (see, for instance, the review in [19]). In particular, we consider the unit disk graph (UDG) [8] and the small-world model [19].

4.1. Unit Disk Graph

We consider an ad hoc wireless network, i.e., a decentralized wireless network that lacks a fixed communication infrastructure, so that the vertices that forward data are selected dynamically according to the current network connectivity. Several researchers have proposed using a CDS as a virtual backbone in these networks, as an alternative to the fixed routing infrastructure of classical wired networks [6,7]. The virtual backbone is the "skeleton" of the entire network: it is used to frequently exchange routing information (traffic conditions, neighbourhood information, etc.) and to broadcast messages across the network.
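To make the two properties required of a virtual backbone concrete, the following minimal sketch checks whether a vertex set is a CDS: every vertex must be in the set or adjacent to it (domination), and the subgraph induced by the set must be connected. The adjacency-dictionary representation and the function name are illustrative assumptions, not the authors' implementation.

```python
from collections import deque


def is_connected_dominating_set(adj: dict[int, set[int]], cds: set[int]) -> bool:
    """Check domination and connectivity of `cds` in the graph given by `adj`."""
    if not cds:
        return False
    # Domination: each vertex outside the set needs at least one neighbour inside it.
    for v, nbrs in adj.items():
        if v not in cds and not (nbrs & cds):
            return False
    # Connectivity: breadth-first search restricted to the induced subgraph G[cds].
    start = next(iter(cds))
    seen = {start}
    queue = deque([start])
    while queue:
        u = queue.popleft()
        for w in adj[u] & cds:
            if w not in seen:
                seen.add(w)
                queue.append(w)
    return seen == cds


# Path graph 0-1-2-3: {1, 2} is a CDS; {0, 3} dominates but is not connected.
path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
print(is_connected_dominating_set(path, {1, 2}))  # True
print(is_connected_dominating_set(path, {0, 3}))  # False
```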

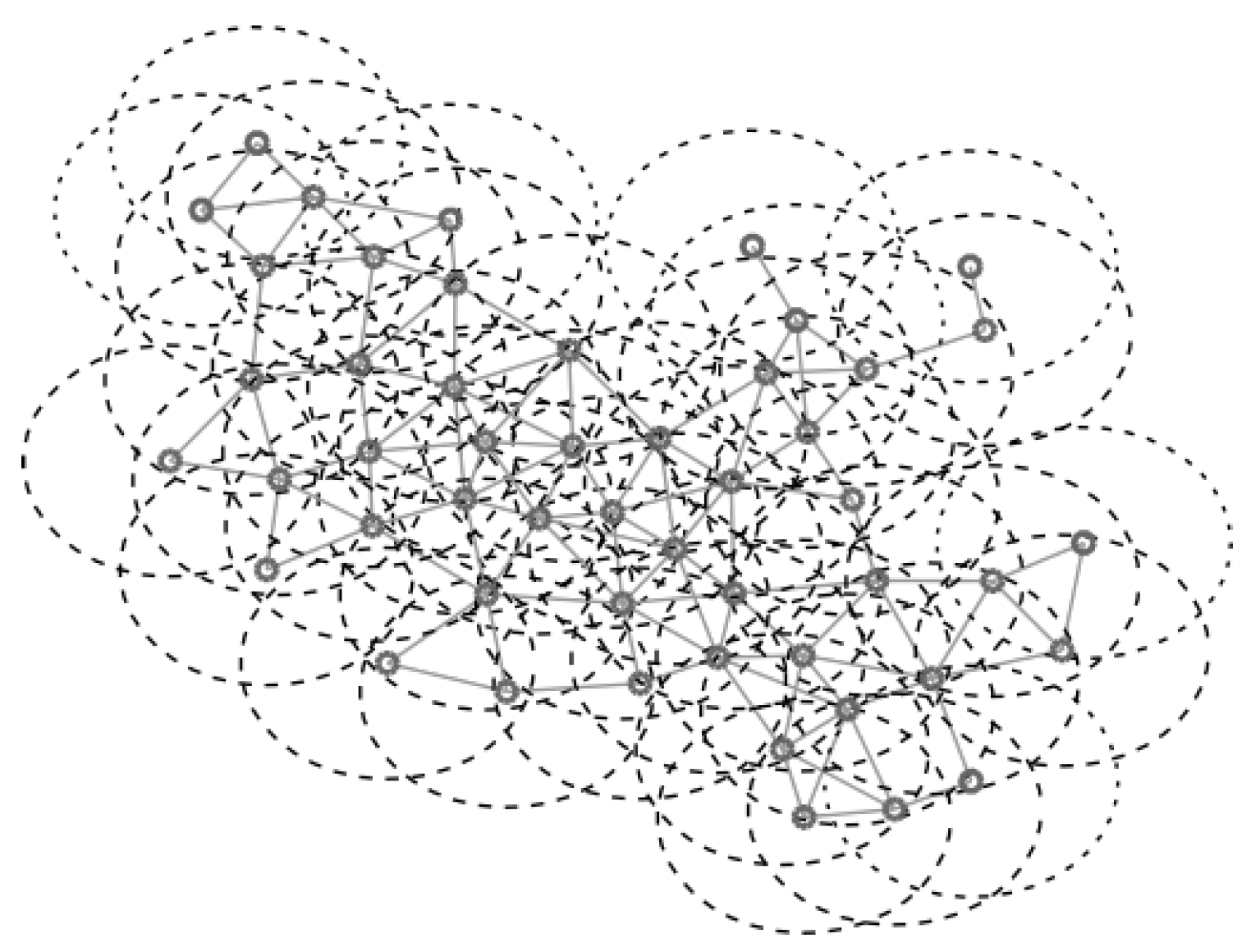

For the UDG model, the network is defined by $G = (V, E)$, where the vertices in $V$ are embedded in the Euclidean plane. We assume that all vertices have the same maximum transmission range, normalized to one unit. There exists an edge $(u, v) \in E$ if $u$ and $v$ are within each other's maximum transmission range, i.e., if their Euclidean distance satisfies $\|u - v\| \le 1$.
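The UDG definition translates directly into a generator. The sketch below assumes, for illustration only, that vertices are placed uniformly at random in a square whose side length (`side`) is a free parameter, since the deployment area used for Figure 2 is not given here; the function name is hypothetical.

```python
import math
import random


def random_unit_disk_graph(n, side, seed=None):
    """Place n vertices uniformly in a side x side square (assumed deployment area)
    and connect every pair whose Euclidean distance is at most 1 (unit range)."""
    rng = random.Random(seed)
    pos = [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n)]
    adj = {v: set() for v in range(n)}
    for u in range(n):
        for v in range(u + 1, n):
            if math.dist(pos[u], pos[v]) <= 1.0:  # within each other's range
                adj[u].add(v)
                adj[v].add(u)
    return pos, adj


pos, adj = random_unit_disk_graph(50, 5.0, seed=1)
print(sum(len(nbrs) for nbrs in adj.values()) // 2, "edges")
```

A random UDG may be disconnected, and a disconnected graph has no CDS, so a simulation built on this sketch would need to handle such realizations (for example, by resampling).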

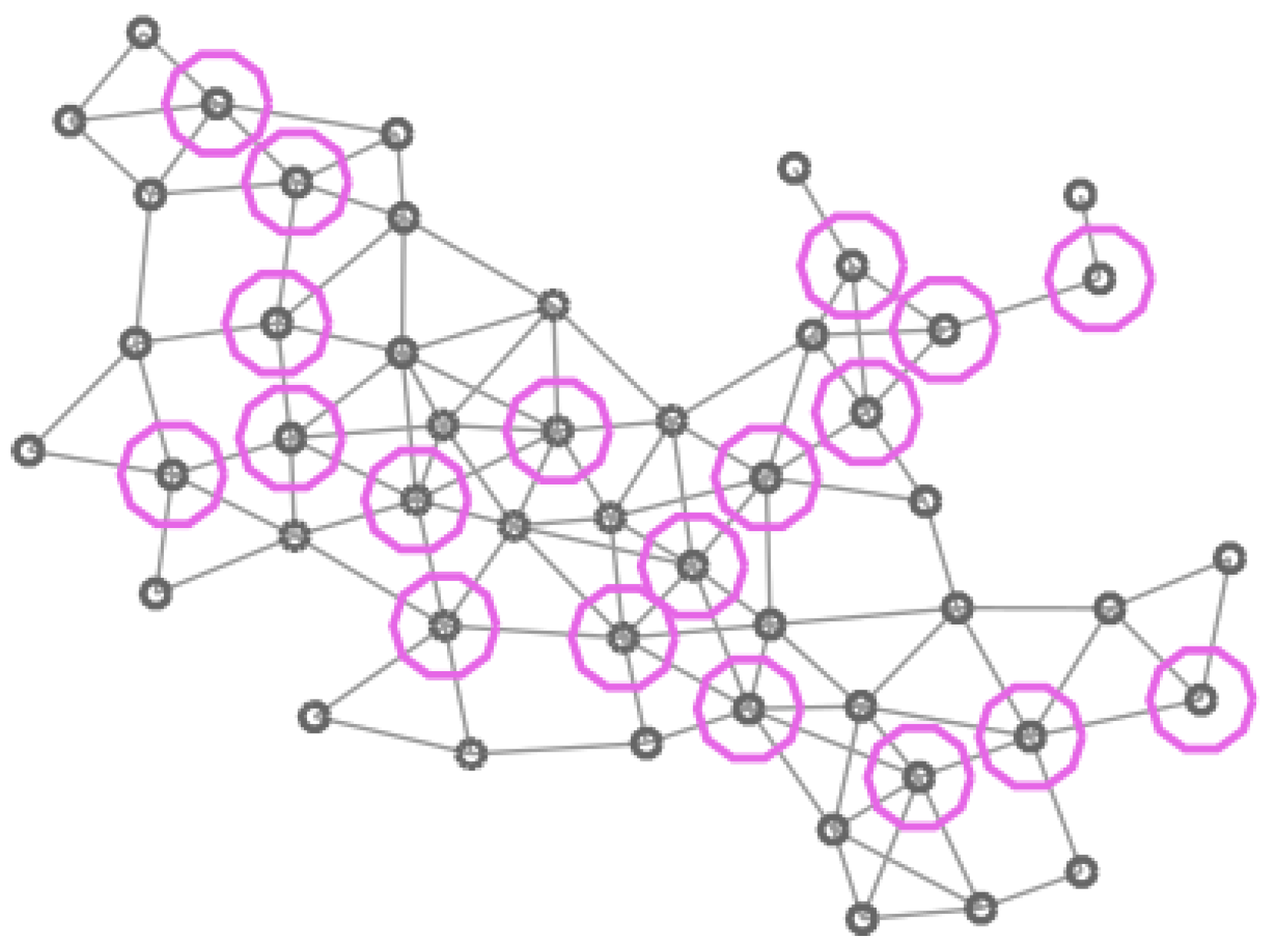

Figure 2 shows an example of a UDG of 50 vertices with the coverage radius of each vertex.

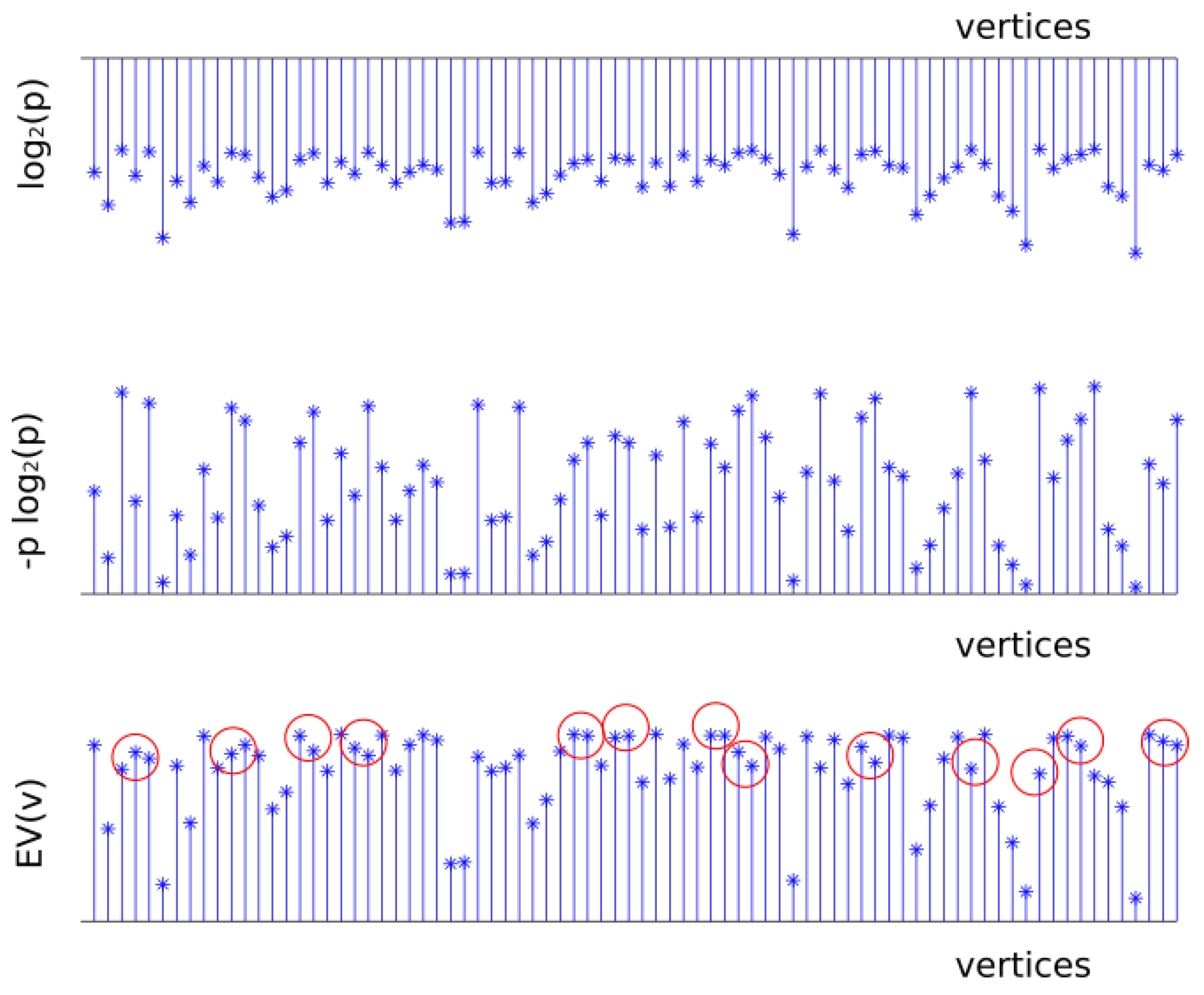

Figure 3 shows the values of the three vertex importance functions, obtained by generating f according to a uniform distribution. Interestingly, two of these functions follow the same trend, whereas the third presents some differences, which are marked in red in the figure.

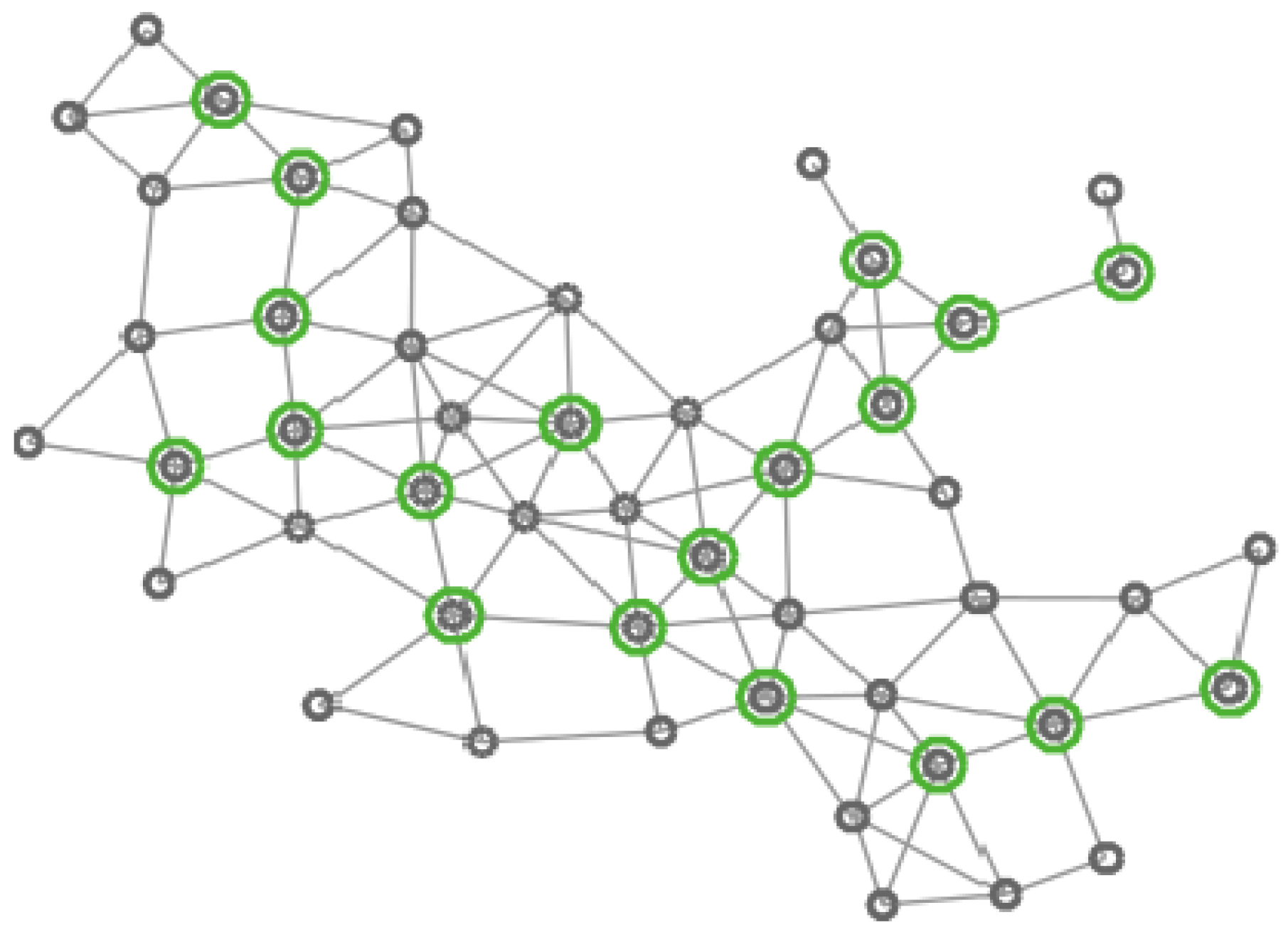

Figures 4–7 show four different CDSs: one computed without any vertex importance metric (called 1-CDS) and three computed with the vertex importance metrics. Note that the four configurations differ, although all of them consist of the same number of vertices (19 of the initial 50).

We have considered graphs with 20, 50, and 80 vertices with randomly generated connections, where the function f of each vertex follows a standard uniform distribution. For each of these sizes, we generated multiple realizations of different graphs. Each of the CDSs described above is computed so that its size is minimal; in the case of the vertex importance metrics, the obtained CDS must also maximize the respective cost function given by Equation (8), (11), or (12). For all these CDSs, we have calculated the maximum value of each importance function among the four CDSs, denoted by b, and the deviation of each CDS with respect to that maximum, given by Equation (15), where r is the value of the importance function obtained by a given CDS.
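The per-realization bookkeeping described above (the best value b among the compared CDSs, the deviation of each CDS, and the percentage of realizations attaining b) can be sketched as follows. Because Equation (15) is not reproduced in this section, the relative deviation |b − r|/|b| used here is a hypothetical stand-in, and the method labels are illustrative.

```python
import math


def relative_deviation(b, r):
    # HYPOTHETICAL stand-in for Equation (15); assumes b != 0.
    return abs(b - r) / abs(b)


def summarize(realizations, best=max):
    """Per-method percentage of realizations attaining the best value, and mean deviation.

    realizations: list of dicts {method_label: value}, one dict per realization.
    best: max for importance functions, min for CDS sizes (as in Table 1).
    """
    methods = list(realizations[0])
    hits = {m: 0 for m in methods}
    dev_sum = {m: 0.0 for m in methods}
    for values in realizations:
        b = best(values.values())  # best value among the compared CDSs
        for m, r in values.items():
            if math.isclose(r, b):
                hits[m] += 1
            dev_sum[m] += relative_deviation(b, r)
    n = len(realizations)
    percent = {m: 100.0 * hits[m] / n for m in methods}
    mean_dev = {m: dev_sum[m] / n for m in methods}
    return percent, mean_dev


# Toy CDS sizes for two realizations (illustrative numbers, not simulation results).
sizes = [{"1-CDS": 19, "eq8-CDS": 19}, {"1-CDS": 20, "eq8-CDS": 19}]
percent, mean_dev = summarize(sizes, best=min)
print(percent)  # {'1-CDS': 50.0, 'eq8-CDS': 100.0}
```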

Table 1 shows the percentage of realizations in which each of the four CDSs achieves the minimum size. These data show that all the CDSs are similar in terms of size. The table also shows the mean deviation obtained by averaging Equation (15) over all realizations, with b being the minimum of the four CDS sizes. This deviation is very small because the difference in size is less than two vertices for all the network sizes. Note also that the CDSs based on Equations (8) and (11) give exactly the same results.

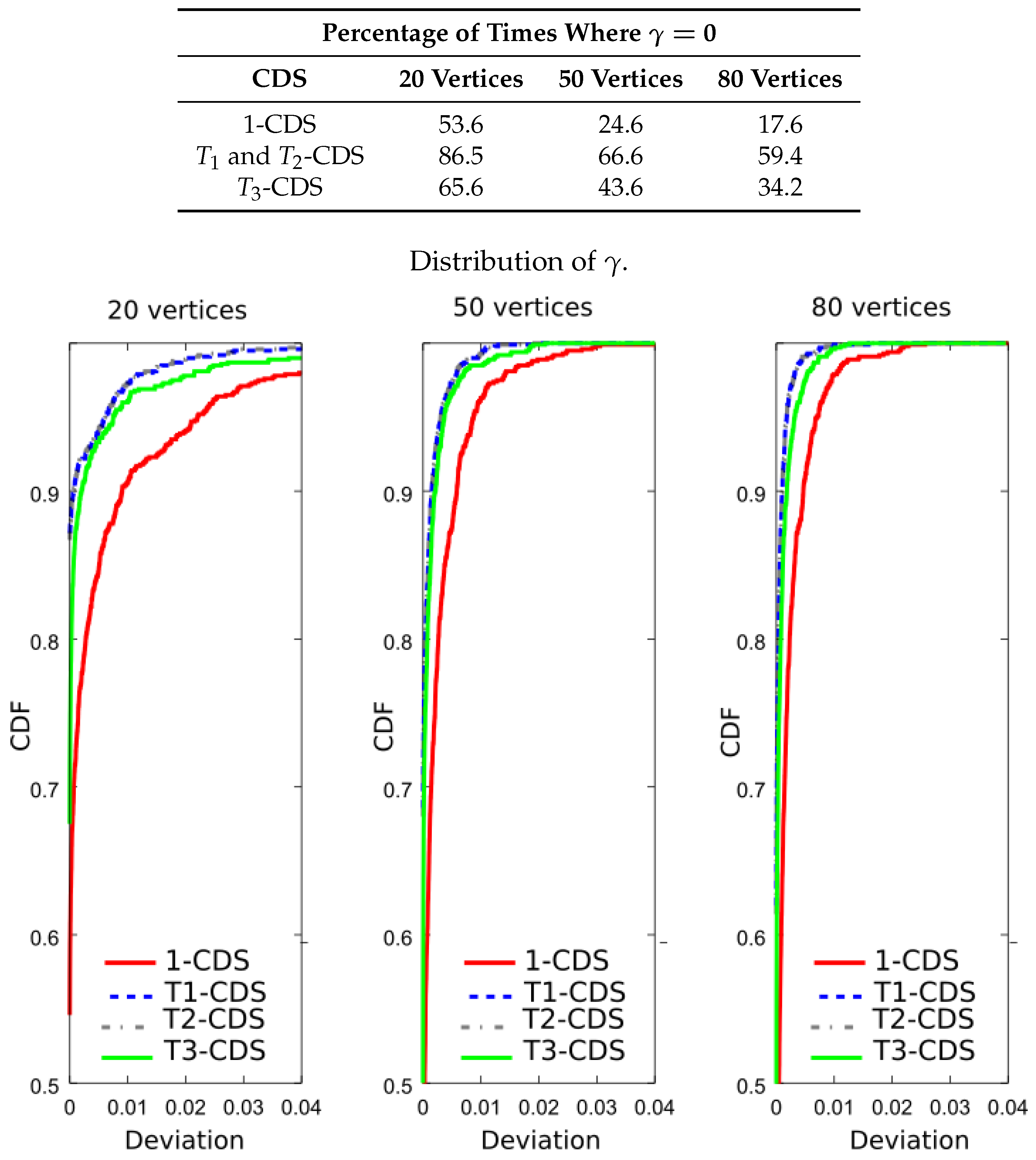

Now, we wish to verify that the CDS based on Equation (8) reduces the error probability. For this purpose, we evaluated the importance function of Equation (8) for the four CDSs. The table included in Figure 8 shows the percentage of realizations in which the maximum value of the importance function is achieved, i.e., $r = b$. We see that the CDSs based on Equations (8) and (11) exhibit the same performance and clearly outperform the other two for 20 vertices; the difference with respect to 1-CDS and the CDS based on Equation (12) is also remarkable for 50 and 80 vertices.

Figure 8 also depicts the cumulative distribution function (CDF) of the deviation given in Equation (15); the curves of the CDSs based on Equations (8) and (11) coincide and are represented by a single line. We observe that the differences between the methods decrease as the graph size increases. Note that 1-CDS performs poorly, since it achieves the maximum value of the importance function less often than the other methods.
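An empirical CDF such as the one in Figure 8 can be computed from the per-realization deviations with a sorted array and binary search. As a minimal sketch (function name hypothetical): if the deviation is zero exactly when $r = b$, the CDF evaluated at zero equals the fraction of realizations that attain the maximum, which links the curves to the percentages in the table.

```python
from bisect import bisect_right


def empirical_cdf(samples):
    """Return F with F(x) = fraction of samples that are <= x."""
    data = sorted(samples)
    n = len(data)

    def cdf(x):
        # bisect_right counts how many sorted samples are <= x
        return bisect_right(data, x) / n

    return cdf


deviations = [0.0, 0.0, 0.1, 0.3]  # illustrative values, not simulation results
F = empirical_cdf(deviations)
print(F(0.0))  # 0.5: half of the realizations attain the maximum
print(F(0.2))  # 0.75
```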

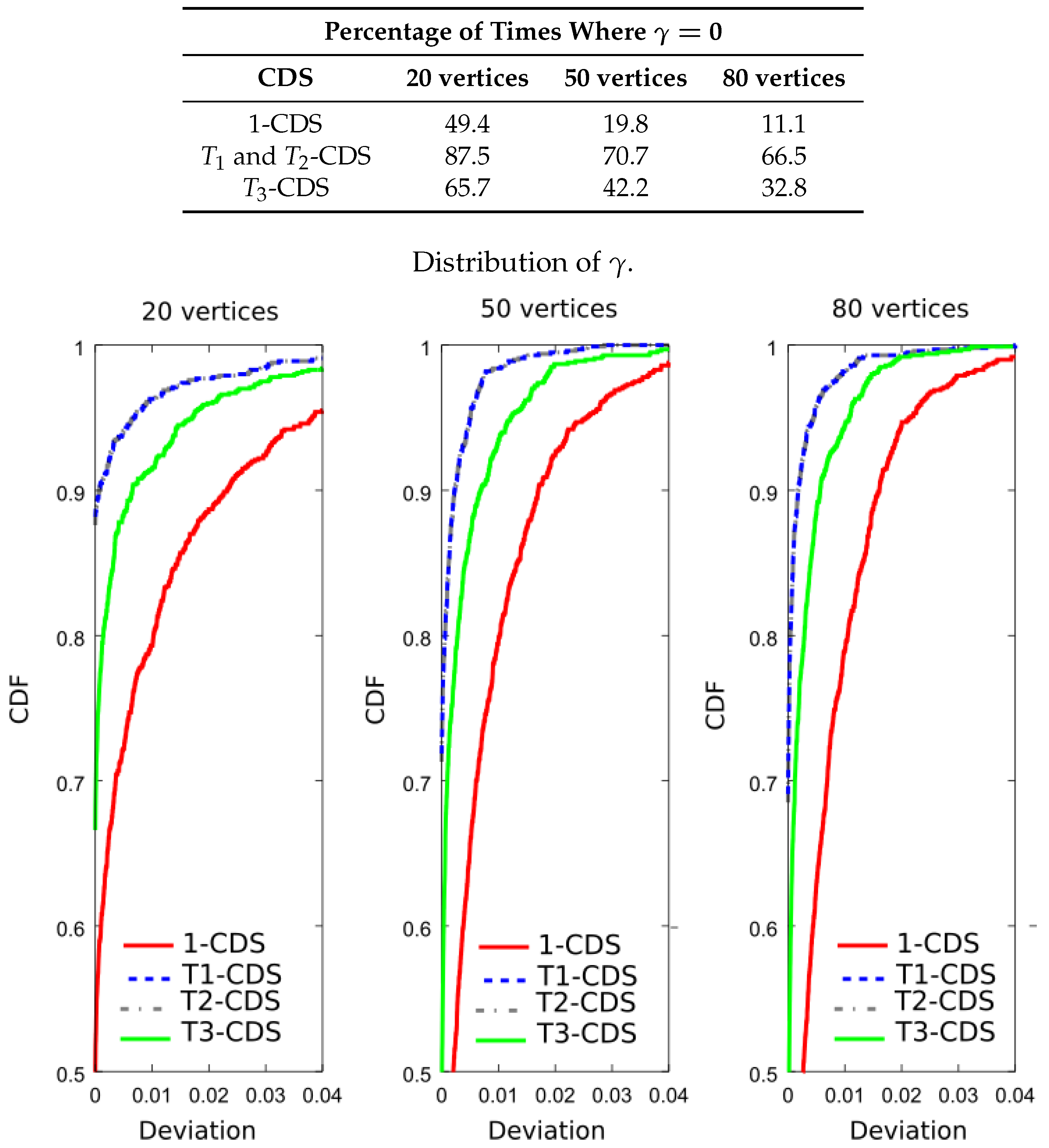

Next, we compared the entropy of the computed CDSs. For that purpose, we evaluated Equation (11) to calculate the percentage of realizations in which each CDS achieves the maximum value of the importance function. The table included in Figure 9 shows these results. The CDSs based on Equations (8) and (11) clearly achieve the best performance, with a considerable gap with respect to the other algorithms. The CDF in Figure 9 leads to the same observation: these two CDSs attain the maximum with high probability regardless of the network size, while the other methods present a considerable deviation. Again, 1-CDS provides the worst results.

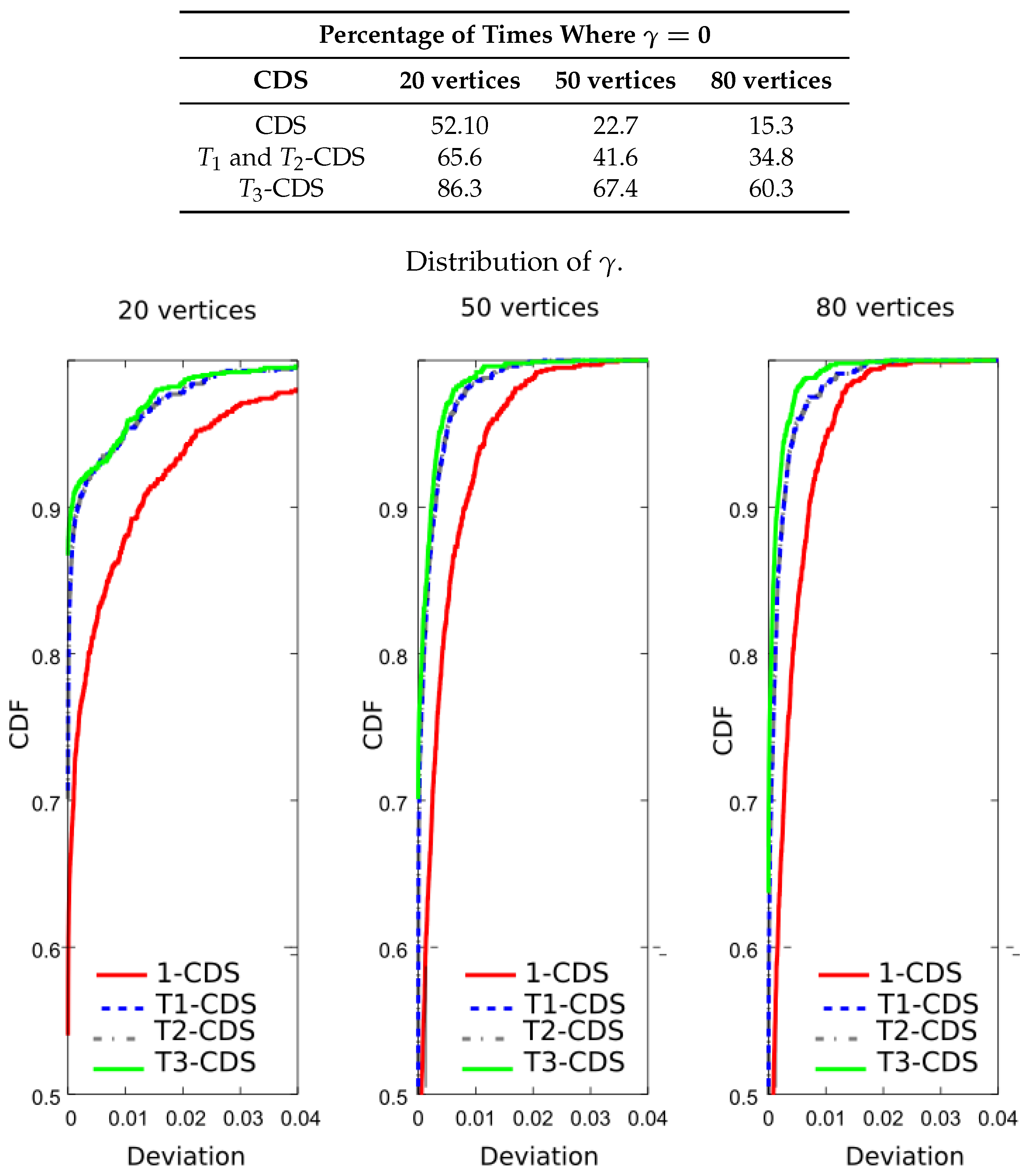

Finally, we compared the CDSs in terms of the sum of the entropy variation, evaluating Equation (12) for the vertices of each obtained CDS. Figure 10 shows that the CDS based on Equation (12) achieves the best performance in terms of the percentages explained above, although the differences in the CDF with respect to the CDSs based on Equations (8) and (11) are negligible.

Therefore, the proposed algorithms work correctly, in the sense that each maximizes its cost function using a reduced number of vertices. The metrics defined in Equations (8) and (11) show the same performance, while the entropy-variation metric of Equation (12) presents differences, which we analyse below. 1-CDS provides the worst results for all the defined metrics, since its algorithm only considers the vertex degree.
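To illustrate what a construction driven only by vertex degree can look like, the following is a minimal sketch of a generic greedy heuristic, loosely in the spirit of Guha and Khuller's tree-growing approach: it starts from a maximum-degree vertex and repeatedly adds the neighbour of the current set that dominates the most new vertices, breaking ties by degree. It is not the authors' 1-CDS algorithm, it ignores vertex importance, and it assumes a connected graph; the function name is hypothetical.

```python
def greedy_degree_cds(adj):
    """Grow a connected dominating set greedily; assumes `adj` is connected and non-empty."""
    start = max(adj, key=lambda v: len(adj[v]))  # highest-degree seed vertex
    cds = {start}
    dominated = {start} | adj[start]
    while len(dominated) < len(adj):
        # Candidates are dominated vertices outside the set; each is adjacent to
        # the set, so adding one keeps the set connected.
        candidates = [v for v in dominated if v not in cds]
        # Pick the candidate covering the most undominated vertices, then by degree.
        best = max(candidates, key=lambda v: (len(adj[v] - dominated), len(adj[v])))
        cds.add(best)
        dominated |= adj[best]
    return cds


path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}
print(sorted(greedy_degree_cds(path)))  # [1, 2, 3]
print(sorted(greedy_degree_cds(star)))  # [0]
```

In a connected graph, some candidate always dominates at least one new vertex, so the loop terminates.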

4.2. Small-World Model

The small-world model was introduced in [22]. According to this model, a set of N vertices is organized into a regular ring lattice in which each vertex is directly connected to a mean number of K nearest neighbours. Then, the target vertex of each edge in the graph is rewired with a given probability; when this probability equals one, the small-world graph becomes a random graph. In our simulations, we generated multiple realizations of graphs with N = 20, 50, and 80 vertices and a mean number of 10, 25, and 40 connections per vertex, respectively. The function f follows a uniform distribution.
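The construction described above corresponds to the standard Watts–Strogatz procedure, sketched below under our own assumptions: the parameter names `n`, `k`, and `p` and the function name are hypothetical, and `k` must be even because each vertex is linked to `k/2` neighbours on each side. An odd mean degree, such as 25 for N = 50, would require a variant that this sketch does not cover.

```python
import random


def watts_strogatz(n, k, p, seed=None):
    """Ring lattice of n vertices, each linked to its k nearest neighbours (k even);
    the target of each lattice edge (u, u + j) is then rewired with probability p."""
    if k % 2 or not 0 < k < n:
        raise ValueError("k must be even and 0 < k < n")
    rng = random.Random(seed)
    adj = {v: set() for v in range(n)}
    for u in range(n):
        for j in range(1, k // 2 + 1):
            v = (u + j) % n
            adj[u].add(v)
            adj[v].add(u)
    for j in range(1, k // 2 + 1):
        for u in range(n):
            v = (u + j) % n
            if rng.random() < p:
                # New target avoids self-loops and duplicate edges.
                choices = [w for w in range(n) if w != u and w not in adj[u]]
                if choices:
                    w = rng.choice(choices)
                    adj[u].discard(v)
                    adj[v].discard(u)
                    adj[u].add(w)
                    adj[w].add(u)
    return adj


adj = watts_strogatz(20, 10, 0.2, seed=1)
print(sum(len(nbrs) for nbrs in adj.values()) / 20)  # mean degree stays 10.0
```

Each rewiring removes one edge and adds one new edge, so the mean degree equals `k` for any `p`.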

Table 2 shows the percentage of realizations in which each CDS achieved the minimum size. As in the UDG case, there is no remarkable difference between the four CDSs. However, the deviation with respect to the optimum value is considerably higher than for the UDG. This means that, when a CDS does not achieve the best size, its size differs from the optimum by more than two vertices.

We evaluated the deviation of Equation (15) to verify the correct behavior of the proposed algorithm. Tables 3–5 show the percentage of realizations in which the maximum value of the importance functions of Equations (8), (11), and (12), respectively, is achieved. Each T-CDS gives the best result for its corresponding importance metric, and, in general, the results are similar to those obtained with the UDG.

4.3. Importance Function Comparison

In order to compare the three importance functions, we will consider a graph formed by

N nodes, denoted by

, with

, where

.

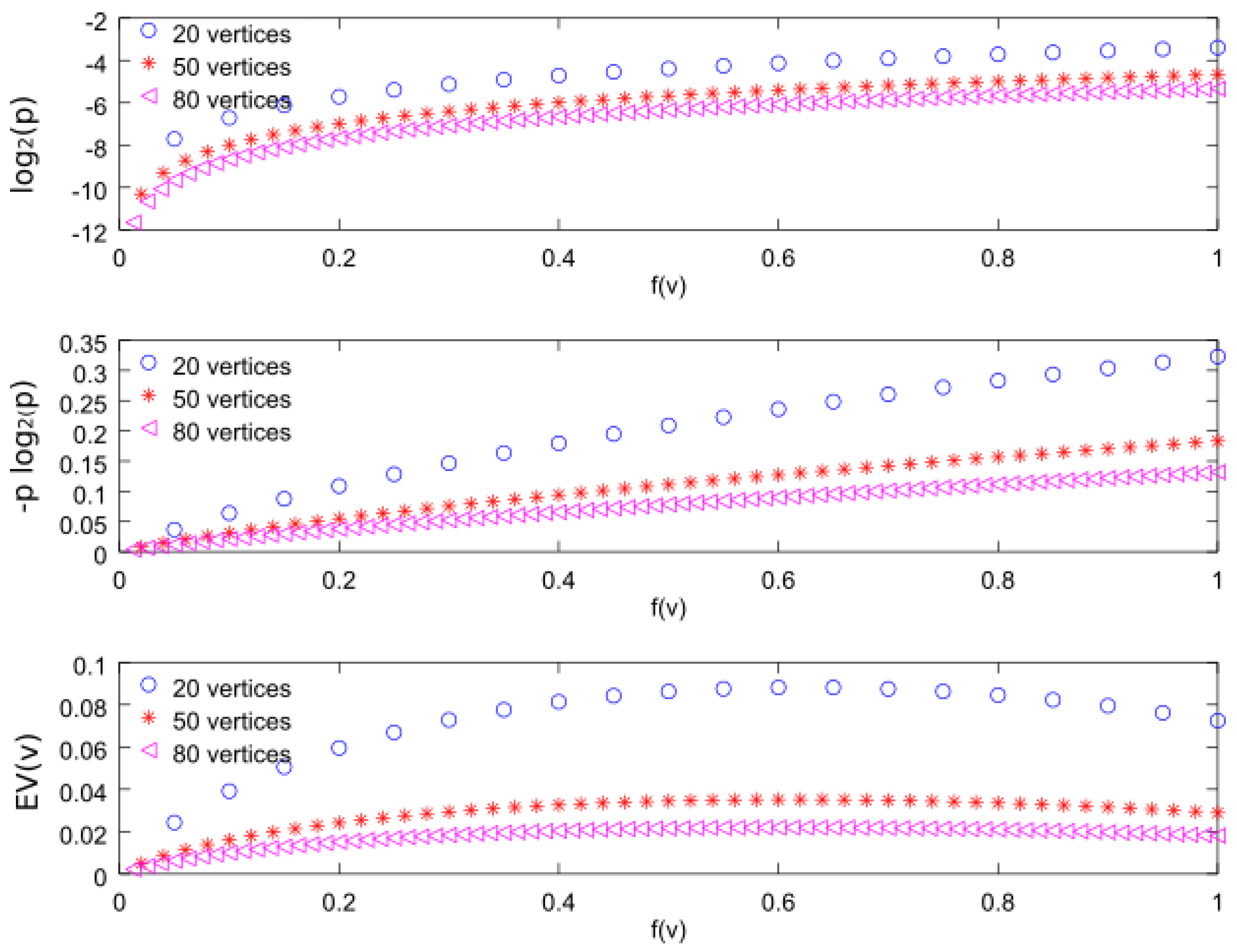

Figure 11 shows the three importance functions for N = 20, 50, and 80. From the top panel, we observe that the importance function of Equation (8) increases with f. In fact, for our discrete distribution, we can find its analytical expression.

As Figure 11 shows, the curves converge for a large number of vertices.

The second importance function represented in the figure is that of Equation (11), which allows us to maximize the entropy. Its analytical expression can also be obtained for our discrete distribution.

Note that one of the terms of this expression has an important influence on the curve values, as can be seen in Figure 11, but again the curves converge for a large number of vertices. The importance function of Equation (11) increases with f, as does that of Equation (8), and, for this reason, the CDSs based on these two functions give the same results in the simulations presented above.

Finally, the bottom panel represents the third importance function considered in this work, i.e., that of Equation (12). We observe that, similarly to the first two functions, it increases with f; however, it reaches a maximum, and for higher

f it decreases with a smaller slope. By evaluating Equation (

2) for

, we can express this importance function as follows:

where

is constant for a given graph. As can be seen in

Figure 11, we can directly observe that the maximum values are close to

regardless of the vertex number.

Using simulations we confirmed that the value

obtained for

is also valid for random samples of a uniform distribution. For that, we generated

samples of a uniform distribution and computed

using Equation (

12). We found that the maximum values for

, 50, and 80 vertices are, respectively,

,

, and

.

Therefore, we can conclude that the importance function of Equation (12) behaves in a similar way to the other two only in the range of f where it is increasing. For this reason, the CDS based on Equation (12) does not maximize the error probability or the entropy.