1. Introduction

The classification and localization of reflectors is a fundamental task in mobile robotics, since this information contributes decisively to higher-level tasks such as the generation of environment maps and robot localization. For reflector classification, the most widely used techniques are based on geometric considerations obtained from the times of flight (TOFs) for every reflector type [1,2]. An important drawback of systems based on geometric considerations is their strong dependence on the precision with which the TOFs are measured; consequently, the classification results are strongly influenced by noise.

Principal Component Analysis (PCA) has been used for dimensionality reduction and object recognition in several works related to image processing [3–6]. A PCA-based classification and localization technique for 3-D reflectors using an ultrasonic sensor is discussed in [7], where it is applied to 18 TOF values obtained from a sensor containing two emitter/receiver transducers and 12 receivers (see Figure 1). The pulses emitted by E0/R0 are processed by E0/R0 itself and by transducers E1/R1, R2, R3, R4, R5, R6, R7, and R8. The pulses emitted by E1/R1 are processed by transducers E1/R1, E0/R0, R5, R7, R9, R10, R11, R12, and R13. In [7–11], the simultaneous emission of complementary sequences by two or more emitters is proposed to reduce the number of transducers.

PCA is applicable only to data in vectorized form. Therefore, the data obtained from a matrix sensor must first be converted to a vector. A typical way to do this is the so-called “matrix-to-vector alignment”, which consists of concatenating all the rows of the matrix to obtain a single vector.
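As a small illustration of this alignment, the following sketch vectorizes a 3 × 3 array row by row and measures how far apart two neighbouring receivers end up. The receiver numbering used here is hypothetical and does not reproduce the layout of Figure 1.

```python
import numpy as np

# Hypothetical 3 x 3 sensor: entry (i, j) is the label of the receiver
# located at row i, column j (labels 0..8, not the layout of Figure 1).
rows, cols = 3, 3
sensor = np.arange(rows * cols).reshape(rows, cols)

# "Matrix-to-vector alignment": concatenate the rows into a single vector.
vector = sensor.ravel()  # row-major order

# Position of every receiver label inside the vector.
position = {int(label): k for k, label in enumerate(vector)}

def vector_gap(a, b):
    """Number of positions separating receivers a and b after vectorization."""
    return abs(position[a] - position[b])

# Receivers 0 and 1 share a row; receivers 1 and 4 share a column.
# Both pairs are physical neighbours in the matrix.
horizontal_gap = vector_gap(0, 1)
vertical_gap = vector_gap(1, 4)
print("gap for horizontal neighbours:", horizontal_gap)
print("gap for vertical neighbours:", vertical_gap)
```

Horizontal neighbours stay adjacent in the vector, whereas vertical neighbours end up a full row length apart, which is the loss of spatial information that motivates keeping the data in matrix form.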

Figure 1 shows two matrix sensors of nine transducers each. The TOFs t0_2, t0_6, t0_3, t0_8, t0_0, t0_5, t0_4, t0_7, t0_1, obtained from reception of the sequence emitted by E0/R0, and the TOFs t1_0, t1_5, t1_7, t1_9, t1_10, t1_11, t1_12, t1_13, obtained from reception of the sequence emitted by E1/R1, are aligned to form a single vector (1). Receivers R2 and R8 are neighbours in the sensor, yet they are far apart in the vectorized representation. The same holds for positions R0 and R6, etc. The vector alignment therefore loses the spatial information. Moreover, the original 3 × 3 matrices are converted to an 18 × 18 scatter matrix in PCA, which leads to higher time and memory costs.

In [8], the sensor structure is formed by four transducers that can simultaneously obtain 16 TOF values in every scanning process. These 16 TOF values are aligned in a vector that is used in the PCA-based classification algorithm.

In [12], the generalized PCA (GPCA) algorithm is used for image compression. The GPCA algorithm deals with the data in its original matrix representation and considers the projection onto a space that is the tensor product of two vector spaces. In this paper, we use GPCA for the recognition and localization of ultrasound reflectors, with the aim of overcoming the drawbacks of traditional PCA. We use a matrix of 16 transducers (four of the centre transducers act as emitters/receivers and the other 12 as receivers). Thus, we can obtain up to four 4 × 4 matrices, each of which is used in an independent classification procedure. The results of the four classification processes are merged to give the final result.

The rest of this paper is organized as follows. Section 2 illustrates the proposed sensor structure. Section 3 describes the GPCA algorithm. Experimental results are presented in Section 4. Finally, conclusions are offered in Section 5.

3. GPCA Algorithm

In this paper, the usage of GPCA is proposed to carry out the reflector classification using the measurements of 16, 32 and 64 TOFs provided by the ultrasonic sensors. This method maintains the spatial distribution of sensor data, which is represented by the TOFs in a matrix format (2):

In (2), t1, …, t16 are the TOFs associated with each receiver and τe ∈ ℝ^(r×c) is the TOF matrix obtained for each emitter (r and c are the number of rows and columns of the sensor, respectively). Therefore, in our case we can obtain up to four matrices, one for each of the emitters E1, E2, E3 and E4.

In GPCA we compute an optimal (l1, l2)-dimensional space, such that the projections of the data points (after subtraction of the mean) onto this axis system have the maximum variance among all possible (l1, l2)-dimensional axis systems. Unlike PCA, the projections of the data points onto the (l1, l2)-dimensional axis system in GPCA are matrices instead of vectors.

Let S = {τ0, τ1, τ2, …, τn−1} be a training set of n samples of the TOF matrix. The mean TOF matrix of the set is defined by:

Matrices with mean zero are represented as:

Then the variance of the projections of Φi onto the (l1, l2)-dimensional axis system is defined as:

where ‖·‖F is the Frobenius norm, and L ∈ ℝ^(r×l1) and R ∈ ℝ^(c×l2) are two matrices with orthonormal columns, chosen such that the variance var(L, R) is maximum. The maximum of (5) cannot be found in closed form, and thus an iterative approach is needed:

♦ For a given R, the optimal matrix L consists of the l1 eigenvectors of the matrix ML that correspond to the largest l1 eigenvalues, where:

♦ In the same way, given L, the matrix R consists of the l2 eigenvectors of the matrix MR that correspond to the largest l2 eigenvalues, where:

In the experiments carried out we used l1 = l2 = 2 (L ∈ ℝ^(4×2) and R ∈ ℝ^(4×2)) and l1 = l2 = 1 (L ∈ ℝ^(4×1) and R ∈ ℝ^(4×1)).

To calculate the matrices L and R that maximize Equation (5), one of them must initially be fixed. Fixing L, we can calculate R by computing the eigenvectors of the matrix MR; then, with the calculated R, we can update L by computing the eigenvectors of the matrix ML. The procedure is repeated until the result converges. The solution depends on the initial choice of L (L0). As recommended in [12], we use L0 = (I, 0)^T, where I is the identity matrix. To measure the convergence of the GPCA procedure we use the root mean square error (ζ), defined as follows:

In the experiments carried out, for ζ = 10^−8 the procedure converges within five iterations.
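The alternating procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used for the reported results. It assumes the forms usually given for generalized PCA, namely ML = Σi Φi R Rᵀ Φiᵀ and MR = Σi Φiᵀ L Lᵀ Φi, a root mean square reconstruction error over the training set, and a stopping rule based on the change of that error between iterations. The function names `gpca_fit` and `top_eigenvectors` are hypothetical.

```python
import numpy as np

def top_eigenvectors(M, k):
    """Eigenvectors of the symmetric matrix M for its k largest eigenvalues."""
    _, vecs = np.linalg.eigh(M)          # eigh returns eigenvalues in ascending order
    return vecs[:, ::-1][:, :k]

def gpca_fit(taus, l1, l2, tol=1e-8, max_iter=100):
    """Sketch of the alternating GPCA procedure (assumed forms, see lead-in).

    taus: array of shape (n, r, c) holding the n training TOF matrices.
    Returns (mean, L, R, rmse, iterations).
    """
    taus = np.asarray(taus, dtype=float)
    n, r, c = taus.shape
    mean = taus.mean(axis=0)             # mean TOF matrix
    phis = taus - mean                   # zero-mean matrices
    L = np.eye(r, l1)                    # initial choice L0 = (I, 0)^T
    previous = np.inf
    for iteration in range(1, max_iter + 1):
        # Assumed M_R = sum_i Phi_i^T L L^T Phi_i, then update R.
        LLt = L @ L.T
        M_R = sum(p.T @ LLt @ p for p in phis)
        R = top_eigenvectors(M_R, l2)
        # Assumed M_L = sum_i Phi_i R R^T Phi_i^T, then update L.
        RRt = R @ R.T
        M_L = sum(p @ RRt @ p.T for p in phis)
        L = top_eigenvectors(M_L, l1)
        # Root mean square reconstruction error over the training set.
        recon = L @ (L.T @ phis @ R) @ R.T
        rmse = np.sqrt(np.sum((phis - recon) ** 2) / n)
        # Assumed stopping rule: the error no longer changes by more than tol.
        if abs(previous - rmse) <= tol:
            break
        previous = rmse
    return mean, L, R, rmse, iteration

# Usage with synthetic 4 x 4 matrices standing in for TOF data.
rng = np.random.default_rng(0)
demo_taus = rng.normal(size=(20, 4, 4))
demo_mean, demo_L, demo_R, demo_rmse, demo_iters = gpca_fit(demo_taus, l1=2, l2=2)
print(demo_L.shape, demo_R.shape, demo_iters)
```

Using `numpy.linalg.eigh` rather than a general eigensolver relies on ML and MR being symmetric, which holds for the assumed forms.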

Once the transformation matrices L and R are determined, and given a new TOF matrix τi to be classified, its zero-mean version Φi is transformed into the feature space as:

Then we can reconstruct Φi as:

The reconstruction error (εR) for Φ̂i can be computed as:
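The three steps above (projection, reconstruction and reconstruction error) can be illustrated as follows. The sketch assumes the usual two-sided forms, a feature matrix Lᵀ Φi R, a reconstruction L (Lᵀ Φi R) Rᵀ, and the Frobenius norm of the difference as the error. The helper names are hypothetical, and the orthonormal matrices in the usage lines are random stand-ins for trained ones.

```python
import numpy as np

def gpca_project(tau, mean, L, R):
    """Feature matrix of a TOF matrix: assumed form L^T (tau - mean) R."""
    return L.T @ (tau - mean) @ R

def gpca_reconstruct(features, L, R):
    """Reconstruction of the zero-mean matrix: assumed form L D R^T."""
    return L @ features @ R.T

def reconstruction_error(tau, mean, L, R):
    """Frobenius norm of the difference between Phi and its reconstruction."""
    phi = tau - mean
    phi_hat = gpca_reconstruct(gpca_project(tau, mean, L, R), L, R)
    return float(np.linalg.norm(phi - phi_hat, ord="fro"))

# Usage with stand-in data: orthonormal columns obtained from a QR factorization.
rng = np.random.default_rng(0)
L_demo, _ = np.linalg.qr(rng.normal(size=(4, 2)))   # 4 x 2, orthonormal columns
R_demo, _ = np.linalg.qr(rng.normal(size=(4, 2)))   # 4 x 2, orthonormal columns
mean_demo = np.zeros((4, 4))
tau_demo = rng.normal(size=(4, 4))
features_demo = gpca_project(tau_demo, mean_demo, L_demo, R_demo)
print(features_demo.shape, reconstruction_error(tau_demo, mean_demo, L_demo, R_demo))
```

Note that the feature is an l1 × l2 matrix, so with l1 = l2 = 2 a 4 × 4 TOF matrix is summarized by four numbers.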

3.1. Offline Generation of the Classes

The objective of this work is to classify the reflector as one of three types (plane, corner, or edge) and to estimate its approximate direction (azimuth angle γ, elevation angle θ) and distance (r) with respect to the frontal space of the sensor. Before beginning the classification process, it is necessary to create different classes, depending on the reflector type and its spatial location. Every class has two transformation matrices L, R associated with it. These matrices are referred to as LP and RP for the plane, LC and RC for the corner, and LE and RE for the edge.

As stated in [7,8], we assume that the frontal space of the sensor structure is formed by Q directions defined by (γq, θq), with q ∈ {1, 2, …, Q}. Along every direction q, there are D discrete distances referred to as rd, with d ∈ {1, 2, …, D}. To generate the transformation matrices associated with every direction class and every reflector type, the reflectors {P, C, E} are located at every direction q and at all D distances, obtaining the TOF vectors. In GPCA, the transformation matrices associated with every direction and every reflector type (LP, RP, LC, RC, LE and RE for each direction q) are generated in the same way, with the reflectors {P, C, E} located at every direction q and at all D distances.

When more than one transducer is emitting, we use one TOF matrix for each emitter (E1, E2, E3 and E4). Therefore, it is necessary to compute the transformation matrices associated with every direction and every reflector type (LP, RP, LC, RC, LE and RE) for each emitter.

3.2. Classification and Position Estimation of the Reflector

The strategy proposed in this paper to carry out the online classification process is to first classify the type and approximate direction in which the reflector is located and then to estimate its distance with respect to the frontal space of the sensor.

To classify the type and approximate direction of the reflector, we calculate the squared reconstruction error (ε), using the transformation matrices associated with every direction q and every reflector type:

The reflector is classified as a plane, an edge or a corner according to which reflector type yields the minimum reconstruction error. The value of q that corresponds to the minimum value of εq determines the approximate direction of the reflector. When more than one emitter is used, we determine the minimum reconstruction error in each direction for each emitter and add all these minimum values. The minimum values must be taken at the same angle for all emitters; otherwise the reflector will not be classified correctly. For four emitters that is:
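As an illustration of this decision rule, the sketch below adds the squared reconstruction errors of all emitters for the same reflector type and direction, and then selects the pair with the smallest sum. This is one reading of the rule stated above, and the data layout (a dictionary keyed by reflector type and direction index, holding one `(mean, L, R)` triple per emitter) is a hypothetical choice made for the example.

```python
import numpy as np

def squared_error(tau, model):
    """Squared reconstruction error of one TOF matrix for one (mean, L, R) model."""
    mean, L, R = model
    phi = tau - mean
    phi_hat = L @ (L.T @ phi @ R) @ R.T
    return float(np.sum((phi - phi_hat) ** 2))

def classify(taus_by_emitter, models):
    """Return ((reflector_type, q), summed_error) with the smallest summed error.

    taus_by_emitter: list with one TOF matrix per emitter.
    models: dict mapping (reflector_type, q) to a list of (mean, L, R),
            one entry per emitter, in the same order as taus_by_emitter.
    """
    best_key, best_error = None, np.inf
    for key, per_emitter in models.items():
        # Errors are added across emitters for the SAME type and direction.
        total = sum(squared_error(tau, model)
                    for tau, model in zip(taus_by_emitter, per_emitter))
        if total < best_error:
            best_key, best_error = key, total
    return best_key, best_error

# Toy usage: two classes, two emitters, one-dimensional subspaces.
e = np.eye(4)
zero = np.zeros((4, 4))
toy_models = {
    ("P", 0): [(zero, e[:, [0]], e[:, [0]])] * 2,   # plane, direction 0
    ("C", 0): [(zero, e[:, [1]], e[:, [1]])] * 2,   # corner, direction 0
}
toy_tau = 3.0 * np.outer(e[:, 0], e[:, 0])          # lies in the "P" subspace
toy_result = classify([toy_tau, toy_tau], toy_models)
print(toy_result)
```

Because each emitter's error is computed independently before the sum, the per-emitter terms could be evaluated on separate processors.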

Once the type of reflector and the direction in which it is positioned are known, the approximate distance with respect to the sensor structure can be determined. To do so, the Frobenius norm in the transformed space between the feature vector of τe, for the object to be classified, and every feature vector of the training samples of the class to which this reflector belongs is calculated. For example, if the object was classified as a plane in the direction q, the TOF set used offline to generate the transformation matrices is the one obtained with the plane located at the D distances along direction q. Therefore, it is only necessary to compute the Frobenius norm in the transformed space between the feature vector corresponding to τe and the feature vectors corresponding to these training samples, as shown in:

, d = 1, 2,…, D.

The value of d that provides the minimum εd gives the approximate reflector distance in the direction q.

It has been shown empirically that the relationship between the distances of the reflectors to the sensor structure and the Frobenius norm of their feature vectors in the transformed space is approximately linear. In this way, considering the distance interval in which the reflector lies and the Frobenius norm in the transformed space, a correct estimate of the distance at which the reflector is positioned can be obtained by means of linear interpolation.
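The distance step can be sketched as a nearest-sample search in the feature space followed by an interpolation between the two closest training distances. The interpolation weights below, based on the two Frobenius distances, are an assumption made for illustration, since the text does not spell out the exact formula. The function name `estimate_distance` and the synthetic TOF model in the usage lines are likewise hypothetical.

```python
import numpy as np

def estimate_distance(tau, mean, L, R, train_taus, train_dists):
    """Return (nearest training distance, interpolated distance).

    train_taus: training TOF matrices of the selected class, ordered by distance.
    train_dists: the corresponding distances r_d, in increasing order.
    """
    feature = L.T @ (tau - mean) @ R
    train_features = [L.T @ (t - mean) @ R for t in train_taus]
    # eps_d: Frobenius norm between the new feature and each training feature.
    eps = np.array([np.linalg.norm(feature - f, ord="fro") for f in train_features])
    d = int(np.argmin(eps))
    nearest = float(train_dists[d])
    # Assumed interpolation: weight the closer adjacent sample by the two errors.
    neighbours = [k for k in (d - 1, d + 1) if 0 <= k < len(eps)]
    if not neighbours or eps[d] == 0.0:
        return nearest, nearest
    k = min(neighbours, key=lambda j: eps[j])
    weight = eps[d] / (eps[d] + eps[k])
    return nearest, float(train_dists[d] + weight * (train_dists[k] - train_dists[d]))

# Usage with a synthetic model in which every TOF grows linearly with distance.
rng = np.random.default_rng(0)
pattern = rng.uniform(0.9, 1.1, size=(4, 4))        # stand-in geometry factor
dists = np.arange(50.0, 351.0, 30.0)                # 50, 80, ..., 350 cm
train = np.array([r * pattern for r in dists])
train_mean = train.mean(axis=0)
identity = np.eye(4)                                # stand-in for trained L and R
nearest_cm, refined_cm = estimate_distance(100.0 * pattern, train_mean,
                                           identity, identity, train, dists)
print(nearest_cm, refined_cm)
```

The interpolation is only meaningful when the reflector lies between two training distances; beyond the first or last training distance the sketch falls back toward the nearest sample.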

3.3. Processing Time Using PCA and GPCA

To compare the processing time of the GPCA classification method with that of the PCA method, we analyze the number of multiplications required to classify the type of the reflector from the reconstruction error. To do this, we consider a generic sensor as proposed in Figure 2, with r rows and c columns. From the TOFs obtained, an r × c matrix and a vector of dimension r·c are built for the GPCA and PCA methods, respectively. It is also assumed that l eigenvectors are selected and that the sensor has m emitters.

In PCA, the reconstruction error is given by the following expression:

where L is the transformation matrix and Φ is the zero-mean measurement column vector. The dimensions of the vector Φ and the matrix L are r·c·m (Φ ∈ ℝ^(r·c·m)) and (r·c·m) × l (L ∈ ℝ^((r·c·m)×l)), respectively. We can obtain the number of multiplications required by breaking expression (13) down into the terms (14) and (15):

Therefore, taking the dimensions of vector Φ and matrix L into account, l·(r·c·m) multiplications are needed to calculate the terms E = L^TΦ and F = LE. To obtain the Euclidean distance, r·c·m multiplications are needed. So, the total number of multiplications required to classify the type of the reflector using PCA is:

In GPCA the reconstruction error is given by:

where L and R are the transformation matrices and Φ is the zero-mean measurement matrix. In this case, the dimensions of the matrices Φ, L and R are r × c (Φ ∈ ℝ^(r×c)), r × l1 (L ∈ ℝ^(r×l1)) and c × l2 (R ∈ ℝ^(c×l2)), respectively. Following the same method as for PCA, we obtain the number of multiplications by breaking expression (17) down into terms:

Then, the total number of multiplications required to classify the type of the reflector using GPCA is:

The numbers of multiplications for both methods, particularized for r = 3 and c = 3 and for different numbers of emitters (m) and eigenvectors (l, l1, l2), are shown in Table 1. The total number of multiplications using GPCA is slightly lower than using PCA. However, the main advantage of GPCA is that, because the classification is performed independently with the TOFs obtained for each emitter and the resulting values are then added, the calculations can be parallelized on a multiprocessor system. For example, for two emitters (m = 2) on a dual-processor system, the processing time can be reduced by more than half using GPCA (160 using PCA and 68 using GPCA).

4. Simulation Results

A simulator has been used to carry out the simulations; it provides TOFs in three-dimensional environments, based on the sensor model proposed by Barshan and Kuc [13] and using the ray technique [14]. The system operates at a frequency of 50 kHz, at which a specular reflection model can be assumed. This simulator is the same as that used in [7] and has been validated with real measurements. The measurements used to compare the two classification methods, PCA and GPCA, have been obtained under the same conditions.

To evaluate and compare the GPCA classification method with the PCA method, we generate TOFs simulating the sensor structure of Figure 2. To obtain the transformation matrices, the reflectors have been located at distances from 50 to 350 cm, at 30-cm intervals. The azimuth and elevation angles ranged from −12° to +12°, at intervals of 2°.

In the simulations carried out, we analyze the percentage of successful classifications for different numbers of emitters (m) and different numbers of eigenvectors (l = l1 = l2) corresponding to the largest eigenvalues. In all cases, the results are obtained by adding to the TOFs zero-mean, independent and identically distributed (i.i.d.) Gaussian noise with a standard deviation of 15 μs. The plane, edge, and corner-type reflectors were placed at distances from 50 to 350 cm, at intervals of 20 cm, and at an azimuth angle of 7.5°. For each type of reflector at each distance, 500 tests were conducted. We also performed simulations for different azimuth and elevation angles, and the results are similar to the ones shown in this section.

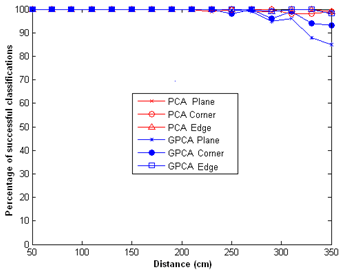

Figure 3 shows the classification percentage for l = 2 and m = 2, using the PCA and GPCA methods. The percentage of successful classifications using the PCA algorithm is greater than using GPCA for distances greater than 200 cm. This is because the GPCA algorithm needs a larger amount of input data than PCA for an appropriate classification. In both cases we obtained a 100% success rate for distances below 200 cm.

If we maintain the same number of emitters and use only the eigenvector corresponding to the largest eigenvalue, the percentage of success is greater than 95% up to 300 cm and then decreases very sharply, as shown in Figure 4.

The simulations carried out with other noise values and a single eigenvector have shown that, using PCA and GPCA, the percentages of successful classifications of corners and planes fall sharply for distances greater than 330 cm. This is due to the loss of dimensionality in the transformed space.

To increase the percentage of hits in the classification, the number of emitters can be increased. Figure 5 shows the results obtained for m = 4. The percentage of hits is over 98% up to 350 cm. In this case the processing time for PCA is approximately twice that with two emitters. However, using GPCA with parallel processing, we can obtain a time similar to that obtained for a single emitter.

In applications that do not require classifying objects at distances greater than 290 cm, a single eigenvector can be used to obtain 100% success. Figure 6 shows the results obtained for m = 4 and l = 1. The percentage of success is 100% up to 290 cm and then decreases very sharply.

The GPCA approach organizes the data better, in the sense that adjacent components in the matrix representation correspond to physically adjacent readings in the sensor array. This allows smaller transformation matrices to achieve results similar to those obtained with PCA, at a lower computational cost.

The results obtained in estimating the distance are similar using the PCA and GPCA methods. However, GPCA has the advantage of a lower computational cost. The computational cost of the distance estimation can be analyzed in the same way as for the classification process.

Although all the results presented come from simulations done with non-correlated noise, tests with correlated noise have been carried out. It has been verified that the proposed algorithm is very robust against that kind of noise. In these tests, the classification is successful even when the correlated noise has higher standard deviations than the non-correlated noise.