Intelligent Lead: A Novel HRI Sensor for Guide Robots

Abstract

: This paper addresses the introduction of a new Human Robot Interaction (HRI) sensor for guide robots. Guide robots for geriatric patients or the visually impaired should follow user's control command, keeping a certain desired distance allowing the user to work freely. Therefore, it is necessary to acquire control commands and a user's position on a real-time basis. We suggest a new sensor fusion system to achieve this objective and we will call this sensor the “intelligent lead”. The objective of the intelligent lead is to acquire a stable distance from the user to the robot, speed-control volume and turn-control volume, even when the robot platform with the intelligent lead is shaken on uneven ground. In this paper we explain a precise Extended Kalman Filter (EKF) procedure for this. The intelligent lead physically consists of a Kinect sensor, the serial linkage attached with eight rotary encoders, and an IMU (Inertial Measurement Unit) and their measurements are fused by the EKF. A mobile robot was designed to test the performance of the proposed sensor system. After installing the intelligent lead in the mobile robot, several tests are conducted to verify that the mobile robot with the intelligent lead is capable of achieving its goal points while maintaining the appropriate distance between the robot and the user. The results show that we can use the intelligent lead proposed in this paper as a new HRI sensor joined a joystick and a distance measure in the mobile environments such as the robot and the user are moving at the same time.1. Introduction

There are approximately 285 million visually impaired people around the globe. Among them, 39 million people are totally blind. In addition, 90% of the visually handicapped live in developing countries [1]. In Korea, there are approximately 45,000 visually impaired people. However, they are provided with only 60∼70 guide dogs. Due to the aging of the population, the numbers of geriatric patients who suffer from dementia or poor physical health are on the rise. It is therefore important to develop guide robots for the elderly as well as for the visually handicapped.

A number of studies have been performed to identify a means of providing information on self location and obstacle location for the visually impaired. In an RFID-based study [2,3], a robot cart converts the location information which has been received through the RFID into voice data. As a navigation tool, various sensors are attached to clothes and then the acquired environment information with these sensors is delivered to the visually impaired through other sensory organs [4,5]. Many robots such as GuideCane [6,7], RoJi [8] and ROVI [9] have been studied in the area of autonomous robots for the visually impaired. In the above studies ultrasonic sensors are attached to the two-wheeled mobile robot as a means of detecting obstacles. The user uses the feedback force through a stick for avoiding obstacles when the steering wheel is acting. This becomes the cause that any ground bumps are directly transmitted to the user. In another study, eyeDog [10] is designed for the user to follow the robot with a dog leash. The shock to the robot is not transmitted to the user because of the flexibility of the leash, but the robot cannot acquire information about the relative location of the user and the robot.

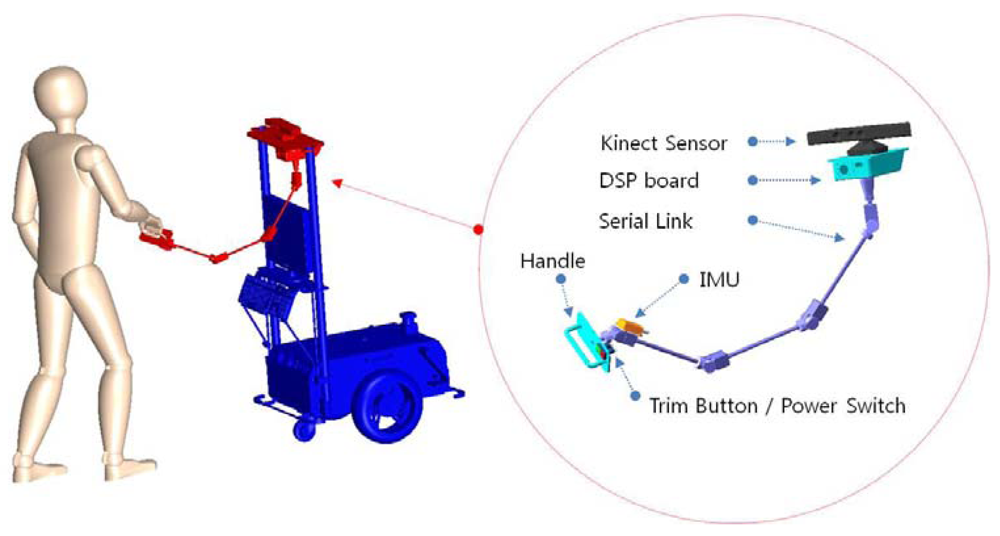

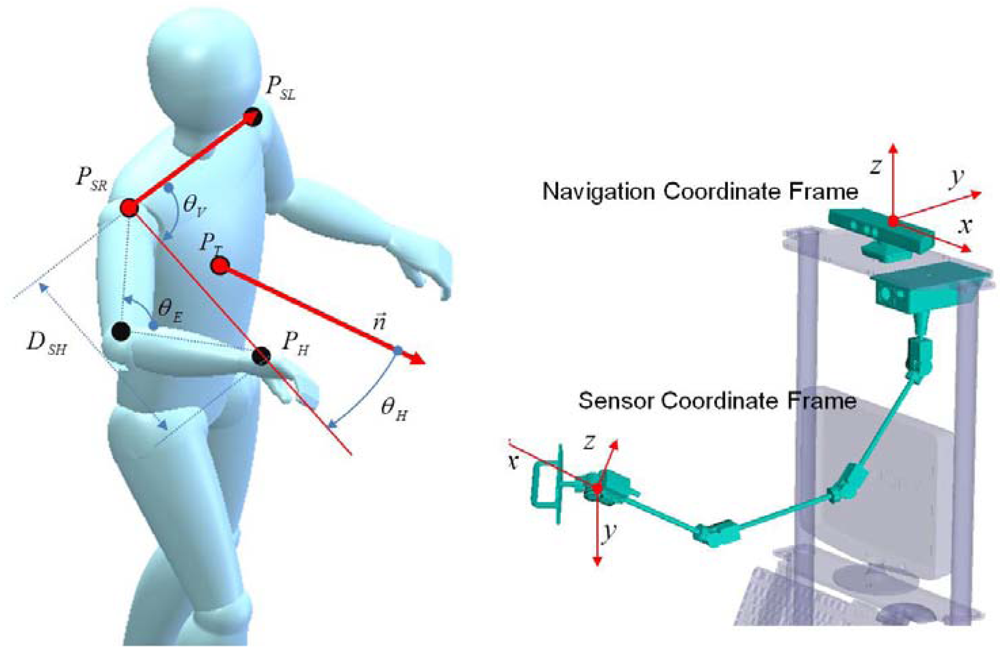

Ultimately, our goal is to fabricate a human-friendly guide robot. Among the visually-impaired population, totally blind are only 15%. For this reason human-friendly guide robot which is in a cooperative relationship with the user, not just a common mobile robot which only follows control commands is more needed. This study addresses the fabrication of a new HRI sensor (Figure 1) with which a user's location and control volumes can be acquired at the same time. We will call this an HRI sensor the “intelligent lead”. The intelligent lead proposed in this study and a 3D conceptual view about its application is shown in Figure 1.

The objective of the intelligent lead is to acquire a stable distance from the user to the robot, speed-control volume and turn-control volume, even when the robot platform with the intelligent lead is shaken on uneven ground. In this paper we explain the precise Extended Kalman Filter (EKF) procedure for this. The intelligent lead physically consists of a Kinect sensor, the serial linkage attached with eight rotary encoders, and an Inertial Measurement Unit (IMU), and their measurements are fused by the EKF. Since a user swings his/her arms back and forth while walking, it is very difficult to locate the user's body using only his/her hand position information. A new sensor is needed to locate the user's body, or one of the sensors used to detect obstacles can be used as well.

In many previous studies, an ultrasonic sensor was often used as a means for detecting obstacles. Recently, many studies on how to detect and avoid obstacles with 2D laser scanners, Kinect sensors and vision sensors [11,12] have been conducted. A vision system is light and cheap as a passive sensor. Among others, the Kinect sensor has been used to recognize a 3D object or environment in the robot industry due to its significant price competitiveness. Compared to the SR-3000 which is a commercial 3D laser scanner, it is less accurate. Since it is much cheaper, however, the Kinect sensor has been used to locate a user's body in this study.

The intelligent lead is structured to fuse the data which has been acquired through the serial linkage, Kinect and IMU sensor with the EKF. This paper is organized as follows. Section 2 describes a configuration of the major hardware of the intelligent lead. Section 3 describes how to configure the serial linkage and an IMU integrator with the EKF, and identifies a process to obtain sensor output values from the user's joint positions. Section 4 contains an analysis of the results of two tests which were been performed after attaching the proposed intelligent lead to a mobile robot. Finally, Section 5 contains conclusions and plans for the future.

2. Sensor System Architecture

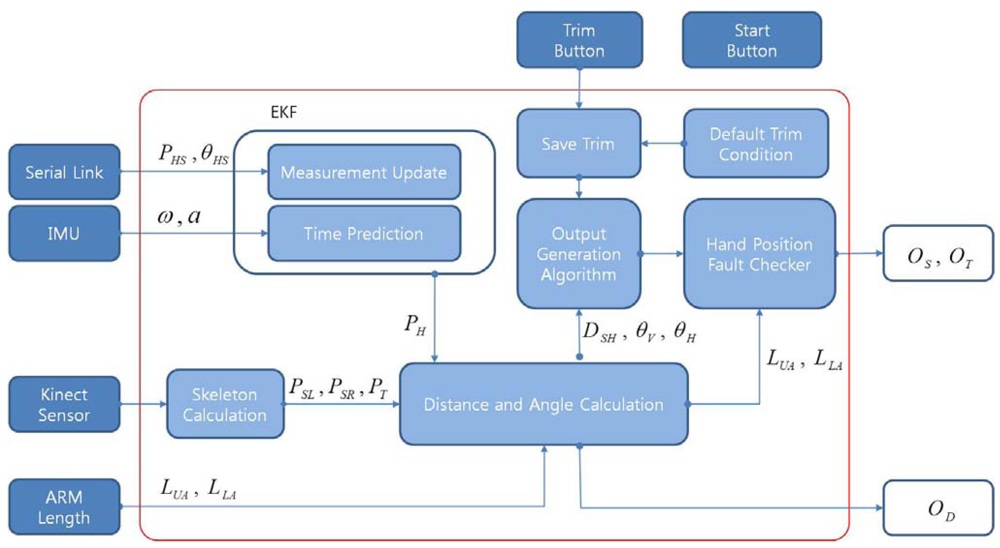

Figure 2 shows the block diagram of the intelligent lead and the mobile robot. The intelligent lead consists of an IMU, a serial linkage and the Kinect sensor.

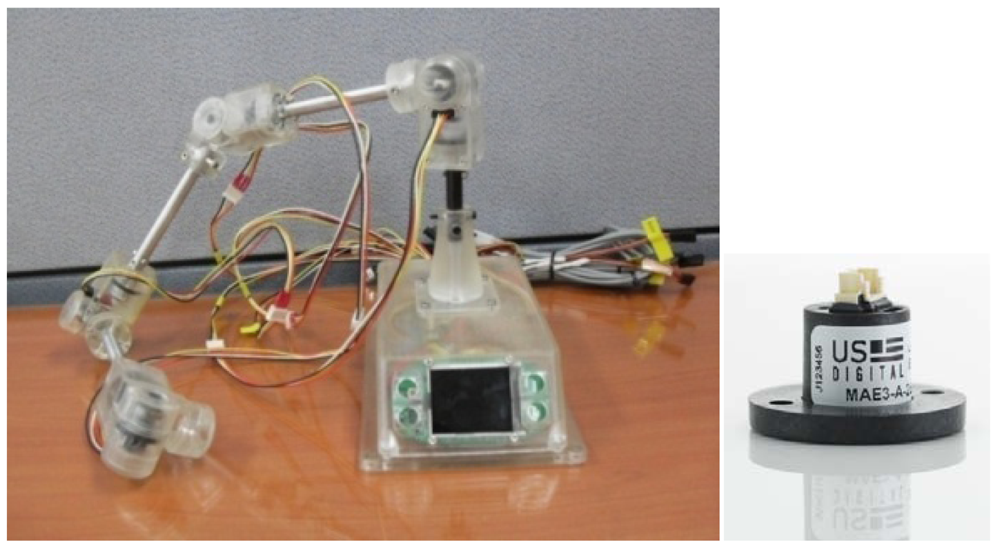

The mobile robot consists of a 2D laser scanner, a landmark detector and a servo driver module. The intelligent lead output is used for generation of steering angle in modified VFH+ algorithm. The serial linkage is connected with rotary encoders or absolute encoders consecutively. The encoders which are selected should be small and precise. In general, however, larger encoders are more precise. MAE2-A2 made by USDigital Company is used in this study (Figure 3). The encoder is magnetic and an absolute encoder which provides PWM pulse with a 4,096 resolution at a 360° turn. Thus, 0.088° of accuracy per joint is found. In addition, the sampling rate of the PWM pulse is 250 Hz.

The Kinect sensor was attached to the end of the serial linkage to measure a user's shoulder and torso position. The Kinect sensor acquires depth information by applying IR patterns to an object and measuring them with an IR sensor. Compared to a general camera, there is no interference of ambient light, and it also works at night. Usability at night is considered an important characteristic for human-friendly guide robots.

We acquired a user's shoulder and torso position from depth image with OpenNI library. If the base of the serial linkage is shaken because a robot passes over a rough surface or makes a dynamic motion, the vibration is directly transmitted in the coordinates on a user's hand. To compensate for the vibration of the robot's base frame, an IMU is installed in the area which is held by the user's hand. Accelerometer and gyroscope data are used in the time prediction procedures. For this, an MTi sensor made by Xsens Company with 100 Hz of sampling rate has been used.

The three sensors mentioned above have measurement errors. First, in the case of the low-price IMU, the drift of the gyroscope causes cumulative errors. We used the encoder connected in the serial linkage with small products to reduce weight. As the size of an encoder decreases, accuracy declines as well. Also, as the serial linkage gets longer, the amount of position and pose errors increases proportionally. The position and pose errors differ depending on assembly accuracy as well as the length of the link and the encoder accuracy itself.

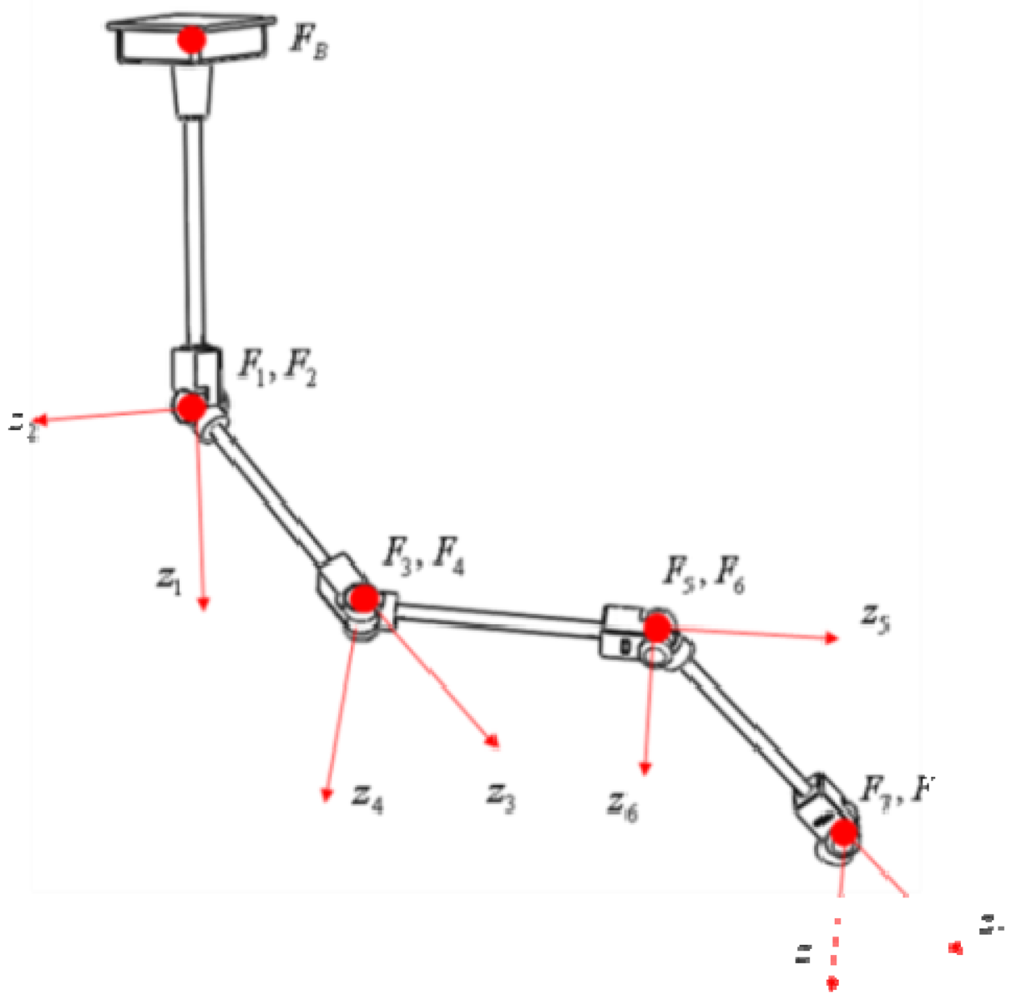

The Kinect sensor can suffer from occlusions by smoke/fog or obstacles. In addition, the performance of the Kinect sensor decreases in a dynamic environment. Therefore, it is necessary to reduce error in the integration system through fusion instead of separately using these sensors. This kind of integration can improve accuracy and prevent output errors generated from sensor failures from suddenly increasing. We made the serial linkage which consists of eight axes of rotation. We attached an absolute encoder to each axis. The link coordinate parameters of the serial linkage fabricated for the test is given in the table below (Table 1).

If is a transformation matrix from the IMU sensor coordinate frame to the last joint coordinate frame, the transformation matrix T from the IMU sensor coordinate frame to the base coordinate frame is calculated as follows:

If we denote the entry in the i-th row and the j-th column of the matrix T as T[i,j], the relative position and pose of sensor coordinates frame with respect to the navigation coordinate frame are calculated as follows:

In Equation (3), the notation θ, ψ, φ mean roll, pitch, yaw angle respectively.

3. Intelligent Lead Sensor

3.1. Serial Linkage/IMU Integration with EKF

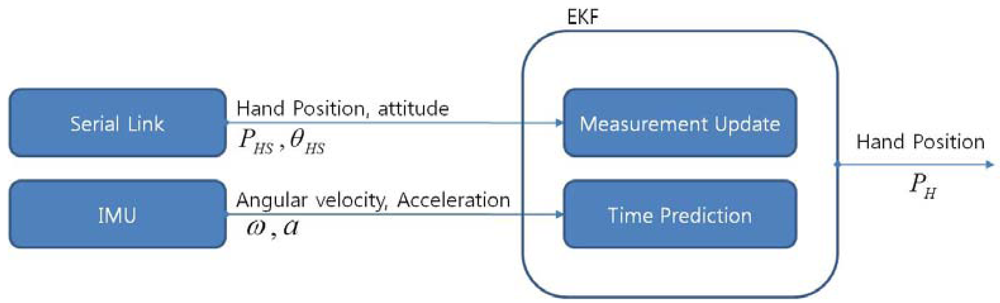

The objective of using the serial linkage, the IMU and the Kinect sensor is to produce a stable distance from a user to a robot, speed-control volume and turn-control volume, even when the robot platform in which the base frame of the serial linkage is installed is shaken by a rough surface. To achieve this objective we acquire the hand position from the EKF with the serial linkage and the IMU.

In Figure 4, the symbol PHS, θHS represent position data and orientation data of the IMU coordinate frame which is attached to the user's hand respectively. The symbols ω and α represent angular velocity and linear acceleration, respectively. The symbol PH means a position data calculated from the EKF. In this section, a system dynamic nonlinear equation, system matrix, and an output matrix which are necessary to design the EKF to fuse both the serial linkage and the IMU are introduced.

Table 2 represents the related symbols used to explain the EKF. The linear speed equation of the hand is as follows:

The linear acceleration equation is as follows:

The quaternion equations of rotation velocity, which represent coordinate transformation from body coordinate frame to navigation coordinate frame, are expressed in a differential equation as shown below:

To induce state space representation from the nonlinear Equations (4∼6), state vector and output vector are defined as follows:

Then, the system matrix and the output matrix are obtained as follows:

We need to first initialize x0, P0, Q, R and then to calculate time prediction procedure and measurement update procedure consequently. First we acquire roll, pitch and yaw angles from MTi sensor and then we convert Euler angle to quaternion q0 with these data. The covariance matrix Pn0 is set to an initially calculated value from the serial linkage. And the initial value of vb is set to 0. From these values, the initial state and the initial covariance matrix are assigned as follows:

The process noise matrix and measurement noise matrix were determined to avoid the divergence of the filter output by trial and error as follows:

In the above Equation (12)In×n represents n by n dimensional identity matrix.

The time prediction procedure shown in the block diagram above can be stated as follows:

With the current state vector and IMU sensor data, update the system matrix from the Equations (9).

Calculate the derivative of state vector by using the equation, ẋn−1 = Axn−1

Update the current state vector by using the equation, xn = xn−1 + ẋn−1dt

Normalize the quaternion among the updated states.

Update the P matrix by using the equation, Pn = APn−1AT + Q

The measurement update procedure can also be stated as follows:

Determine the output matrix with the current quaternion from the Equation (9).

Measure the output, zm with the hand position, PHS and pose, θHS measured from the serial linkage.

Calculate the output error by using the equation, e = zm − Hxn

Update Kalman gain by using the equation, K = PnHT[HPnHT + R]−1

Update the state vector by using the equation, xn+1 = xn + Ke

Update the P matrix by using the equation, Pn+1 = Pn − KHPn

The first 3-terms of the state vector, which are updated through the above procedures, are the coordinates of the hand position viewed from the base coordinate frame of the serial linkage. As mentioned previously, we assume that the base coordinate frame of the serial linkage is placed at the pinhole of the depth camera of the Kinect sensor.

3.2. Intelligent Lead Output Generation

The intelligent lead proposed in this study acquires a user's left shoulder position, right shoulder position and torso position from the Kinect sensor and hand position with Serial linkage and IMU on a real-time basis.

The intelligent lead has two buttons. One is the start button and another is the trim button. If the user pushes the trim button current output volumes are saved in trim buffers. The intelligent lead has a hand position fault checker block. If the distance from the shoulder to the hand is larger than the sum of the upper arm length and the lower arm length, than the output volumes become zero. This prevents unwanted generation of output volumes when the user loses hold of the handle. If the user's hand position is obtained from the output of the EKF, the user's control intention should be investigated by using this data and the user's right shoulder, left shoulder and torso position data which have been obtained from the Kinect sensor. Because the size of walking steps, the amount of back and forth arm swings and the amount of movements to the left and right differ from individual to individual, it is necessary to get information on changes of the user's arm-joints while walking.

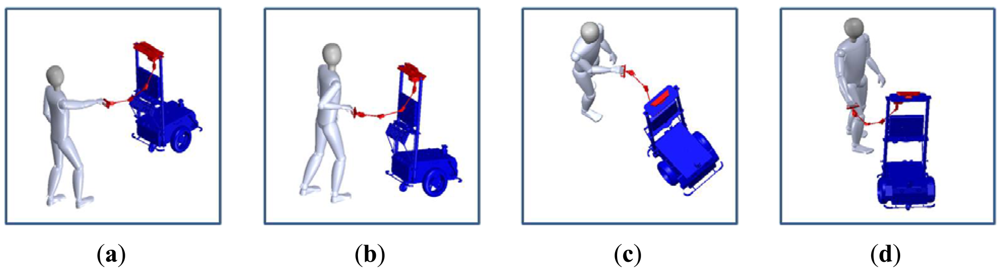

When people walk with a dog, it is more common to move the hand to the left and right to point out directions instead of changing the angle of the wrist. In addition, many people push their hand forward and pull down backward to increase or decrease the velocity. Figure 5 describes the actions for generating commands of speed increase, speed decrease, left turn and right turn respectively.

We assume the user is a right-hander. Figure 6 shows frames, angles and distance defined from the joint positions.

The normal vector represents the direction of the plane in which a triangle is formed by the left shoulder position, the right shoulder position and the torso position. The symbol DSH means the size of the line created by the right shoulder position and the hand position. The symbol θV means the angle between the normal vector and the line created by the right shoulder position and the hand position. The symbol θH means the angle between the line created by the right shoulder position and the hand position and the line formed by the left shoulder position and the right shoulder position. The distance from the shoulder to the hand is calculated as follows:

If the left shoulder position, PSL is (x1y1z1)T, right shoulder position, PSR is (x2y2z2)T and torso position, PT is(x3y3z3)T, then the normal vector n⃗ of the plane that includes these three points can be calculated as follows:

The angles defined above can be obtained using the law of cosine:

If the height of the hand position is above the height of the torso position, we assume that the user has the intention to control the robot's direction or speed. In addition, if θV is above a certain limit value, then we conclude that the user bent his/her waist. We fixed the limited value to 45 degrees in the following test. In these cases the intelligent lead output forced to set 0. This assumption prevents unwanted output generation. The intelligent lead has the trim button to set trim values for speed control and turn control. The default trim value is as follows:

Finally we get intelligent lead output values for control command as follows:

In Equations (19) and (20), OS and OT mean speed control value and turn control value respectively, PH(z) and PT(z) represent z-axis value of hand and torso position respectively. The distance from the user's body to the robot can be calculated simply with the mean of two shoulder positions and a torso position:

The intelligent lead generates OD, OS, OT represented by the above Equations (19∼21) simultaneously on a real-time basis. Figure 7 shows the summary block diagram of the proposed intelligent lead.

Table 3 lists the related symbols for generating intelligent lead output.

4. Experimental Results

4.1. Mobile Robot for Testing

Many studies have proposed strategies for robots to complete their tasks without any collision with obstacles and/or other robots. Especially the methods using the potential field [13] and the Vector Field Histogram Plus (VFH+) algorithm [14,15] have given diverse and useful results. In this study, the robot direction and speed were controlled based on the VFH+ algorithm by using the obstacle information acquired from a 2D laser scanner and current location information acquired from an IR landmark detector. The size of the robot and local map were fixed to be 0.8 m and 3 × 3 meters in the VFH+ algorithm.

The HOKUYO UTM30-LX was used as a 2D laser scanner to detect obstacles. Even though UTM-30LX has measure range from −135° to 135°, the data measured from −90° to 90° was used in this test. Its specifications are listed in Table 4. Hagisonic's Stargazer was used as an IR landmark detector. We firmly affixed a total of 16 landmarks as IR reflective materials to the ceiling, whose height is 2.5 meters. Landmarks were aligned with a 1 meter interval horizontally.

To determine the steering angle with the VFH+ algorithm, it is first necessary to create a local map with 2D laser scanner data. Then from the local map, we obtain an obstacle vector, a Primary Polar Histogram, a Binary Polar Histogram, and a Masked Polar Histogram, in that order. Steering angle is calculated based on the final Masked Polar Histogram. The desired steering angle is simply determined by the sum of hvfh and OT, and steering angle error value he is calculated by the difference desired angle and current angle as follows:

In the above equation, hc is the current direction which is measured from the IR landmark detector and OT is the desired differential direction which is generated from the intelligent lead. The position error, pe is calculated as follows:

In the above equation, pd represents the desired distance from a user to a robot which is fixed at 0.8 meters in this test. The symbol OS is the desired differential speed which is generated from the intelligent lead output, and OD is the measured distance from the user to the robot with the intelligent lead. For the test, a two wheel-type mobile robot was designed. Mitsubishi's 400W AC servo motor, 10:1 bevel gearbox, 13-inch wheels and PC (Intel i7-2600 CPU @3.4 GHz) were used. Figure 8 pictures the mobile robot and the intelligent lead sensor fabricated for the test.

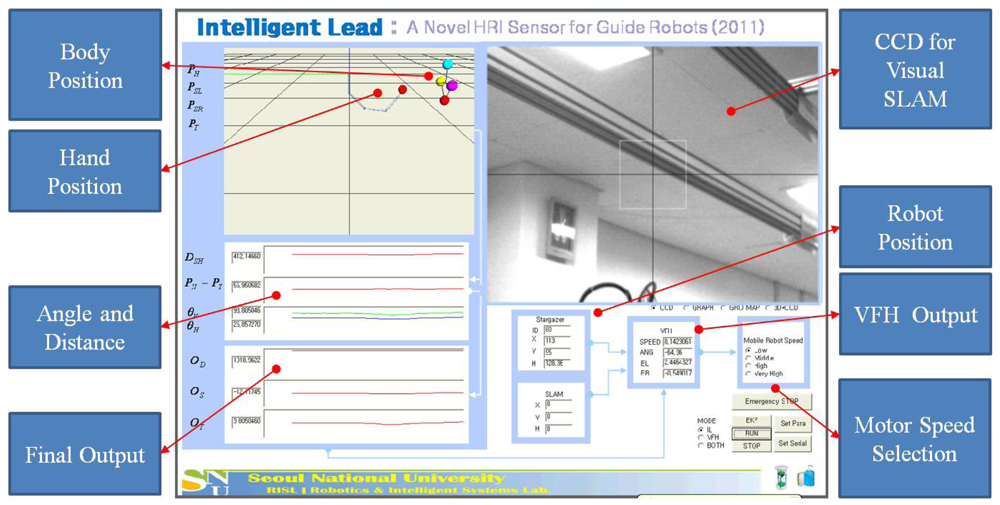

Figure 9 below shows the captured screen from the monitoring program made for our testing. We expressed calculated positions on the upper left side in 3D using the OpenGL. In Figure 9, the four dots on the top represent the user's head position, left shoulder position, right shoulder position, and torso position which have been detected by the Kinect sensor, respectively. In addition, the dot in the middle refers to the hand location which has been detected through the EKF. The values which were calculated from the four positions were marked on the bottom left. On the right side of the display a CCD camera image for visual tracking is shown, and on the bottom side we show current robot positions calculated from SLAM and detected from the Stargazer landmark detector. The values calculated from the VFH+ algorithm are displayed and selection buttons for servo motor speed setting are arrayed.

4.2. Test Results

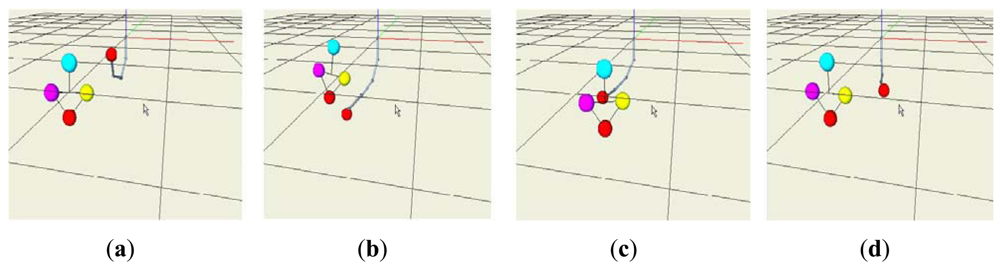

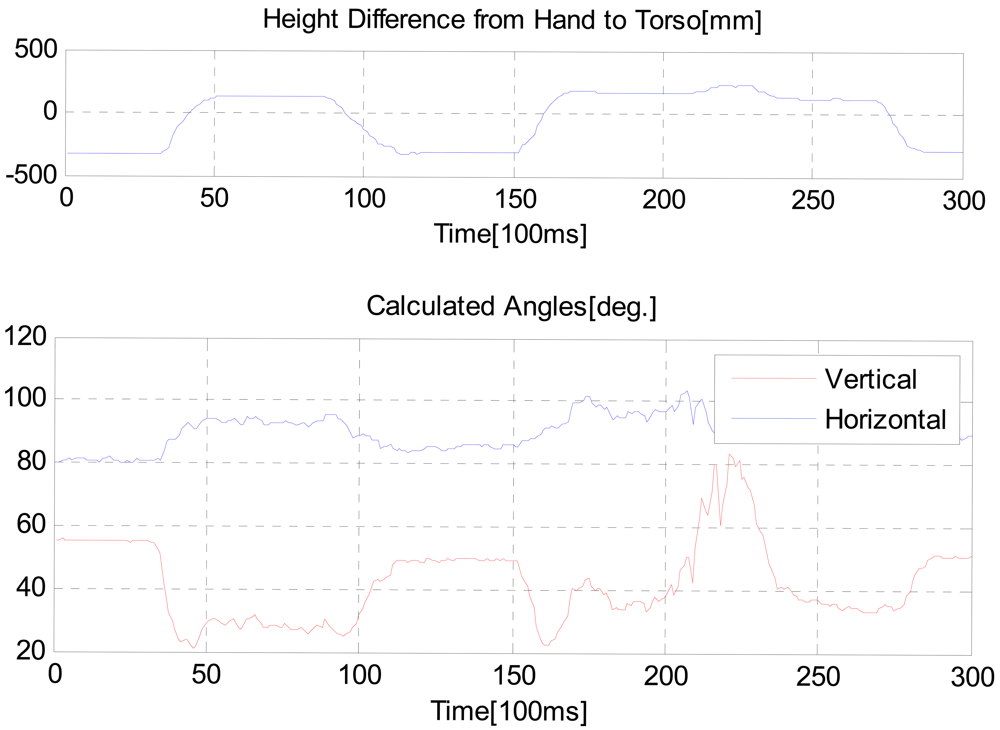

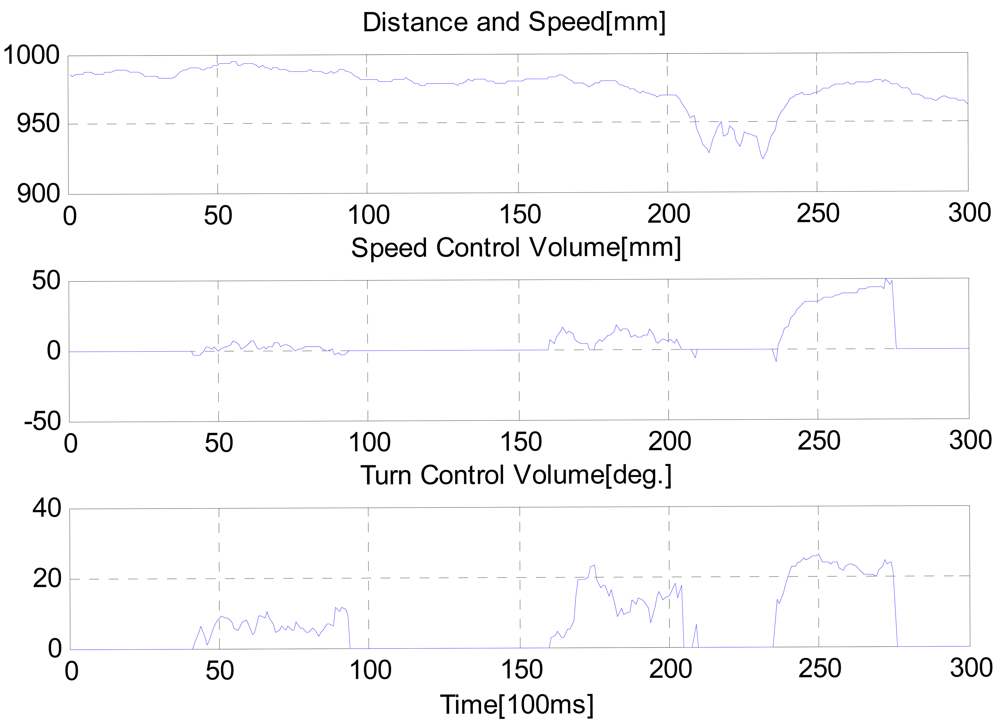

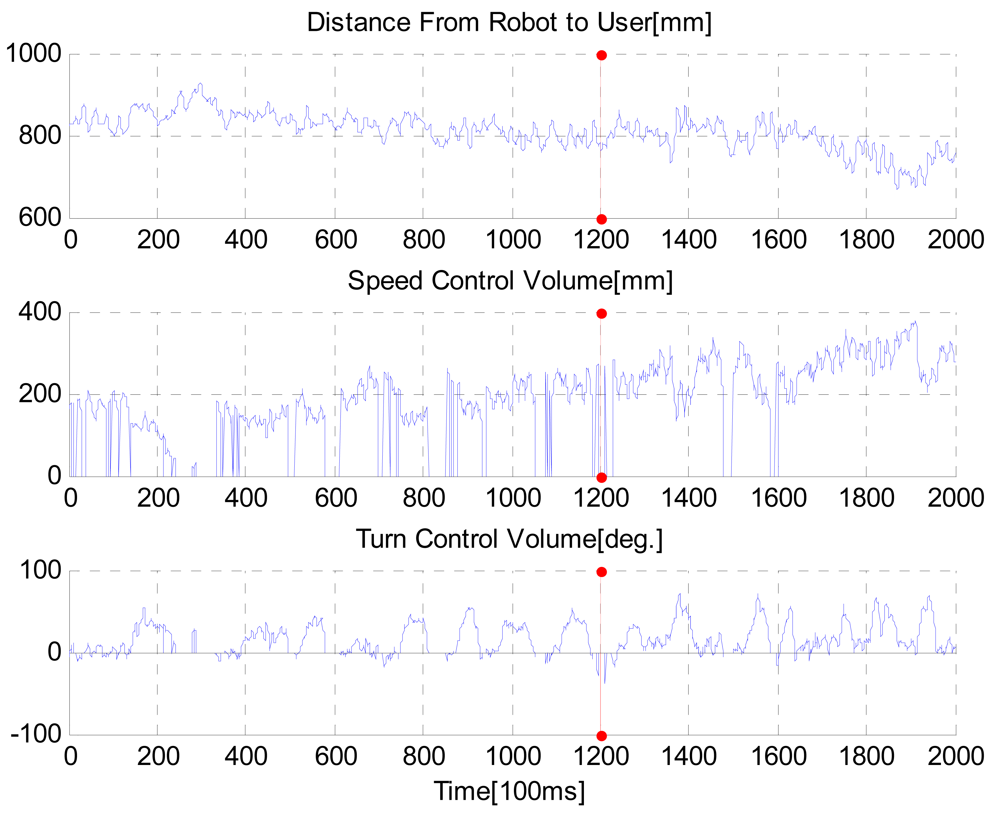

In the first test, the control volumes were measured by moving the handle of the intelligent lead horizontally while the robot is stopped. Figure 10 shows acquired positions on the 3D viewer when we generated speed increase, speed decrease, left turn and right turn commands respectively.

Figure 11 shows measured values when the hand position was shaking. We intended that θV changes via the defined limit value, 45 degrees and the height of the hand position goes from lower to upper than that of the torso position, and then from upper to lower.

As shown in the Figure 12, in the region of 200∼250 time tick, the user bent down at the waist. Since the height of the hand was above than that of the torso position, as θV was larger than 45 degrees the intelligent lead output was not generated. The distance from the user to the robot was always generated, regardless of θV value and the height of the hand position.

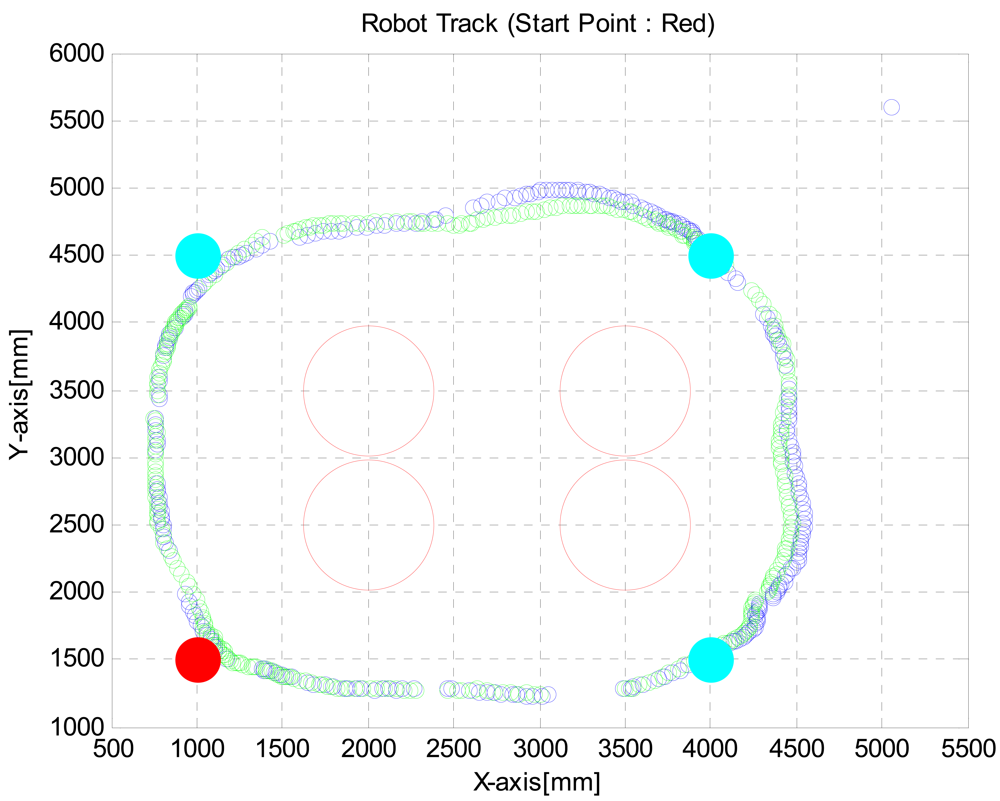

In the second test, the robot was programmed to move to (1, 1.5), (1, 4.5), (4, 4.5) and (4, 1.5) points with avoiding obstacles twice by using VFH+ algorithm. We located obstacles at (2, 2.5), (2, 3.5), (3.5, 2.5) and (3.5, 3.5) points. The obstacles were cylinders with 0.5 meter diameter. Figure 13 shows robot track measured with the Stargazer. Because of the alignment and flatness errors of landmarks, it is shown that the robot path was cut off whenever detected landmarks are changing. Nevertheless the robot reached the desired destinations by avoiding obstacles.

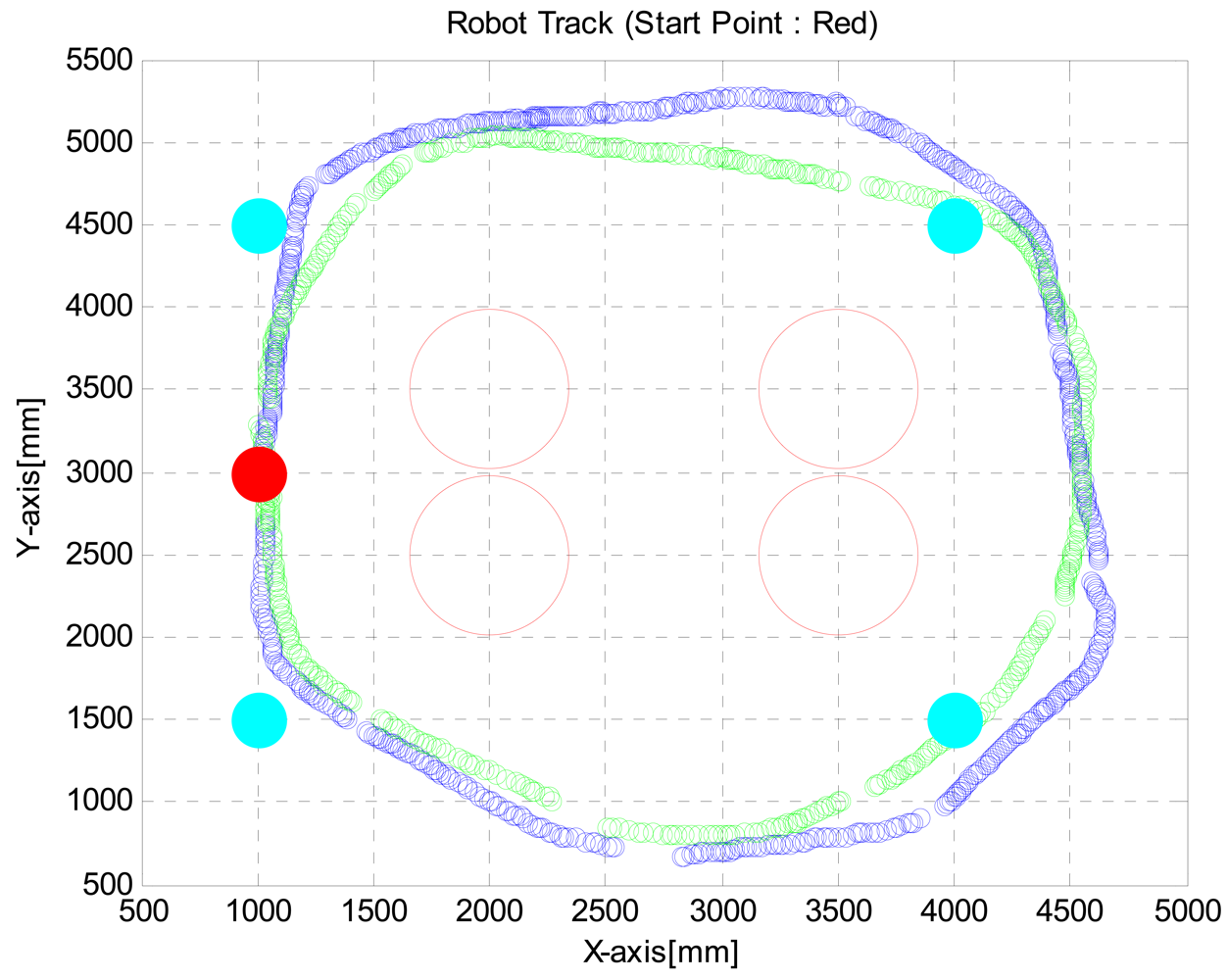

When a robot is moving around following a user, it is difficult to use a joystick without a fixed joystick pad. The proposed intelligent lead can solve this problem. We show how to use intelligent lead as a joystick and a distance measure. In the third test, the ability of the robot equipped with the intelligent lead to reach a destination by avoiding obstacles was tested. The intention was for the robot to lead the users keeping 0.8 meter distance from the user. Our objective was to make a track twice by passing points at (1, 1.5), (1, 4.5), (4, 4.5) and (4, 1.5). We controlled the speed of the robot with the intelligent lead to make a first track within 120 seconds and then a second track within 80 seconds. And we generated turn control commands through the intelligent lead to arrive at the destinations and avoid obstacles. Figure 14 shows the robot track acquired from the third test. A red point means a start point and green points mean en route points or destination points, respectively. The result shows that we could reach the destination points keeping pre-defined distance from the robot.

Output data from the intelligent lead during the test is shown in Figure 15. Mean distance between user and robot was 0.8101 meters. We confirmed that the proposed intelligent lead could be used as a new HRI joystick. Moreover, our results suggested that motion of humans could interact with robots using the proposed intelligent lead. During many tests, the OpenNI algorithm for searching skeleton fails occasionally at turning intervals. This is because the user gets out of the FOV due to the limited FOV. In future work, we need to convert the software to be able to solve this problem.

5. Conclusions

In this paper, we introduced the intelligent lead as a new HRI sensor. To detect the user's hand position, we made a serial linkage consisting of eight rotary encoders and then we constructed the intelligent lead with the Kinect sensor, the serial linkage and MTi sensor as an IMU. Their measurements were fused by an EKF. A mobile robot was designed to test the performance of the proposed intelligent lead and the related positions are monitored by calculating in 3D with the OpenGL. Several tests were conducted to verify that the mobile robot with the intelligent lead is capable of achieving its goal points while maintaining the appropriate distance between the robot and the user. It is shown that the intelligent lead acquires a stable distance from the user to the robot, speed-control volume and turn-control volume on a real-time basis. Moreover, our results suggested that the motion of humans could interact with the robot by the proposed intelligent lead in mobile environments such as those where the robot and the user are moving at the same time. During many tests, the OpenNI algorithm for searching skeleton failed occasionally at turning intervals for reasons of the limited FOV. In the future work, we need to convert the software to be able to solve this problem.

Acknowledgments

This work was supported in part by a Korea Science and Engineering Foundation (KOSEF) NRL Program grant funded by the Korean government (No.R0A-2008-000-20004-0), and in part by the ASRI, and in part by the Brain Korea 21 (BK21) Project, and in part by the Industrial Foundation Technology Development Program of MKE/KEIT [Development of CIRT (Collective Intelligence Robot Technologies)].

References

- World Health Organization. Media Centre, Visual impairment and blindness. Available online: http://www.who.int/mediacentre/factsheets/fs282/en/ (accessed on 20 March 2012).

- Kulyukin, V.; Gharpure, C.; Nicholson, J. RoboCart: Toward Robot-Assisted Navigation of Grocery Stores by the Visually Impaired. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, Canada, 2–6 August 2005; pp. 2845–2850.

- Kulyukin, V.; Gharpure, C.; Nicholson, J.; Pavithran, S. RFID in Robot-Assisted Indoor Navigation for the Visually Impaired. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; pp. 1979–1984.

- Pradeep, V.; Medioni, G.; Weiland, J. Robot Vision for the Visually Impaired. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 15–22.

- Hesch, J.A.; Mirzaei, F.M.; Mariottini, G.L.; Roumeliotis, S.I. A 3D Pose Estimator for the Visually Impaired. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MI, USA, 11–15 October 2009; pp. 2716–2723.

- Ulrich, I.; Borenstein, J. The GuideCane—A Computerized Travel Aid for the Active Guidance of Blind Pedestrians. Proceedings of the IEEE International Conference on Robotics and Automation, Albuquerque, NM, USA, 21–27 April 1997; pp. 1283–1288.

- Ulrich, I.; Borenstein, J. The GuideCane—Applying mobile robot technologies to assist the visually impaired. Proc. IEEE Trans. Syst. Man Cybern. Part A 2001, 31, 131–136. [Google Scholar]

- Shim, I.; Yoon, J.; Yoh, M. A human robot interactive system—RoJi. Int. J. Control Autom. Syst. 2004, 2, 398–405. [Google Scholar]

- Melvin, A.A.; Prabu, B.; Nagarajan, R.; Illias, B. ROVI: A Robot for Visually Impaired for Collision-Free Navigation. Proceedings of the International Conference on Man-Machine Systems, Penang, Malaysia, 11–13 October 2009; pp. 3B51–3B56.

- Galatas, G.; McMurrough, C.; Mariottini, G.L.; Makedon, F. eyeDog: An Assistive-Guide Robot for the Visually Impaired. Proceedings of the International Conference on Pervasive Technologies Related to Assistive Environments, Crete, Greece, 25–27 May 2011.

- Se, S.; Lowe, D.; Little, J. Vision-based Mobile Robot Localization And Mapping Using Scale-Invariant Features. Proceedings of the International Conference on Robotics & Automation, Seoul, Korea, 21–26 May 2001; pp. 2051–2058.

- Chu, T.; Guo, N.; Backén, S.; Akos, D. Monocular camera/IMU/GNSS integration for ground vehicle navigation in challenging GNSS environments. Sensors 2012, 12, 3162–3185. [Google Scholar]

- Ge, S.S.; Cui, Y.J. New Potential Functions for Mobile Robot Path Planning. IEEE Trans. Rob. Autom. 2000, 16, 615–620. [Google Scholar]

- Ulrich, I.; Borenstein, J. VFH+: Reliable Obstacle Avoidance for Fast Mobile Robots. Proceedings of the IEEE International Conference on Robotics and Automation, Leuven, Belgium, 18–21 May 1998; pp. 1572–1577.

- Ringdahl, O. Path Tracking and Obstacle Avoidance Algorithms for Autonomous Forest Machines. Master Thesis, Umea University, Umea, Sweden, 2003. [Google Scholar]

| Frame and Transformation Matrix | θ (deg.) | α (deg.) | a (mm) | d (mm) | |

|---|---|---|---|---|---|

| θ1 | 0 | 0 | 50 | |

| θ2 | 90 | 0 | 0 | ||

| θ3 | −90 | 0 | 200 | ||

| θ4 | 90 | 0 | 0 | ||

| θ5 | −90 | 0 | 200 | ||

| θ6 | 90 | 0 | 0 | ||

| θ7 | −90 | 0 | 200 | ||

| θ8 | 90 | 0 | 0 | ||

| 0 | −90 | 0 | 10 | ||

| Symbol | Meaning | Symbol | Meaning |

|---|---|---|---|

| Pn | Hand position | Q | Process noise matrix |

| Direct Cosine Matrix | R | Measurement noise matrix | |

| vb | Velocity | x | State Vector |

| m | Mass | y | Output Vector |

| fb | Force | θ | Euler angle |

| gn | Gravity | A | System Matrix |

| q | Quaternion | H | Output Matrix |

| P | Covariance matrix | K | Kalman gain |

| Symbol | Meaning | Symbol | Meaning |

|---|---|---|---|

| PH | Hand Position calculated from EKF | OS | Speed Control Volume |

| PSL | Left Shoulder Position | OT | Turn Control Volume |

| PSR | Right Shoulder Position | OD | Distance Output from Body to Robot |

| PT | Torso Position | DSH | Distance from Shoulder to Hand |

| θE | Angle of Elbow | n⃗ | Normal vector generated Body plane |

| θV | Angle of Vertical | LUA | Length of Upper Arm |

| θH | Angle of Horizontal | LLA | Length of Lower Arm |

| Parameter | Typical value | |

|---|---|---|

| Light Source | Laser diode (λ = 785 nm), Class 1 (FDA) |

| Detection Range | 0.1 to 30 m, Max. 60 m | |

| Accuracy | 0.1 to 10 m: ±30 mm, 10 to 30 m: ±50 mm | |

| Scan Angle | 270° | |

| Angular Resolution | 0.25° (360°/1,440 steps) | |

| Scan Time | 25 msec/scan | |

| Interface | USB2.0 (Full Speed) |

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Cho, K.-B.; Lee, B.-H. Intelligent Lead: A Novel HRI Sensor for Guide Robots. Sensors 2012, 12, 8301-8318. https://doi.org/10.3390/s120608301

Cho K-B, Lee B-H. Intelligent Lead: A Novel HRI Sensor for Guide Robots. Sensors. 2012; 12(6):8301-8318. https://doi.org/10.3390/s120608301

Chicago/Turabian StyleCho, Keum-Bae, and Beom-Hee Lee. 2012. "Intelligent Lead: A Novel HRI Sensor for Guide Robots" Sensors 12, no. 6: 8301-8318. https://doi.org/10.3390/s120608301