1. Introduction

Due to the rapid growth in robotics in recent decades, it is unavoidable that robots should come into our lives, interacting with us and the environment. From this point of view, robots’ perception plays a key role, and it is sensory information, which relays messages from outside of the robot, that makes it possible for robots to perceive [

1]. In order to improve the capability and reliability of the sensory information from various modalities, they can be fused [

2,

3]. In the field of mobile robots, the concept of sensor data fusion has already been widely used on wheeled vehicles. Several practical applications have been addressed, including fusion between an inertial measurement unit (IMU) and vision [

4], Kinect™/laser fusion [

5], odometry/laser fusion via extended Kalman filter (EKF) for robotic guidance [

6], laser/GPS fusion via Kalman filter for tracking in outdoor environments, laser/vision fusion for human detection [

7], and Kinect™/thermal fusion for robust people detection. Moreover, multi-sensor information was employed in the European URUS (Ubiquitous Networking Robotics in Urban Sites) project, which aims to develop a network of robots to collaborate and communicate with human beings and the environment in urban areas [

8].

On the other hand, in the field of legged robots, which has focused on motion development and stability improvement in the past, there have been only limited studies on utilizing sensor fusion to stabilize robot behavior. However, due to the rapid development of modern legged robots, the underlying principle for creating stable locomotion has been revealed. As a result, more and more research concerning legged robots has focused on how to enhance the robot’s performance through sensory feedback algorithms and data fusion. For example, a velocity estimation method based on the kinematics model and IMU for a bipedal robot was addressed [

9]. A full body state estimation algorithm was proposed based on IMU and legged odometry for a quadruped [

10]. Both of these employed the EKF. A vision-based obstacle avoidance navigation system for humanoid robots has also been reported [

11]. A pose estimation methodology was developed for a six-legged walking robot, which fuses the sensory information from IMU, vision and leg odometry [

12]. By fusing the sensory data from IMU, electric compass and touch sensors, a state estimation algorithm can be established for a hexapod robot to accomplish a two-dimensional navigation task [

13]. Regarding humanoid robot research, an observer-based approach is utilized for stabilizing bipedal walking motion based on the 3-dimensional linear inverted pendulum model (LIPM) and preview control [

14]. In addition, an EKF-based state estimation method is introduced using only common proprioceptive sensors and leg kinematics [

15]. In our previous research, a leg-strain-based configuration estimator was developed for a hexapod robot [

16]; later, the sensor was fused with an IMU via EKF for estimating the full body state of a hexapod robot [

17]. An IMU/encoder fusion model-based feedback control was also developed for improving the stability of the robot’s jogging motion [

18]. In addition, a 9-axis IMU [

19] and a 12-axis gyroscope-free IMU [

20] were developed for better motion state reconstruction.

As the environments are already designed for humans, humanoid robots in comparison to other types of robots have a unique and incomparable importance to human society. To successfully build a humanoid robot and achieve human locomotion in machine form, creating stable walking must play a key role, and other behaviors, such as turning [

21,

22] and running [

23], must also be taken into account. With the well-developed locomotion strategy based on the concept of Zero-Moment Point (ZMP) [

24], the walking controller of the bipedal robots can be composed of two stages: generation of a stable walking pattern in the sense of ZMP, and a real-time control strategy that tracks the robot’s ZMP to the planned value. However, practically, the discrepancy between the theoretical model and the empirical robot, such as the backlash in mechanism, un-modeled dynamics, and disturbance of the environment, all affect the stability of the locomotion. To improve the performance of robotic motion, many studies regarding stable walking pattern generation have been reported [

25,

26,

27]. In these approaches, the robots are often simplified as a point mass located at its center of mass (CoM) and moved as an inverted pendulum. By specifying the dynamic relations between the CoM and the ZMP, the CoM trajectory corresponding to the pre-planned ZMP trajectory can be generated. In order to grant walking stability to the bipedal robots, the implementation of a real-time feedback controller is crucial to deal with the uncertainty and disturbance from the outside, and the controller usually relies on the sensory information of the body state or ground reaction forces. Nishiwaki

et al. regulated the CoM position of the robot according to ZMP errors [

28]. Sato

et al. developed a ZMP disturbance observer to realize the position and acceleration compensation of the CoM [

29]. Buschmann

et al. proposed another effective approach, which used position/force control [

30]. Stephens

et al. proposed a dynamic balance force control, which considers the full rigid-body dynamics of the robot to produce the desired contact force [

31]. Most of these methods require force measurement between the foot and the ground.

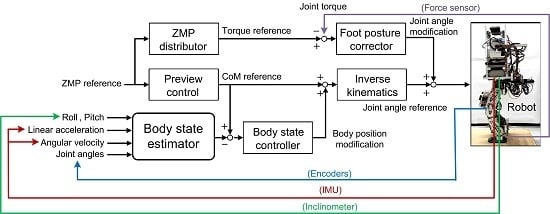

In our previous work on the empirical bipedal robot, the robot successfully fulfilled some tasks, such as walking and turning, using a preview-control-based open-loop control strategy [

32]. However, instability due to the backslash of the mechanism as well as unmodeled dynamics encouraged us to develop a multi-sensor feedback control strategy to improve the stability of the locomotion [

33]. As a result, we investigated the feasibility of stabilizing bipedal walking by sensory feedback using the state of the body at a specific position, which is hereafter referred to as the “FP.” This position is also equal to the CoM when the robot is in the standing posture suggested by the detailed CAD model of the robot. A body state estimator based on the LIPM was designed, fusing the sensory information of joint angles (from encoders), body acceleration and angular velocity (from a 6-axis IMU), and body inclination (from an inclinometer) via an extended Kalman filter. Then, a walking stabilizer based on the estimated body position (

i.e., FP) was designed. Furthermore, a posture corrector using the information from the 6-axis force sensors on the ankles was introduced to eliminate unwanted torque during motion. Note that Kajita

et al. also designed a body posture controller to regulate the CoM and a ground contact wrench based on the simple inverted pendulum model [

34]. His work focused on controlling the robot’s walking on surfaces at the macro-scale (such as walking from an even flat surface to an inclined flat surface). In contrast, our work focuses on micro-scale regulation of the body posture. The novelty of this work lies in the design of the body state estimator, which combines the advantages of “leg odometry” and dynamic IMU-based state information. Following this, the estimated state is deployed for body motion regulation. Finally, the proposed control structure was implemented on a child-sized bipedal robot and experimentally evaluated.

The rest of this paper is organized as follows.

Section 2 describes the design of the proposed body state estimator through multi-sensor data fusion.

Section 3 reports the feedback control strategy to regulate and stabilize the walking bipedal robot based on the proposed body state estimator.

Section 4 briefly introduces the empirical bipedal robot.

Section 5 reports the experimental evaluation of the estimator and regulator, while

Section 6 concludes the work.

2. The Body State Estimator

The method of generating a nominal CoM trajectory is based on the preview control strategy proposed by Kajita [

35], which links a pre-planned ZMP trajectory

to the CoM motion through the LIPM. Based on the preview control law, once the desired ZMP trajectory is defined, the CoM trajectory can be determined and controlled through the inverse kinematics of the robot using the sensory information from the joint encoders. However, because of the backlash in the mechanism and unmodeled dynamics of the system, the real CoM position is difficult to obtain using only the joint encoders. Consequently, the purpose of developing the multi-sensor-based body state estimator is to obtain a more precise body state and also to act as a reference for the feedback control strategy.

The body state estimator is a key component in this study, which estimated the state as located at a fixed position on the body (i.e., FP). This position is also equal to the CoM when the robot is in the standing posture suggested by the detailed CAD model of the robot. Note that the CoM position varies while the robot walks, but the variation is less than 15 mm according to the CAD model. The state estimator can be further divided into two parts: the translational part and the rotational part. As described in the previous section, the proposed LIPM model-based control strategy only requires the CoM state (i.e., translational only). However, in order to correctly estimate the CoM state, the complete body state estimator, including translation and orientation, is still required because the body orientation is necessary for transforming the raw strapdown IMU readings into those in the inertial frame. These two parts will be described separately below.

On the translational side, since motions in the three spatial directions are independent, the motion model can be decoupled into three discrete and linear KFs, each in charge of motion along with a specific principle axis in the inertial frame. An exemplary presentation of the translational KF model in the fore-aft direction is given below, where the constant acceleration model is utilized in the prediction stage:

where T is the sample period, and

is the estimated translation state of the FP of the body, including position

, velocity

, and acceleration

. The symbols

and

represent the standard process and measurement noise variables given by covariance

and

, respectively, which are assumed to be independent and normally distributed with zero means. The measurement state

includes position

and acceleration

, and the derivation of these two states will be introduced separately in the following paragraphs.

The FP position measurement

is computed based on the leg joint configuration [

16], which means the FP position can be determined using the joint encoders according to the kinematics of the robot [

32]. Since the empirical bipedal robot walks slowly and steadily, it is reasonable to assume that its foot contacts the ground rigidly without any slippage during locomotion. In each stride when the robot is supported by one leg, the robot body configuration with respect to the ground-contact foot can be computed according to the forward kinematic. When the robot is supported by both feet, the relative configuration between the two feet can also be derived based on legged kinematics. Thus, through sequential composition, the body configuration (

i.e., FP) of the robot at its

th stride relative to its initial configuration (

i.e., the “inertial frame”) at all instants can be computed. The quantitative computation can be represented as

where

is landing position, and

is an array composed of leg joint angles, measured directly from motor encoders.

On the rotational side, a quaternion-based extended Kalman filter is built to estimate body orientation, and the estimated state is used to perform gravity compensation and coordinate transformation of the accelerometer readings [

17]. The model can be represented as

where the body state

is composed of the body orientation represented by quaternion

and spatial angular velocity

. The measurement state

is composed of the body orientation represented in pitch, roll, and yaw, and spatial angular velocity

. The symbols

and

represent the standard process and measurement noise variables given by covariance

and

, respectively. Both

and

are non-linear functions, where

is the relation between the previous state and current state under the assumption of constant angular speed motion, and

projects the orientation represented in orientation form onto the measurement body orientation represented in pitch, roll, and yaw, and spatial angular velocity. The flowchart of the quaternion-based extended Kalman filter is depicted in

Figure 1.

The non-linear functions

and

, as well as the linearized matrix

and

, are shown below:

where

is the linearized system matrix,

is the linearized matrix of

. Here, we set

and

, where θ represent the rotation angle and the unit vector

indicates the axis of rotation. Refer to the

Appendix for the components of the matrix

. The details of the quaternion computation can be found in [

36].

Figure 1.

Flowchart of the quaternion-based extended Kalman filter for complete body state estimation.

Figure 1.

Flowchart of the quaternion-based extended Kalman filter for complete body state estimation.

Considering the feedback sensors, a 2-axis inclinometer is used to measure roll and pitch

, and a 3-axis gyro in the IMU is used to obtain angular velocity

. Although the inclinometer has a similar internal structure to the accelerometer, and it indeed uses the gravity vector as the criterion for inclination measurement, the commercial inclinometer is utilized owing to its precision and low noise level. When the robot performs straight walking (

i.e., no yaw motion involved), the yaw measurement

is set to zero in the empirical implementation. When the work extends to the turning motion, the yaw orientation of the robot was set to be the nominal trajectory. With estimated body orientation

by Equation (3) and measured linear acceleration in the body frame

by the 3-axis accelerometer in the IMU, the body linear acceleration in the inertial frame can be derived through coordination transformation and after gravity compensation:

which is used as the measured acceleration in Equation (1). Note that the world coordinate system is set at the beginning of the walk, and the relationship between the body and the world coordinate system in the legged odometry is established by the sequential composition of joint encoder data and leg kinematics. The legged odometry data is further fused with the EKF to estimate the body state.

It is necessary to mention here that the CoM moves during robot locomotion, and this phenomenon occurs on all kinds of multi-legged platforms because the legs are, in general, not massless. The IMU is fixedly mounted on the robot (

i.e., a strapdown IMU) at the position equivalent to the robot CoM when the robot is in standing posture. The complete CAD model of the robot reveals that the CoM varies by less than 15 mm in our walking experiment. When the CoM moves during a walking experiment (the position can be correctly estimated by the joint angles), its translational state can be computed from the IMU translational state plus the effect of angular acceleration (

i.e., can be computed kinematically by the 12-axis IMU mount on the robot [

20]) and angular velocity (

i.e., by the gyro). Because the robot body is programmed to move without orientation variation during walking, the angular state is comparably small. We found that the acceleration difference between the CoM and the IMU is not significant. In addition, we found that the acceleration computation that occurs as a consequence of this position shifting is noisy because, in our case, the distance between the two positions is small and the angular motion is not obvious. As a result, we found that the state difference between the FP and actual CoM in our application was small and decide to use the estimation at the FP as the reference position for feedback, rather than to precisely estimate the CoM position in our current imprecisely built robot.

To offer a brief summary of this section, we intend to use the body state but not the ZMP state as the sensory information for feedback control. Therefore, creating a robust body state estimator is an important task that must be completed before the implementation of the control strategy. The idea of sensor fusion is to combine the advantages of “leg odometry” and dynamic IMU-based state information. For the bipedal walking robot with foot contact, the sequential composition of the forward/inverse kinematics with joint encoders would ideally be able to deliver non-drift body pose information. However, the computation on the physical robot is compromised by imperfect empirical conditions such as backlash, assembly inaccuracy, rigidity of the structure,

etc. One obvious example is that when the robot is programmed to pose in double stance according to kinematics, there is a foot positioning error on the physical robot of up to 2 cm, so that one of the feet is not able to flatly contact the ground. In addition, when the foot slips, the composition has some drift error, and this is the reason we called it “legged odometry.” In contrast to the encoders which provide information on the position state, the IMU provides the derivative state or the double derivative state, which captures the dynamics of the robot better. However, the IMU-only system is not good for position or orientation estimation (

i.e., drift problem) because of unobservability. As a result, the body state can be better estimated by fusing both encoder- and IMU data. For each DOF, there are two sensory inputs for fusion. Translational DOFs use encoders and accelerometers. Pitch and roll use inclinometers and gyros. Ideally, the bipedal kinematics based on encoder data can yield body orientation as well, and this was indeed our first method for yielding orientation for fusion. However, the encoders’ orientation estimation on the physical robot was very bad (about 3 degrees), resulting from imperfect empirical conditions such as backlash, assembly inaccuracy, rigidity of the structure,

etc. Therefore, the inclinometer was utilized, which provided better estimation on the robot. Though the inclinometer is not good in a dynamic environment because it is an accelerometer-based device, the sensing accuracy is tolerable for our current walking experiment. Furthermore, the raw data coming from each sensor (encoders and accelerometers or inclinometer and gyroscopes) are not correctly related through derivation because both sensors are noisy. This is the major reason why we use the (extended) Kalman filter, which fuses the data and provides the correctly related estimation. Finally,

Figure 2 shows the overall information flow of the proposed body state estimator.

Figure 2.

Structure of the body state estimator.

Figure 2.

Structure of the body state estimator.

3. Multi-Sensor Feedback Control Algorithm for the Stable Walking of a Bipedal Robot

Theoretically, by utilizing the nominal trajectory according to the preview control law, the corresponding CoM trajectory can be derived based on the preplanned ZMP trajectory. Then, the position of each joint angle at each point in time can be obtained via inverse kinematics of the robot. However, in reality, the empirical CoM trajectory of the robot does not follow the planned version owing to many uncertainties, such as backlash in the mechanism, un-modeled dynamics, disturbance, discrepancy between robot and LIPM, etc. To remedy this unwanted phenomenon, a real-time controller is implemented to regulate the actual body trajectory of the robot to approach the nominal one and further improve stability while the robot is in motion. This controller relies on multi-sensor information, including joint angles (from encoders), acceleration (from IMU), angular velocity (from IMU), and force and torque of the feet (from force sensors). This multi-sensor feedback controller is composed of two parts: a body state feedback control algorithm and a foot posture corrector. These two parts are described separately below.

3.1. Body State Feedback Control Algorithm

In the proposed body state feedback control, the state of the fixed position (

i.e., FP) estimated by the body state estimator was employed to modify the difference between the current FP position and the nominal one. Since the displacement modification is directly added to the FP position, a damping controller is chosen to smooth position modification [

34]. While a PID controller was also tried, it caused chattering in simulations and preliminary experiments. By utilizing the estimator that provides the empirical FP trajectory

described in

Section 2 and the computed nominal FP trajectory

described in Equation (2), the adjustment of FP displacement can be set according to the following:

The damping controller can be separated into two parts. The first part is a position controller (i.e., ), where represents the nominal FP trajectory derived based on the preview control law, represents the estimated FP position, and is the proportional gain. The second part functions as a damper to smoothen the response of the controller, where the variation of the position (i.e., ) can be numerically derived through the first-order differential equation. Thus, is a time constant. Finally, the calculated is added to the preview controller by equation to compensate for the discrepancy between the estimated FP position and nominal FP position. Thus, if the gain is small, the effect of the real-time adjustment is minor and the robot would move according to its nominal motion. In contrast, if is too large, the compensation may overcorrect the robot and the robot may unstably overshoot in the compensated direction. If is small, the effect of modification decreases quickly. On the other hand, if is large, it causes a slow response and the robot may again unstably move toward the over-compensated direction. With the control strategy described in Equation (6), the position error would be regulated to improve the walking performance of the robot. Note that the strategy of regulating the FP trajectory along with the lateral y-direction is implemented independently and in the same manner.

4. The Bipedal Robot and Experiment Setup

The child-sized bipedal robot shown in

Figure 6 is utilized for experimental evaluation of the proposed body state estimator and the multi-sensor feedback control algorithm. The robot is 1.27 m in height and 78 kg in mass, and it has 6 active degrees of freedom in each leg. A real-time (RT) embedded control system is installed on the robot as the main computation power, including a PXI chassis (PXI-1031DC, National Instruments (NI), Austin, TX, USA), a PXI embedded controller (PXI-8110, NI), a digital I/O board with programmable FPGA (PXI-7813R, NI), and an expansion chassis (NI 9151, NI) with an A/O module (NI 9264, NI) and an A/I module (NI 9205, NI). All active joints are driven by brushed DC motors (RE-40, Maxon, Sachseln, Switzerland) with integrated magnetic encoders for joint angle measurement. The robot has an IMU comprising one 3-axis accelerometer (ADXL330, Analog Device, Norwood, MA, USA) and three 1-axis rate gyros (ADXRS610, Analog Device) mounted at a fixed point on the body, which is also equal to the CoM when the robot is in the standing posture. It also has a 2-axis inclinometer (SCA100T, VTI Instrument, Irvine, CA, USA) for body inclination measurement. In addition, the robot has two 6-axis force/torque transducers (IFS-90M40A100-I50-ANA, NITTA, Suwanee, GA, USA) mounted on the ankles. The software in the RT processor and the FPGA run with 1 kHz and 10 kHz sampling rates, respectively.

Figure 6.

Picture of the child-sized bipedal robot that serves as the platform for experimental evaluation.

Figure 6.

Picture of the child-sized bipedal robot that serves as the platform for experimental evaluation.

In order to quantitatively evaluate the performance of the estimator and the controller, several experiments were executed whereby the robot ran within the ground truth measurement system (GTMS) as shown in

Figure 7, which is composed of two 500 Hz high-speed cameras (A504k, Basler, Ahrensburg, Germany) and one 6-axis force plate (FP4060-07, Bertec, Columbus, OH, USA). The high-speed cameras are mounted on the top right and left sides of the runway. When a bright marker is captured by both cameras, its spatial coordinate can be reconstructed. By installing three bright LEDs on top of the robot, the spatial 6-DOF body state can be derived according to the geometrical relation between the markers and the robot’s fixed body position. The 6-axis force plate is mounted in the middle of the runway to capture the force interaction between the ground and the robot. More details can be found here [

37].

Figure 7.

Experimental setup for bipedal walking.

Figure 7.

Experimental setup for bipedal walking.

6. Conclusions

In this paper, a sensor data fusion algorithm was developed to obtain the correct body motion for a bipedal robot during walking. In order to lower the measurement error and filter the signal noise, an EKF-based body state estimator was designed with sensory information from the joint encoders, the IMU, and the inclinometer. By employing this body state estimator, the true body state of the humanoid robot can be acquired, including translation at a specific fixed position (i.e., FP) on the body and orientation. Later, the proposed body state estimator was employed as a feedback control strategy to further improve the stability of the bipedal walking motion by regulating its body motion in real time. In addition, a foot posture corrector was introduced to reduce the extraneous torque resulting from the complex contact conditions between the robot’s soles and the ground. The performance of the estimator and the control strategy are assessed under the ground truth measurement system. Though there are some imperfections and disturbances, the tracking error arises from the discrepancy between the LIPM-based preview control and the real robot system. However, the proposed controller can still achieve some improvements compared with the open-loop strategy. First, the performance of the proposed body state estimator was evaluated by comparing its estimated FP trajectory with the empirical robot’s FP trajectory. The averaged RMS errors are 4.38 mm in the fore-aft direction and 6.49 mm in the lateral direction, which show improvements of 35% and 8%, respectively, compared to the nominal trajectory. Second, the body state feedback control algorithm is employed to regulate the actual FP position relative to the desired one. The performance of the controller is evaluated by the RMS errors of the robot’s FP and ZMP trajectories to the nominal FP and ZMP trajectories. With the proposed body state feedback control structure, the averaged RMS errors of the FP position improve 23% and 37%, respectively, in comparison with the robot operated by the open-loop method. This indicates that the proposed body regulation strategy is functional and stabilizes the robot’s walking motion. Finally, the proposed foot posture corrector was added to the system. During a turning task, the averaged RMS errors of the ZMP positions improve 42% and 5% respectively in comparison with the robot operated by the open-loop method.

We are in the process of developing a whole-body controller to obtain a more accurate CoM state by fusing force information and by using multiple models to cover the dynamics of the robot. We also plan to improve the control structure, which could then adjust the walking pattern in real time to realize reliable walking suitable for a wider range of environmental settings. At the same time, it would be feasible to carry out theoretical work on a combined controller which simultaneously takes the CoM and ZMP trajectories into account.