Intensity Variation Normalization for Finger Vein Recognition Using Guided Filter Based Singe Scale Retinex

Abstract

1. Introduction

2. Intensity Variation

3. Proposed Intensity Variation Normalization Method

3.1. Guided Filter

3.2. Single Scale Retinex Algorithm

3.3. Proposed GFSSR

4. Experimental Results

4.1. Dataset

4.2. Investigation of Optimal Parameters

4.3. Comparison of Image Enhancement

4.4. Comparison of Matching Accuracy

4.4.1. Experimental Results on MMCBNU_6000

| Data | DWT [28] (EER/improved ratio) | LBP [29] (EER/improved ratio) | LPQ [30] (EER/improved ratio) |
|---|---|---|---|
| Original image | 3.93% | 2.20% | 2.33% |
| IN | 3.36%/14.5% | 6.67%/−203.2% | 6.78%/−191.0% |
| SR | 3.71%/5.6% | 2.26%/−2.7% | 2.03%/12.9% |
| SSR | 5.17%/−31.6% | 1.60%/27.3% | 3.27%/−40.3% |
| HE | 4.77%/−21.4% | 2.18%/1.0% | 2.51%/−7.7% |
| Proposed GFSSR | 2.57%/34.6% | 1.50%/31.8% | 1.56%/33.0% |
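The improved ratios in the table above appear to be the relative EER reduction with respect to the original image, i.e. (EER_original − EER_processed) / EER_original. This formula is an assumption inferred from the tabulated values, not a definition quoted from the text, and the function and variable names below are hypothetical. A minimal Python sketch that recomputes the DWT column under that assumption:

```python
# Illustrative sketch: the formula is inferred from the table values and is
# an assumption, not a definition taken from the paper.

def improved_ratio(eer_original: float, eer_processed: float) -> float:
    """Relative EER reduction in percent; a negative value means the EER got worse."""
    return (eer_original - eer_processed) / eer_original * 100.0


# DWT-column EERs (in percent) copied from the MMCBNU_6000 table.
EER_ORIGINAL_DWT = 3.93
EER_DWT = {
    "IN": 3.36,
    "SR": 3.71,
    "SSR": 5.17,
    "HE": 4.77,
    "Proposed GFSSR": 2.57,
}

if __name__ == "__main__":
    for method, eer in EER_DWT.items():
        ratio = improved_ratio(EER_ORIGINAL_DWT, eer)
        print(f"{method}: {eer:.2f}% / {ratio:.1f}%")
```

Under this reading, a positive ratio means the preprocessing lowered the EER and a negative ratio means it raised it, which matches the signs reported for IN, SSR, and HE.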

4.4.2. Experimental Results on UTFVP

| Data | DWT [28] (EER/improved ratio) | LBP [29] (EER/improved ratio) | LPQ [30] (EER/improved ratio) |
|---|---|---|---|
| Original image | 12.09% | 8.62% | 8.06% |
| IN | 9.16%/24.2% | 11.26%/−30.6% | 5.83%/27.7% |
| SR | 12.52%/−3.6% | 7.50%/13.0% | 7.78%/3.5% |
| SSR | 14.71%/−21.7% | 8.32%/3.5% | 8.75%/−8.6% |
| HE | 14.32%/−18.4% | 8.65%/−0.3% | 10.01%/−24.2% |
| Proposed GFSSR | 8.88%/26.6% | 5.70%/33.9% | 4.31%/46.5% |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Lu, Y.; Yoon, S.; Xie, S.J.; Yang, J.C.; Wang, Z.H.; Park, D.S. Efficient descriptor of histogram of salient edge orientation map for finger vein recognition. Appl. Opt. 2014, 53, 4585–4593.

- Lu, Y.; Yoon, S.; Xie, S.J.; Yang, J.C.; Wang, Z.H.; Park, D.S. Finger vein recognition using generalized local line binary pattern. KSII Trans. Internet Inf. 2014, 8, 1766–1784.

- Lu, Y.; Yoon, S.; Park, D.S. Finger vein identification system using two cameras. Electron. Lett. 2014, 50, 1591–1593.

- Mohd Asaari, M.S.; Suandi, S.A.; Rosdi, B.A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Syst. Appl. 2014, 41, 3367–3382.

- Yang, J.F.; Shi, Y.H. Towards finger-vein image restoration and enhancement for finger-vein recognition. Inf. Sci. 2014, 268, 33–52.

- Raghavendra, R.; Raja, K.; Jayachander, S.; Busch, C. A low-cost multimodal biometric sensor to capture finger vein and fingerprint. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–7.

- Xi, X.M.; Yang, G.P.; Yin, Y.L.; Meng, X.J. Finger vein recognition with personalized feature selection. Sensors 2013, 13, 11243–11259.

- Liu, F.; Yang, G.P.; Yin, Y.L.; Wang, S.Q. Singular value decomposition based minutiae matching method for finger vein recognition. Neurocomputing 2014, 145, 75–89.

- Miura, N.; Nagasaka, A.; Miyatake, T. Feature extraction of finger-vein patterns based on repeated line tracking and its application to personal identification. Mach. Vis. Appl. 2004, 15, 194–203.

- Xie, S.J.; Yoon, S.; Yang, J.C.; Lu, Y.; Park, D.S.; Zhou, B. Feature component-based extreme learning machines for finger vein recognition. Cogn. Comput. 2014, 6, 446–461.

- Kumar, A.; Zhou, Y.B. Human identification using finger images. IEEE Trans. Image Process. 2012, 21, 2228–2244.

- Kim, H.-G.; Lee, E.; Yoon, G.-J.; Yang, S.-D.; Lee, E.; Yoon, S. Illumination normalization for SIFT based finger vein authentication. In Advances in Visual Computing; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Fowlkes, C., Wang, S., Choi, M.-H., Mantler, S., Schulze, J., Acevedo, D., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7432, pp. 21–30.

- Yang, J.F.; Zhang, B.; Shi, Y.H. Scattering removal for finger-vein image restoration. Sensors 2012, 12, 3627–3640.

- Pi, W.; Shin, J.; Park, D.S. An effective quality improvement approach for low quality finger vein image. In Proceedings of the 2010 International Conference on Electronics and Information Engineering (ICEIE), Kyoto, Japan, 1–3 August 2010; pp. 424–427.

- Yang, J.F.; Shi, Y.H. Finger-vein ROI localization and vein ridge enhancement. Pattern Recogn. Lett. 2012, 33, 1569–1579.

- Yang, J.F.; Yang, J.L. Multi-channel Gabor filter design for finger-vein image enhancement. In Proceedings of the Fifth International Conference on Image and Graphics, Xi’an, China, 20–23 September 2009; pp. 87–91.

- Park, Y.H.; Park, K.R. Image quality enhancement using the direction and thickness of vein lines for finger-vein recognition. Int. J. Adv. Robot. Syst. 2012, 9, 1–10.

- Lee, E.C.; Park, K.R. Image restoration of skin scattering and optical blurring for finger vein recognition. Opt. Lasers Eng. 2011, 49, 816–828.

- Yang, J.F.; Wang, J.J. Finger-vein image restoration considering skin layer structure. In Proceedings of the 2011 International Conference on Hand-Based Biometrics (ICHB), Hong Kong, China, 17–18 November 2011; pp. 1–5.

- He, K.M.; Sun, J.; Tang, X.O. Guided image filtering. IEEE Trans. Pattern Anal. 2013, 35, 1397–1409.

- Lu, Y.; Xie, S.J.X.; Yoon, S.; Wang, Z.; Park, D.S. An available database for the research of finger vein recognition. In Proceedings of the 6th International Congress on Image and Signal Processing, Hangzhou, China, 16–18 December 2013; pp. 386–392.

- Ton, B.T.; Veldhuis, R.N.J. A high quality finger vascular pattern dataset collected using a custom designed capturing device. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013; pp. 1–5.

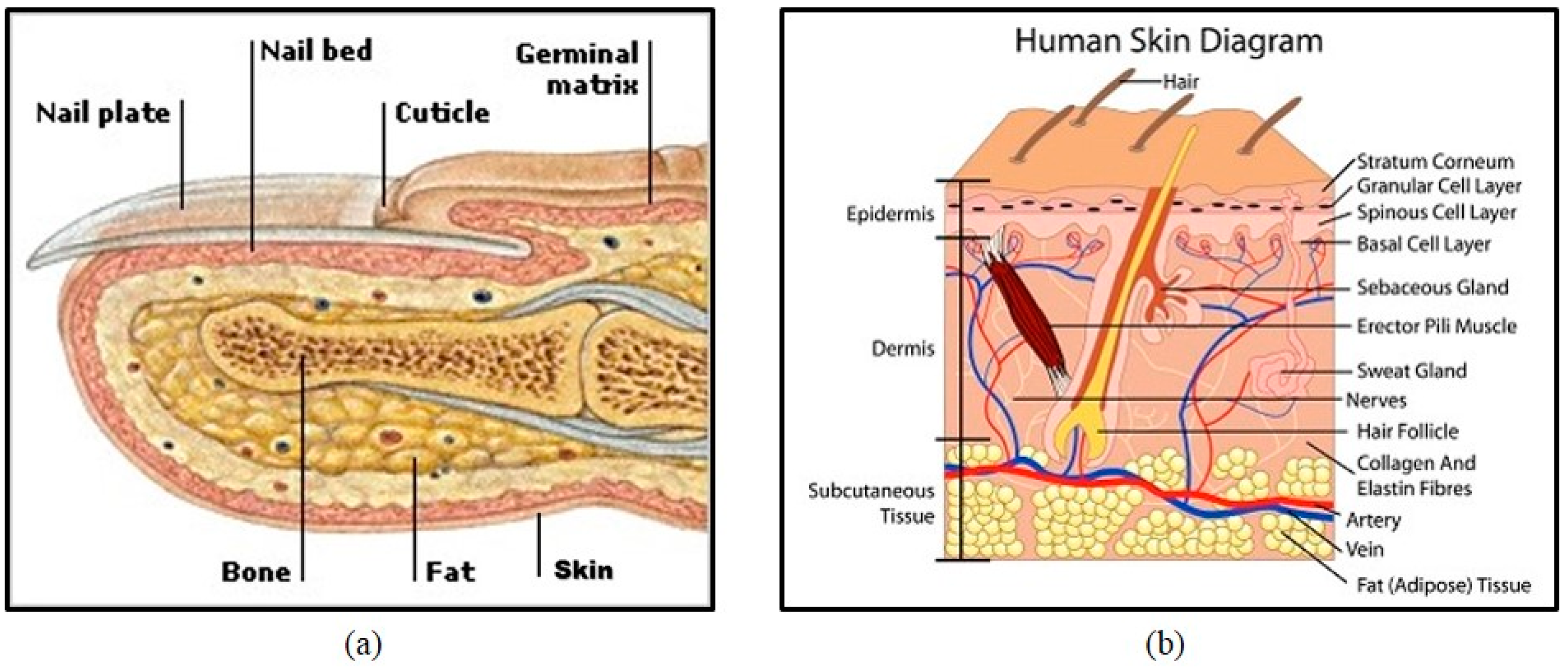

- Finger Cross-section Image. Available online: http://www.factmonster.com/dk/science/encyclopedia/skin.html (accessed on 10 July 2015).

- Skin Diagram Images. Available online: http://www.Bloomingcomplexions.Com/skin-layers/ (accessed on 10 July 2015).

- Jobson, D.J.; Rahman, Z.U.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Chen, W.; Er, M.J.; Wu, S.Q. Illumination compensation and normalization for robust face recognition using discrete cosine transform in logarithm domain. IEEE Trans. Syst. Man Cybern. B 2006, 36, 458–466.

- Lu, Y.; Xie, S.J.; Yoon, S.; Yang, J.C.; Park, D.S. Robust finger vein ROI localization based on flexible segmentation. Sensors 2013, 13, 14339–14366.

- Nguyen, D.T.; Park, Y.H.; Shin, K.Y.; Park, K.R. New finger-vein recognition method based on image quality assessment. KSII Trans. Internet Inf. 2013, 7, 347–365.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. 2002, 21, 971–987.

- Rahtu, E.; Heikkila, J.; Ojansivu, V.; Ahonen, T. Local phase quantization for blur-insensitive image analysis. Image Vis. Comput. 2012, 30, 501–512.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xie, S.J.; Lu, Y.; Yoon, S.; Yang, J.; Park, D.S. Intensity Variation Normalization for Finger Vein Recognition Using Guided Filter Based Singe Scale Retinex. Sensors 2015, 15, 17089-17105. https://doi.org/10.3390/s150717089
