Moving Object Detection on a Vehicle Mounted Back-Up Camera

Abstract

1. Introduction

2. Background Works

2.1. Background Subtraction

2.2. Optical Flow

3. Moving Object Detection

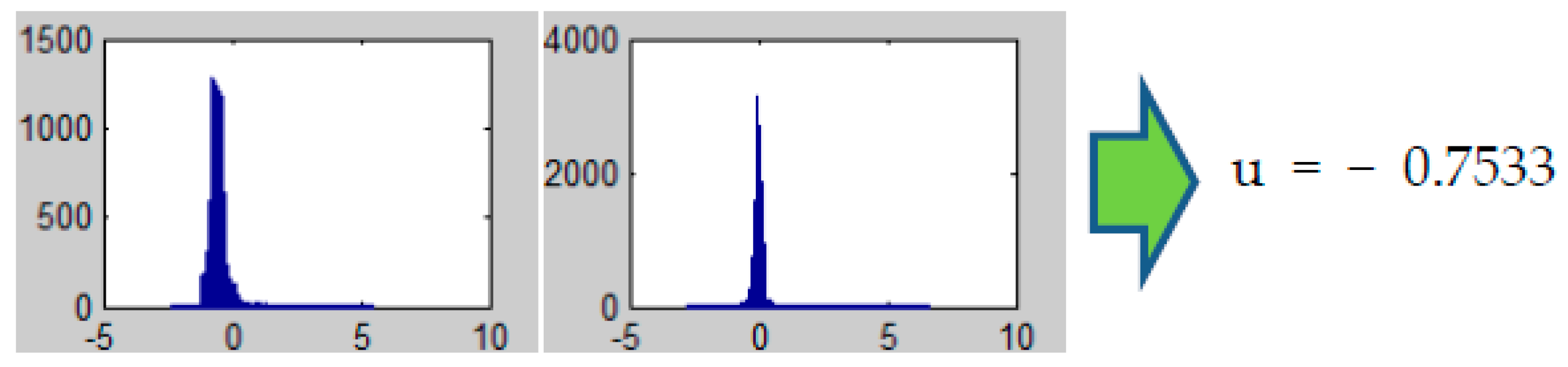

3.1. Background Motion Compensation

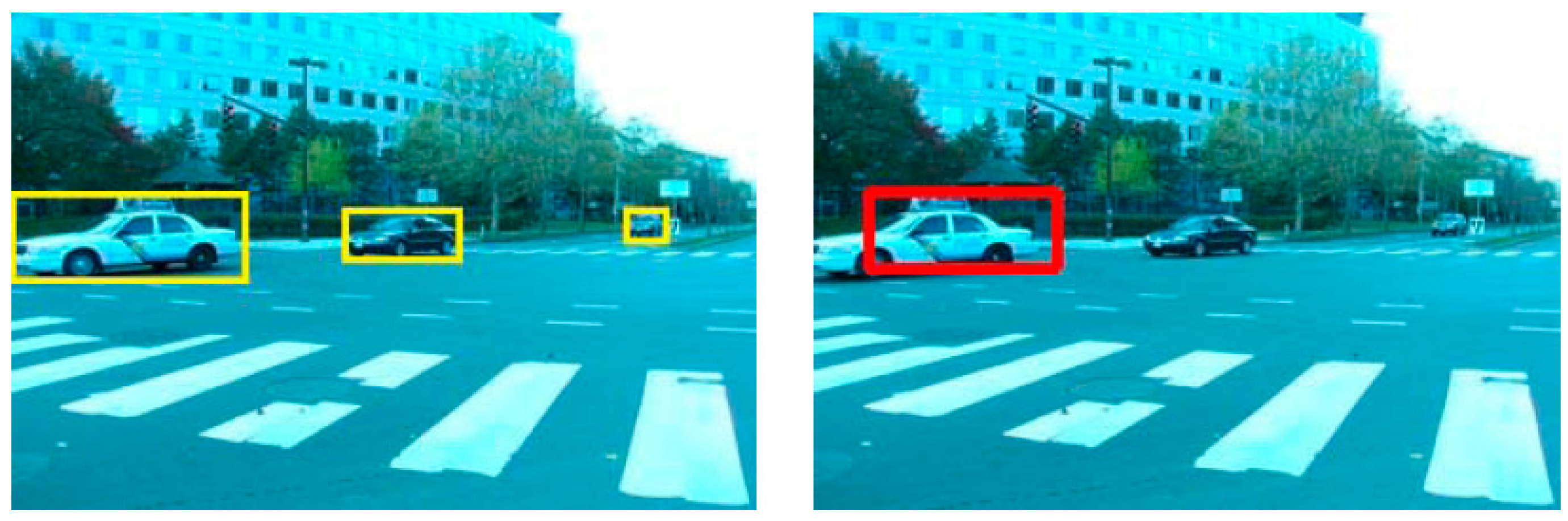

3.2. Object Detection

4. Experimental Results

| Algorithm | Precision | Recall |
|---|---|---|
| Rank-constraint 1 [26] | 0.83 | 0.99 |
| Rank-constraint 2 [18] | 0.95 | 0.92 |
| Suggested method | 0.98 | 0.78 |
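
For reference, the precision and recall columns above follow the standard definitions: precision is the fraction of reported detections that are correct, and recall is the fraction of true moving objects that are detected. The sketch below only illustrates those definitions. The function name `precision_recall` and the counts passed to it are hypothetical and are not taken from the experiments reported here.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall) from detection counts.

    tp: true positives  (moving objects correctly detected)
    fp: false positives (detections with no moving object)
    fn: false negatives (moving objects that were missed)
    """
    # Guard against division by zero when there are no detections
    # or no ground-truth objects.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, chosen only for illustration (not from the paper).
p, r = precision_recall(tp=90, fp=10, fn=30)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.90 recall=0.75
```

A detector that rarely raises false alarms but misses some objects shows the same pattern as the last row of the table: high precision with lower recall.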

| Scenarios |  |  |  |  |  |
|---|---|---|---|---|---|
| False-Positives | 0 | 2 | 2 | 0 | 3 |
| False-Negatives | 0 | 0 | 1 | 4 | 0 |

| Scenarios |  |  |  |  |  |
|---|---|---|---|---|---|
| False-Positives | 1 | 0 | 2 | 0 | 1 |
| False-Negatives | 1 | 0 | 0 | 0 | 1 |

| Scenarios |  |  |  |  |  |
|---|---|---|---|---|---|
| False-Positives | 0 | 0 | 0 | 1 | 0 |
| False-Negatives | 0 | 1 | 2 | 0 | 0 |

| Scenarios |  |  |  |  |
|---|---|---|---|---|
| False-Positives | 0 | 0 | 0 | 0 |
| False-Negatives | 0 | 1 | 0 | 0 |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Fleming, B. New automotive electronics technologies. IEEE Veh. Technol. Mag. 2008, 3, 10–12.

- Jiménez-Pinto, J.; Torres-Torriti, M. Optical flow and driver’s kinematics analysis for state of alert sensing. Sensors 2013, 13, 4225–4257.

- Llamazares, A.; Ivan, V.; Molinos, E.; Ocaña, M.; Vijayakumar, S. Dynamic obstacle avoidance using bayesian occupancy filter and approximate inference. Sensors 2013, 13, 2929–2944.

- Doğan, S.; Temiz, M.S.; Külür, S. Real time speed estimation of moving vehicles from side view images from an uncalibrated video camera. Sensors 2010, 10, 4805–4824.

- Zhan, C.; Duan, X.; Xu, S.; Song, Z.; Luo, M. An improved moving object detection algorithm based on frame difference and edge detection. In Proceedings of the Fourth International Conference on Image and Graphics, Chengdu, China, 22–24 August 2007.

- McFarlane, N.J.B.; Schofield, C.P. Segmentation and tracking of piglets in images. Mach. Vis. Appl. 1995, 8, 187–193.

- Friedman, N.; Russell, S. Image segmentation in video sequences: a probabilistic approach. In Proceedings of the 13th Conference on Uncertainty in Artificial Intelligence, Providence, RI, USA, 1–3 August 1997.

- Stauffer, C.; Grimson, W.E.L. Adaptive background mixture models for real-time tracking. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999.

- Poggio, T.; Torre, V.; Koch, C. Computational vision and regularization theory. Nature 1985, 317, 314–319.

- Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. 2006, 38, 1–45.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 25 June 2005.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Lienhart, R.; Kuranov, A.; Pisarevsky, V. Empirical Analysis of Detection Cascades of Boosted Classifiers for Rapid Object Detection. In Pattern Recognition—25th DAGM Symposium, Magdeburg, Germany, September 10–12, 2003; Springer Berlin Heidelberg: Berlin, Germany, 2003.

- Huang, S.C.; Chen, B.H. Highly accurate moving object detection in variable bit rate video-based traffic monitoring systems. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1920–1931.

- Huang, S.C.; Chen, B.H. Automatic moving object extraction through a real-world variable-bandwidth network for traffic monitoring systems. IEEE Trans. Ind. Electron. 2013, 61, 2099–2112.

- Cheng, F.C.; Huang, S.C.; Chen, B.H. A hybrid background subtraction method with background and foreground candidates detection. ACM Trans. Intell. Syst. Technol. 2015, 7, 1–14.

- Guo, J.M.; Hsia, C.H.; Liu, Y.F.; Shih, M.H.; Chang, C.H.; Wu, J.Y. Fast background subtraction based on a multilayer codebook model for moving object detection. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1809–1821.

- Zhou, X.; Yang, C.; Yu, W. Moving object detection by detecting contiguous outliers in the low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 597–610.

- Huang, S.C.; Do, B.H. Radial basis function based neural network for motion detection in dynamic scenes. IEEE Trans. Cybern. 2013, 44, 114–125.

- Bouwmans, T. Traditional and recent approaches in background modeling for foreground detection: An overview. Comput. Sci. Rev. 2014, 11–12, 31–66.

- Sharmin, N.; Brad, R. Optimal filter estimation for Lucas-Kanade optical flow. Sensors 2012, 12, 12694–12709.

- Black, M.J.; Anandan, P. Robust dynamic motion estimation over time. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Maui, HI, USA, 3–6 June 1991.

- Brox, T.; Bruhn, A.; Papenberg, N.; Weickert, J. High Accuracy Optical Flow Estimation Based on a Theory for Warping. In Computer Vision—ECCV 2004: 8th European Conference on Computer Vision, Prague, Czech Republic, May 11–14, 2004. Proceedings, Part IV; Springer Berlin Heidelberg: Berlin, Germany, 2004; pp. 25–36.

- Jepson, A.; Black, M.J. Mixture models for optical flow computation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 15–17 June 1993.

- Kim, J.; Wang, X.; Wang, H.; Zhu, C.; Kim, D. Fast moving object detection with non-stationary background. Multimedia Tools Appl. 2013, 67, 311–335.

- Sheikh, Y.; Javed, O.; Kanade, T. Background subtraction for freely moving cameras. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009.

- Black, M.J.; Anandan, P. The robust estimation of multiple motions: Parametric and piecewise-smooth flow fields. Comput. Vis. Image Underst. 1996, 63, 75–104.

- Sand, P.; Teller, S. Particle Video: Long-Range Motion Estimation Using Point Trajectories. Int. J. Comput. Vis. 2008, 80, 72–91.

- Guo, G.; Kaye, M.E.; Zhang, Y. Enhancement of Gaussian background modelling algorithm for moving object detection & its implementation on FPGA. In Proceedings of the IEEE 28th Canadian Conference on Electrical and Computer Engineering, Halifax, NS, Canada, 3–6 May 2015.

- Botella, G.; Martín, J.A.; Santos, M.; Meyer-Baese, U. FPGA-based multimodal embedded sensor system integrating low- and mid-level vision. Sensors 2011, 11, 8164–8179.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, D.-S.; Kwon, J. Moving Object Detection on a Vehicle Mounted Back-Up Camera. Sensors 2016, 16, 23. https://doi.org/10.3390/s16010023
