1. Introduction

Potato is now regarded as the fourth most important food crop in the world after wheat, rice and maize (corn), owing to its high yield and nutritive value [1]. However, the quality and nutrition of potato tubers can be adversely affected by mechanical damage, which occurs frequently along the handling chain from harvest, storage and transport to packaging. Skinning injury is related to excoriation of the potato skin. The potato periderm is composed of three cell layers: phellem, phellogen and phelloderm [2]. Among them, the phellem, which consists of well-organized suberized cells, is referred to as the skin. According to Lulai [3], resistance to skinning injury is determined by phellem (skin) tensile-related fractures and phellogen shear-related fractures, where the force required to fracture the phellogen cell walls is dominant. Skinning injury is a kind of superficial damage that can result in fracture of the phellogen cell walls of the native periderm and loss of the protective layer of phellem cells [4]. It not only causes discoloration of the wounded area but also provides sites for infection by pathogens [5], and it can increase water loss and wastage during storage [6]. It has been reported as a common, persistent and costly problem for the potato industry [4].

Machine vision technology has been attracting increasing attention for inspecting the quality and safety of a variety of food and agricultural products [7]. Visible imaging can be regarded as a traditional machine vision technology working in the range of visible wavelengths (380–780 nm). Color and texture are two sensory quality attributes that are frequently used in external quality inspection and grading. As the basic visual information in an image corresponding to human vision, color is viewed as the most elementary information stored in pixels [8]. Texture analysis aims to discriminate different patterns in images by obtaining the variance of intensity values across pixels or by extracting the dependency of intensity values between pixels and their neighboring pixels [9,10]. Color and texture features have been used in potato external quality inspection. Marique et al. [11] developed a procedure to process and segment potato images using Kohonen’s self-organizing map (SOM). RGB values of each pixel were fed into the model to discriminate between pixels associated with either healthy or bruised parts of potato tubers. They found that bruises that were very dissimilar in appearance were correctly identified, and some particular defects such as green spots could be located as well. On a broader scale, Ebrahimi et al. [12] suggested that the difference between the red and green components of RGB space was effective for detecting greening in potatoes. Color and texture features are often combined to improve detection. Barnes et al. [13] used an adaptive boosting algorithm (AdaBoost) to discriminate between blemishes and non-blemishes based on both the color and texture features of the region surrounding a given pixel. To detect external defects on potatoes, Moallem et al. [14] extracted the RGB components of each pixel and co-occurrence texture features from the grey-level component of color-space images and fed them into several supervised classifiers. Results showed that the support vector machine (SVM) achieved higher performance for potato defect detection. Building on the above research, color and texture features in this study were extracted and fed into a least squares support vector machine (LS-SVM) to recognize skinning injury.
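As an illustration of the kind of color and co-occurrence texture features discussed above, the following minimal sketch computes mean RGB values, the red-green difference, and three grey-level co-occurrence matrix (GLCM) statistics for a region of interest. It is not the feature set used in this study or in the cited works: the function names, the 8-level quantization, the single horizontal pixel offset and the choice of contrast, energy and homogeneity are all assumptions made for the example.

```python
import numpy as np

def color_features(roi_rgb):
    """Mean R, G, B and mean R-G difference of an ROI (H x W x 3, uint8)."""
    roi = roi_rgb.astype(np.float64).reshape(-1, 3)
    mean_r, mean_g, mean_b = roi.mean(axis=0)
    return np.array([mean_r, mean_g, mean_b, mean_r - mean_g])

def to_gray(roi_rgb):
    """Luminance-weighted grey level (ITU-R BT.601 weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    return roi_rgb.astype(np.float64) @ weights

def glcm(gray, levels=8, dx=1, dy=0):
    """Normalized, symmetric co-occurrence matrix for one pixel offset.

    `levels`, `dx` and `dy` are illustrative choices, not values from the paper.
    """
    # Quantize 0..255 intensities into `levels` bins.
    q = (gray.astype(np.int64) * levels) // 256
    h, w = q.shape
    first = q[0:h - dy, 0:w - dx].ravel()   # reference pixels
    second = q[dy:h, dx:w].ravel()          # neighbors at offset (dy, dx)
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (first, second), 1.0)      # count co-occurring level pairs
    m = m + m.T                             # make the matrix symmetric
    return m / m.sum()

def texture_features(gray, levels=8):
    """Contrast, energy and homogeneity of the GLCM."""
    p = glcm(gray, levels)
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    energy = np.sum(p ** 2)
    homogeneity = np.sum(p / (1.0 + np.abs(i - j)))
    return np.array([contrast, energy, homogeneity])
```

For a given ROI, `np.concatenate([color_features(roi), texture_features(to_gray(roi))])` yields one feature vector that could then be passed to a classifier.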

Biospeckle imaging is an emerging non-destructive method for evaluating the vitality of biomaterials. It is based on the biospeckle phenomenon that arises when a biomaterial is illuminated by coherent light: the backscattered light displays granular patterns, i.e., randomly distributed, time-dependent light and dark spots on a CCD (Charge Coupled Device) sensor. Because it reflects surface information and particle movement at the cellular/sub-cellular level, it has found its way into various agro-product and food detection applications [15,16,17,18]. A previous study in our laboratory also confirmed the feasibility of this non-destructive method for defect and stem/calyx discrimination [19]. Zdunek et al. [15] hypothesized that light propagation and biospeckle activity (BA) could be affected because red laser light (670 nm) is absorbed by chlorophyll. They investigated the potential interrelationships between BA and chlorophyll content in apples, and results showed that BA decreased linearly with increasing chlorophyll content. Alves et al. [17] studied the correlation between particular phenomena (moisture content, respiration rate, water activity, and mass loss changes) and the activity observed in fresh-cut carrots. They concluded that it was viable to monitor the respiration process in fresh-cut carrots and assign a spectral signature to their water content and respiration. Arefi et al. [20] developed classification models based on biospeckle imaging to distinguish mealy from non-mealy apples. Results showed that BA was higher for fresh apples than for semi-mealy and mealy samples. Skinning injury results in compositional and structural changes in the wounded area, which may serve as the foundation for BA monitoring and skinning injury classification.
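One widely used way to quantify BA from a sequence of speckle frames is the inertia moment (IM) of the time history of speckle pattern (THSP), which the conclusions of this paper also refer to. The sketch below shows the general idea under stated assumptions: the frame stack layout `(T, H, W)`, the choice of a single image row, and the function names are hypothetical and do not reproduce the acquisition or processing settings of this study.

```python
import numpy as np

def thsp(frames, row):
    """Build a THSP by following one image row through time.

    frames: array of shape (T, H, W), 8-bit grey levels (assumed layout).
    Returns an array of shape (W, T): one line per pixel, one column per frame.
    """
    return frames[:, row, :].T

def inertia_moment(thsp_img, levels=256):
    """Inertia moment of the row-normalized co-occurrence matrix of a THSP."""
    t = thsp_img.astype(np.int64)
    before = t[:, :-1].ravel()   # intensity at time k
    after = t[:, 1:].ravel()     # intensity of the same pixel at time k + 1
    com = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(com, (before, after), 1.0)   # count transitions i -> j
    # Normalize each row so that it sums to 1; empty rows stay at zero.
    row_sums = com.sum(axis=1, keepdims=True)
    norm = np.divide(com, row_sums, out=np.zeros_like(com), where=row_sums > 0)
    i, j = np.indices(com.shape)
    # Transitions far from the diagonal (large intensity jumps) weigh more.
    return float(np.sum(norm * (i - j) ** 2))
```

A static scene gives an IM of zero because every transition lies on the diagonal of the co-occurrence matrix, whereas stronger temporal fluctuation of the speckle grains pushes counts away from the diagonal and raises the IM.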

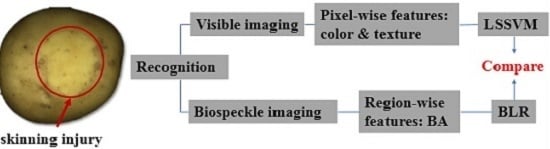

Although several studies have been conducted to uncover the mechanism of induction and regulation of skinning injury [21,22,23], limited progress has been made in skinning injury detection, to our knowledge. The objective of this research was to identify skinning injury on potatoes using two CCD-based methods, i.e., visible imaging and biospeckle imaging. Pixel-wise features including color and texture were derived from visible imaging, while the calculation of the region-wise BA feature based on biospeckle imaging is described in detail. Pixel- and region-wise features were fed into LS-SVM and binary logistic regression (BLR), respectively, to conduct classification. Finally, the two methods were compared in recognizing skinning injury.
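To make the two classifiers named above concrete, the following sketch implements a basic LS-SVM classifier (solving the standard linear system of the least squares formulation with an RBF kernel) and a single-feature binary logistic regression fitted by gradient descent. It is only an illustration: the hyperparameters `gamma`, `sigma`, `lr` and `n_iter`, the function names, and the fitting procedure are assumptions, not the settings or software used in this study.

```python
import numpy as np

def rbf_kernel(a, b, sigma=1.0):
    """RBF kernel matrix between the rows of a and the rows of b."""
    d2 = (np.sum(a ** 2, axis=1)[:, None]
          + np.sum(b ** 2, axis=1)[None, :]
          - 2.0 * a @ b.T)
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))

def lssvm_train(x, y, gamma=10.0, sigma=1.0):
    """Solve [[0, y^T], [y, Omega + I/gamma]] [b; alpha] = [0; 1].

    x: (n, d) features, y: (n,) labels in {-1, +1}.
    gamma and sigma are illustrative values.
    """
    n = len(y)
    omega = np.outer(y, y) * rbf_kernel(x, x, sigma)
    a = np.zeros((n + 1, n + 1))
    a[0, 1:] = y
    a[1:, 0] = y
    a[1:, 1:] = omega + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    sol = np.linalg.solve(a, rhs)
    return sol[0], sol[1:]          # bias b, support values alpha

def lssvm_predict(x_train, y_train, b, alpha, x_new, sigma=1.0):
    """Sign of sum_i alpha_i * y_i * K(x_new, x_i) + b."""
    k = rbf_kernel(x_new, x_train, sigma)
    return np.sign(k @ (alpha * y_train) + b)

def blr_train(x, y01, lr=0.1, n_iter=5000):
    """Binary logistic regression on one feature, fitted by gradient descent.

    x: (n,) feature values (e.g. standardized BA), y01: (n,) labels in {0, 1}.
    """
    w, b = 0.0, 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(w * x + b)))
        w -= lr * np.mean((p - y01) * x)   # gradient of the mean log-loss w.r.t. w
        b -= lr * np.mean(p - y01)         # gradient of the mean log-loss w.r.t. b
    return w, b

def blr_predict(x, w, b):
    """Class 1 when the predicted probability is at least 0.5."""
    return (1.0 / (1.0 + np.exp(-(w * x + b))) >= 0.5).astype(int)
```

In practice, features would typically be scaled before training (for example, RGB values divided by 255) so that a single kernel width `sigma` is meaningful across all dimensions.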

4. Discussions

Wound-induced suberization begins after skinning injury. According to Lulai [4], tuber tissue suberization involves two stages: (1) formation of the “closing layer”, whereby the walls of existing cells at the wound site suberize, often referred to as “primary suberization”; and (2) formation of the “wound periderm”, whereby files of new cells are formed and suberized below the closing layer, often referred to as “secondary suberization”. A schematic diagram of closing layer formation is shown in Figure 9. Suberizing rapidly next to the wound surface, the closing layer provides the initial protective barrier for the injury [31]. According to Lulai et al. [22], a rapid wound-induced increase in polyamine biosynthesis was observed during closing layer formation. Within 6 h of wounding, increases in the in vitro activities of enzymes (arginine and ornithine decarboxylase) and in the expression of related genes were observed. Previous studies also indicated that closing layer development is complete about six days after injury [31,32]. The trend of BA after injury shown in Figure 7 approximately conforms to the description above, indicating the feasibility of biospeckle imaging for characterizing vitality.

Within one day of injury, physiological and biochemical reactions under the wounded area are especially intense, and particle movements become more active. After one day, the closing layer on the wounded area becomes established, marking the start of new periderm formation. Although secondary suberization is also involved [4], biospeckle imaging is more likely to reflect surface or sub-surface information. The similarity of skin and flesh colors may impair classification performance shortly after injury, whereas the surface color of the injured skin darkens over time as oxidation deepens with prolonged exposure to the external environment, which makes skinning injury recognition using color features feasible at the late stage. Texture features seem poorly suited to classifying SS and IS because of the complex and irregular textural changes arising from suberization, the variation of lenticels and unpredictable environmental influences. Moreover, the size of the ROI images was limited, so they may not represent the full textural changes within injured areas. Biospeckle activity may result from processes related to movement of the scattering centers in the tissue, such as cytoplasmic streaming, organelle movement, cell growth and division during maturation, and biochemical reactions [33]. As stated by Zdunek [34], biospeckle activity is relatively low in organs with a high pigment content or in the final stages of development. No research has so far addressed the effect of color change on biospeckle activity. Theoretically, particle movement and biochemical reactions associated with color change may affect biospeckle activity; however, how these two factors are related is still unknown.

As mentioned above, the two techniques are complementary in recognizing skinning injury. Both systems are low-cost, yet the visible imaging system is much more mature and stable. The biospeckle imaging system is more vulnerable to external fluctuations, and all optical elements, including the laser, beam expander and polarizing film, must be assembled precisely to obtain clear images on the CCD sensor. In terms of time cost, visible imaging generated characteristic features more quickly, although efficiency can be greatly improved by computing BA within 10 s instead of 40 s. Furthermore, visible imaging has been used in commercial production, while biospeckle imaging is still confined to the laboratory. Nevertheless, biospeckle imaging has great potential for characterizing surface and subsurface information, as demonstrated previously by our team [19].

5. Conclusions

This paper presents the discrimination of SS and IS using CCD-based visible and biospeckle imaging techniques. For visible imaging, color and texture features were extracted and fed into an LS-SVM classifier. For biospeckle imaging, BA calculated from speckle patterns was used as the feature and fed into a BLR classifier. Results showed that color features performed better than texture features, especially for injured potatoes stored for at least 1 d, with an average classification accuracy of 90%. Complementarily, BA was a feasible feature for BLR when discriminating SS and IS within 1 d, with a classification accuracy of 88.10%. In addition, efficiency can be greatly improved by capturing and processing speckle patterns within 10 s instead of 40 s.

In future work, the selection of ROI images should be more objective to ensure that the SS and IS samples are more representative and consistent. Other features, such as fractal dimensions, can be further evaluated. BA calculated by the THSP-based IM is not a perfect way to characterize potato vitality, so other quantitative methods could be assessed. In addition, the acquisition time of speckle patterns could be reduced further to improve efficiency.