1. Introduction

For robotic harvesting manipulators used in the horticultural industry, the main technique for locating fruits is a vision system. Since a vision system was proposed in this field [1], various sensing schemes have been used, based on intensity, spectral information, or laser range finders [2]. Successful fruit identification rates between 70% and 90% have been reported, varying with laboratory conditions. Some issues still have to be solved before automatic harvesting manipulators can be widely used commercially. Typically, color intensity thresholding with a particular filter has been used to contrast the salient features of an image [3]. In practice, however, the image data acquired by a single sensor are degraded because imaging sensors have physical limitations. Moreover, the light spectrum is potentially affected by multiple factors in an open, unstructured environment [4]. To study the features of different wavebands along the spectral coordinates, hyperspectral techniques have been used to capture information from a wide range of the light spectrum. Studying the statistics of image segments obtained from different wavebands, in particular with techniques involving fuzzy wavebands, may provide the feature references needed to develop a machine vision system [5]. However, the spectral distribution of the segments of some components at certain spectral coordinates can be fuzzy, which makes the processing extremely difficult. Alternatively, multispectral image processing has been proposed to capture and combine information from several wavebands, using more sophisticated processing to improve detection [4,6]. Instead of dispersing the light spectrum into many discrete wavebands, a multispectral image captures specific ranges of wavelengths spread across the spectrum. For example, three charge coupled device (CCD) micro cameras with three different optical waveband filters at 550 nm, 650 nm, and 950 nm have been used side by side for fruit harvesting [7]. The ratios of the 550 nm to the 950 nm filtered components and of the 650 nm to the 950 nm filtered components were calculated to contrast the fruits against the background, and successful detection was about 75% when the sky was overcast. In another study, a monochromatic near-infrared camera equipped with multi-waveband pass filters was used to identify unripe green citrus fruits in images [8], based on measurements of green leaves and green citrus fruits taken through the seasons [4]. A band-intensity reference index followed by a global threshold was used to separate the citrus from the background. However, the resulting multispectral images were not well synchronized or aligned in dynamic scenes and contained areas saturated by diffuse reflectance [4]. More broadly, multispectral schemes have been widely designed and used in research, especially for inspection in agricultural applications, with the sensors selected according to the particular application [9,10]. For example, fusing images from an infrared thermal camera and a digital color camera improved fruit detection when the fruits were over-exposed in the visible image and warmer than the canopy in the thermal image [11].
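The band-ratio contrast used with the three-camera system [7] can be sketched as below. This is a minimal illustration only: it assumes three co-registered intensity images, and the threshold `t2` and the direction of the comparison (fruit above the threshold) are hypothetical choices, not values reported in [7]. The function names are likewise hypothetical.

```python
import numpy as np


def band_ratio_features(b550, b650, b950, eps=1e-6):
    """Return the 550/950 nm and 650/950 nm ratio images.

    b550, b650, b950: co-registered 2-D intensity arrays from the three
    filtered cameras. eps (an assumption) avoids division by zero where
    the 950 nm response is dark.
    """
    b550 = np.asarray(b550, dtype=float)
    b650 = np.asarray(b650, dtype=float)
    b950 = np.asarray(b950, dtype=float)
    ratio_550_950 = b550 / (b950 + eps)
    ratio_650_950 = b650 / (b950 + eps)
    return ratio_550_950, ratio_650_950


def fruit_mask(b550, b650, b950, t2):
    """Hypothetical rule: label pixels whose 650/950 ratio exceeds t2 as fruit."""
    _, ratio_650_950 = band_ratio_features(b550, b650, b950)
    return ratio_650_950 > t2


# Usage with a tiny 1x2 example image
b550 = np.array([[10.0, 20.0]])
b650 = np.array([[30.0, 5.0]])
b950 = np.array([[10.0, 10.0]])
mask = fruit_mask(b550, b650, b950, t2=1.0)
```

Dividing by the 950 nm band normalizes each visible band by a reference response, which is why ratios are less sensitive to overall illumination than raw intensities.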

Multispectral image processing can be applied with multiple sensors capturing the same scene [9] or with a single sensor whose specific physical optical filters are interchanged [10]. Typically, a linear combination of the components from different spectral regions is used to contrast the image for subsequent segmentation [12]. The coefficients of the linear combination can be found via principal component (PC) analysis in conjunction with linear discriminant analysis, an approach that showed good accuracy in classifying plant species using both near-infrared spectroscopy and Fourier transform infrared spectroscopy [12]. However, discriminant analysis based on a linear combination depends on coefficients such as the PCs, which carry the optimal-variance property. The result may therefore not be accurate enough when the image data are distributed nonlinearly in the color space, especially for images captured under natural lighting. Hence, an alternative nonlinear solution using a fusion technique is a way to obtain a more accurate discriminant result.

The multisensory fusion technique is a synergistic combination of different sources with complementarity to enhance the main information for various particular applications [

13,

14]. The use of image fusion technique has two issues to be addressed [

13] namely the registration and the fusion of the data. The registration is the prerequisite of the fusion approach to align the different resource images precisely [

13]. Most of the registration can be done by the algorithmic method to find a transform matrix based on the control point or the feature in the image [

13] or using a customized frame for a region of interest [

11]. However, the distortion in the image is transformed based on the availability of the information. The prevalent issue from the natural scene is the local disparity and the uncertainty of the availability of information which cannot be solved by the algorithmic global transform matrix, for example, the shifting of objects due to the difference in triggering a dynamic temporal coordinate [

8]. In practice, when the registration of different resources is done, the further registration process can be possibly bypassed using portable multispectral imaging systems with a dual-band spectral or a three-band spectral imaging system [

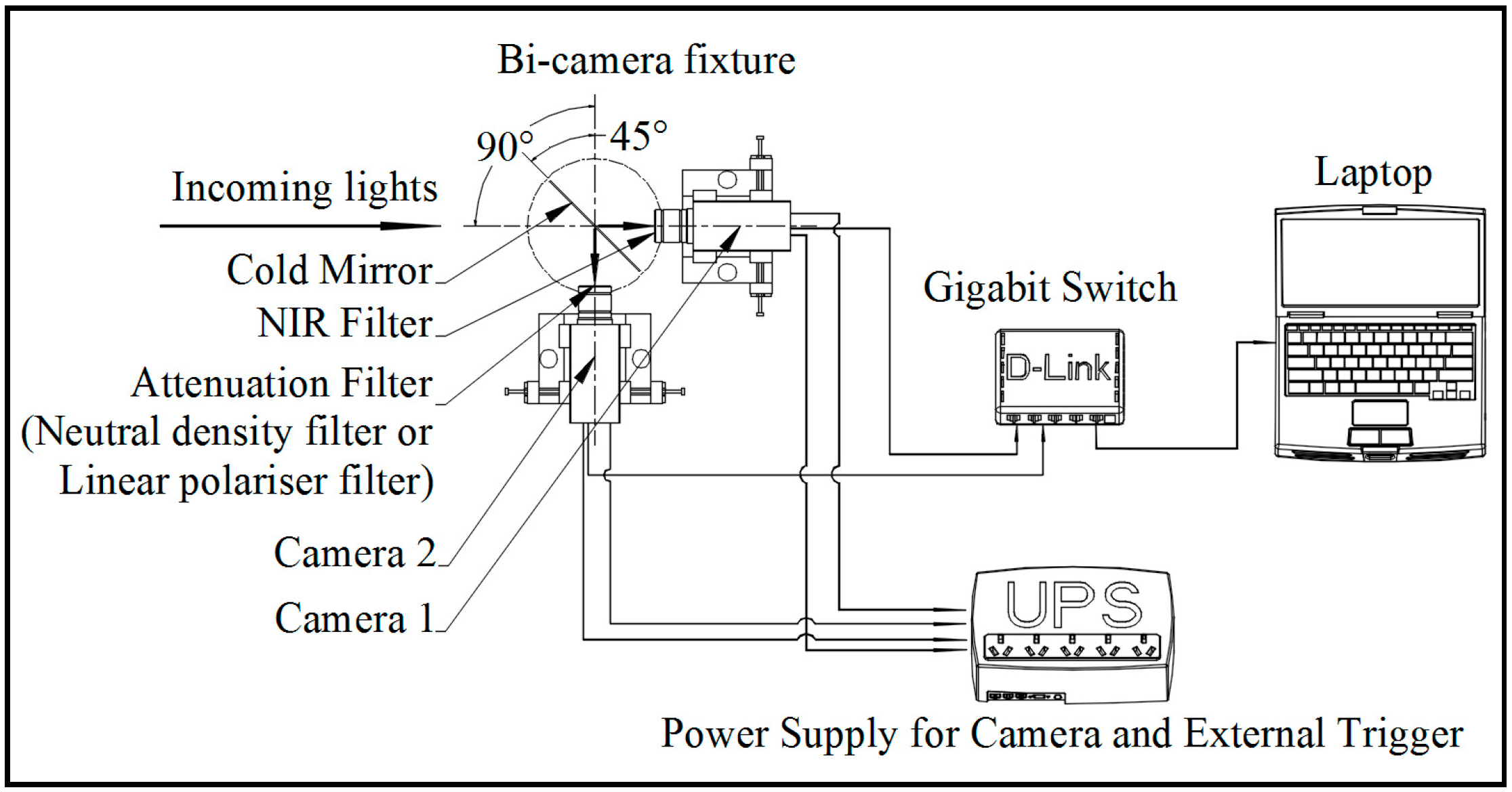

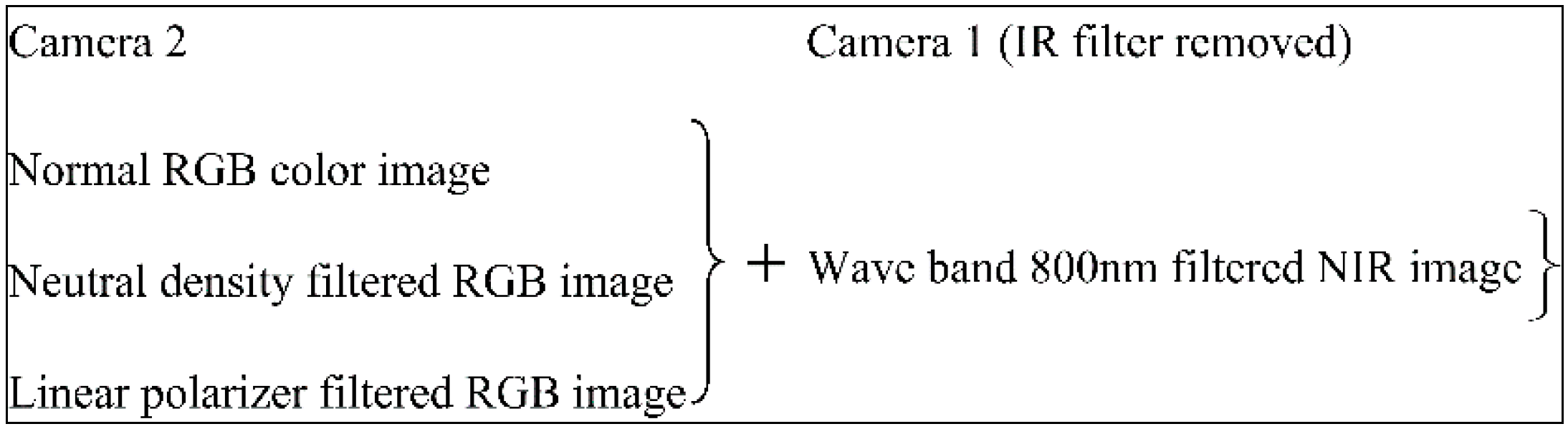

15,

16]. A similar idea has been adopted in this research with more flexibility at the terminal of two sensors to acquire various citrus fruit image data online with some physical optical attenuation filter functions [

17]. On top of the registration, the fusion technique itself is essential and applied in the signal level, pixel level, feature level, and symbol level approaches [

13]. Signal level fusion performs the direct combination of several signals in order to provide a signal that has the same general format as the source signals. The pixel level fuses the images by determining the correlated pixel from each source image. Feature level fusion extracts the feature such as the edge or other feature and combines the feature into the fused image. Symbol level fusion processes the information at each source image and then makes a decision at a high level of abstraction to the fused image. In this research, the pixel level fusion is selected due to the fact that the main objective is to enhance the citrus fruit area based on the digitized spectra in intensity. The fusion of images aims to enhance the image quality while reducing more remaining background noise to find the area of the citrus fruits for the manipulator. The pixel-level fusion is a two-dimensional signal level fusion in two approaches namely the multiscale decomposition (MSD) and the non-multiscale decomposition (NMSD). The natural citrus fruit image data contains background noise with spatial variance properties such as the unstructured similar spectral reflection as citrus color on the side or tip of the leaves. Hence the MSD methods are suited to handle the fusion of two source images compared to the NMSD methods [

13]. In consideration of both the spatial noise and the combination of different resources, the discrete wavelet transform (DWT) is adopted in this paper since the wavelet can deal with the frequency detail along the spatial coordinates in decomposition scale level. In DWT, the image is filtered by convolving two coefficient filters such as a low-pass and a high-pass filter both row wise and column wise successively. The original coefficient is downscaled to decompose the image into four coefficients such as low-low, low-high, high-low, and high-high pass coefficients in each decomposition scale level. The low pass approximation coefficient can be decomposed continually to the subsequent level. The issue of shift variance properties caused by the subsampling will not lead to extra distortion on the fused result if the images are well aligned by the nonparametric registration. In addition, the details with spatial property can be removed by thresholding the high pass coefficients in each scale level as in spatial coordinates. The fusion in the last level can be achieved with the combination of the envelope spectra from those low pass coefficients. The fusion of the coefficients from the multisensor has variant arithmetic rules such as maximum, minimum, mean or weighted mean, and majority voting in the multi-classifier application on high dimensional coordinate data space [

18,

19,

20,

21]. In addition the linear combination of component a* from CIE La*b* color space and component I from YIQ color space has been approached in a fusion strategy for robust tomato recognition [

22]. Since in CIE La*b* color space, the red/green colors are represented along the a* axis, the extreme value along a* axis depends on the salient feature in the application. The extreme value is also limited by the constraints such as the citrus color and the feature from the background. However, when the image data is nonlinearly in the color space, the more robust method is encouraged instead of the linear combination.

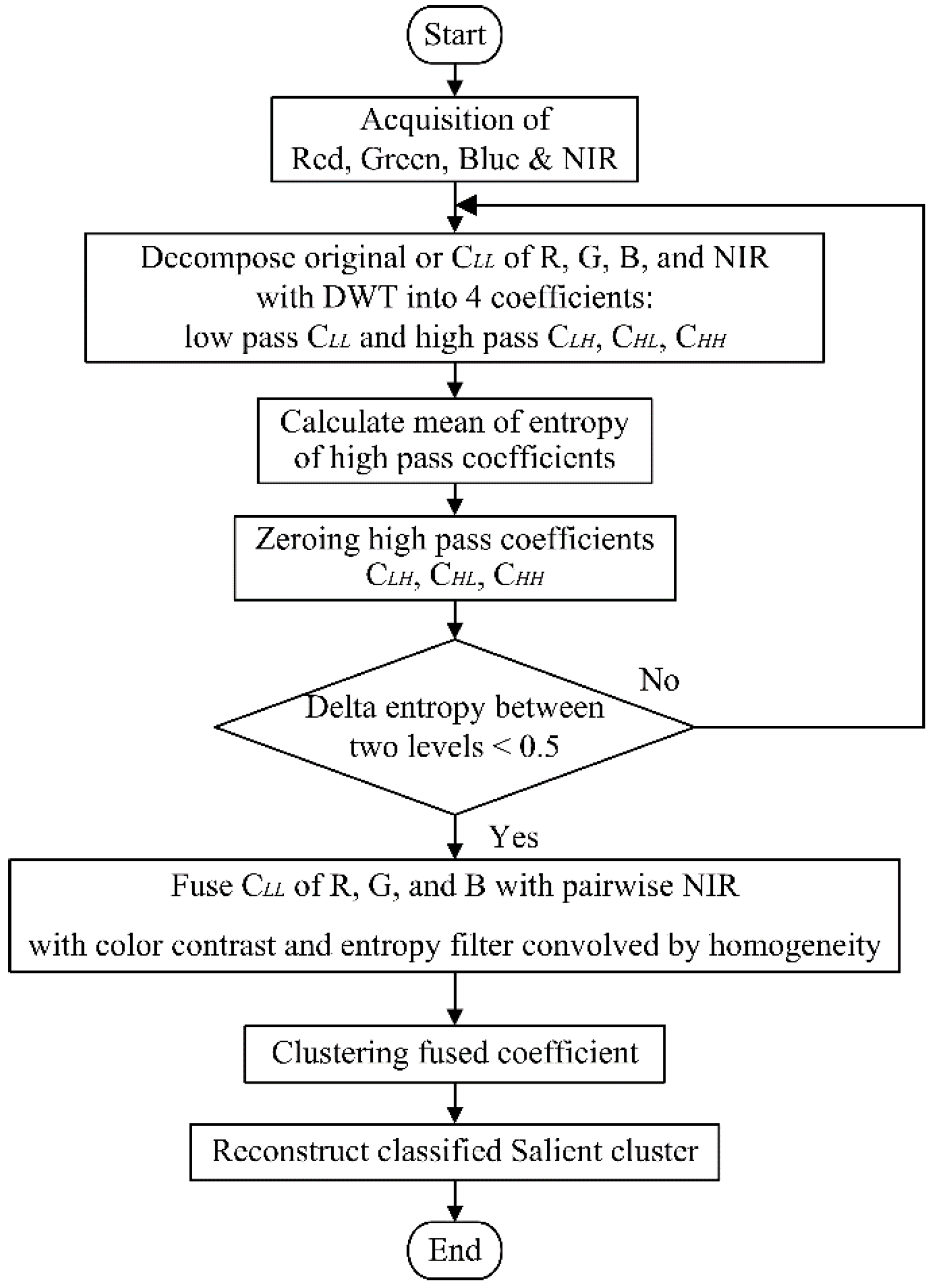
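The DWT-based pixel-level fusion described above can be sketched as follows, under stated assumptions: it uses the Haar wavelet (the wavelet family is not specified in this section), discards all high-pass sub-bands (the extreme case of thresholding them), and fuses only the last-level low-pass approximations with one of the generic arithmetic rules listed above (maximum, minimum, mean, or weighted mean). It is not the proposed nonlinear fusion rule, and all function names are hypothetical. PyWavelets (`pywt.wavedec2` and `pywt.waverec2`) offers other wavelet families if needed.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_dwt2(x):
    """One-level 2-D Haar DWT: row-wise then column-wise low/high-pass
    filtering with downsampling by 2. Returns (LL, (LH, HL, HH)); the first
    letter refers to the row-wise filter, the second to the column-wise one.
    Both image dimensions must be even."""
    x = np.asarray(x, dtype=float)
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2   # row-wise low-pass
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2   # row-wise high-pass
    ll = (lo[0::2, :] + lo[1::2, :]) / SQRT2
    lh = (lo[0::2, :] - lo[1::2, :]) / SQRT2
    hl = (hi[0::2, :] + hi[1::2, :]) / SQRT2
    hh = (hi[0::2, :] - hi[1::2, :]) / SQRT2
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Inverse of haar_dwt2 (perfect reconstruction)."""
    lh, hl, hh = details
    rows, cols = ll.shape
    lo = np.empty((2 * rows, cols))
    hi = np.empty((2 * rows, cols))
    lo[0::2, :], lo[1::2, :] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[0::2, :], hi[1::2, :] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x


def combine(a, b, rule="mean", weight=0.5):
    """Generic pixel-wise fusion rules for two coefficient arrays."""
    if rule == "max":
        return np.maximum(a, b)
    if rule == "min":
        return np.minimum(a, b)
    if rule == "mean":
        return (a + b) / 2.0
    if rule == "weighted":
        return weight * a + (1.0 - weight) * b
    raise ValueError(f"unknown rule: {rule}")


def dwt_fuse(img_a, img_b, levels=2, rule="mean", weight=0.5):
    """Decompose both images `levels` times, zero every high-pass sub-band,
    fuse the final low-pass approximations, and reconstruct."""
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    if a.shape != b.shape or any(s % (2 ** levels) for s in a.shape):
        raise ValueError("images must share a shape divisible by 2**levels")
    for _level in range(levels):
        a = haar_dwt2(a)[0]   # keep LL only: details thresholded to zero
        b = haar_dwt2(b)[0]
    fused = combine(a, b, rule, weight)
    for _level in range(levels):
        zeros = np.zeros_like(fused)
        fused = haar_idwt2(fused, (zeros, zeros, zeros))
    return fused
```

With `levels=1`, reconstructing from the low-pass band alone replaces each 2×2 block by its mean. This illustrates how zeroing the details removes spatial texture but also blurs the fruit boundaries.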

The main purpose of this research is to investigate and evaluate a multispectral machine vision system that is robust to background noise with spatial properties and that enhances the fundamental spectra of the images acquired from the multispectral sources. The two images are aligned in both coordinates by customized hardware alignment, and synchronization is achieved by triggering the two sensors in a master and slave architecture. Based on this hardware registration, a software-based nonlinear fusion rule is proposed that uses information content and color contrast in a multiscale decomposition based on DWT. In DWT, the high-pass coefficients are zeroed to remove the spatially unstructured noise. Since removing the details in the high-pass coefficients also blurs the fruit areas in the resulting cluster image, the fundamental envelope spectra are enhanced during the fusion of the two sources without losing the main information. To this end, the entropy and color contrast of the color component and the homogeneity of the NIR component are combined arithmetically to modify the original color image. As a result, the modified color image yields better clustering results than the linear combination methods.
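The fusion rule relies on information-content and texture measures. As a hedged sketch, the block below computes two standard measures of this kind: the Shannon entropy of a channel's gray-level histogram and the homogeneity of a gray-level co-occurrence matrix (GLCM), in the 1/(1 + (i − j)²) form used, for example, by scikit-image's `graycoprops`. The quantization to 8 levels, the single horizontal offset, and the function names are assumptions; the exact definitions and the arithmetic combination used in the proposed method are not reproduced here.

```python
import numpy as np


def shannon_entropy(channel, bins=256):
    """Shannon entropy (bits) of an 8-bit channel's gray-level histogram."""
    hist, _ = np.histogram(np.asarray(channel), bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]                      # 0 * log 0 is taken as 0
    return float(-(p * np.log2(p)).sum())


def glcm_homogeneity(channel, levels=8):
    """Homogeneity sum_ij P(i, j) / (1 + (i - j)^2) of a gray-level
    co-occurrence matrix for the horizontal neighbor offset (0, 1).
    The 8-bit channel is first quantized to `levels` gray levels."""
    q = (np.asarray(channel, dtype=float) * levels / 256).astype(int)
    q = np.clip(q, 0, levels - 1)
    glcm = np.zeros((levels, levels))
    # count each (left pixel, right pixel) gray-level pair
    np.add.at(glcm, (q[:, :-1].ravel(), q[:, 1:].ravel()), 1)
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    return float((glcm / (1.0 + (i - j) ** 2)).sum())
```

A flat region gives zero entropy and homogeneity 1, while strongly alternating texture lowers the homogeneity toward 0.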

The remainder of this paper is organized as follows. Section 2 summarizes the hardware registration, followed by the details of the nonlinear fusion approach that uses information content to modify the original color image; the modified color image is then classified with a clustering method selected for the nonlinear clustering problem. Section 3 evaluates the proposed fusion method using the statistical harmonic F measure in comparison with the results of the linear combination methods. The last section concludes the paper.

3. Evaluation and Results Discussion

Validating the classified result is non-trivial, since there is no benchmark for the dissimilarity comparison. Also, the structure of the image data in the color space is unknown a priori, and the clusters obtained from one image are completely independent of those from the others. Hence, in the absence of a universal reference, the citrus fruit area is manually segmented in advance. Only the cluster containing the citrus fruits is then used in the evaluation, since the remaining background is of no interest.

To measure the accuracy of the clustering, the F measure is adopted as the harmonic mean of precision and recall [32]. The precision (P) is the fraction of retrieved objects that are relevant, while the recall (R) is the fraction of relevant objects that are retrieved:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN},$$

where a true positive (TP) assigns two similar objects to the same cluster. There are two types of errors: a false positive (FP) assigns two dissimilar objects to the same cluster, and a false negative (FN) assigns two similar objects to different clusters. In this case, since the background clusters are unknown and have unstructured, varying features, only the salient cluster containing the citrus fruits is compared with the weak manual segmentation reference. To this end, each pixel is first assigned a value, and in each cluster the pixels belonging to the other clusters are labeled with zero. Hence, the salient non-zero pixels are used to calculate the components of the F measure. By this definition, FP counts the background pixels that are not included in the reference, and FN counts the foreground pixels, included in the reference, that are rejected into the background clusters. Denoting the reference image by $\mathcal{R}$ and the clustering result by $\mathcal{C}$, each taken as its set of non-zero (citrus fruit) pixels, the three counts are given by binomial coefficients:

$$TP = \binom{|\mathcal{R} \cap \mathcal{C}|}{2}, \qquad FP = \binom{|\mathcal{C} \setminus \mathcal{R}|}{2}, \qquad FN = \binom{|\mathcal{R} \setminus \mathcal{C}|}{2}.$$

The F measure is then calculated with a weight parameter. Note that P measures the amount of false positives: if P = 1, there are no false positives, i.e., no background pixels are included in the foreground. Similarly, R measures the amount of false negatives: if R = 1, there are no false negatives, i.e., no foreground pixels are included in the background. The F measure is the weighted harmonic mean of precision and recall:

$$F = \frac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}} = \frac{(\beta^{2} + 1) P R}{\beta^{2} P + R},$$

where $\alpha \in [0, 1]$ and $\beta^{2} = \frac{1 - \alpha}{\alpha}$, and thus $\beta^{2} \in [0, \infty]$. The balanced F measure weighs precision and recall equally, with $\alpha = 1/2$ or $\beta = 1$; values of $\beta < 1$ emphasize precision, and values of $\beta > 1$ emphasize recall. In this application, precision is more important than recall, i.e., the false positives in P decide the accuracy of the clustering. Ideally, as little background noise as possible should be classified as the salient feature (i.e., false positives should be reduced). False negatives matter less than false positives, since the main purpose of the clustering is to detect the majority of the citrus fruit area, and a small number of pixels excluded from the salient feature cluster will not create issues in the practical use of the proposed algorithm.
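The evaluation can be sketched as follows, assuming (our reading of the pair-counting definitions) that TP, FP, and FN are the numbers of pixel pairs, n(n − 1)/2, within the overlap of reference and cluster, within the cluster-only pixels, and within the reference-only pixels, respectively. Inputs are label images in which non-zero pixels mark citrus fruit; the function names are hypothetical.

```python
from math import comb

import numpy as np


def pair_counts(reference, cluster):
    """Pair-count TP, FP, FN from two label images (non-zero = citrus).

    Assumption: each count is C(n, 2) for the pixels in the overlap,
    in the cluster only (false positives), or in the reference only
    (false negatives).
    """
    ref = np.asarray(reference) != 0
    clu = np.asarray(cluster) != 0
    n_tp = int(np.count_nonzero(ref & clu))
    n_fp = int(np.count_nonzero(clu & ~ref))
    n_fn = int(np.count_nonzero(ref & ~clu))
    return comb(n_tp, 2), comb(n_fp, 2), comb(n_fn, 2)


def f_measure(tp, fp, fn, beta=1.0):
    """Weighted harmonic mean (beta^2 + 1) P R / (beta^2 P + R)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta ** 2
    denom = b2 * precision + recall
    return (b2 + 1.0) * precision * recall / denom if denom else 0.0


reference = np.array([1, 1, 1, 1, 1, 0, 0, 0])
cluster = np.array([1, 1, 1, 1, 0, 1, 1, 0])
tp, fp, fn = pair_counts(reference, cluster)   # (6, 1, 0)
balanced = f_measure(tp, fp, fn)
precision_weighted = f_measure(tp, fp, fn, beta=0.5)
```

Choosing `beta < 1`, as in the second call, weights precision more heavily, which matches the emphasis on false positives described above.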

With these performance evaluation metrics, the proposed nonlinear fusion method is compared with a number of existing methods. Since the proposed method is developed for an agricultural application, the first methods employed are the projection-based vegetation color indices [33], i.e., the projection

$$p = \mathbf{x}^{T}\mathbf{w}$$

of the RGB color coordinates $\mathbf{w} = [R, G, B]^{T}$ onto the lower-dimensional space spanned by $\mathbf{x}$, where $\mathbf{x}$ is a direction vector. The direction vector is constructed from the coefficients of the color indices or the normalized indices, such as the two color indices applied here, $R - B$ with $\mathbf{x} = [1, 0, -1]^{T}$ and $2R - G - B$ with $\mathbf{x} = [2, -1, -1]^{T}$. Since a vegetation color index can be interpreted as a linear combination of color components, another linear discriminant method, Fisher linear discriminant analysis (FLDA) [34,35], a supervised linear discrimination technique, is also adopted in the study. FLDA is a dimension reduction technique. Two segments, namely the citrus fruit foreground and the remaining background, are manually segmented to find the eigenvector with the largest eigenvalue. The projected data are then classified by the nearest neighbor rule based on the centroids of the two prior segments. In addition to FLDA, principal component analysis (PCA) is adopted to find the direction of maximum variance of the data, in two different ways: one with the R, G, and B components only and the other with the R, G, B, and NIR components. PCA automatically extracts the eigenvectors that maximize the variance of the projected data, using the centered covariance matrix of the data [36].
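The linear baselines can be sketched as below; this is an illustrative reimplementation in Python, not the MATLAB code used in the study. Pixels are rows of an (N, d) array (d = 3 for R, G, B, or d = 4 when NIR is appended). The FLDA direction uses the closed-form two-class solution, and a nearest-centroid rule classifies projected values; all names are hypothetical.

```python
import numpy as np

# Direction vectors x for the two color indices on w = [R, G, B]^T
R_MINUS_B = np.array([1.0, 0.0, -1.0])
TWO_R_MINUS_G_MINUS_B = np.array([2.0, -1.0, -1.0])


def project(pixels, x):
    """Project each row w of an (N, d) pixel array onto x: p = x^T w."""
    return np.asarray(pixels, dtype=float) @ np.asarray(x, dtype=float)


def pca_direction(pixels):
    """First principal direction from the centered covariance matrix."""
    data = np.asarray(pixels, dtype=float)
    cov = np.cov(data - data.mean(axis=0), rowvar=False)
    _eigvals, eigvecs = np.linalg.eigh(cov)    # eigenvalues in ascending order
    return eigvecs[:, -1]


def flda_direction(foreground, background):
    """Two-class Fisher direction S_w^{-1}(m_fg - m_bg), normalized; for two
    classes this spans the leading eigenvector of S_w^{-1} S_b."""
    fg = np.asarray(foreground, dtype=float)
    bg = np.asarray(background, dtype=float)
    m_fg, m_bg = fg.mean(axis=0), bg.mean(axis=0)
    s_w = (fg - m_fg).T @ (fg - m_fg) + (bg - m_bg).T @ (bg - m_bg)
    w = np.linalg.solve(s_w, m_fg - m_bg)
    return w / np.linalg.norm(w)


def nearest_centroid(projected, c_fg, c_bg):
    """True where a projected value is closer to the foreground centroid."""
    return np.abs(projected - c_fg) < np.abs(projected - c_bg)
```

For FLDA, the centroids `c_fg` and `c_bg` would be the projections of the two manually segmented class means onto the returned direction.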

The evaluation study is conducted in MATLAB 8.6.0.267246 (R2015b) on a computer with a 64-bit operating system, an Intel(R) Core(TM) i7-4790 CPU @ 2.40 GHz, and 4 GB RAM. Two vegetation color indices, “R - B” and “2R - G - B”, and two approaches, PCA and FLDA, are applied in addition to the proposed method. In the color index algorithm, the projected data are classified into two segments using optimal thresholding. In PCA, two linear combinations of intensities are used, one with only the R, G, and B color components and the other with NIR in addition to those three color components, for a fair comparison with the proposed method. The first eigenvector is determined from the centered covariance matrix of the components to be combined, and the projected data are classified using optimal global thresholding. In addition, FLDA with manual segmentation is applied. For the comparison of the different methods, the clusters containing citrus fruits are separated and the F measure is calculated to check the dissimilarity. The ground truth references for this dissimilarity measure are manually created a priori: only the objects of interest, i.e., the areas around the citrus fruits, are segmented manually and used in the F measure calculation. The methods above are applied both to the original images obtained directly from the cameras and to the images after the DWT has been applied, except that FLDA is applied only to the original color image.
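The text does not name the optimal thresholding method. Assuming Otsu's method (maximizing the between-class variance of the projected values), a self-contained sketch is given below; `skimage.filters.threshold_otsu` provides a comparable library routine. The function name here is hypothetical.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Otsu's threshold on 1-D projected data.

    Chooses the bin edge that maximizes the between-class variance
    sigma_B^2(k) = (mu_T * w0(k) - mu(k))^2 / (w0(k) * (1 - w0(k))).
    """
    v = np.asarray(values, dtype=float).ravel()
    hist, edges = np.histogram(v, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist / hist.sum()
    w0 = np.cumsum(p)                  # probability of class 0 (bins 0..k)
    mu = np.cumsum(p * centers)        # cumulative first moment up to bin k
    mu_t = mu[-1]                      # global mean
    w1 = 1.0 - w0
    valid = (w0 > 0) & (w1 > 0)
    valid[-1] = False                  # the last edge would leave class 1 empty
    sigma_b = np.zeros_like(w0)
    sigma_b[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(sigma_b))
    return edges[k + 1]


projected = np.concatenate([np.zeros(100), np.full(100, 10.0)])
t = otsu_threshold(projected)
foreground = projected > t             # two-segment classification
```

The same routine can be applied to the color-index projections and to the PCA projections, since both reduce each pixel to a single value.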

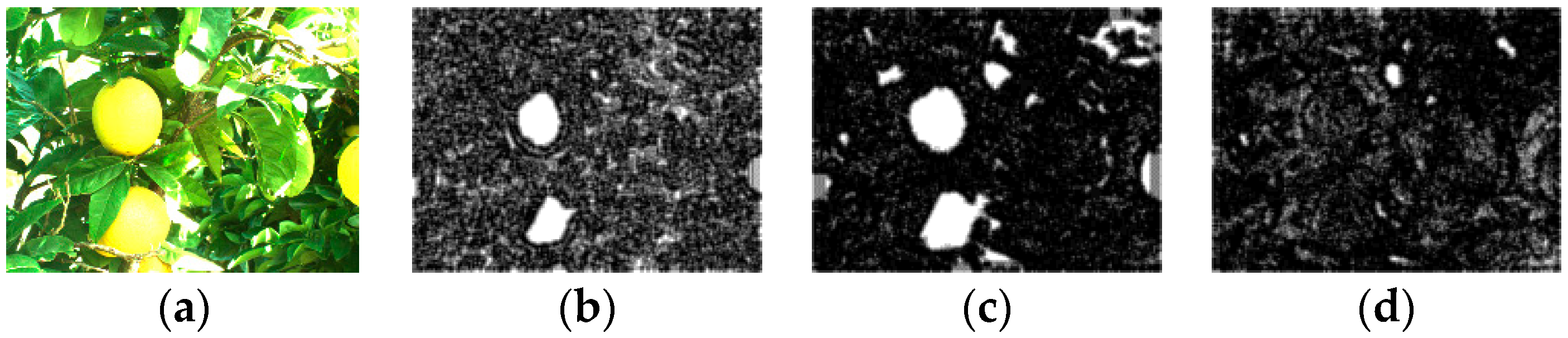

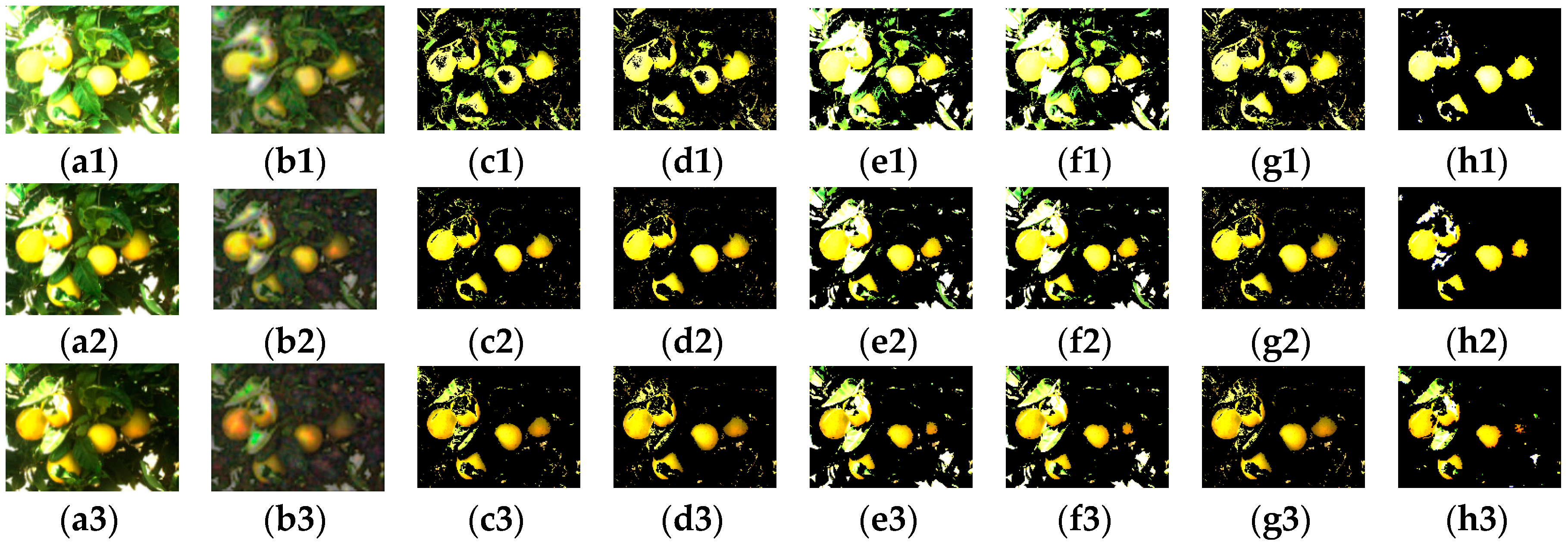

Figure 9 shows an example of the results of the study.

Figure 9(a1–a3) display the three types of color images,

Figure 9(b1–b3) are the corresponding modified color images by nonlinear fusion approach after DWT applied, The images listed in columns from 3rd column to 7th column in

Figure 9 are the results obtained by the methods of “

” color index, “

” color index, a linear combination of R, G, and B only; a linear combination of R, G, B, and NIR; and the FLDA, respectively.

Figure 9(h1–h3) shows the results of the proposed algorithm. In the figure, the color image in the first row is the image with no filters (termed as VIS) and the second row is the one with a neutral density filter (termed as NEUT) and the one in the third row is with linear polarizer filter (termed as POLA), respectively. These two additional filtered color images are employed to the study to show the effects of the proposed algorithm. The figures clearly show the proposed algorithm presents the robust results with high reduction of background noise, especially with the images of no filters. This can be observed more clearly in

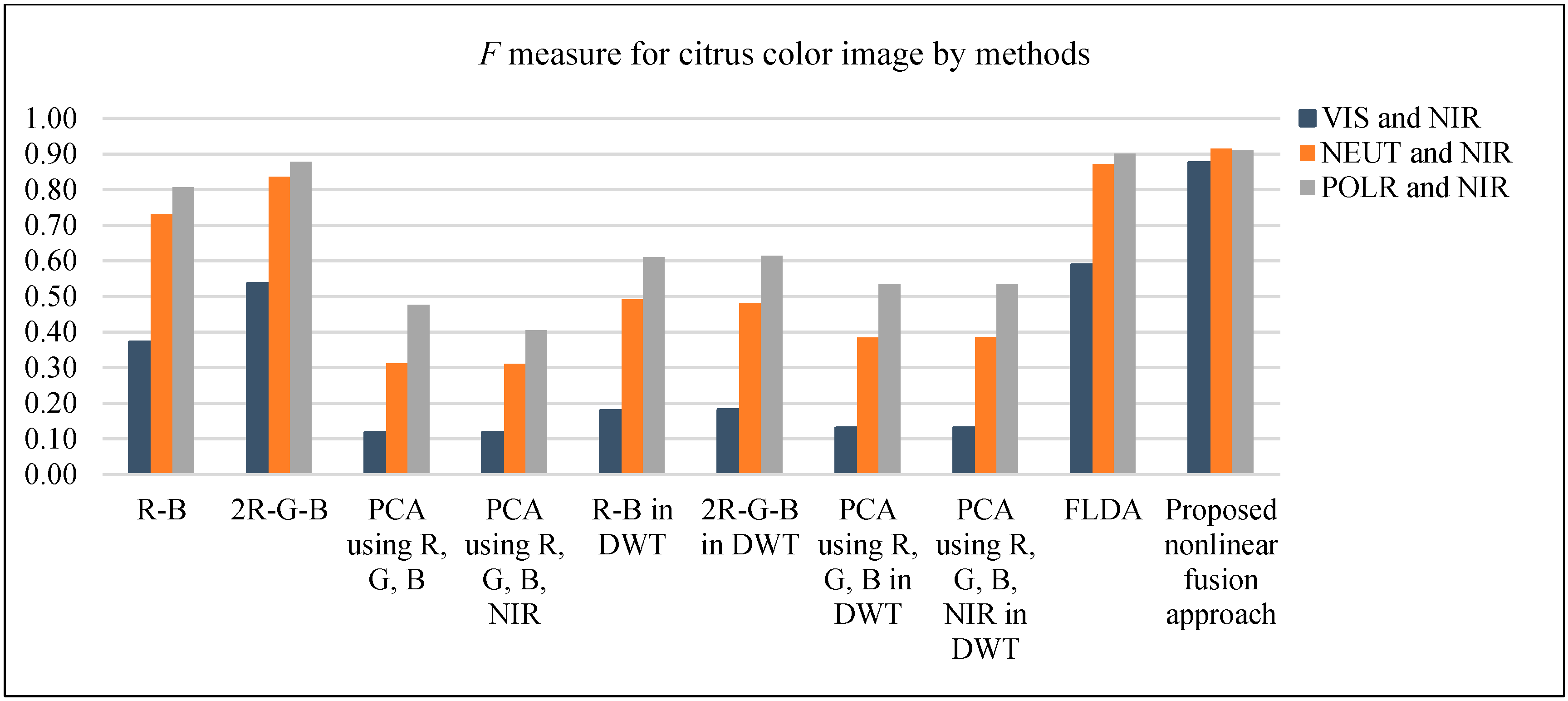

Table 3 and

Figure 10, which shows the results of the average

F measures obtained from 30 randomly selected images each with no filter, with a neutral density filter, and with a linear polarizer filter. The corresponding pairwise images of NIR are also used together with those images. The

F measures of the VIS images show the superior performance of the proposed scheme compared to other methods, while for NEUT and POLA images, the proposed method shows a bit better performance than some of the existing methods. The reason is that these two types of images have been attenuated with the filter which makes the nonlinear noises reduced compared to the VIS images. However,

Table 3 shows the performance as the quantified measure, but in reality, in the image, the proposed method can provide a significant reduction of the false positive background noise, which is more desirable. The proposed fusion rule enhances the salient feature to complement the loss of content contrast by thresholding the high pass coefficients in DWT. In addition, the identification rate of applied methods has been counted. Since the focus is the colored citrus clustering, and due to the varied illumination condition and the non-Euclidean structure of the citrus fruit, only the number of visible colored citrus fruits are counted by comparison to the resultant cluster. The quantified rate by the vegetation indices are similar and so is the linear combination of color components with or without the NIR component. The rate by the proposed method is acceptable as shown in

Table 4 but with high

F measure for each type of color image by comparing to the other methods and to the results by FLDA comparably, especially with low false positive in

F measure for the normal color image.

Without losing the salient feature information, the proposed nonlinear fusion method is more robust against false positives. As expected, since the computational complexity of the proposed method is quite high owing to the DWT decomposition, the clustering of the fused image data, and the DWT reconstruction, its processing time is substantially longer than that of the other methods (see Table 5). However, this loss of efficiency is the trade-off for higher accuracy in the final application. Overall, the results show that the proposed nonlinear fusion method has advantages for the development of a machine vision system for an automatic fruit harvesting manipulator.