The Modular Optical Underwater Survey System

Abstract

1. Introduction

2. The Modular Optical Underwater Survey System (MOUSS)

2.1. Components and Settings

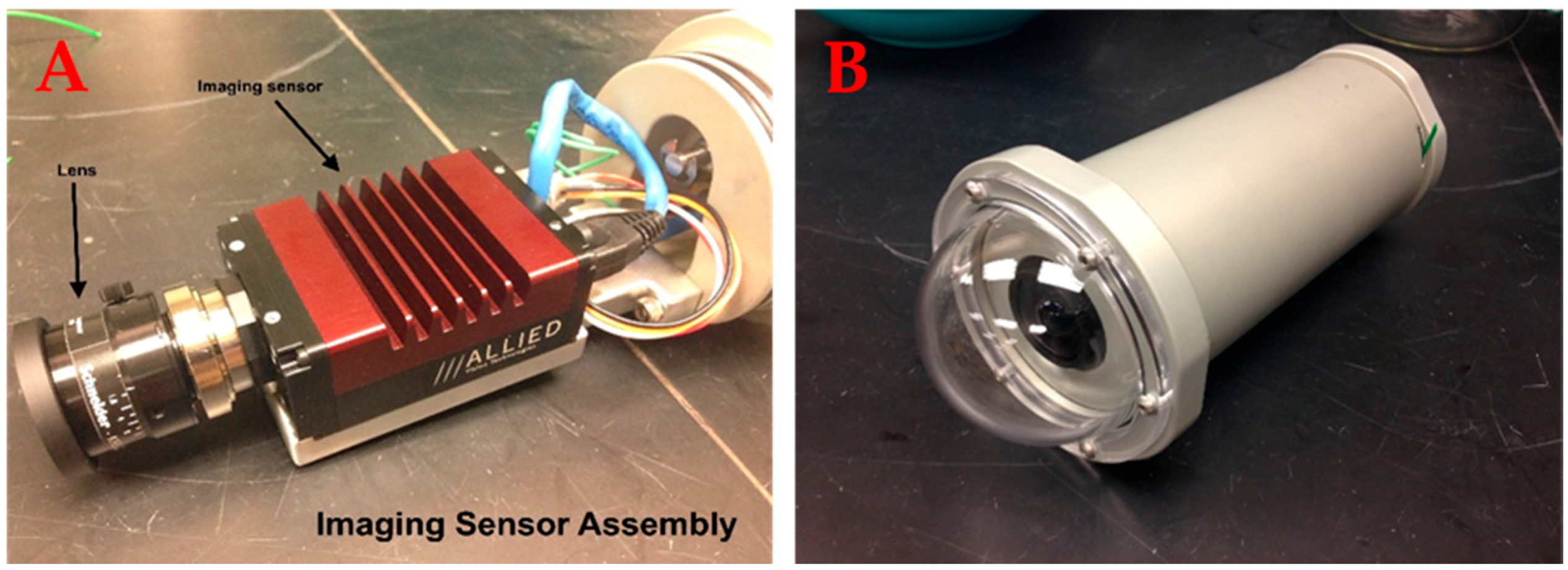

2.1.1. Camera Module

2.1.2. Digital Video Recording Module (DVR)

2.1.3. Battery Module

2.1.4. Frame Module

2.2. Calibration

2.3. Deployment

2.4. Data Analyses

3. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Cappo, M.; Harvey, E.S.; Shortis, M. Counting and measuring fish with baited video techniques—An overview. In Proceedings of the Australian Society for Fish Biology Workshop, Hobart, Australia, 28–29 August 2006; pp. 101–114.

- Langlois, T.J.; Fitzpatrick, B.R.; Fairclough, D.V.; Wakefield, C.B.; Hesp, S.A.; McLean, D.L.; Harvey, E.S.; Meeuwig, J.J. Similarities between Line Fishing and Baited Stereo-Video Estimations of Length-Frequency: Novel Application of Kernel Density Estimates. PLoS ONE 2012, 7, e45973.

- Ellis, D.M.; DeMartini, E.E. Evaluation of a video camera technique for indexing the abundances of juvenile pink snapper, Pristipomoides filamentosus, and other Hawaiian insular shelf fishes. Fish. Bull. 1995, 93, 67–77.

- Parrish, F.A.; DeMartini, E.E.; Ellis, D.M. Nursery habitat in relation to production of juvenile pink snapper, Pristipomoides filamentosus, in the Hawaiian Archipelago. Oceanogr. Lit. Rev. 1997, 11, 1356.

- Richards, B.L.; Smith, S.G.; Ault, J.S.; DiNardo, G.T.; Kobayashi, D.; Domokos, R.; Anderson, J.; Taylor, J.; Misa, W.; Giuseffi, L.; et al. Design and Implementation of a Bottomfish Fishery-Independent Survey in the Main Hawaiian Islands; NOAA Technical Memorandum NMFS-PIFSC-53; Pacific Islands Fisheries Science Center: Honolulu, HI, USA, 2016.

- Haight, W.R.; Kobayashi, D.R.; Kawamoto, K.E. Biology and management of deepwater snappers of the Hawaiian Archipelago. Mar. Fish. Rev. 1993, 55, 20–27.

- Merritt, D.W.; Donovan, M.K.; Kelley, C.; Waterhouse, L.; Parke, M.; Wong, K.; Drazen, J.C. BotCam: A baited camera system for non-extractive monitoring of bottomfish species. Fish. Bull. 2011, 109, 56–67.

- Misa, W.F.X.E.; Richards, B.L.; Moriwake, V.N.; Kelley, C.; Drazen, J.C.; Dinardo, G. Evaluating the effect of soak time on bottomfish abundance and length data from stereo-video surveys. J. Exp. Mar. Biol. Ecol. 2016, 479, 20–34.

- Sackett, D.K.; Drazen, J.C.; Moriwake, V.N.; Kelley, C.D.; Schumacher, B.D.; Misa, W.F.X.E. Marine protected areas for deepwater fish populations: An evaluation of their effects in Hawaii. Mar. Biol. 2014, 161, 411–425.

- Misa, W.F.X.E.; Drazen, J.C.; Kelley, C.D.; Moriwake, V.N. Establishing species–habitat associations for 4 eteline snappers with the use of a baited stereo-video camera system. Fish. Bull. 2013, 111, 293–308.

- Moore, C.H.; Drazen, J.C.; Kelley, C.D.; Misa, W.F.X.E. Deepwater marine protected areas of the main Hawaiian Islands: Establishing baselines for commercially valuable bottomfish populations. Mar. Ecol. Prog. Ser. 2013, 476, 167–183.

- Merritt, D.W. BotCam: Design, Testing and Development of a Fully Automated Stereo-Video Bottom Camera Bait Station for Ecosystem Monitoring of Bottom Fish Species. Master’s Thesis, University of Hawaii at Manoa, Honolulu, HI, USA, 2005.

- Misa, W.; Amin, R.; Richards, B.L.; Taylor, J.; Rollo, A.; Miller, D.; Demarke, C.; Koyanagi, K. From BotCam to MOUSS: Comparative tests on image quality, measurement accuracy, and fish count data from field deployments of two stereo-camera systems. Fish. Res. (under review).

- Ralston, S.; Polovina, J.J. A multispecies analysis of the commercial deep-sea handline fishery in Hawaii. Fish. Bull. 1982, 80, 435–448.

- Bouguet, J.Y. Camera Calibration Toolbox for Matlab. 2004. Available online: http://www.vision.caltech.edu/bouguetj/calib_doc/ (accessed on 10 October 2017).

- Clarke, T.A.; Fryer, J.G. The Development of Camera Calibration Methods and Models. Photogramm. Rec. 1998, 16, 51–66.

- Boutros, N.; Shortis, M.R.; Harvey, E.S. A comparison of calibration methods and system configurations of underwater stereo-video systems for applications in marine ecology. Limnol. Oceanogr. Methods 2015, 13, 224–236.

- Willis, T.J.; Millar, R.B.; Babcock, R.C. Detection of spatial variability in relative density of fishes: Comparison of visual census, angling, and baited underwater video. Mar. Ecol. Prog. Ser. 2000, 198, 249–260.

- Cappo, M.; Harvey, E.S.; Malcolm, H.; Speare, P. Potential of video techniques to monitor diversity, abundance and size of fish in studies of marine protected areas. In World Congress on Aquatic Protected Areas Aquatic Protected Areas-What Works Best and How Do We Know; Australian Society for Fish Biology: Cairns, Australia, 2003; pp. 455–464.

- Anderson, M.J.; Gorley, R.N.; Clarke, K.R. PERMANOVA+ for PRIMER: Guide to Software and Statistical Methods; PRIMER-E Ltd.: Plymouth, UK, 2008.

- Murphy, H.M.; Jenkins, G.P. Observational methods used in marine spatial monitoring of fishes and associated habitats: A review. Mar. Freshw. Res. 2010, 61, 236–252.

- Patel, R.; Handegard, N.O.; Godø, O.R. Behaviour of herring (Clupea harengus L.) towards an approaching autonomous underwater vehicle. ICES J. Mar. Sci. 2004, 61, 1044–1049.

- Somerton, D.A.; Williams, K.; Campbell, M.D. Quantifying the behavior of fish in response to a moving camera vehicle by using benthic stereo cameras and target tracking. Fish. Bull. 2017, 115, 343–354.

- Schobernd, Z.H.; Bacheler, N.M.; Conn, P.B. Examining the utility of alternative video monitoring metrics for indexing reef fish abundance. Can. J. Fish. Aquat. Sci. 2014, 71, 464–471.

- Shortis, M.R.; Ravanbakhsh, M.; Shafait, F.; Harvey, E.S.; Mian, A.; Seager, J.W.; Culverhouse, P.F.; Cline, D.E.; Edgington, D.R. A review of techniques for the identification and measurement of fish in underwater stereo-video image sequences. In Proceedings of the SPIE Optical Metrology 2013, Munich, Germany, 23 May 2013; Volume 8791, p. 87910G.

| Component / Specification | Value |
|---|---|
| Camera Module | |
| Camera Model | ST-CAM-1920HD (Allied Vision Prosilica GT 1920) |
| Resolution | 1936 × 1456 (2.82 Mpx) |
| Color/Mono | Color or Mono |
| Interface | Ethernet IEEE 802.3 1000BASE-T |
| Image Sensor | Sony ICX674 |
| Sensor Type (Size) | Progressive CCD (2/3") |
| Cell Size | 4.54 µm |
| Iris | Fixed |
| Frame Rate | Variable (0–40 fps) * |
| Bit Depth | 8–14 bits ** |
| Binning | 1–8 pixels/rows *** |
| Gain | 0–30 dB |
| Power Requirement | 7–25 VDC (5 W) |
| Lens | Schneider 21017528 4.8 mm, f/1.8 |
| Housing Dimensions | 8.89 × 20.32 cm |
| Weight Including Housing | 2.32 kg per camera |
| Digital Video Recording Module | |
| Operating System | Linux |
| Data Storage | 2 × 512 GB Solid State Drives |
| Output | DNG, JPEG, PGM, PNG, TIFF, SGI **** |
| Power Requirement | 9–36 VDC (16 W) |
| Housing Dimensions | 33.02 × 15.87 cm |
| Weight Including Housing | 8.16 kg |
| Battery Module | |
| Type | Nickel-metal hydride (NiMH) |
| Duration | 6+ h |
| Voltage | 14.4 V |
| Capacity | 16 Ah |
| Housing Dimensions | 33.02 × 15.87 cm |
| Weight Including Housing | 7.48 kg |
| Complete System Overview | |
| Depth Rating | 500 m |
| Total Weight | 29.43 kg |
| Overall Dimensions (excluding rigging) | 46.99 × 21.59 × 102.49 cm |
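The power, storage, and optics entries in the table can be cross-checked with simple arithmetic. The following Python sketch is a back-of-envelope check under stated assumptions, not the authors' procedure: it assumes the battery's nominal 14.4 V and 16 Ah are fully usable, that both cameras and the DVR draw their listed power continuously, that frames are stored uncompressed, and that the optics behave as an ideal in-air pinhole camera (refraction at the housing port and lens distortion are ignored). All function names are hypothetical, and the frame rate and range used in the example are illustrative values, not the system's deployment settings.

```python
"""Back-of-envelope budgets from the MOUSS specification table.

Assumptions (not from the source): the battery's nominal voltage and rated
capacity are fully usable, both cameras and the DVR draw their listed power
continuously, frames are stored uncompressed, and the optics follow an ideal
in-air pinhole model (port refraction and lens distortion are ignored).
All function names are hypothetical.
"""
import math

# Values taken from the specification table
BATTERY_V = 14.4        # nominal battery voltage, V
BATTERY_AH = 16.0       # rated capacity, Ah
CAMERA_W = 5.0          # power per camera, W
N_CAMERAS = 2           # stereo pair
DVR_W = 16.0            # DVR power, W
WIDTH_PX, HEIGHT_PX = 1936, 1456
CELL_UM = 4.54          # pixel pitch, micrometres
FOCAL_MM = 4.8          # lens focal length, millimetres
SSD_BYTES = 2 * 512e9   # two 512 GB drives (decimal gigabytes)


def runtime_hours() -> float:
    """Ideal runtime: stored energy (Wh) divided by total load (W)."""
    energy_wh = BATTERY_V * BATTERY_AH
    load_w = N_CAMERAS * CAMERA_W + DVR_W
    return energy_wh / load_w


def raw_frame_bytes(bits_per_pixel: int = 8) -> int:
    """Size in bytes of one uncompressed frame from a single camera."""
    return WIDTH_PX * HEIGHT_PX * bits_per_pixel // 8


def storage_hours(fps: float, bits_per_pixel: int = 8) -> float:
    """Hours of stereo recording that fit on both SSDs at a given frame rate."""
    bytes_per_second = N_CAMERAS * raw_frame_bytes(bits_per_pixel) * fps
    return SSD_BYTES / bytes_per_second / 3600


def horizontal_fov_deg() -> float:
    """Horizontal field of view of the in-air pinhole model, in degrees."""
    sensor_width_mm = WIDTH_PX * CELL_UM / 1000
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * FOCAL_MM)))


def pixel_footprint_mm(range_m: float) -> float:
    """Object-space width of one pixel at a given range, in millimetres."""
    range_mm = range_m * 1000
    return range_mm * (CELL_UM / 1000) / FOCAL_MM


if __name__ == "__main__":
    example_fps = 5.0    # hypothetical frame rate, for illustration only
    example_range = 2.0  # hypothetical camera-to-fish range, metres
    print(f"ideal runtime:      {runtime_hours():.1f} h")
    print(f"raw frame (8-bit):  {raw_frame_bytes() / 1e6:.2f} MB")
    print(f"storage @ {example_fps:g} fps:   {storage_hours(example_fps):.1f} h")
    print(f"horizontal FOV:     {horizontal_fov_deg():.1f} deg (in air)")
    print(f"pixel @ {example_range:g} m:      {pixel_footprint_mm(example_range):.2f} mm")
```

Under these idealized assumptions the battery stores 230.4 Wh against a 26 W load, or roughly 8.9 h, which is consistent with the more conservative "6+ h" rating once discharge losses and voltage sag are taken into account. Underwater, refraction at the housing port changes the effective field of view and focal length, so the nominal optical values here are only a starting point for a proper in-water calibration.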

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Amin, R.; Richards, B.L.; Misa, W.F.X.E.; Taylor, J.C.; Miller, D.R.; Rollo, A.K.; Demarke, C.; Singh, H.; Young, G.C.; Childress, J.; et al. The Modular Optical Underwater Survey System. Sensors 2017, 17, 2309. https://doi.org/10.3390/s17102309

Amin R, Richards BL, Misa WFXE, Taylor JC, Miller DR, Rollo AK, Demarke C, Singh H, Young GC, Childress J, et al. The Modular Optical Underwater Survey System. Sensors. 2017; 17(10):2309. https://doi.org/10.3390/s17102309

Chicago/Turabian Style: Amin, Ruhul, Benjamin L. Richards, William F. X. E. Misa, Jeremy C. Taylor, Dianna R. Miller, Audrey K. Rollo, Christopher Demarke, Hanumant Singh, Grace C. Young, Jeremy Childress, et al. 2017. "The Modular Optical Underwater Survey System" Sensors 17, no. 10: 2309. https://doi.org/10.3390/s17102309