1. Introduction

Line scanning (also 1D or linear) cameras, which produce a single line of pixels for each exposure, have been used widely in areas such as remote sensing [

1,

2] and industrial inspection [

3,

4,

5]. While 2D frame cameras offer the benefit of imaging a larger scene with each exposure, linescan cameras allow capturing of images at higher frame rates or spatial resolution [

6]. One specific but common example is hyperspectral line scanning cameras, which provide both high spatial and spectral resolution. Many applications require accurate and direct determination of the real world coordinates of line scan image data, also known as georeferencing or mapping. This requires precise calibration of the sensor’s intrinsic (e.g., focal length and principal point) and extrinsic parameters (i.e., camera pose with respect to the vehicle body frame). In the remote sensing literature, determination of extrinsic parameters is known as lever arm (translation) and boresight (orientation) alignment. More recently line scanning cameras have also been studied for low altitude unmanned aerial vehicle (UAV) and mobile ground based applications [

7,

8,

9,

10], but there are fewer studies addressing the extrinsic calibration requirements that closer proximity to the scene implies. Requirements include obtaining a 6 degree of freedom (DOF) extrinsic parameter solution including translation, which has a greater influence on mapping when proximal; avoiding ground control points (GCPs), which need to be more accurately geolocated when viewed from nearby; and a need for smaller survey areas for calibration, because it is more difficult to obtain data over large areas with mobile ground vehicles. This paper addresses these requirements by providing a novel method to estimate line scanning camera pose with respect to the platform body frame, where the location and orientation of the platform is itself provided in world coordinates from a navigation system. The method uses the data from the navigation and line scanning camera only, avoiding the need for auxiliary sensors.

Extrinsic calibration for 2D frame cameras has been studied extensively due to their ubiquitous use across many different fields, and established solutions exist [

11,

12,

13,

14]. Calibration of 1D cameras has not received as much attention. Methods can be loosely grouped into two categories: scan-based calibration and line-based calibration [

6]. Scan-based calibration requires an accurate rig with a linear actuator that moves the camera orthogonally to the line scan at a constant speed over a calibration pattern, such as a checker board [

15,

16]. This method is suitable for industrial inspection applications in a controlled laboratory or factory setting, where a linear actuator, manipulator arm or other rig is capable of moving the sensor through a precisely specified trajectory. Line-based calibration methods, on the other hand, allow calibration from a single line scan of a 3D target with a carefully designed pattern of lines [

17,

18]. Line-based approaches require that the dimensions of the calibration pattern are known precisely, and that the whole pattern has been imaged in one exposure. Recently, a variation of this method using multiple line scans of a planar calibration pattern has been proposed [

19], and the use of an additional auxiliary frame camera has also been explored [

6,

20]. All the aforementioned approaches are suitable for well controlled environments: for scan-based calibration the movement of the sensor needs to be accurately controlled, while for line-based methods, the position of the pattern with respect to the sensor is critical. However, in a mobile ground based field platform, where the camera is rigidly mounted in a particular position to the platform, it is difficult to meet either of those requirements.

In previous methods, extrinsic parameters are usually determined with respect to the calibration pattern or an auxiliary frame camera. Therefore to determine the camera to navigation system transform either requires accurate knowledge of a pattern or points in world coordinates or an additional step such as “hand-eye” calibration [

21]. Hand-eye calibration involves determining the transformation from a camera to an end effector (a robotic hand for instance), where these are rigidly linked, and is a thoroughly covered topic in the robotics literature. The problem is generally solved by imaging a calibration pattern from many different locations, where the transformations between the different end effector positions and camera to calibration pattern transformations are known using standard frame camera calibration techniques. Comparisons can be made with the problem in this paper, where the navigation system positions (and therefore any transformations between them) are known, and camera to calibration pattern transformations can be determined using any of the previously discussed methods.

As remote sensing most commonly involves imaging from an aerial or satellite platform, translation (lever arm) offsets have a smaller effect on imaging accuracy, and can be measured manually [

22,

23]. Accurately geolocated GCPs are commonly used to determine boresight alignment [

24], which can also be adopted for ground based applications [

25]. Efforts have been made to avoid the use of GCPs, by detecting points of interest in separate scans of the same area and determining their 3D position using a known digital elevation model (DEM) [

22]. Similarly, non-surveyed tie-points between overlapping acquisition runs have been used in combination with bundle adjustment to determine boresight parameters [

26]. The use of GCPs has also been combined with DEMs to improve accuracy and allow self-calibration [

27]. Frame cameras have been used to aid in determination of boresight misalignments [

28], and additionally in combination with a DEM [

29]. Frame camera images have also been used to improve the geometric characteristics of processed hyperspectral linescan images from a UAV [

30].

This paper provides a method for the determination of the relative 6 DOF pose of a rigidly mounted line scanning camera with respect to a navigation system on a ground based mobile platform. With this approach many of the previously outlined requirements and limitations are mitigated:

The dimensions of the calibration pattern do not need to be known, and so it does not need to be printed to any particular accuracy, nor even measured.

GCPs do not need to be surveyed.

Auxiliary sensors, such as 2D frame cameras, are not required to aid the calibration.

A single, compact calibration pattern can be used rather than widely distributed GCPs.

Translational (lever arm) offsets are determined in addition to rotations (boresight), due to their increased significance when at close proximity to the scene.

The remainder of the paper is organised as follows. In

Section 2 the theory of the proposed method is outlined in detail. Then

Section 3 provides practical implementation details and the experimental method. Experimental results using the Ladybird and Shrimp robotic platforms are produced and discussed in

Section 4 and

Section 5.

2. Overview of Approach

In this section, the theoretical approach used for estimating the camera pose with respect to the platform body is outlined in detail. Initially, an overview of the line scanning camera model is provided, which is an adaptation of the widely used pinhole model. This allows defining lines or rays in 3D space that intersect both the camera centre and a pixel on the sensor. When combined with the Cartesian coordinate transformations between camera, body and world frames, rays can be projected onto a surface, and conversely a world 3D point can be reprojected to a point on the 2D sensor. It is desirable to minimise any errors in the camera pose, as they directly affect mapping accuracy.

We propose a method that estimates the relative camera pose using image and navigation system data. The data are obtained by moving the platform in order to observe a calibration pattern with multiple point targets from different perspectives. The calibration pattern point locations are then manually labelled in the image data. Starting from an initial hand measured camera pose, image pixel locations of the observed pattern points and corresponding platform poses are combined, and all of the resulting rays are used to triangulate the pattern point locations in world coordinates. These point estimates are then reprojected to the sensor frame for each observation. The reprojection error is calculated as the distance between each observed and reprojected pixel. The reprojection error uncertainty is calculated by propagating the input uncertainties through each calculation as variance-covariance matrices (henceforth referred to as covariance matrices for brevity). Assuming a normal distribution of the reprojection error over input parameters, the likelihood of the data given a relative camera pose hypothesis can be estimated. By maximising the likelihood, the six relative camera pose parameters can be optimised. Following this, a random sampling based procedure is provided to estimate the uncertainties of the optimal camera pose using Markov Chain Monte Carlo (MCMC).

Throughout this paper, superscripts represent the reference frame of a particular variable. Subscripts refer to a descriptor (e.g., which pose is being referred to), axis reference, and instance identifiers for that variable, in that order. For example, refers to the camera centre location along the x axis relative to the body frame.

2.1. Line Scanning Camera Model

Using the pinhole camera model with homogeneous coordinates, a point

in world coordinates is projected to the camera sensor at

with the following Equation [

31]:

where

s is a scale factor and

can be broken down into,

is the rotation matrix of the camera with respect to the world frame. Joined horizontally are

and

, which are the identity matrix and the world camera position (i.e., the camera centre

) respectively.

is the intrinsic camera matrix:

where

f,

and

are the focal length (in pixels) and principal points respectively (we neglect skew because there is only one spatial axis). For a line scanning camera, we assume that

and so it follows that for a 3D world point to be visible in the 1D pixel array, it must be located near the plane that intersects the scan line on the sensor (i.e., where

) and the camera centre (focal point). How closely a point must be located to that plane depends on the instantaneous field of view (IFOV) and distance from the sensor. The IFOV is the angle over which each pixel is sensitive to radiation. While linescan image data is by definition at

, reprojection errors can occur in both

u and

v as will be shown later. Therefore, even though the model allows for two spatial dimensions on the image sensor, it describes the projection of points for individual 1D line scan frames only.

Each pixel point

maps to a ray or line in 3D space, which connects the sensor pixel, camera centre and object being viewed. While that ray may be defined by any two points that lie on it, the following are mathematically convenient to obtain: the camera centre

and

, where

is the pseudo-inverse of

[

31].

2.2. Rotation and Transform Conventions

In this paper, we use both Euler and axis-angle conventions to represent rotations compactly. The navigation system on the platforms used in this work provide platform pose estimates using the Euler

intrinsic convention (also known as Tait-Bryan or yaw-pitch-roll), which are represented as

, and may be converted to rotation matrices as per Berner [

32] or

Section 3.1 in Underwood [

33]. While Euler angle representations are commonly used in robotics applications, they present the following ambiguities. Some different combinations of

and

can represent the same rotation [

34]. Similarly, a small freedom of rotation about a non-orthogonal axis can result in a large correlated degree of freedom spread over two Euler angles, which is difficult to interpret when estimating parameter uncertainty. For these reasons, while navigation and hand measured pose data is provided as Euler angles, we favour the axis-angle representation for all internal calculations and results. An axis-angle rotation is given as a unit length vector

and a rotation

around it:

Since rotations only have three degrees of freedom, an axis-angle rotation may be expressed as a length three vector:

Axis-angle rotations may be converted to rotation matrices as follows [

32]:

where

A complete 6 DOF pose transform can be compactly represented with the three translation and three orientation parameters:

or

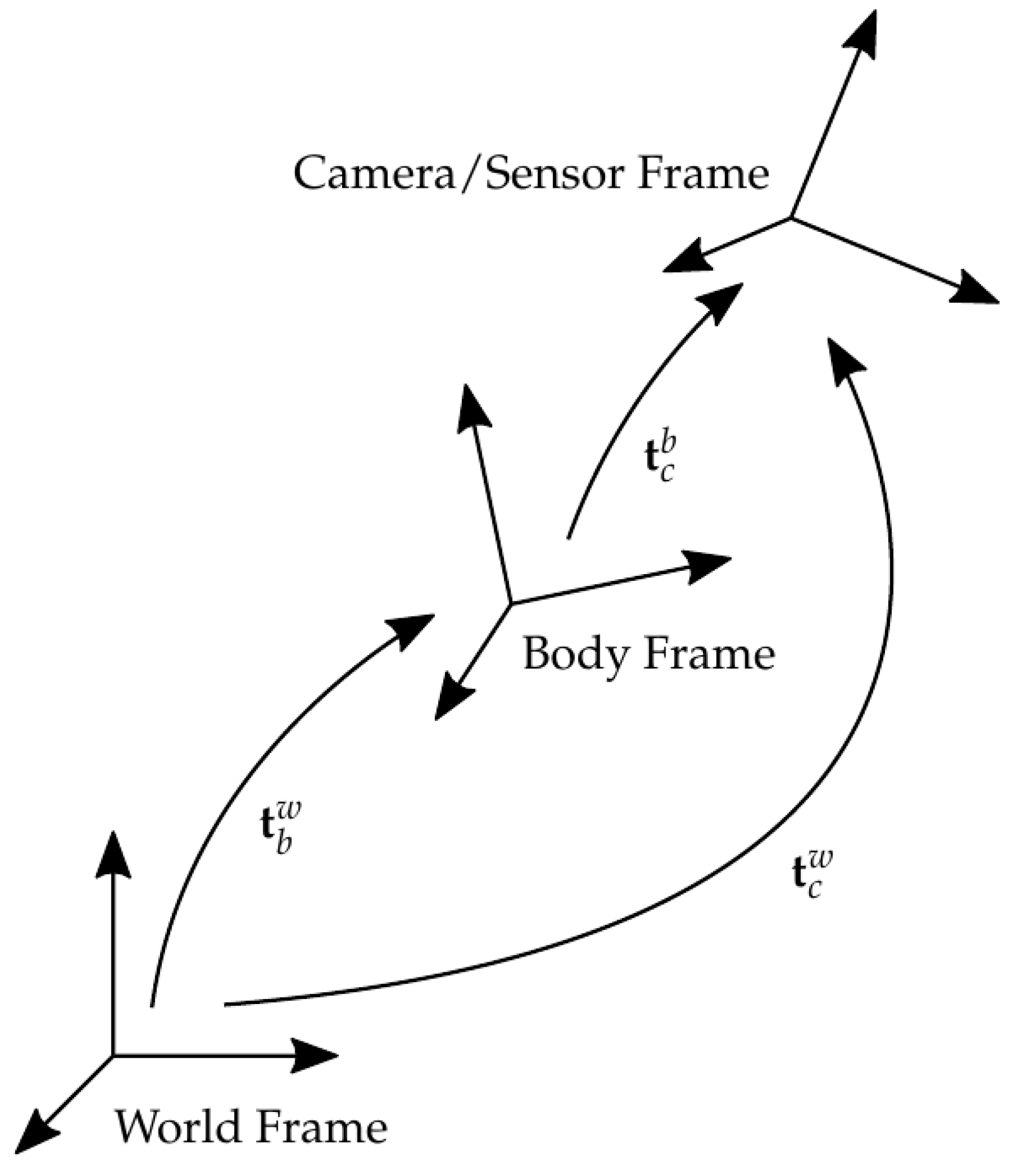

depending on whether Euler or axis-angle conventions are used. The pose transforms of importance in this paper are the world to platform body transform

, platform body to camera transform

, and, combining these, the world to camera transform

(see

Figure 1). Note the sub- and superscripts: e.g.,

denotes the translation and rotation of the camera axes with respect to the platform body.

By splitting the world pose of the camera

into a combination of the body pose

and the camera relative pose

,

from Equation (

2) can be shown as a function of the camera rotation and translation with respect to the body frame

and

, and platform body rotation and translation with respect to the world frame

and

:

In our case, and are provided by the navigation system, and and are the relative camera pose parameters we would like to estimate.

2.3. Estimation of Calibration Pattern Points

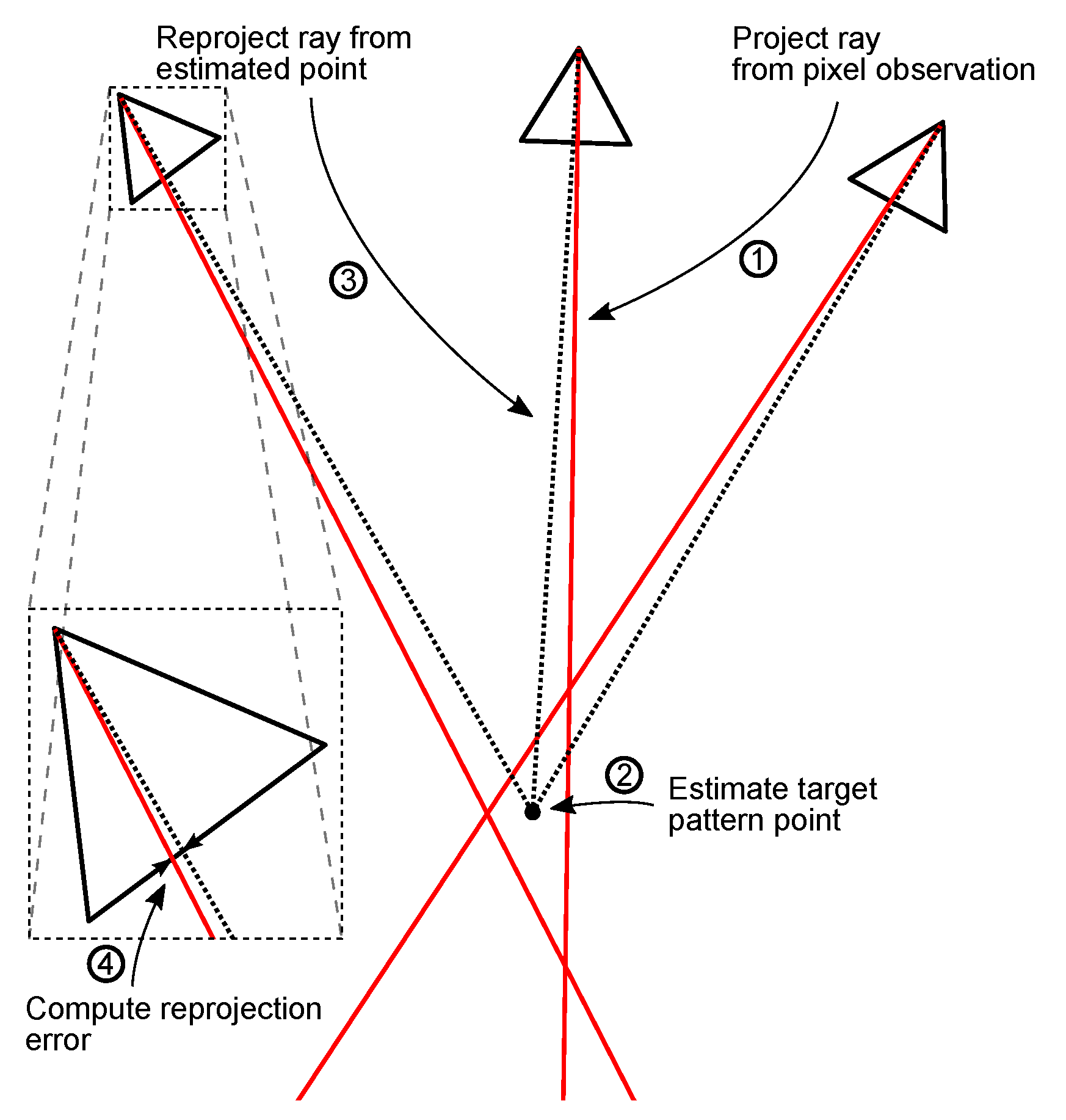

The first step of the proposed method involves estimating the location of calibration pattern points in world coordinates, as these are unknown and must be computed from the data. As shown in

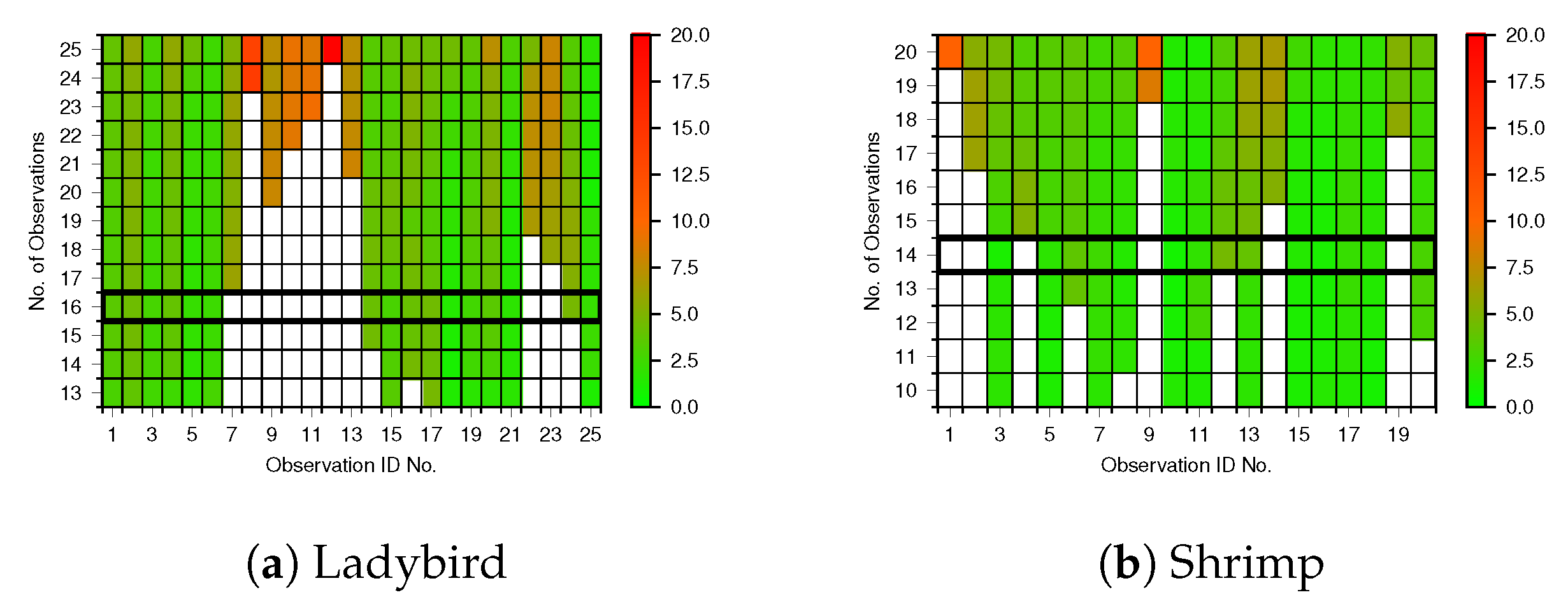

Figure 2, rays are calculated for each pixel observation of each calibration pattern point, given the concurrent navigation system solution and camera pose proposal. Average point locations are determined by triangulating all rays corresponding to the same calibration pattern point. Uncertainties for all inputs (pixel locations, navigation solutions and intrinsics) in the form of covariance matrices are propagated using the Jacobian of the point calculation function, yielding an uncertainty estimate (covariance matrix) for each calibration point estimate.

The proposed method starts with repeated imaging of points that can be uniquely identified. The use of a regular calibration pattern ensures points can be easily distinguished and is therefore recommended. The location of each pattern point

for

is estimated from all of its observation rays

. There are

M points on the calibration pattern and the whole pattern is viewed

N times. For each point, we calculate the nearest points between all pairs of observation rays

and apply a weighted average. Nearest points between rays are calculated as follows [

35]:

where

We could estimate

as the unweighted mean of all

for a given pattern point

k, but some estimates are more certain than others given the conditions of how they were measured. A more accurate estimate is obtained using a weighted average according to the uncertainty. The uncertainty of each point

can be obtained by computing its Jacobian

with respect to all input values. Also required are the uncertainties of the pixel and platform pose observations for each ray, expressed as covariance matrices,

,

,

and

, as well as intrinsic and extrinsic parameter covariances,

and

. Although line scan cameras have only one pixel coordinate (

u), there is also uncertainty in the second coordinate

v, because a point elicits a pixel response if it is located within the camera’s IFOV, not necessarily directly on the scan line.

and

contain variances and covariances of the intrinsic camera parameters and the relative camera pose respectively. All the input covariance matrices are combined into one matrix

:

No correlation between the navigation solutions of the two rays is assumed, which is reasonable if the two observations are sufficiently separated in time.

and

can now be used to compute the uncertainty of

(Equation (

11)) as covariance matrix

:

Because we wish to estimate both

and

with respect to all error sources other than the camera pose, we set all elements of the

covariance matrix

to zero temporarily [

33,

36]. Each point

on the calibration pattern can then be estimated by computing an average that is weighted according to the covariances [

37]:

This ensures that the contribution of each closest point for each ray pair () is weighted according to its certainty, taking into account navigation system uncertainty or challenging viewpoint geometry (such as a small angle between the two rays).

2.4. Calculation of Reprojection Error and Likelihood Optimisation

Once estimates and uncertainties of each calibration pattern point have been obtained, they are reprojected to the camera for each observation, which allows calculating an error between each of the observed pixel locations and the reprojected pixels (see

Figure 2). The uncertainties of all inputs and calibration pattern point estimates are also propagated through, which yields an uncertainty value for each reprojection error. This enables the calculation of an overall likelihood value of the data given a camera pose proposal. The optimiser maximises this likelihood by varying the camera pose to arrive at an estimate.

For each observation

i,

can be reprojected according to Equation (

1), given a

and corresponding navigation system solution

. The reprojection error is calculated as the Euclidean distance between the reprojected and observed pixel locations:

The reprojection is two dimensional, because non-optimal

can result in reprojected pixels that deviate from the one dimensional scan line (

), but

is assumed to be 0. The variance of the reprojection error can also be computed using the input covariance matrix and Jacobian

:

where

The Jacobian

is lower case because it is only one dimensional in this instance, since

is a scalar value. As in Equation (

13), we again set all elements of

to zero. The log likelihood of a transform

given the observations can then be estimated as,

The objective is to maximise

, by varying the 6-DOF

vector. This can be achieved using standard optimisation methods to minimise the negative log likelihood:

is fully recalculated at each optimisation iteration, which includes the triangulation of calibration pattern points and calculation of their reprojection error.

2.5. Variance-Covariance Matrix Estimation

Once the relative camera pose

has been determined, it is desirable to approximate the covariance matrix of the solution, which provides an estimate of how uncertain the six relative camera pose parameters are. In combination with covariances of other parameters, such as the navigation system solution, this also allows mapping accuracy to be quantified. In other words, the result provides values for

, completing the full covariance matrix (see Equation (

29) in

Section 3.6). Note that all elements of

are set to zero for its estimation and optimisation, as previously mentioned in

Section 2.3 and

Section 2.4.

The proposed approach is based on similar work done with lidar sensors [

33,

36], but the details differ because 1D cameras do not directly provide depth information. We propose a random sampling based method, where a set of sample sensor to body transforms are selected using a MCMC algorithm [

38], which differs from the Monte Carlo (MC) importance sampling approach in [

33,

36]. This provides greater sampling efficiency and avoids the need to manually define a sampling region. The algorithm is guided by the likelihood of each relative camera pose sample, which governs the selection of the next sample.

There are several MCMC variations, but they all share the property that each sample is selected based on the previous. For a large number of samples, the distribution tends towards the probability distribution that is being sampled from (i.e.,

in this paper) [

39]. For further details about MCMC sampling, the reader is referred to the numerous resources available on the topic [

38,

39]. The MCMC algorithm provides a list of samples

, which are distributed according to

, from which the covariance can be computed as:

where

3. Materials and Methods

This section outlines the equipment and methods used to obtain the data and analyse the results. A planar calibration pattern was placed in the environment and imaged from several different orientations using a line scanning camera mounted to two different ground based robotic platforms. A navigation system mounted to each platform recorded the 6 DOF position and orientation of the platforms () throughout the acquisition period. Image pixel locations of calibration pattern points and matching robot poses were then used to estimate the relative camera pose using an iterative optimisation algorithm. Finally, the uncertainty of the camera pose estimate in the form of a covariance matrix was approximated using MCMC.

First the ground based mobile platforms and associated sensors used to acquire data are introduced, followed by a description of the data acquisition process and extraction of pattern point observations. The implementation of the method presented in

Section 2 is outlined, which includes the optimisation and an outlier removal process. Methods for mapping image data and comparing camera poses are presented, as required for the analysis of the results, and a method is presented to calculate the basin of attraction, to assess the sensitivity of the process to the initial camera pose.

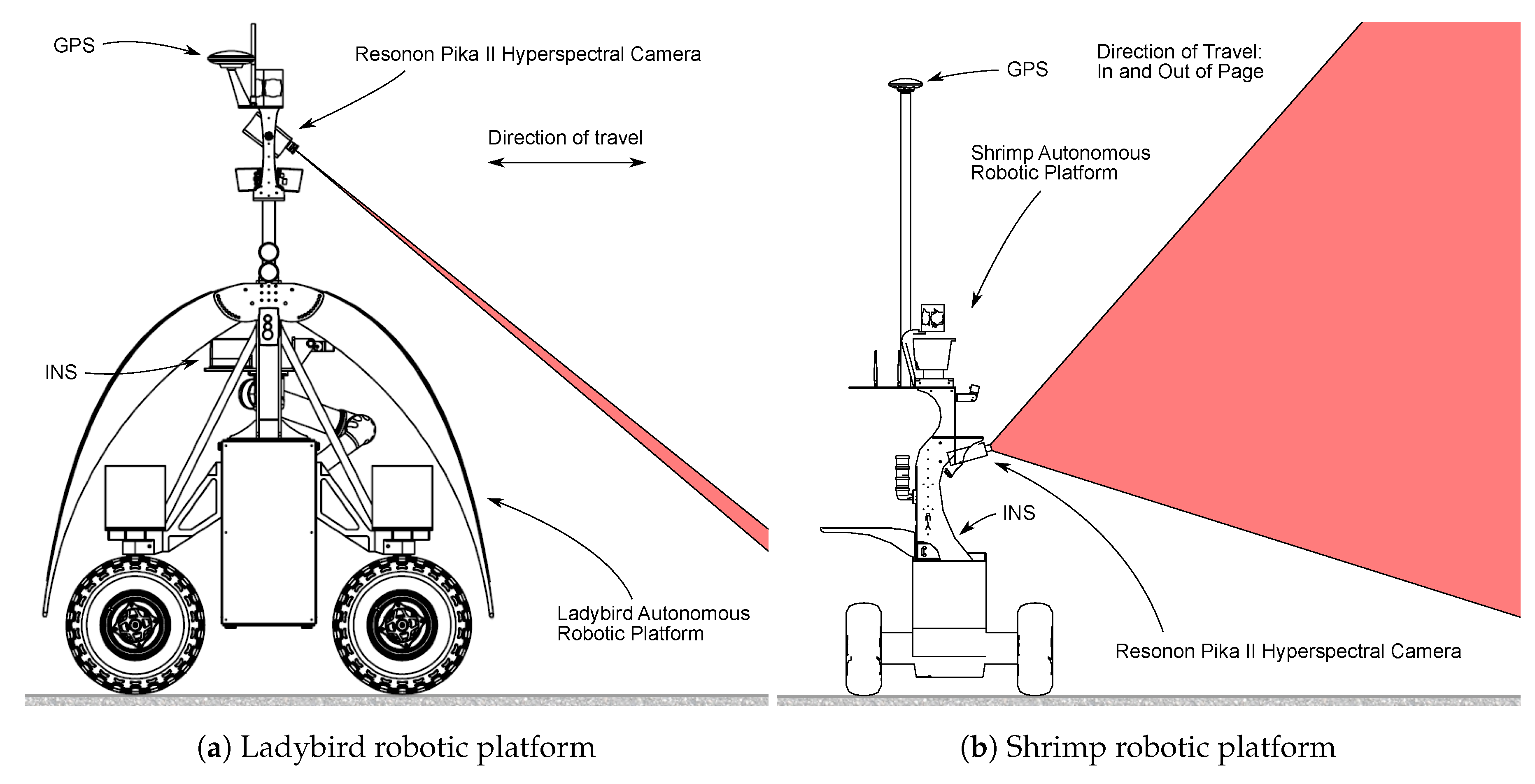

3.1. Mobile Sensing Platforms

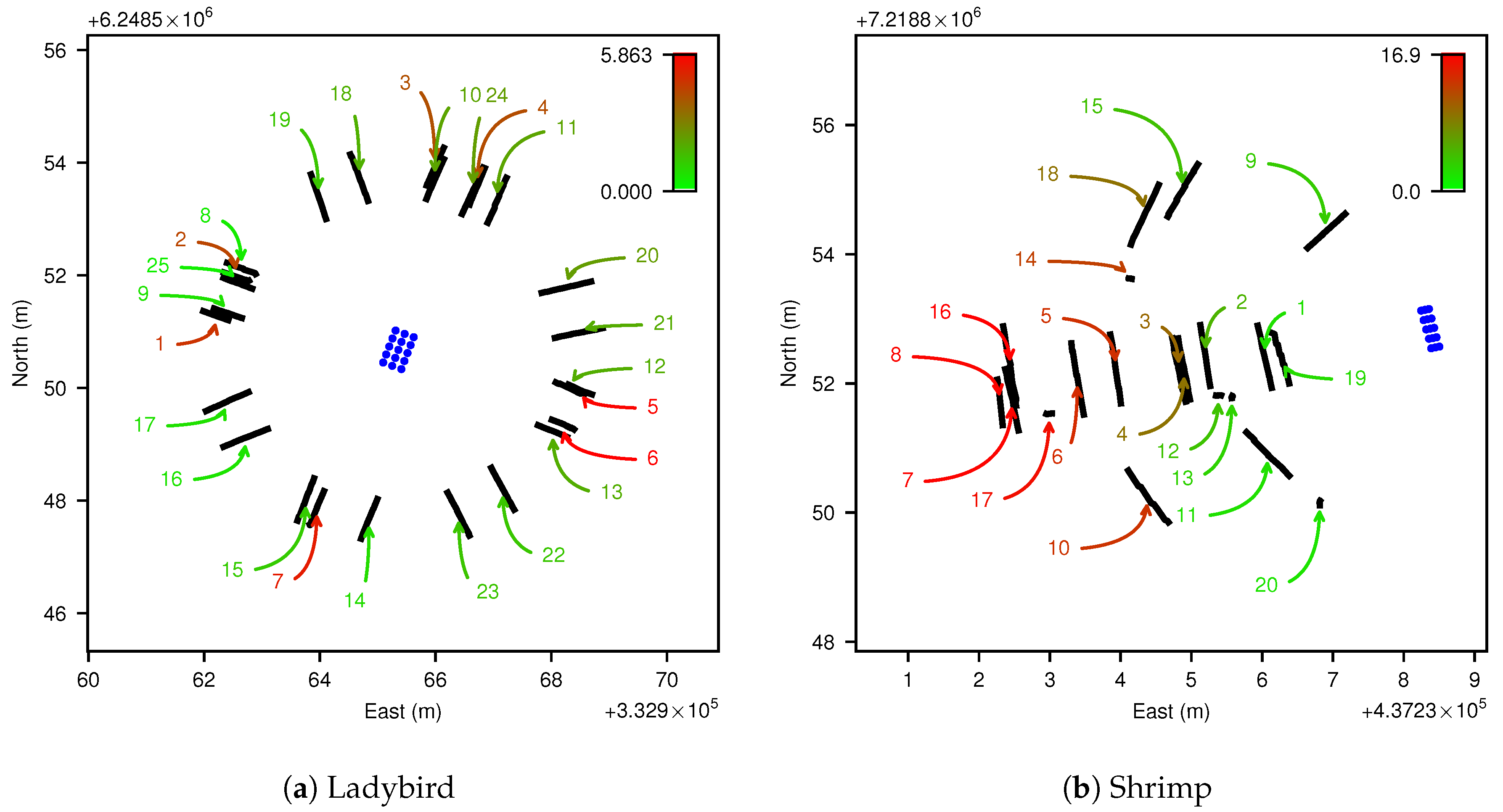

A line scanning hyperspectral camera was mounted to two different robotic platforms, Ladybird and Shrimp (

Figure 3). Both were designed and built at the Australian Centre for Field Robotics (ACFR) at The University of Sydney as flexible tools to support a range of research applications [

40,

41,

42,

43,

44,

45]. The sensor suite on both platforms includes a real time kinematic (RTK)/global positioning system (GPS)/inertial

navigation system (INS), which provides platform pose and covariance estimates (details in

Table 1). The GPS units on both platforms are identical, but the Shrimp platform uses a lower grade inertial measurement unit (IMU) than the Ladybird platform.

Line scan image data were acquired with a Resonon Pika II visible to near infrared (VNIR) line scanning camera that was mounted to the Ladybird and Shrimp robots in a push broom configuration. For the Ladybird, the camera was oriented such that the scan line is horizontal, pitched down for scanning the ground surface (

Figure 3a). On Shrimp, the camera was mounted such that the scan line is vertical, and pitched upwards slightly to allow scanning of upright objects (

Figure 3b). The camera produces hyperspectral images of 648 spatial by 244 spectral pixels (spectral resolution of 2 nm from 390.9–887.4 nm) at a rate of 133 frames per second and native bit depth of 12. For the purposes of this paper, the spectral dimension was averaged to produce 648 pixel monochrome scan lines. Apart from this averaging step, the method described in this paper is not particular to hyperspectral cameras and may be applied equally to other types of line scanning imagers. Schneider Cinegon 6 mm and 8 mm objective lenses were used for Shrimp and Ladybird respectively, and manually focused with a checker board at the typical distance to the scene. The principal point of the camera/lens combination was assumed to be at the centre of the line scan (

), the focal length was assumed to be as per the manufacturer supplied measurements (see

Table 1), and distortion was assumed to be zero. Hand measured pose estimates and manufacturer supplied lens details are shown in

Table 1.

Initial pose estimates were measured by hand with the mobile platforms on a level surface using measuring tape for translational offsets, and a digital inclinometer (SPI Pro 3600) for angular offsets around the robots’ horizontal x and y axes. Angular offsets around the robots’ vertical z axis were assumed to be the intended mounting orientations, which are in increments of 90° for both platforms. Note that if the camera is mounted at angles that are clearly not in 90° increments, referring to a CAD model is recommended. Hand measured translation parameters (, and ) were assumed to have a standard deviation () of 0.1 m and orientation parameters (, and ) were assumed to have a of 2°.

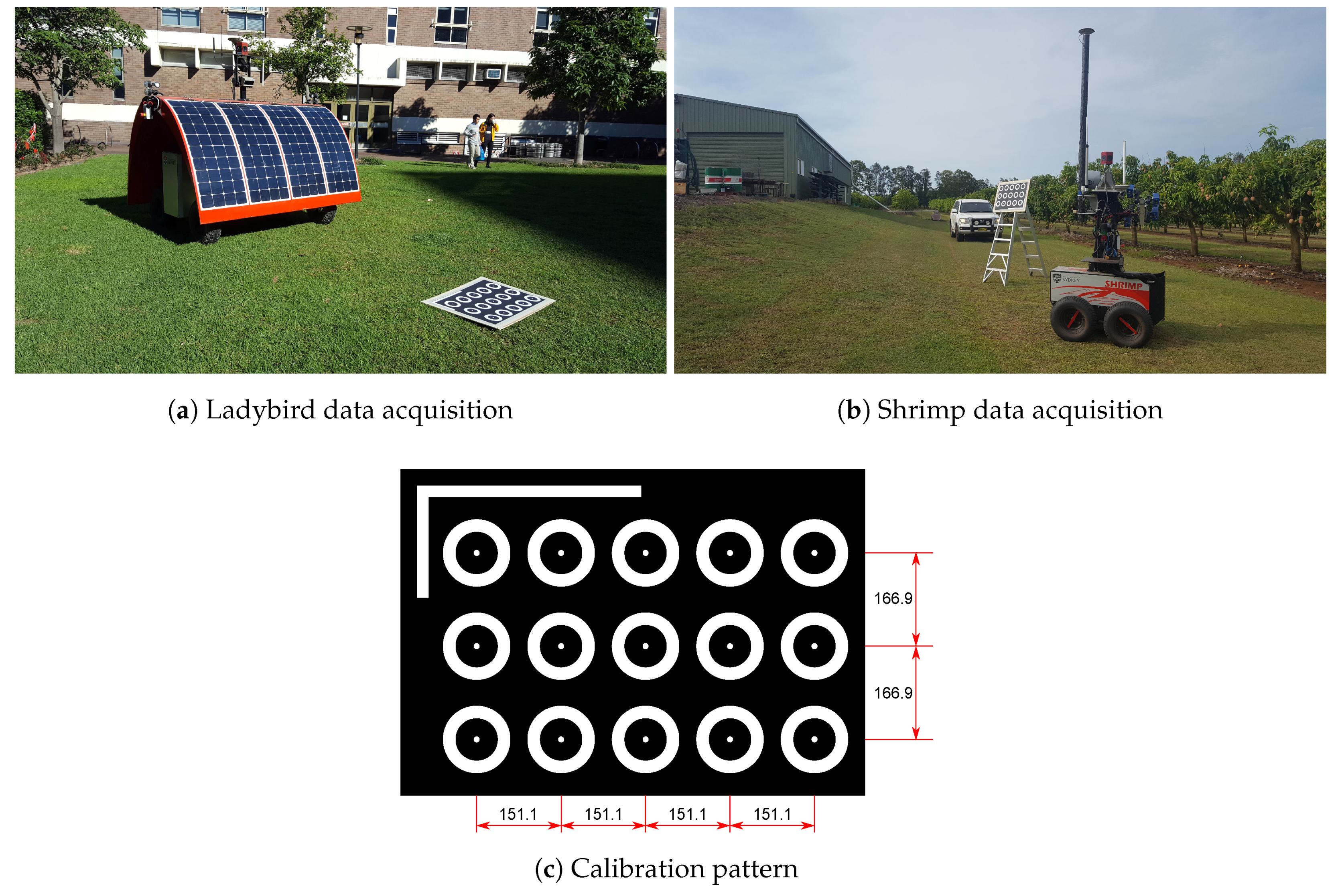

3.2. Data Acquisition

A calibration pattern with 15 points arranged in a 3 × 5 pattern was printed to an A1 size sheet of paper and mounted to a flat rigid plywood board (see

Figure 4c). The pattern was designed to maximise contrast for efficient extraction of pattern points. A corner shape was added to one side of the pattern to facilitate unique identification of each point. It is not necessary to know the pattern’s dimensions for recovery of the platform to camera pose, as each point is treated independently during the calibration. This also means that theoretically a single point with sufficient observations could be used for calibration. However, we added more pattern points since there is no significant practical cost, efficiently increasing the amount of data obtained. For the Ladybird platform, the pattern board was placed on relatively flat ground (see

Figure 4a). As shown in

Figure 5, the pattern was scanned from several directions around a circle with the calibration pattern in the centre. Two types of scans were performed, one with the robot’s wheels flat on the ground and one with one side of the robot elevated by driving over an aluminium channel. This raised two of the wheels by approximately 100 mm, inducing a roll of approximately 4°. For the Shrimp platform, the same calibration pattern was mounted to a ladder in an approximately vertical orientation (see

Figure 4b). In this case data were acquired next to a hill with various orientations and positions with respect the pattern, where the hill caused continuously variable roll and pitch, up to approx. 17° (see

Figure 5b). For both platforms, body orientation was intentionally varied as much as possible in an attempt to maximise observability of parameters [

33]. The robots were manually operated throughout the acquisition period, and care was taken to move slowly and smoothly while the calibration pattern was imaged.

All data was timestamped allowing association between individual scan lines and platform pose solutions. Localisation uncertainties reported by the navigation system are shown in

Table 2 as median standard deviations (i.e., square root of the diagonals of the covariance matrices only) for the acquisition runs, which illustrates that the navigation system in the Ladybird platform is able to provide body pose estimates with much greater certainty than the navigation system on Shrimp, due to the higher grade IMU.

3.3. Pattern Pixel Extraction

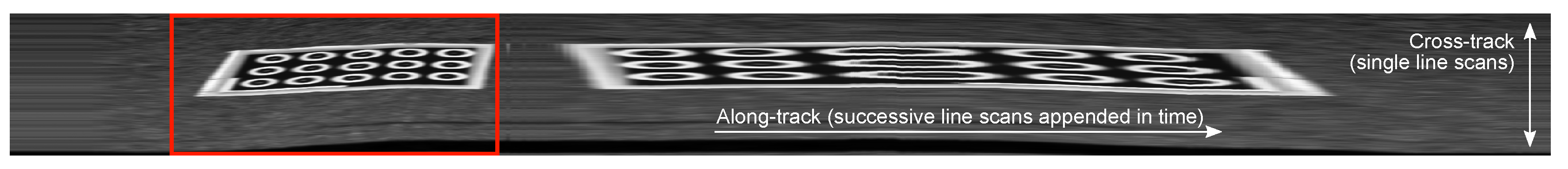

Approximate pixel locations of points on the calibration pattern were selected manually by appending successive line scans to form a rectangular image and selecting individual pattern points in order (see

Figure 6 and

Figure 7). Note that line scans were concatenated naively, ignoring camera or body pose data (i.e., not mapped or georeferenced). This worked well for this purpose, because the platforms were moved slowly and smoothly while the calibration pattern was scanned. Particular care was taken to ensure that point ID numbers were consistent for all observations of the calibration pattern. Pixel locations were then refined to sub pixel precision by extracting a

patch around the selected points and resizing it to

pixels using bi-cubic interpolation. The intensity peak closest to the centre was taken as the pattern point pixel location. Along-track, the closest time stamp was used to obtain the corresponding navigation solution. This provides pixel position

and platform pose

, which are necessary for calibration according to Equation (

22).

3.4. Optimisation and Uncertainty Estimation

Optimisation was performed using the Powell optimiser algorithm provided by the SciPy python package [

46,

47]. While other optimisers may be suitable for this task, as long as they minimize a scalar (negative log likelihood), while varying a vector (relative camera pose), the Powell algorithm achieved acceptable performance with the following tolerance values:

= 1 × 10

−5 and

= 1 × 10

−8. The objective function that was provided to the optimiser takes the relative camera pose parameters (

) and computes the negative log likelihood

(see Equation (

22)) given all pixel locations and navigation system solutions. The optimiser repeatedly calls this function, updating

in order to find a relative camera pose

that minimises

.

As described in

Section 2.5 we use MCMC to estimate uncertainties in the form of a covariance matrix. MCMC was performed with the emcee python package [

38], which was given a function that computes the log likelihood (Equation (

21)). The algorithm was initialised with the previously optimised relative camera pose

, and run with 250 walkers and 100 iterations, yielding 25,000 samples. A burn in run was also performed with 100 iterations to allow the function to explore the local region prior to performing the actual sampling run. Each sample represents one hypothetical parameter vector

. The distribution of the samples generated by the MCMC algorithm correspond to

, so the uncertainty of the relative camera pose estimate,

, can be estimated by computing Equation (

23).

For all calculations of

, 6×6 covariances for the platform pose were provided by the navigation system at each time stamp. It was assumed that a

u pixel point location could be estimated to within one standard deviation of 0.5 pixels (i.e.,

). If a point is visible it must also be within the IFOV of the sensor (see

Table 1), which is approximately 2 pixels for both platforms. We assumed this to span two standard deviations (95%), and so one standard deviation is 0.5 pixels (

). Principal point and focal length were assumed to have a standard deviation of 2 pixels and 0.1 mm respectively. As previously mentioned, the uncertainty of the relative camera pose,

, was temporarily set to zero (see

Section 2.3 and

Section 2.4).

3.5. Outlier Removal

Unusually high reprojection errors were removed by an iterative process of outlier rejection. First optimisation was performed on all observations shown in

Figure 5 for each platform. Reprojection errors,

were calculated for each observation

i of each pattern point

k (see Equation (

18)). These were then averaged per observation:

The observation i with the largest mean reprojection error was then removed from the data set and the process was repeated several times (i.e., optimise, calculate reprojection error, remove observation with largest reprojection error). The removal process may be stopped once all mean reprojection errors are below a threshold.

3.6. Mapping

To demonstrate mapping performance, rays were projected to a plane that was fitted to the estimated pattern point coordinates. Utilising the method in

Section 2.3, the pattern points (

) were first calculated given the data and relative camera pose . Using the general form of the equation of a plane

, a best fit plane can be found in a linear least squares fashion (setting

):

where the plane parameters

can be solved for by left multiplying

with the pseudo-inverse of

,

.

The rays for observation

i of pattern point

k, defined by

and

as calculated in

Section 2.1, can then be projected to the plane by computing their point of intersection:

Knowing all input covariance matrices, including the covariance of the relative camera position and orientation, as obtained using MCMC (see

Section 2.5), the uncertainty of each point projection

may also be calculated. First the partial derivatives of Equation (

27) with respect to all inputs were computed to yield the Jacobian

of the

x,

y and

z position of each point. The uncertainty of each projected point can then be calculated:

where

3.7. Comparing Poses

To assess how close a solution is to the optimal, we require a distance metric between two different 6 DOF pose transforms. As described in

Section 2.2, each pose vector is composed of three translation and three orientation parameters. Given two unique poses

and

, we can compare the translation parts easily by computing their Euclidean distance:

However, measuring the distance or difference between two rotations is more complicated, and the readers are referred to Huynh [

34] for an in-depth analysis of the topic. Huynh [

34] presents and recommends a number of metrics for comparing rotations. Of these, the geodesic on the unit sphere was chosen, because it represents the magnitude of the rotation angle required to align the two rotations, which was deemed to be an intuitive measure. It can be computed as follows:

where

and

are unit quaternion equivalents of

and

respectively, computed as:

can also be interpreted to be equal to the absolute value of the angular magnitude (in the range ) of the axis-angle rotation required to align the two orientations. For this reason, the metric will be simply referred to as the axis-angle difference. Combined, and form a 2D pose distance that is convenient for visualisation.

3.8. Basin of Attraction

Because the method in this paper requires an approximate initial camera pose, it is important to numerically quantify how precise this initial camera pose must be to yield an accurate optimised estimate. To measure how far an initial hand measured camera pose can be from the optimum, while still resulting in correct global convergence, we test a set of starting conditions that are altered by different amounts, and measure how close the optimal result is from the known global optimum. Due to the high dimensionality of the search space, a random sub-sampling of initial poses is performed. Deviation of initial values from the known optimum is quantified in the two dimensional pose distance space defined in

Section 3.7. The 2D space was sampled uniformly in a grid, and for each location a 6D initial parameter vector was randomly generated at the corresponding Euclidean and axis-angle distance from the known optimal value.

First an x, y and z translation vector was generated at random, uniformly distributed over an equal negative to positive range for all three parameters. The vector was normalised to unity and then multiplied by the Euclidean distance value of the corresponding grid position. The resulting vector was added to the optimised translation parameters, yielding the initial coordinates. Similarly, three axis-angle orientation values were randomly generated in the same way, normalised to unity and multiplied by the corresponding axis-angle difference value at the given grid location, yielding an axis-angle rotation. The optimised orientation parameters were then rotated by this difference rotation, producing the initial orientation values.

This yields a set of sparse random 6 DOF samples that are uniformly spaced in terms of pose distance from the known optimal camera pose, allowing the basin of attraction to be mapped. Optimisation was performed for each randomly generated initial camera pose on the grid. For each result, the Mahalanobis distance to the reference optimum was calculated, given the covariance matrix resulting from the MCMC uncertainty estimation on the optimised parameters.

4. Results

This section presents the results of line scan camera pose estimation for two different platforms and configurations, including outlier rejection, resulting camera pose and uncertainty, in-depth analysis of the uncertainty, the impact of platform pose diversity on the accuracy, and finally the combined mapping uncertainty.

4.1. Outlier Rejection

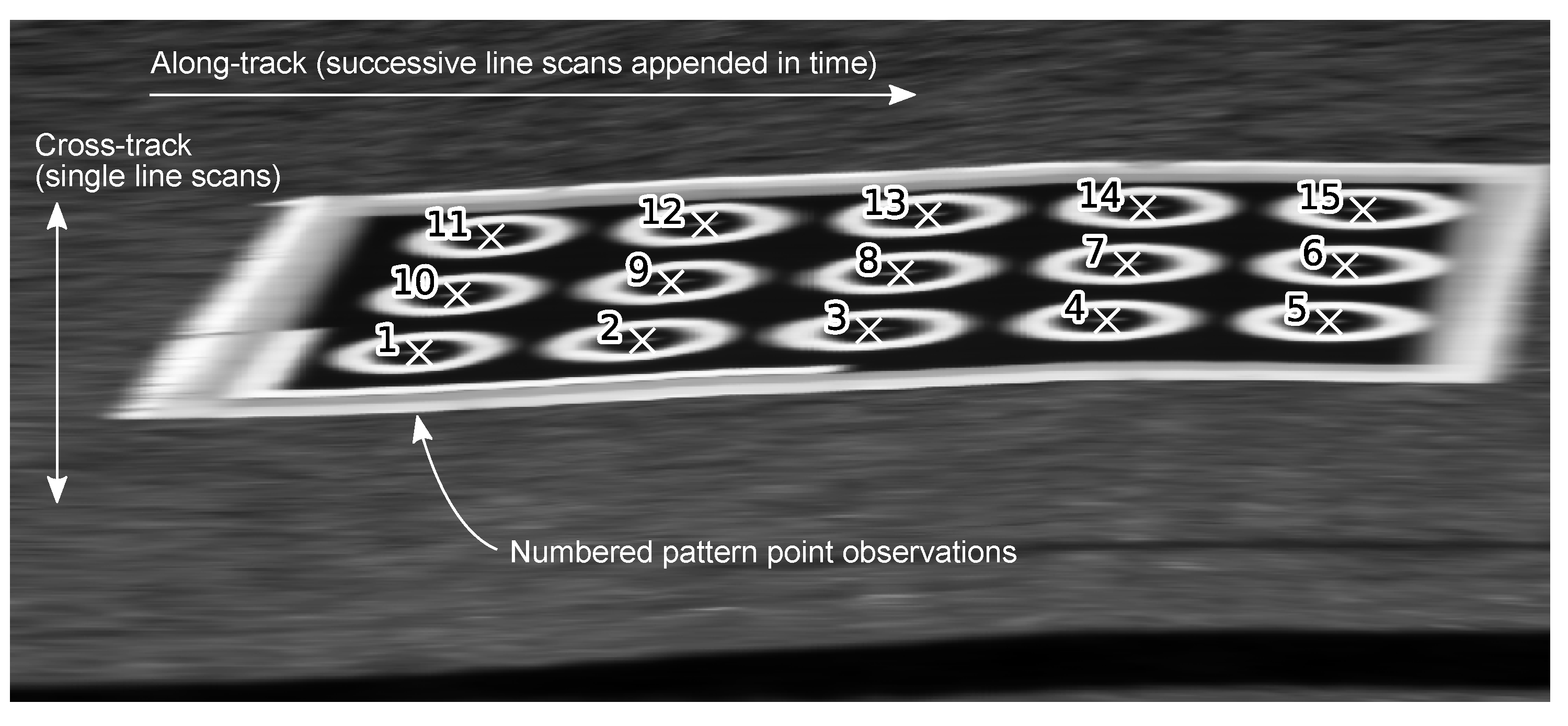

The iterative results of outlier removal based on reprojection errors are shown in

Figure 8. Each row represents an outlier removal iteration, labelled by the number of remaining observations, where the top row includes all observations. Each column represents one of the observations from

Figure 5, where each observation is one view of all 15 points on the calibration pattern. The colour of each cell indicates the mean reprojection error of the 15 points within the single observation of the calibration pattern, for a particular outlier removal iteration. In each figure, the black rectangle highlights the row with the greatest number of observations that all have mean errors less than a 5 pixel threshold. Ladybird exhibited a greater number of outliers and higher worst-case reprojection errors than Shrimp, with 9 compared to 6 outliers respectively. This resulted in 16 inliers for Ladybird and 14 for Shrimp.

4.2. Pose and Uncertainty Results

The relative camera pose transforms and associated uncertainties (one standard deviation) for both platforms are shown in

Table 3 before and after outlier removal. The hand measured estimate is also shown for reference, where the tolerances reflect the difficulty of measurement. The results for the Shrimp platform exhibit greater uncertainty compared to the Ladybird platform.

In

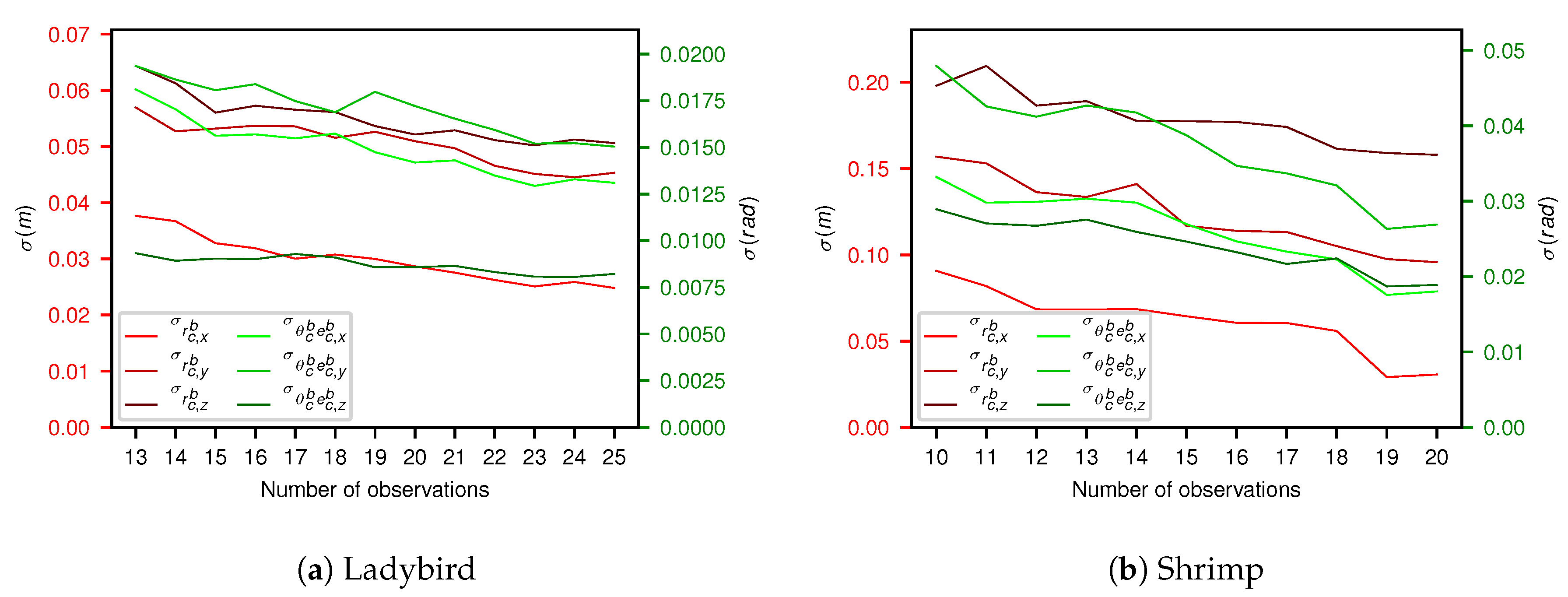

Figure 9 each outlier removal stage is plotted against each parameter’s standard deviation. As would be expected, increasing the number of observations decreases the uncertainty of the estimate.

The number of observations affects the number of computations and therefore has a significant effect on calibration and MCMC run time. For Ladybird, optimisation and MCMC took approx. 15 min and 7 h respectively for all 25 observations. For 16 observations, this was reduced to just over 7 min and 4 h. For Shrimp, the respective optimisation and MCMC times were reduced from approx. 5.5 min and 5 h for all 20 observations to just over 2 min and 3 h with 14 observations.

4.3. In-Depth Uncertainty Analysis

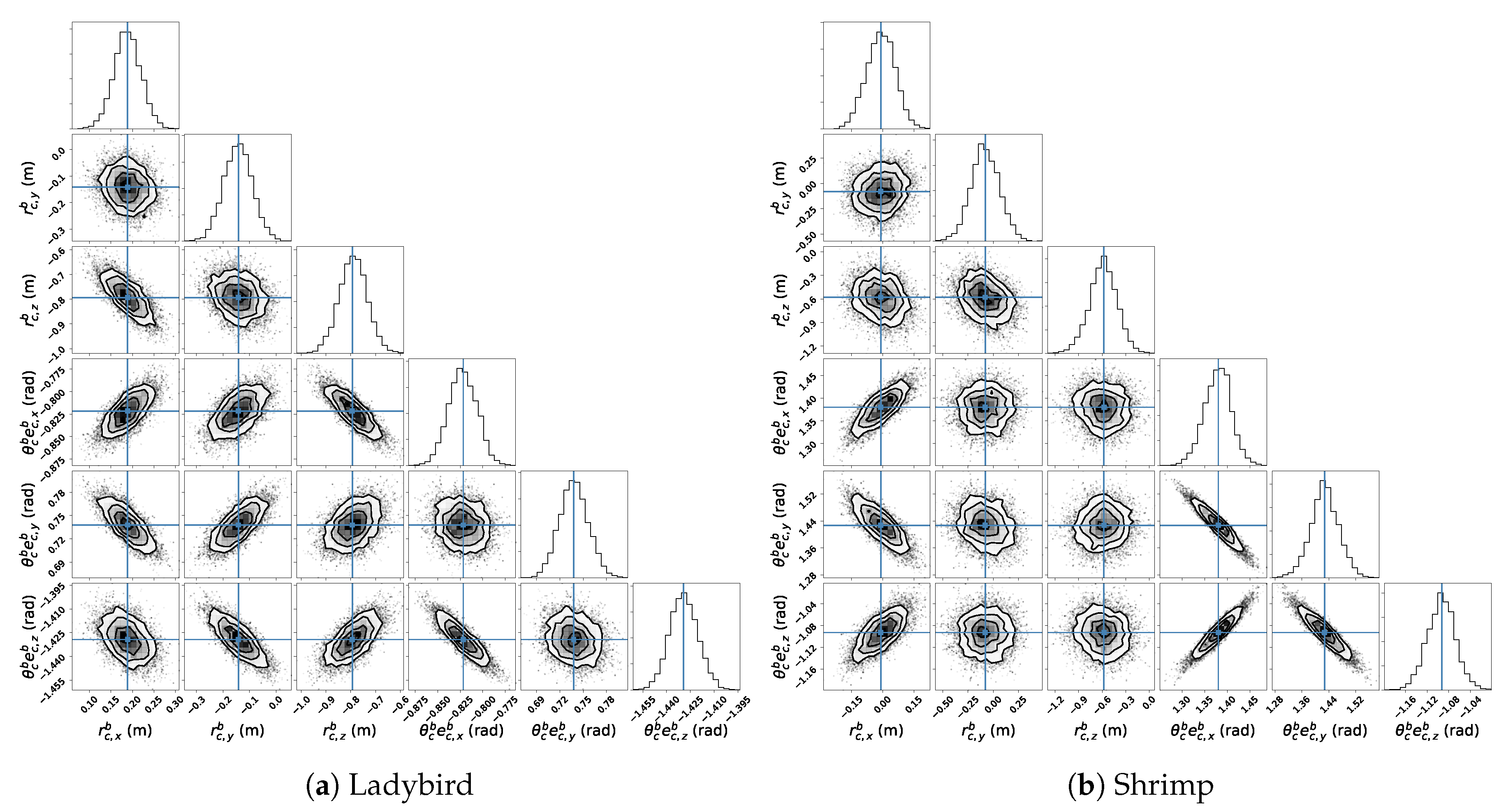

Examining uncertainty in more detail, MCMC samples are shown on a corner plot in

Figure 10 [

48]. Each sub-plot below the diagonal provides a 2D histogram, showing the MCMC sample density between two parameters (i.e., the marginal likelihood distribution for a parameter pair), and on the diagonal a 1D histogram, giving the sample density for the marginal likelihood distribution for each single parameter.

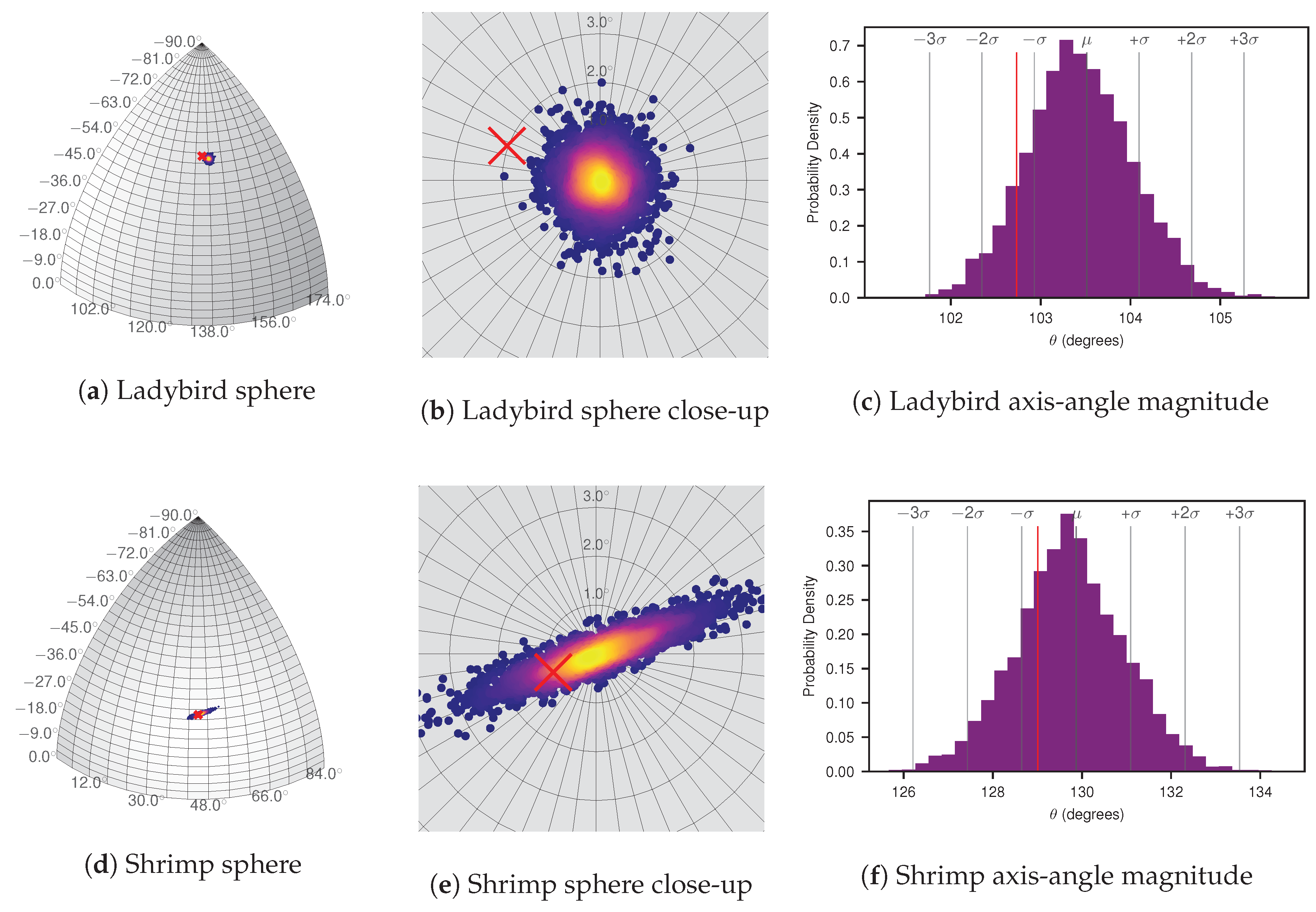

For human interpretation of the uncertainty, in

Figure 11a,d the MCMC sample values for

are plotted on a sphere. For visualisation only, each point is coloured according to a kernel density estimate (KDE) performed on all MCMC samples to give an indication of the axis-angle vector marginal likelihood. Hand measured pose estimates are shown as red crosses. Likewise, in

Figure 11c,f, values for

are presented in a histogram, showing the marginal likelihood of the axis-angle magnitude. While both figures indicate a clustering to within two degrees, the Shrimp platform’s distribution exhibits a more elongated elliptical shape, while for Ladybird, it is more uniformly spread in all directions. Also apparent for Ladybird is that the manual measurements are well outside the region of high likelihood, both in terms of the axis-angle unit vector and magnitude. Conversely, the hand measured pose for the Shrimp platform is highly likely given the data, suggesting the initial manual measurement may have been more accurate than for Ladybird.

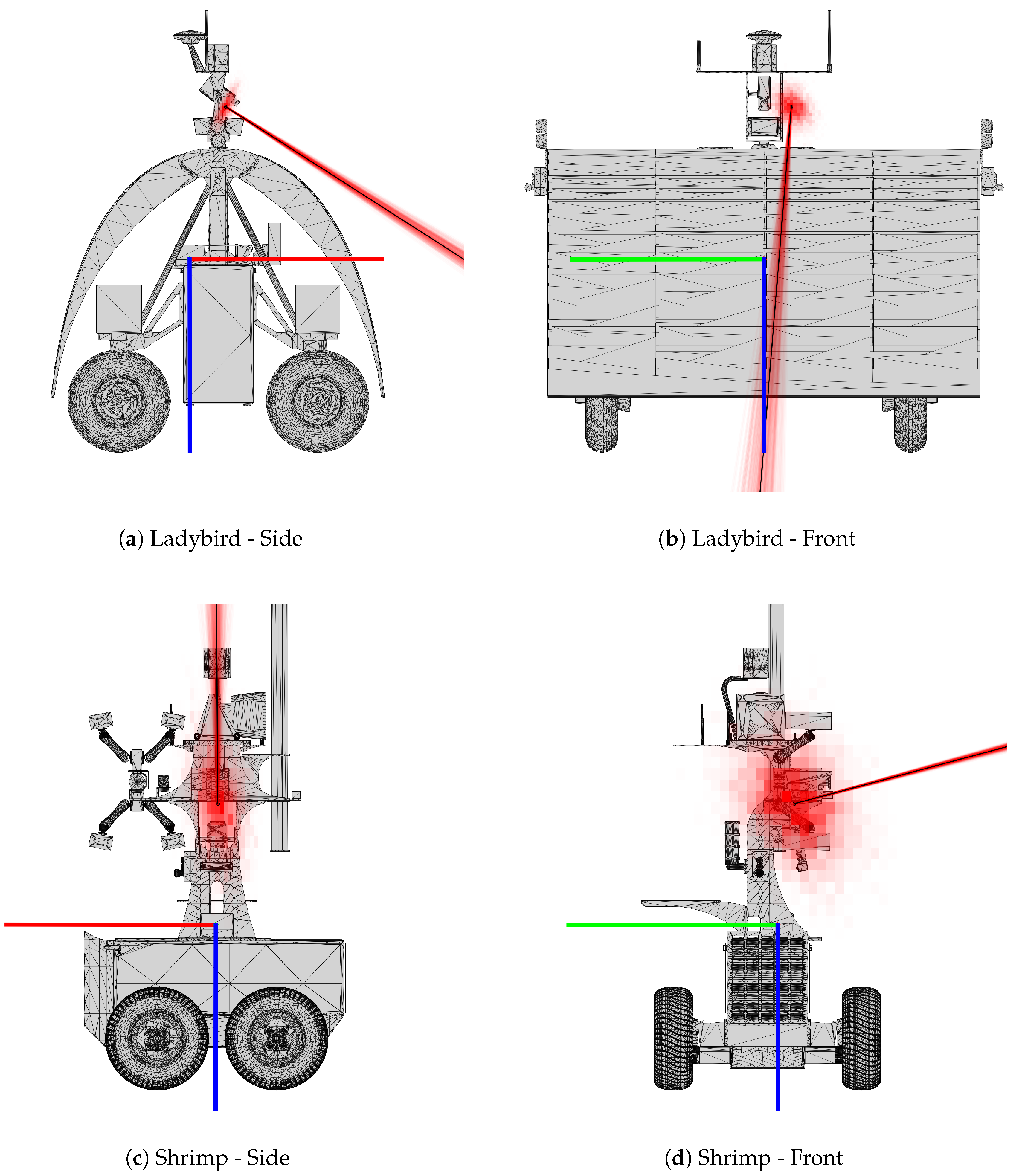

In

Figure 12, the distributions of MCMC samples is shown superimposed on the corresponding platform model. Translation parameters are presented as a 2D histogram, similar to

Figure 10, demonstrating the marginal density of the likelihood distribution from each orthogonal viewpoint. To present the orientation parameter distribution, line segments coincident with the camera’s viewing direction, and anchored to the optimized camera centre, are rotated by each MCMC rotation sample. Each line is semi-transparent, and so as all samples build up, the density distribution of the camera orientation is visualised. It is evident that there is greater variance in the MCMC samples for Shrimp when compared to the Ladybird platform, particularly for the translation parameters, as corroborated by the numerical results in

Table 3.

4.4. Effect of Angular Diversity

To investigate how angular diversity of body poses in a dataset affects the certainty of the result, two experimental subsets were compiled from the outlier-rejected dataset with 16 observations for Ladybird. The first includes only ten observations with small roll angles

< 1.9°. The second includes five observations with small roll

and five with roll angles 3.3° <

< 5.8°. Both datasets therefore contain ten total observations, representing low and high angular diversity. Optimisation results for these subsets are shown in

Table 4. Despite containing the same number of observations, the dataset with high angular diversity results in significantly lower uncertainty for the optimal camera pose, compared to the low diversity set.

4.5. Repeatability

To assess the repeatability of the approach, another two experimental subsets were compiled from the outlier-rejected dataset with 16 observations for Ladybird. They each contained five observations with small roll

and three with roll angles 3.3° <

< 5.8°. The two subsets do not share any observations, making them independent. Optimisation results for these subsets are shown in

Table 5. The two results are consistent with each other, as the two distributions overlap to a significant degree. Due to the small dataset size, uncertainty values are higher than the result in

Table 3 with all 16 observations, which is expected as demonstrated in

Section 4.3.

4.6. Mapping Accuracy

Figure 13 shows Ladybird’s and Shrimp’s observations, after outlier removal, projected to the best fit plane of predicted point locations before and after calibration (see

Section 3.6).

Figure 13a,c give projections of all observations, while

Figure 13b,d only show the projections for one observation, but include uncertainty ellipses. Post calibration average pattern point estimates are also shown as green crosses.

For both platforms, the calibrated camera pose exhibited more densely bound projected points. The change in spread is less pronounced for the Shrimp platform, because as mentioned previously the manual measurements were by chance much closer to the optimum values, though a significant offset can be observed between manual and calibrated results. The plots also demonstrate the effect of relative camera pose uncertainty on mapping uncertainty, which were significant for both Ladybird and Shrimp.

Given the hand measurement for shrimp happened to be close to the optimal, the effect of adding just one degree of error to the measured axis-angle vector is shown in

Figure 14, which compares the mapped points from the optimised and erroneous camera pose. The optimisation improves the cluster significantly compared to a hand measured pose with one degree error.

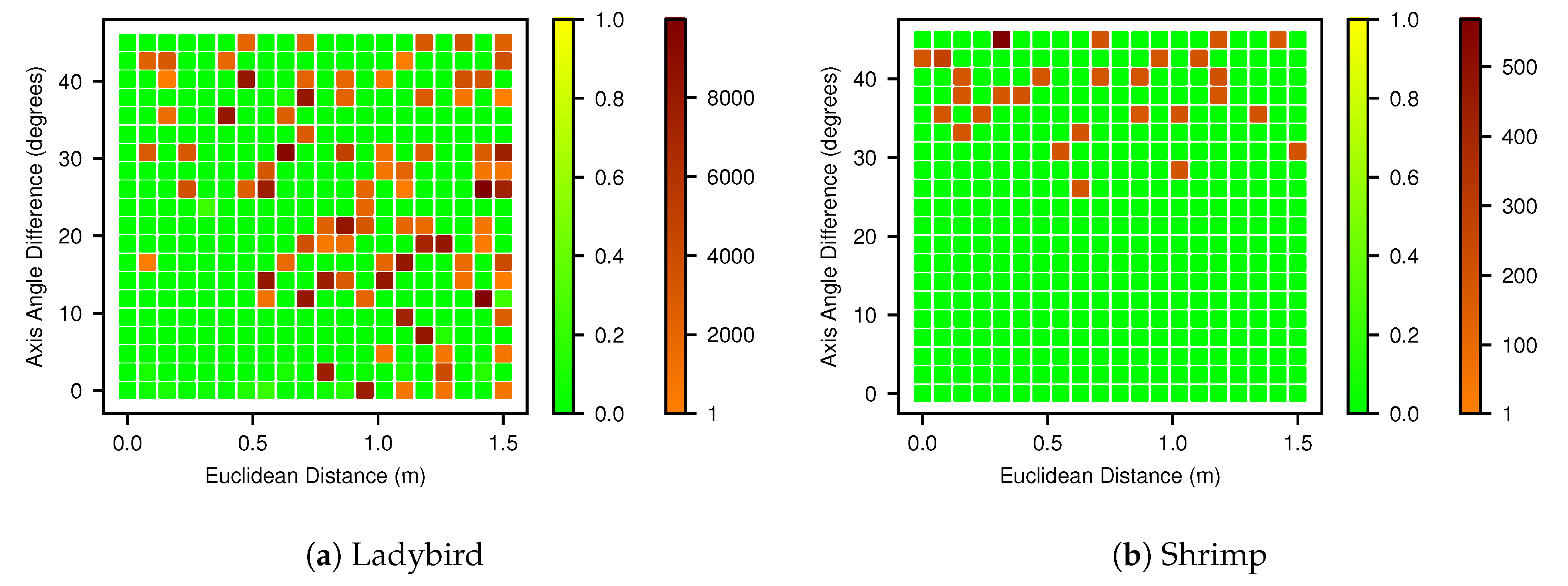

4.7. Basin of Attraction

Basin of attraction plots, which were generated as described in

Section 3.8 using the Powell optimiser, are shown in

Figure 15 for both platforms. The Mahalanobis distances were generally either close to zero or very large, so they are colour coded into two tiers, below and above 1.0, to improve readability. The basin for the Ladybird platform (

Figure 15a) shows success can be expected in the triangular region with less than 20° and 0.5 m of hand measurement error. The basin for Shrimp (

Figure 15b) shows a greater immunity to translation errors and successful results can be expected with initialisation errors less than 20° and as high as 1.5 m. Both figures indicate that when the initialisation error is higher, there is still a high chance (approx. 60%) of a solution within a Mahalanobis distance of 0.1, but it cannot be relied upon, and deteriorates as the distance from optimum increases.

5. Discussion

The results show that the proposed method was able to to reliably estimate the relative line scan camera pose on a mobile ground vehicle, resulting in a reduction in mapping error, as long as the calibration data includes sufficient viewpoint variability. An uncertainty of 0.06 m/0.018 rad (1.05°) for Ladybird, and 0.18 m/0.042 rad (2.39°) for Shrimp was achieved. This result is dependent on the certainty of input parameters, which include pixel observations, navigation system solutions, and camera intrinsics. For example, confidence in the calibrated pose parameters for the Ladybird platform was significantly greater than for the Shrimp platform, due to Ladybird’s higher grade IMU, which allowed the navigation system to provide more certain solutions.

The results show that it is necessary to examine reprojection errors and remove outliers, as is common with many camera calibration approaches. Outliers statistically fall outside the assumptions encoded in their respective error models, and so the mean of the final camera pose distribution is pulled in the wrong direction. A number of observations for both platforms exhibited high reprojection errors relative to other observations (>approx. 8 pixels). These errors could be caused by manual labelling inaccuracies (e.g., due to limited resolution), navigation system solution errors that incorrectly fall outside the reported navigation uncertainty, or a combination thereof. Removing outliers had significant effects on the results, evident particularly in the correction of some parameters such as the z-offset

for both platforms (see

Table 3). The results shown in

Figure 8 also support the iterative removal of outliers at each stage. For instance, reprojection errors of Ladybird observation 20 improve as other observations are removed, while Shrimp observation 4 degrades, and was eventually removed. Conversely, as shown in

Figure 9 a larger number of observations, outliers or not, allows for greater certainty in the final result. Thus, there are two competing factors when performing outlier rejection. A sufficiently large number of observations is required to maintain an acceptable level of certainty, yet removing outliers is important to minimise reprojection errors. It is, therefore, desirable to obtain a sufficient number of observations to allow for subsequent outlier removal. The additional computational time required when increasing the number of observations is also a consideration. The ending condition threshold should be chosen such that significant outliers are removed, but a sufficient number of observations remain. In this paper a value of 5 pixels was empirically determined as an appropriately balanced threshold given the data.

The main product of the MCMC uncertainty analysis is a covariance matrix (Equation (

23)), which can be used to estimate mapping accuracy (e.g.,

Figure 13a,c). However, covariance matrices represent uncertainty in a compressed format, given the assumption that the likelihood function is normally distributed. The corner plots (

Figure 10) provide a direct view of the MCMC sampling result, which qualitatively confirm that for both vehicles the normality assumption is justified: specifically that the distributions behave linearly within the sampled region, and the 1D histograms are qualitatively Gaussian in shape.

In this paper we propose methods of visualising sensor pose distributions in a human interpretable way, as depicted in

Figure 11 and

Figure 12. The sphere plots and associated axis-angle magnitude histograms in

Figure 11 present the same underlying data in the MCMC sample plots (

Figure 10), but focus on human interpretability of the orientation parameters. The sphere provides a relatable reference that demonstrates how closely clustered the pose orientation is. Similarly, the visualisation in

Figure 12 allows for human interpretation of the resulting camera pose and uncertainty (likelihood distribution) with respect to the platform models. These figures particularly highlight the greater uncertainty in translation parameters compared to orientation. They also confirm that the solutions are qualitatively “sensible” with respect to the physical platform models.

The primary objective of optimising the camera pose is to reduce mapping errors. This was demonstrated in the results by the tighter clustering of mapped calibration target points that was achieved post-calibration. The improvement was particularly noticeable for the Ladybird platform, and to a lesser extent for the Shrimp platform. By chance, the manually measured camera pose on Shrimp was much closer to the optimal result than it was for Ladybird, and so the mapping improvement for Shrimp was less pronounced. The camera location on the Shrimp platform was easier to access, due to the lower height and smaller footprint, compared to the Ladybird platform, which likely explains the better manual estimate. Nevertheless, such accurate manual measurements can typically not be guaranteed, and

Figure 14 reveals the sensitivity of the map to small errors in camera pose, highlighting the need for calibration.

The results reinforce the importance of acquiring a calibration dataset that exhibits a wide variety of platform poses with respect to the calibration pattern. This was tested by optimising both with and without large body roll (

) observations. Removing high

observations had a considerable effect, as shown in

Table 4, where uncertainty approximately doubled and even tripled for some parameters.

The proposed method is able to deal with a wide range of initial hand measured values when paired with the Powell optimisation algorithm. In

Figure 15 we propose an intuitive approach to visualising the basins of attraction, by reducing the 6 DOF initial parameter space to form a 2D pose-distance space, comprising Euclidean and axis-angle distance. The plots demonstrate that initial estimates that deviate up to 0.5 m or 20° are likely to result in a successful optimisation. Additionally, with even larger deviations there is still a better than even ( 60%) chance of success. However, this is highly dependent on the geometry of the sensor and platform, the acquired data and the chosen optimisation algorithm. In our case, the solution for the Shrimp platform was surprisingly robust to initial translation errors (up to 1.5 m), while some failures can be seen at over 0.5 m for the Ladybird platform. This may be the result of the greater platform roll and pitch angles (up to 17°) achieved with Shrimp during data acquisition. In addition, different optimisers will have varying abilities to deal with local minima and “flat” regions in the 6 DOF parameter space. Nevertheless, measurement error tolerances of ±0.5 m and ±20° should be practically achievable for most applications.

As shown in

Table 5, the camera pose estimates obtained from two independent datasets for the same platform were consistent with each other. The results therefore verify that the proposed approach is repeatable by demonstrating that different sets of data from the same platform yield consistent results.

An important advantage of the proposed method is that exact dimensions of the calibration pattern do not need to be known. As such, a planarity assumption or assumptions about the distances or geometry between points are not required. This simplifies the method in the field because it is not affected by printing errors or damage/warping of the pattern which affects the relative geometry of the points. Furthermore, a calibration pattern could be manually produced in the field if necessary. One important condition, however, is that individual points are uniquely distinguishable in the line scan image data.