Calibration of Binocular Vision Sensors Based on Unknown-Sized Elliptical Stripe Images

Abstract

1. Introduction

2. Principle and Methods

2.1. Mathematical Model of BSVS

2.2. Algorithm Principle

- The ratios of the minor axis to the major axis are equal for the two elliptical stripes.

- The minor axis (or major axis) of one elliptical stripe is parallel to that of the other elliptical stripe. The angles between the minor axis and the major axis of these two elliptical stripes are equal.
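The first two properties can be checked numerically once each stripe has been fitted with a general conic ax² + bxy + cy² + dx + ey + f = 0. The sketch below assumes that both conics are expressed in in-plane coordinates on their own target planes, with the in-plane axes aligned between the two parallel planes (under perspective projection the image ellipses generally do not keep these properties). The function names and tolerances are hypothetical illustrations, not the authors' implementation, and the third property is not checked.

```python
import numpy as np

def conic_from_params(xc, yc, semi_major, semi_minor, theta):
    """Coefficients (a, b, c, d, e, f) of the ellipse with centre (xc, yc),
    the given semi-axes and major-axis angle theta (radians)."""
    ct, st = np.cos(theta), np.sin(theta)
    ia, ib = 1.0 / semi_major**2, 1.0 / semi_minor**2
    a = ct**2 * ia + st**2 * ib
    b = 2.0 * ct * st * (ia - ib)
    c = st**2 * ia + ct**2 * ib
    d = -2.0 * a * xc - b * yc
    e = -b * xc - 2.0 * c * yc
    f = a * xc**2 + b * xc * yc + c * yc**2 - 1.0
    return a, b, c, d, e, f

def ellipse_axes_from_conic(a, b, c, d, e, f):
    """Return (semi_major, semi_minor, angle) of a*x^2 + b*x*y + c*y^2
    + d*x + e*y + f = 0, with the major-axis angle in [0, pi)."""
    if b * b - 4.0 * a * c >= 0.0:
        raise ValueError("coefficients do not describe an ellipse")
    if a < 0.0:  # flip the sign so the quadratic form is positive definite
        a, b, c, d, e, f = -a, -b, -c, -d, -e, -f
    M = np.array([[a, b / 2.0], [b / 2.0, c]])
    # The centre is where the gradient of the conic vanishes.
    center = np.linalg.solve(M, -0.5 * np.array([d, e]))
    # Constant term after translating the conic to its centre.
    f0 = f + 0.5 * (d * center[0] + e * center[1])
    if f0 >= 0.0:
        raise ValueError("ellipse has no real points")
    eigvals, eigvecs = np.linalg.eigh(M)  # ascending order, both positive
    # The smaller eigenvalue belongs to the longer (major) axis.
    semi_major, semi_minor = np.sqrt(-f0 / eigvals)
    major_dir = eigvecs[:, 0]
    angle = np.arctan2(major_dir[1], major_dir[0]) % np.pi
    return semi_major, semi_minor, angle

def stripes_similar(conic1, conic2, ratio_tol=1e-3, angle_tol=np.deg2rad(0.5)):
    """True if the minor/major axis ratios match and the major axes are parallel."""
    A1, B1, t1 = ellipse_axes_from_conic(*conic1)
    A2, B2, t2 = ellipse_axes_from_conic(*conic2)
    same_ratio = abs(B1 / A1 - B2 / A2) < ratio_tol
    dt = abs(t1 - t2) % np.pi  # axis directions are only defined modulo pi
    parallel = min(dt, np.pi - dt) < angle_tol
    return bool(same_ratio and parallel)

# Two hypothetical stripes: the second is a scaled, shifted copy of the first.
near = conic_from_params(0.0, 0.0, 40.0, 25.0, 0.3)
far = conic_from_params(10.0, 5.0, 80.0, 50.0, 0.3)
print(stripes_similar(near, far))
```

In practice the conic coefficients would come from an ellipse fit to the extracted stripe centres (for example, a direct least-squares ellipse fit); the tolerances would need to reflect the noise level of that fit.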

2.2.1. Solving

2.2.2. Solving

2.2.3. Non-Linear Optimization

3. Discussion

4. Experiment

4.1. Simulation Experiment

4.1.1. Impact of Image Noise on Calibration Accuracy

4.1.2. Impact of Distance between Two Target Planes on Calibration Accuracy

4.1.3. Impact of Elliptical Stripe Size on Calibration Accuracy

4.2. Physical Experiment

4.2.1. Performance of Different Targets in Complex Light Environments

4.2.2. Extrinsic Calibration of BSVS

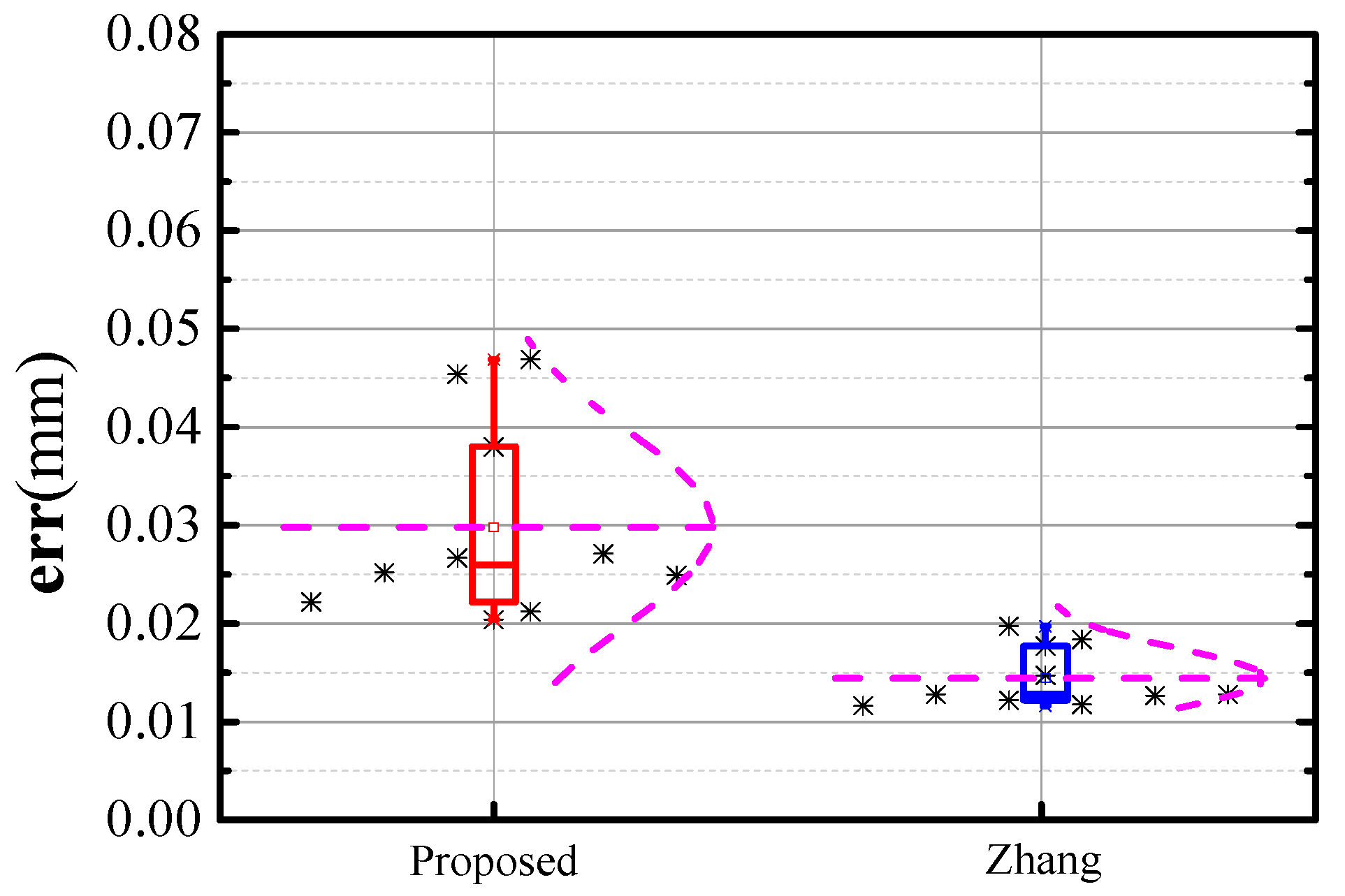

4.2.3. Evaluation of the Proposed Method

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Camera | fx (pixel) | fy (pixel) | u0 (pixel) | v0 (pixel) | γ | k1 (mm⁻²) | k2 (mm⁻⁴) |
|---|---|---|---|---|---|---|---|
| Left camera | 3672.23 | 3672.87 | 833.11 | 631.99 | 8.46 × 10⁻⁵ | −0.11 | −0.05 |
| Right camera | 3673.59 | 3672.85 | 821.11 | 632.18 | −1.59 × 10⁻⁵ | −0.13 | 0.92 |
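The intrinsic parameters above fit the usual pinhole model, in which the matrix K = [[fx, γ, u0], [0, fy, v0], [0, 0, 1]] maps a point in camera coordinates to pixel coordinates. The sketch below assumes that convention, with γ read as a skew term (our interpretation; this section does not define it). It omits k1 and k2: their units (mm⁻², mm⁻⁴) suggest the distortion is applied to metric image-plane coordinates, which would need a pixel pitch not given here. The projected point is hypothetical.

```python
import numpy as np

# Left-camera intrinsics copied from the table above (pixel units).
FX, FY, U0, V0, GAMMA = 3672.23, 3672.87, 833.11, 631.99, 8.46e-5

def intrinsic_matrix(fx, fy, u0, v0, gamma):
    """Upper-triangular intrinsic matrix K with skew term gamma."""
    return np.array([[fx, gamma, u0],
                     [0.0, fy, v0],
                     [0.0, 0.0, 1.0]])

def project(K, point_cam):
    """Pinhole projection of a 3D point in camera coordinates (mm) to pixels.
    Lens distortion (k1, k2) is deliberately ignored in this sketch."""
    p = K @ np.asarray(point_cam, dtype=float)
    return p[:2] / p[2]

K_left = intrinsic_matrix(FX, FY, U0, V0, GAMMA)
# Hypothetical point roughly 580 mm in front of the left camera.
uv = project(K_left, [100.0, -40.0, 580.0])
print(uv)
```

A point on the optical axis projects to (u0, v0); with γ on the order of 10⁻⁵ the skew contributes far less than a pixel.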

| Method | rx | ry | rz | tx (mm) | ty (mm) | tz (mm) |
|---|---|---|---|---|---|---|
| Proposed method | 0.0084 | 0.6822 | 0.0416 | −449.6990 | −5.6238 | 180.8245 |
| Zhang’s method | 0.0082 | 0.6845 | 0.0421 | −450.5520 | −5.7329 | 183.8668 |
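The two sets of extrinsic parameters can be compared as a rotation difference and a translation difference. This sketch assumes, without confirmation from this section, that (rx, ry, rz) is a rotation vector (axis times angle, in radians) converted with Rodrigues' formula and that (tx, ty, tz) is the matching translation in mm. For x₂ = R x₁ + t, the norm of t equals the distance between the two camera centres whichever camera is the reference. The helper names are hypothetical.

```python
import numpy as np

def rodrigues(rvec):
    """Rotation matrix from a rotation vector (axis * angle, radians)."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    Kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])  # cross-product matrix of the unit axis
    return np.eye(3) + np.sin(theta) * Kx + (1.0 - np.cos(theta)) * Kx @ Kx

def rotation_angle_between(R1, R2):
    """Angle (radians) of the relative rotation R1^T R2."""
    c = (np.trace(R1.T @ R2) - 1.0) / 2.0
    return np.arccos(np.clip(c, -1.0, 1.0))

# Values copied from the table above.
proposed = {"r": [0.0084, 0.6822, 0.0416], "t": [-449.6990, -5.6238, 180.8245]}
zhang = {"r": [0.0082, 0.6845, 0.0421], "t": [-450.5520, -5.7329, 183.8668]}

R_p, R_z = rodrigues(proposed["r"]), rodrigues(zhang["r"])
dangle_deg = np.degrees(rotation_angle_between(R_p, R_z))
dt = np.linalg.norm(np.subtract(proposed["t"], zhang["t"]))
print(f"rotation difference: {dangle_deg:.4f} deg")
print(f"translation difference: {dt:.3f} mm")
print(f"baselines: {np.linalg.norm(proposed['t']):.2f} mm vs "
      f"{np.linalg.norm(zhang['t']):.2f} mm")
```

Comparing the relative rotation R₁ᵀR₂, rather than subtracting the vectors component by component, gives a single angle that does not depend on how the rotation is parameterized.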

| Index | Proposed x (mm) | Proposed y (mm) | Proposed z (mm) | Zhang’s x (mm) | Zhang’s y (mm) | Zhang’s z (mm) |
|---|---|---|---|---|---|---|
| 1 | 100.430 | −40.851 | 578.504 | 100.550 | −40.899 | 579.197 |
| 2 | 100.732 | −30.883 | 577.749 | 100.856 | −30.921 | 578.464 |
| 3 | 101.028 | −20.922 | 577.016 | 101.157 | −20.949 | 577.753 |
| 4 | 91.072 | −30.768 | 575.206 | 91.185 | −30.806 | 575.923 |
| 5 | 91.676 | −10.858 | 573.712 | 91.798 | −10.872 | 574.472 |
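The two reconstructions in the table above can be compared point by point. This short sketch computes the Euclidean gap between the proposed method and Zhang's method for each point, and the distances between consecutive points within each reconstruction. The consecutive-pair distances are only an illustration: this section does not state which point pairs have a known separation on the target.

```python
import numpy as np

# Reconstructed coordinates (mm) copied from the table above.
proposed = np.array([
    [100.430, -40.851, 578.504],
    [100.732, -30.883, 577.749],
    [101.028, -20.922, 577.016],
    [91.072, -30.768, 575.206],
    [91.676, -10.858, 573.712],
])
zhang = np.array([
    [100.550, -40.899, 579.197],
    [100.856, -30.921, 578.464],
    [101.157, -20.949, 577.753],
    [91.185, -30.806, 575.923],
    [91.798, -10.872, 574.472],
])

# Per-point Euclidean distance between the two reconstructions.
point_gap = np.linalg.norm(proposed - zhang, axis=1)

def consecutive_distances(points):
    """Distances between successive rows of an (N, 3) array of points."""
    return np.linalg.norm(np.diff(points, axis=0), axis=1)

for i, gap in enumerate(point_gap, start=1):
    print(f"point {i}: {gap:.3f} mm")
print("proposed:", np.round(consecutive_distances(proposed), 4))
print("Zhang's: ", np.round(consecutive_distances(zhang), 4))
```

Most of each per-point gap comes from the z (depth) coordinate, which matches the roughly 3 mm difference in tz between the two extrinsic solutions.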

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Wu, S.; Yin, Y.; Wu, J. Calibration of Binocular Vision Sensors Based on Unknown-Sized Elliptical Stripe Images. Sensors 2017, 17, 2873. https://doi.org/10.3390/s17122873