Coordinated Target Tracking via a Hybrid Optimization Approach

Abstract

1. Introduction

2. Problem Description

2.1. Kinematics Model of UAV

2.2. Sensor Visible Regions in Complex Environment

2.3. The Motion of the Ground Target

3. The Proposed Method

3.1. The Receding Horizon Control in Coordinated Tracking

- Step 1: Based on the current state X[k], determine the optimal control sequence u*, i.e., the sequence that minimizes the cost function J. Since the dynamic model of the UAV is known from prior knowledge, its trajectory can be updated iteratively from the input control signal and its current state. Because the motion of the target is unknown, its future positions can only be estimated from previous observations. The cost function J measures the quality of the tracking based on the relative position between the target and the UAV, and is discussed in detail in Section 3.2.

- Step 2: Apply the optimal input sequence u* to the system, and update the states of the system.

- Step 3: Repeat Steps 1 and 2 for the next time instant.
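The three steps above can be illustrated with a minimal sketch of one receding-horizon iteration. Everything specific in it is an assumption made for illustration and is not taken from the paper: the planar constant-speed UAV model in `step_uav`, the constant-velocity target predictor in `predict_target`, the standoff-distance cost in `cost`, and the constants `DT`, `SPEED`, `TURN_RATES`, and `HORIZON`. The search over control sequences is a plain exhaustive enumeration standing in for the optimizer of Section 3.3, and only the first control of the best sequence is applied before re-planning, which is a common receding-horizon convention.

```python
import itertools
import math

# All constants below are hypothetical values chosen for illustration.
DT = 1.0                          # time step (s)
SPEED = 20.0                      # constant UAV ground speed (m/s)
TURN_RATES = (-0.2, 0.0, 0.2)     # candidate turn rates (rad/s)
HORIZON = 3                       # number of look-ahead steps


def step_uav(state, turn_rate):
    """Advance an assumed planar UAV model (x, y, heading) by one step."""
    x, y, heading = state
    heading = heading + turn_rate * DT
    return (x + SPEED * math.cos(heading) * DT,
            y + SPEED * math.sin(heading) * DT,
            heading)


def predict_target(prev, curr, steps):
    """Extrapolate target positions from its last two observations,
    assuming constant velocity (one observation per time step)."""
    vx, vy = curr[0] - prev[0], curr[1] - prev[1]
    return [(curr[0] + vx * i, curr[1] + vy * i) for i in range(1, steps + 1)]


def cost(uav_state, controls, target_path, standoff=100.0):
    """Stand-in for J: squared deviation from an assumed standoff distance,
    summed over the horizon."""
    total = 0.0
    state = uav_state
    for turn_rate, target in zip(controls, target_path):
        state = step_uav(state, turn_rate)
        dist = math.hypot(state[0] - target[0], state[1] - target[1])
        total += (dist - standoff) ** 2
    return total


def rhc_step(uav_state, target_prev, target_curr):
    """Step 1: pick the best control sequence; Step 2: apply its first control."""
    target_path = predict_target(target_prev, target_curr, HORIZON)
    best = min(itertools.product(TURN_RATES, repeat=HORIZON),
               key=lambda seq: cost(uav_state, seq, target_path))
    return step_uav(uav_state, best[0]), best


# Step 3: repeat at the next time instant with the new state and observation.
state = (0.0, 0.0, 0.0)
state, best_sequence = rhc_step(state, (200.0, 0.0), (205.0, 0.0))
```

Exhaustive enumeration grows as the number of candidate controls raised to the horizon length, which is one reason a heuristic optimizer is attractive for longer horizons or several UAVs.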

3.2. The Cost of the Tracking Path

3.2.1. Dynamic Constraint of the UAV

3.2.2. The Target Observation Time

3.2.3. The Anti-Collision Constraint

3.2.4. The Regulation Term

3.3. Chemical Reaction Optimization-Based Coordinated Tracking

3.3.1. Coding Scheme

3.3.2. On-Wall Ineffective Collision

3.3.3. Decomposition

3.3.4. Inter-Molecular Ineffective Collision

3.3.5. Synthesis

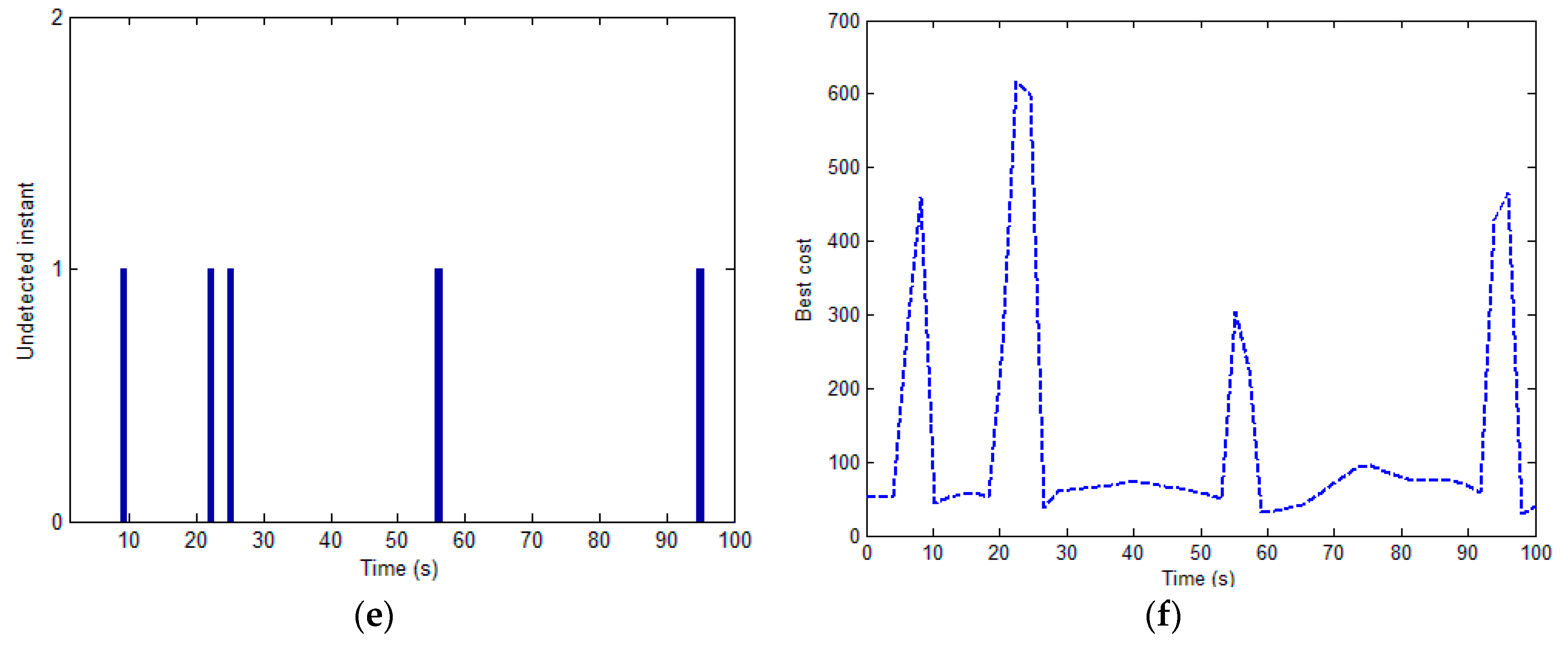

4. Simulation Results and Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Value | Parameter | Value |
|---|---|---|---|
| KELossRate | 0.2 | InitialKE | 200 |
| MoleColl | 0.3 | PopSize | 100 |
| buffer | 0 | α | 500 |
| β | 10 | c1 | 0.15 |
| c2 | 2.5 | | |
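As a rough illustration of how `KELossRate` and `buffer` from the table are typically used, the sketch below shows the energy bookkeeping of an on-wall ineffective collision as it is usually described in the general chemical reaction optimization literature. It is not necessarily the variant used in this paper. The function name `on_wall_collision` and its argument names are hypothetical; potential energy (PE) plays the role of the cost of a candidate solution, and kinetic energy (KE) is the molecule's tolerance for moving to a worse one.

```python
import random

# Values copied from the parameter table; the dict itself is illustrative.
PARAMS = {"KELossRate": 0.2, "InitialKE": 200.0, "buffer": 0.0}


def on_wall_collision(pe_old, ke_old, pe_new, buffer, ke_loss_rate, rng=random):
    """Decide whether a molecule may move to a neighbouring solution.

    Returns (accepted, ke_after, buffer_after). The move is accepted only if
    the molecule's total energy covers the new potential energy.
    """
    surplus = pe_old + ke_old - pe_new
    if surplus < 0:
        # Not enough energy: keep the old solution and leave energies unchanged.
        return False, ke_old, buffer
    # The molecule retains a random fraction a in [KELossRate, 1] of the surplus
    # as kinetic energy; the remainder is deposited in the central buffer.
    a = rng.uniform(ke_loss_rate, 1.0)
    ke_new = surplus * a
    return True, ke_new, buffer + surplus * (1.0 - a)


accepted, ke, buf = on_wall_collision(
    pe_old=50.0, ke_old=PARAMS["InitialKE"], pe_new=80.0,
    buffer=PARAMS["buffer"], ke_loss_rate=PARAMS["KELossRate"])
```

In this bookkeeping the sum of potential energy, kinetic energy, and buffer is unchanged by an accepted move, so a molecule can accept a worse solution only by spending kinetic energy it already holds.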

| Performance | Proposed Method | LGVF-Based Algorithm |
|---|---|---|
| Mean visibility time (s) | 95 | 82 |
| Maximum visibility time (s) | 96 | 79 |
| Minimum visibility time (s) | 91 | 84 |
| Variation of visibility time (s) | 4 | 3 |
| Range of relative distances (m) | [51, 588] | [11, 548] |

| Performance | Proposed Method | LGVF-Based Algorithm |
|---|---|---|
| Mean visibility time (s) | 94 | 83 |
| Maximum visibility time (s) | 97 | 81 |
| Minimum visibility time (s) | 91 | 89 |
| Variation of visibility time (s) | 4 | 7 |
| Range of relative distances (m) | [54, 608] | [15, 611] |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Cao, Y. Coordinated Target Tracking via a Hybrid Optimization Approach. Sensors 2017, 17, 472. https://doi.org/10.3390/s17030472
