1. Introduction

Among the many surveillance functions of Wireless Sensor Networks (WSNs), tracking a moving target in a sensing field is a major one, with a wide range of applications such as habitat monitoring, traffic monitoring, and intruder tracking [1,2,3]. In target tracking, moving targets are detected by sampling sensed signals (e.g., light, sound, image, or video) [4]. In recent years, as the price of smart cameras has dropped rapidly, the development of wireless camera sensor networks (WCNs) has been strongly fostered [5,6]. Hence, a new trend in target tracking is to deploy sensor nodes with smart cameras that capture, process and analyze image data locally and send the extracted information back to the sink node [7]. However, target tracking in WCNs differs greatly from that in traditional WSNs with respect to the camera field of view (FOV), bandwidth consumption, and multimedia data processing [8]. Therefore, target tracking schemes tailored to WCNs deserve particular attention.

Due to the requirement for an uninterrupted and reliable tracking network system, decentralized or distributed tracking approaches are usually preferred much more than centralized solutions in WCNs. Distributed approaches, e.g., [

9,

10], aim to achieve scalability and high fault tolerance for large networks, where the measurements are maintained in several task nodes across the tracking network [

6]. However, much energy consumption is usually required in task nodes in terms of processing information data and communicating with their neighbour nodes due to the consensus algorithms. Hence, decentralized solutions, e.g., [

11,

12,

13,

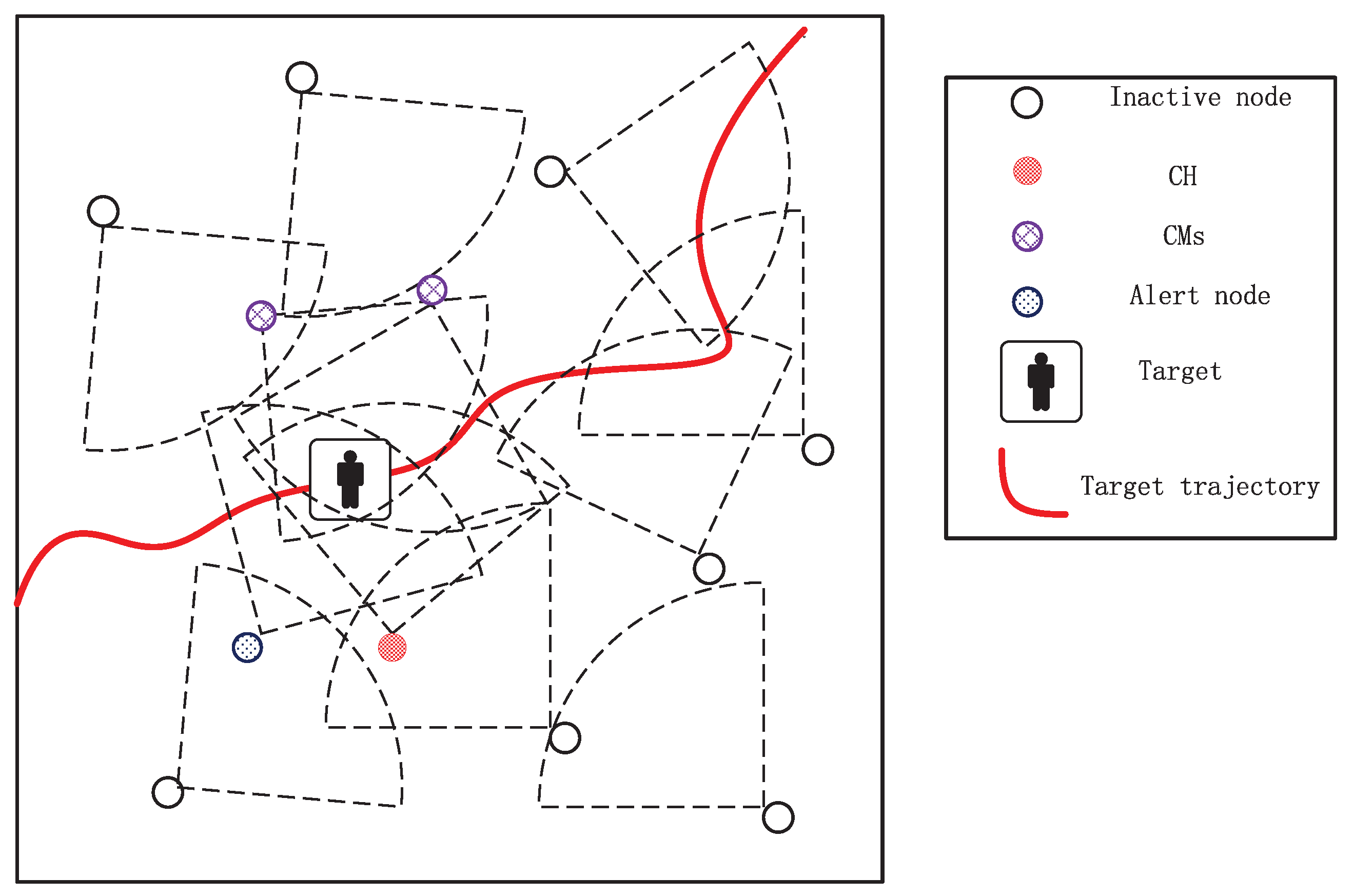

14], may be partial to be adopted for some application situations that use camera nodes with limited energy, since they behave well in balancing energy consumption and resilience to faults. In such solutions, a task cluster will be formed and all cluster nodes will detect the target and process locally. Subsequently they forward information data to the selected CH which fuses different results and acts as the cluster scheduler. Therefore, the decentralized schemes need measurement integration methods (e.g., Kalman filter (KF), information filter (IF), particle filter (PF), etc.) and task cluster selection mechanisms.

The tracking algorithms that depend on linear filters, such as traditional KF and IF, cannot be applied to camera networks, since the target measurements provided by the camera sensors are non-linearly related to each other [

15]. The consensus filter is a popular and efficient method to take tracking task in a distributed framework, e.g., [

10,

16,

17], but inappropriate to cluster-based decentralized tracking systems as it requires us to ensure agreement among all neighbouring nodes. In decentralized systems, some variants of information filter such as extend information filter (EIF) [

18], cubature information filter (CIF) [

19] and square root cubature information filter (SRCIF) [

20] are preferred due to the fact that they can distribute the computational burden and be easily extended for decentralized multi-sensor cooperative state estimation. CIF is derived from EIF and cubature Kalman filter (CKF) [

21]. SRCIF is the square root version of CIF, where the square root covariances matrix is propagated to make the entire filter robust against round-off errors [

19]. In our work, we also employ SRCIF to integrate different measurements because it is numerically stable and robust compared to other filter algorithms [

19,

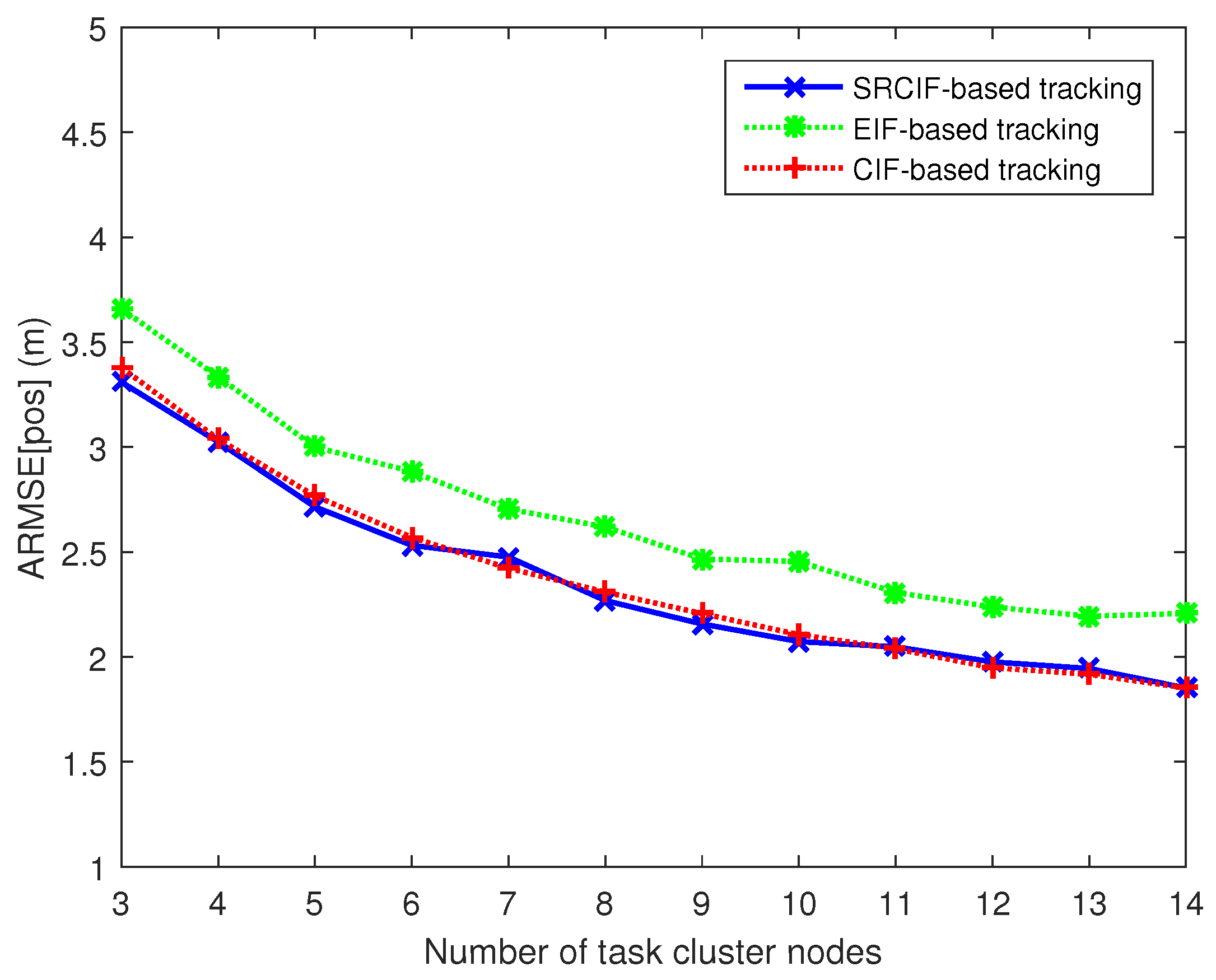

20].

WCNs tend to evolve into large-scale networks with limited bandwidth and energy resources, especially in outdoor surveillance applications. Hence, a single target may be viewed simultaneously by a large number of camera nodes. Decentralized solutions that use numerous task nodes can improve tracking accuracy, but at the expense of large communication overhead and high energy consumption, which shorten the network lifespan. Improving tracking accuracy and prolonging network lifespan are therefore two conflicting requirements in WCNs with limited energy. An efficient way to balance the two is to let only a desired number of camera nodes participate in the tracking task while satisfying the relevant requirements. Therefore, how to select appropriate task cluster nodes (including the CH) is of critical importance in decentralized target tracking, and it is the main goal of our work.

Bernabé et al. [22] present an entropy-based algorithm that dynamically selects multiple camera nodes to balance sensing performance and energy consumption; the CH is also selected dynamically using entropies and transmission error rates. Nevertheless, entropy is a nonlinear metric, and its computation is ill-suited to a decentralized approach. In [23], novel camera activation and CH selection mechanisms are developed that consider transmission errors and use the trace of the information matrix as the uncertainty metric; every camera node whose reward exceeds its cost is activated to form the tracking cluster. However, both of these works assume an omnidirectional sensing model, i.e., the target can be viewed within a circular area whose radius equals the sensing range of the camera nodes. In practice, they are hard to apply directly to WCNs because cameras sense directionally and have a limited FOV. In [7], the authors propose a surprisal selection method that lets camera nodes decide independently whether their observations are informative, which accounts for the directional sensing nature of camera nodes. However, this method fixes the fusion center (FC) and requires knowledge of the total number of camera nodes that view the current target.

Network lifetime and tracking accuracy are two main concerns for target tracking in WCNs. The work in [4] proposes and proves that, for a given total amount of energy, a smaller energy balance metric of a network implies a longer lifetime of the tracking network. Therefore, the energy distribution should be kept balanced when selecting the task camera nodes and their CH. In our work, we propose an efficient and robust multi-sensor decentralized target tracking scheme for WCNs. More specifically, considering the characteristics and constraints of WCNs, such as directional sensing, limited energy, the limited FOV of cameras and insufficient computational capability, we use the following mechanisms to carry out tracking tasks efficiently: (1) a more realistic camera node sensing model; (2) a decentralized SRCIF for fusing different observation results at the CH; (3) an efficient mechanism for selecting task cluster nodes that balances energy consumption and tracking accuracy; and (4) a mechanism that selects the CH by trading off remaining energy against distance to the target.

This paper focuses on balancing energy consumption and tracking accuracy in single-target tracking in dense WCNs. Problems such as boundary detection, data packet loss and recovery of a lost target are beyond the scope of this paper. Our main contributions are:

- Proposing a greedy on-line decision mechanism to select task cluster nodes based on a defined contribution decision (CD), which quantifies the expected information gain and the energy consumption. This mechanism dynamically adjusts the weight of energy consumption in the CD according to the relative remaining energy of each node in the current candidate node set.
- Designing an efficient CH selection mechanism that casts the selection as an optimization problem based on the predicted target position and the remaining energy.
- Analysing the probability that a target is precisely detected by camera nodes when selecting suitable task cluster nodes and their CH, taking into account the inaccuracy of the predicted next target position.
- Integrating all proposed mechanisms into a decentralized tracking scheme so that they can be implemented efficiently and stably.

The rest of this paper is structured as follows. In Section 2, we formulate the tracking problem in WCNs and discuss the main system models. Section 3 introduces a decentralized SRCIF algorithm for measurement fusion. The proposed mechanisms for cluster node selection and CH selection are detailed in Section 4 and Section 5, respectively. Section 6 presents the decentralized tracking scheme that integrates all proposed mechanisms. In Section 7, we evaluate the proposed mechanisms and compare them with state-of-the-art methods. Section 8 concludes the paper and discusses our future work.

3. Decentralized SRCIF Algorithm for Measurement Fusion

In this section, we briefly introduce the decentralized square root cubature information filter (SRCIF) algorithm, which is used to fuse measurements from different sensor nodes. The SRCIF has many desirable properties compared with other filter algorithms: it is numerically stable and robust, and it is easy to extend to multi-sensor state estimation [19]. For more theoretical details about the SRCIF, see [19,20,28].

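The information filter is a parametric recursive Bayesian filter (RBF) that uses the information matrix $\mathbf{Y}_{k|k}$ and the information vector $\hat{\mathbf{y}}_{k|k}$ at timestep $k$:

$$\mathbf{Y}_{k|k} = \mathbf{P}_{k|k}^{-1} \tag{10}$$

$$\hat{\mathbf{y}}_{k|k} = \mathbf{P}_{k|k}^{-1}\hat{\mathbf{x}}_{k|k} = \mathbf{Y}_{k|k}\hat{\mathbf{x}}_{k|k} \tag{11}$$

where $\mathbf{P}_{k|k}$ is the covariance matrix of the Gaussian distribution that represents the estimated state and $\hat{\mathbf{x}}_{k|k}$ is the state vector of the target. Let $\mathbf{P}_{k|k}$ and $\mathbf{Y}_{k|k}$ be factored as

$$\mathbf{P}_{k|k} = \mathbf{S}_{k|k}\mathbf{S}_{k|k}^{T} \tag{12}$$

$$\mathbf{Y}_{k|k} = \mathbf{S}_{\mathbf{Y},k|k}\mathbf{S}_{\mathbf{Y},k|k}^{T} \tag{13}$$

where $\mathbf{S}_{k|k}$ and $\mathbf{S}_{\mathbf{Y},k|k}$ are the triangular square-root matrices of $\mathbf{P}_{k|k}$ and $\mathbf{Y}_{k|k}$, respectively. From Equations (10), (12) and (13), we obtain

$$\mathbf{S}_{\mathbf{Y},k|k}\mathbf{S}_{\mathbf{Y},k|k}^{T} = \left(\mathbf{S}_{k|k}\mathbf{S}_{k|k}^{T}\right)^{-1} = \mathbf{S}_{k|k}^{-T}\mathbf{S}_{k|k}^{-1}$$

Hence, the square root of the error covariance matrix and that of the information matrix are related by

$$\mathbf{S}_{\mathbf{Y},k|k} = \mathbf{S}_{k|k}^{-T}$$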
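As a quick numerical check of these relationships, the sketch below converts a 2-D Gaussian estimate into information form and back. It is a minimal pure-Python illustration with made-up numbers; the helpers (`inv2`, `chol2`, `matmul`, `transpose`) are hypothetical and restricted to small matrices, and are not part of the published SRCIF.

```python
import math

def transpose(A):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def inv2(A):
    """Inverse of a 2 x 2 matrix (assumed non-singular)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def chol2(P):
    """Lower-triangular S with S S^T = P for a 2 x 2 symmetric positive definite P."""
    s11 = math.sqrt(P[0][0])
    s21 = P[1][0] / s11
    s22 = math.sqrt(P[1][1] - s21 ** 2)
    return [[s11, 0.0], [s21, s22]]

P = [[4.0, 1.0], [1.0, 2.0]]   # error covariance P_{k|k} (illustrative)
x = [[1.0], [3.0]]             # state estimate x_{k|k} as a column vector

Y = inv2(P)                    # Eq. (10): Y = P^{-1}
y = matmul(Y, x)               # Eq. (11): y = Y x
S = chol2(P)                   # Eq. (12): P = S S^T
S_Y = transpose(inv2(S))       # S_Y = S^{-T}, so S_Y S_Y^T = Y as in Eq. (13)
x_back = matmul(inv2(Y), y)    # the state is recovered as Y^{-1} y
```

Multiplying `S_Y` by its transpose reproduces `Y` up to rounding, and `x_back` matches `x`.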

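Prediction step of SRCIF

- (1) Compute the cubature points $\mathcal{X}_{i,k-1|k-1}$ of timestep $k-1$:

$$\mathcal{X}_{i,k-1|k-1} = \mathbf{S}_{k-1|k-1}\boldsymbol{\xi}_i + \hat{\mathbf{x}}_{k-1|k-1}, \quad i = 1, \dots, 2n$$

where $n$ is the dimension of the target state and $\boldsymbol{\xi}_i$ is the $i$-th element of the following set of $2n$ cubature points:

$$\boldsymbol{\xi}_i = \sqrt{n}\,[\mathbf{1}]_i, \qquad [\mathbf{1}] = \{\mathbf{e}_1, \dots, \mathbf{e}_n, -\mathbf{e}_1, \dots, -\mathbf{e}_n\}$$

with $\mathbf{e}_j$ the $j$-th unit vector.

- (2) Compute the propagated cubature points through the target motion model $f(\cdot)$:

$$\mathcal{X}^{*}_{i,k|k-1} = f\left(\mathcal{X}_{i,k-1|k-1}\right)$$

- (3) Estimate the predicted state:

$$\hat{\mathbf{x}}_{k|k-1} = \frac{1}{2n}\sum_{i=1}^{2n}\mathcal{X}^{*}_{i,k|k-1}$$

- (4) Estimate the square-root factors of the predicted error covariance and information matrix:

$$\mathbf{S}_{k|k-1} = \mathrm{Tria}\left(\left[\mathcal{X}^{*}_{k|k-1},\ \mathbf{S}_{\mathbf{Q},k-1}\right]\right), \qquad \mathbf{S}_{\mathbf{Y},k|k-1} = \mathbf{S}_{k|k-1}^{-T}$$

where $\mathrm{Tria}(\cdot)$ denotes the orthogonal triangular decomposition (for example, if $\mathbf{S} = \mathrm{Tria}(\mathbf{A})$, then $\mathbf{S}$ is a lower-triangular matrix and $\mathbf{S}\mathbf{S}^{T} = \mathbf{A}\mathbf{A}^{T}$; see Section VI of [28] for more details about $\mathrm{Tria}(\cdot)$), $\mathbf{S}_{\mathbf{Q},k-1}$ is a square root of the process noise covariance $\mathbf{Q}_{k-1}$, and

$$\mathcal{X}^{*}_{k|k-1} = \frac{1}{\sqrt{2n}}\left[\mathcal{X}^{*}_{1,k|k-1} - \hat{\mathbf{x}}_{k|k-1},\ \dots,\ \mathcal{X}^{*}_{2n,k|k-1} - \hat{\mathbf{x}}_{k|k-1}\right]$$

- (5) Compute the predicted information state vector according to Equation (11):

$$\hat{\mathbf{y}}_{k|k-1} = \mathbf{S}_{\mathbf{Y},k|k-1}\mathbf{S}_{\mathbf{Y},k|k-1}^{T}\hat{\mathbf{x}}_{k|k-1}$$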
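The prediction step can be sketched in pure Python as follows. This is an illustration under stated assumptions, not the authors' implementation: `tria` is approximated by the Cholesky factor of $\mathbf{A}\mathbf{A}^{T}$, which gives the same lower-triangular factor in exact arithmetic but gives up the numerical robustness that motivates a QR-based Tria; the motion model `f` and all helper names are hypothetical.

```python
import math

def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def cholesky(M):
    """Lower-triangular L with L L^T = M (M symmetric positive definite)."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def tria(A):
    """Stand-in for Tria(.): lower-triangular S with S S^T = A A^T.
    Forming A A^T explicitly forfeits the numerical benefit of a QR-based Tria."""
    return cholesky(matmul(A, transpose(A)))

def unit_cubature_points(n):
    """The 2n points xi_i = sqrt(n) [1]_i: +/- sqrt(n) times each unit vector."""
    return [[sign * math.sqrt(n) if r == j else 0.0 for r in range(n)]
            for sign in (1.0, -1.0) for j in range(n)]

def predict(x, S, f, S_Q):
    """Cubature prediction; returns (x_pred, S_pred) with S_pred lower-triangular."""
    n = len(x)
    # (1) cubature points X_i = S xi_i + x
    X = [[x[r] + sum(S[r][c] * xi[c] for c in range(n)) for r in range(n)]
         for xi in unit_cubature_points(n)]
    # (2) propagate each point through the motion model f
    X_star = [f(p) for p in X]
    # (3) predicted state: equally weighted mean of the 2n propagated points
    x_pred = [sum(p[r] for p in X_star) / (2 * n) for r in range(n)]
    # (4) weighted-centred matrix (n x 2n), then S_pred = Tria([X*, S_Q])
    w = 1.0 / math.sqrt(2 * n)
    Xc = [[w * (p[r] - x_pred[r]) for p in X_star] for r in range(n)]
    A = [Xc[r] + list(S_Q[r]) for r in range(n)]  # row-wise concatenation
    return x_pred, tria(A)
```

For a linear motion model $f(\mathbf{x}) = \mathbf{F}\mathbf{x}$ the cubature rule is exact, so $\mathbf{S}_{k|k-1}\mathbf{S}_{k|k-1}^{T}$ should equal $\mathbf{F}\mathbf{P}\mathbf{F}^{T} + \mathbf{Q}$, which makes a convenient sanity check.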

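Measurement update step of SRCIF for camera node $c_j$

- (1) Compute the cubature points:

$$\mathcal{X}_{i,k|k-1} = \mathbf{S}_{k|k-1}\boldsymbol{\xi}_i + \hat{\mathbf{x}}_{k|k-1}, \quad i = 1, \dots, 2n$$

- (2) Compute the propagated cubature points through the measurement model $h(\cdot)$:

$$\mathcal{Z}_{i,k|k-1} = h\left(\mathcal{X}_{i,k|k-1}\right)$$

- (3) Estimate the predicted measurement:

$$\hat{\mathbf{z}}_{k|k-1} = \frac{1}{2n}\sum_{i=1}^{2n}\mathcal{Z}_{i,k|k-1}$$

- (4) Compute the weighted-centered matrices:

$$\mathcal{X}_{k|k-1} = \frac{1}{\sqrt{2n}}\left[\mathcal{X}_{1,k|k-1} - \hat{\mathbf{x}}_{k|k-1},\ \dots,\ \mathcal{X}_{2n,k|k-1} - \hat{\mathbf{x}}_{k|k-1}\right]$$

$$\mathcal{Z}_{k|k-1} = \frac{1}{\sqrt{2n}}\left[\mathcal{Z}_{1,k|k-1} - \hat{\mathbf{z}}_{k|k-1},\ \dots,\ \mathcal{Z}_{2n,k|k-1} - \hat{\mathbf{z}}_{k|k-1}\right]$$

- (5) Estimate the cross-covariance matrix:

$$\mathbf{P}_{\mathbf{xz},k|k-1} = \mathcal{X}_{k|k-1}\mathcal{Z}_{k|k-1}^{T}$$

where $\mathcal{X}_{k|k-1} \in \mathbb{R}^{n\times 2n}$ and $\mathcal{Z}_{k|k-1} \in \mathbb{R}^{m\times 2n}$ ($m$ and $n$ are the dimensions of the measurement and the target motion state, respectively), and $\mathbf{S}_{\mathbf{R},k}$ denotes a lower-triangular square root of the measurement noise covariance $\mathbf{R}_k$.

- (6) Evaluate the square-root information contribution matrix of sensor node $c_j$:

$$\mathbf{S}_{\mathbf{I},j,k} = \mathbf{S}_{\mathbf{Y},k|k-1}\mathbf{S}_{\mathbf{Y},k|k-1}^{T}\mathbf{P}_{\mathbf{xz},k|k-1}\mathbf{S}_{\mathbf{R},k}^{-T}$$

- (7) Evaluate the information contribution vector and information contribution matrix of sensor node $c_j$:

$$\mathbf{i}_{j,k} = \mathbf{S}_{\mathbf{I},j,k}\mathbf{S}_{\mathbf{R},k}^{-1}\left[\left(\mathbf{z}_{j,k} - \hat{\mathbf{z}}_{k|k-1}\right) + \mathbf{P}_{\mathbf{xz},k|k-1}^{T}\hat{\mathbf{y}}_{k|k-1}\right]$$

$$\mathbf{I}_{j,k} = \mathbf{S}_{\mathbf{I},j,k}\mathbf{S}_{\mathbf{I},j,k}^{T} \tag{33}$$

where $\mathbf{z}_{j,k}$ is the target measurement of $c_j$ at timestep $k$.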
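A minimal sketch of steps (1) to (7) for a single camera node is shown below. It assumes a 2-D state and a scalar measurement ($m = 1$), so that $\mathbf{S}_{\mathbf{R},k}$ reduces to the standard deviation of the measurement noise; the function name, its arguments and the helpers are hypothetical, and a real camera model would supply a nonlinear projection `h`.

```python
import math

def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def inv2(A):
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def info_contribution(x_pred, S_pred, h, r_var, z):
    """Information contribution (i, I) of one node; 2-D state, scalar measurement
    z = h(x) + noise with variance r_var."""
    n = 2
    # (1) cubature points X_i = S_pred xi_i + x_pred, xi_i = +/- sqrt(n) e_j
    xis = [[sign * math.sqrt(n) if r == j else 0.0 for r in range(n)]
           for sign in (1.0, -1.0) for j in range(n)]
    X = [[x_pred[r] + sum(S_pred[r][c] * xi[c] for c in range(n)) for r in range(n)]
         for xi in xis]
    # (2)-(3) propagate through h and average
    Z = [h(p) for p in X]
    z_hat = sum(Z) / (2 * n)
    # (4) weighted-centred deviations (scale 1/sqrt(2n))
    w = 1.0 / math.sqrt(2 * n)
    Xc = [[w * (p[r] - x_pred[r]) for r in range(n)] for p in X]
    Zc = [w * (zi - z_hat) for zi in Z]
    # (5) cross-covariance P_xz = X Z^T (an n-vector because m = 1)
    P_xz = [sum(Xc[i][r] * Zc[i] for i in range(2 * n)) for r in range(n)]
    # predicted information matrix and vector: Y = (S S^T)^{-1}, y = Y x
    P = [[sum(S_pred[a][k] * S_pred[b][k] for k in range(n)) for b in range(n)]
         for a in range(n)]
    Y = inv2(P)
    y_pred = matvec(Y, x_pred)
    # (6) S_I = Y P_xz S_R^{-T}, with S_R = sqrt(r_var) for a scalar measurement
    s_r = math.sqrt(r_var)
    S_I = [v / s_r for v in matvec(Y, P_xz)]
    # (7) i = S_I S_R^{-1} [(z - z_hat) + P_xz^T y_pred] and I = S_I S_I^T
    nu = (z - z_hat) + sum(p * q for p, q in zip(P_xz, y_pred))
    i_vec = [s * nu / s_r for s in S_I]
    I_mat = [[S_I[a] * S_I[b] for b in range(n)] for a in range(n)]
    return i_vec, I_mat
```

With a linear measurement $h(\mathbf{x}) = \mathbf{H}\mathbf{x}$ the cubature rule is exact and the contributions reduce to $\mathbf{H}^{T}\mathbf{R}^{-1}\mathbf{H}$ and $\mathbf{H}^{T}\mathbf{R}^{-1}\mathbf{z}$; for example, `info_contribution([2.0, 2.0], [[1.0, 0.0], [0.5, 0.8]], lambda p: p[0], 0.25, 2.3)` gives approximately `([9.2, 0.0], [[4.0, 0.0], [0.0, 0.0]])`.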

For a decentralized multi-camera tracking network, suppose $N_k$ camera nodes track the same target at timestep $k$. After sensing and processing locally, the nodes transmit their results (including $\mathbf{i}_{j,k}$ and $\mathbf{I}_{j,k}$) to a local fusion center (FC) simultaneously. The FC node then fuses these results with its own as follows (the sums include the FC's own contribution):

$$\hat{\mathbf{y}}_{k|k} = \hat{\mathbf{y}}_{k|k-1} + \sum_{j=1}^{N_k}\mathbf{i}_{j,k} \tag{34}$$

$$\mathbf{Y}_{k|k} = \mathbf{Y}_{k|k-1} + \sum_{j=1}^{N_k}\mathbf{I}_{j,k} \tag{35}$$

After obtaining the updated information vector $\hat{\mathbf{y}}_{k|k}$ and the updated information matrix $\mathbf{Y}_{k|k}$, the corresponding error covariance matrix and the updated target state are

$$\mathbf{P}_{k|k} = \mathbf{Y}_{k|k}^{-1} \tag{36}$$

$$\hat{\mathbf{x}}_{k|k} = \mathbf{Y}_{k|k}^{-1}\hat{\mathbf{y}}_{k|k} \tag{37}$$

According to the above description, the CH computes $\hat{\mathbf{y}}_{k|k}$ and $\mathbf{Y}_{k|k}$ by simply adding the $\mathbf{i}_{j,k}$ and $\mathbf{I}_{j,k}$ received from all cluster members (CMs) to its own contributions. The CMs only need to execute the measurement update step of SRCIF. Apart from gathering and integrating the different measurements, the CH computes the predicted next state of the target and sends it to the next cluster nodes. Because fusion is a sum, the decentralized SRCIF algorithm can integrate the information data in an arbitrary order and distribute the computational burden of the measurement update among all cluster nodes, which makes it easy to extend to multi-sensor fusion. Additionally, in a cluster-based tracking network using the decentralized SRCIF, in contrast to the consensus filter, only the CH needs to receive data from its CMs, and the CMs do not need to communicate with other neighbours; this can greatly reduce energy consumption and thus extend the lifespan of the sensor network.
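Because fusion at the CH is only a sum of information contributions, it can be sketched with plain list arithmetic. The sketch below (2-D state, hypothetical helper names, illustrative numbers) adds each contribution to the predicted information vector and matrix and then recovers the covariance and state as in Equations (36) and (37).

```python
def inv2(A):
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def fuse(y_pred, Y_pred, contributions):
    """Add every (i, I) pair to the predicted information vector and matrix."""
    y = list(y_pred)
    Y = [list(row) for row in Y_pred]
    for i_vec, I_mat in contributions:
        y = [a + b for a, b in zip(y, i_vec)]
        Y = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(Y, I_mat)]
    return y, Y

def recover(y, Y):
    """Covariance P = Y^{-1} and state x = Y^{-1} y."""
    P = inv2(Y)
    x = [sum(p * v for p, v in zip(row, y)) for row in P]
    return x, P

# Illustrative numbers: unit prior information, one node observing each coordinate.
y_pred, Y_pred = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
contributions = [([4.0, 0.0], [[1.0, 0.0], [0.0, 0.0]]),
                 ([0.0, 6.0], [[0.0, 0.0], [0.0, 1.0]])]
y, Y = fuse(y_pred, Y_pred, contributions)
x, P = recover(y, Y)   # x == [2.0, 3.0], P == [[0.5, 0.0], [0.0, 0.5]]
```

Reversing the order of `contributions` leaves the result unchanged, which is the order-independence property noted above.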

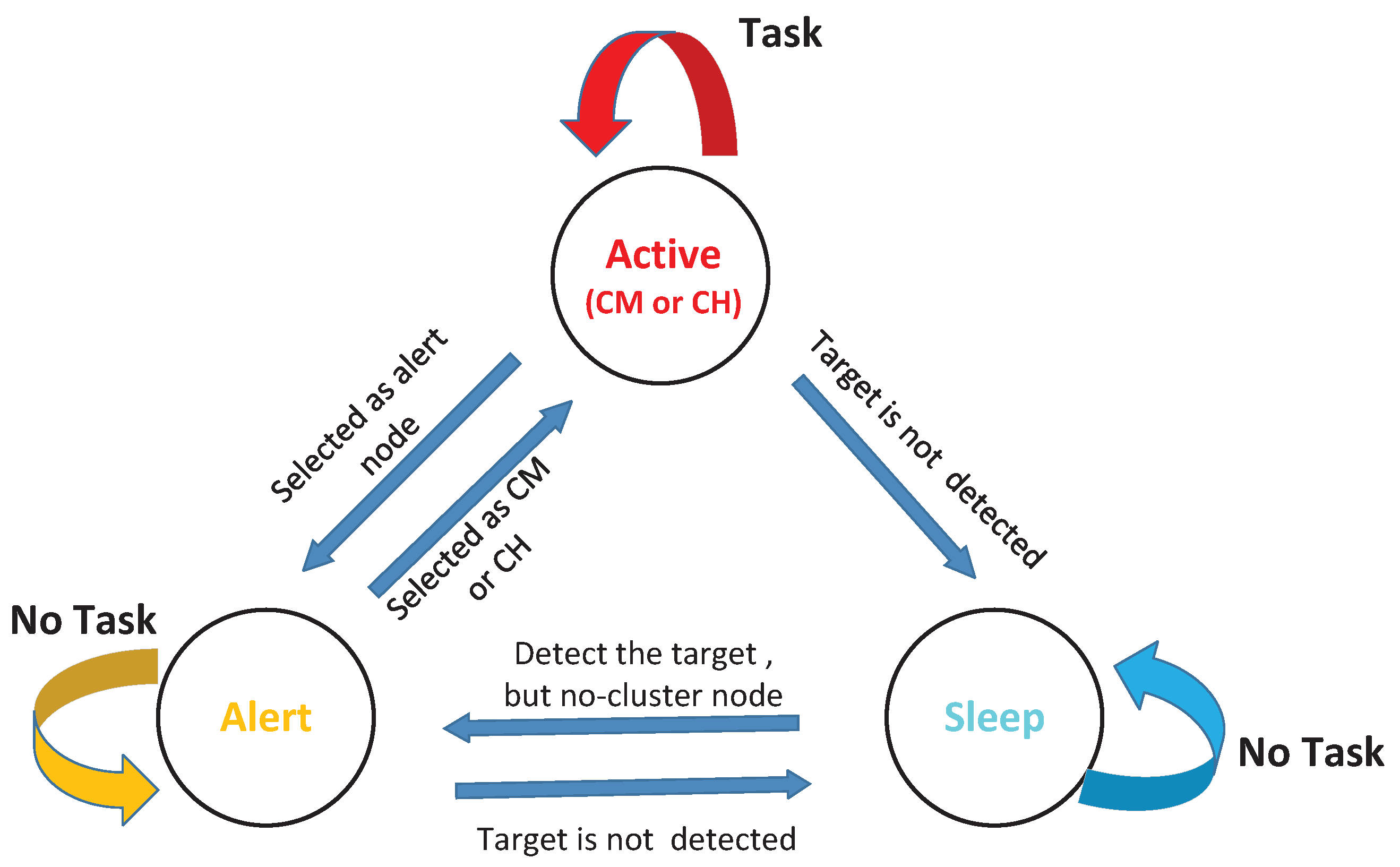

4. Selection of Task Cluster Nodes

In WCNs, each camera node usually has limited bandwidth and energy resources. Additionally, not all camera nodes that view the target contribute equally to detecting and tracking it, and even a node that contributes a lot may consume so much energy that it cannot work well afterwards. Therefore, to increase the lifetime of a tracking network, only some camera nodes should be activated as task cluster nodes, and some active nodes should be switched to other states. However, this may reduce tracking accuracy compared with traditional methods, in which all camera nodes that view the target are included to integrate as many measurements as possible. Thus, an appropriate task cluster node selection mechanism is needed to balance tracking accuracy and network lifetime.

In this section, we present our cluster node activation mechanism, which adopts a greedy on-line decision approach to choose the most suitable task nodes. A camera node is activated as a CM or deactivated according to both its measurements and its energy consumption; that is, the on-line decision maximizes the trade-off between information gain and energy consumption. Let $\mathcal{C}_k = \{c_1, c_2, \dots, c_{N_k}\}$ be the set of camera nodes that view the target at the current timestep $k$, with size $N_k$. In this work, the set of candidate camera nodes for timestep $k+1$ contains all current cluster nodes and all alert nodes at timestep $k$. Let $\Delta I_{i,k+1}$ be the information gain derived from the measurements of candidate node $c_i$, and let $E_{i,k+1}$ denote its energy consumption if it is activated. The expected contribution decision $D_{i,k+1}$ of candidate node $c_i$ for timestep $k+1$ can then be expressed as

$$D_{i,k+1} = \alpha_{i,k+1}\,\Delta I_{i,k+1} - \beta_{i,k+1}\,E_{i,k+1} \tag{38}$$

where $\alpha_{i,k+1}$ and $\beta_{i,k+1}$ are the weighting factors of the expected information gain and the energy consumption of camera $c_i$ at timestep $k+1$, respectively. Hence, for each candidate node we calculate its expected $D_{i,k+1}$ and rank all candidate camera nodes in descending order of $D_{i,k+1}$. Finally, the top-ranked camera nodes are selected from the candidate set and activated to form a new cluster for timestep $k+1$.
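The ranking step can be sketched as follows, assuming the contribution decision of Equation (38) takes the form $D = \alpha\,\Delta I - \beta\,E$ (information gain rewarded, energy consumption penalized) and is evaluated on already-normalized inputs. The node identifiers, the tuple layout and the cluster size `m` are illustrative assumptions.

```python
def contribution_decision(alpha, gain, beta, energy):
    """Assumed form D = alpha * gain - beta * energy (inputs already normalized)."""
    return alpha * gain - beta * energy

def select_top(candidates, m):
    """Rank candidates by D in descending order and keep the top m node ids.

    candidates maps a node id to (alpha, normalized gain, beta, normalized energy).
    """
    scores = {nid: contribution_decision(*vals) for nid, vals in candidates.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:m], scores

candidates = {
    "c1": (1.0, 0.9, 0.5, 0.2),
    "c2": (0.5, 1.0, 0.5, 0.2),
    "c3": (1.0, 0.3, 1.0, 0.1),
}
chosen, scores = select_top(candidates, 2)   # chosen == ["c1", "c2"]
```

Sorting the whole candidate set is cheap here because the candidate set is local to the current cluster and its alert nodes.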

We first compute the expected information gain of $c_i$, $\Delta I_{i,k+1}$, and its weighting factor $\alpha_{i,k+1}$. Different metrics have been proposed to gauge the tracking performance of the information filter [7,14,22,23]. Among them, the trace (sum of the diagonal elements) of the predicted information matrix $\mathbf{I}_{i,k+1}$, computed at timestep $k$ using Equation (33), is related to the mean squared error (MSE) of the updated state, so it can be used to measure the expected information gain. Hence, the expected information gain of camera node $c_i$ at timestep $k+1$ is

$$\Delta I_{i,k+1} = \mathrm{tr}\left(\mathbf{I}_{i,k+1}\right) \tag{39}$$

where $\mathrm{tr}(\cdot)$ denotes the trace operation. To facilitate analysis and comparison, we normalize the information gain as follows:

$$\Delta \bar{I}_{i,k+1} = \frac{\Delta I_{i,k+1} - \Delta I_{\min}}{\Delta I_{\max} - \Delta I_{\min}} \tag{40}$$

where $\Delta I_{\min}$ and $\Delta I_{\max}$ are the minimum and maximum expected information gains of the candidate nodes, respectively. In general, a larger $\Delta \bar{I}_{i,k+1}$ implies that more useful information is gained from the measurements of $c_i$. Because the trace is a linear metric, the predicted information gain of each candidate node can be computed simply within our decentralized SRCIF, which imposes a low burden on a camera sensor network with limited resources.
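A minimal sketch of the trace metric and its min-max normalization: the trace of each candidate's predicted information matrix is taken as its gain and then scaled to $[0, 1]$. Returning 1.0 when all gains are equal is our own assumption for the degenerate case, which the text does not address; node names and matrices are illustrative.

```python
def trace(M):
    """Sum of the diagonal elements of a square matrix."""
    return sum(M[i][i] for i in range(len(M)))

def normalized_gains(info_matrices):
    """Gain = tr(I_i) per candidate, then min-max normalized to [0, 1].
    If all gains are equal, every node gets 1.0 (an assumption, not from the text)."""
    gains = {nid: trace(I) for nid, I in info_matrices.items()}
    lo, hi = min(gains.values()), max(gains.values())
    span = hi - lo
    return {nid: (g - lo) / span if span > 0 else 1.0 for nid, g in gains.items()}

info = {
    "a": [[2.0, 0.3], [0.3, 1.0]],   # trace 3
    "b": [[4.0, 0.0], [0.0, 3.0]],   # trace 7
    "c": [[1.0, 0.0], [0.0, 0.0]],   # trace 1
}
norm = normalized_gains(info)   # {"a": 1/3, "b": 1.0, "c": 0.0}
```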

With respect to the weighting factor $\alpha_{i,k+1}$, we set different values based on both the predicted position of the target and the FOV of camera $c_i$. According to Section 2.3, the probability that the target is precisely sensed by camera $c_i$ differs depending on the zone of the FOV of $c_i$ in which the target is located (see Equation (5)). The credibility of the information gain from $c_i$ should therefore also differ. Thus, the weighting factor of camera node $c_i$ at timestep $k+1$, $\alpha_{i,k+1}$, is given by Equation (41).

Next, $E_{i,k+1}$ and $\beta_{i,k+1}$ are calculated for camera $c_i$ at timestep $k+1$. Energy is the main resource in battery-powered WCNs, so in this work we only consider energy as the consumed resource. Clearly, a camera node stays active when it is a CM or the CH. Therefore, the predicted energy consumption of camera $c_i$ at timestep $k+1$, $E_{i,k+1}$, is

$$E_{i,k+1} = E^{\mathrm{act}}_{i} \tag{42}$$

where $E^{\mathrm{act}}_{i}$ is the total energy consumption of active node $c_i$ during one tracking step, computed by Equation (7). Note that $c_i$ cannot be selected as a CM if its current remaining energy is less than $E_{i,k+1}$.

$\beta_{i,k+1}$ weights the relative importance of the predicted energy consumption $E_{i,k+1}$ against the expected information gain $\Delta I_{i,k+1}$ for $c_i$ at timestep $k+1$. In traditional schemes, the absolute quantity of energy usually serves as the indicator for $\beta$ (as in [23]). In this work, however, we set $\beta_{i,k+1}$ dynamically according to the current remaining energy of the node itself and of the other candidate nodes, because the importance of energy to a node depends on both: the more remaining energy a camera node has, the less important energy is to it. We therefore adopt the relative amount of energy as the indicator, as described by Equation (43), where $a$ is a constant factor, $\tilde{e}_{i,k+1}$ is the normalized predicted remaining energy of $c_i$, and $\bar{e}_{k+1}$ is the average normalized predicted remaining energy of the candidate nodes at timestep $k+1$. Remaining energy is normalized in the same way as the information gain. As shown in Equation (43), camera nodes with higher remaining energy are assigned a smaller weighting factor of energy consumption than those with lower remaining energy.

Note that the selection process is conducted at the current CH; each candidate node only needs to send its own data packet (including its position, current energy, trace of the predicted information matrix and relationship with the predicted target position) to the CH. Unlike in [7], the CH does not require any prior knowledge about the candidate nodes. Therefore, this mechanism is suitable for decentralized implementation in WCNs. Algorithm 1 summarizes the proposed mechanism for selecting cluster nodes at the end of timestep $k$.

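Algorithm 1. The greedy on-line cluster node selection mechanism (operates at the current CH)

Input: the candidate node set and the data packet received from each candidate node.

Output: a camera node set that contains the top-ranked nodes based on their expected contribution decision $D$.

- 1. for each candidate camera node $c_i$
- 2. Compute its weighting factor $\alpha_{i,k+1}$ using Equation (41).
- 3. if $c_i$ can view the predicted target and has sufficient remaining energy
- 4. Compute the expected information gain $\Delta I_{i,k+1}$ using Equation (39).
- 5. Keep $c_i$ as an eligible candidate.
- 6. else
- 7. Mark $c_i$ as ineligible.
- 8. end if
- 9. end for
- 10. Normalize the expected information gains and energies of the eligible candidates.
- 11. for each candidate camera node $c_i$
- 12. if $c_i$ is eligible
- 13. Compute $\beta_{i,k+1}$ from the normalized remaining energy using Equation (43).
- 14. Compute its expected contribution decision $D_{i,k+1}$ using Equation (38).
- 15. else
- 16. Assign $c_i$ the lowest contribution decision.
- 17. end if
- 18. end for
- 19. Sort the candidates in descending order of $D$ and return the top-ranked nodes as the new task cluster.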
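The following sketch strings the steps of Algorithm 1 together. Several parts are placeholders because the exact forms of the weighting factors in Equations (41) and (43) are not specified in this sketch: `detect_prob` stands in for $\alpha_{i,k+1}$ (assumed to be zero when the predicted target is outside the FOV), and $\beta = a\exp(\bar{e} - \tilde{e}_i)$ is a hypothetical choice that merely shares the stated property that nodes with more remaining energy receive a smaller energy weight. Class and field names and all numbers are illustrative.

```python
import math
from dataclasses import dataclass

@dataclass
class Candidate:
    node_id: str
    detect_prob: float   # hypothetical stand-in for alpha_{i,k+1}; 0 outside the FOV
    info_trace: float    # trace of the predicted information matrix sent by the node
    remaining: float     # current remaining energy
    active_cost: float   # predicted energy consumption E_{i,k+1} for one step

def minmax(values):
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span > 0 else 1.0 for v in values]

def select_cluster(cands, m, a=1.0):
    """Greedy selection of up to m task cluster nodes (sketch of Algorithm 1)."""
    # Steps 1-9 (assumed checks): the node must see the target and afford being active.
    eligible = [c for c in cands if c.detect_prob > 0 and c.remaining >= c.active_cost]
    if not eligible:
        return []
    # Step 10: min-max normalize gains, costs and predicted remaining energy.
    gain = minmax([c.info_trace for c in eligible])
    cost = minmax([c.active_cost for c in eligible])
    left = minmax([c.remaining - c.active_cost for c in eligible])
    mean_left = sum(left) / len(left)
    # Steps 11-18: hypothetical beta that shrinks as relative remaining energy grows.
    scored = []
    for c, g, e, r in zip(eligible, gain, cost, left):
        beta = a * math.exp(mean_left - r)
        scored.append((c.detect_prob * g - beta * e, c.node_id))
    # Step 19: sort by D in descending order and keep the top m.
    scored.sort(key=lambda t: t[0], reverse=True)
    return [nid for _, nid in scored[:m]]
```

In this sketch, a node with a high information gain but little remaining energy can rank below a node with a smaller gain and plenty of energy, which is the energy-aware behaviour the mechanism aims for.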

5. Selection of CH

Dynamic cluster formation requires camera nodes to switch between time-varying states so that decentralized decisions can be made, with the CH acting as the scheduler. A new CH for the next timestep is selected at the end of the current timestep. The CH communicates with its member nodes to exchange information data, gathers and fuses the data, and then determines the next cluster (including the CH and CMs). Hence, tracking performance strongly depends on which node acts as the CH. Our objective is to select a reliable and efficient CH.

According to Section 2.4, the CH consumes the most energy among the task cluster nodes. Hence, selecting an appropriate CH can balance the remaining energy distribution of the current cluster; moreover, for a given total amount of energy, a smaller energy balance metric of the network implies a longer lifetime of the tracking network [4]. Additionally, although undesirable, the selected CH may occasionally be unable to view the target in WCNs, since the predicted and true target positions may differ greatly. Therefore, we cast CH selection as an optimization problem based on the predicted target position and the remaining energy distribution, and determine the CH for timestep $k+1$ as in Equation (44), where the objective for camera node $c_i$ is a weighted combination, defined in Equation (45), of its distance to the predicted target position and its current remaining energy. In Equation (45), a weighting factor sets the priority of energy, and the camera-target distance and the remaining energy are normalized as in Equations (46) and (47). There are three restrictive conditions in Equation (44). The first requires the remaining energy of the node selected as CH to exceed the minimum energy consumption of the CH during one tracking step. The second requires the predicted target to lie in the designated zone of the FOV of camera $c_i$ (see Section 2.3) if $c_i$ is selected as the CH. The third requires the candidate CHs to belong to the cluster node set.

Under this formulation, the mechanism prioritizes nodes that are near the predicted target position and have more remaining energy. Camera nodes with less energy are unlikely to be selected as the CH, which delays their death and thus leads to a longer network lifetime. Moreover, given the error of the predicted target position, nodes close to the predicted target position that satisfy the zone condition are more likely to view the target well. However, a candidate CH may not have both the most remaining energy and the shortest distance to the target at the same time. Hence, the goal of this CH selection mechanism is to make a good trade-off between a balanced remaining energy distribution and robust tracking ability. Each camera node $c_i$ individually computes its own terms, including its remaining energy, and sends them to the CH. The CH therefore needs no prior knowledge about the candidate camera nodes, because the nodes transmit everything it needs. Thus, the mechanism is also efficient and suitable for decentralized implementation in WCNs. Algorithm 2 summarizes the proposed CH selection mechanism at the end of timestep $k$.

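Algorithm 2. Selection of the CH based on the weighted combination (operates at the current CH)

Input: the task cluster node set with the nodes' energies and positions, and the predicted target position.

Output: the CH for timestep $k+1$.

- 1. Compute the distance between the predicted target position and each camera node.
- 2. Find the minimum and maximum distances and remaining energies over the cluster nodes.
- 3. for each camera node $c_i$ in the task cluster node set
- 4. if $c_i$ satisfies the energy and zone conditions of Equation (44)
- 5. Compute its normalized distance as in Equation (46) and its normalized remaining energy as in Equation (47).
- 6. Compute its weighted combination using Equation (45).
- 7. else
- 8. Exclude $c_i$ from CH selection.
- 9. end if
- 10. end for
- 11. Select the node that optimizes Equation (44) as the new CH.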
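Algorithm 2 can be sketched in the same style. Because the exact weighted combination of Equations (45) to (47) is not specified in this sketch, it assumes a hypothetical score $J_i = w\,\tilde{E}_i + (1-w)(1-\tilde{d}_i)$ built from the min-max normalized remaining energy $\tilde{E}_i$ and distance $\tilde{d}_i$, and maximizes it over the nodes that satisfy the energy and FOV-zone constraints of Equation (44). The `target_in_zone` flag, `ch_cost` and the weight `w` are illustrative inputs.

```python
import math
from dataclasses import dataclass

@dataclass
class ClusterNode:
    node_id: str
    pos: tuple
    remaining: float
    target_in_zone: bool   # hypothetical flag: predicted target lies in the required FOV zone

def _norm(v, lo, hi):
    """Min-max normalization; 0.0 when all values are equal."""
    return (v - lo) / (hi - lo) if hi > lo else 0.0

def select_ch(nodes, target, ch_cost, w=0.5):
    """Pick the CH maximizing a hypothetical score w*E~ + (1-w)*(1-d~)."""
    feasible = [n for n in nodes if n.remaining >= ch_cost and n.target_in_zone]
    if not feasible:
        return None
    dists = [math.dist(n.pos, target) for n in feasible]
    energies = [n.remaining for n in feasible]
    d_lo, d_hi = min(dists), max(dists)
    e_lo, e_hi = min(energies), max(energies)
    best, best_score = None, -math.inf
    for n, d, e in zip(feasible, dists, energies):
        score = w * _norm(e, e_lo, e_hi) + (1 - w) * (1 - _norm(d, d_lo, d_hi))
        if score > best_score:
            best, best_score = n.node_id, score
    return best
```

Raising `w` shifts the choice toward energy-rich nodes, while lowering it favours nodes close to the predicted target position.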

6. Efficient and Robust Decentralized Tracking Scheme

The proposed decentralized target tracking scheme consists of three mechanisms, namely measurement fusion and state prediction, selection of task cluster nodes, and selection of the CH, which have been described in detail above. Next, we integrate these mechanisms into a decentralized tracking scheme.

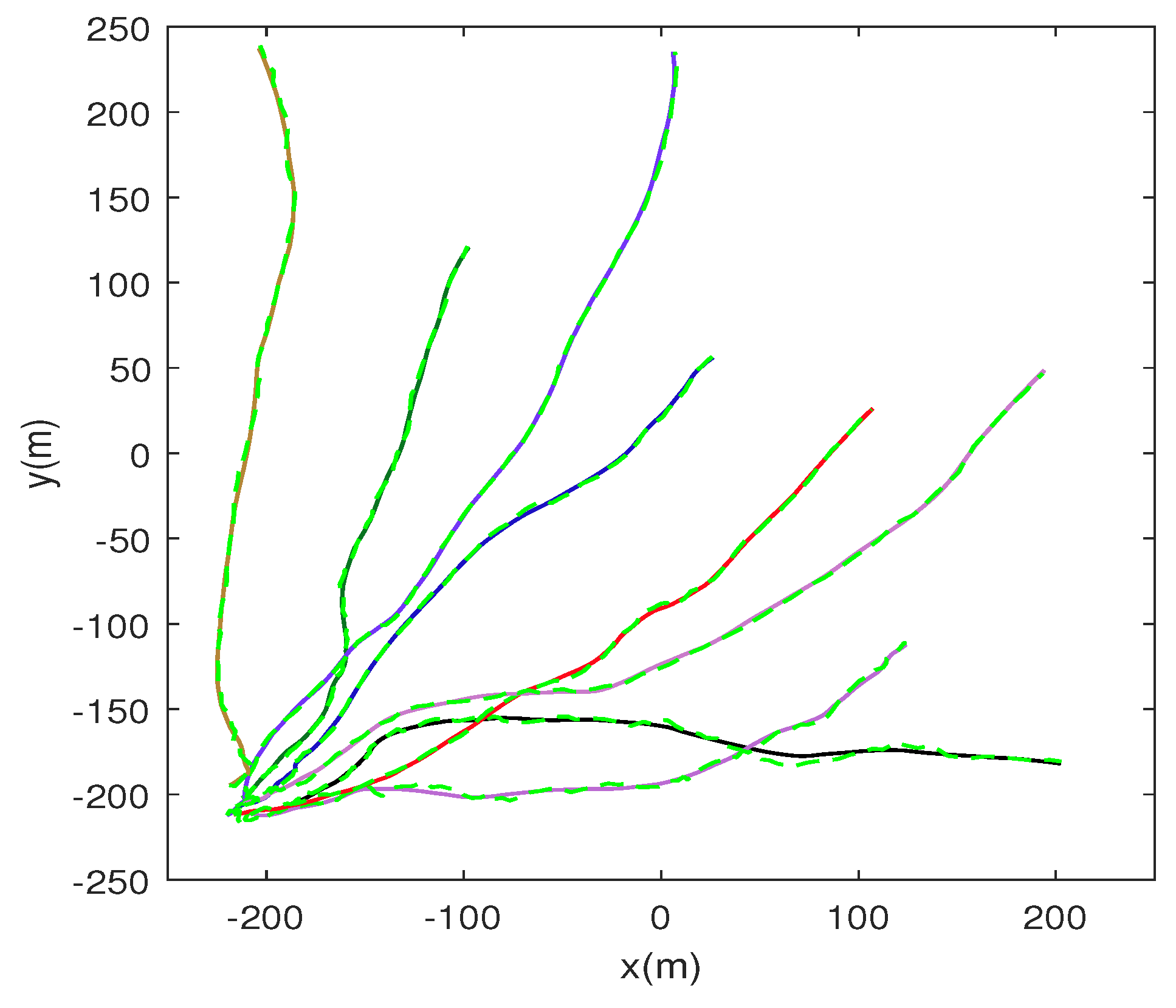

All camera nodes are assumed to be in sleep mode initially but wake periodically to detect the target. Once a target appears at the boundary of the monitored region, the boundary nodes that sense it activate themselves to execute the tracking task. Meanwhile, the first boundary node that senses the target automatically becomes the CH and broadcasts its information to inform the other active nodes. The first CH then selects the next CH and CMs to form a task cluster.

When timestep $k$ arrives, the CH and CMs all capture an image of the target and perform the SRCIF algorithm locally. After acquiring valid data, the CMs forward the data to the CH after a random delay, using carrier-sense multiple access with collision avoidance (CSMA/CA) to avoid transmission conflicts. The CH then fuses the received data with its local measurements to obtain a more accurate estimate of the current target state. Subsequently, the CH predicts the target state at timestep $k+1$ and sends it to the CMs and all alert nodes. Each candidate node calculates the trace of its predicted information matrix and sends it, together with its current state data, to the CH. Finally, the CH selects the next CMs and CH based on the received data. Note that if a CM selected by the last CH cannot view the current target, it falls into the sleep state. However, if the selected CH cannot view the current target, it continues working and fuses the different measurements without its own data. The operations of the CH are described in detail in Algorithm 3, and the corresponding operations of the CMs and alert nodes are presented in Algorithm 4.

Algorithm 3. Operations in the CH at timestep $k$

Start: the predicted target data received from the last CH at timestep $k-1$.

- 1. When the pre-set time is up, obtain the measurement and compute the information contribution vector and matrix based on the measurement update step of the SRCIF described in Section 3.
- 2. Receive the packets with the information contributions from each CM, and then perform the information fusion to obtain $\hat{\mathbf{y}}_{k|k}$ and $\mathbf{Y}_{k|k}$ as in Equations (34) and (35).
- 3. Compute $\mathbf{P}_{k|k}$ and $\hat{\mathbf{x}}_{k|k}$ as in Equations (36) and (37).
- 4. Compute the predicted state quantities for timestep $k+1$ based on the prediction step of the SRCIF described in Section 3.
- 5. Broadcast the packets with the predicted state to its CMs and all alert nodes.
- 6. Compute its own predicted information matrix (and its trace) based on the measurement update step of the SRCIF described in Section 3.
- 7. Receive the packets with the traces and node data from each candidate camera.
- 8. Select the new task cluster nodes and a new CH from the current cluster nodes and all alert nodes according to Sections 4 and 5.
- 9. Announce the selected CMs and CH with announcement packets.

Algorithm 4. Operations in CMs or alert nodes at timestep $k$

Start: the predicted target data received from the last CH at timestep $k-1$.

- 1. for each camera node $c_i$
- 2. if $c_i$ is a CM
- 3. When the pre-set time is up, measure the current target and obtain the measurement.
- 4. Compute the information contribution vector and matrix based on the measurement update step of the SRCIF described in Section 3.
- 5. Transmit the packets with the information contributions to its CH.
- 6. end if
- 7. Upon receiving the packet with the predicted target state, compute its predicted information matrix (and its trace) based on the measurement update step of the SRCIF described in Section 3.
- 8. Transmit the packet with the trace and its node data to the CH.
- 9. $c_i$ stays active if selected as a CM or the CH; otherwise, it falls into the sleep state.
- 10. end for

8. Conclusions and Future Work

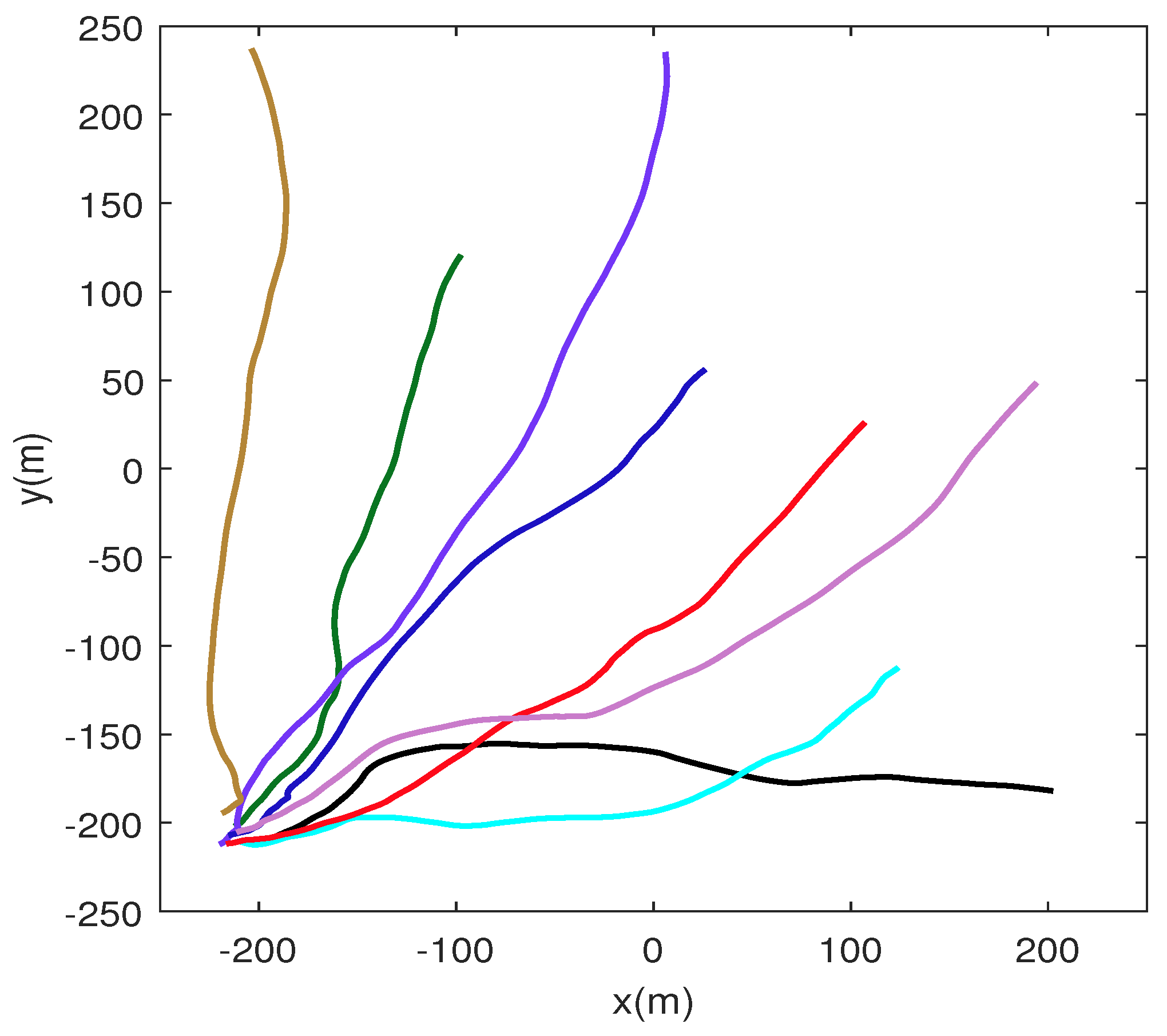

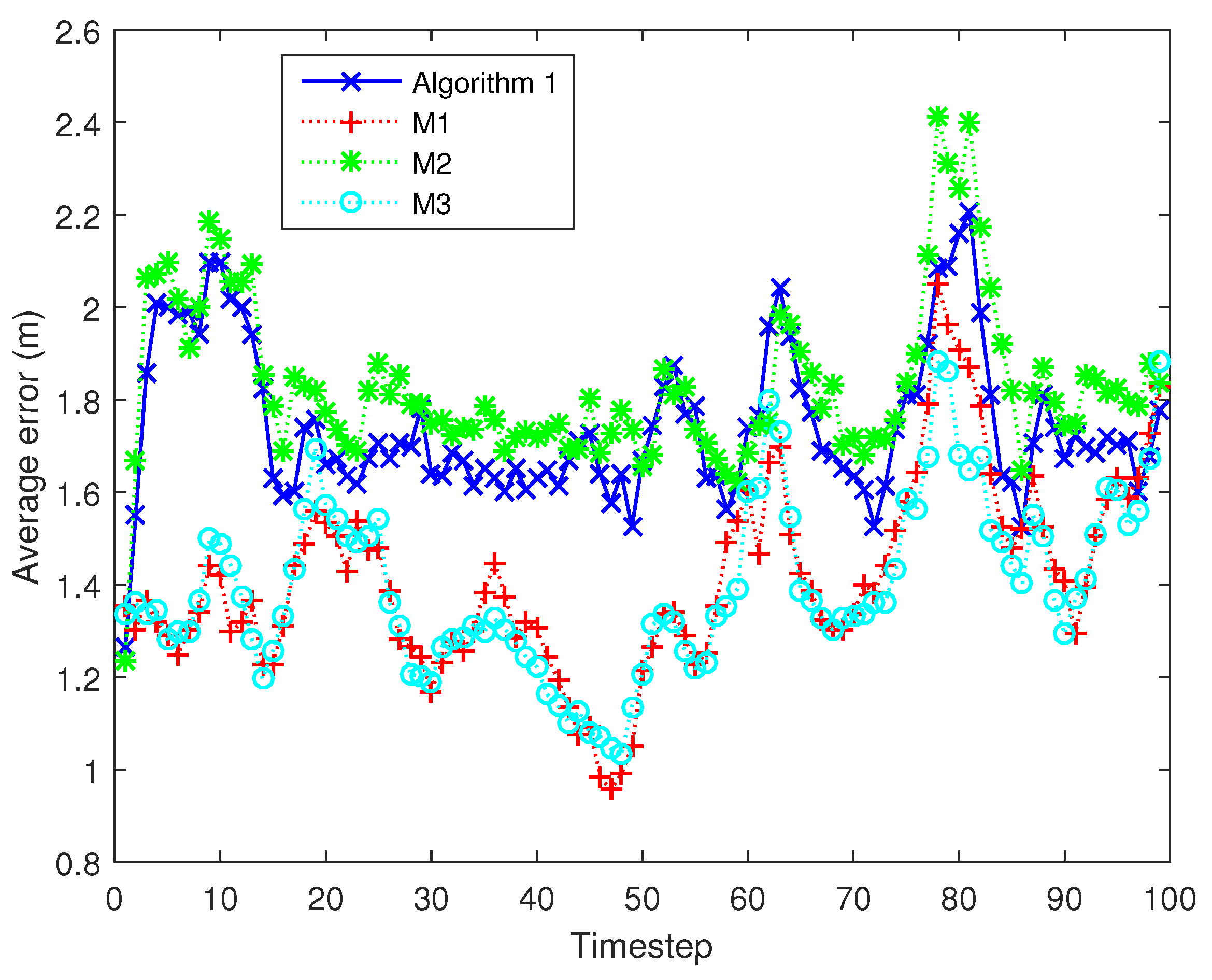

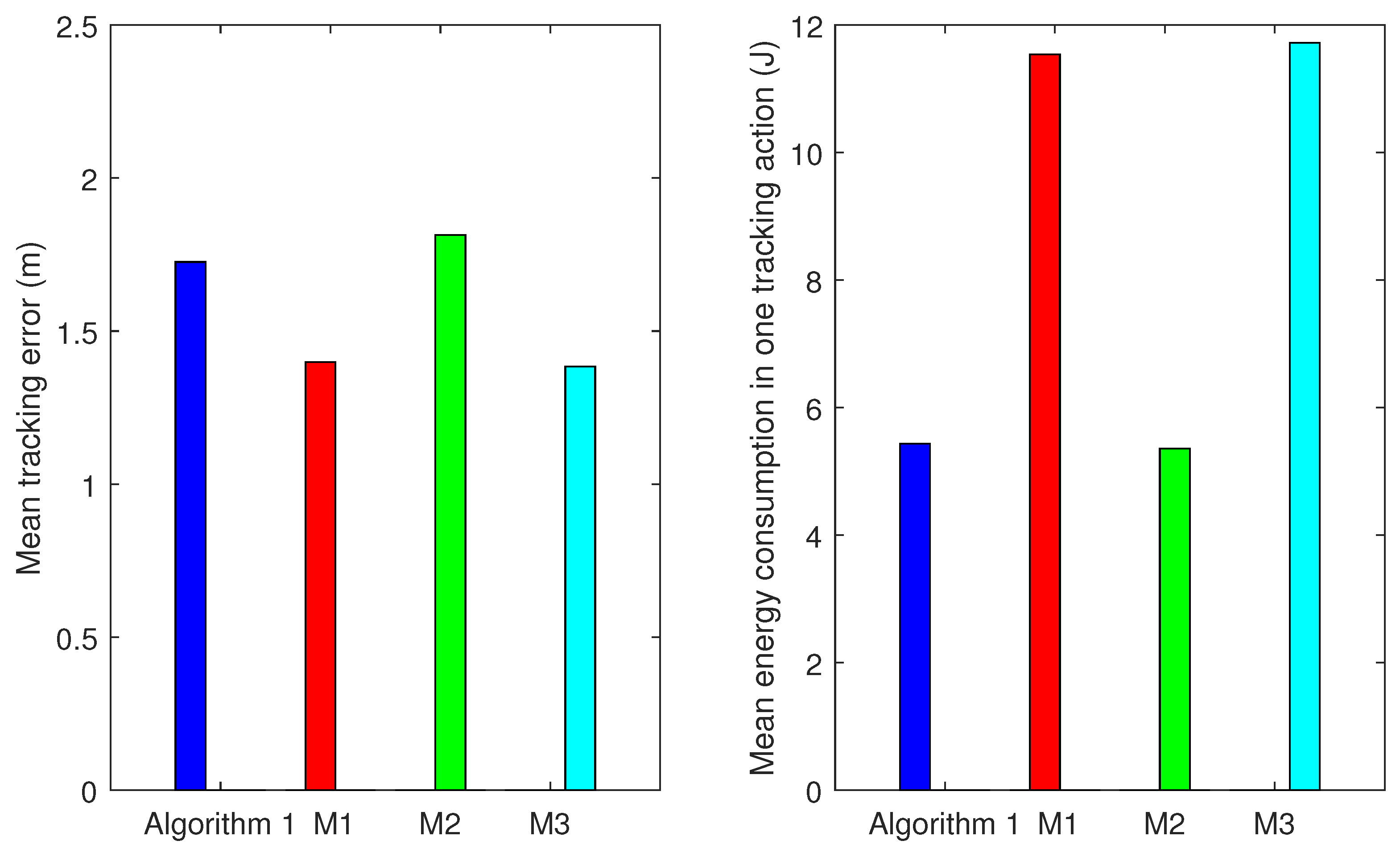

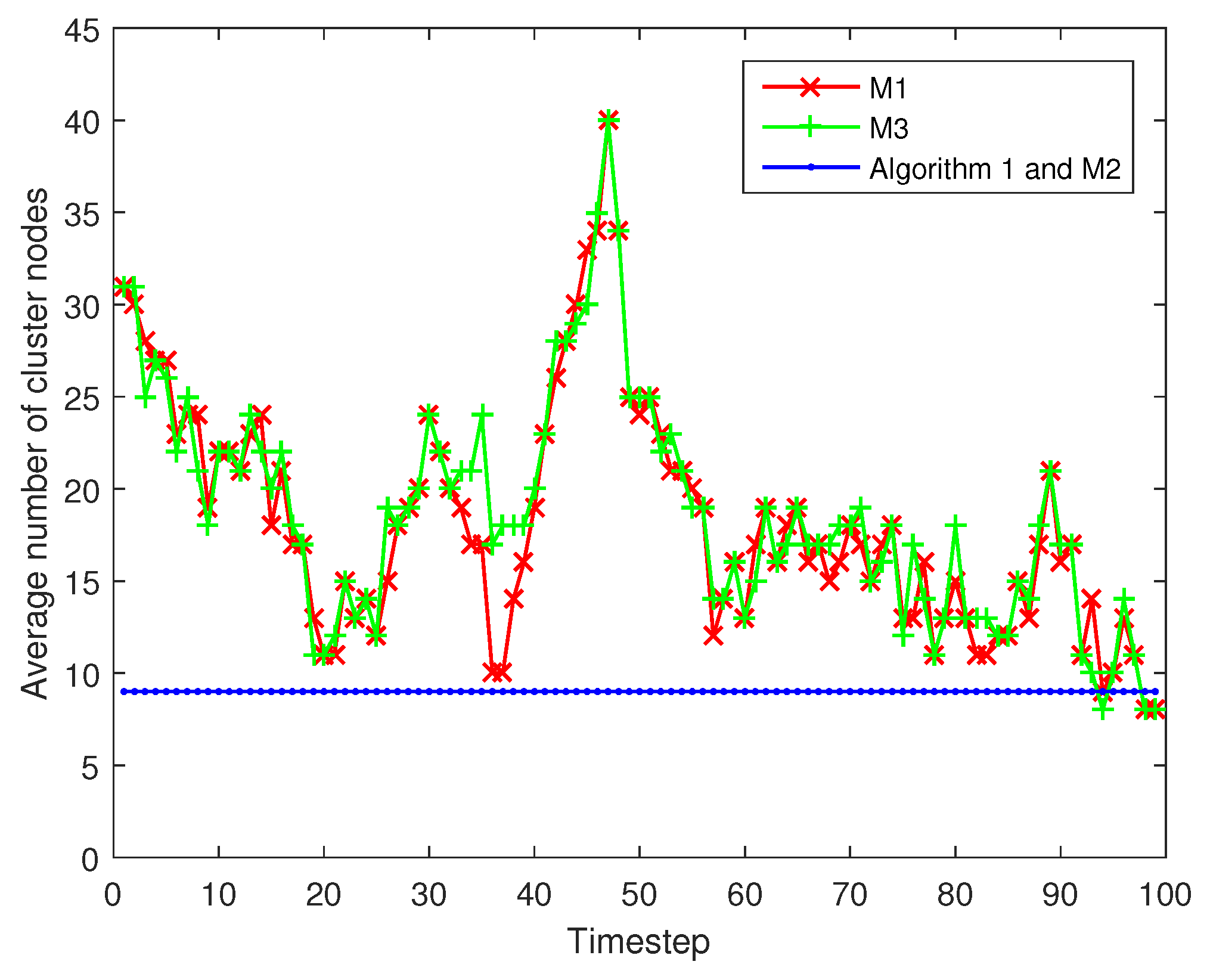

In this paper, we considered cluster-based single-target tracking in dense WCNs, where the cluster changes dynamically as the target moves. Considering the characteristics and constraints of WCNs, we proposed an effective and robust decentralized tracking scheme that balances energy consumption and tracking accuracy. The scheme integrates four mechanisms: (1) a more realistic camera node sensing model; (2) a decentralized SRCIF for fusing different observation results; (3) a greedy on-line decision mechanism to select task cluster nodes; and (4) an efficient and stable mechanism for selecting the cluster head (CH). The proposed scheme distributes the computational burden among the cluster nodes. Furthermore, the computational burden on each cluster node (including the CH) is roughly constant regardless of the cluster size. Therefore, the scheme can easily be extended to multi-sensor collaborative target tracking in WCNs. Additionally, the proposed scheme selects a fixed number of task cluster nodes based on the defined contribution decision (CD), which makes it applicable to sensor networks whose nodes are resource-constrained. Each mechanism was evaluated and compared with related state-of-the-art mechanisms. The comparison results demonstrate that the proposed scheme balances resource consumption and tracking accuracy well.

In future work, we plan to pursue the following directions. First, we will investigate multi-target tracking schemes in WCNs, which are more complicated than the single-target scenario. Second, recovery mechanisms for emergencies or target loss in practice should be studied. Third, tracking a high-speed target in WCNs is a valuable problem to investigate. In addition, our approach is simulated on the MATLAB platform, which cannot be used on practical wireless sensor nodes because such software is unavailable on their MCUs and embedded systems; one of our next tasks is therefore to implement the solution in C so that it can run directly on sensor nodes. Finally, reducing the cost of building a tracking network is also an important subject for future work.