Smartphone-Based Escalator Recognition for the Visually Impaired

Abstract

1. Introduction

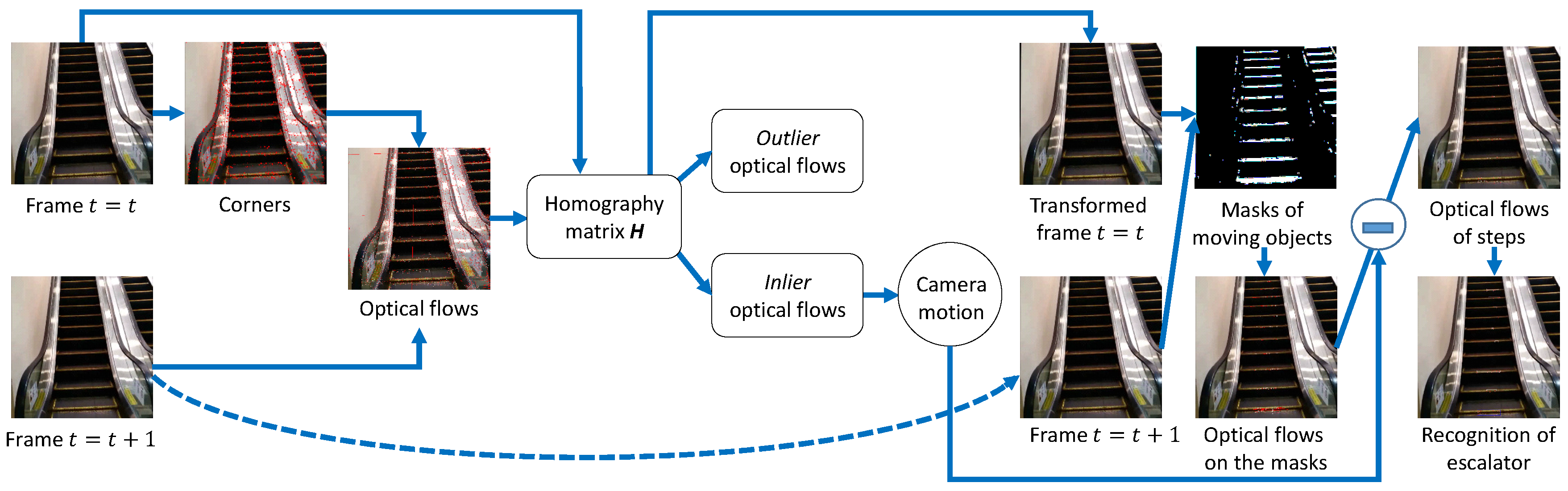

2. Outline of the Proposed Method

2.1. Corner Detection

2.2. Optical Flow Computation

2.3. Homography Transformation for Image Registration

2.3.1. DLT Algorithm

2.3.2. Estimation of Homography Matrix Using RANSAC

1. Randomly select four optical flows.

2. Calculate the homography matrix H by applying the DLT algorithm to the four selected optical flows.

3. Count the number of optical flows whose back-projection errors are less than a certain threshold, as expressed by Equation (14). The optical flows that satisfy Equation (14) are determined to be inliers, and the others are determined to be outliers.

4. Repeat steps 1 to 3 a fixed number of times.

5. Determine the pre-optimal homography matrix, i.e., the one that produces the most inliers.

6. Calculate the optimal homography matrix from the inliers of the pre-optimal homography matrix.
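The RANSAC procedure above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: each optical flow is treated as a pair (start point in the previous frame, end point in the current frame), the DLT is the plain unnormalized form, and the function names, the pixel threshold, the iteration count, and the synthetic demo data are all hypothetical.

```python
import numpy as np


def dlt_homography(src, dst):
    """Estimate a 3x3 homography H with dst ~ H @ src using the plain DLT.

    src, dst: (N, 2) arrays of corresponding points, N >= 4.
    Hartley coordinate normalization is omitted for brevity (assumption).
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear constraints per correspondence on the 9 entries of H
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # Solve A h = 0 with ||h|| = 1: right singular vector of the smallest
    # singular value (least squares when N > 4)
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def backprojection_errors(H, src, dst):
    """Distance between H-projected start points and observed end points."""
    pts = np.hstack([src, np.ones((len(src), 1))])  # homogeneous coordinates
    proj = pts @ H.T
    proj = proj[:, :2] / proj[:, 2:3]
    return np.linalg.norm(proj - dst, axis=1)


def ransac_homography(src, dst, threshold=2.0, iterations=500, seed=None):
    """Hypothetical RANSAC wrapper; threshold is in pixels (assumed value)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iterations):                              # repeat steps 1-3
        idx = rng.choice(len(src), size=4, replace=False)    # step 1
        # Degenerate samples may yield inf/nan; they simply score no inliers
        with np.errstate(divide="ignore", invalid="ignore"):
            H = dlt_homography(src[idx], dst[idx])           # step 2
            inliers = backprojection_errors(H, src, dst) < threshold  # step 3
        if inliers.sum() > best.sum():                       # keep the best model
            best = inliers
    if best.sum() < 4:
        raise ValueError("too few inliers to refit a homography")
    # Refit using all inliers of the pre-optimal homography
    return dlt_homography(src[best], dst[best]), best


# Synthetic demo: flows consistent with a known camera motion H_true,
# plus 20 flows displaced by independent motion
rng = np.random.default_rng(0)
H_true = np.array([[1.0, 0.02, 5.0],
                   [-0.01, 1.0, -3.0],
                   [1e-4, 0.0, 1.0]])
src = rng.uniform(0, 640, size=(100, 2))
dst = np.hstack([src, np.ones((100, 1))]) @ H_true.T
dst = dst[:, :2] / dst[:, 2:3]
dst[:20] += rng.uniform(20, 40, size=(20, 2))
H_est, inliers = ransac_homography(src, dst, threshold=1.0, seed=1)
```

For more than four correspondences, the final refit with the same DLT gives the algebraic least-squares solution of the homogeneous system. In practice, normalizing the point coordinates before the DLT improves numerical stability.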

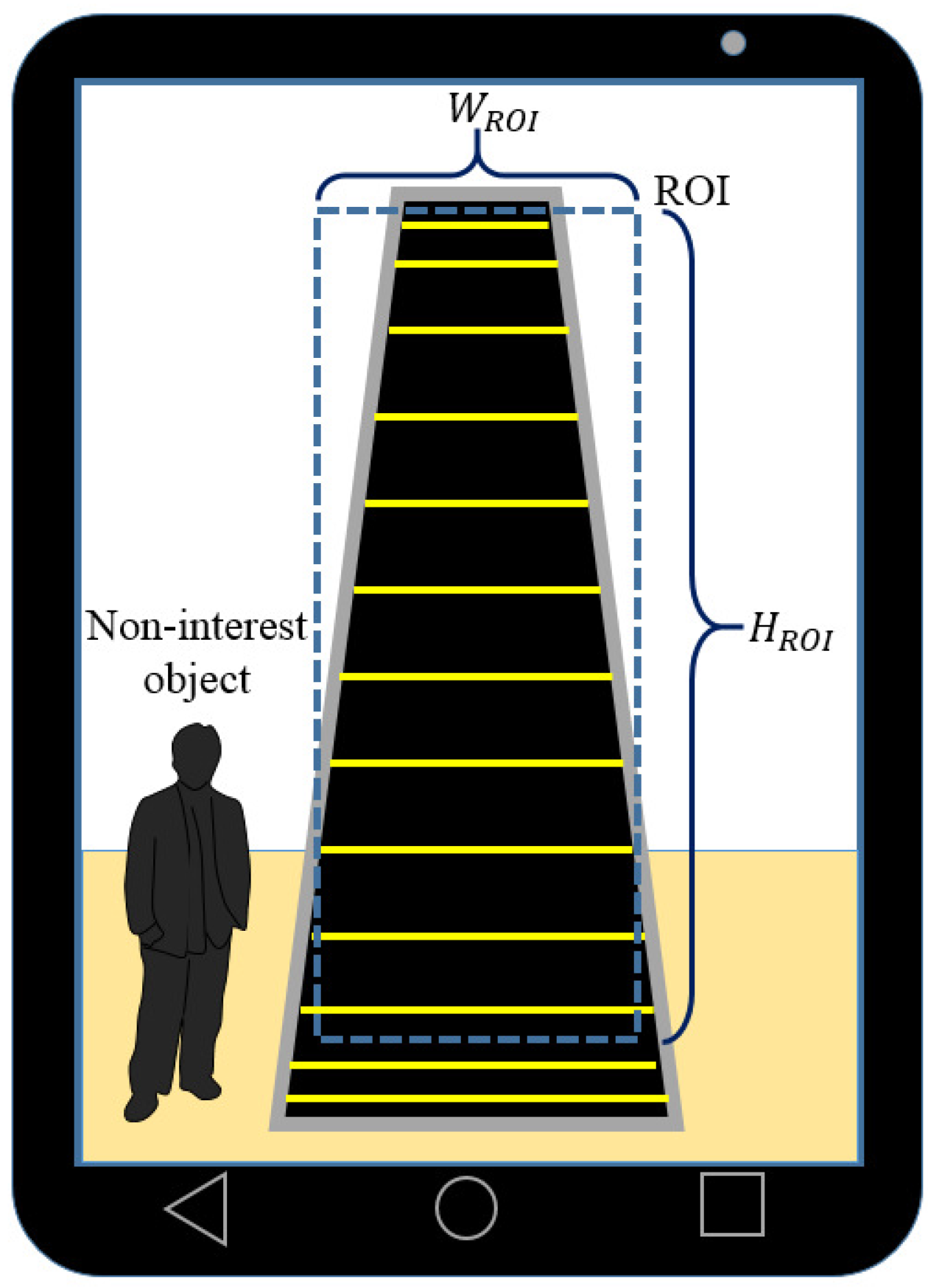

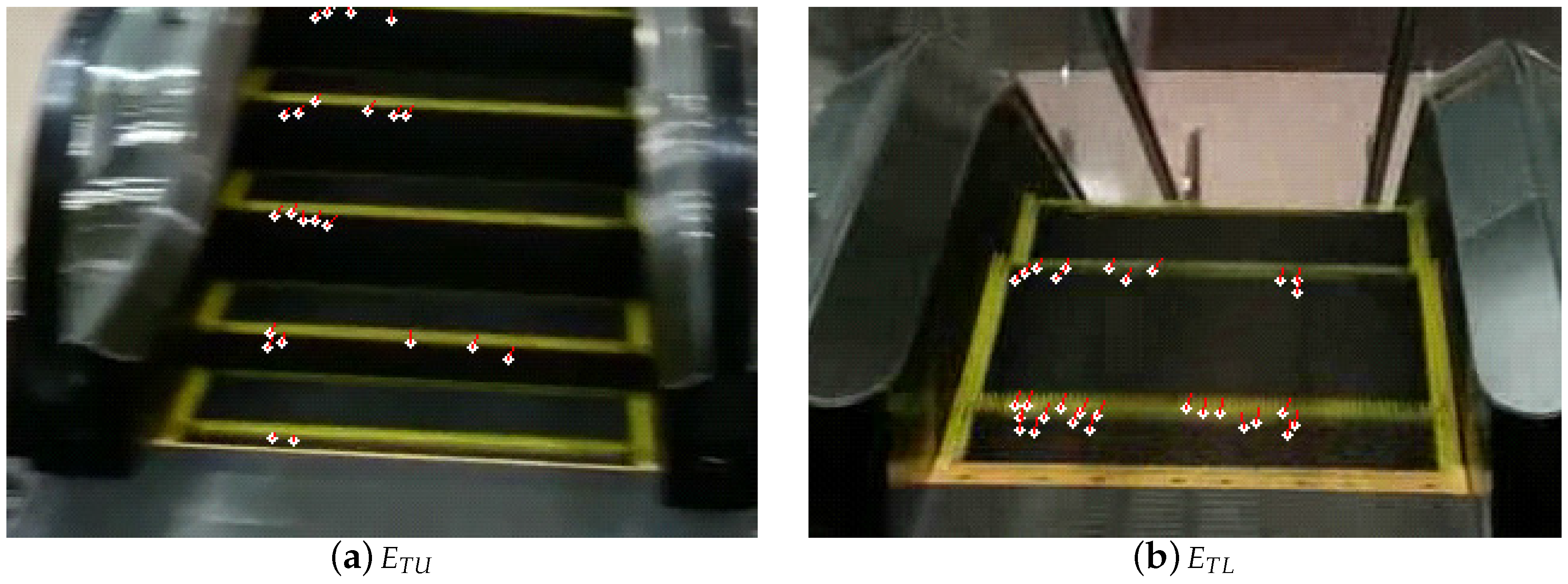

2.4. Extraction of Optical Flows on Moving Steps

2.5. Recognition of an Escalator

- Escalators going to upper floors

- Escalators going to lower floors

- Escalators coming from upper floors

- Escalators coming from lower floors

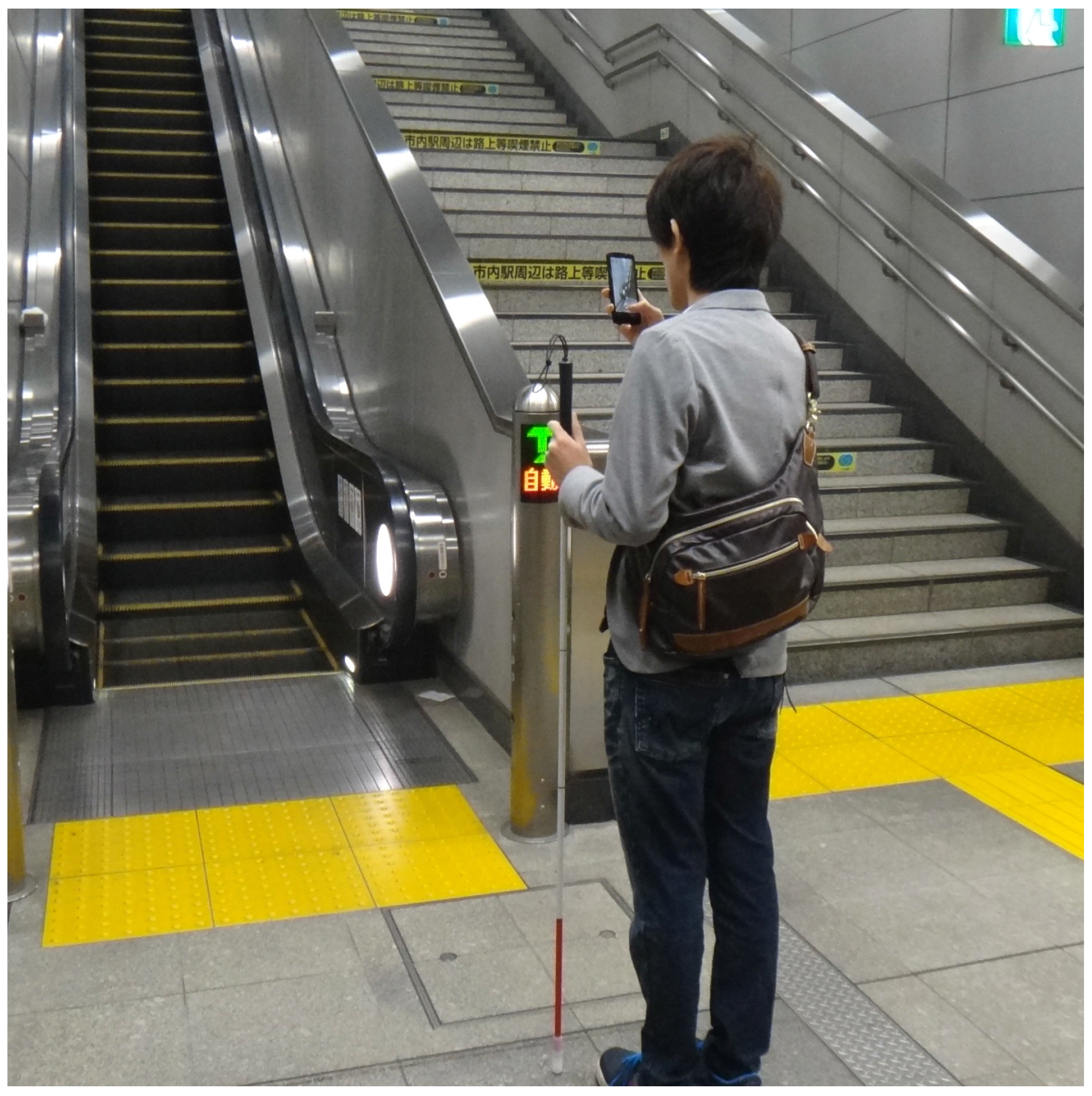

2.6. Notification to a User

3. Experiments

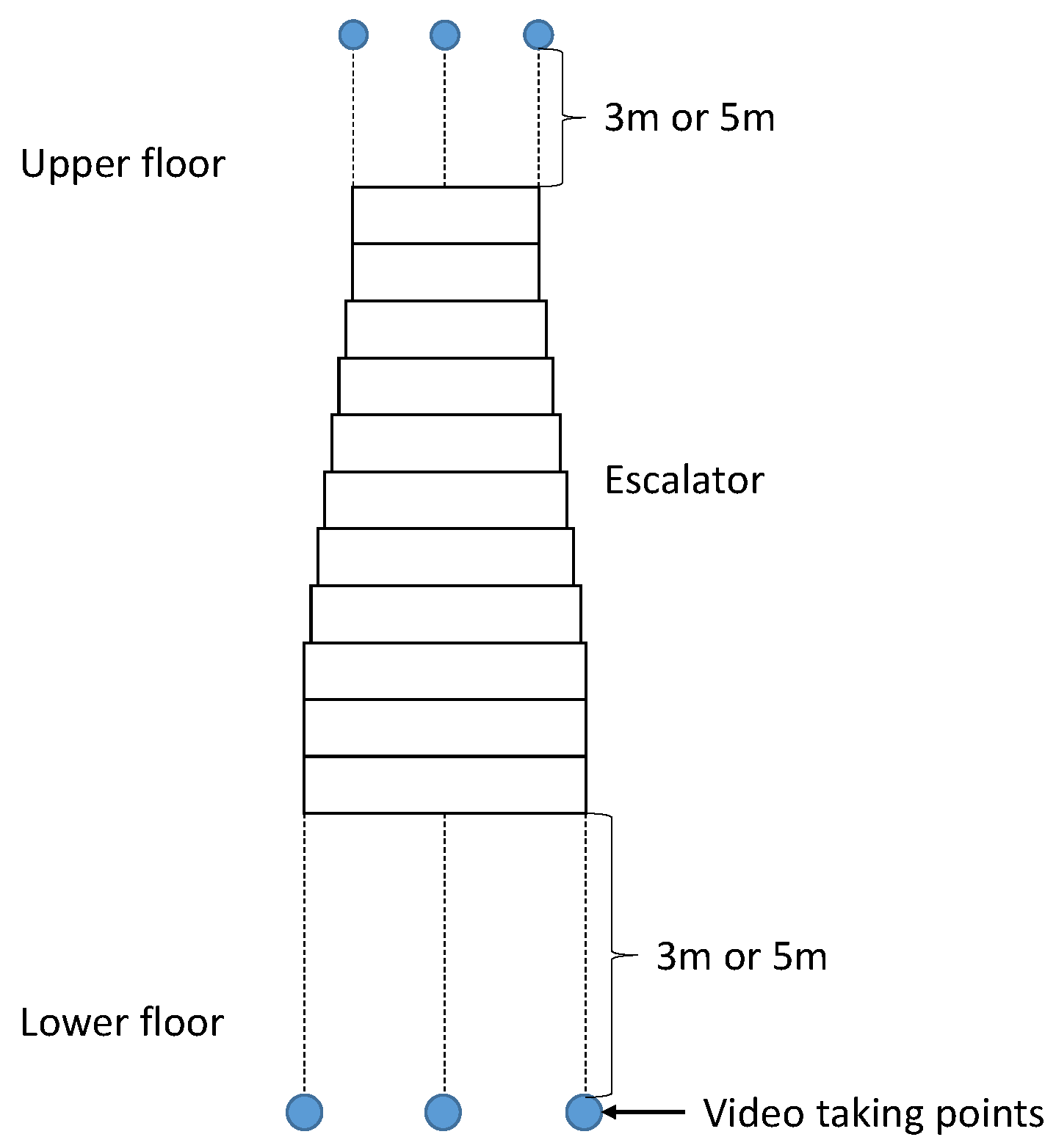

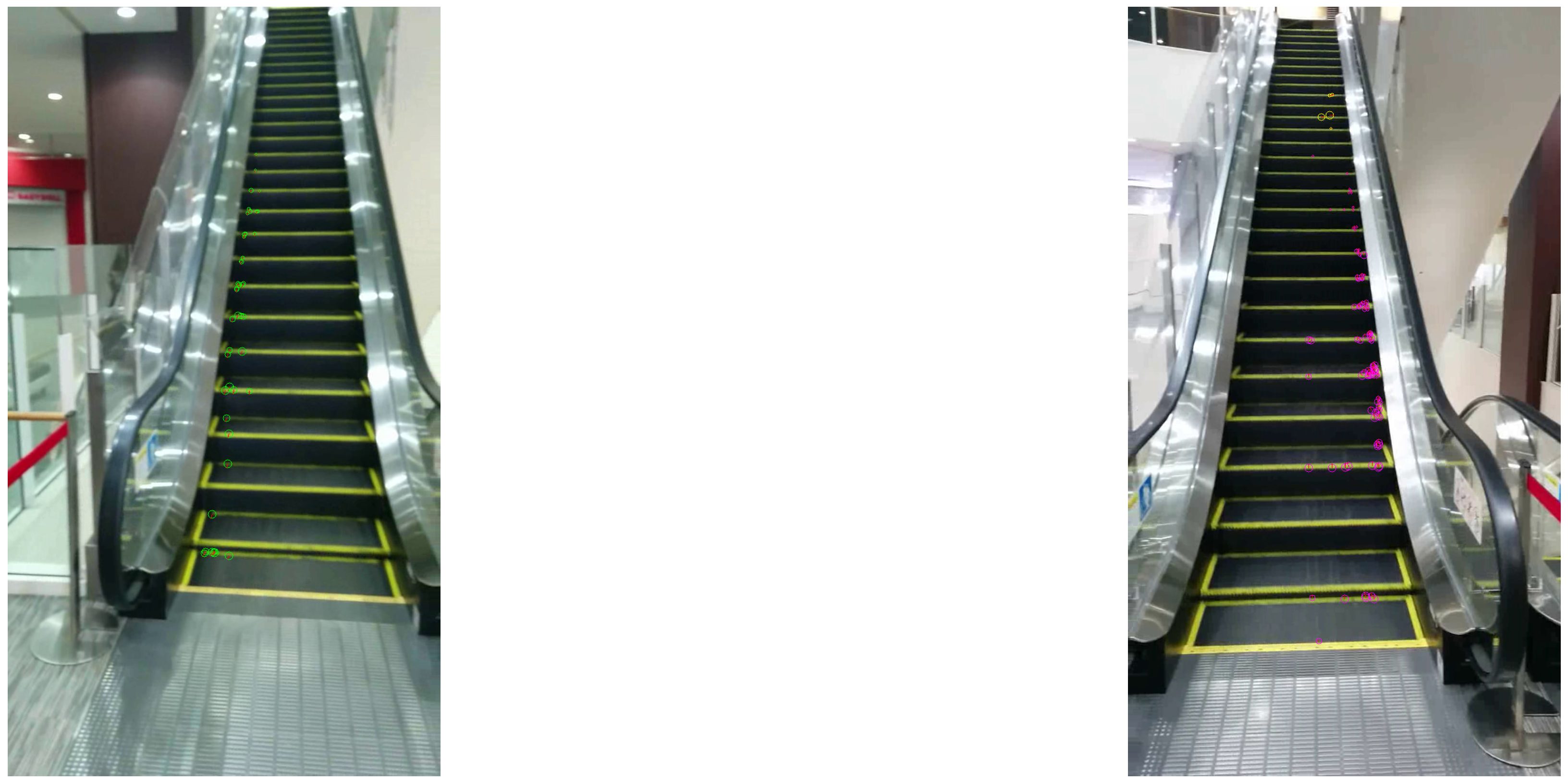

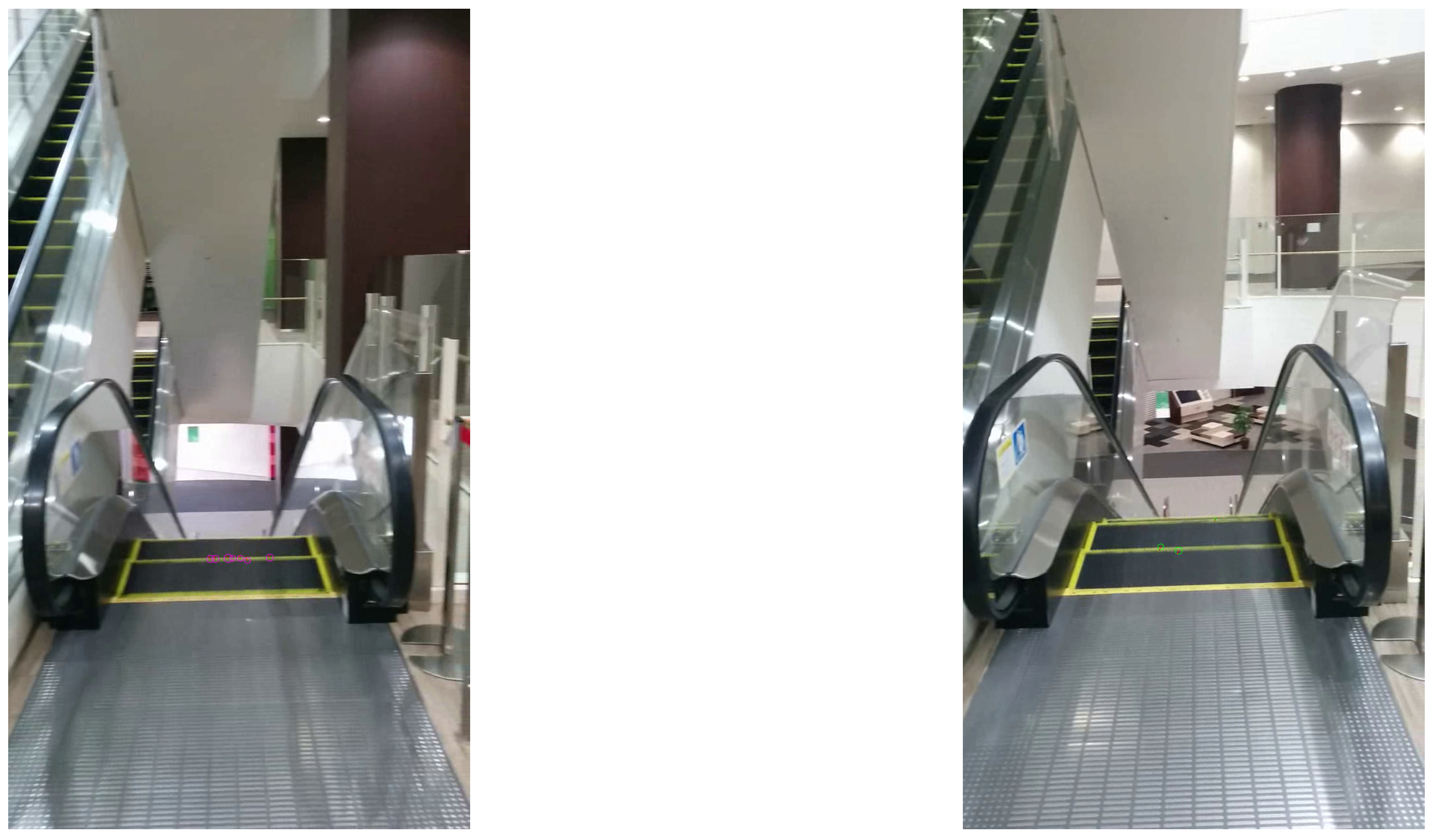

3.1. Conditions

3.2. Results

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Input \ Output | | | | | Others |
|---|---|---|---|---|---|
| | 18 | 0 | 0 | 0 | 0 |
| | 0 | 14 | 0 | 0 | 4 |
| | 0 | 0 | 17 | 1 | 0 |
| | 0 | 0 | 0 | 13 | 5 |
| Others | 0 | 0 | 0 | 0 | 12 |

| Input \ Output | | | | | Others |
|---|---|---|---|---|---|
| | 17 | 1 | 0 | 0 | 0 |
| | 0 | 16 | 0 | 0 | 2 |
| | 0 | 0 | 18 | 0 | 0 |
| | 0 | 0 | 0 | 16 | 2 |
| Others | 0 | 0 | 0 | 0 | 12 |
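The two confusion matrices can be summarized numerically. The following Python/NumPy sketch recomputes overall accuracy and per-class recall and precision directly from the counts; the four escalator classes are referred to only by their position in the tables, and the variable and function names are hypothetical.

```python
import numpy as np

# Counts transcribed from the two confusion matrices.
# Rows = input (true) class, columns = output class; the first four
# rows/columns are the four escalator categories, the last is "Others".
cm_first = np.array([
    [18, 0, 0, 0, 0],
    [0, 14, 0, 0, 4],
    [0, 0, 17, 1, 0],
    [0, 0, 0, 13, 5],
    [0, 0, 0, 0, 12],
])
cm_second = np.array([
    [17, 1, 0, 0, 0],
    [0, 16, 0, 0, 2],
    [0, 0, 18, 0, 0],
    [0, 0, 0, 16, 2],
    [0, 0, 0, 0, 12],
])


def summarize(cm):
    """Return overall accuracy and per-class recall and precision."""
    diag = np.diag(cm)
    accuracy = diag.sum() / cm.sum()
    recall = diag / cm.sum(axis=1)     # fraction of each input class recognized
    precision = diag / cm.sum(axis=0)  # fraction of each output label that is correct
    return accuracy, recall, precision


for name, cm in [("first", cm_first), ("second", cm_second)]:
    acc, rec, prec = summarize(cm)
    print(f"{name}: accuracy = {acc:.3f} ({np.trace(cm)}/{cm.sum()})")
# prints:
# first: accuracy = 0.881 (74/84)
# second: accuracy = 0.940 (79/84)
```

Each escalator row sums to 18 and the "Others" row to 12, so both matrices cover the same 84 inputs; the difference lies in how many escalator inputs are misassigned, mostly to "Others".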

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nakamura, D.; Takizawa, H.; Aoyagi, M.; Ezaki, N.; Mizuno, S. Smartphone-Based Escalator Recognition for the Visually Impaired. Sensors 2017, 17, 1057. https://doi.org/10.3390/s17051057