Stereo Vision-Based High Dynamic Range Imaging Using Differently-Exposed Image Pair

Abstract

1. Introduction

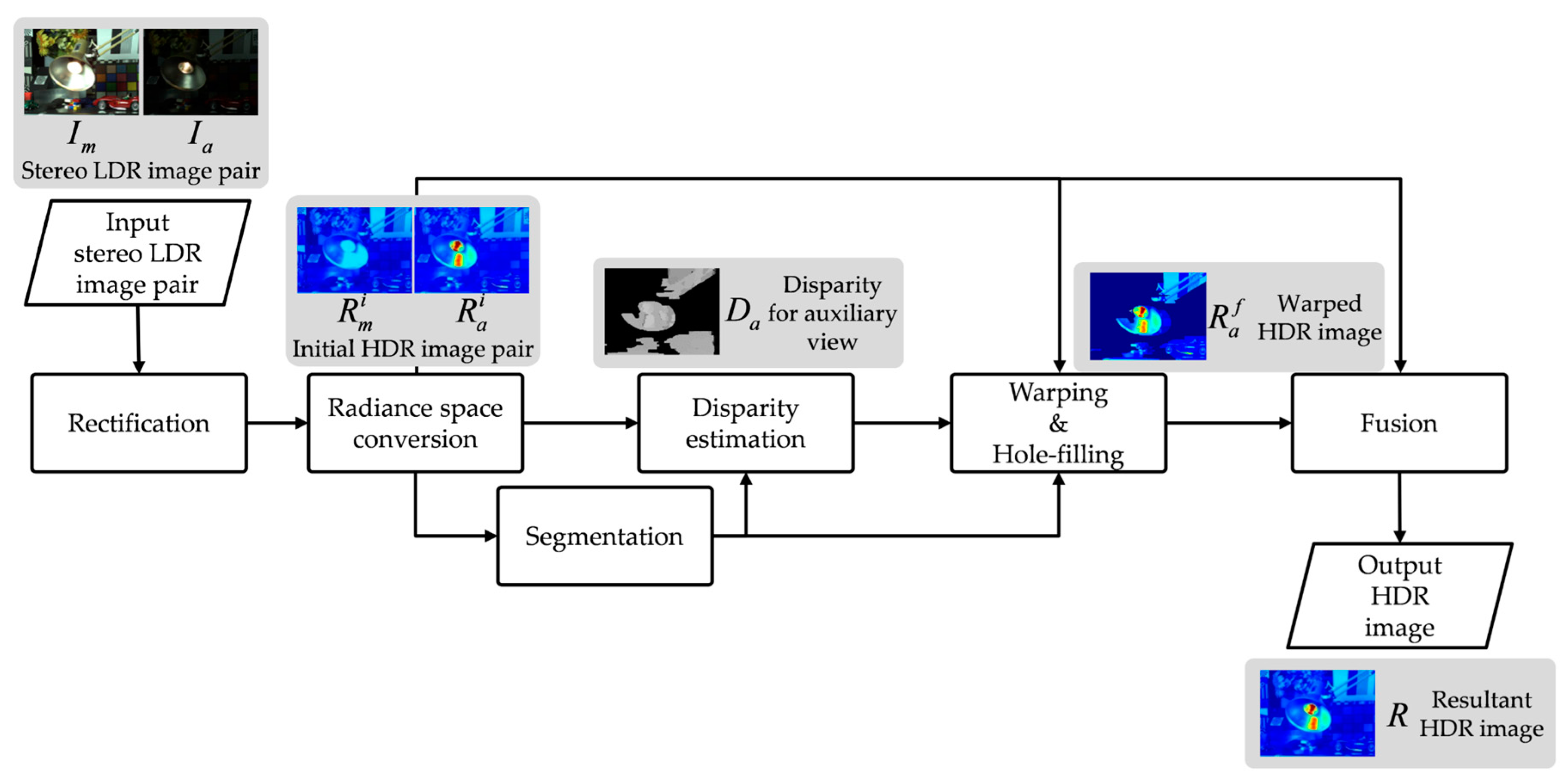

2. Proposed Stereo HDR Imaging Method

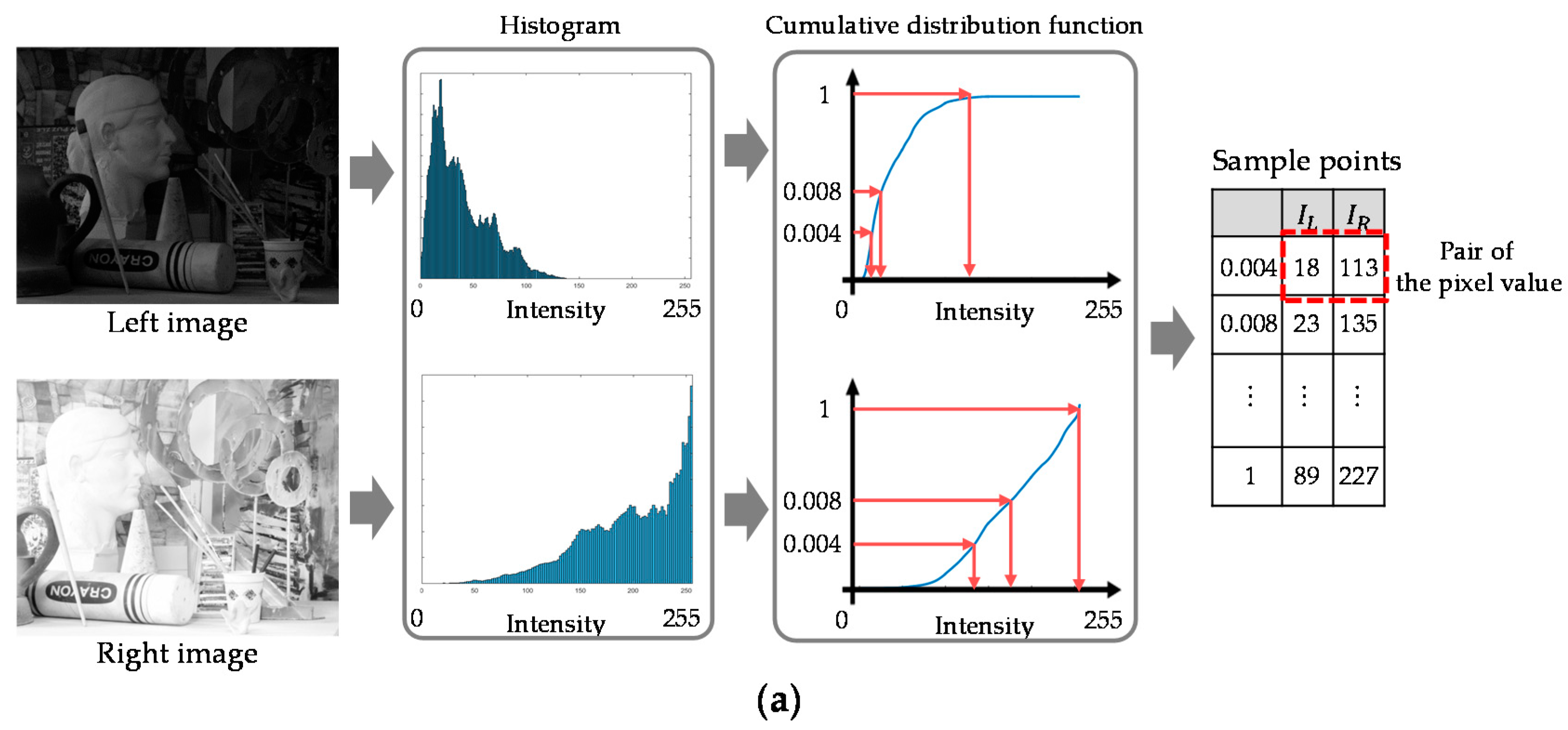

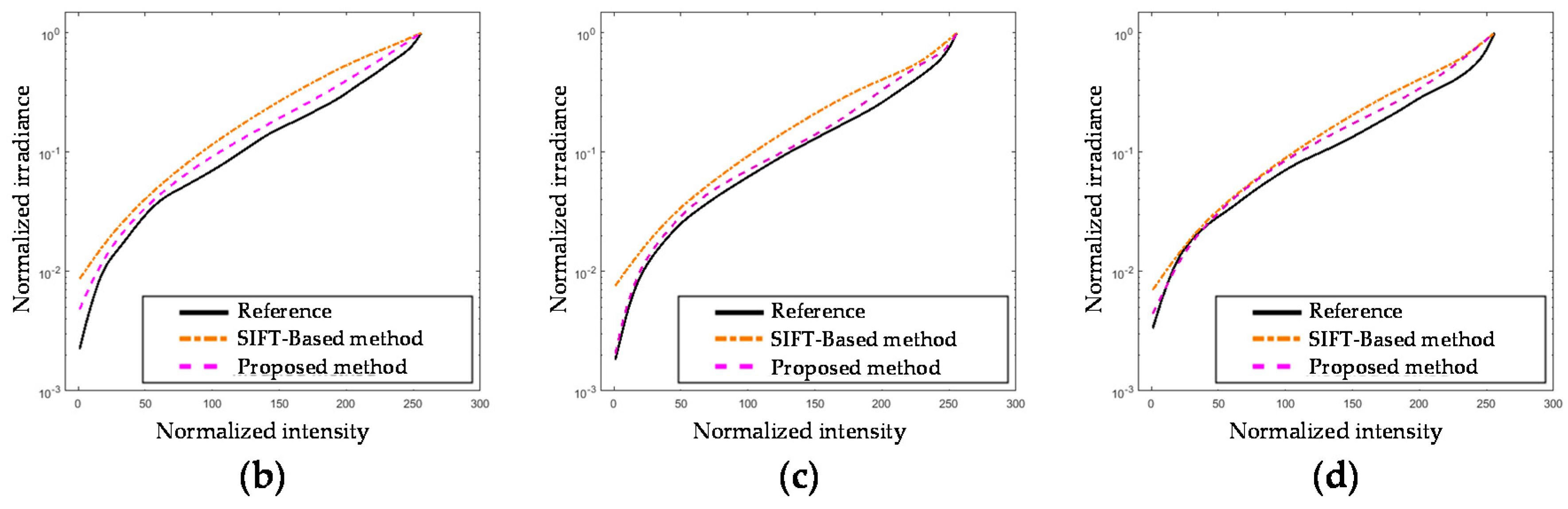

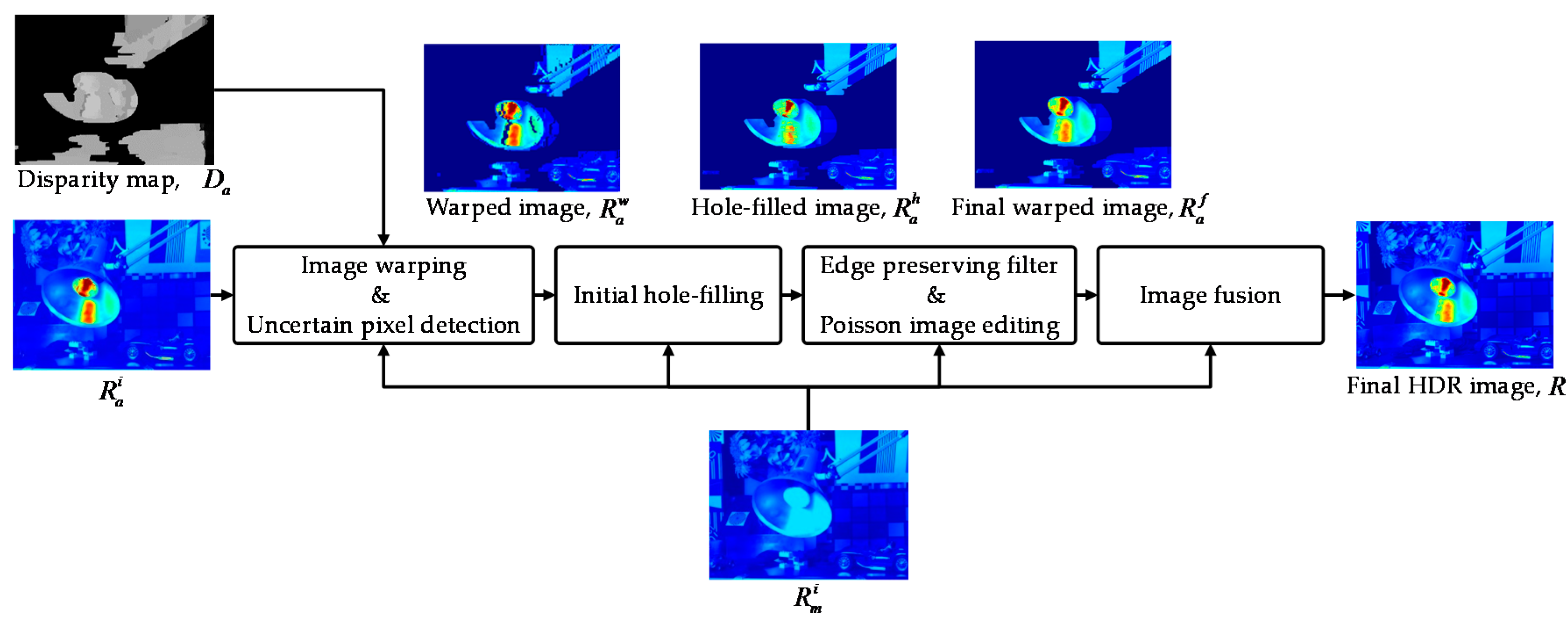

2.1. Overall Framework

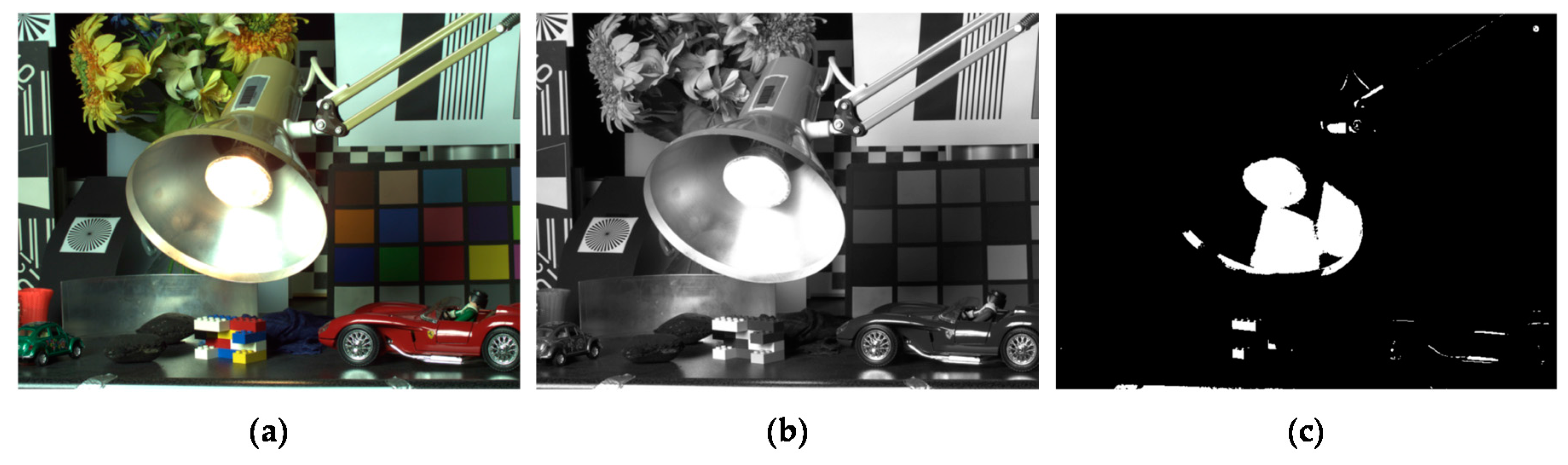

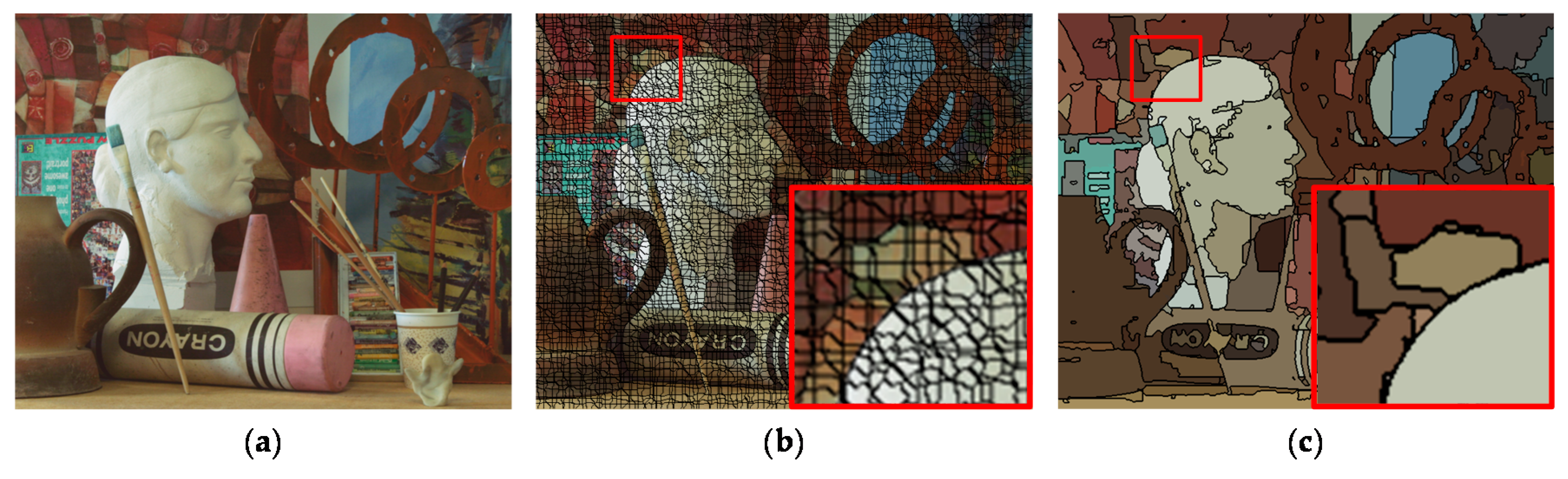

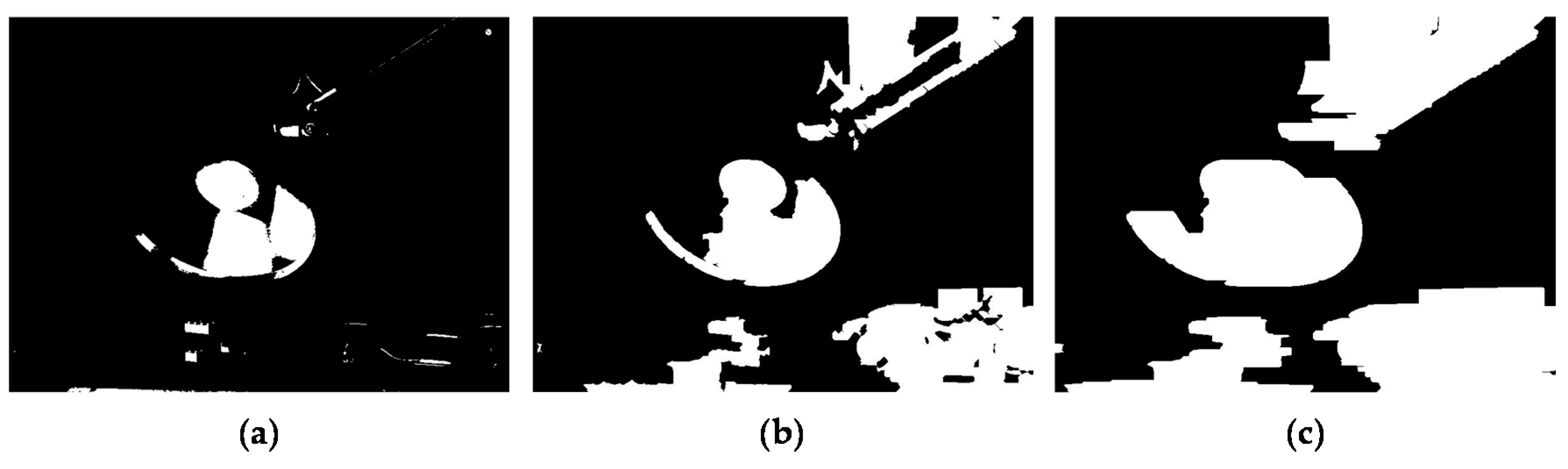

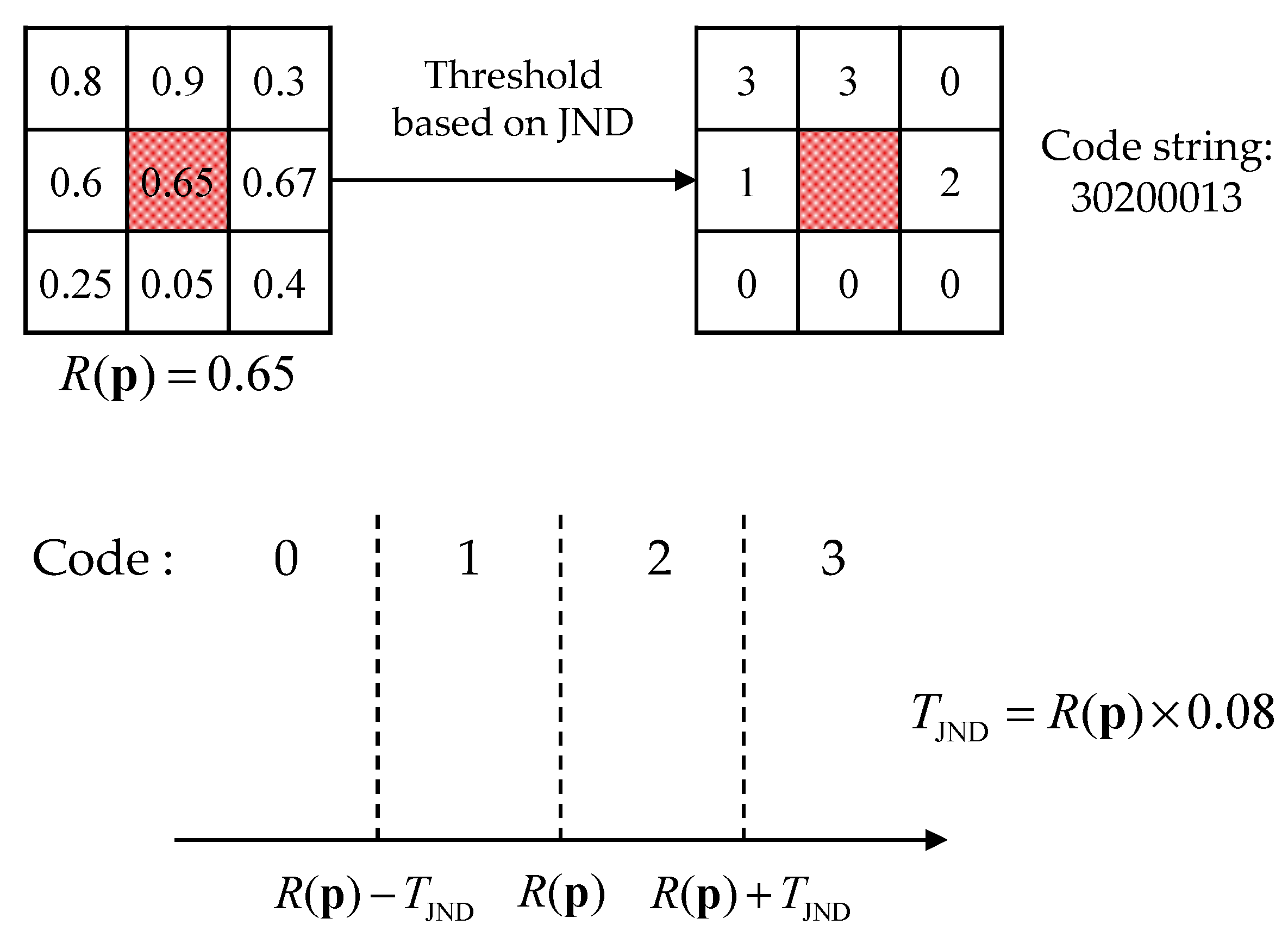

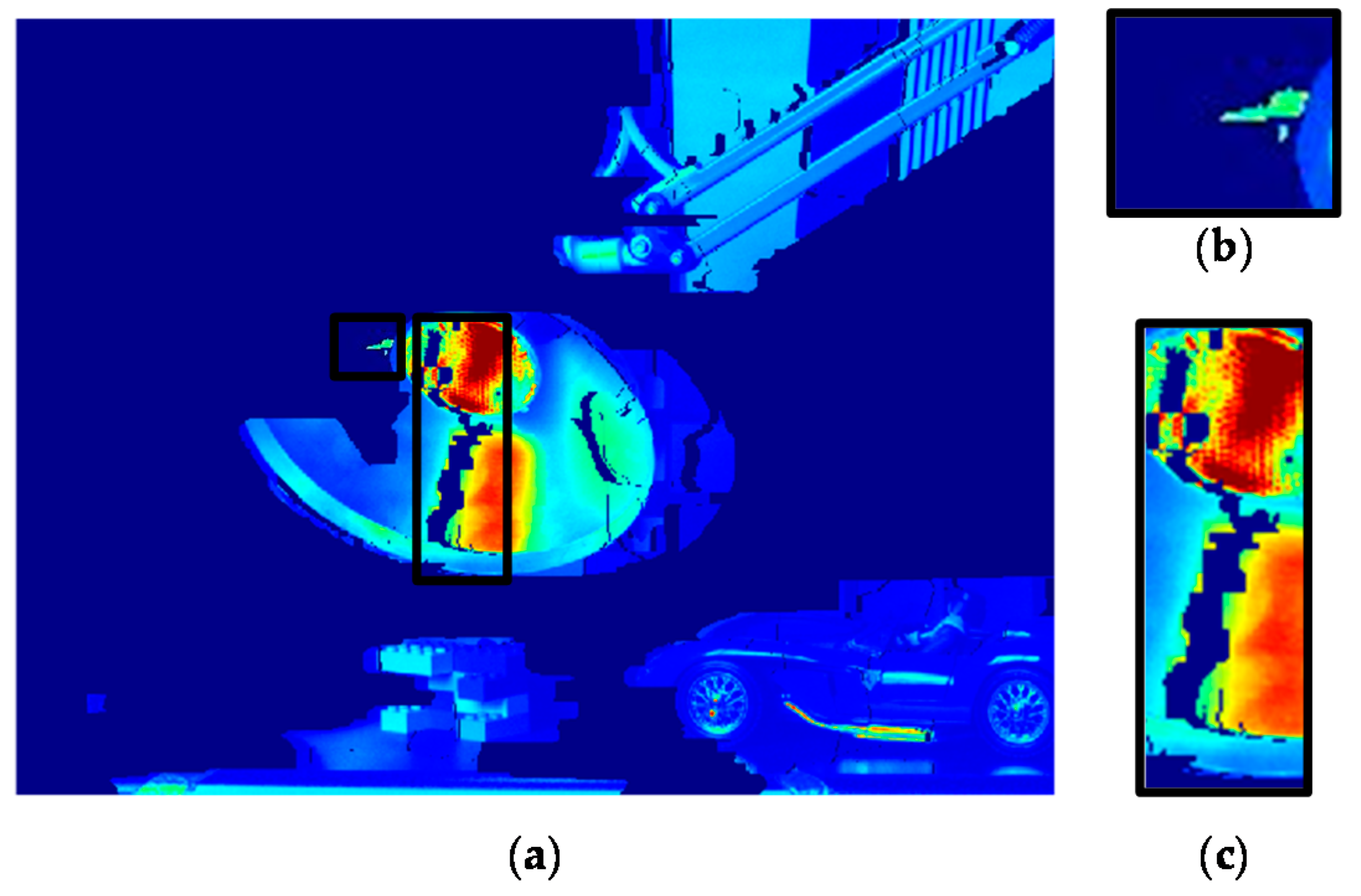

2.2. Disparity Estimation

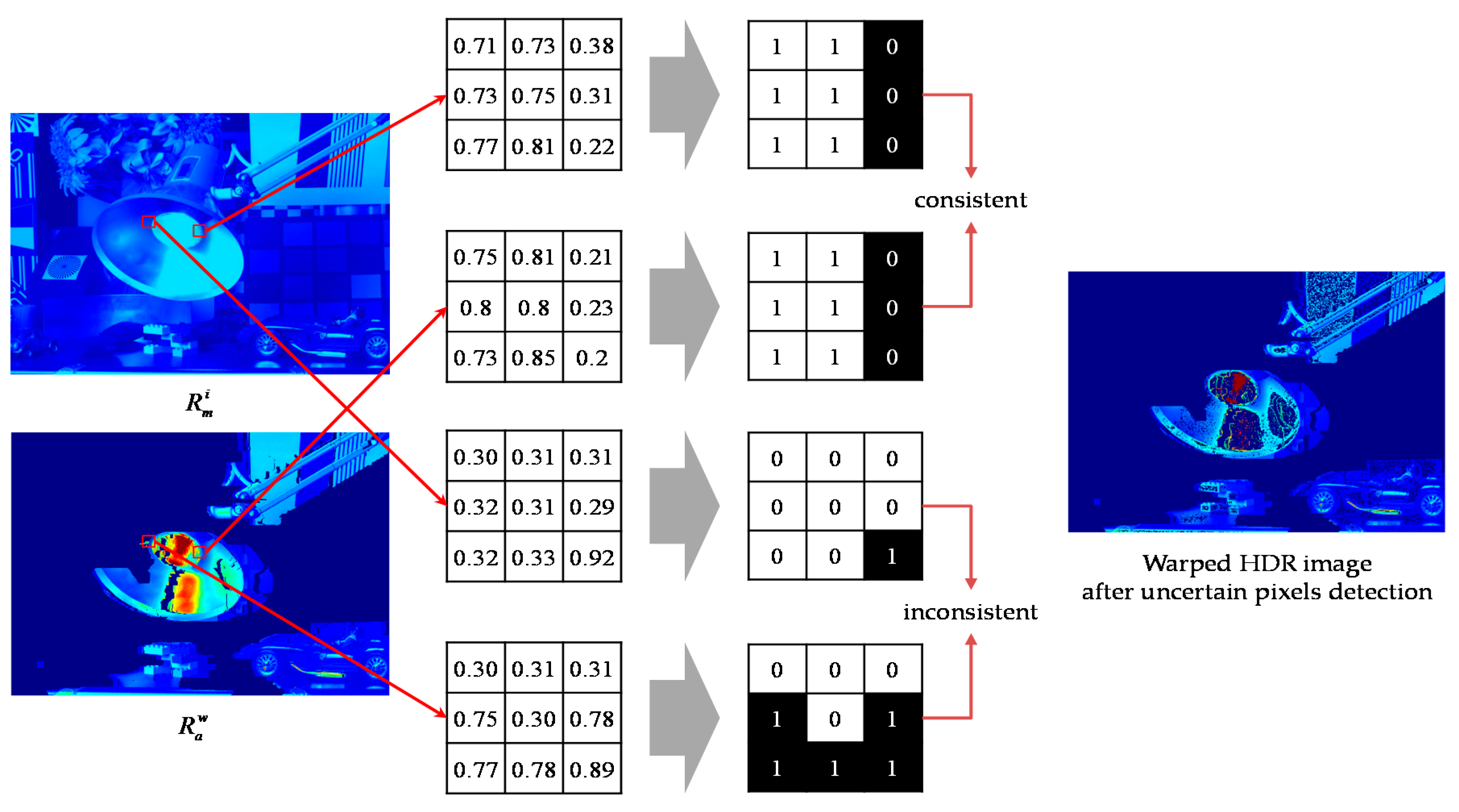

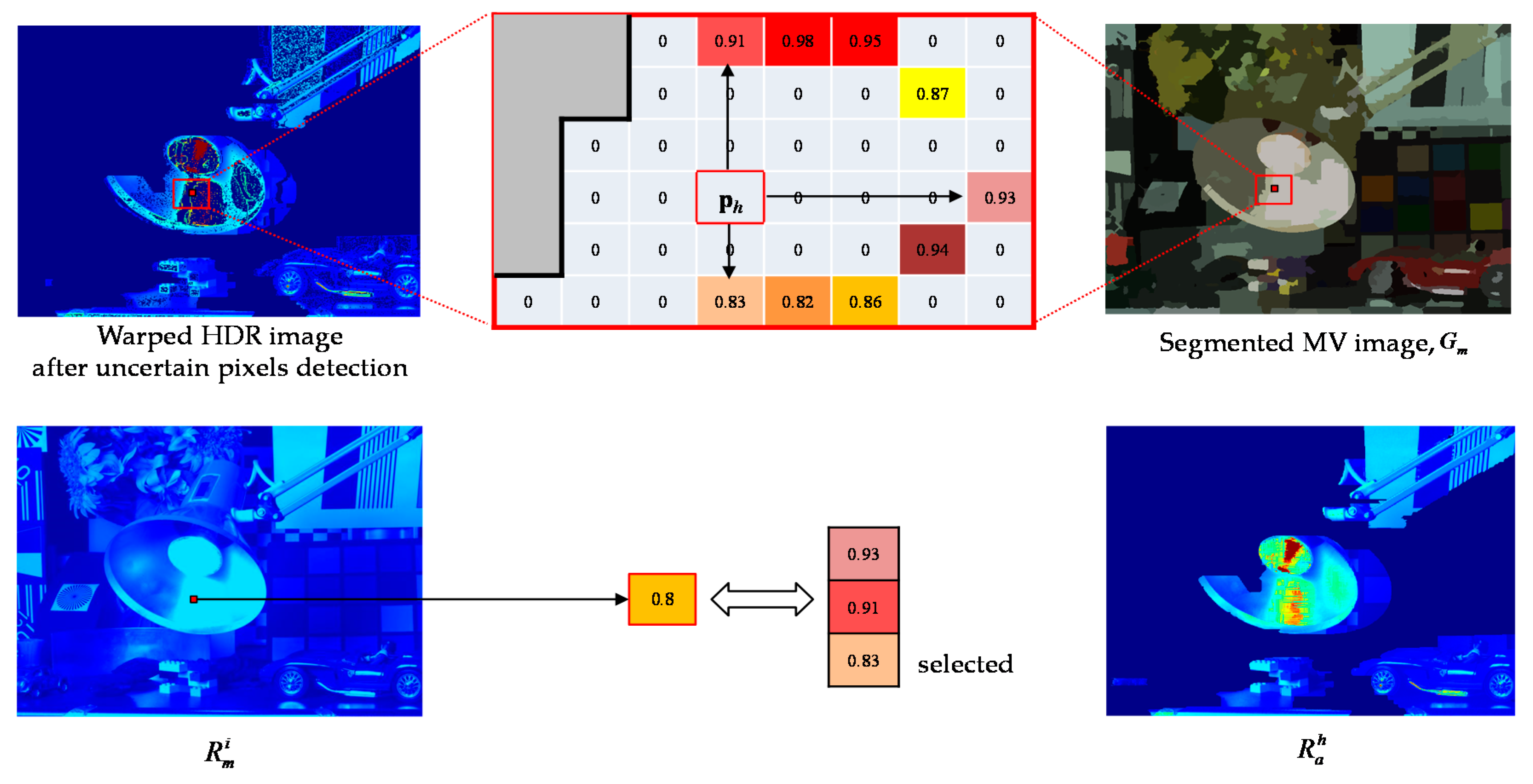

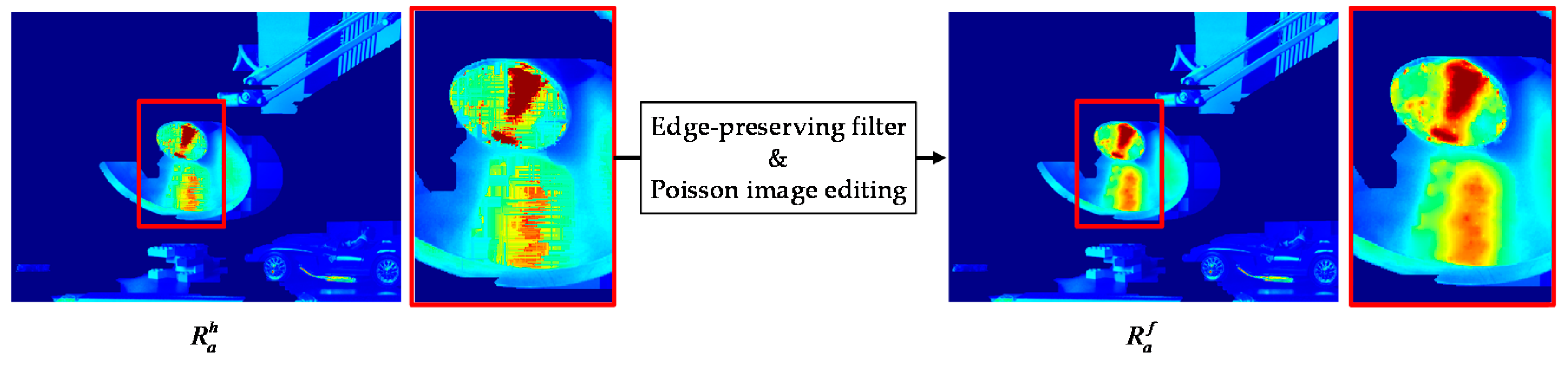

2.3. Hole-Filling for the Warped AV HDR Image

3. Results

3.1. Experimental Setup

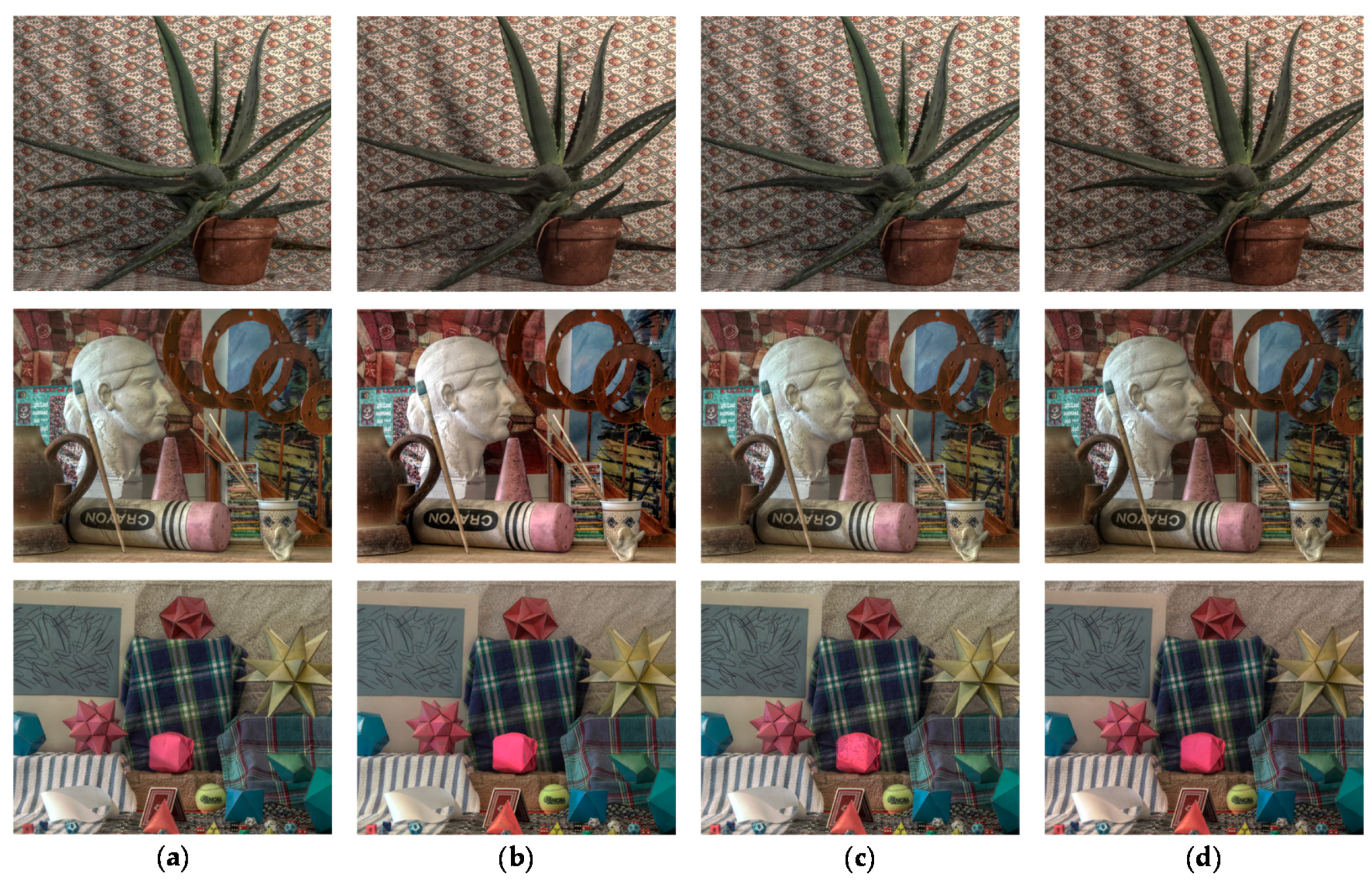

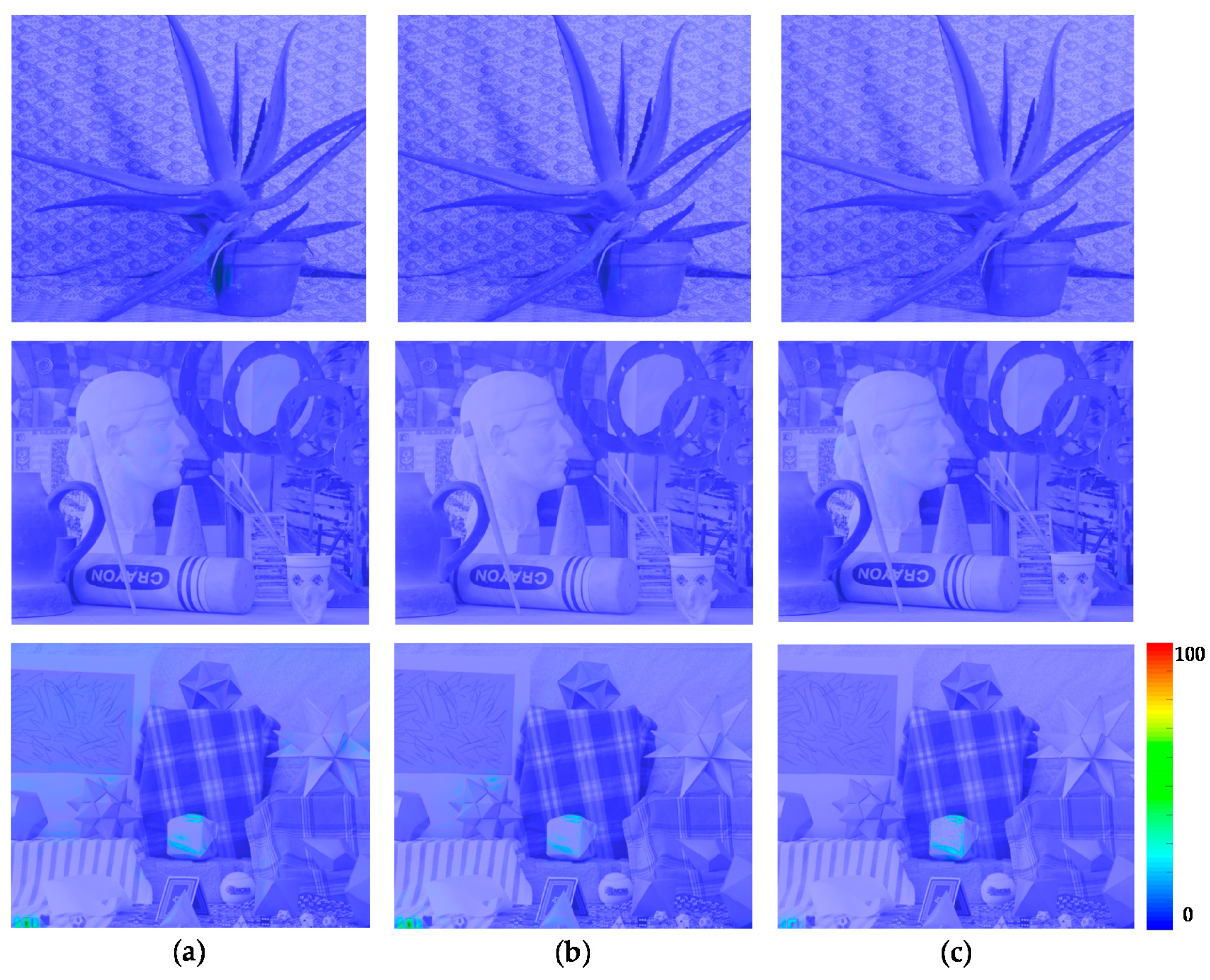

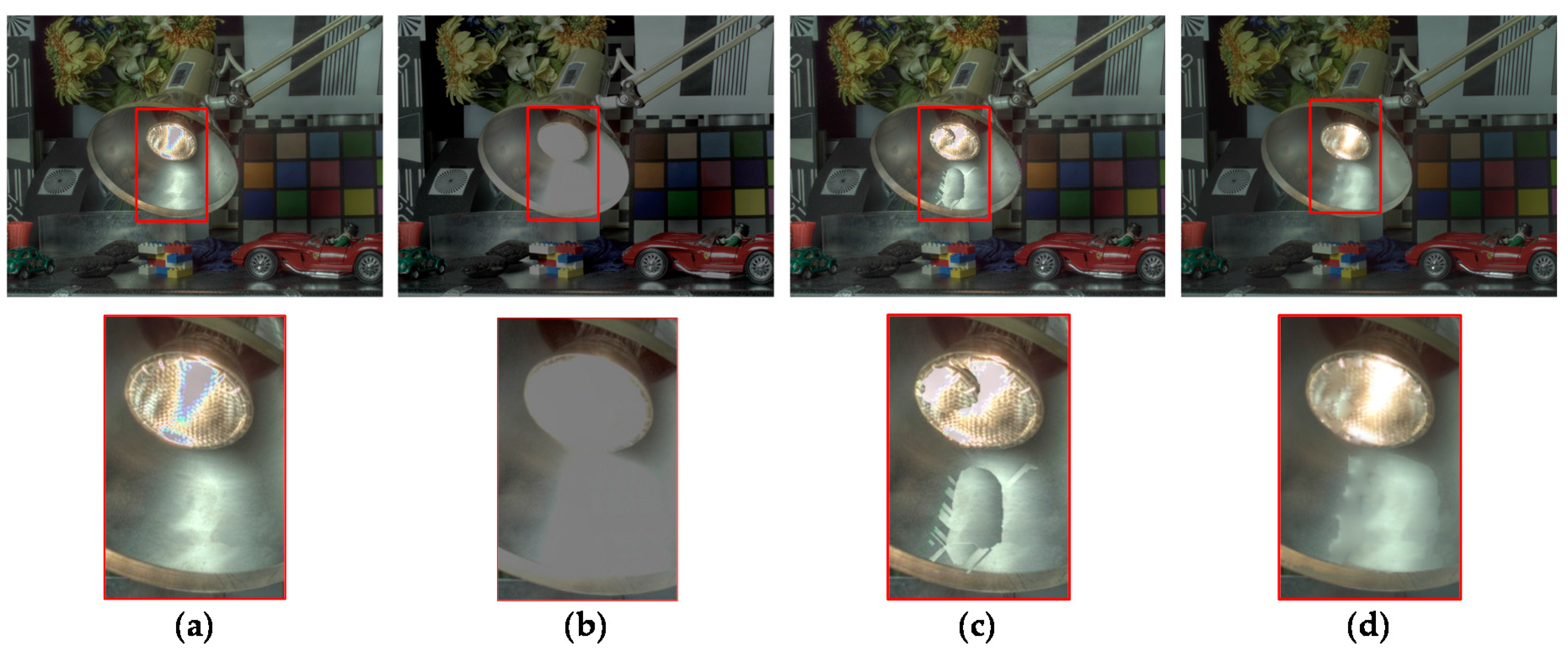

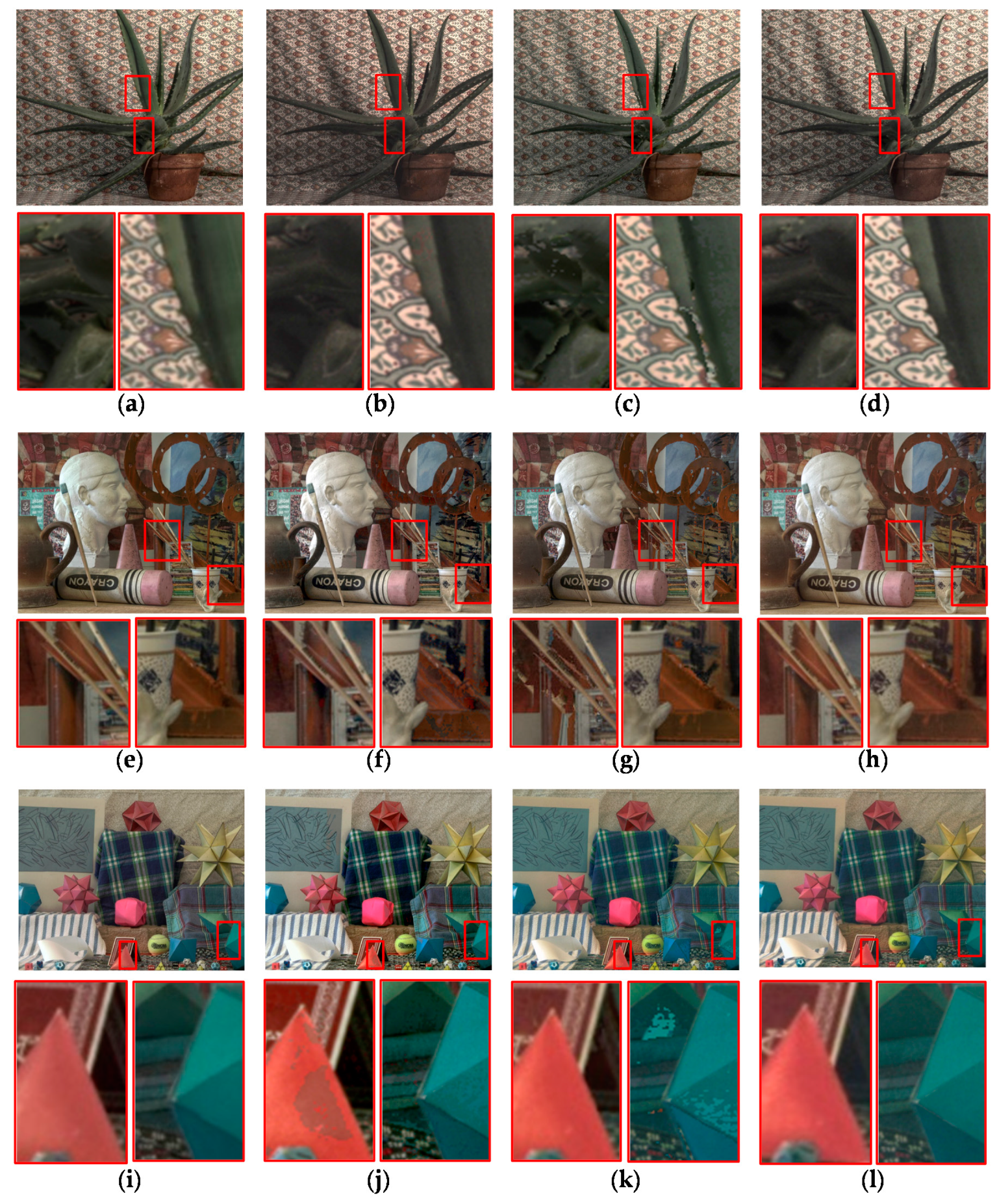

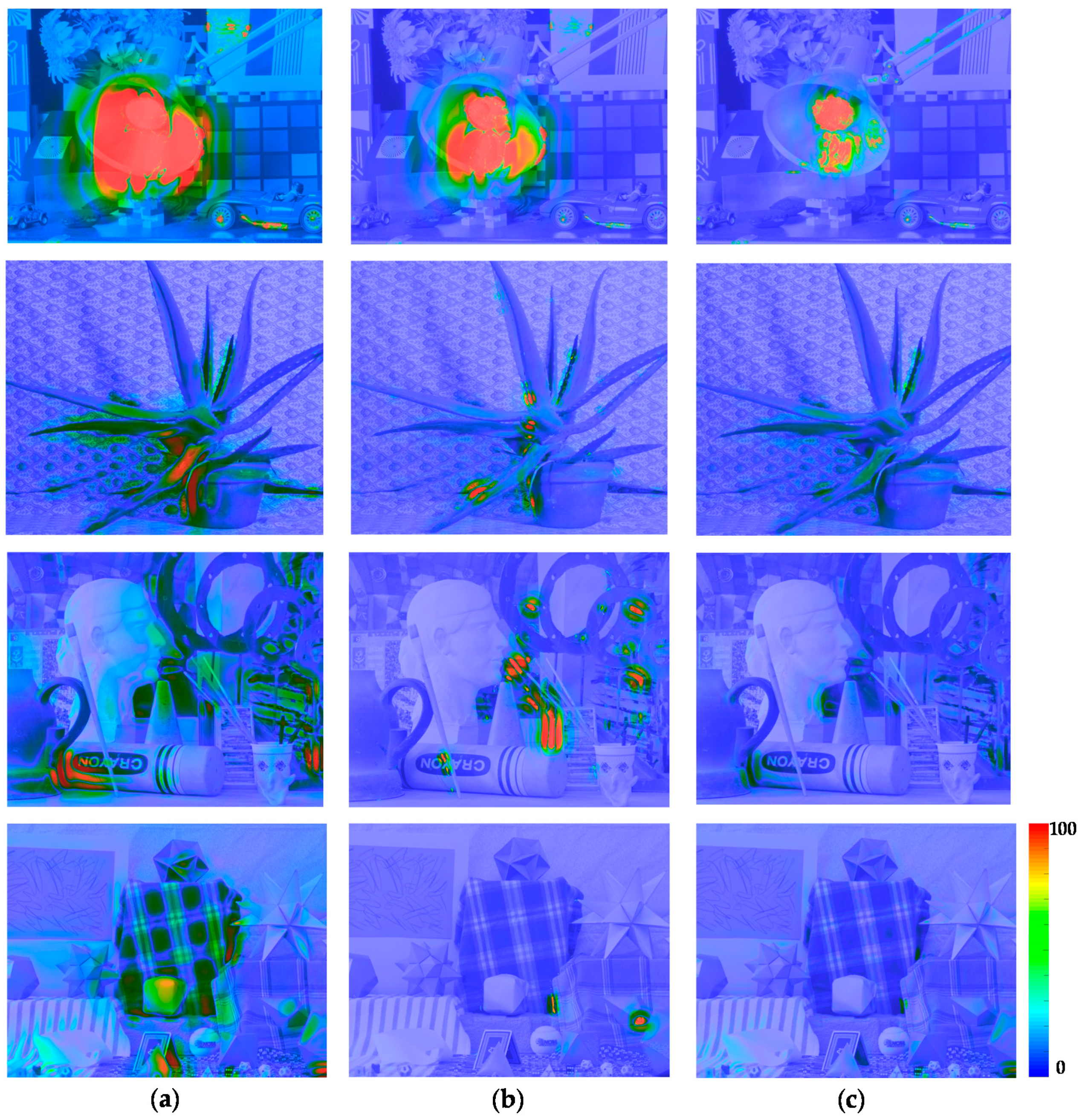

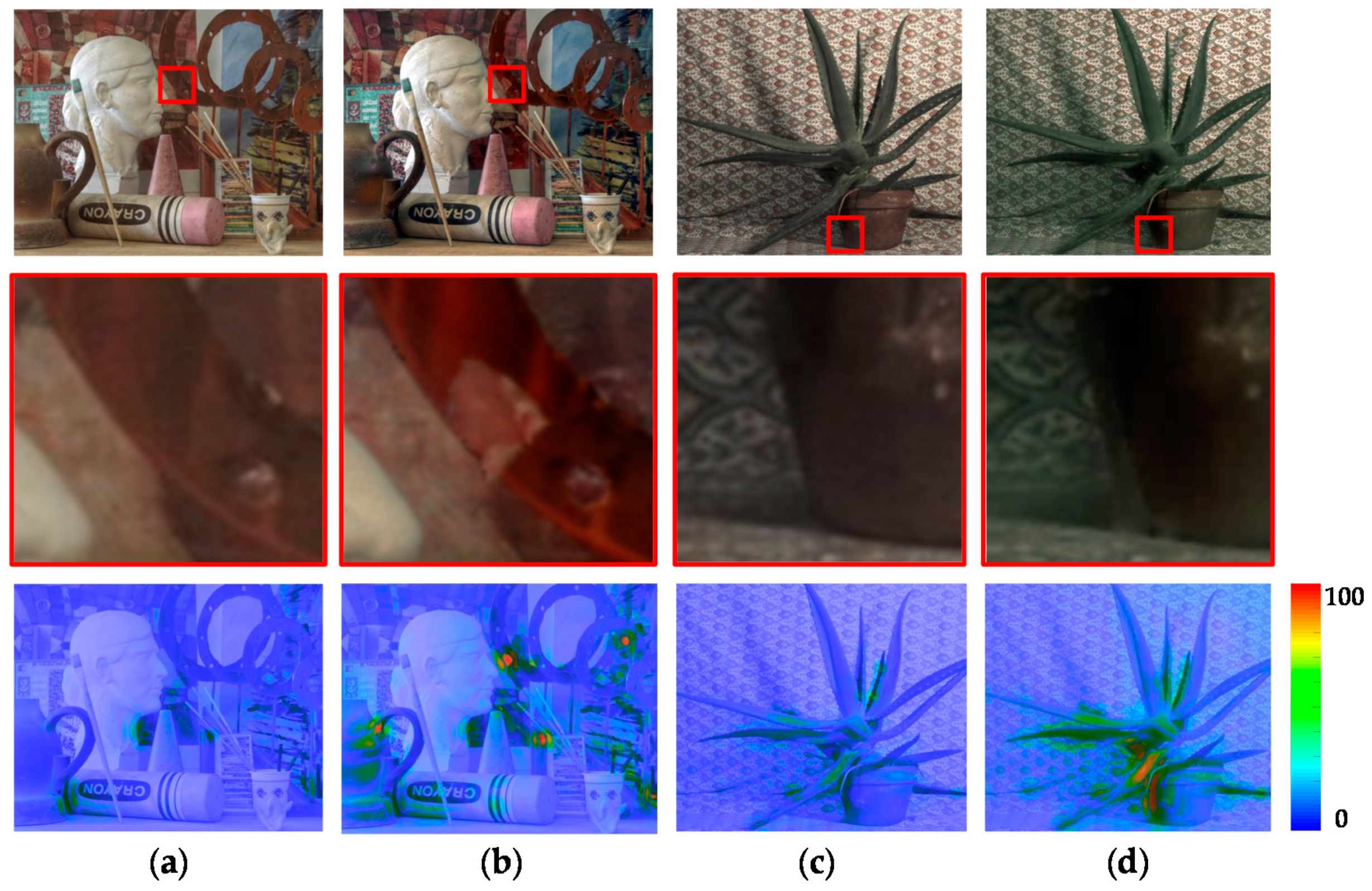

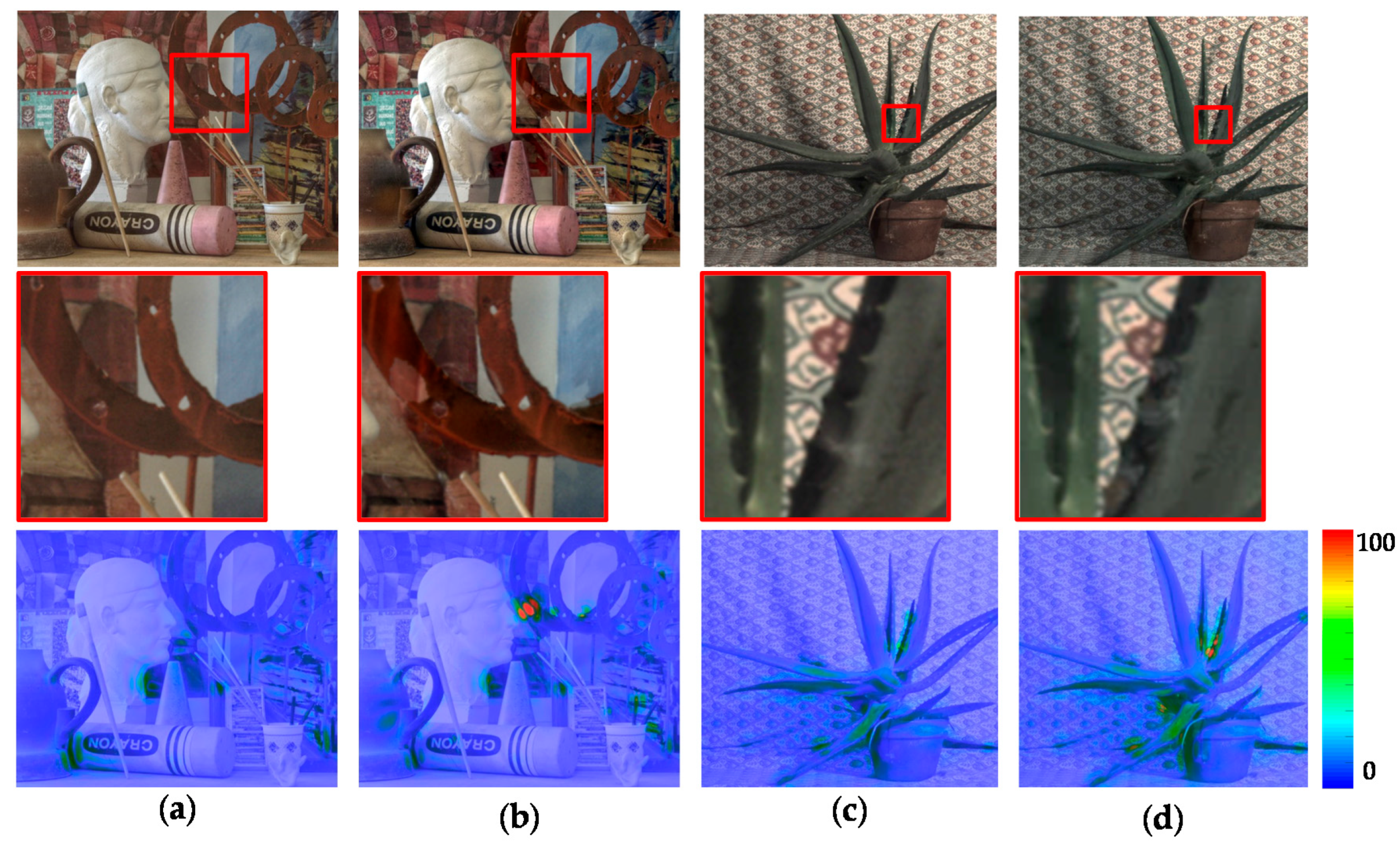

3.2. Evaluation of Performance

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Shafie, S.; Kawahito, S.; Itoh, S. A dynamic range expansion technique for CMOS image sensors with dual charge storage in a pixel and multiple sampling. Sensors 2008, 8, 1915–1926.

2. Shafie, S.; Kawahito, S.; Halin, I.A.; Hasan, W.Z.W. Non-linearity in wide dynamic range CMOS image sensors utilizing a partial charge transfer technique. Sensors 2009, 9, 9452–9467.

3. Martínez-Sánchez, A.; Fernández, C.; Halin, I.A.; Navarro, P.J.; Iborra, A. A Novel Method to Increase LinLog CMOS Sensors’ Performance in High Dynamic Range Scenarios. Sensors 2011, 11, 8412–8429.

4. Mertens, T.; Kautz, J.; Van Reeth, F. Exposure fusion: A simple and practical alternative to high dynamic range photography. Comput. Graph. Forum 2009, 28, 161–171.

5. Gallo, O.; Gelfand, N.; Chen, W.-C.; Tico, M.; Pulli, K. Artifact-free high dynamic range imaging. In Proceedings of the IEEE International Conference on Computational Photography, San Francisco, CA, USA, 16–17 April 2009; pp. 1–7.

6. Li, S.; Kang, X. Fast multi-exposure image fusion with median filter and recursive filter. IEEE Trans. Consum. Electron. 2012, 58, 626–632.

7. Zhang, W.; Cham, W.-K. Gradient-directed multiexposure composition. IEEE Trans. Image Process. 2012, 21, 2318–2323.

8. Jacobs, K.; Loscos, C.; Ward, G. Automatic high dynamic range image generation for dynamic scenes. IEEE Comput. Graph. Appl. 2008, 28, 84–93.

9. Sen, P.; Kalantari, N.K.; Yaesoubi, M.; Darabi, S.; Goldman, D.B.; Shechtman, E. Robust patch-based HDR reconstruction of dynamic scenes. ACM Trans. Graph. 2012, 31, 203.

10. Hu, J.; Gallo, O.; Pulli, K.; Sun, X. HDR Deghosting: How to Deal with Saturation? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1163–1170.

11. Lu, J.; Yang, H.; Min, D.; Do, M.N. Patch Match Filter: Efficient edge-aware filtering meets randomized search for fast correspondence field estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1854–1861.

12. Nayar, S.K.; Mitsunaga, T. High dynamic range imaging: Spatially varying pixel exposures. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 15 June 2000; pp. 472–479.

13. Selmanovic, E.; Debattista, K.; Bashford-Rogers, T.; Chalmers, A. Generating stereoscopic HDR images using HDR-LDR image pairs. ACM Trans. Appl. Percept. 2013, 10, 3.

14. Lin, H.-Y.; Chang, W.-Z. High dynamic range imaging for stereoscopic scene representation. In Proceedings of the 16th IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 4305–4308.

15. Sun, N.; Mansour, H.; Ward, R. HDR image construction from multi-exposed stereo LDR images. In Proceedings of the 17th IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2973–2976.

16. Batz, M.; Richter, T.; Garbas, J.-U.; Papst, A.; Seiler, J.; Kaup, A. High dynamic range video reconstruction from a stereo camera setup. Signal Process. Image Commun. 2014, 29, 191–202.

17. Tursun, O.T.; Akyuz, A.O.; Erdem, A.; Erdem, E. The State of the Art in HDR Deghosting: A Survey and Evaluation. Comput. Graph. Forum 2015, 34, 683–707.

18. Fusiello, A.; Trucco, E.; Verri, A. A compact algorithm for rectification of stereo pairs. Mach. Vis. Appl. 2000, 12, 16–22.

19. Debevec, P.E.; Malik, J. Recovering high dynamic range radiance maps from photographs. In Proceedings of the ACM SIGGRAPH 2008 classes, Los Angeles, CA, USA, 11–15 August 2008; p. 31.

20. Moore, A.P.; Prince, S.J.D.; Warrell, J.; Mohammed, U.; Jones, G. Superpixel lattices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

21. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient graph-based image segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

22. Akhavan, T.; Yoo, H.; Gelautz, M. Evaluation of LDR, tone mapped and HDR stereo matching using cost-volume filtering approach. In Proceedings of the European Signal Processing Conference, Lisbon, Portugal, 1–5 September 2014; pp. 1617–1621.

23. Zabih, R.; Woodfill, J. Non-parametric local transforms for computing visual correspondence. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; pp. 151–158.

24. Hirschmuller, H.; Scharstein, D. Evaluation of stereo matching costs on images with radiometric differences. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 1582–1599.

25. Yoon, K.-J.; Kweon, I.S. Adaptive support-weight approach for correspondence search. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 650–656.

26. Tombari, F.; Mattoccia, S.; Stefano, L.D. Segmentation-Based Adaptive Support for Accurate Stereo Correspondence. In Proceedings of the Pacific-Rim Symposium on Image and Video Technology, Santiago, Chile, 17–19 December 2007; pp. 427–438.

27. Min, D.; Lu, J.; Do, M.N. Joint histogram-based cost aggregation for stereo matching. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2539–2545.

28. Kang, S.-J.; Lee, D.-H.; Ji, S.-W.; Kim, C.-S.; Ko, S.-J. A novel method to generate the ghost-free wide dynamic range image. In Proceedings of the IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 7–11 January 2016; pp. 97–98.

29. Gastal, E.S.L.; Oliveira, M.M. Domain transform for edge-aware image and video processing. In Proceedings of the ACM SIGGRAPH 2011, Vancouver, BC, Canada, 7–11 August 2011; p. 69.

30. Perez, P.; Gangnet, M.; Blake, A. Poisson image editing. In Proceedings of the ACM SIGGRAPH 2003, San Diego, CA, USA, 27–31 July 2003; pp. 313–318.

31. Middlebury Stereo Vision Page. Available online: http://vision.middlebury.edu/stereo/ (accessed on 21 June 2017).

32. Mantiuk, R.; Myszkowski, K.; Seidel, H.-P. A perceptual framework for contrast processing of high dynamic range images. In Proceedings of the Second Symposium on Applied Perception in Graphics and Visualization, La Coruña, Spain, 26–28 August 2005; pp. 87–94.

33. Mantiuk, R.; Kim, K.J.; Rempel, A.G.; Heidrich, W. HDR-VDP-2: A calibrated visual metric for visibility and quality predictions in all luminance conditions. In Proceedings of the ACM SIGGRAPH 2011, Vancouver, BC, Canada, 7–11 August 2011; p. 40.

34. Robertson, M.; Borman, S.; Stevenson, R. Dynamic range improvement through multiple exposures. In Proceedings of the International Conference on Image Processing, Kobe, Japan, 24–28 October 1999; pp. 159–163.

| HDR-VDP-2 | Normal-Long Exposure Pairs: Lin and Coworkers’ Method [14] | Normal-Long Exposure Pairs: Batz and Coworkers’ Method [16] | Normal-Long Exposure Pairs: Proposed | Short-Long Exposure Pairs: Lin and Coworkers’ Method [14] | Short-Long Exposure Pairs: Batz and Coworkers’ Method [16] | Short-Long Exposure Pairs: Proposed |
|---|---|---|---|---|---|---|
| Art | 92.05 | 92.76 | 94.87 | 79.23 | 84.95 | 92.64 |
| Aloe | 91.51 | 92.81 | 94.30 | 78.89 | 83.81 | 90.57 |
| Moebius | 92.27 | 92.44 | 92.76 | 82.43 | 86.26 | 92.71 |
| IIS Jumble | | | | 38.81 | 45.56 | 58.01 |
| Average | 91.94 | 92.67 | 93.98 | 69.84 | 75.15 | 83.48 |
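
The Average row can be cross-checked from the per-scene scores. The sketch below is only an illustration, not part of the original evaluation. It assumes that the three IIS Jumble scores belong to the short-long columns, and that averages are rounded half-up to two decimals. Under those assumptions the normal-long averages cover three scenes and the short-long averages cover four. The names `NORMAL_LONG`, `SHORT_LONG`, and `column_means` are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# HDR-VDP-2 scores copied from the table above.
# Column order: Lin and coworkers [14], Batz and coworkers [16], Proposed.
NORMAL_LONG = {
    "Art": ("92.05", "92.76", "94.87"),
    "Aloe": ("91.51", "92.81", "94.30"),
    "Moebius": ("92.27", "92.44", "92.76"),
}
# Assumption: the IIS Jumble scores belong to the short-long columns.
SHORT_LONG = {
    "Art": ("79.23", "84.95", "92.64"),
    "Aloe": ("78.89", "83.81", "90.57"),
    "Moebius": ("82.43", "86.26", "92.71"),
    "IIS Jumble": ("38.81", "45.56", "58.01"),
}


def column_means(rows):
    """Return the per-method mean, rounded half-up to two decimals."""
    means = []
    for column in zip(*rows.values()):
        values = [Decimal(v) for v in column]
        mean = sum(values) / len(values)
        means.append(mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))
    return means


normal_long_means = column_means(NORMAL_LONG)
short_long_means = column_means(SHORT_LONG)
print("Normal-long averages:", [str(m) for m in normal_long_means])
print("Short-long averages: ", [str(m) for m in short_long_means])
```

Decimal arithmetic is used so that a value such as 75.145 rounds the same way on every platform. Both lists reproduce the Average row exactly, which supports the column assignment assumed for IIS Jumble.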

| HDR-VDP-2 | Art | Aloe | Moebius | IIS Jumble |
|---|---|---|---|---|
| Proposed | 92.64 | 90.57 | 92.71 | 58.01 |
| Proposed with conventional ICRF | 86.61 | 81.69 | 87.25 | 52.95 |
| Proposed without Rejection | 84.91 | 86.25 | 84.56 | 41.93 |
| Proposed without PIE | 86.44 | 86.66 | 87.78 | 49.57 |
| Proposed without Rejection & PIE | 82.48 | 85.81 | 82.63 | 36.22 |
| Proposed with conventional ICRF & without PIE | 82.26 | 78.18 | 85.90 | 49.88 |
| Proposed with conventional ICRF & without Rejection | 81.34 | 78.35 | 84.68 | 38.84 |
| Proposed with conventional ICRF & without Rejection and PIE | 78.17 | 77.95 | 81.87 | 35.96 |
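
One way to read the ablation table is to compare each single-component variant against the full method. The sketch below is an illustrative reading of the tabulated numbers, not an analysis taken from the paper. It computes the mean HDR-VDP-2 drop over the four scenes for the three variants that change one component. The names `SCORES`, `mean_drop`, and `largest_drop` are hypothetical.

```python
# HDR-VDP-2 scores copied from the ablation table above.
# Scene order: Art, Aloe, Moebius, IIS Jumble.
SCORES = {
    "Proposed": (92.64, 90.57, 92.71, 58.01),
    "with conventional ICRF": (86.61, 81.69, 87.25, 52.95),
    "without Rejection": (84.91, 86.25, 84.56, 41.93),
    "without PIE": (86.44, 86.66, 87.78, 49.57),
}


def mean(values):
    """Arithmetic mean of a sequence of scores."""
    return sum(values) / len(values)


baseline = mean(SCORES["Proposed"])

# Mean score lost, relative to the full method, when one component changes.
mean_drop = {
    name: baseline - mean(values)
    for name, values in SCORES.items()
    if name != "Proposed"
}

largest_drop = max(mean_drop, key=mean_drop.get)
for name, drop in sorted(mean_drop.items(), key=lambda item: -item[1]):
    print(f"{name}: mean drop {drop:.2f}")
print("Largest mean drop:", largest_drop)
```

A plain mean over four scenes is a coarse summary, because the IIS Jumble scores are much lower than the others and weigh heavily on the result. Per-scene differences can be inspected the same way.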

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, W.-J.; Ji, S.-W.; Kang, S.-J.; Jung, S.-W.; Ko, S.-J. Stereo Vision-Based High Dynamic Range Imaging Using Differently-Exposed Image Pair. Sensors 2017, 17, 1473. https://doi.org/10.3390/s17071473
