Visual Servoing for an Autonomous Hexarotor Using a Neural Network Based PID Controller

Abstract

1. Introduction

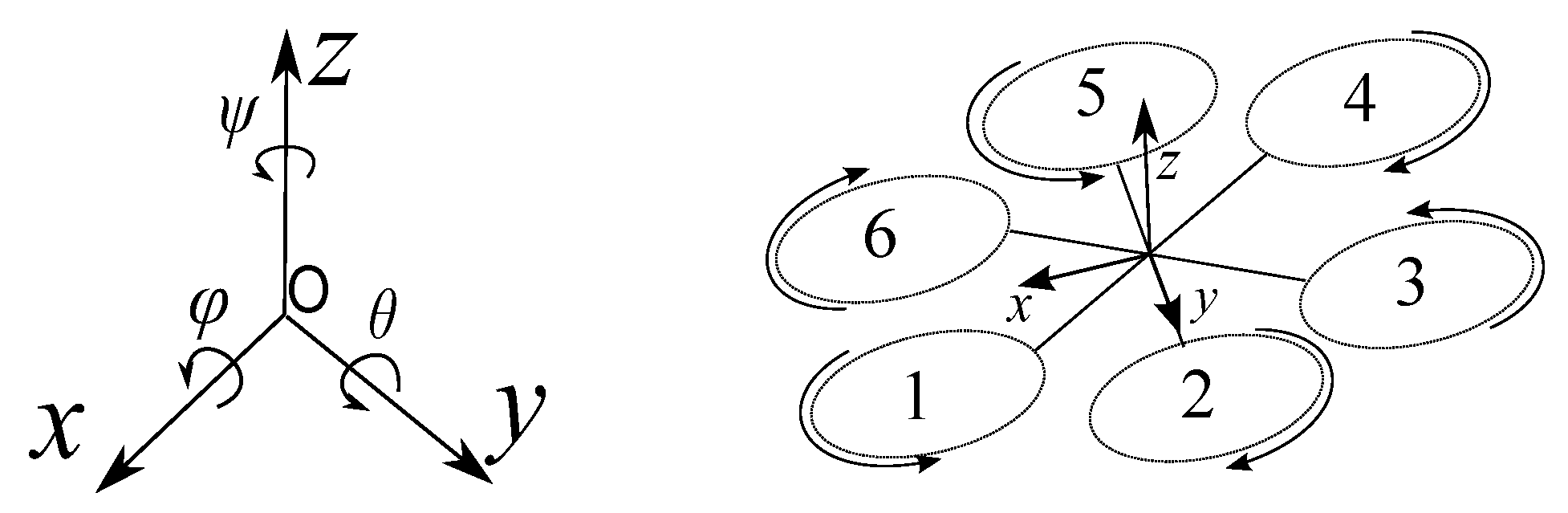

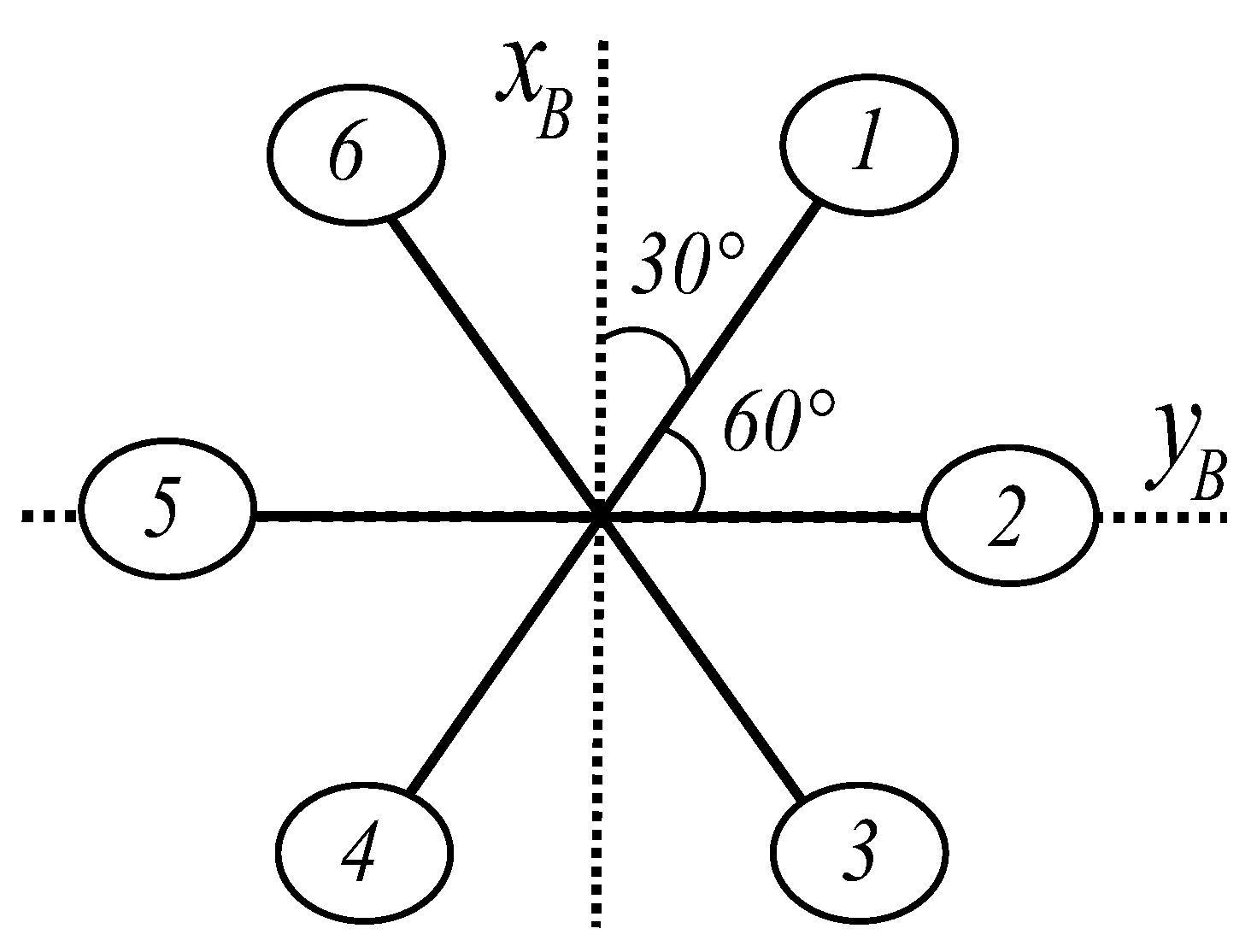

2. Hexarotor Dynamic Modeling

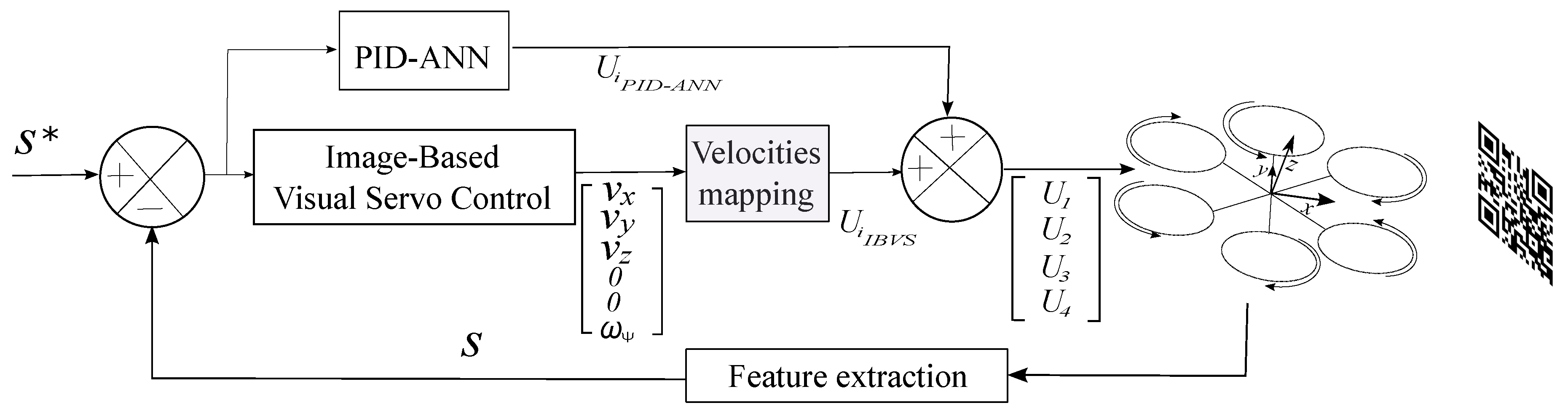

3. Visual Servo Control

4. Control of Hexarotor

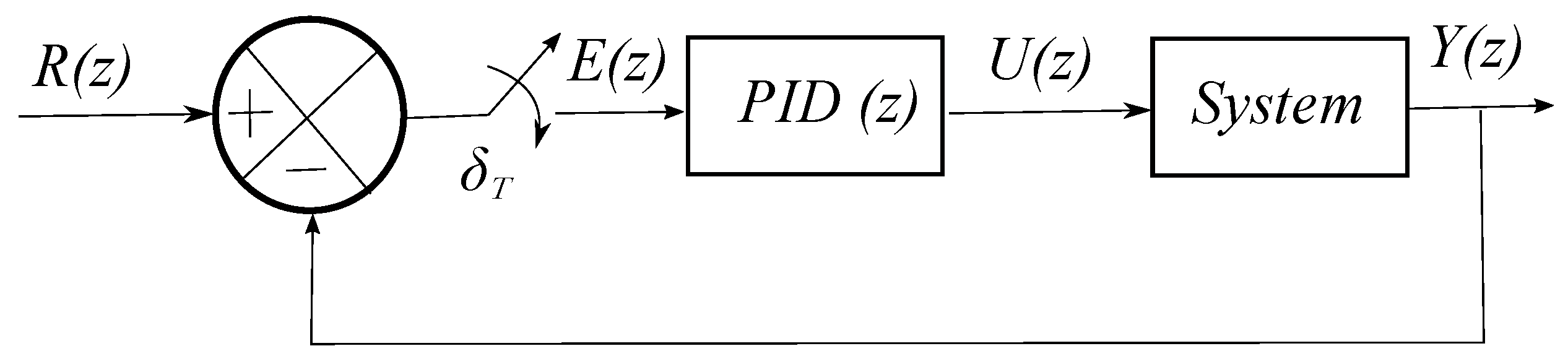

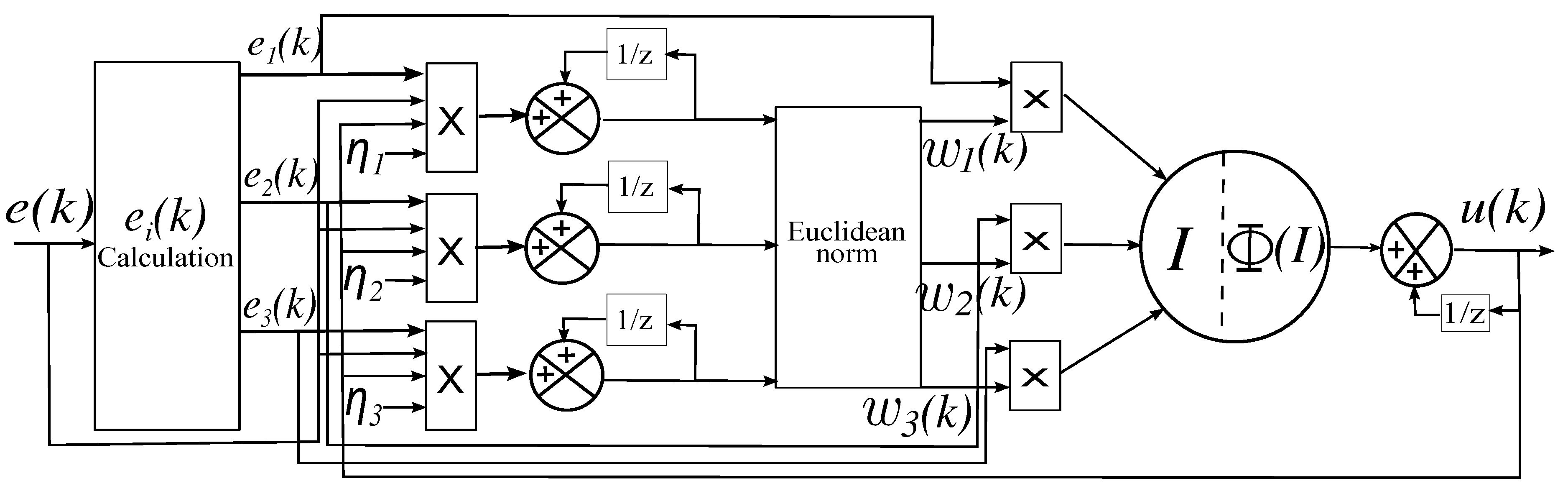

5. Neural Network Based PID

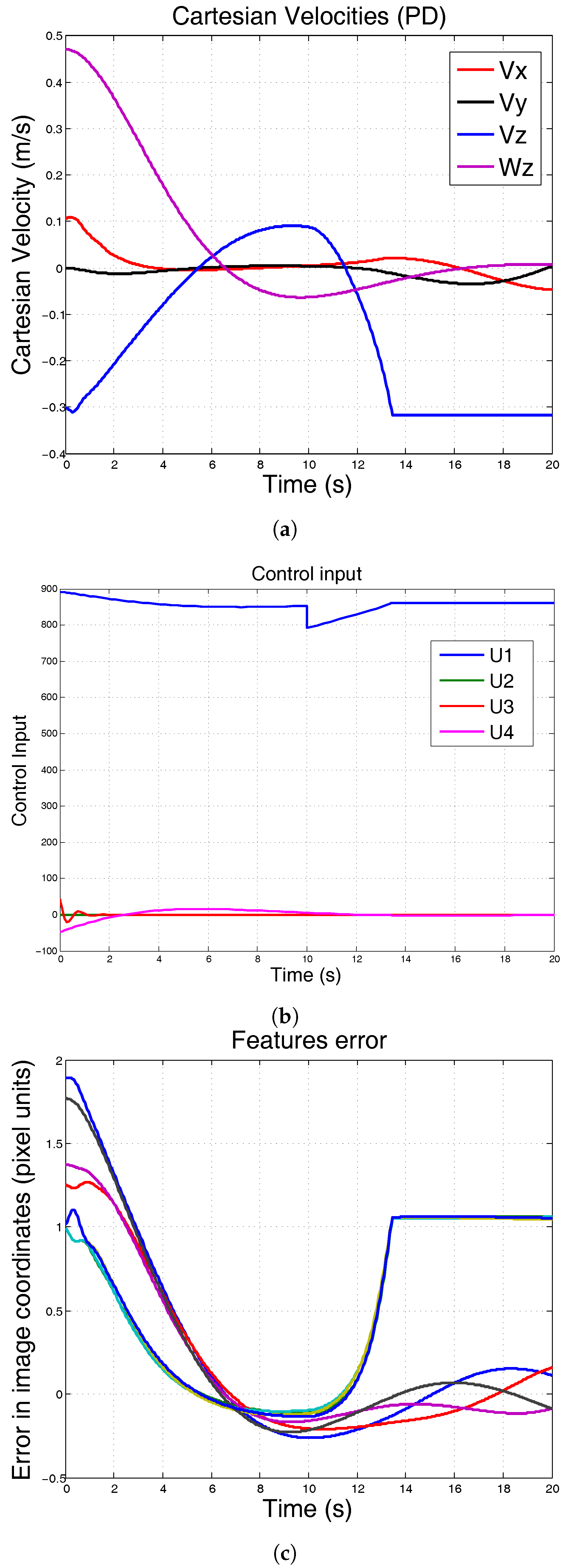

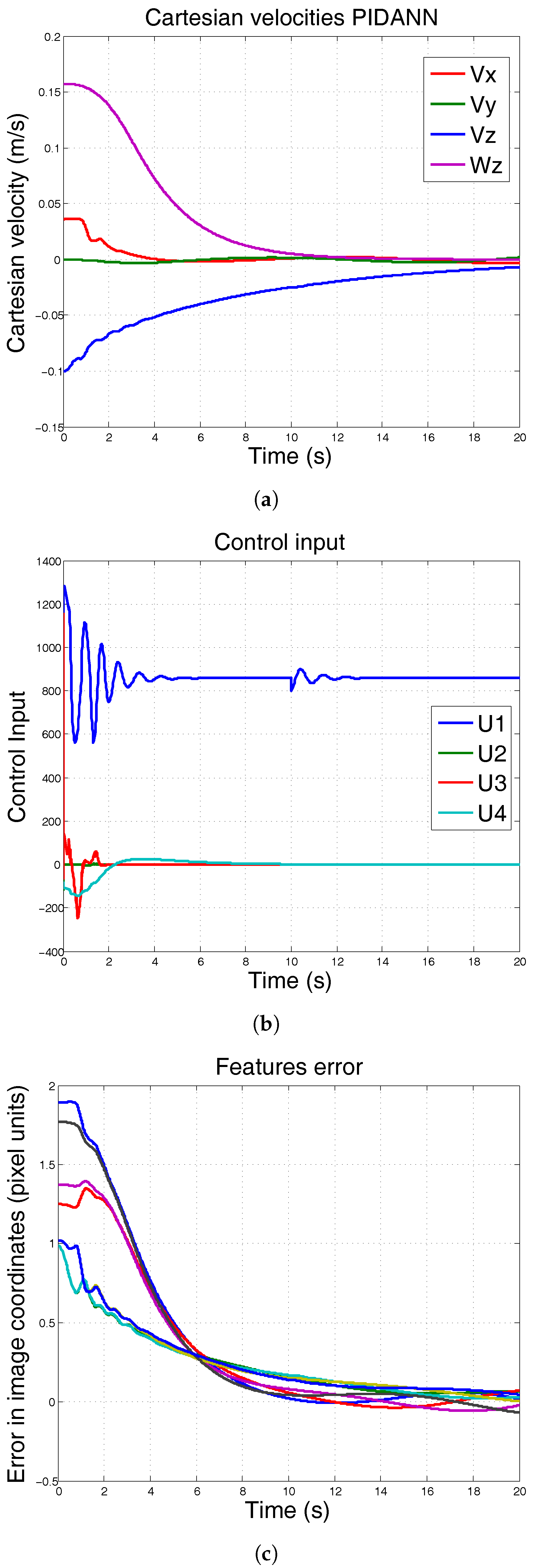

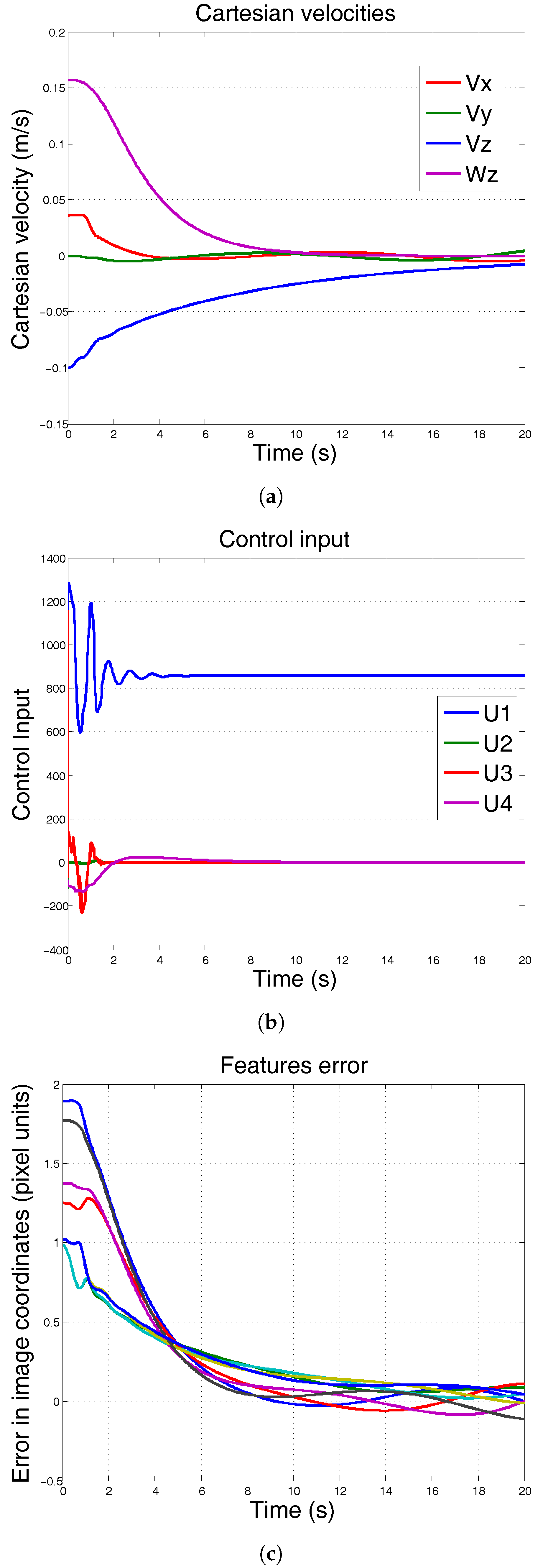

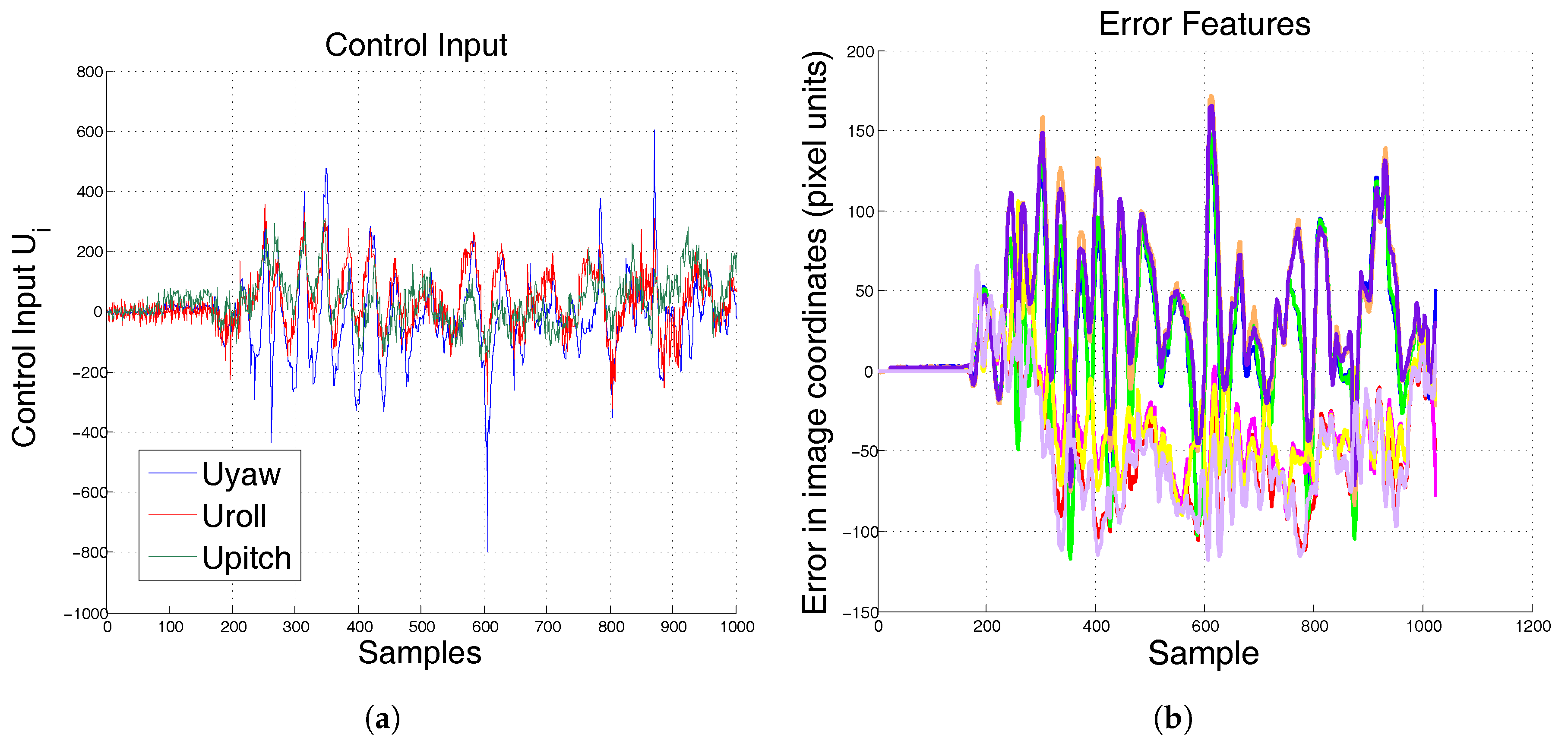

6. Simulation Results

7. Experimental Results

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| IBVS | Image Based Visual Servo |
| VTOL | Vertical Take-Off and Landing |
| PID | Proportional Integral Derivative |
| ANN | Artificial Neural Network |
| IMU | Inertial Measurement Unit |
| PD | Proportional Derivative |
| RMSE | Root Mean Square Error |
| AAD | Average Absolute Deviation |

| Root Mean Square Error (Pixel Units) | | | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PID | 1741.9 | 393.1 | 1712.0 | 339.1 | 1677.5 | 329.2 | 1723.9 | 380.1 |
| ANNPID | 212.416 | 66.549 | 191.1447 | 57.4298 | 824.085 | 59.7654 | 861.8207 | 62.6225 |

| Average Absolute Deviation (Pixel Units) | | | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PID | 55.2177 | 9.6437 | 54.0636 | 10.5457 | 52.4827 | 10.704 | 53.069 | 9.6577 |
| ANNPID | 43.2281 | 7.4259 | 42.5686 | 11.3874 | 46.6832 | 11.484 | 47.5576 | 7.5503 |
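For readers unfamiliar with the two metrics reported above, the following is a minimal sketch of their textbook definitions: RMSE as the square root of the mean squared error, and AAD as the mean absolute deviation of the samples about their own mean. It is an illustration under those assumed definitions, not necessarily the exact procedure used to produce the table. The function names and the sample values are hypothetical.

```python
import math


def rmse(errors):
    """Root mean square of a sequence of error samples."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def aad(values):
    """Average absolute deviation of the samples about their mean."""
    mean = sum(values) / len(values)
    return sum(abs(v - mean) for v in values) / len(values)


# Hypothetical pixel errors for one image coordinate (not data from the paper).
pixel_errors = [3.0, -4.0, 0.0, 5.0, -4.0]
print("RMSE:", rmse(pixel_errors))
print("AAD:", aad(pixel_errors))
```

Under these definitions, RMSE weights large excursions more heavily than AAD does, so a few large transient errors can raise RMSE sharply while leaving AAD comparatively small.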

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lopez-Franco, C.; Gomez-Avila, J.; Alanis, A.Y.; Arana-Daniel, N.; Villaseñor, C. Visual Servoing for an Autonomous Hexarotor Using a Neural Network Based PID Controller. Sensors 2017, 17, 1865. https://doi.org/10.3390/s17081865
