1. Introduction

The need for more advanced human identification using biometric features has become a center of attention in fields such as security, surveillance, and forensics [1]. Alongside traditional biometric identifiers such as facial features, human gait is especially interesting because research has shown that the way a person moves can be used for identification purposes [2]. The gait modality offers several advantages: it is unobtrusive, effective from a distance, and hard to spoof, as it is difficult to continuously manipulate one’s own gait [2]. Additionally, features derived from gait biometrics can be used to estimate secondary biometric characteristics such as age, gender, height, weight, or even emotional state [3].

There are several techniques used to observe an individual’s gait, including video data [4], physical markers [5], force plates [6], and electrodes attached to the skin or inserted into the muscle (EMG) [7]. However, most of these techniques are either too intrusive or not robust enough for applications related to surveillance or forensics because they involve too much physical interaction with the individual and/or introduce a large amount of error as a result of low-quality source data.

As a solution to this problem, this paper examines the use of depth cameras such as the Microsoft Kinect, which is capable of recording proximity and depth data in real time. In the existing literature, approaches for gait analysis can be separated into two categories: model-free methods and model-based methods [8]. Model-free methods are based on images that often consist of a human silhouette changing over time. Popular approaches within this category include gait energy images (GEIs) [8], frequency-domain features (FDFs) [9], and chrono-gait images [10]. These approaches have recently gained traction because they are simple yet effective. However, one common problem is that they tend to be quite sensitive to viewpoint and scale, which can lead to errors in noisy or uncontrolled environments [11].
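To make the model-free idea concrete, the following is a minimal sketch of a gait energy image, which is commonly described as the pixel-wise average of aligned binary silhouettes over a gait cycle. It is an illustration only and is not the method used in this paper; the function name `gait_energy_image` and the toy 3×3 frames are assumptions introduced here.

```python
from typing import List

# A binary silhouette frame: 1 = body pixel, 0 = background (assumed layout).
Frame = List[List[int]]


def gait_energy_image(silhouettes: List[Frame]) -> List[List[float]]:
    """Average aligned, same-sized binary silhouettes pixel by pixel."""
    if not silhouettes:
        raise ValueError("at least one silhouette is required")
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    for frame in silhouettes:
        if len(frame) != rows or any(len(row) != cols for row in frame):
            raise ValueError("all silhouettes must share the same size")
    n = len(silhouettes)
    return [
        [sum(frame[r][c] for frame in silhouettes) / n for c in range(cols)]
        for r in range(rows)
    ]


# Two toy frames (hypothetical data): the legs move, the torso stays in place.
frames = [
    [[0, 1, 0], [0, 1, 0], [1, 0, 1]],
    [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
]
gei = gait_energy_image(frames)
```

Pixels that are always occupied keep a value of 1, while pixels occupied in only some frames take fractional values. Because the average is taken in image coordinates, a change in camera angle or subject distance changes the result, which is one way to see the viewpoint and scale sensitivity noted above.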

On the other hand, model-based methods are based on the principle of tracking and modeling the body, which is represented by multiple parameters such as joint coordinates and their corresponding temporal relations. These parameters can be plotted over time and used to derive patterns directly related to specific gait signatures. The resulting gait signature profiles can be used for identification and recognition, depending on the application. Additionally, unlike model-free methods, model-based methods are generally view invariant as well as scale independent [11,12].

To classify individuals based on their gait patterns, techniques such as hidden Markov models (HMM) [13], support vector machines (SVM) [14], and neural networks (NN) [15] are often utilized. Additionally, since gait is a sequence of temporal data, other types of classifiers such as dynamic Bayesian networks (DBN) have also been used to assess the probability of an individual experiencing abnormal gait conditions [16].

In applications such as forensics, surveillance, or rehabilitation, results often rely on identifying evidence to describe an individual. Commonly used biometrics often assume the availability of a database or a watchlist. While databases of faces and fingerprints are commonly used in practice, no databases of “gait patterns” derived from video recordings are commonly available. However, the motivation of this paper comes from the knowledge that forensic databases may contain names and records of an individual, such as a mugshot, and/or other information pertaining to certain traits including scars, tattoos, approximate height, weight, or gait abnormality (e.g., a limp in the right leg).

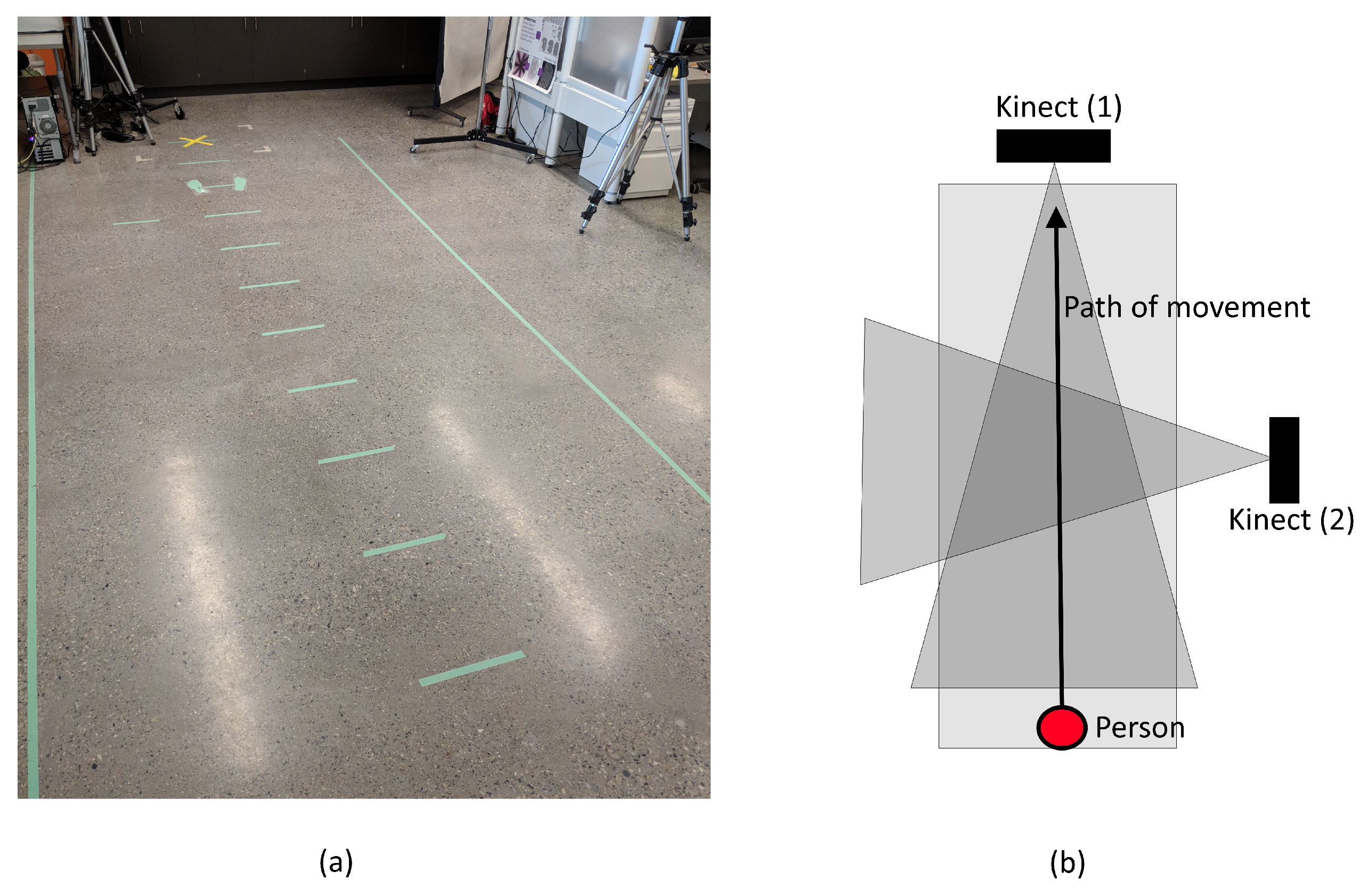

Thus, our study focuses on utilizing gait biometric characteristics, such as a limp, to improve screening applications using inexpensive sensors, which can then be combined with other common biometrics to assist with verifying an individual’s identity. The main hypothesis of this paper is formulated as follows: human gait features derived using RGB-depth (RGB-D) cameras can be used to distinguish between different gait types such as normal versus abnormal (e.g., limping). To test this hypothesis, this paper proposes using the structure shown in Figure 1.

The novelty of the proposed approach includes the design of a DBN that is based on the information collected from RGB-D sensory data. This design is then used to generate decisions regarding an individual’s gait status. The proposed DBN is tested against other state-of-the-art classifiers that also conduct gait type classification [17,18]. In [17], the authors attempted to classify Parkinson’s disease in subjects, while, in [18], the authors performed automatic recognition of altered gait using wearable inertial sensors for conditions such as Huntington’s disease and for assessing the risk of a potential stroke.

The DBN was chosen over other classifiers because of its properties, such as causality and the ability to model temporal sequences such as gait. For example, in [16], the authors demonstrated the usefulness of applying probabilistic models such as a DBN to assess gait. Additionally, the authors in [13] presented a two-level DBN layered time series model (LTSM) to improve on other gait classification methods such as HMM and dynamic texture models (DTM). The results from [13,16] further support the validity of the proposed model and its potential for other fields such as biological sequence analysis and activity recognition.

Nonetheless, a DBN is just one of many methods that can be used to model temporal relations. Other state-of-the-art modeling techniques, such as the generative probabilistic model with Allen’s interval-based relations, have been created to effectively model complex actions using various combinations of atomic actions [19]. Atomic actions are primitive events inferred from sensor data that cannot be decomposed further into a lower-level semantic form [20]. These actions represent an intermediate form between low-level raw sensor data and high-level complex activities. However, it is assumed that the atomic actions are already recognized and labeled in advance [19]. In this paper, the features used for gait detection are automatically labeled by the proposed system when fed raw sensor data from the Kinect camera. Acquiring feature data in this way eliminates the need to assume feature labels and provides insight into building a system based on real data.

The rest of this paper is organized as follows. A description of our method is presented in Section 2. Experimental results are described in Section 3, and a discussion of the results is presented in Section 4. The concluding remarks and future plans are presented in Section 5.

3. Results

For this study, two experiments were conducted based on the partitioned data defined in Table 3. For the first experiment, the abnormal classes (left limp and right limp) were combined into one class (abnormal). This allowed for easy comparison with the other classifiers tested. To test the network, we used the Hugin Expert Lite model, which allowed for a maximum of three time slices. Unfortunately, because of this software limitation, we were not able to train the DBN on a longer time series (more than three time slices). Therefore, some accuracy might have been sacrificed for the sake of simplicity. However, overall results remain promising. To calculate the Correct Classification Rate (CCR), sensitivity, and specificity, the following formulas were used:

$$\mathrm{CCR} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Sensitivity} = \frac{TP}{TP + FN}, \qquad \mathrm{Specificity} = \frac{TN}{TN + FP},$$

where $TP$ is the number of true positives (correctly predicting the gait type of an individual as normal); $FN$ is the number of false negatives (normal gait incorrectly predicted as abnormal); $TN$ is the number of true negatives (correctly predicting an abnormality (left limp or right limp)); and $FP$ is the number of false positives (abnormal gait types being predicted as normal).
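As a quick illustration of how these three measures follow from the four counts, the sketch below uses the standard definitions CCR = (TP + TN) / (TP + TN + FP + FN), sensitivity = TP / (TP + FN), and specificity = TN / (TN + FP), with normal gait treated as the positive class as in the text. The function name `binary_metrics` and the counts passed to it are hypothetical and are not results from this study.

```python
import math
from typing import Tuple


def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> Tuple[float, float, float]:
    """Return (CCR, sensitivity, specificity) from confusion counts.

    Positive class = normal gait, negative class = abnormal gait.
    """
    total = tp + fn + tn + fp
    if total == 0:
        raise ValueError("at least one sample is required")
    ccr = (tp + tn) / total
    # Guard against a class that never occurs in the test set.
    sensitivity = tp / (tp + fn) if (tp + fn) else math.nan
    specificity = tn / (tn + fp) if (tn + fp) else math.nan
    return ccr, sensitivity, specificity


# Hypothetical counts chosen only to make the arithmetic easy to follow.
ccr, sensitivity, specificity = binary_metrics(tp=40, fn=10, tn=45, fp=5)
```

With these made-up counts, 85 of 100 samples are classified correctly, 40 of 50 normal samples are recognized as normal, and 45 of 50 abnormal samples are recognized as abnormal.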

The corresponding performance evaluation results are presented in Table 7 and Table 8. In addition to the DBN, four other classifiers were used for comparison: K-nearest-neighbors (KNN), support vector machines (SVM), naïve Bayes (NB), and linear discriminant analysis (LDA). All of the mentioned classifiers were trained and tested using five-fold cross-validation on Dataset A in Table 3, which consisted of locally collected data. In Table 7, the best results were observed with the proposed DBN, while the worst were seen with LDA. A comparable CCR was also obtained with the NB classifier. However, the CCR for NB was still 10% lower than that of the DBN. As seen from the results, the DBN is effective at distinguishing normal from abnormal gait for Dataset A.
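The five-fold protocol can be sketched as follows. This is a minimal, standard-library illustration and not the code used in the study: the stratified split (keeping class proportions similar in each fold) is an assumption, since the text only states that five-fold cross-validation was used, and the helper names, the one-dimensional toy features, and the 1-nearest-neighbor stand-in classifier are all hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Iterator, List, Sequence, Tuple


def stratified_k_fold(
    labels: Sequence[str], k: int = 5, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets a similar share.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train, test


def one_nn(train_x: List[float], train_y: List[str], test_x: List[float]) -> List[str]:
    """Stand-in classifier: label of the closest training sample."""
    return [
        train_y[min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))]
        for x in test_x
    ]


def cross_validated_ccr(
    x: Sequence[float],
    y: Sequence[str],
    fit_predict: Callable[[List[float], List[str], List[float]], List[str]],
    k: int = 5,
) -> float:
    """Mean correct classification rate over k folds."""
    fold_ccr = []
    for train, test in stratified_k_fold(y, k):
        predicted = fit_predict(
            [x[i] for i in train], [y[i] for i in train], [x[i] for i in test]
        )
        correct = sum(p == y[i] for p, i in zip(predicted, test))
        fold_ccr.append(correct / len(test))
    return sum(fold_ccr) / len(fold_ccr)


# Toy, well-separated data (hypothetical): 10 normal and 10 abnormal samples.
toy_x = [i / 10 for i in range(10)] + [10 + i / 10 for i in range(10)]
toy_y = ["normal"] * 10 + ["abnormal"] * 10
mean_ccr = cross_validated_ccr(toy_x, toy_y, one_nn)
```

Each sample is used for testing exactly once, and the reported figure is the average CCR across the five held-out folds. Any of the compared classifiers could be substituted for `one_nn` as long as it follows the same `fit_predict` signature.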

For the second experiment, the dataset was expanded to include local as well as external data. The data selected for the second experiment consisted of the local data used in the previous experiment combined with UPCV Kinect data from the University of Patras Computer Vision Group [26,34]. The purpose of this experiment was to observe how the selected classifiers’ CCR changed when additional data were introduced into the training and testing sets. In Table 8, it can be seen that the DBN once again achieved the highest CCR for binary classification. However, the CCR of all the other classification techniques also increased, with the most improvement observed in the SVM classifier (+19.32%).

In the second experiment, the abnormal class in the DBN was separated so that it contained two different output classes for gait type: left limp and right limp. To determine how effective the DBN is at performing multi-class classification, the network described in Figure 7 was tested on a newly labeled dataset that contained normal, left limp, and right limp gait types. The resulting confusion matrices for Datasets A and B are illustrated in Figure 9a,b, respectively. The corresponding precision and recall for the classes ranged from 71% to 100% and from 86% to 98%, respectively, depending on the data used for testing and training.
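For readers who want to see how per-class precision and recall are read off a multi-class confusion matrix, a minimal sketch follows. It assumes rows are actual classes and columns are predicted classes; the function name and the matrix values are hypothetical and do not reproduce Figure 9.

```python
import math
from typing import Dict, List, Tuple


def per_class_precision_recall(
    matrix: List[List[int]], class_names: List[str]
) -> Dict[str, Tuple[float, float]]:
    """Return {class: (precision, recall)}; rows = actual, columns = predicted."""
    results = {}
    for k, name in enumerate(class_names):
        true_positive = matrix[k][k]
        predicted_as_k = sum(row[k] for row in matrix)  # column sum
        actually_k = sum(matrix[k])                     # row sum
        precision = true_positive / predicted_as_k if predicted_as_k else math.nan
        recall = true_positive / actually_k if actually_k else math.nan
        results[name] = (precision, recall)
    return results


classes = ["normal", "left limp", "right limp"]
# Hypothetical counts, 20 samples per class, for illustration only.
hypothetical_matrix = [
    [18, 1, 1],
    [2, 16, 2],
    [0, 3, 17],
]
scores = per_class_precision_recall(hypothetical_matrix, classes)
overall_ccr = sum(hypothetical_matrix[i][i] for i in range(3)) / sum(
    sum(row) for row in hypothetical_matrix
)
```

Precision for a class is the diagonal entry divided by its column sum, recall is the diagonal entry divided by its row sum, and the overall CCR is the sum of the diagonal divided by the total number of samples.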

To compare the results with the outcomes of other authors, the binary classification results of the DBN were used. However, the strength of the proposed network is that it also supports multi-class recognition. Results were compared with literature in which the authors conducted similar experiments using contact and non-contact systems to detect specific gait abnormalities. At the time of conducting this study, the following studies were used for comparison [17,18,35].

4. Discussion

The DBN achieved a CCR of 88.68% for multi-class classification (normal, left limp, right limp). These results are promising; to validate them, they were compared against three similar studies.

The first article selected for comparison is by Kozlow et al. [35]. The authors conducted gait detection via binary classification using information collected from the Kinect v2. In their study, the authors used two features to form the feature vector: minimum ankle flexion and maximum ankle flexion. From their experiments, the authors found that using KNN resulted in an 88.54% CCR. However, after repeating the experiments using the selected dataset, the CCR dropped to 83.45% when trained/tested using 21 features. By comparison, the DBN proposed in this study achieved a higher CCR even for multi-class classification (88.68%).

The second article selected for comparison is by Procházka et al. [17], who examined using depth data from the Microsoft Kinect to perform Bayesian classification for Parkinson’s disease. Their study yielded a CCR ranging from 90.2% to 94.1% using features such as stride length and speed. However, to obtain the maximum CCR (94.1%), the researchers needed to include age in the feature vector. This is questionable, as age is not always known and needs to be acquired via manual questioning or approximated (which can introduce error into the system). The DBN proposed in this study results in a slightly lower CCR than that observed in [17]. However, the output class labels for antalgic gait are inherently more interrelated than the classes associated with Parkinson’s. They are also determined using data that can be acquired directly using only the Kinect v2.

The final article [18] describes an experiment on automatic recognition of altered gait using wearable inertial sensors. In [18], Mannini et al. examined 54 individuals with various known conditions such as being prone to stroke, Huntington’s disease, and Parkinson’s disease, as well as healthy elderly individuals. Using classification methods such as NB, SVM, and logistic regression (LR), the authors attempted to maximize the discrimination capabilities of each classifier to correctly classify the data. This study found that LR yielded the highest CCR (89.2%) when using inertial measurement units mounted on the subject’s shanks and over the lumbar spine. Comparing the proposed DBN to this work shows that a similar CCR is obtainable when using exclusively non-contact methods, which are more favorable in real-life applications such as border control. A comparison of the proposed method and that in [18] is shown in Table 9.

Table 9 compares the overall accuracy of our proposed network to the accuracies reported in [17,18,35]. The table shows that the proposed method performed very well compared to the other methods. However, the DBN underperformed in comparison to the NB classifier presented in [17]. This could be because the results in [17] were based on a relatively small dataset (51 samples) that was not publicly available, and because the classes were relatively easy to distinguish due to the large separation between them. What makes our result distinctive as well as robust is that it was obtained on over 300 samples from two different datasets, and on closely interrelated classes.

5. Conclusions

This study contributes to the development of future biometric-enabled technologies where automated gait analysis may be applied. Here, soft biometrics such as gait type are used to provide a more robust decision-making process regarding an individual’s identity (expressed in terms of a distinguishing feature such as a limp) or gait deterioration analysis in non-invasive, remote sensor-based analysis of walking patterns. The classification of gait type proposed in our work is based on a probabilistic inference process via a DBN. This approach can be extended to an overall identity inference process that utilizes statistics collected using available or recorded biometrics of an individual’s face, fingerprint, and iris. It can be paired with other soft biometrics such as age, height, or other traits to assess identity, or used for analysis of gait for rehabilitation process monitoring and other healthcare applications.

The proposed gait feature analyzer is a framework that is able to detect gait abnormalities in a non-invasive manner using RGB-depth cameras. We examined the potential application of this framework by diagnosing various types of antalgic gait. Using a Kinect 2.0 sensor, we collected 168 samples, which consisted of equal portions of normal gait and simulated antalgic gait (for the left and right side). By using frame-by-frame analysis of the individual’s 3D skeleton, we were able to model the individual’s gait cycle as a function of frames. The gait cycle was then utilized to derive critical features for identifying one’s gait. Features such as cadence, stride length, and various knee joint as well as ankle joint parameters were selected. These selected features were used for testing a novel DBN that outputs one’s gait type in a semantic form.

From the experimental results, we found that the proposed DBN was effective for multi-class decisions (different types of gait), yielding an overall CCR of 88.68%. When compared to other state-of-the-art methods, the proposed solution was comparable with all but one result, which yielded 94.1% and also utilized Bayesian classification techniques (NB). There are several possible reasons why the proposed network was unable to reach this level of accuracy: too few time slices per individual, strong interdependence among the classes in the dataset, and a limited number of features, which frequently had overlapping PDFs.

In future work, we plan to address several limitations that currently exist. First, the database we collected that contains abnormal gait types is still relatively small and uniform. We plan to continue to improve the size and diversity of the database, including additional classes to improve the robustness of the framework. In addition, we will also continue to add more distinctive features that will be used to identify an individual’s gait type with higher accuracy. Lastly, we plan to explore more complex and powerful networks, including deep neural networks, provided enough input data.

The proposed gait feature analyzer is yet to be integrated with a biometric-enabled screening architecture (Figure 1). For example, forensic databases or watchlists often contain face or fingerprint data and textual records, such as information about distinctive gait features or abnormalities. Automated identification of these "soft" biometrics in the screening process (such as a person walking through airport border control) will make it possible to improve the performance of the identification system, or assist in decision making based on multiple traits acquired during the screening process or on the video