A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications

Abstract

1. Introduction

2. Related Work: Visual Detection and Tracking

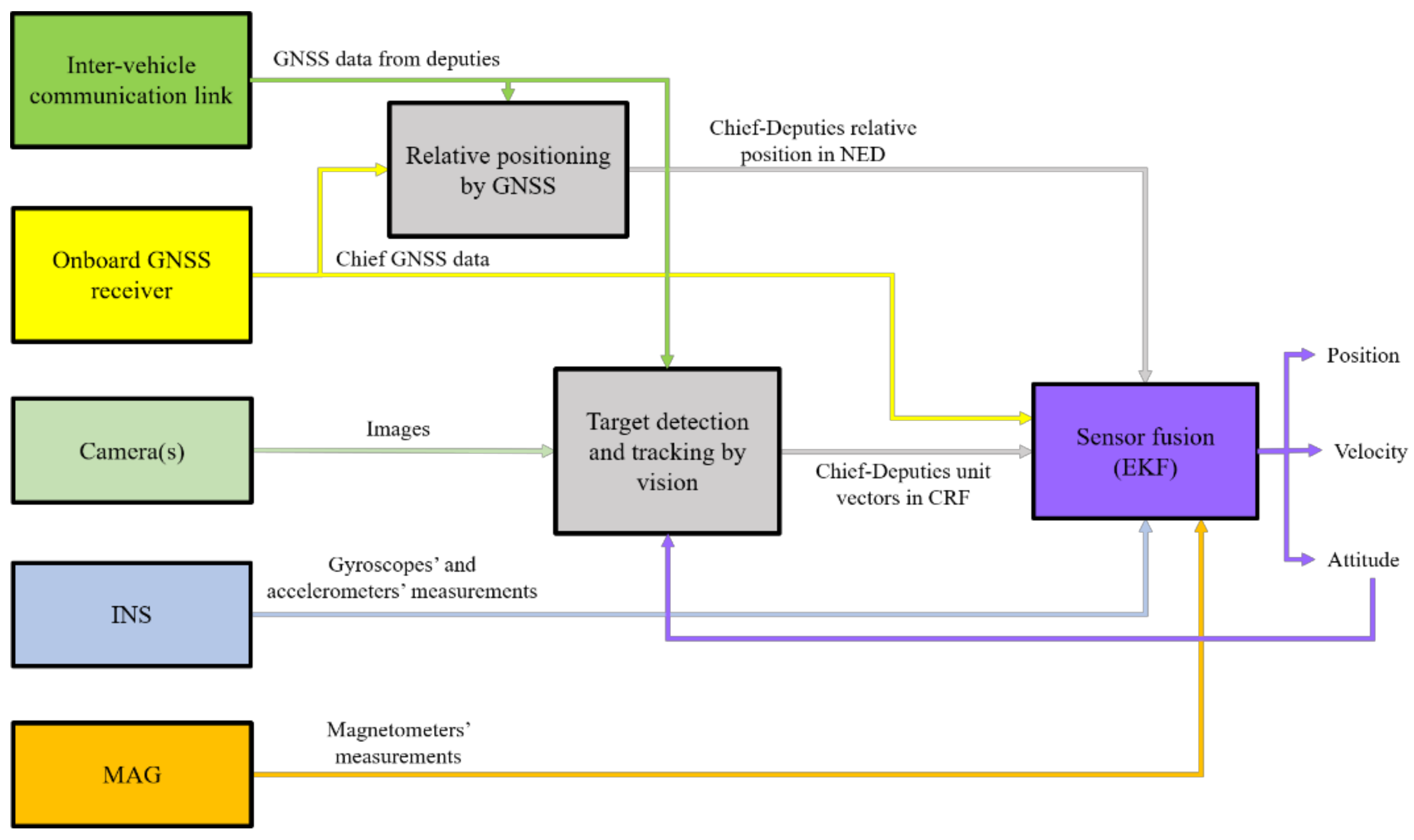

3. Cooperative Multi-UAV Applications

4. Image Processing Algorithms

The image processing architecture, shown in the flowchart below, includes:

- an off-line “database generation” step enclosed in a dashed, rectangular box;
- two main processing steps, i.e., detection and tracking, highlighted in red;
- a supplementary processing step, i.e., template update, highlighted in blue;
- four decision points highlighted in green.

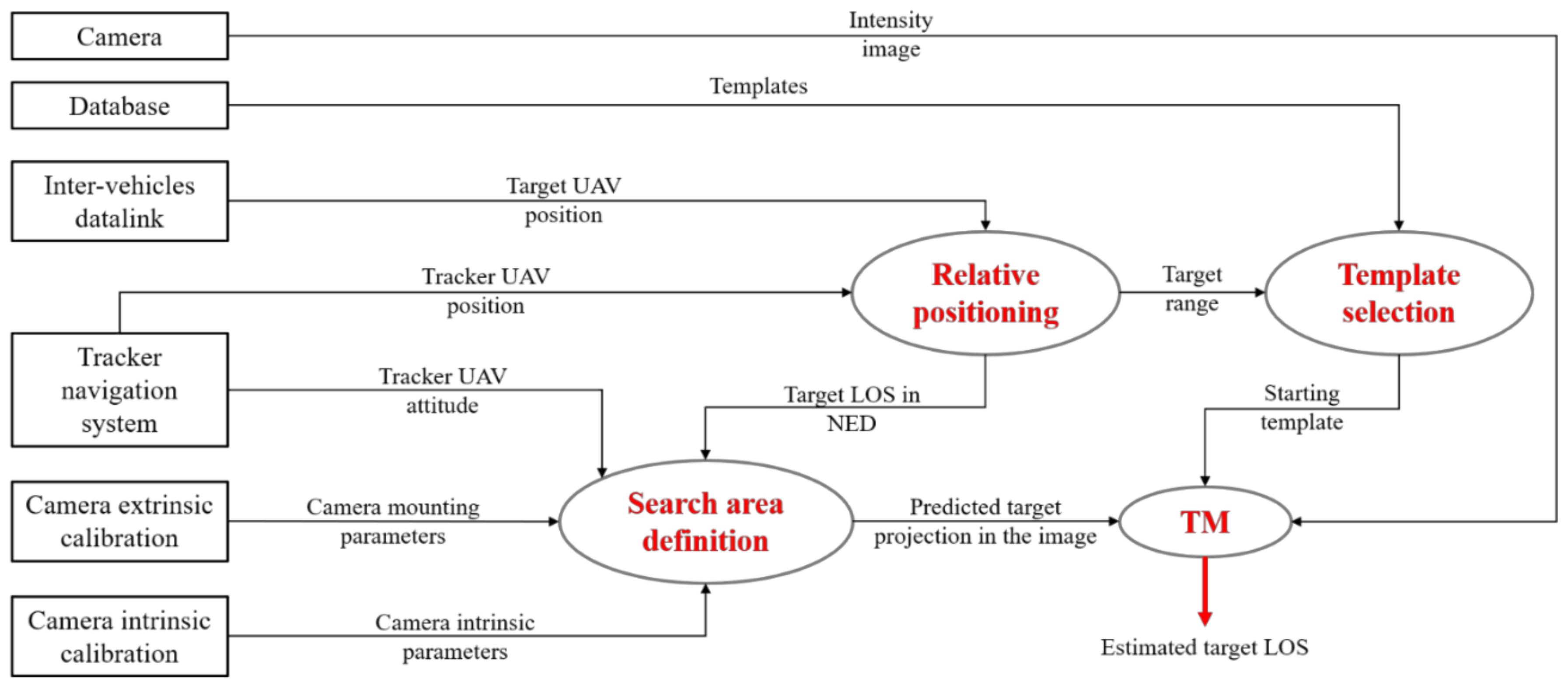
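The interplay between the two main processing steps can be sketched as a two-mode loop. This is a minimal sketch under stated assumptions, not the authors' implementation: `detect` and `track` are hypothetical callables standing in for the detection and tracking blocks, each returning an image-plane estimate or `None`. The loop is assumed to fall back to detection whenever tracking declares the target lost, which mirrors the autonomous re-start of the detection process listed among the flight test objectives. Template update and the four decision points of the flowchart are not modelled.

```python
from enum import Enum, auto
from typing import Callable, Optional, Tuple

# (u, v) image-plane estimate in pixels, or None when no target is found.
Estimate = Optional[Tuple[float, float]]


class Mode(Enum):
    DETECTION = auto()
    TRACKING = auto()


def step(mode: Mode, frame: object,
         detect: Callable[[object], Estimate],
         track: Callable[[object], Estimate]) -> Tuple[Mode, Estimate]:
    """Run one iteration of the assumed detection/tracking mode switch.

    `detect` and `track` are hypothetical stand-ins for the two main
    processing steps; both return an estimate or None.
    """
    if mode is Mode.DETECTION:
        estimate = detect(frame)
        # A successful detection hands the target over to tracking.
        next_mode = Mode.TRACKING if estimate is not None else Mode.DETECTION
        return next_mode, estimate
    estimate = track(frame)
    if estimate is None:
        # Target declared lost: re-start detection on the next frame.
        return Mode.DETECTION, None
    return Mode.TRACKING, estimate
```

A caller would start in `Mode.DETECTION`, feed each new frame to `step`, and keep the returned mode for the next iteration.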

4.1. Detection

The detection algorithm takes three inputs:

- (1) an intensity (grey-level) image, $I$, acquired by the camera onboard the tracker UAV, whose horizontal and vertical sizes are denoted by $N_u$ and $N_v$, respectively;
- (2) a predicted estimate of the target projection in the image plane, $(u_{pr}, v_{pr})$, which defines a limited area where template matching (TM) must be applied;
- (3) a template, $T$, i.e., an intensity image with the size of the region of interest, purposely extracted from the database.
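The role of the predicted projection can be illustrated with a brute-force template matching search restricted to a window around $(u_{pr}, v_{pr})$. This is a minimal sketch, not the authors' implementation, and it rests on several assumptions: the target position is taken as already expressed in the camera frame (for instance, from navigation data exchanged between the UAVs); the camera is modelled as an ideal pinhole without lens distortion, with hypothetical intrinsic parameters `fx`, `fy`, `cx`, `cy`; the search window is square with a hypothetical half-size `half_size`; and normalized cross-correlation (NCC) is assumed as the similarity measure. Images are plain nested lists indexed as `image[v][u]`, i.e., $N_v$ rows by $N_u$ columns.

```python
import math
from typing import List, Optional, Sequence, Tuple

Image = List[List[float]]  # grey-level image, indexed as image[v][u]


def project_pinhole(p_cam: Sequence[float], fx: float, fy: float,
                    cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a camera-frame point (x, y, z), z along the optical axis, with an
    ideal pinhole model; return (u_pr, v_pr), or None if behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    return cx + fx * x / z, cy + fy * y / z


def ncc(image: Image, template: Image, u0: int, v0: int) -> float:
    """NCC between the template and the equally sized image patch whose
    top-left pixel is (u0, v0)."""
    th, tw = len(template), len(template[0])
    patch = [image[v0 + i][u0 + j] for i in range(th) for j in range(tw)]
    tmpl = [template[i][j] for i in range(th) for j in range(tw)]
    mp, mt = sum(patch) / len(patch), sum(tmpl) / len(tmpl)
    num = sum((a - mp) * (b - mt) for a, b in zip(patch, tmpl))
    den = math.sqrt(sum((a - mp) ** 2 for a in patch) *
                    sum((b - mt) ** 2 for b in tmpl))
    return num / den if den > 0 else 0.0  # flat patch or template: no match


def match_in_window(image: Image, template: Image, u_pr: float, v_pr: float,
                    half_size: int) -> Optional[Tuple[int, int, float]]:
    """Search template centres within +/- half_size pixels of (u_pr, v_pr),
    clipped to the image, and return (u, v, score) of the best NCC match."""
    n_v, n_u = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    uc, vc = int(round(u_pr)), int(round(v_pr))
    # Admissible top-left corners: template centre inside the window and
    # template fully inside the N_u x N_v image.
    u0_lo = max(0, uc - half_size - tw // 2)
    u0_hi = min(n_u - tw, uc + half_size - tw // 2)
    v0_lo = max(0, vc - half_size - th // 2)
    v0_hi = min(n_v - th, vc + half_size - th // 2)
    best = None
    for v0 in range(v0_lo, v0_hi + 1):
        for u0 in range(u0_lo, u0_hi + 1):
            score = ncc(image, template, u0, v0)
            if best is None or score > best[2]:
                best = (u0 + tw // 2, v0 + th // 2, score)
    return best  # None when the window falls entirely outside the image
```

Restricting the search to the window keeps the cost proportional to the window area rather than to $N_u \times N_v$, which is the practical benefit of having a prediction available. A production version would typically rely on an optimized NCC routine instead of these nested loops.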

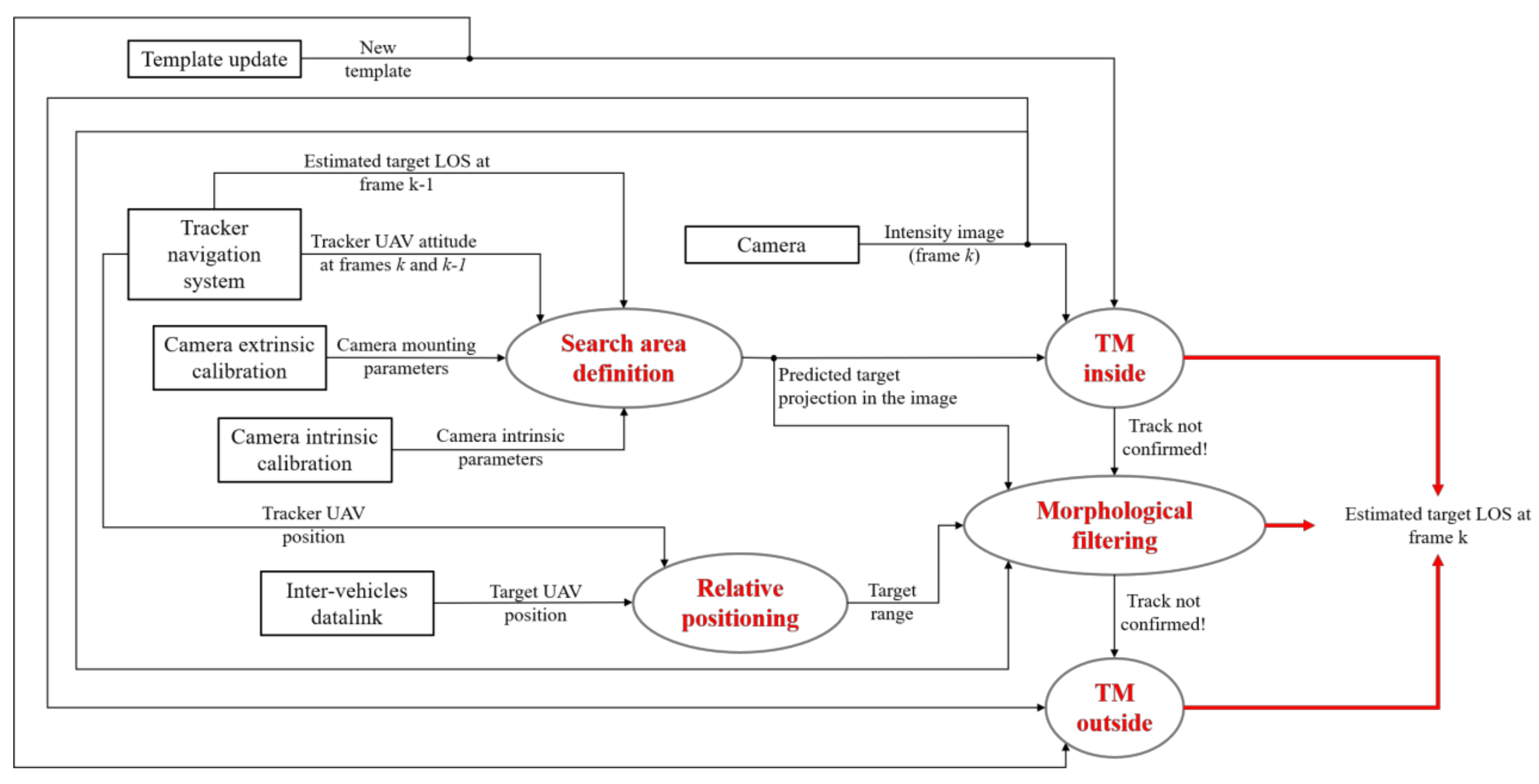

4.2. Tracking

4.2.1. TM-Inside Processing

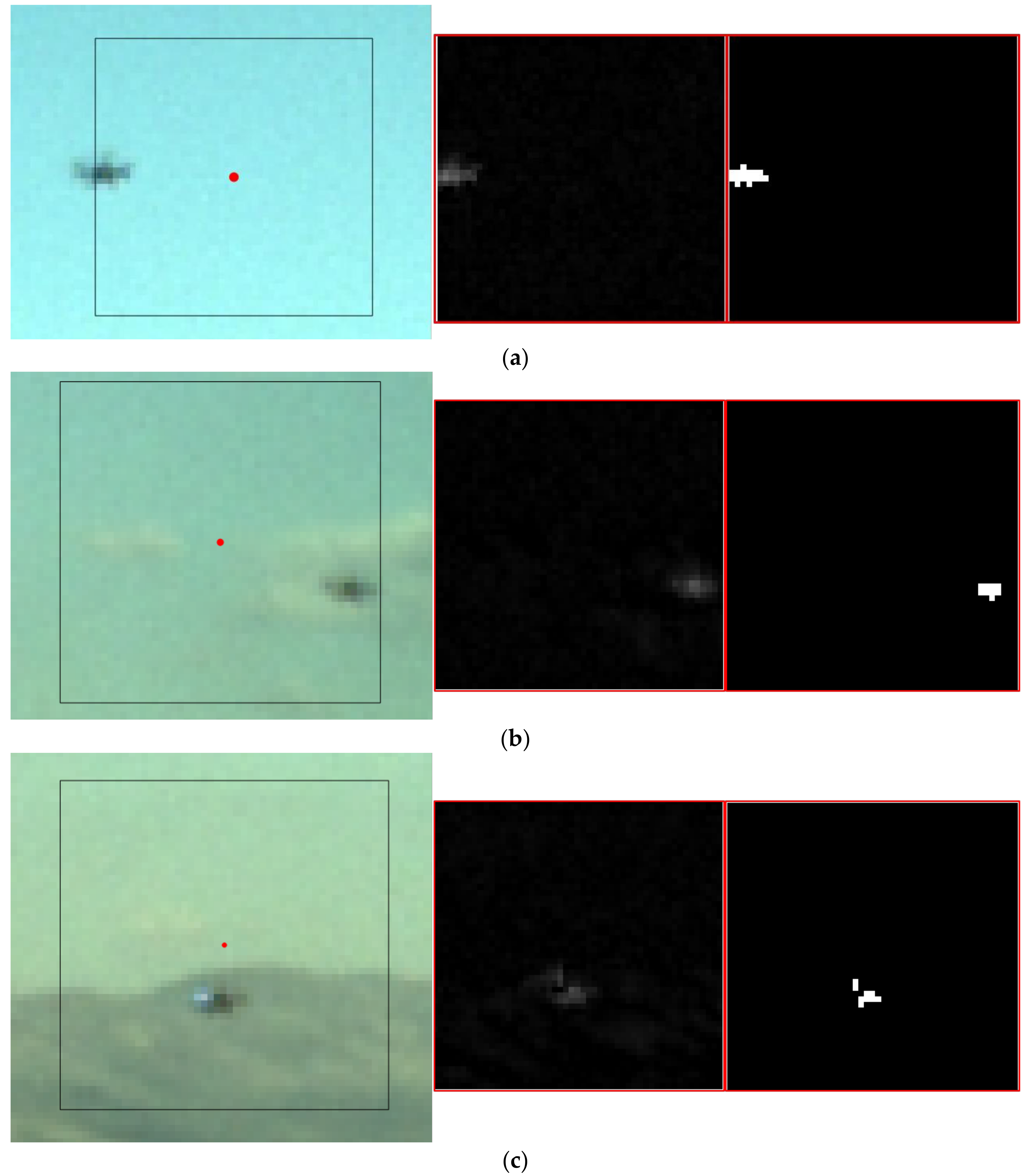

4.2.2. Morphological Filtering

Depending on the number of image regions extracted by morphological filtering, three cases are possible:

- (a) no image regions are extracted → the target is declared to be out of the search window;
- (b) a single image region is extracted → its centroid is assigned as the estimated target position in the image, $(u_{tr}, v_{tr})$;
- (c) multiple image regions are extracted → the algorithm cannot resolve the ambiguity between the target and other objects (outliers).
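The three-way decision can be sketched as follows. This is a minimal sketch, not the authors' implementation: it assumes that the morphological operators have already produced a binary mask over the search window (`mask[v][u]` equal to 1 for candidate target pixels), it groups foreground pixels into 8-connected regions (the connectivity is an assumption), and it takes the centroid as the unweighted mean of the pixel coordinates of the region. The function names are hypothetical.

```python
from collections import deque
from typing import List, Optional, Tuple

Mask = List[List[int]]  # binary mask, indexed as mask[v][u]


def extract_regions(mask: Mask) -> List[List[Tuple[int, int]]]:
    """Group foreground pixels into 8-connected regions via breadth-first search."""
    n_v, n_u = len(mask), len(mask[0])
    seen = [[False] * n_u for _ in range(n_v)]
    regions = []
    for v in range(n_v):
        for u in range(n_u):
            if not mask[v][u] or seen[v][u]:
                continue
            seen[v][u] = True
            queue, pixels = deque([(u, v)]), []
            while queue:
                cu, cv = queue.popleft()
                pixels.append((cu, cv))
                for dv in (-1, 0, 1):
                    for du in (-1, 0, 1):
                        nu, nv = cu + du, cv + dv
                        if (0 <= nu < n_u and 0 <= nv < n_v
                                and mask[nv][nu] and not seen[nv][nu]):
                            seen[nv][nu] = True
                            queue.append((nu, nv))
            regions.append(pixels)
    return regions


def decide(mask: Mask) -> Tuple[str, Optional[Tuple[float, float]]]:
    """Map the number of extracted regions to cases (a), (b) and (c)."""
    regions = extract_regions(mask)
    if not regions:
        return "out_of_window", None   # case (a): target out of the search window
    if len(regions) == 1:
        pixels = regions[0]
        u = sum(p[0] for p in pixels) / len(pixels)
        v = sum(p[1] for p in pixels) / len(pixels)
        return "single_region", (u, v)  # case (b): centroid gives (u_tr, v_tr)
    return "ambiguous", None            # case (c): target/outlier ambiguity
```

Case (c) is returned as a label only; how the full algorithm proceeds from an ambiguous result is not modelled here.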

4.2.3. TM-Outside Processing

4.3. Template Update

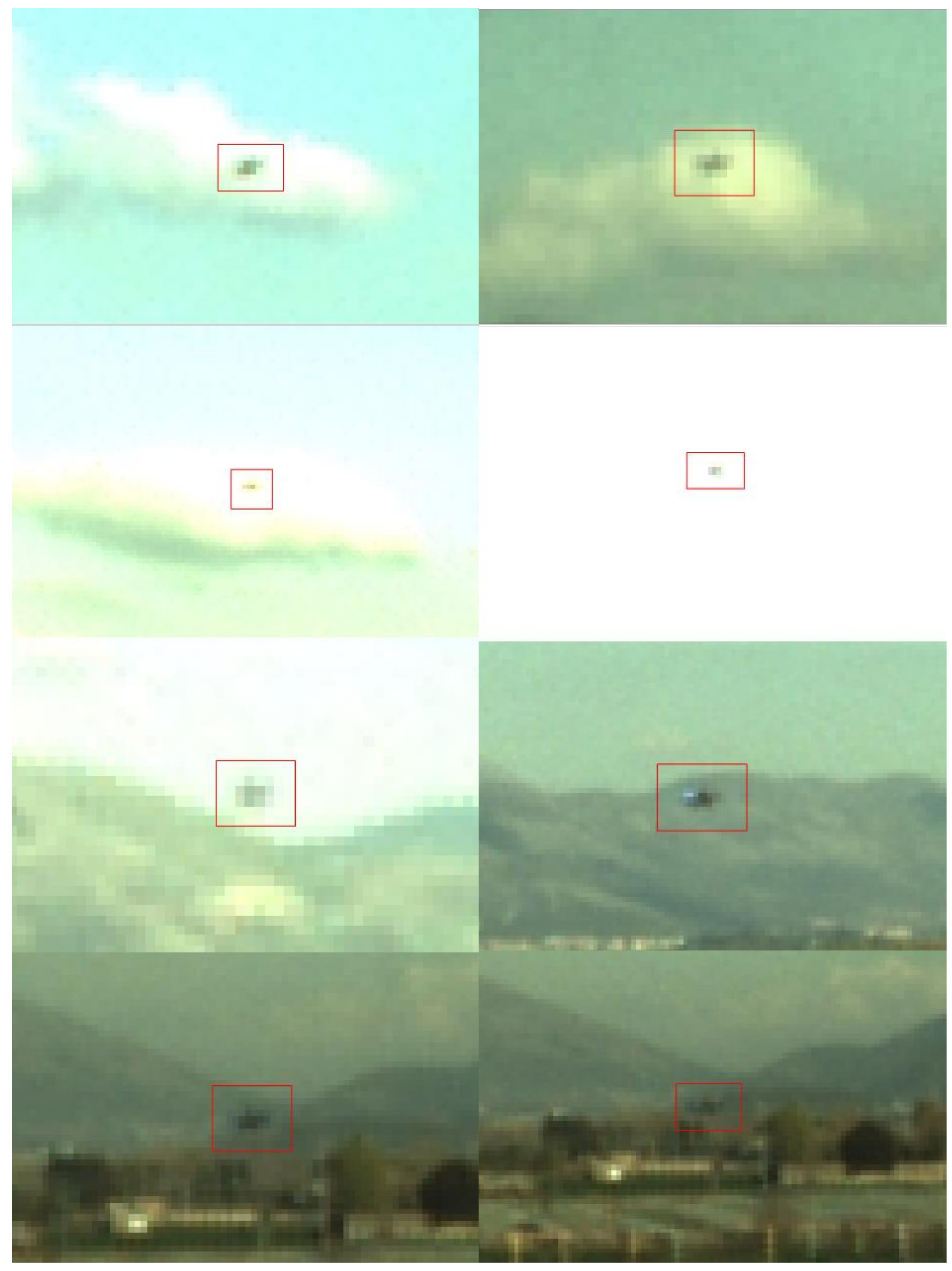

5. Flight Test Campaign

5.1. Experimental Setup

5.2. Flight Tests Description

- to recognize periods of target absence from the FOV autonomously;
- to recover the target autonomously by re-starting the detection process.

5.3. Performance Assessment: Detection Algorithm

The detection algorithm is evaluated in terms of the following metrics:

- Percentage of Missed Detections (MD), computed as the ratio between the number of frames in which the target is wrongly declared to be outside the image plane (i.e., it is not detected even though it is present in the image) and the total number of analyzed frames.
- Percentage of Correct Detections (CD), computed as the ratio between the number of frames in which the target is either correctly declared to be inside the image plane (with a detection error lower than a pixel threshold, $\tau_{pix}$, both horizontally and vertically) or correctly declared to be outside it, and the total number of analyzed frames.
- Percentage of False Alarms (FA), computed as the ratio between the number of frames in which the target is wrongly declared to be inside the image plane (i.e., it is detected even though it is not present in the image) and the total number of analyzed frames.
- Percentage of Wrong Detections (WD), computed as the ratio between the number of frames in which the target is correctly declared to be inside the image plane but the detection error reaches or exceeds $\tau_{pix}$ along at least one image axis, and the total number of analyzed frames.
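The four metrics can be computed from per-frame labels as sketched below, using hypothetical names (`Frame`, `classify`, `detection_metrics`). Two conventions are assumptions. First, frames in which the target is absent and correctly declared outside the image plane are counted as CD, as the detection results table implies (for flight test 1, CD = 95.73% of 398 frames, i.e., about 381 frames, more than the 376 frames with the target inside the FOV). Second, a detection counts as wrong whenever the error is not lower than $\tau_{pix}$ along at least one image axis, so that CD and WD together cover every frame correctly declared inside.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass
class Frame:
    target_present: bool                          # ground truth: target in the image plane
    declared_inside: bool                         # algorithm output
    error: Optional[Tuple[float, float]] = None   # (du, dv) in pixels, needed if present and declared inside


def classify(f: Frame, tau_pix: float) -> str:
    """Assign one frame to MD, FA, CD or WD."""
    if f.target_present and not f.declared_inside:
        return "MD"  # present but declared outside
    if not f.target_present:
        # Absent: false alarm if declared inside, correct rejection (CD) otherwise.
        return "FA" if f.declared_inside else "CD"
    du, dv = f.error
    return "CD" if abs(du) < tau_pix and abs(dv) < tau_pix else "WD"


def detection_metrics(frames: Iterable[Frame], tau_pix: float) -> Dict[str, float]:
    """Percentages of MD, FA, CD and WD over all analyzed frames."""
    labels = [classify(f, tau_pix) for f in frames]
    if not labels:
        raise ValueError("no frames to evaluate")
    counts = Counter(labels)
    return {k: 100.0 * counts[k] / len(labels) for k in ("MD", "FA", "CD", "WD")}
```

With frame counts consistent with flight test 2 (39 missed detections and 6 false alarms out of 361 frames, no wrong detections), the sketch returns MD ≈ 10.80%, FA ≈ 1.66% and CD ≈ 87.53%, matching the detection results table.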

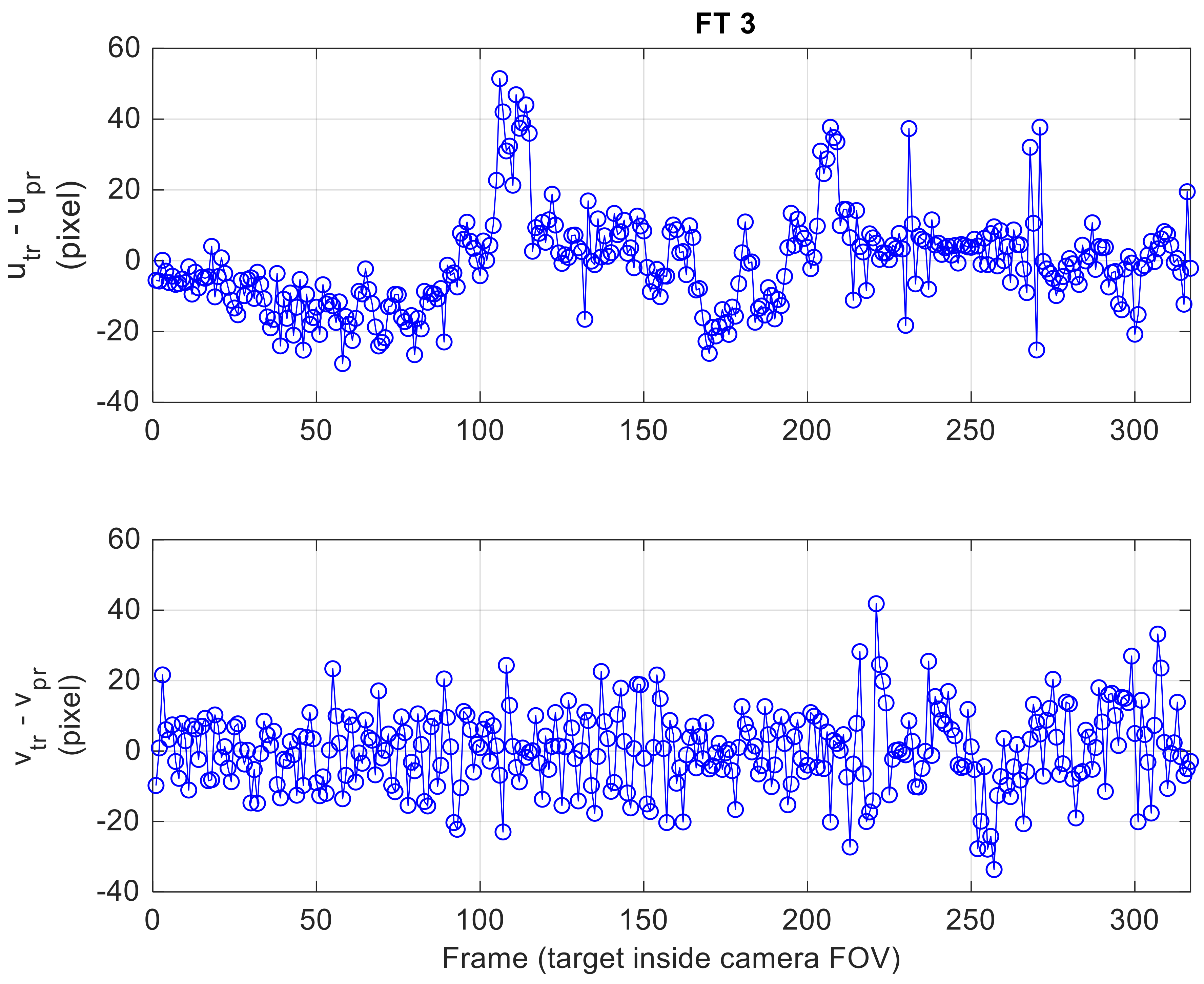

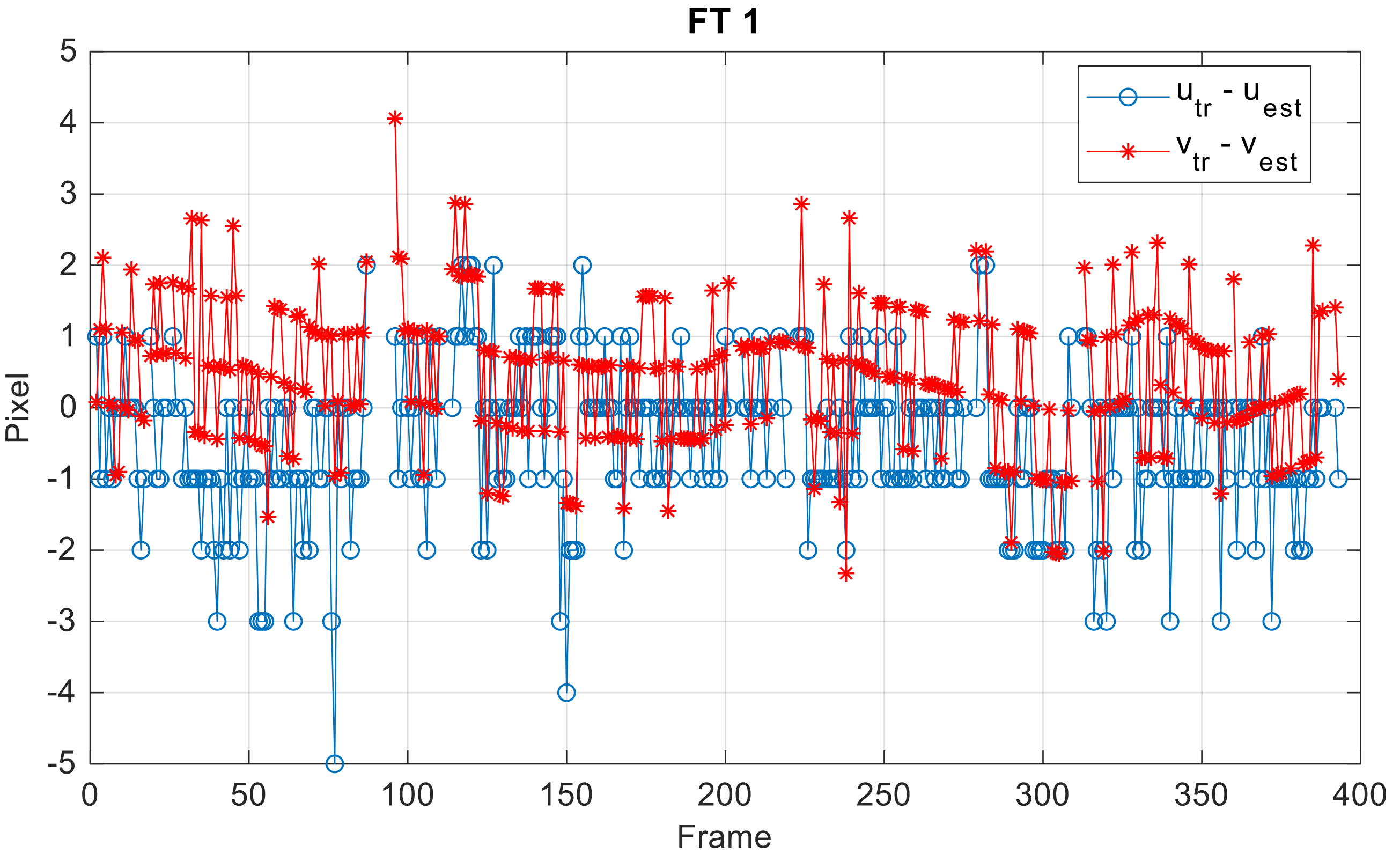

5.4. Performance Assessment: Tracking Algorithm

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Campoy, P.; Correa, J.F.; Mondragón, I.; Martínez, C.; Olivares, M.; Mejías, L.; Artieda, J. Computer vision onboard UAVs for civilian tasks. J. Intell. Robot. Syst. 2009, 54, 105–135.
2. Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for UAVs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168.
3. Ham, Y.; Han, K.K.; Lin, J.J.; Golparvar-Fard, M. Visual monitoring of civil infrastructure systems via camera-equipped Unmanned Aerial Vehicles (UAVs): A review of related works. Vis. Eng. 2016, 4, 1–8.
4. Turner, I.L.; Harley, M.D.; Drummond, C.D. UAVs for coastal surveying. Coast. Eng. 2016, 114, 19–24.
5. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.
6. Schmid, K.; Lutz, P.; Tomić, T.; Mair, E.; Hirschmüller, H. Autonomous Vision-based Micro Air Vehicle for Indoor and Outdoor Navigation. J. Field Robot. 2014, 31, 537–570.
7. Weiss, S.; Achtelik, M.W.; Lynen, S.; Achtelik, M.C.; Kneip, L.; Chli, M.; Siegwart, R. Monocular Vision for Long-term Micro Aerial Vehicle State Estimation: A Compendium. J. Field Robot. 2013, 30, 803–831.
8. Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A vision-based guidance system for UAV navigation and safe landing using natural landmarks. J. Intell. Robot. Syst. 2010, 57, 233–257.
9. Nguyen, P.H.; Kim, K.W.; Lee, Y.W.; Park, K.R. Remote Marker-Based Tracking for UAV Landing Using Visible-Light Camera Sensor. Sensors 2017, 17, 1987.
10. Al-Kaff, A.; García, F.; Martín, D.; De La Escalera, A.; Armingol, J.M. Obstacle detection and avoidance system based on monocular camera and size expansion algorithm for UAVs. Sensors 2017, 17, 1061.
11. Aguilar, W.G.; Casaliglla, V.P.; Pólit, J.L. Obstacle avoidance based-visual navigation for micro aerial vehicles. Electronics 2017, 6, 10.
12. Lai, J.; Mejias, L.; Ford, J.L. Airborne vision-based collision-detection system. J. Field Robot. 2011, 28, 137–157.
13. Fasano, G.; Accardo, D.; Tirri, A.E.; Moccia, A.; De Lellis, E. Morphological filtering and target tracking for vision-based UAS sense and avoid. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems, Orlando, FL, USA, 27–30 May 2014; pp. 430–440.
14. Cledat, E.; Cucci, D.A. Mapping GNSS Restricted Environments with a Drone Tandem and Indirect Position Control. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 1–7.
15. Vetrella, A.R.; Opromolla, R.; Fasano, G.; Accardo, D.; Grassi, M. Autonomous Flight in GPS-Challenging Environments Exploiting Multi-UAV Cooperation and Vision-aided Navigation. In Proceedings of the AIAA SciTech 2017, Grapevine, TX, USA, 9–13 January 2017.
16. Trujillo, J.C.; Munguia, R.; Guerra, E.; Grau, A. Cooperative Monocular-Based SLAM for Multi-UAV Systems in GPS-Denied Environments. Sensors 2018, 18, 1351.
17. Vetrella, A.R.; Fasano, G.; Accardo, D.; Moccia, A. Differential GNSS and vision-based tracking to improve navigation performance in cooperative multi-UAV systems. Sensors 2016, 16, 2164.
18. Lai, J.; Ford, J.J.; Mejias, L.; O’Shea, P. Characterization of Sky-region Morphological-temporal Airborne Collision Detection. J. Field Robot. 2013, 30, 171–193.
19. Fu, C.; Carrio, A.; Olivares-Mendez, M.A.; Suarez-Fernandez, R.; Campoy, P. Robust real-time vision-based aircraft tracking from unmanned aerial vehicles. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 5441–5446.
20. Krajnik, T.; Nitsche, M.; Faigl, J.; Vanek, P.; Saska, M.; Preucil, L.; Duckett, T.; Mejail, M.A. A Practical Multirobot Localization System. J. Intell. Robot. Syst. 2014, 76, 539–562.
21. Olivares-Mendez, M.A.; Mondragon, I.; Cervera, P.C.; Mejias, L.; Martinez, C. Aerial object following using visual fuzzy servoing. In Proceedings of the 1st Workshop on Research, Development and Education on Unmanned Aerial Systems (RED-UAS), Sevilla, Spain, 30 November–1 December 2011.
22. Faigl, J.; Krajnik, T.; Chudoba, J.; Preucil, L.; Saska, M. Low-cost embedded system for relative localization in robotic swarms. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 993–998.
23. Martínez, C.; Campoy, P.; Mondragón, I.F.; Sánchez-Lopez, J.L.; Olivares-Méndez, M.A. HMPMR strategy for real-time tracking in aerial images, using direct methods. Mach. Vis. Appl. 2014, 25, 1283–1308.
24. Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 21–23 June 1994; pp. 593–600.
25. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
26. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.
27. Feng, X.; Mei, W.; Hu, D. A Review of Visual Tracking with Deep Learning. Adv. Intell. Syst. Res. 2016, 133, 231–234.
28. Wang, N.Y.; Yeung, D.Y. Learning a deep compact image representation for visual tracking. Adv. Neural Inf. Process. Syst. 2013, 809–817. Available online: http://papers.nips.cc/paper/5192-learning-a-deep-compact-image-representation-for-visual-tracking (accessed on 10 October 2018).
29. Gökçe, F.; Üçoluk, G.; Şahin, E.; Kalkan, S. Vision-based detection and distance estimation of micro unmanned aerial vehicles. Sensors 2015, 15, 23805–23846.
30. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the 9th European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 430–443.
31. Fu, C.; Duan, R.; Kircali, D.; Kayacan, E. Onboard robust visual tracking for UAVs using a reliable global-local object model. Sensors 2016, 16, 1406.
32. Li, J.; Ye, D.H.; Chung, T.; Kolsch, M.; Wachs, J.; Bouman, C. Multi-target detection and tracking from a single camera in Unmanned Aerial Vehicles (UAVs). In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4992–4997.
33. Rozantsev, A.; Lepetit, V.; Fua, P. Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 879–892.
34. Matthews, I.; Ishikawa, T.; Baker, S. The Template Update Problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 810–815.
35. Causa, F.; Vetrella, A.R.; Fasano, G.; Accardo, D. Multi-UAV formation geometries for cooperative navigation in GNSS-challenging environments. In Proceedings of the 2018 IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 23–26 April 2018; pp. 775–785.
36. Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, 17–19 June 1997; pp. 1106–1112.
37. Briechle, K.; Hanebeck, U.D. Template matching using fast normalized cross correlation. In Optical Pattern Recognition XII; International Society for Optics and Photonics: Bellingham, WA, USA, 2001; Volume 4387, pp. 95–103.

| Method | Detection | Tracking | Notes |
|---|---|---|---|
| [29] | Trained cascade classifier based on FAST features | N/A | A UAV-UAV distance estimation method is included |
| [31] | N/A | Feature matching (FAST), optical flow, local geometric filter | An additional outlier removal strategy is included (local outlier factor module) |
| [32] | Background motion compensation and optical flow | Kalman filtering | False alarms arising from the background motion are limited |
| [33] | Deep learning | N/A | Detection against a complex background is enabled |
| Our method | Template matching | Template matching, morphological filtering | Cooperation is exploited by aiding image processing based on the exchange of navigation data |

| FT | $N$ | $N_{IN}$ | $N_{OUT}$ | ρ Mean (m) | ρ Standard Deviation (m) | Number of 360° Turns | Δu Mean (pixel) | Δv Mean (pixel) |
|---|---|---|---|---|---|---|---|---|
| 1 | 398 | 376 | 22 | 84.2 | 17.1 | 6 | 38.3 | 24.5 |
| 2 | 361 | 319 | 42 | 105.9 | 19.5 | 7 | 29.6 | 25.8 |
| 3 | 381 | 323 | 58 | 120.2 | 19.5 | 3 | 33.0 | 21.2 |

| FT | $u_{tr}-u_{pr}$ Mean (pixel) | $u_{tr}-u_{pr}$ Standard Deviation (pixel) | $v_{tr}-v_{pr}$ Mean (pixel) | $v_{tr}-v_{pr}$ Standard Deviation (pixel) |
|---|---|---|---|---|
| 1 | 325.2 | 235.0 | −4.3 | 29.2 |
| 2 | 383.7 | 448.8 | −16.8 | 67.1 |
| 3 | 247.0 | 300.7 | −5.3 | 20.6 |

| FT | $u_{tr}-u_{pr}$ Mean (pixel) | $u_{tr}-u_{pr}$ Standard Deviation (pixel) | $v_{tr}-v_{pr}$ Mean (pixel) | $v_{tr}-v_{pr}$ Standard Deviation (pixel) |
|---|---|---|---|---|
| 1 | 1.4 | 28.3 | −0.7 | 11.7 |
| 2 | 1.6 | 14.3 | −0.1 | 10.5 |
| 3 | −0.8 | 13.5 | 0.3 | 11.1 |

| FT | MD (%) | FA (%) | CD (%) | WD (%) | $N_{upd}$ | FA + WD (%) |
|---|---|---|---|---|---|---|
| 1 | 4.27 | 0 | 95.73 | 0 | 4 | 0.00 |
| 2 | 10.80 | 1.66 | 87.53 | 0 | 11 | 1.66 |
| 3 | 23.88 | 0.79 | 75.07 | 0.26 | 20 | 1.05 |

| $\tau_{upd}$ | MD (%) | FA (%) | CD (%) | WD (%) | $N_{upd}$ | FA + WD (%) |
|---|---|---|---|---|---|---|
| 0.86 | 15.22 | 2.10 | 82.15 | 0.52 | 35 | 2.62 |
| 0.87 | 10.24 | 5.77 | 79.53 | 4.46 | 61 | 10.23 |
| 0.88 | 14.96 | 2.10 | 82.68 | 0.26 | 65 | 2.36 |
| 0.89 | 13.91 | 2.36 | 83.20 | 0.52 | 69 | 2.88 |
| 0.90 | 12.34 | 2.10 | 85.04 | 0.52 | 82 | 2.62 |

| FT | $u_{tr}-u_{pr}$ Mean (pixel) | $u_{tr}-u_{pr}$ Standard Deviation (pixel) | $v_{tr}-v_{pr}$ Mean (pixel) | $v_{tr}-v_{pr}$ Standard Deviation (pixel) |
|---|---|---|---|---|
| 1 | −0.5 | 1.1 | 0.4 | 1.0 |
| 2 | −0.7 | 0.9 | 0.8 | 0.8 |
| 3 | −1.3 | 1.6 | 1.0 | 1.5 |

Distribution of action when the target is inside the FOV (376 frames):

| MD (%) | CD TM IN (%) | CD TM OUT (%) | CD MF (%) | WD TM IN (%) | WD TM OUT (%) | WD MF (%) |
|---|---|---|---|---|---|---|
| 4.5 | 60.4 | 9.8 | 25.3 | 0 | 0 | 0 |

Distribution of action when the target is outside the FOV (22 frames):

| CD (%) | FA TM IN (%) | FA TM OUT (%) | FA MF (%) |
|---|---|---|---|
| 100 | 0 | 0 | 0 |

Distribution of action for all the 398 frames:

| TM IN (%) | TM OUT (%) | MF (%) |
|---|---|---|
| 63.2 | 10.3 | 26.5 |

Distribution of action when the target is declared out (39 frames):

| MD (%) | CD (%) |
|---|---|
| 43.6 | 56.4 |

TM IN = TM-inside processing; TM OUT = TM-outside processing; MF = morphological filtering.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Opromolla, R.; Fasano, G.; Accardo, D. A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications. Sensors 2018, 18, 3391. https://doi.org/10.3390/s18103391
