Research on a Handheld 3D Laser Scanning System for Measuring Large-Sized Objects

Abstract

1. Introduction

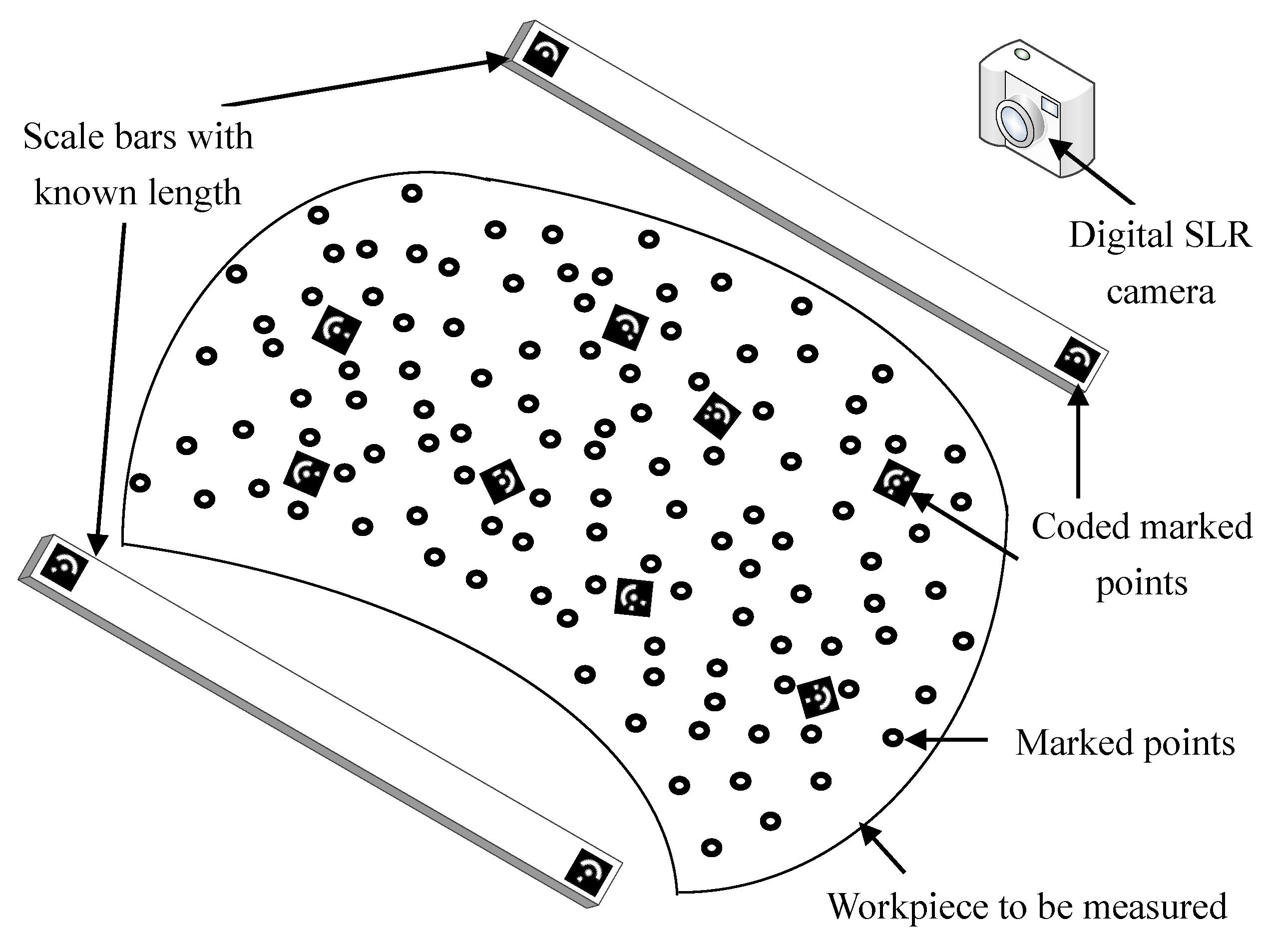

2. System Composition and Working Principle

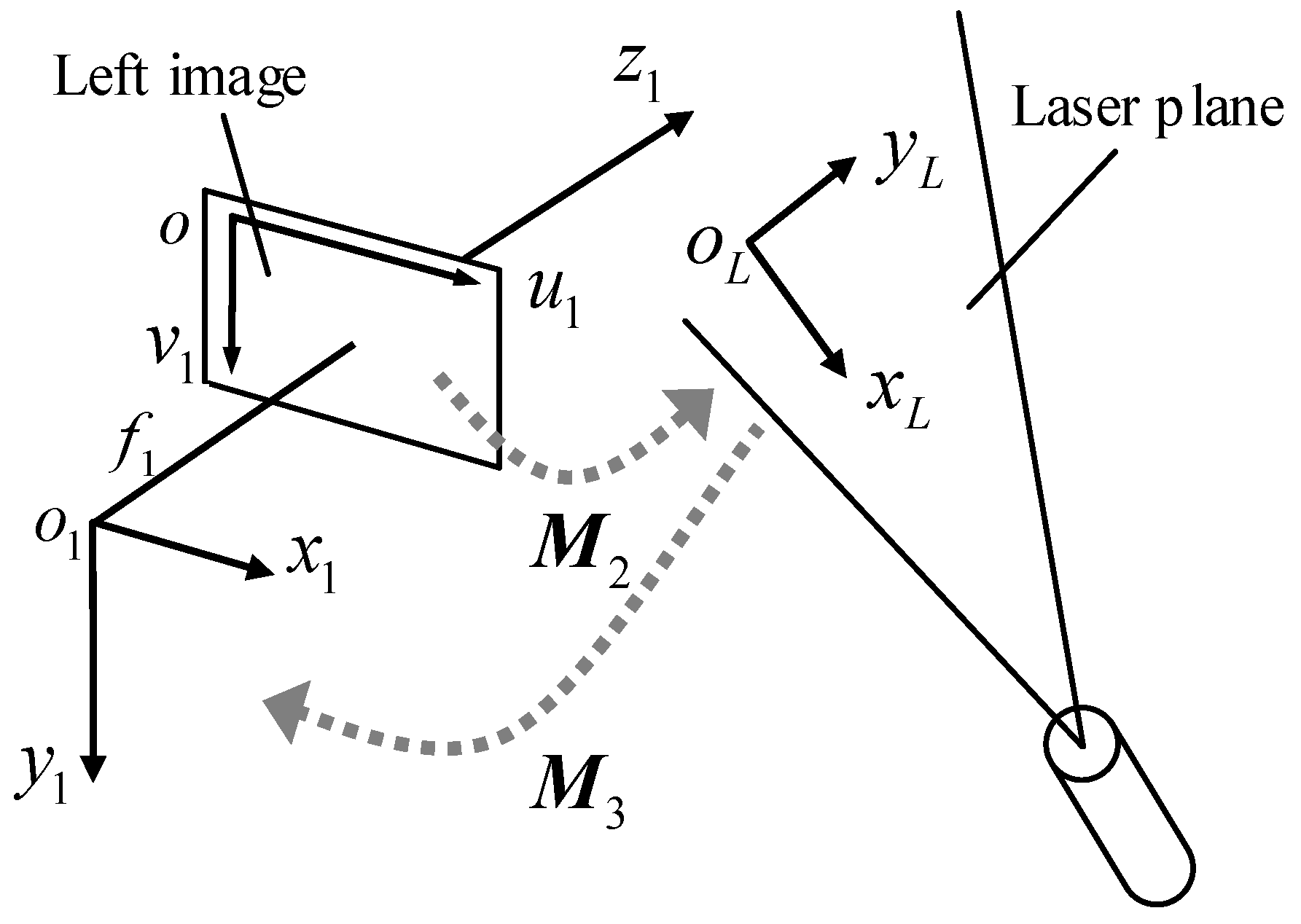

3. Modeling and Calibration of the Handheld 3D Laser Scanning System

3.1. Modeling and Calibration of the Binocular Stereo Vision System

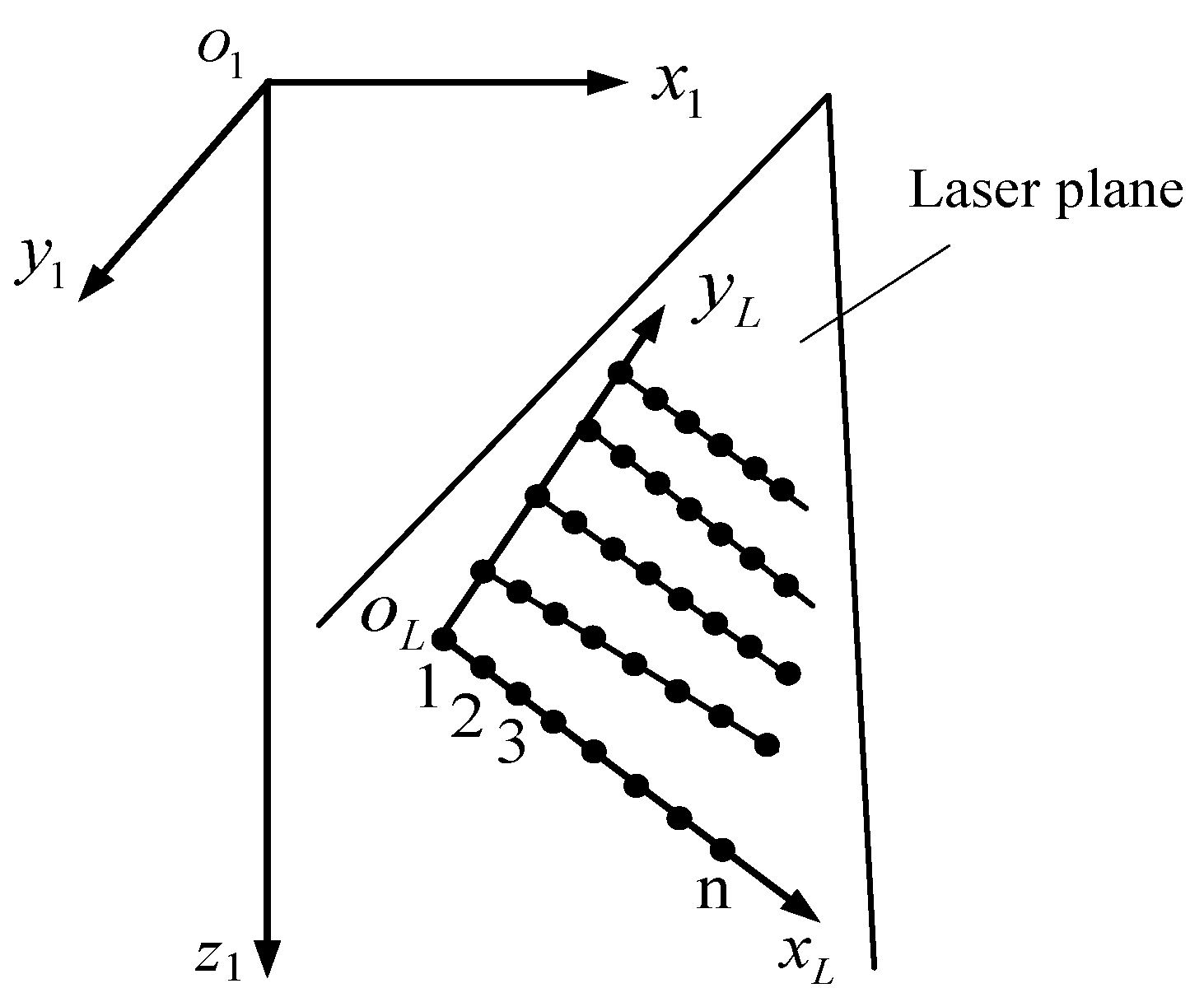

3.2. Modeling and Calibration of the Structured Light System

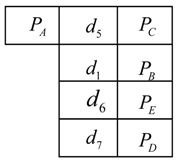

4. Match-Up Method of the Marked Points in and

4.1. Matching Up the Marked Points

where , , , , , are marked points with the sequence numbers i, j, r, t, a, c in respectively; , , are the distances between them, respectively, and . Points are connected with points , , …, and .

- (1) Choose one marked point, such as in Figure 9, together with its scanner sub-library in .

- (2) Find the distances in library that meet the conditions, and record the sequence numbers of the corresponding marked points in a list. Assuming and , the list of candidates, , is generated.

- (3) Check the workpiece sub-libraries of the candidates in list ,, successively to determine whether each of them contains all the distances meeting the conditions (, where stands for one distance in workpiece sub-library ). For instance, if the distances in satisfy to , then point is regarded as the same point as in .

- (4) Repeat steps (1) to (3) to find the points corresponding to , , , ; once all of them are found, the marked points are fully matched.
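The matching steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: every visible marker is treated as connected to every other marker, the tolerance `tol` stands in for the paper's distance conditions, and all function names (`build_sublibraries`, `contains_all`, `match_markers`) are hypothetical.

```python
import math


def build_sublibraries(points):
    """For each marker, return the sorted distances to every other marker.

    Assumption: all markers in the set count as "connected"; the paper's
    own connection rule is not reproduced here.
    """
    libs = []
    for i, p in enumerate(points):
        libs.append(sorted(math.dist(p, q) for j, q in enumerate(points) if j != i))
    return libs


def contains_all(candidate_lib, query_lib, tol):
    """True if every query distance pairs with a distinct candidate distance within tol.

    Greedy first-fit pairing; it may reject a true match when several
    distances lie within tol of each other.
    """
    used = [False] * len(candidate_lib)
    for d in query_lib:
        for k, c in enumerate(candidate_lib):
            if not used[k] and abs(c - d) <= tol:
                used[k] = True
                break
        else:  # no unused partner found for this distance
            return False
    return True


def match_markers(scan_pts, work_pts, tol=0.05):
    """Map scanner-frame marker indices to workpiece-frame marker indices."""
    scan_libs = build_sublibraries(scan_pts)
    work_libs = build_sublibraries(work_pts)
    matches = {}
    for i, query in enumerate(scan_libs):  # step (1): pick one scanner marker
        if not query:
            continue
        d0 = query[0]
        # Step (2): workpiece markers owning at least one distance close to d0
        candidates = [w for w, lib in enumerate(work_libs)
                      if any(abs(c - d0) <= tol for c in lib)]
        # Step (3): keep candidates whose sub-library holds all query distances
        verified = [w for w in candidates if contains_all(work_libs[w], query, tol)]
        if len(verified) == 1:  # accept only unambiguous matches
            matches[i] = verified[0]
    return matches  # step (4) is the outer loop over all scanner markers


# Usage on a toy marker set (coordinates invented for illustration):
# the scanner sees workpiece markers 3, 0, 4, 1, rotated 90 degrees about z
# and shifted by (10, 20, 30).
workpiece_markers = [(0, 0, 0), (100, 0, 0), (0, 70, 0),
                     (40, 30, 25), (130, 90, 10), (-60, 45, 5)]
visible = [3, 0, 4, 1]
scanner_markers = [(-y + 10, x + 20, z + 30)
                   for x, y, z in (workpiece_markers[k] for k in visible)]
print(match_markers(scanner_markers, workpiece_markers))  # {0: 3, 1: 0, 2: 4, 3: 1}
```

Because distances are invariant under rigid motion, the check needs no prior alignment between the two frames; requiring a single verified candidate mirrors step (3), where one coinciding distance alone is not taken as proof of correspondence.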

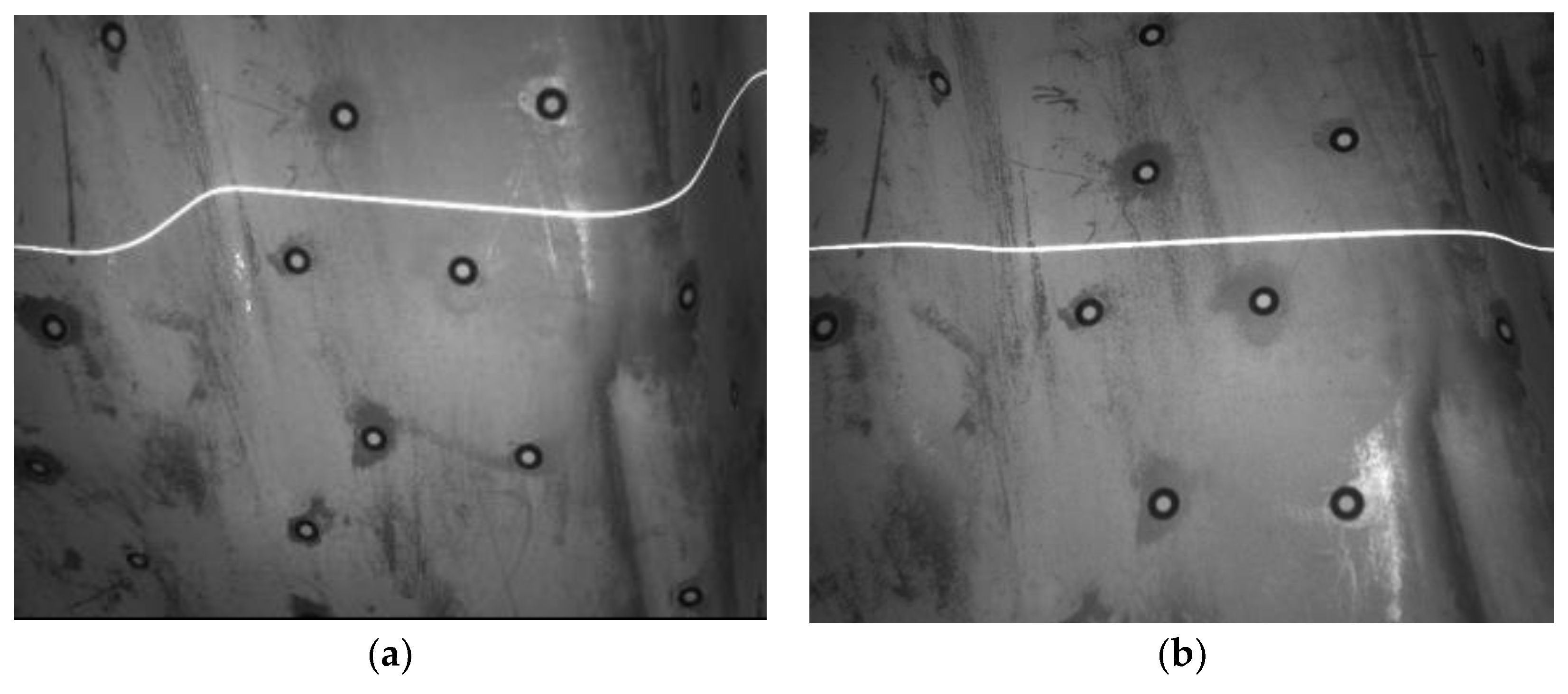

4.2. Obtaining the Contour of Workpiece in

5. Experiments and Results

5.1. System Hardware and Structure

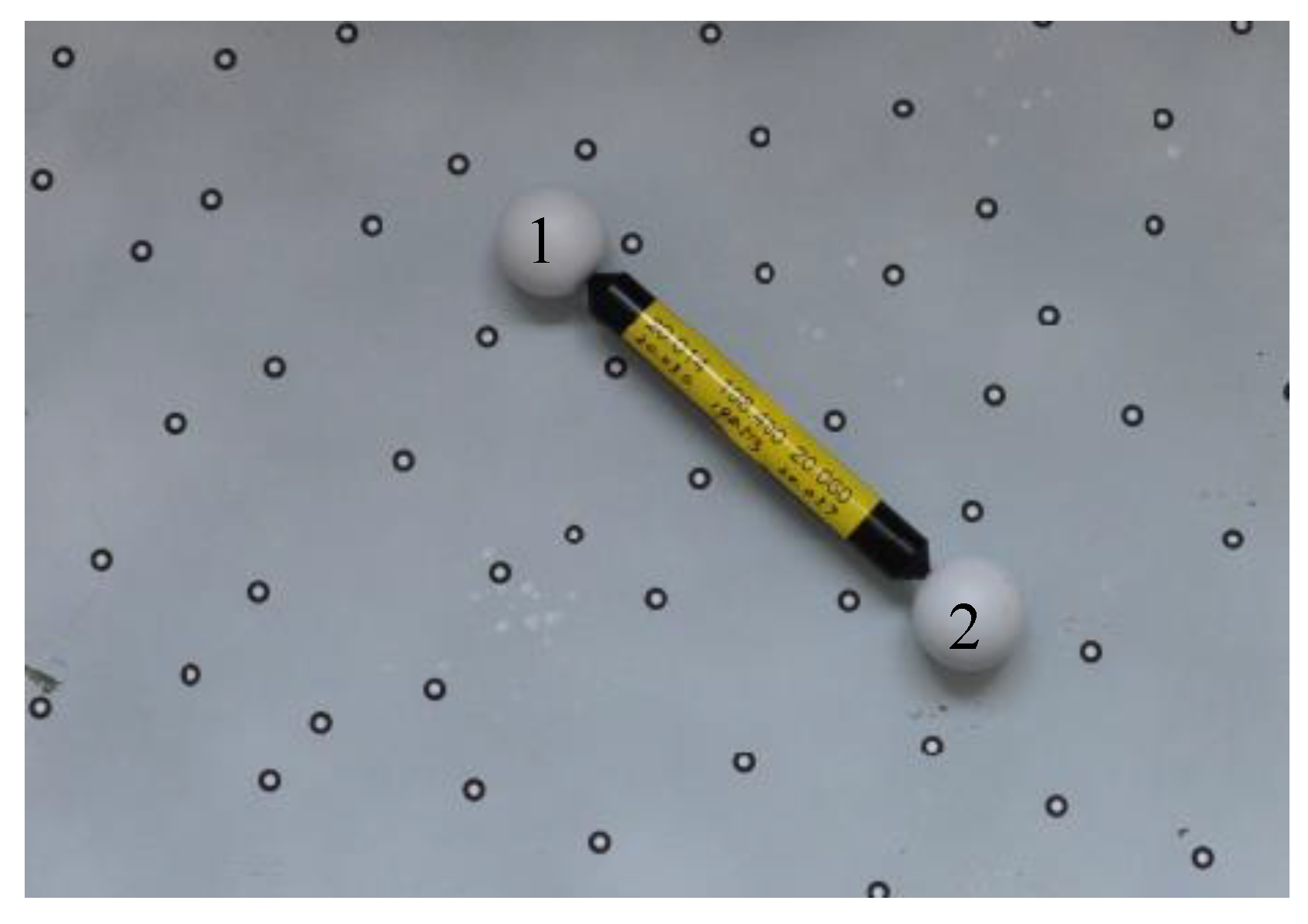

5.2. System Accuracy Test

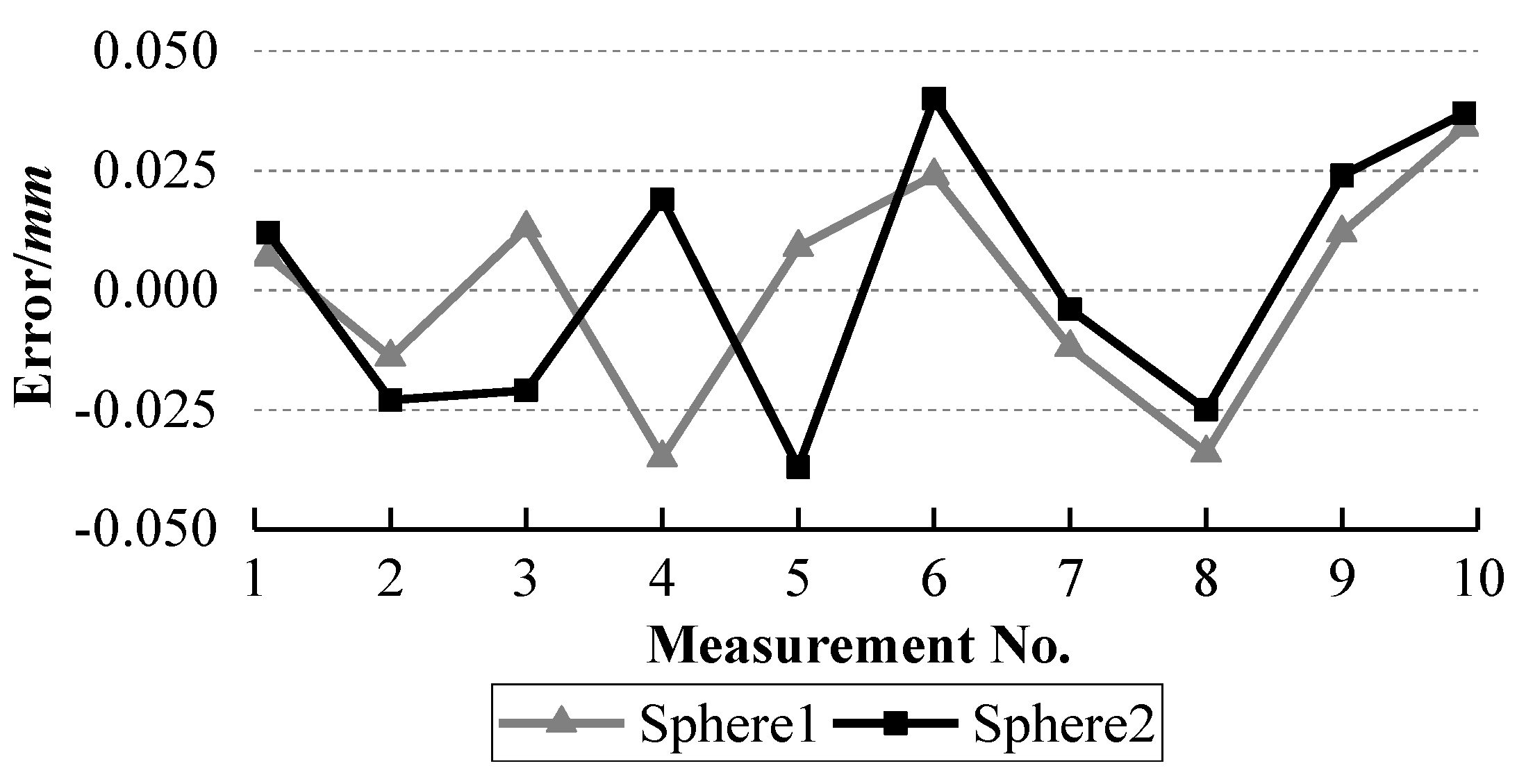

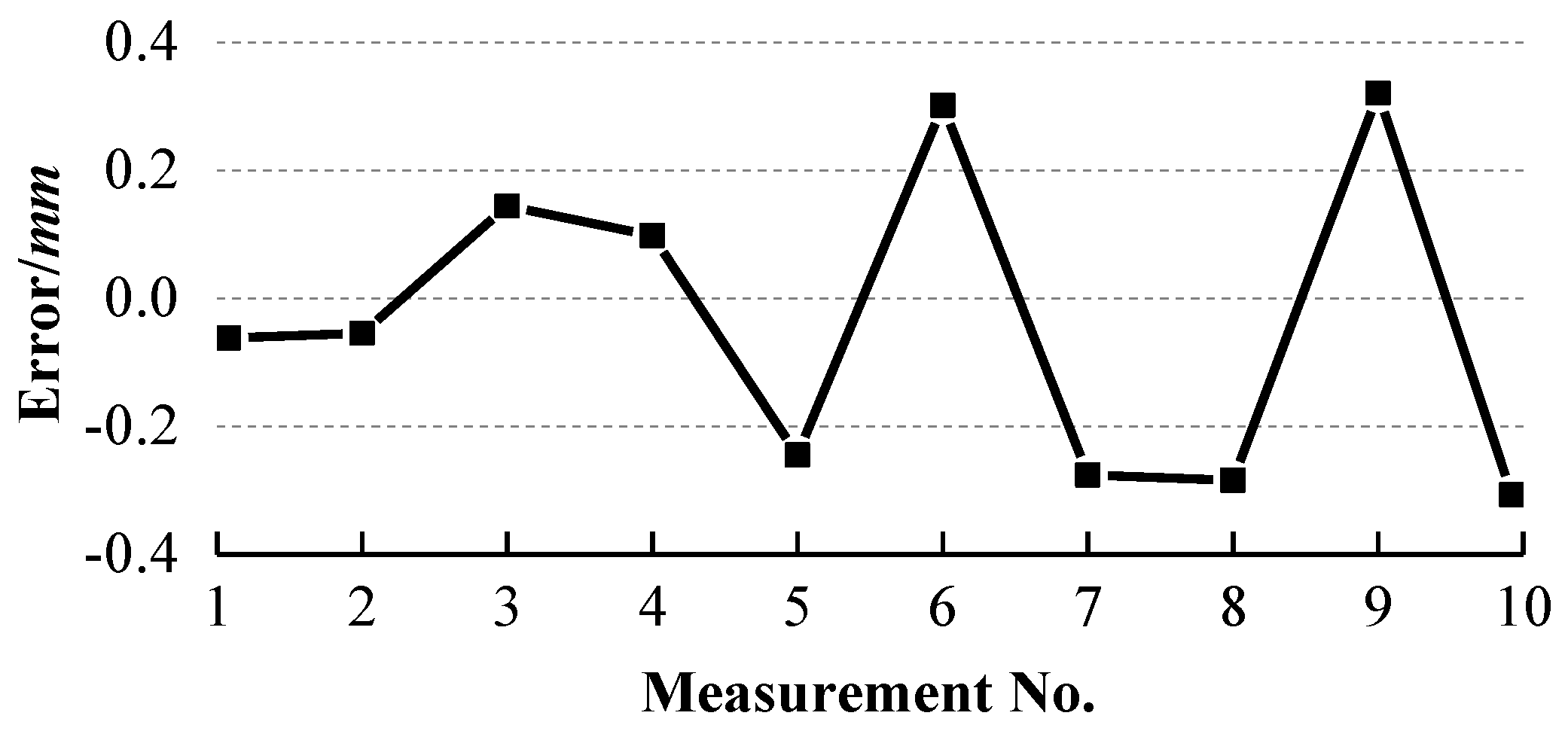

5.2.1. Systematic Error Test

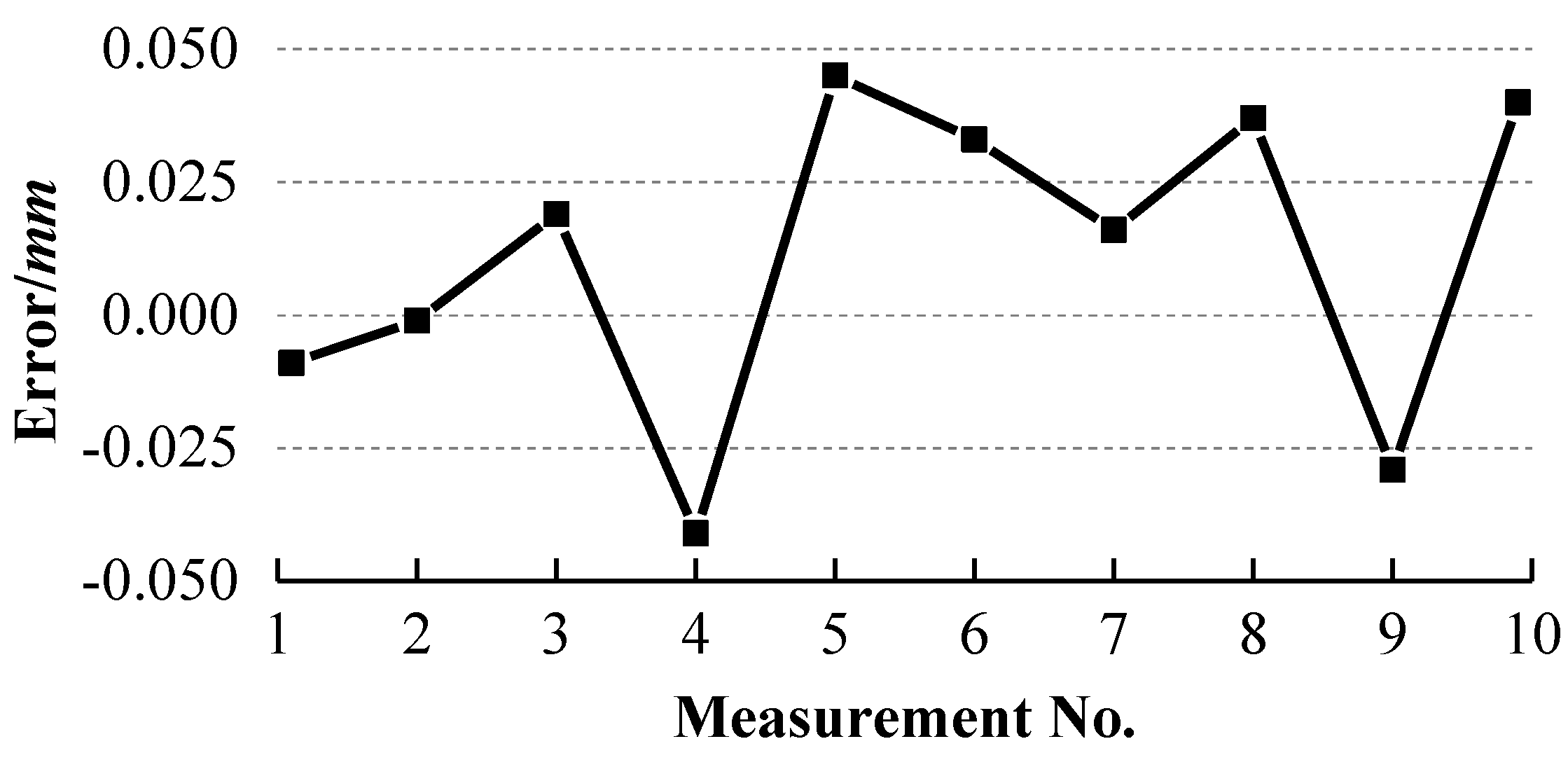

5.2.2. Random Error Test

5.3. Working Efficiency Test

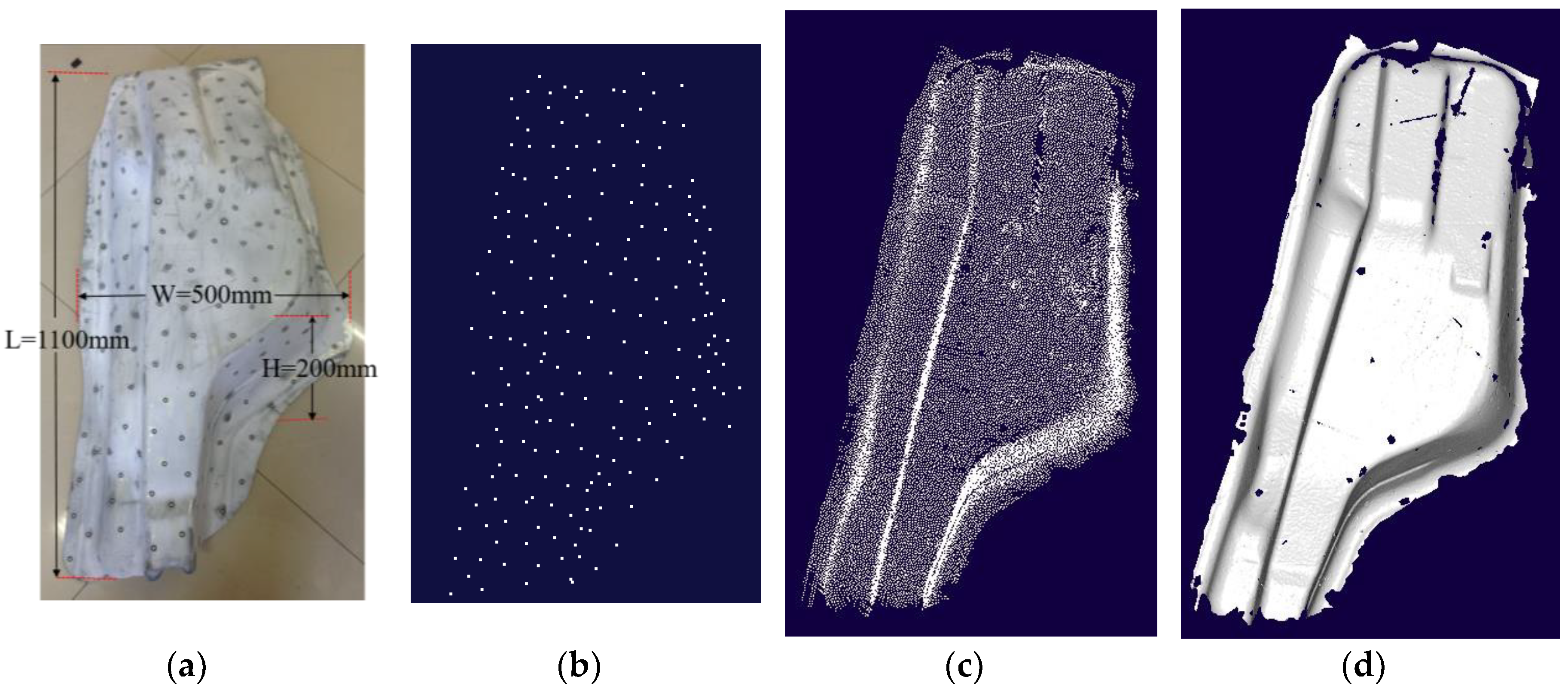

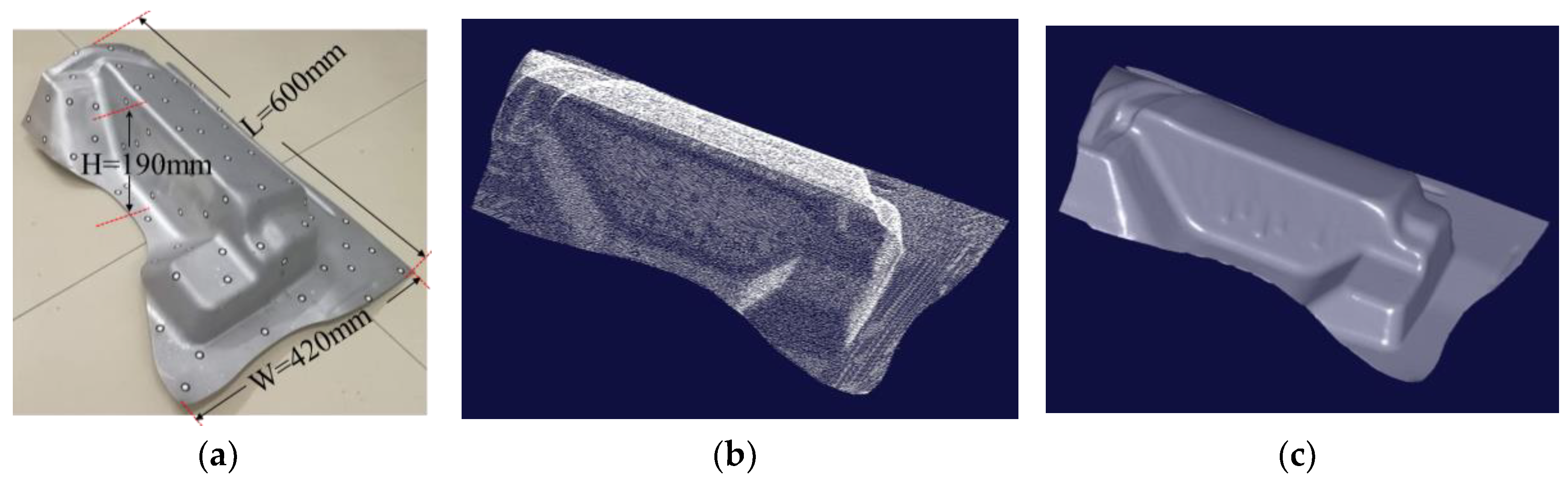

5.4. Application

5.5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Measurement No. | Radius 1 (mm) | Radius 2 (mm) | Distance (mm) |
|---|---|---|---|
| 1 | 20.037 | 20.069 | 198.504 |
| 2 | 20.016 | 20.034 | 198.512 |
| 3 | 20.043 | 20.036 | 198.532 |
| 4 | 19.995 | 20.076 | 198.472 |
| 5 | 20.039 | 20.020 | 198.558 |
| 6 | 20.054 | 20.097 | 198.546 |
| 7 | 20.018 | 20.053 | 198.529 |
| 8 | 19.996 | 20.032 | 198.550 |
| 9 | 20.042 | 20.081 | 198.481 |
| 10 | 20.064 | 20.094 | 198.553 |
| Average | 20.0304 | 20.0592 | 198.523 |
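The Average row can be checked directly from the ten measurements. The Python sketch below recomputes the column means and, as an addition not reported in the table, the sample standard deviation and range of each column, which are common indicators of repeatability; the helper name `summarize` is hypothetical. Recomputed this way, the mean distance is 198.5237 mm, which the table lists as 198.523 mm.

```python
import statistics

# Values transcribed from the table above (mm)
radius_1 = [20.037, 20.016, 20.043, 19.995, 20.039, 20.054, 20.018, 19.996, 20.042, 20.064]
radius_2 = [20.069, 20.034, 20.036, 20.076, 20.020, 20.097, 20.053, 20.032, 20.081, 20.094]
distance = [198.504, 198.512, 198.532, 198.472, 198.558, 198.546, 198.529, 198.550, 198.481, 198.553]


def summarize(values):
    """Return (mean, sample standard deviation, range) of repeated measurements."""
    return statistics.mean(values), statistics.stdev(values), max(values) - min(values)


for name, vals in [("Radius 1", radius_1), ("Radius 2", radius_2), ("Distance", distance)]:
    mean, sd, spread = summarize(vals)
    print(f"{name}: mean = {mean:.4f} mm, s = {sd:.4f} mm, range = {spread:.3f} mm")
```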

| Measurement No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Max distance 1 (mm) | 0.235 | 0.222 | 0.216 | 0.245 | 0.239 | 0.242 | 0.229 | 0.219 | 0.233 | 0.240 |
| Max distance 2 (mm) | 0.252 | 0.224 | 0.254 | 0.200 | 0.240 | 0.232 | 0.253 | 0.223 | 0.241 | 0.235 |

| Measurement No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Max distance 1 (mm) | 0.081 | 0.095 | 0.107 | 0.089 | 0.139 | 0.127 | 0.151 | 0.090 | 0.142 | 0.149 |
| Max distance 2 (mm) | 0.117 | 0.097 | 0.119 | 0.143 | 0.092 | 0.105 | 0.126 | 0.134 | 0.095 | 0.113 |
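The two tables of maximum distances can be condensed in the same way. This Python sketch reports the worst case and the mean of each row; the labels "first table" and "second table" and the helper `worst_and_mean` are hypothetical, since the tables carry no captions, and the sketch makes no assumption about what each distance measures.

```python
import statistics

# Rows transcribed from the two tables of maximum distances (mm);
# the table labels are hypothetical placeholders.
max_distance_tables = {
    "first table": {
        "Max distance 1": [0.235, 0.222, 0.216, 0.245, 0.239, 0.242, 0.229, 0.219, 0.233, 0.240],
        "Max distance 2": [0.252, 0.224, 0.254, 0.200, 0.240, 0.232, 0.253, 0.223, 0.241, 0.235],
    },
    "second table": {
        "Max distance 1": [0.081, 0.095, 0.107, 0.089, 0.139, 0.127, 0.151, 0.090, 0.142, 0.149],
        "Max distance 2": [0.117, 0.097, 0.119, 0.143, 0.092, 0.105, 0.126, 0.134, 0.095, 0.113],
    },
}


def worst_and_mean(values):
    """Return the largest value and the arithmetic mean of one table row."""
    return max(values), statistics.mean(values)


for table, rows in max_distance_tables.items():
    for row, values in rows.items():
        worst, mean = worst_and_mean(values)
        print(f"{table}, {row}: worst = {worst:.3f} mm, mean = {mean:.4f} mm")
```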

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, X.; Xie, Z.; Wang, K.; Zhou, L. Research on a Handheld 3D Laser Scanning System for Measuring Large-Sized Objects. Sensors 2018, 18, 3567. https://doi.org/10.3390/s18103567