Hybrid System for Engagement Recognition During Cognitive Tasks Using a CFS + KNN Algorithm

Abstract

1. Introduction

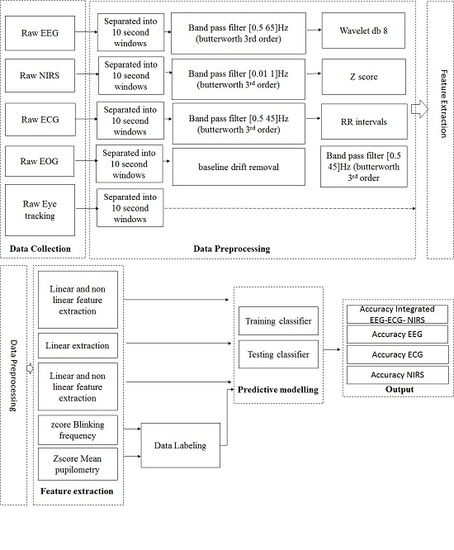

2. Systems Design Section

2.1. Participants

2.2. Engagement Tasks

2.2.1. BDS (Backward Digit Span)

2.2.2. FDS (Forward Digit Span)

2.2.3. Arithmetic

2.3. Software and Apparatus

2.3.1. Eye Tracking

2.3.2. Electrophysiology

2.3.3. Near-Infrared Spectroscopy

3. Analysis for Engagement Recognition

3.1. Data Preprocessing

3.1.1. Pupillometry

$Z_{\text{pupillometry}}$ = Z score of pupil size

$\mu_{\text{baseline}}$ = baseline mean

$\mu_{\text{ptask}}$ = mean pupil size during the encoding time

$sd_{\text{ptask}}$ = standard deviation of pupil size during task execution
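The equation these symbols belong to is not reproduced in this section. Read together, the definitions suggest a baseline-corrected Z score of the following form. This is a reconstruction from the definitions alone and should be treated as an assumption, not as the published equation:

$$
Z_{\text{pupillometry}} = \frac{\mu_{\text{ptask}} - \mu_{\text{baseline}}}{sd_{\text{ptask}}}
$$

Under this reading, a negative value means the mean pupil size during encoding was below the baseline mean.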

3.1.2. Blinking Rates

$Z_{\text{blinkrate}}$ = Z score of blink rate

$\mu_{\text{blinkratetask}}$ = mean blink rate during the encoding time

$\mu_{\text{baseline}}$ = baseline mean

$sd_{\text{blinkrate}}$ = standard deviation of blink rate during task execution
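The definitions above can be turned into a small computation. The following is a minimal sketch that assumes the Z score has the form (task mean − baseline mean) / task standard deviation and that the sample standard deviation (n − 1 denominator) is used; neither choice is confirmed by the text. The function name and the blink-rate values are hypothetical.

```python
from statistics import mean, stdev


def baseline_z_score(task_samples, baseline_mean):
    """Z score of the task-period mean relative to a baseline mean.

    Assumed form: (mean(task) - baseline_mean) / sd(task).
    """
    if len(task_samples) < 2:
        raise ValueError("need at least two task samples to estimate the SD")
    sd_task = stdev(task_samples)  # sample SD (n - 1); an assumption
    if sd_task == 0:
        raise ValueError("task samples have zero variance")
    return (mean(task_samples) - baseline_mean) / sd_task


# Hypothetical blink rates (blinks per minute) during encoding.
task_blink_rates = [12.0, 15.0, 18.0]
z_blink = baseline_z_score(task_blink_rates, baseline_mean=18.0)
print(round(z_blink, 3))
```

The same function applies to pupil size by passing pupil-size samples and the pupil baseline mean.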

3.1.3. Engagement Recognition

Data Labeling for Training Data

3.2. Feature Extraction

3.3. Predictive Modeling

- $M_S$ = heuristic “merit” of a feature subset S containing k features.

- $r_{cf}$ = mean feature-class correlation.

- $r_{ff}$ = average feature-feature intercorrelation.

- k = number of features.
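These symbols match the standard correlation-based feature selection (CFS) merit heuristic, in which the merit of a subset is k·r_cf / sqrt(k + k(k − 1)·r_ff). The sketch below evaluates that expression for given correlation values; it assumes the standard form applies here, and the function name and example numbers are illustrative only.

```python
from math import sqrt


def cfs_merit(k, r_cf, r_ff):
    """CFS merit of a subset with k features.

    k    : number of features in the subset
    r_cf : mean feature-class correlation
    r_ff : average feature-feature intercorrelation
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    denominator_sq = k + k * (k - 1) * r_ff
    if denominator_sq <= 0:
        raise ValueError("k + k(k - 1) * r_ff must be positive")
    return (k * r_cf) / sqrt(denominator_sq)


# Illustrative values: uncorrelated features raise the merit,
# fully redundant features add nothing over a single feature.
merit_independent = cfs_merit(k=4, r_cf=0.5, r_ff=0.0)
merit_redundant = cfs_merit(k=4, r_cf=0.5, r_ff=1.0)
print(merit_independent, merit_redundant)
```

The two calls illustrate why the heuristic favors subsets whose features correlate with the class but not with each other.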

4. Results

4.1. Classification Algorithm

4.2. Comparison of Stand-Alone and Hybrid Systems

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Point | Pupil | Blinking |
|---|---|---|
| +1 | Z score ≤ 0 | Z score ≥ 0 |
| −1 | Z score > 0 | Z score < 0 |
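The point table can be read as two threshold rules on the Z scores. A minimal sketch of those rules follows; the function names are hypothetical, and because the table does not say how the two points are combined into a final engagement label, the sketch stops at the per-signal points.

```python
def pupil_point(z_pupil):
    """+1 when the pupil Z score is <= 0, otherwise -1 (per the table)."""
    return 1 if z_pupil <= 0 else -1


def blink_point(z_blink):
    """+1 when the blink-rate Z score is >= 0, otherwise -1 (per the table)."""
    return 1 if z_blink >= 0 else -1


# Hypothetical Z scores for one trial.
points = (pupil_point(-0.4), blink_point(0.7))
print(points)
```

Note that a Z score of exactly 0 earns +1 for both signals under the table's inequalities.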

| Feature Type | Extracted Features |
|---|---|
| Time-frequency domain features | |
| Nonlinear domain feature | |

| Engagement Level | Sample Numbers | Weight | Percentage |
|---|---|---|---|
| Low | 763 | 763 | 38% |
| High | 1250 | 1250 | 62% |

| Engagement Level | Sample Numbers | Weight | Percentage |
|---|---|---|---|
| Low | 763 | 1006 | 50% |
| High | 1250 | 1006 | 50% |
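The two weight tables are consistent with reweighting instances so that each class carries the same total weight while the overall total (763 + 1250 = 2013) is preserved. The sketch below shows that arithmetic under this assumption; the exact balancing filter used is not stated here, and the function name is hypothetical.

```python
def balanced_instance_weights(class_counts):
    """Per-instance weight for each class so all classes have equal total weight.

    The sum of weights over all instances stays equal to the sample count.
    """
    if any(n <= 0 for n in class_counts.values()):
        raise ValueError("every class needs at least one sample")
    total = sum(class_counts.values())
    target_per_class = total / len(class_counts)
    return {label: target_per_class / n for label, n in class_counts.items()}


counts = {"Low": 763, "High": 1250}
weights = balanced_instance_weights(counts)
class_totals = {label: weights[label] * n for label, n in counts.items()}
print(weights)
print(class_totals)
```

Each class total comes out at 1006.5, which the table appears to report as 1006.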

| | Balancing + CFS + KNN | KNN | Balancing + KNN |
|---|---|---|---|
| Accuracy | 71.65 ± 0.16% | 71.17 ± 0.16% | 70.84 ± 0.17% |

| | CFS + SVM | CFS + KNN (k = 1) | CFS + KNN (k = 3) | CFS + KNN (k = 9) |
|---|---|---|---|---|
| Accuracy | 70.78 ± 0.19% | 65.64 ± 0.14% | 70.34 ± 0.16% | 71.65 ± 0.16% |
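The role of k in the comparison above can be illustrated with a bare-bones k-nearest-neighbour classifier. This is a generic sketch that assumes Euclidean distance and unweighted majority voting; the distance metric and voting scheme actually used are not stated in this section, and the toy feature vectors are invented for illustration.

```python
from collections import Counter
from math import dist


def knn_predict(train_X, train_y, query, k=9):
    """Label a query point by majority vote among its k nearest neighbours."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training samples")
    # Sort training samples by Euclidean distance to the query and keep k.
    neighbours = sorted(zip(train_X, train_y),
                        key=lambda pair: dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    # On a tied vote, the label seen first among the nearest neighbours wins.
    return votes.most_common(1)[0][0]


# Toy two-feature data, invented for illustration.
train_X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1),
           (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
train_y = ["Low", "Low", "Low", "High", "High", "High"]

pred_a = knn_predict(train_X, train_y, (0.15, 0.15), k=3)
pred_b = knn_predict(train_X, train_y, (1.0, 0.95), k=3)
print(pred_a, pred_b)
```

With k = 1 the prediction depends on a single neighbour, which makes it more sensitive to noisy samples than larger values of k.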

| System | Class | TP Rate | FP Rate | Precision | Recall | F-Measure | ROC Area |
|---|---|---|---|---|---|---|---|
| Hybrid | Low | 0.788 | 0.284 | 0.735 | 0.788 | 0.76 | 0.811 |
| | High | 0.716 | 0.212 | 0.771 | 0.716 | 0.743 | 0.811 |
| EEG | Low | 0.748 | 0.331 | 0.693 | 0.748 | 0.72 | 0.763 |
| | High | 0.669 | 0.252 | 0.727 | 0.669 | 0.697 | 0.761 |
| ECG | Low | 0.775 | 0.282 | 0.733 | 0.775 | 0.753 | 0.809 |
| | High | 0.718 | 0.225 | 0.761 | 0.718 | 0.739 | 0.81 |
| NIRS | Low | 0.701 | 0.302 | 0.699 | 0.701 | 0.7 | 0.764 |
| | High | 0.698 | 0.299 | 0.7 | 0.698 | 0.699 | 0.764 |
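The columns of this table are related by standard definitions, which allows a quick consistency check. The sketch below recomputes the F-measure as the harmonic mean of precision and recall and uses the fact that, with two classes, the FP rate of one class equals one minus the TP rate of the other. The values are the hybrid rows of the table; small differences are expected because the inputs are already rounded.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hybrid-system rows copied from the table above.
hybrid = {
    "Low": {"tp_rate": 0.788, "fp_rate": 0.284, "precision": 0.735},
    "High": {"tp_rate": 0.716, "fp_rate": 0.212, "precision": 0.771},
}

f_low = f_measure(hybrid["Low"]["precision"], hybrid["Low"]["tp_rate"])
f_high = f_measure(hybrid["High"]["precision"], hybrid["High"]["tp_rate"])

# Two-class identity: FP rate of "Low" = 1 - TP rate of "High".
fp_low_check = 1 - hybrid["High"]["tp_rate"]

print(round(f_low, 3), round(f_high, 3), round(fp_low_check, 3))
```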

| | Hybrid | EEG | ECG | NIRS |
|---|---|---|---|---|
| Accuracy | 71.65 ± 0.16% | 65.73 ± 0.17% | 67.44 ± 0.19% | 66.83 ± 0.17% |

| Participant | SVM | KNN |
|---|---|---|
| S1 | 74.36% | 75.64% |
| S2 | 88.46% | 85.90% |
| S3 | 61.54% | 61.54% |
| S4 | 34.62% | 64.10% |
| S5 | 51.28% | 46.15% |
| S6 | 96.15% | 96.15% |
| S7 | 83.33% | 83.33% |
| S8 | 78.21% | 67.95% |
| S9 | 44.87% | 50.00% |
| S10 | 70.51% | 62.82% |
| S11 | 92.31% | 91.03% |

| System | Features Selected |
|---|---|
| Hybrid | activity (Hjorth parameter) (Fz), power density integral gamma (Fz), relative alpha (Fz), relative beta (Fz), activity (Pz), power density integral beta (Pz), relative power gamma (Pz), complexity ECG (Hjorth parameter), mobility ECG (Hjorth parameter), activity ECG (Hjorth parameter), mobility total (Fp1), activity total (Fp1), complexity deoxy (Fp1), mobility deoxy (Fp1), activity deoxy (Fp1), mobility total (Fp2), complexity deoxy (Fp2), mobility deoxy (Fp2) |
| EEG | activity (Fz), alpha density (Fz), theta density (Fz), gamma density (Fz), Kolmogorov complexity (Fz), relative power, relative alpha (Fz), relative beta (Fz), relative gamma (Fz), activity (Pz), alpha density (Pz), beta density (Pz), gamma density (Pz), relative alpha (Pz), relative beta (Pz), relative gamma (Pz) |
| ECG | activity, mobility, complexity (Hjorth parameters) |
| NIRS | Hjorth parameters: activity [total (Fp1), deoxy (Fp1), deoxy (Fp2)], mobility [total (Fp1), deoxy (Fp1), total (Fp2), deoxy (Fp2)], complexity [total (Fp1), deoxy (Fp1), deoxy (Fp2)] |

| System | Class | MCC | PRC |
|---|---|---|---|
| Hybrid | Low | 0.505 | 0.781 |
| | High | 0.505 | 0.798 |
| EEG | Low | 0.418 | 0.716 |
| | High | 0.418 | 0.758 |
| ECG | Low | 0.493 | 0.782 |
| | High | 0.493 | 0.795 |
| NIRS | Low | 0.399 | 0.731 |
| | High | 0.399 | 0.751 |
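The MCC column can be cross-checked against the per-class TP rates reported for the same systems. The sketch below computes the Matthews correlation coefficient from a 2×2 confusion matrix and, as an assumption, treats the two classes as equally weighted (as after balancing), so the TP rates can be used directly as matrix entries. "Low" is arbitrarily taken as the positive class.

```python
from math import sqrt


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient for a binary confusion matrix."""
    denominator = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        return 0.0  # common convention when a row or column is empty
    return (tp * tn - fp * fn) / denominator


# Hybrid system, equal class weights assumed.
tp_rate_low, tp_rate_high = 0.788, 0.716
tp, fn = tp_rate_low, 1 - tp_rate_low      # "Low" samples
tn, fp = tp_rate_high, 1 - tp_rate_high    # "High" samples

hybrid_mcc = mcc(tp, fp, fn, tn)
print(round(hybrid_mcc, 3))
```

Under that assumption the result agrees with the tabulated hybrid value to three decimals, and swapping which class is positive leaves the MCC unchanged, which is why both rows of each system share one value.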

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zennifa, F.; Ageno, S.; Hatano, S.; Iramina, K. Hybrid System for Engagement Recognition During Cognitive Tasks Using a CFS + KNN Algorithm. Sensors 2018, 18, 3691. https://doi.org/10.3390/s18113691
