Yield Visualization Based on Farm Work Information Measured by Smart Devices

Abstract

1. Introduction

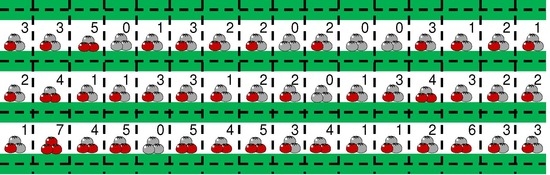

1.1. Background and Objective

1.2. Related Work with Farm Work Information

1.3. Experimental Field and System

2. Estimation of the Farmer’s Position

2.1. Overview

- Compared with other sensors such as cameras, beacons are easy to maintain: the battery in each beacon, consisting of two dry cells, lasts for about a year, and the beacons themselves are inexpensive.

- Compared with pedestrian dead reckoning (PDR), which also requires only a simple system, the beacon-based system is almost equally simple, and its position estimates are more accurate because the estimation errors do not accumulate over time.

- Unlike GPS signals, the beacons’ radio waves are less affected by the greenhouse, so the position estimates obtained from them are more accurate.
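The accumulation argument in the second point can be illustrated with a toy simulation. This is not the authors’ estimation method: it assumes a straight walk along a greenhouse aisle, a constant heading bias as the only PDR error source, and a fixed error bound for every beacon-based estimate. The stride length, bias, and error bound are made-up values chosen only for illustration.

```python
import math

# All three constants are illustrative assumptions, not measured values.
STEP_LENGTH_M = 0.7      # assumed stride length
HEADING_BIAS_DEG = 3.0   # assumed constant heading error of the PDR sensors
BEACON_ERROR_M = 1.0     # assumed error bound of a single beacon-based estimate


def pdr_error(num_steps: int) -> float:
    """Position error of PDR after num_steps along a straight aisle.

    The true path heads along +x. The PDR estimate adds the heading bias to
    every step, and each new position is built on the previous estimate, so
    the per-step errors are summed and the total error grows with distance.
    """
    bias = math.radians(HEADING_BIAS_DEG)
    true_x = 0.0
    est_x, est_y = 0.0, 0.0
    for _ in range(num_steps):
        true_x += STEP_LENGTH_M
        est_x += STEP_LENGTH_M * math.cos(bias)
        est_y += STEP_LENGTH_M * math.sin(bias)
    return math.hypot(est_x - true_x, est_y)


def beacon_error(num_steps: int) -> float:
    """Error of a beacon-based estimate after num_steps.

    Each estimate is computed from the currently received signals only, so
    under the assumed bound its error does not depend on num_steps.
    """
    return BEACON_ERROR_M


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"{n:5d} steps: PDR error {pdr_error(n):6.2f} m, "
              f"beacon error {beacon_error(n):.2f} m")
```

Under these assumptions the PDR error grows linearly with the number of steps, whereas the beacon error stays at its bound however long the farmer walks.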

2.2. Estimation Method

2.3. Experiments and Discussion

3. Farmer’s Action Recognition

3.1. Overview

3.2. Recognition Method

3.2.1. Feature Representation

3.2.2. Action Recognition

- The representative time of each harvesting action is determined by finding the local maxima in the sequence that are greater than a threshold.

- If the difference between a representative time and the following one is smaller than a given number of frames, the latter is ignored.

- Based on each such local maximum, a harvesting action is determined, indicating that a farm laborer harvests (or does not harvest) a tomato at discrete time m.
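Taken together, these steps amount to thresholded peak picking with a minimum separation between peaks. The following is a minimal sketch, assuming the sequence is a plain list of per-frame scores. The threshold and the frame interval, whose symbols are missing from this text, appear as the hypothetical parameters `threshold` and `min_gap`, and the function name `detect_harvest_times` is also hypothetical.

```python
from typing import List, Sequence


def detect_harvest_times(scores: Sequence[float], threshold: float,
                         min_gap: int) -> List[int]:
    """Return representative times m of harvesting actions (hypothetical helper).

    scores    -- per-frame sequence in which harvesting actions appear as peaks
    threshold -- only local maxima strictly greater than this value count
    min_gap   -- a local maximum fewer than this many frames after the last
                 representative time is ignored
    """
    times: List[int] = []
    # Frames 0 and len-1 are never treated as local maxima. The strict "<" on
    # the left means only the first frame of a flat peak is taken.
    for m in range(1, len(scores) - 1):
        is_local_max = scores[m - 1] < scores[m] >= scores[m + 1]
        if not is_local_max or scores[m] <= threshold:
            continue
        if times and m - times[-1] < min_gap:
            continue  # too close to the previous representative time
        times.append(m)
    return times


if __name__ == "__main__":
    scores = [0.1, 0.3, 0.9, 0.4, 0.2, 0.8, 0.85, 0.3, 0.1, 0.1, 0.7, 0.2]
    print(detect_harvest_times(scores, threshold=0.5, min_gap=5))  # [2, 10]
```

In the usage example the peak at frame 6 exceeds the threshold but lies only four frames after the representative time at frame 2, so it is ignored. The sketch compares each candidate with the last *accepted* representative time; comparing with the immediately preceding candidate instead is another possible reading of the rule.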

3.3. Results and Discussion

4. Harvesting Map

4.1. Overview

4.2. Results and Interview

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hashimoto, Y.; Arita, D.; Shimada, A.; Yoshinaga, T.; Okayasu, T.; Uchiyama, H.; Taniguchi, R.-I. Yield Visualization Based on Farm Work Information Measured by Smart Devices. Sensors 2018, 18, 3906. https://doi.org/10.3390/s18113906
