Using Unmanned Aerial Vehicles in Postfire Vegetation Survey Campaigns through Large and Heterogeneous Areas: Opportunities and Challenges

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. UAV Platform and Multispectral Camera

2.3. UAV Survey Campaign

2.4. Image Data Processing

2.5. WorldView-2 High Spatial Resolution Satellite Imagery and Image Comparison Statistical Analysis

3. Results

3.1. Raw Imagery Dataset Quality

3.2. Multispectral Mosaic Processing and Product Quality

3.3. Comparison of the Spatial Information Provided by UAV and WorldView-2 Imagery

4. Discussion

5. Conclusions

- (1)

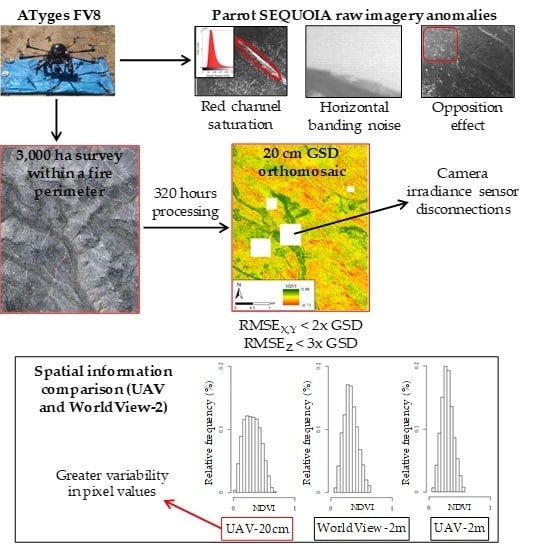

- The raw imagery acquired by the Parrot SEQUOIA multispectral camera presented some undesirable anomalies such as horizontal banding noise and non-homogeneous radiometry across the image. Moreover, the irradiance sensor disconnections induced some radiometric anomalies across a small area of the multispectral mosaic that had to be discarded.

- (2)

- The 16-bit imagery acquired on the UAV flights of the 3000 ha survey framework represents a large volume of data before processing it into a multispectral orthomosaic due to its ultra-high spatial resolution and the large size of the surveyed area. Nevertheless, this spatial resolution, which cannot be achieved with satellite platforms, could be crucial for developing spatial products to be used in post-fire management decision-making.

- (3)

- Data processing was very labor-intensive, taking about 320 h to obtain the final multispectral orthomosaic. Due to the large imagery dataset generated on a UAV survey of a large area, the dataset processing must be subdivided regardless of the available processing capability. The obtained geospatial accuracy of the UAV multispectral orthomosaic was high (RMSEX,Y < 30 cm and RMSEZ < 55 cm) regarding the large extension of the surveyed area and the spatial resolution of the dataset.

- (4)

- The spatial information provided by the ultra-high spatial resolution UAV multispectral orthomosaic was not redundant in these large and heterogeneous burned areas in comparison with high spatial resolution satellite imagery such as that provided by WorldView-2. The UAV orthomosaic could therefore improve the analysis and interpretation of fine-scale ground patterns.
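The geospatial accuracy figures quoted in conclusion (3) can be illustrated with a minimal sketch of how horizontal and vertical RMSE are commonly derived from check points. The function names, the coordinates, and the choice to combine X and Y into a single planar residual are assumptions made for illustration; the text above does not state how the authors computed the values.

```python
import math


def rmse(residuals):
    """Root-mean-square of a sequence of residuals (same units as the input)."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def orthomosaic_rmse(measured, reference):
    """Return (RMSE_XY, RMSE_Z) for paired (x, y, z) check points in metres.

    Assumption: the horizontal residual is the planar distance between the
    point measured on the orthomosaic and its reference position.
    """
    horizontal = [
        math.hypot(mx - rx, my - ry)
        for (mx, my, _), (rx, ry, _) in zip(measured, reference)
    ]
    vertical = [mz - rz for (_, _, mz), (_, _, rz) in zip(measured, reference)]
    return rmse(horizontal), rmse(vertical)


# Hypothetical check points (metres), NOT values from the survey.
measured = [(100.10, 200.00, 50.30), (150.00, 249.80, 60.00), (199.90, 300.10, 69.60)]
reference = [(100.00, 200.00, 50.00), (150.00, 250.00, 60.00), (200.00, 300.00, 70.00)]

rmse_xy, rmse_z = orthomosaic_rmse(measured, reference)
print(f"RMSE_XY = {rmse_xy:.3f} m, RMSE_Z = {rmse_z:.3f} m")

# Compare against the thresholds reported in the conclusions.
print("within reported bounds:", rmse_xy < 0.30 and rmse_z < 0.55)
```

Reporting horizontal and vertical error separately, as the conclusions do, is useful because photogrammetric products typically show larger errors in elevation than in planimetry.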

Acknowledgments

Author Contributions

Conflicts of Interest


| | WV-2 Green | WV-2 Red | WV-2 Red Edge | WV-2 NIR1 |
|---|---|---|---|---|
| UAV (20 cm) green | 0.6832 | | | |
| UAV (20 cm) red | | 0.7426 | | |
| UAV (20 cm) red edge | | | 0.5873 | |
| UAV (20 cm) NIR | | | | 0.6312 |
| UAV (1 m) green | 0.7385 | | | |
| UAV (1 m) red | | 0.7649 | | |
| UAV (1 m) red edge | | | 0.6134 | |
| UAV (1 m) NIR | | | | 0.6476 |
| UAV (2 m) green | 0.7871 | | | |
| UAV (2 m) red | | 0.7968 | | |
| UAV (2 m) red edge | | | 0.6623 | |
| UAV (2 m) NIR | | | | 0.6942 |
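A band-by-band comparison of this kind can be sketched as follows, assuming the UAV band is aggregated to a coarser pixel size by block averaging and then compared with the matching WorldView-2 band using a Pearson correlation coefficient. Both the aggregation method and the choice of statistic are assumptions for illustration (the table does not name them), and the reflectance grids are made up.

```python
import math


def block_mean(grid, factor):
    """Average non-overlapping factor x factor blocks of a 2-D list of pixels."""
    rows, cols = len(grid) // factor, len(grid[0]) // factor
    return [
        [
            sum(
                grid[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ) / factor ** 2
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def pearson(a, b):
    """Pearson correlation between two equally sized 2-D pixel grids."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical reflectance grids: a fine UAV band (4 x 4 pixels) and a
# co-registered satellite band covering the same ground with 2 x 2 pixels.
uav_fine = [
    [0.10, 0.12, 0.30, 0.32],
    [0.11, 0.13, 0.31, 0.33],
    [0.20, 0.22, 0.40, 0.42],
    [0.21, 0.23, 0.41, 0.43],
]
satellite = [
    [0.12, 0.30],
    [0.22, 0.44],
]

uav_coarse = block_mean(uav_fine, 2)  # bring the UAV band to the satellite grid
r = pearson(uav_coarse, satellite)
print(f"correlation at matched pixel size: {r:.4f}")
```

The sketch presumes both rasters are already co-registered and cover exactly the same footprint; with real imagery that alignment step would come first.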

| | Green | Red | Red Edge | NIR |
|---|---|---|---|---|
| UAV 20 cm | 0.00083 | 0.00170 | 0.00134 | 0.00246 |
| UAV 1 m | 0.00079 | 0.00161 | 0.00122 | 0.00225 |
| UAV 2 m | 0.00071 | 0.00146 | 0.00101 | 0.00206 |
| WV-2 | 0.00068 | 0.00132 | 0.00112 | 0.00211 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fernández-Guisuraga, J.M.; Sanz-Ablanedo, E.; Suárez-Seoane, S.; Calvo, L. Using Unmanned Aerial Vehicles in Postfire Vegetation Survey Campaigns through Large and Heterogeneous Areas: Opportunities and Challenges. Sensors 2018, 18, 586. https://doi.org/10.3390/s18020586