Fusion of an Ensemble of Augmented Image Detectors for Robust Object Detection

Abstract

1. Introduction

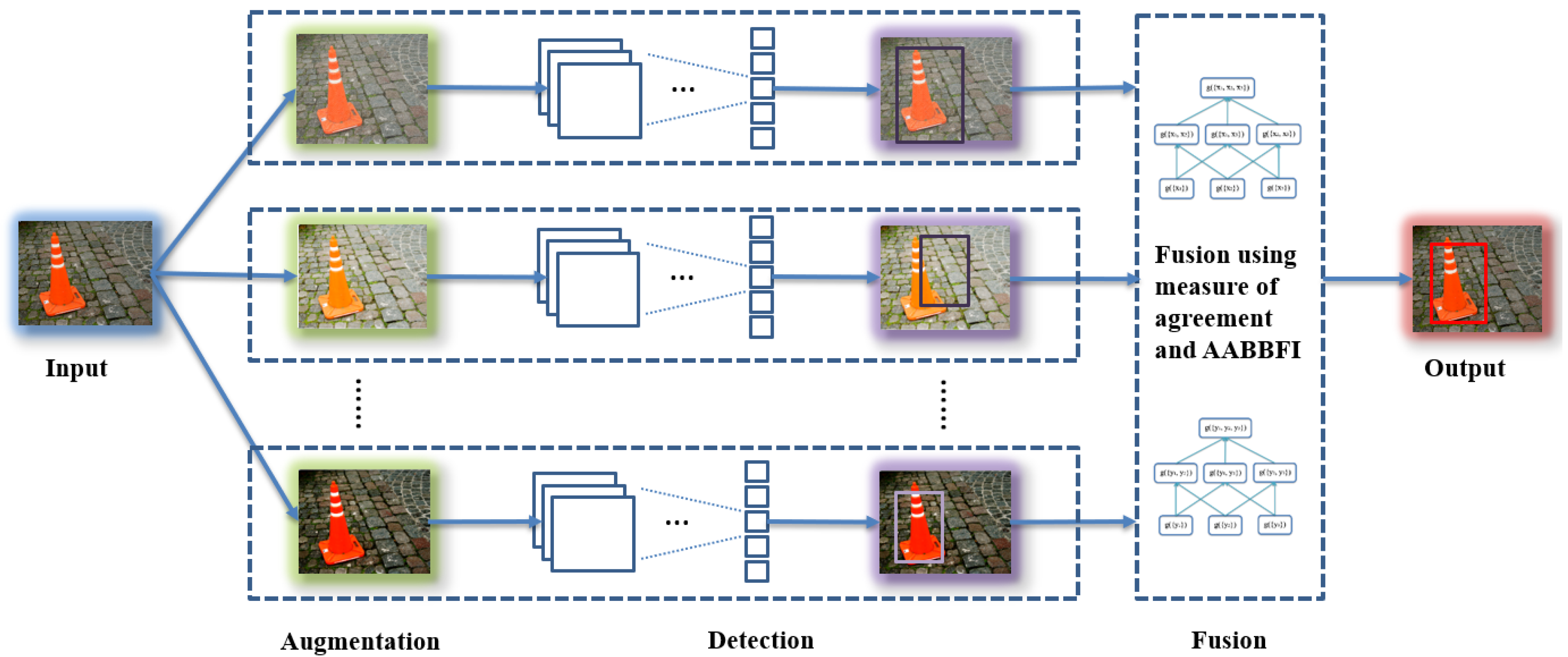

- A detection fusion system is proposed that applies online image augmentation before the detection stage (i.e., at test time) and fuses the resulting detections afterward to increase detection accuracy and robustness.

- The proposed fusion method is a computationally intelligent approach based on a dynamic analysis of the agreement among inputs in each case.

- The proposed system produces more accurate detections in real time, which supports the development of an accurate and fast object detection subsystem for robust ADAS.

- The Choquet Integral (ChI) is extended from a one-dimensional interval to a two-dimensional axis-aligned bounding box (AABB).
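The augment, detect, and fuse idea in the first contribution can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the toy `detect_blob` detector, the list-of-rows image format, the horizontal-flip augmentation, and the per-coordinate median fusion are all hypothetical choices made to keep the example self-contained, and the median is only a stand-in for the proposed fusion method.

```python
from statistics import median


def detect_blob(image):
    """Hypothetical stand-in detector: one box around all non-zero pixels.

    Returns (x_min, y_min, x_max, y_max) with exclusive max edges, or None.
    """
    cells = [(x, y) for y, row in enumerate(image)
             for x, value in enumerate(row) if value]
    if not cells:
        return None
    xs = [x for x, _ in cells]
    ys = [y for _, y in cells]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def identity(image):
    return image


def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def unflip_box(box, width):
    """Map a box found in the mirrored image back to the original frame."""
    x1, y1, x2, y2 = box
    return (width - x2, y1, width - x1, y2)


def detect_with_augmentation(image, detector):
    """Run the detector on each augmented copy and undo the geometry."""
    width = len(image[0])
    augmentations = [
        (identity, lambda box: box),
        (hflip, lambda box: unflip_box(box, width)),
    ]
    boxes = []
    for augment, restore in augmentations:
        box = detector(augment(image))
        if box is not None:
            boxes.append(restore(box))
    return boxes


def fuse_median(boxes):
    """Placeholder fusion: per-coordinate median of the candidate boxes."""
    return tuple(median(b[k] for b in boxes) for k in range(4))


image = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
boxes = detect_with_augmentation(image, detect_blob)
fused = fuse_median(boxes)
```

Geometric augmentations need an inverse box transform, as with `unflip_box` here; photometric augmentations such as brightness changes would leave box coordinates untouched.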

2. Background

2.1. Fuzzy Measure

- (Normality) $g(\emptyset) = 0$ and $g(X) = 1$,

- (Monotonicity) If $A, B \subseteq X$ and $A \subseteq B$, then $g(A) \leq g(B)$.
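The two properties can be checked mechanically for a measure on a small input set. The sketch below assumes the standard definitions (the measure is 0 on the empty set, 1 on the full set, and never decreases when an input is added). The dictionary-of-frozensets representation and the name `is_fuzzy_measure` are illustrative choices, not taken from the paper.

```python
from itertools import combinations


def all_subsets(inputs):
    """Yield every subset of `inputs` as a frozenset."""
    items = list(inputs)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)


def is_fuzzy_measure(g, inputs, tol=1e-12):
    """Check normality and monotonicity of g, a dict keyed by frozensets."""
    full = frozenset(inputs)
    # Normality (assumed form): g(empty set) = 0 and g(X) = 1.
    if abs(g[frozenset()]) > tol or abs(g[full] - 1.0) > tol:
        return False
    # Monotonicity: adding one input must never lower the measure.
    # Checking single-element extensions is enough, by transitivity.
    for subset in all_subsets(full):
        for extra in full - subset:
            if g[subset | {extra}] < g[subset] - tol:
                return False
    return True


inputs = ("a", "b", "c")
g_ok = {
    frozenset(): 0.0,
    frozenset("a"): 0.2, frozenset("b"): 0.3, frozenset("c"): 0.1,
    frozenset("ab"): 0.5, frozenset("ac"): 0.4, frozenset("bc"): 0.6,
    frozenset("abc"): 1.0,
}
# Same measure, but g({a, b}) is now smaller than g({a}) and g({b}).
g_bad = dict(g_ok)
g_bad[frozenset("ab")] = 0.15
```

Here `is_fuzzy_measure(g_ok, inputs)` holds, while `g_bad` fails the monotonicity check.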

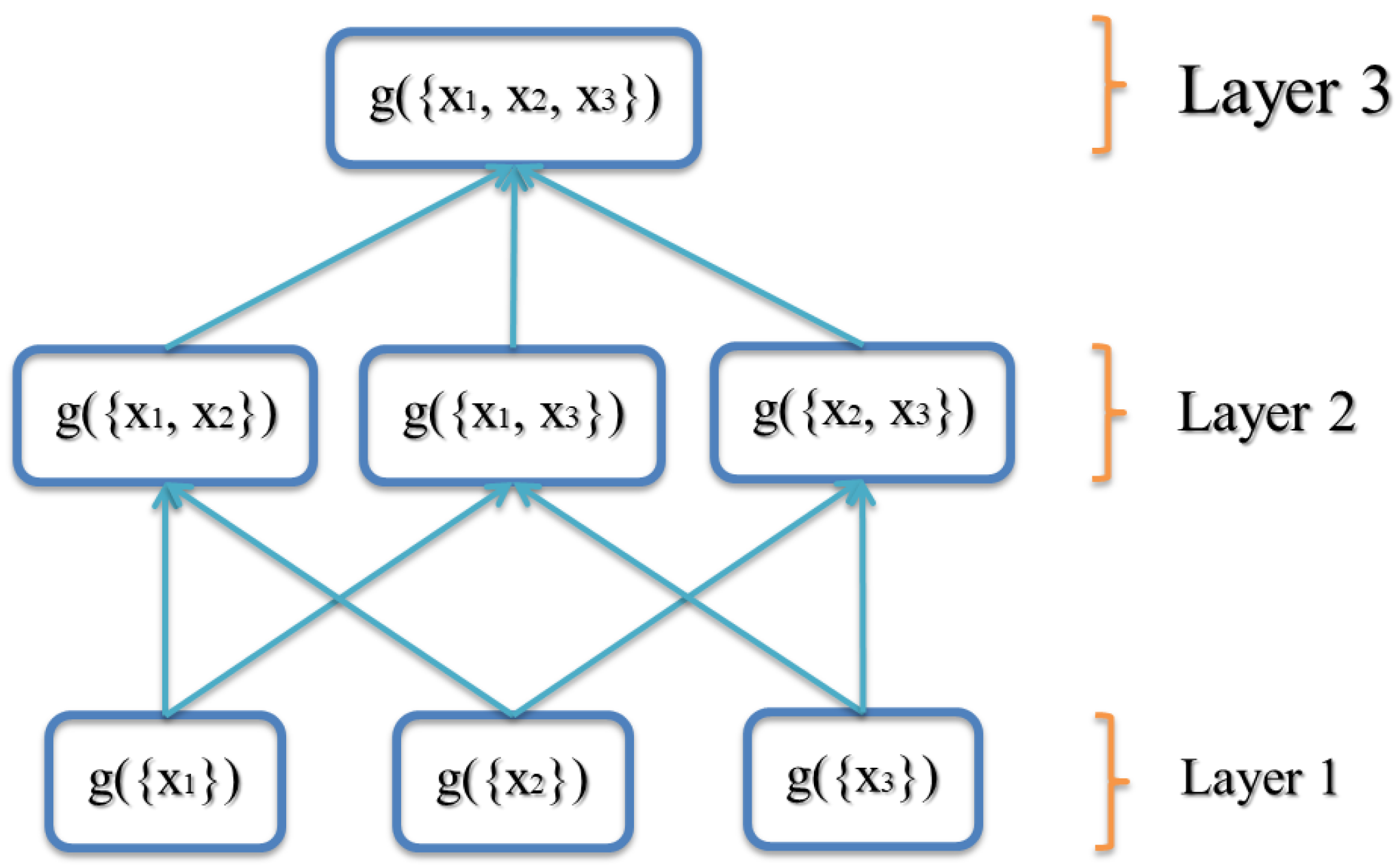

2.2. Choquet Integral

2.3. Interval-Valued Fuzzy Integral

2.4. Fuzzy Measure of Agreement

2.5. Object Detection Using Deep Learning in ADAS

2.6. Image Augmentation

2.7. Model Ensembles

3. Proposed System

3.1. Overview

Algorithm 1. Algorithm for the proposed detection fusion system.

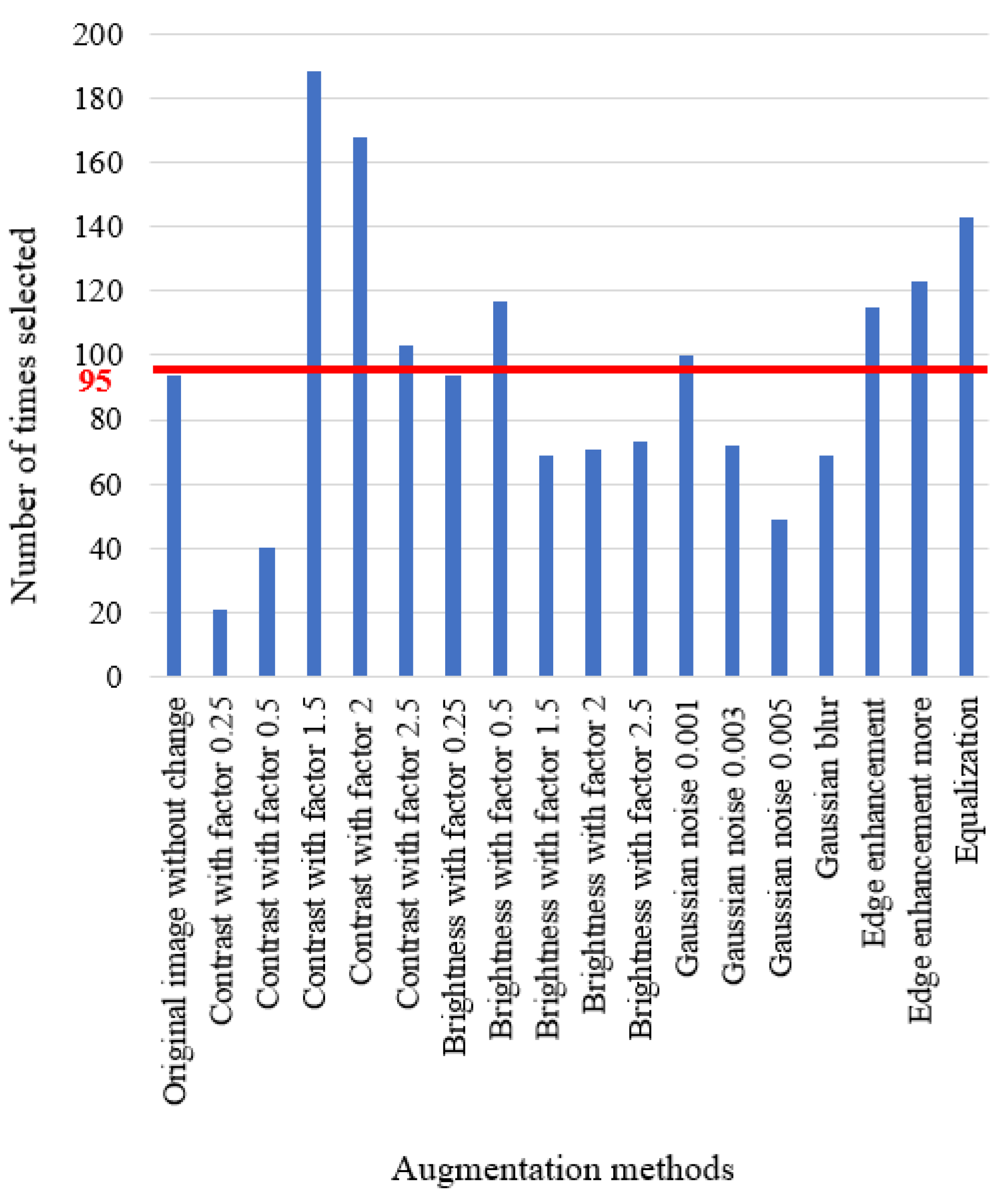

3.2. Augmentation

3.3. Detection

3.4. Fusion

4. Examples and Discussion

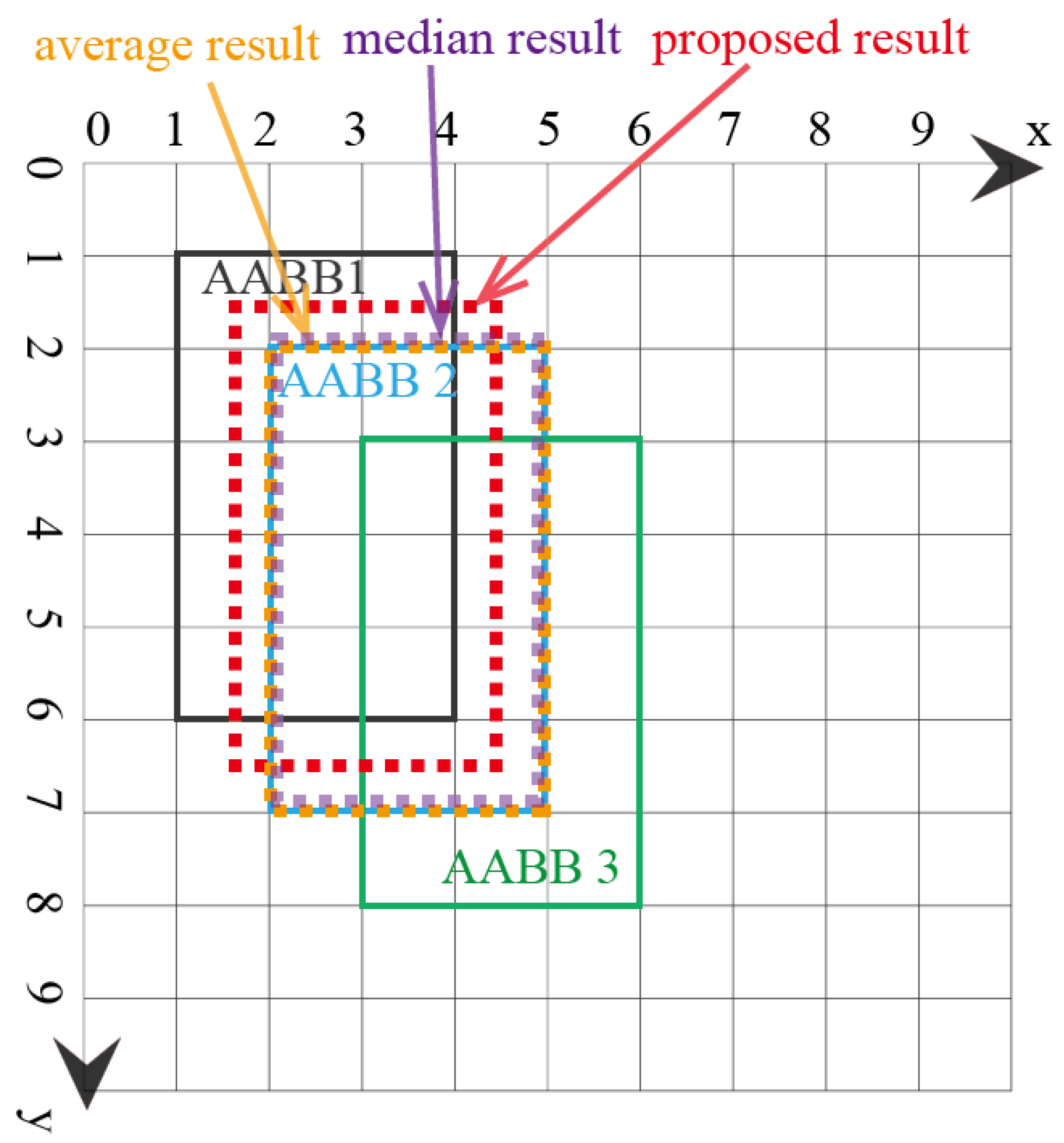

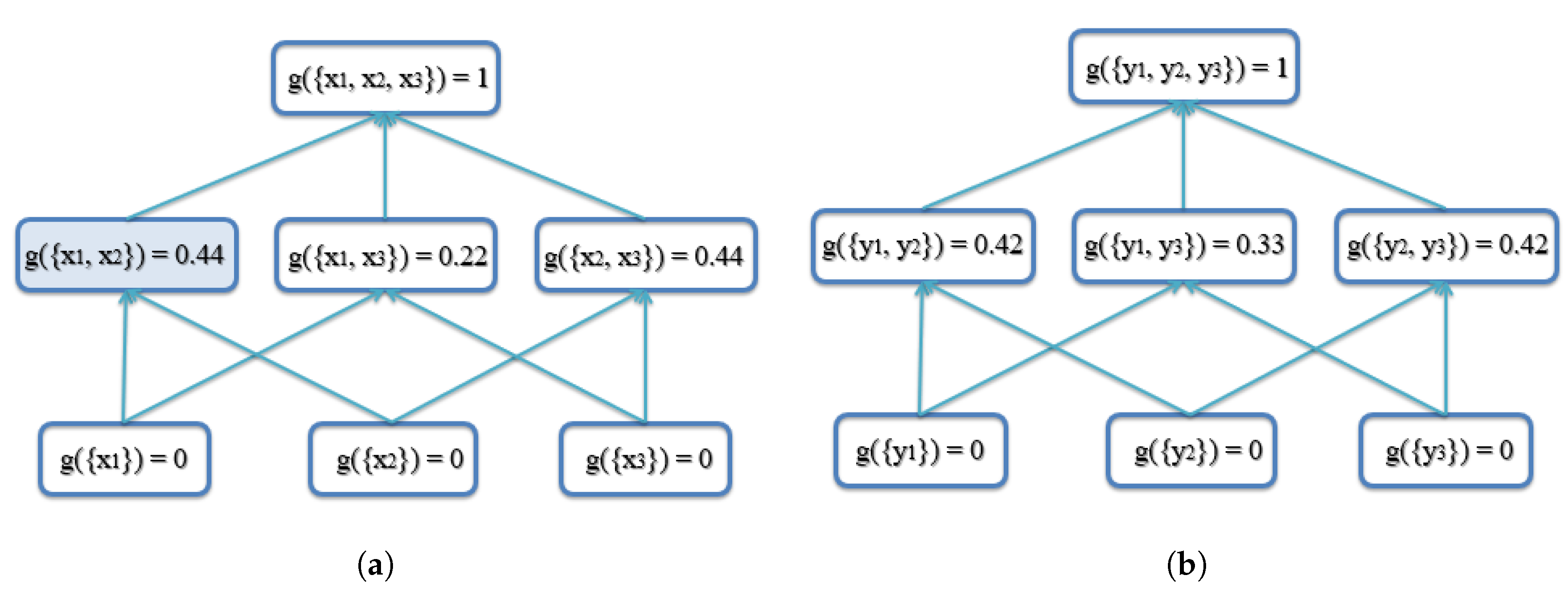

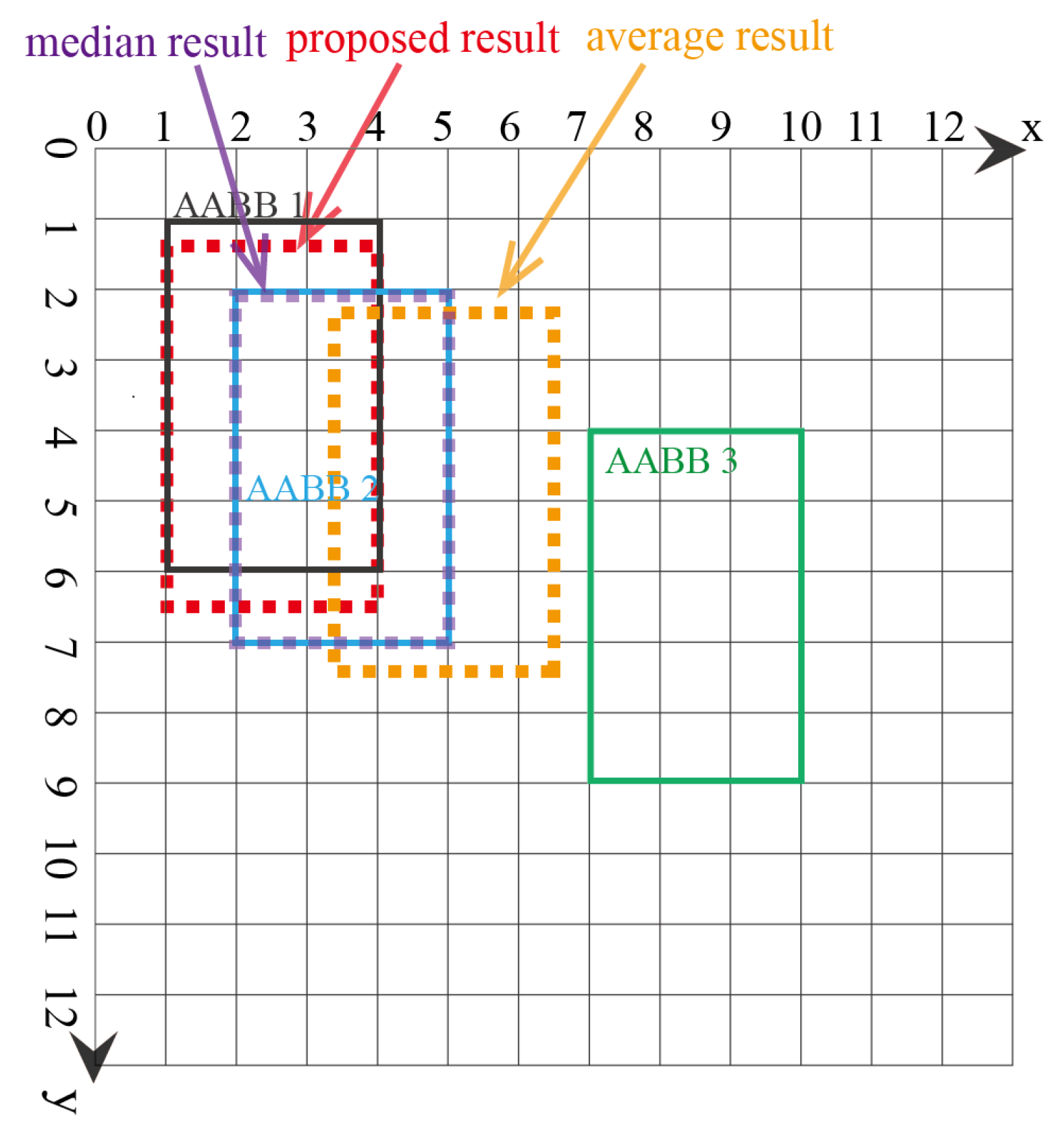

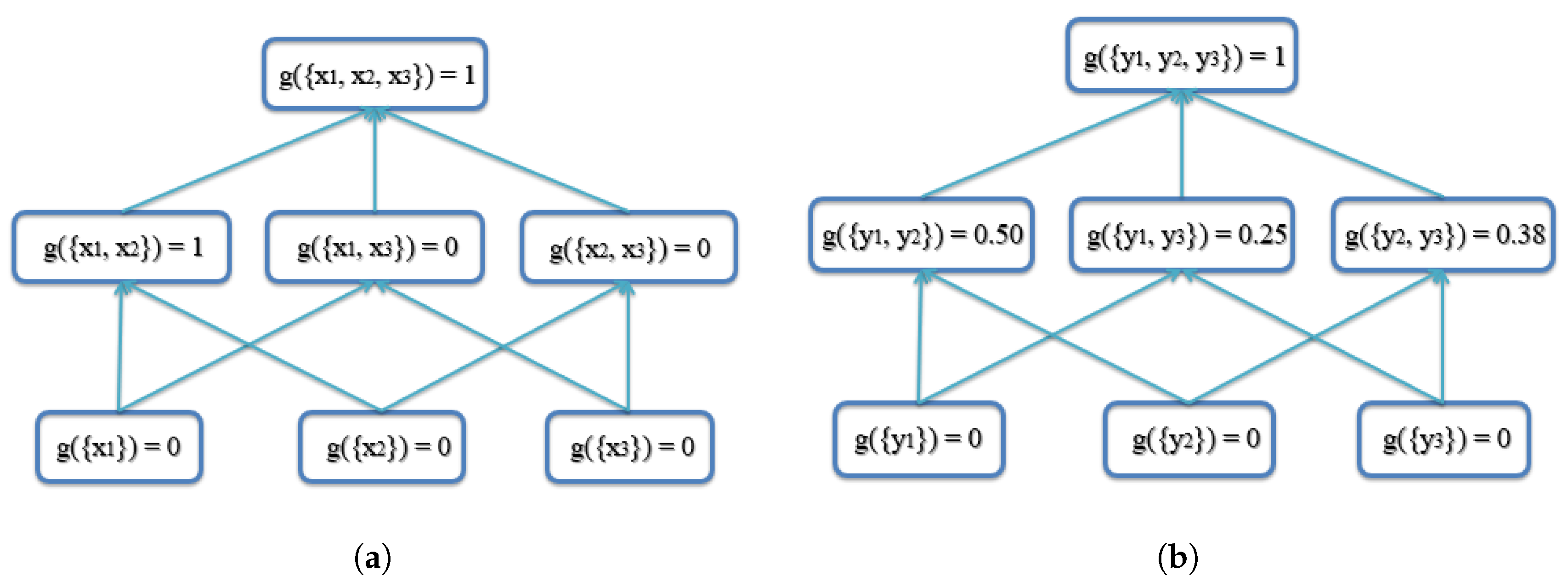

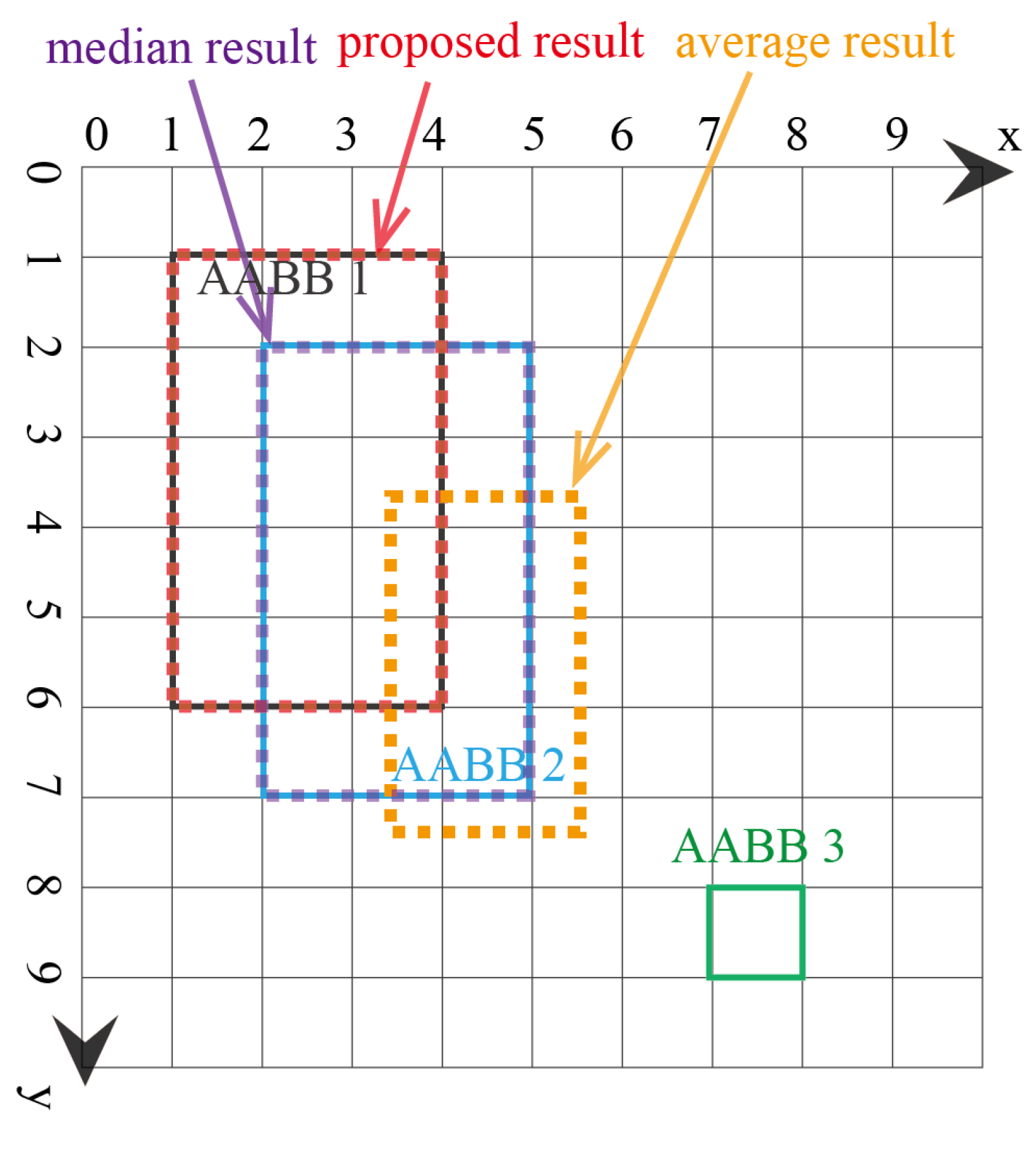

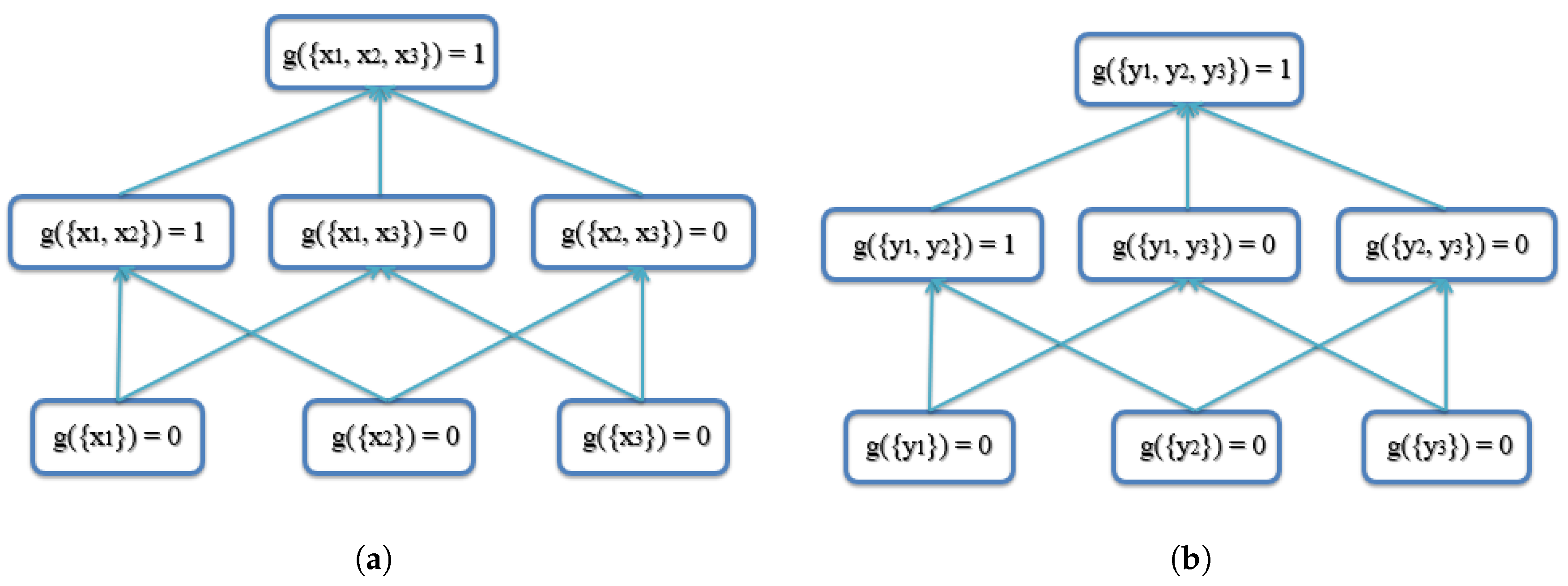

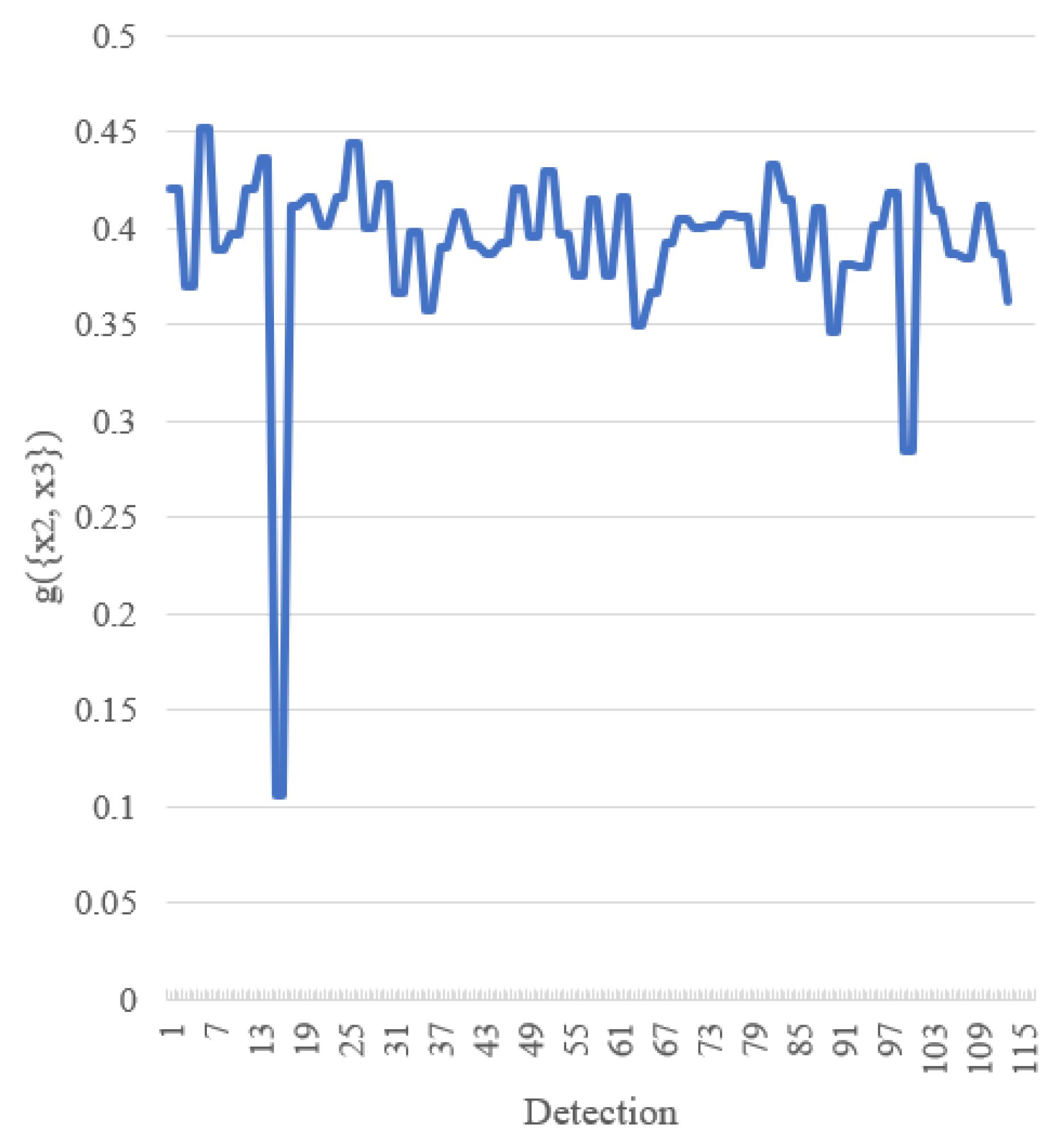

4.1. Synthetic Examples

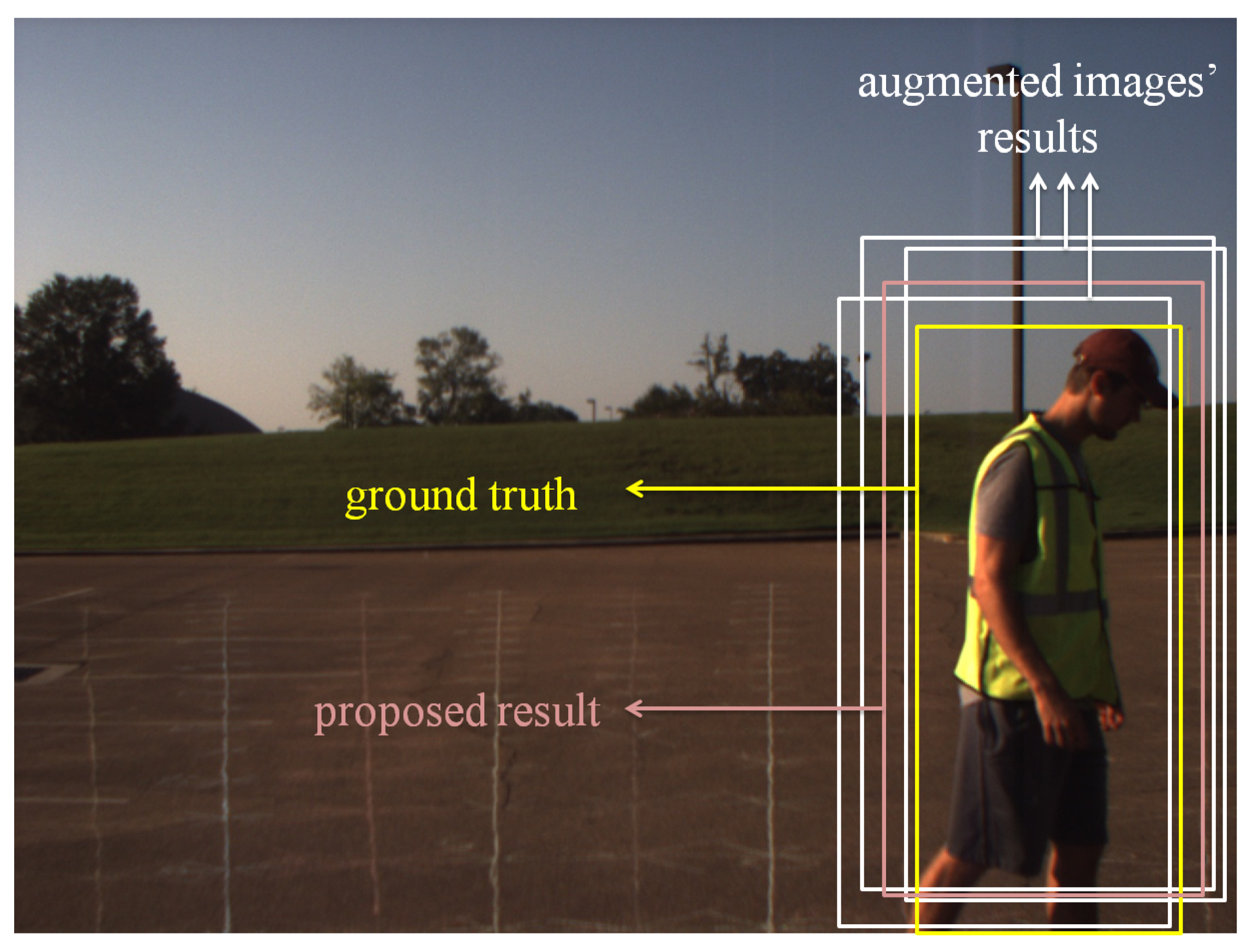

4.2. ADAS Examples

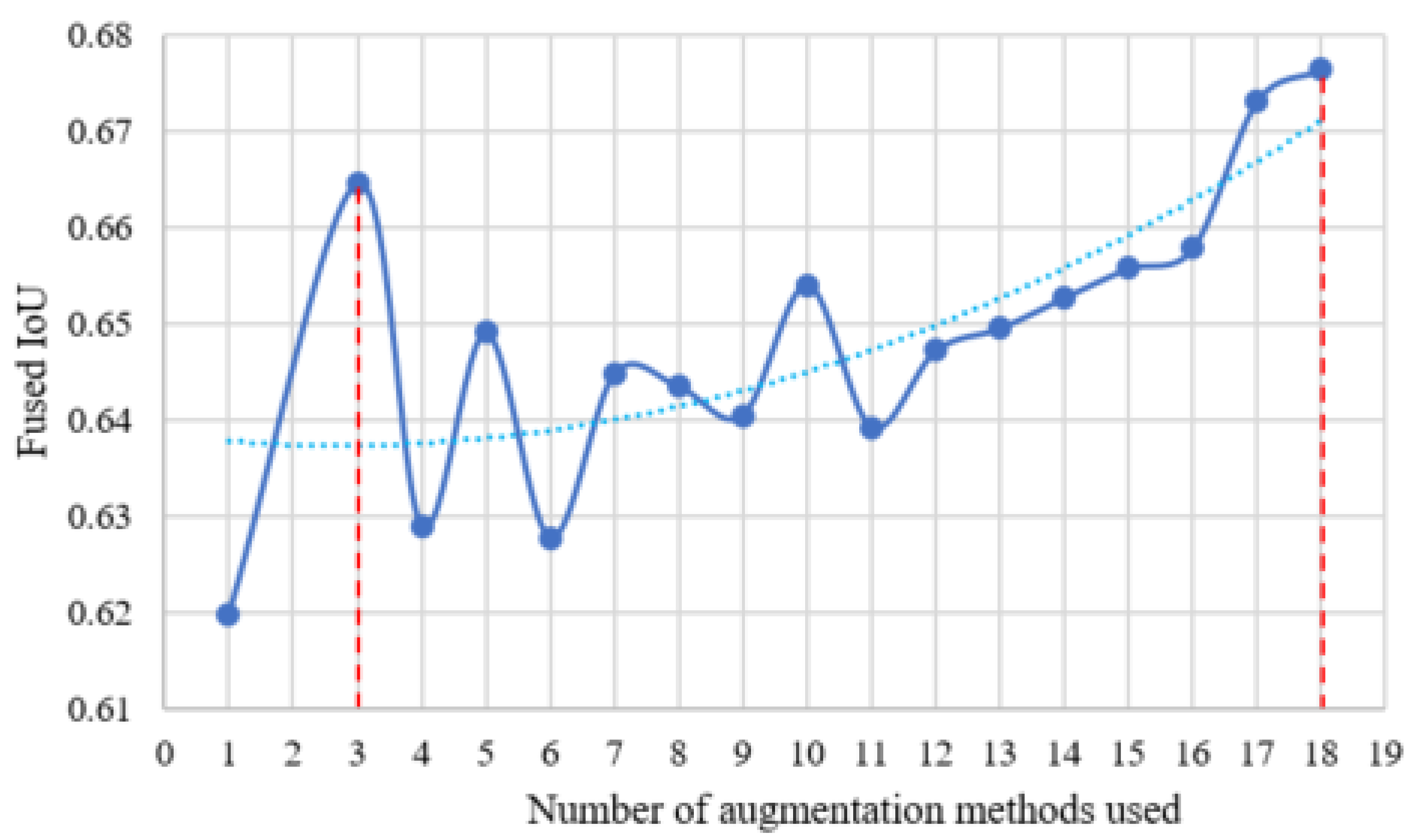

4.2.1. Augmentation Methods

4.2.2. Datasets

4.2.3. Training Parameters

4.2.4. Results

4.3. Discussion

5. Conclusions and Future Work

Author Contributions

Conflicts of Interest


| Variable/Symbol | Description |
|---|---|
| AABB | Axis-Aligned Bounding Box |
| AABBFI | Axis-Aligned Bounding Box Fuzzy Integral |
| ADAS | Advanced Driver Assistance Systems |
| ChI | Choquet Integral |
| FI | Fuzzy Integral |
| FM | Fuzzy Measure |
| FPS | Frames Per Second |
| IoU | Intersection over Union |
| NMS | Non-Maxima Suppression |
| $x_i$ | i-th data/information input |
| $X$ | Finite set of n data/information inputs |
| I | Set of intervals |
| g | Fuzzy measure $g: 2^X \to [0,1]$ |
| | Fuzzy measure on input |
| | Fuzzy measure on inputs |
| h | Real-valued evidence function |
| | Real-valued evidence from input |
| | Interval-valued evidence from input |
| | Left endpoint of |
| | Right endpoint of |
| | Fuzzy integral of h with respect to g |
| | is a permutation of X, such that |
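The notation above (evidence h, fuzzy measure g, and a permutation that sorts the inputs) comes together in the discrete Choquet integral. The sketch below assumes the standard sorted form, in which inputs are visited in order of decreasing evidence and each value is weighted by the resulting gain in g. Applying it independently to the four AABB coordinates is one plausible reading of the AABB extension, and the cardinality-based measures are placeholders rather than the paper's fuzzy measure of agreement; all function names are hypothetical.

```python
from itertools import combinations


def choquet(values, g):
    """Discrete Choquet integral of `values` with respect to `g`.

    `g` maps frozensets of input indices to [0, 1].
    """
    # Permutation that sorts the evidence in decreasing order.
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    total, previous, visited = 0.0, 0.0, set()
    for i in order:
        visited.add(i)
        current = g[frozenset(visited)]
        total += values[i] * (current - previous)
        previous = current
    return total


def cardinality_measure(n, value_by_size):
    """Placeholder measure that depends only on subset size."""
    return {
        frozenset(combo): value_by_size[size]
        for size in range(n + 1)
        for combo in combinations(range(n), size)
    }


def fuse_aabbs(boxes, g):
    """Fuse (x_min, y_min, x_max, y_max) boxes coordinate by coordinate."""
    return tuple(choquet([box[k] for box in boxes], g) for k in range(4))


boxes = [(10, 20, 50, 60), (12, 22, 52, 62), (30, 40, 90, 100)]

# g(A) = |A| / n reduces the Choquet integral to the arithmetic mean.
g_mean = cardinality_measure(3, [0.0, 1 / 3, 2 / 3, 1.0])
# This measure puts all weight on the second-largest value (the median of 3).
g_median = cardinality_measure(3, [0.0, 0.0, 1.0, 1.0])

fused_mean = fuse_aabbs(boxes, g_mean)
fused_median = fuse_aabbs(boxes, g_median)
```

With these two placeholder measures the integral behaves like the Average and Median fusion baselines; a measure that is not purely cardinality-based can weight specific groups of detectors differently.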

| Fusion Method | Baseline (No Fusion) | NMS (With Fusion) | Average (With Fusion) | Median (With Fusion) | Proposed (With Fusion) |
|---|---|---|---|---|---|
| YOLO Cone | | | | | |
| Average IoU | 0.6199 | 0.6676 | 0.6676 | 0.6717 | 0.6763 |
| Detection | 260/287 | 287/287 | 287/287 | 287/287 | 287/287 |
| YOLO Box | | | | | |
| Average IoU | 0.6722 | 0.6756 | 0.6925 | 0.6872 | 0.7031 |
| Detection | 200/200 | 200/200 | 200/200 | 200/200 | 200/200 |
| YOLO Pedestrian | | | | | |
| Average IoU | 0.7727 | 0.7023 | 0.7995 | 0.7966 | 0.8141 |
| Detection | 208/208 | 208/208 | 208/208 | 208/208 | 208/208 |
| Faster R-CNN Pedestrian | | | | | |
| Average IoU | 0.7402 | 0.7377 | 0.7660 | 0.7581 | 0.7499 |
| Detection | 206/208 | 206/208 | 206/208 | 206/208 | 206/208 |
| YOLO VOC 2007 All | | | | | |
| Average IoU | 0.6106 | 0.6654 | 0.6678 | 0.6728 | 0.6746 |
| mAP | 71.10% | 72.36% | 72.82% | 72.41% | 72.87% |
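The Average IoU rows rely on intersection over union between a predicted box and its ground-truth box. A minimal sketch of that metric, assuming boxes are given as (x_min, y_min, x_max, y_max) tuples; the function names and the sample boxes are illustrative and not taken from the paper's evaluation code:

```python
def box_area(box):
    """Area of an axis-aligned box; degenerate boxes count as zero."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


def average_iou(predictions, ground_truths):
    """Mean IoU over matched (prediction, ground truth) pairs."""
    scores = [iou(p, t) for p, t in zip(predictions, ground_truths)]
    return sum(scores) / len(scores) if scores else 0.0


overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))      # intersection 1, union 7
disjoint = iou((0, 0, 1, 1), (2, 2, 3, 3))     # no overlap
mean_score = average_iou(
    [(0, 0, 2, 2), (0, 0, 1, 1)],
    [(0, 0, 2, 2), (2, 2, 3, 3)],
)
```

A perfect match scores 1 and disjoint boxes score 0, so the average over a test set summarizes localization quality for the detections that were matched.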

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wei, P.; Ball, J.E.; Anderson, D.T. Fusion of an Ensemble of Augmented Image Detectors for Robust Object Detection. Sensors 2018, 18, 894. https://doi.org/10.3390/s18030894