Blind UAV Images Deblurring Based on Discriminative Networks

Abstract

1. Introduction

2. Background

3. Proposed Method

3.1. The Process of Obtaining Image Prior

3.1.1. The Structure of Discriminative Networks

3.1.2. The Loss Function

3.2. Deblurring the UAV Images

3.2.1. Estimating the Latent Image

3.2.2. Estimating Blur Kernel

| Algorithm 1: Blur kernel estimation |
|---|
| Input: Blur image |
| Output: Blur kernel f |
| Initialize f with results from the coarser level. |
| While iteration i ≤ 5 do |
| Solve for f using Equation (14) |
| end while |
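
The control flow of Algorithm 1 can be sketched as follows. This is a minimal illustration, not the authors' implementation: Equation (14) is not reproduced in this text, so `ridge_kernel_step` is a hypothetical stand-in (a ridge-regularised least-squares update solved with the FFT), and the helper names, the nearest-neighbour upsampling, and the non-negativity and sum-to-one projection are all assumptions.

```python
import numpy as np


def normalize_kernel(kernel):
    """Project onto non-negative kernels that sum to one (assumed constraint)."""
    kernel = np.clip(kernel, 0.0, None)
    total = kernel.sum()
    if total <= 0.0:
        # Degenerate case: fall back to a uniform kernel.
        return np.full(kernel.shape, 1.0 / kernel.size)
    return kernel / total


def upsample_kernel(coarse, size):
    """Nearest-neighbour resize of a square coarser-level kernel to size x size."""
    idx = np.arange(size) * coarse.shape[0] // size
    return normalize_kernel(coarse[np.ix_(idx, idx)])


def ridge_kernel_step(latent, blurred, size, gamma=1e-6):
    """Hypothetical stand-in for Equation (14), NOT the paper's formula.

    Minimises ||latent (*) f - blurred||^2 + gamma * ||f||^2 under circular
    convolution, then keeps the top-left size x size support.
    """
    X = np.fft.fft2(latent)
    Y = np.fft.fft2(blurred)
    F = np.conj(X) * Y / (np.abs(X) ** 2 + gamma)
    return np.real(np.fft.ifft2(F))[:size, :size]


def estimate_kernel(blurred, coarse_kernel, size, solve_step, max_iters=5):
    """Algorithm 1: initialise from the coarser level, then iterate the solver."""
    f = upsample_kernel(coarse_kernel, size)
    for _ in range(max_iters):  # "while iteration i <= 5"
        f = normalize_kernel(solve_step(blurred, f))
    return f
```

`solve_step` is passed in as a callable so that the actual update from Equation (14) can replace the stand-in without touching the loop. The stand-in ignores the previous kernel, so repeated iterations return the same result; in a full pipeline the latent image would presumably be re-estimated between kernel updates (Section 3.2.1), which is what makes the five iterations meaningful.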

4. Experiments

4.1. Training Details of Discriminative Networks

4.2. Comparison and Qualitative Evaluation

4.2.1. Comparison of the Synthetic Blurred UAV Image Results

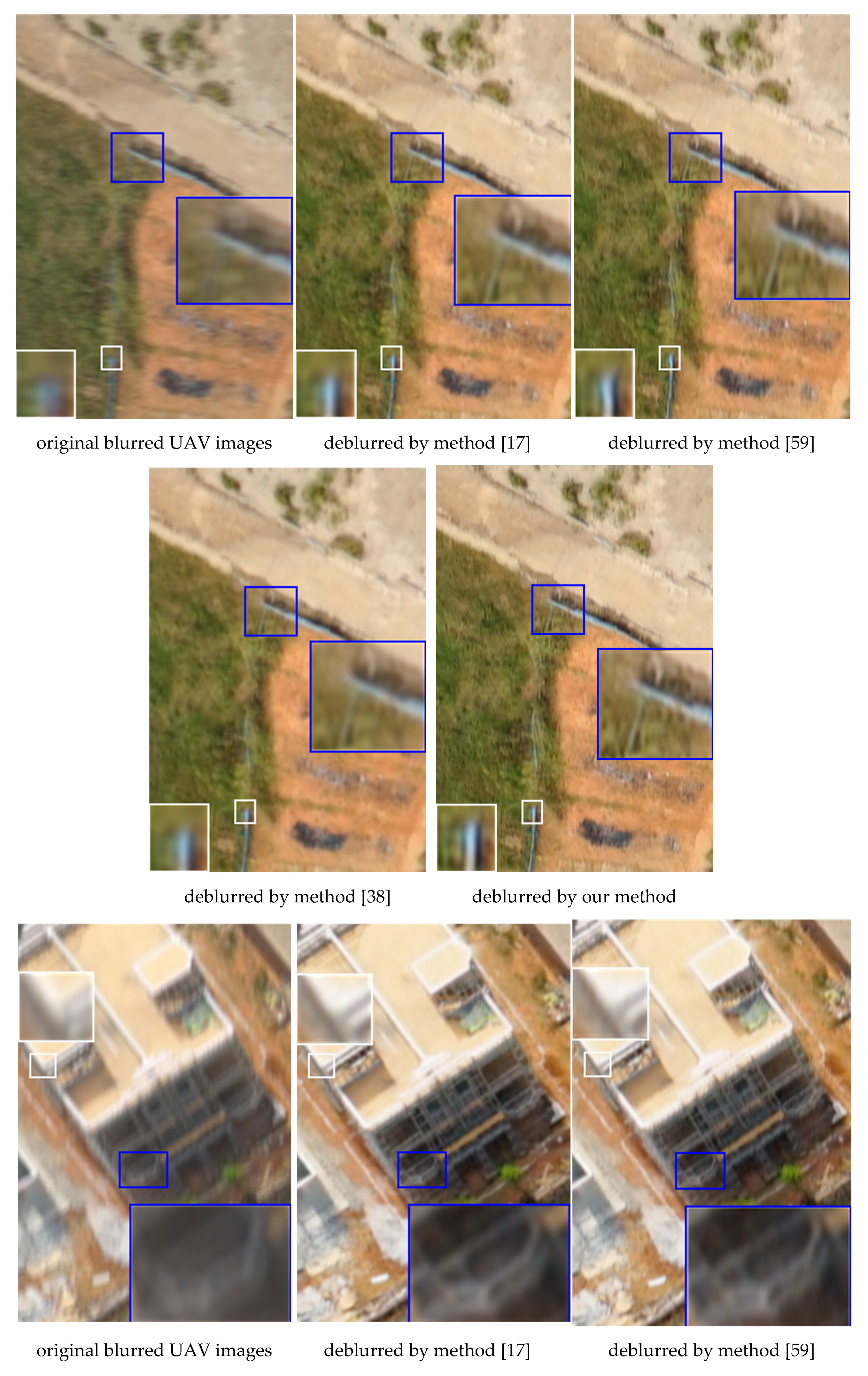

4.2.2. Comparison of the Real Blurred UAV Image

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Li, S.; Zhu, G.; Ge, P. Remote Sensing Image Deblurring Based on Grid Computation. Int. J. Min. Sci. Technol. 2006, 16, 409–412.

- Papa, J.; Mascarenhas, N.; Fonseca, L.; Bensebaa, K. Convex restriction sets for CBERS-2 satellite image restoration. Int. J. Remote Sens. 2008, 29, 443–458.

- Zhao, X.; Wang, F.; Huang, T.; Ng, M.; Plemmons, R. Deblurring and Sparse Unmixing for Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4045–4058.

- Li, Q.; Dong, W.; Xu, Z.; Feng, H.; Chen, Y. Motion Blur Suppression Method Based on Dual Mode Imaging for Remote Sensing Image. Spacecr. Recover. Remote Sens. 2013, 34, 86–92.

- Mastriani, M. Denoising based on Wavelets and Deblurring via Self-Organizing Map for Synthetic Aperture Radar Images. arXiv, 2016; arXiv:1608.00274. Available online: https://arxiv.org/abs/1608.00274 (accessed on 22 June 2018).

- Shen, H.; Du, L.; Zhang, L.; Gong, W. A Blind Restoration Method for Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2012, 9, 1037–1047.

- Berisha, S.; Nagy, J.; Plemmons, R. Deblurring and Sparse Unmixing of Hyperspectral Images Using Multiple Point Spread Functions. SIAM J. Sci. Comput. 2015, 37, 389–406.

- Liao, W.; Goossens, B.; Aelterman, J.; Luong, H.; Pižurica, A. Hyperspectral image deblurring with PCA and total variation. In Proceedings of the 5th Workshop on Hyperspectral Image & Signal Processing: Evolution in Remote Sensing, Gainesville, FL, USA, 26–28 June 2013; pp. 1–4.

- Ma, J.; Dimet, F. Deblurring From Highly Incomplete Measurements for Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2009, 47, 792–802.

- Palsson, F.; Sveinsson, J.; Ulfarsson, M.; Benediktsson, J. MTF-Based Deblurring Using a Wiener Filter for CS and MRA Pansharpening Methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2255–2269.

- Xie, M.; Yan, F. Half-blind remote sensing image restoration with partly unknown degradation. In Proceedings of the Seventh International Conference on Electronics and Information Engineering, Nanjing, China, 17–18 September 2016; p. 1032213.

- Wang, Z.; Geng, G. Investigation on Deblurring of Remote Sensing Images Using Bayesian Principle. In Proceedings of the Fourth International Conference on Image and Graphics (ICIG 2007), Washington, DC, USA, 22–24 August 2007; pp. 160–163.

- Tang, C.; Chen, Y.; Feng, H.; Xu, Z.; Li, Q. Motion Deblurring based on Local Temporal Compressive Sensing for Remote Sensing Image. Opt. Eng. 2016, 55, 093106.

- Chen, Y.; Wu, J.; Xu, Z.; Li, Q.; Feng, H. Image deblurring by motion estimation for remote sensing. In Proceedings of the Satellite Data Compression, Communications, and Processing VI, San Diego, CA, USA, 3–5 August 2010; Volume 7810, p. 78100U.

- He, Y.; Liu, J.; Liang, Y. An Improved Robust Blind Motion Deblurring Algorithm for Remote Sensing Images. In Proceedings of the International Symposium on Optoelectronic Technology and Application Conference, Beijing, China, 9–11 May 2016; p. 101573A.

- Abrahams, A.; Oram, C.; Lozano-Gracia, N. Deblurring DMSP nighttime lights: A new method using Gaussian filters and frequencies of illumination. Remote Sens. Environ. 2018, 210, 242–258.

- Cao, S.; Tan, W.; Xing, K.; He, H.; Jiang, K. Dark channel inspired deblurring method for remote sensing image. J. Appl. Remote Sens. 2018, 12, 015012.

- Dong, W.; Feng, H.; Xu, Z.; Li, Q. A piecewise local regularized Richardson–Lucy algorithm for remote sensing image deconvolution. Opt. Laser Technol. 2011, 43, 926–933.

- Jidesh, P.; Balaji, B. Adaptive non-local level-set model for despeckling and deblurring of synthetic aperture radar imagery. Int. J. Remote Sens. 2018.

- Yu, C.; Tan, H.; Chen, Q. Multi-Scale Image Deblurring Based on Local Region Selection and Image Block Classification. In Proceedings of the 2017 International Conference on Wireless Communications, Networking and Applications WCNA2017, Shenzhen, China, 20–22 October 2017; pp. 256–260.

- Xu, Z.; Ye, P.; Cui, G.; Feng, H.; Li, Q. Image Restoration for Large-motion Blurred Lunar Remote Sensing Image. Soc. Photo-Opt. Instrum. Eng. 2016, 10025, 1025534.

- Raskar, R.; Agrawal, A.; Tumblin, J. Coded Exposure Photography: Motion Deblurring using Fluttered Shutter. ACM Trans. Graph. 2006, 25, 795–804.

- Lelégard, L.; Delaygue, E.; Brédif, M.; Vallet, B. Detecting and Correcting Motion Blur from Images Shot with Channel-Dependent Exposure Time. In Proceedings of the ISPRS Congress 2012, Melbourne, Australia, 25 August–1 September 2012; pp. 341–346.

- Tai, Y.; Du, H.; Brown, M.; Lin, L. Correction of Spatially Varying Image and Video Motion Blur Using a Hybrid Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1012–1028.

- Sun, L.; Cho, S.; Wang, J.; Hays, J. Good Image Priors for Non-blind Deconvolution. In Proceedings of the ECCV 2014, Zurich, Switzerland, 6–12 September 2014; pp. 231–246.

- Levin, A.; Weiss, Y.; Durand, F.; Freeman, W. Efficient marginal likelihood optimization in blind deconvolution. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2657–2664.

- Cho, H.; Wang, J.; Lee, S. Text Image Deblurring Using Text-Specific Properties. In Proceedings of the ECCV 2012, Florence, Italy, 7–13 October 2012; pp. 524–537.

- Cho, S.; Wang, J.; Lee, S. Handling Outliers in Non-Blind Image Deconvolution. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 495–502.

- Zhao, J.; Feng, H.; Xu, Z.; Li, Q. An Improved Image Deconvolution Approach Using Local Constraint. Opt. Laser Technol. 2012, 44, 421–427.

- Oliveira, J.; Figueiredo, M.; Bioucas-Dias, J. Parametric Blur Estimation for Blind Restoration of Natural Images: Linear Motion and Out-of-Focus. IEEE Trans. Image Process. 2014, 23, 466–477.

- Amizic, B.; Spinoulas, L.; Molina, R.; Katsaggelos, A. Compressive blind image deconvolution. IEEE Trans. Image Process. 2013, 22, 3994–4006.

- Cho, S.; Lee, S. Fast Motion Deblurring. ACM Trans. Graph. 2009, 28, 1–8.

- Xu, L.; Jia, J. Two-Phase Kernel Estimation for Robust Motion Deblurring. In Proceedings of the ECCV 2010, Heraklion, Greece, 5–11 September 2010; pp. 157–170.

- Shan, Q.; Jia, J.; Agarwala, A. High-quality Motion Deblurring from a Single Image. ACM Trans. Graph. 2008, 27, 1–10.

- Krishnan, D.; Fergus, R. Fast Image Deconvolution using Hyper-Laplacian Priors. In Proceedings of the 23rd Annual Conference on Neural Information Processing Systems 2009, Vancouver, BC, Canada, 7–10 December 2009; pp. 1033–1041.

- Wang, J.; Lu, K.; Wang, Q.; Jia, J. Kernel Optimization for Blind Motion Deblurring with Image Edge Prior. Math. Probl. Eng. 2012, 2012, 243–253.

- Dong, W.; Feng, H.; Xu, Z.; Li, Q. Blind image deconvolution using the Fields of Experts prior. Opt. Commun. 2012, 285, 5051–5061.

- Michaeli, T.; Irani, M. Blind Deblurring Using Internal Patch Recurrence. In Proceedings of the ECCV 2014, Zurich, Switzerland, 6–12 September 2014; pp. 783–798.

- Pan, J.; Sun, D.; Pfister, H.; Yang, M. Blind Image Deblurring Using Dark Channel Prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636.

- Xu, L.; Zheng, S.; Jia, J. Unnatural L0 Sparse Representation for Natural Image Deblurring. In Proceedings of the CVPR2013, Washington, DC, USA, 23–28 June 2013; pp. 1107–1114.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 60, pp. 1097–1105.

- Kim, J.; Lee, J.; Lee, K. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the CVPR2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the CVPR2014, Washington, DC, USA, 23–28 June 2014; pp. 580–587.

- Mao, X.J.; Shen, C.; Yang, Y.B. Image Restoration Using Very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections. In Proceedings of the 29th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Dong, C.; Deng, Y.; Chen, C.; Tang, X. Compression Artifacts Reduction by a Deep Convolutional Network. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV2015), Santiago, Chile, 7–13 December 2015; pp. 576–584.

- Fiandrotti, A.; Fosson, S.; Ravazzi, C.; Magli, E. GPU-accelerated algorithms for compressed signals recovery with application to astronomical imagery deblurring. Int. J. Remote Sens. 2017, 39, 3994–4006.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv, 2015; arXiv:1511.06434. Available online: https://arxiv.org/abs/1511.06434 (accessed on 22 June 2018).

- Huang, X.; Li, Y.; Poursaeed, O.; Hopcroft, J.; Belongie, S. Stacked Generative Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR2017), Honolulu, HI, USA, 21–26 July 2017; pp. 1866–1875.

- Wu, H.; Zheng, Z.; Zhang, Z.; Huang, K. GP-GAN: Towards Realistic High-Resolution Image Blending. arXiv, 2017; arXiv:1703.07195v1. Available online: https://arxiv.org/abs/1703.07195 (accessed on 22 June 2018).

- Gorijala, M.; Dukkipati, A. Image Generation and Editing with Variational Info Generative Adversarial Networks. arXiv, 2017; arXiv:1701.04568v1. Available online: https://arxiv.org/abs/1701.04568 (accessed on 22 June 2018).

- Mogren, O. C-RNN-GAN: Continuous recurrent neural networks with adversarial training. In Proceedings of the NIPS 2016, Barcelona, Spain, 5–10 December 2016.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML), Lille, France, 2015; pp. 448–456.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the European Conference on Computer Vision (ECCV2016), Amsterdam, The Netherlands, 22–29 October 2016; pp. 630–645.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv, 2014; arXiv:1409.1556. Available online: https://arxiv.org/abs/1409.1556 (accessed on 22 June 2018).

- Pan, J.; Hu, Z.; Su, Z.; Yang, M. Deblurring Text Images via L0-Regularized Intensity and Gradient Prior. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2901–2908.

- Xu, L.; Lu, C.; Xu, Y.; Jia, J. Image smoothing via L0 gradient minimization. ACM Trans. Graph. 2011, 30, 1–12.

- Köhler, R.; Hirsch, M.; Mohler, B.; Schölkopf, B.; Harmeling, S. Recording and playback of camera shake: Benchmarking blind deconvolution with a real-world database. In Proceedings of the 2012 European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 27–40.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- De, K.; Masilamani, V. Image Sharpness Measure for Blurred Images in Frequency Domain. Procedia Eng. 2013, 64, 149–158.

- Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

| Images | Method [17] | Method [59] | Method [38] | Ours |
|---|---|---|---|---|
| a | 0.8776 | 0.8752 | 0.8548 | 0.8824 |
| b | 0.8749 | 0.8709 | 0.8492 | 0.8782 |
| c | 0.8185 | 0.8157 | 0.7832 | 0.8232 |
| d | 0.8298 | 0.8285 | 0.7853 | 0.8342 |
| Average results 1 | 0.8518 | 0.8496 | 0.8181 | 0.8577 |

| Images | Method [17] | Method [59] | Method [38] | Ours |
|---|---|---|---|---|
| a | 0.0581 | 0.0522 | 0.0497 | 0.0624 |
| b | 0.0479 | 0.0385 | 0.0413 | 0.0545 |
| c | 0.0665 | 0.0541 | 0.0592 | 0.0712 |
| d | 0.0526 | 0.0495 | 0.0436 | 0.0597 |

| Images | Method [17] | Method [59] | Method [38] | Ours |
|---|---|---|---|---|
| a | 0.0322 | 0.0276 | 0.0241 | 0.0392 |
| b | 0.0257 | 0.0188 | 0.0239 | 0.0336 |
| c | 0.0349 | 0.0283 | 0.0217 | 0.0424 |
| d | 0.0276 | 0.0214 | 0.0158 | 0.0297 |
| e | 0.0495 | 0.0473 | 0.0394 | 0.0561 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, R.; Ma, G.; Qin, Q.; Shi, Q.; Huang, J. Blind UAV Images Deblurring Based on Discriminative Networks. Sensors 2018, 18, 2874. https://doi.org/10.3390/s18092874
