Human Motion Recognition by Textile Sensors Based on Machine Learning Algorithms

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

2.2. Methods

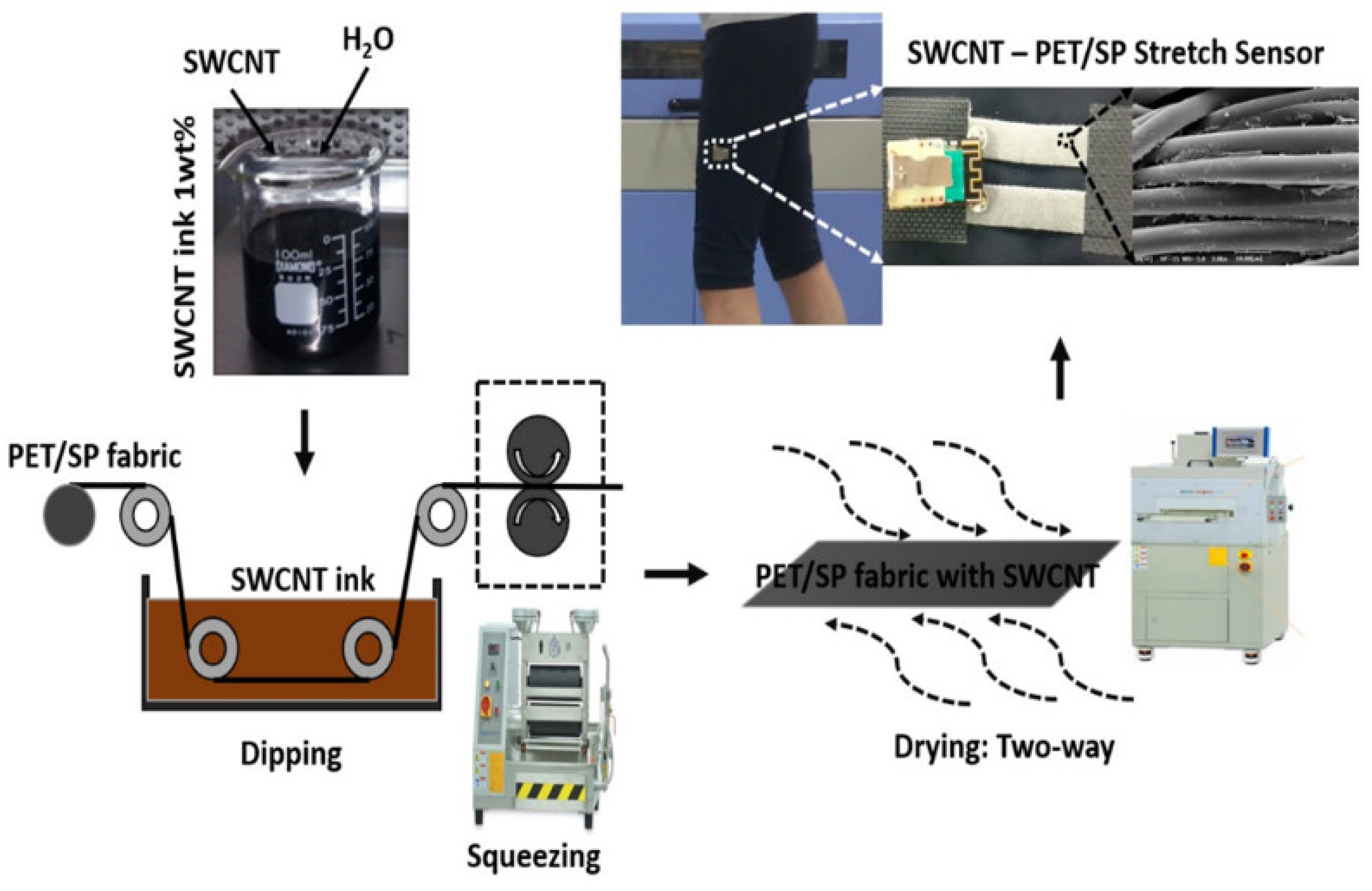

2.2.1. Textile Sensor Fabrication

2.2.2. Human Motion Analysis

- Average amplitude: The average amplitude (AMP) indicates the magnitude of a periodic signal. It is the ratio between the sum of the magnitudes of all instantaneous values and the number of instantaneous values considered. For a real signal such as the one shown in Figure 3, $A_1$, $A_2$, $A_3$, etc. are the magnitudes of the signal at instants 1, 2, 3, etc., respectively. The AMP is calculated as follows:

  $$\mathrm{AMP} = \frac{1}{n}\sum_{i=1}^{n} A_i$$

  where $n$ is the number of instantaneous values considered.

- Standard deviation of the amplitude: The standard deviation (STD) measures the dispersion of the data about its mean. It is calculated as the square root of the variance, which is obtained from the deviation of each data point from the mean. A low STD indicates that the data points tend to lie close to the mean of the data set, while a high STD indicates that they are spread over a wider range of values. Alongside the average amplitude, the STD captures a second aspect of the signal, its spread. In the STD calculation, $A_i$ represents an individual value, $\bar{A}$ represents the mean value, and $n$ represents the total number of values.

- Average cycle: This is the most important parameter for the motion classification method proposed in this research. In general, a cycle is the shortest period over which an action repeats. The average cycle (CYC) includes both the process time, during which the motion is actively performed, and the delay time, spent waiting before the next motion begins. The CYC can be calculated by applying a threshold to the signal, as shown in Figure 3.
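The three features above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `motion_features`, the sampling rate `fs`, the `threshold` argument, and the use of rising threshold crossings to delimit cycles are all assumptions. The STD here uses the sample form, $\sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(A_i-\bar{A})^2}$, via `ddof=1`; pass `ddof=0` for the population form that divides by $n$, since the text does not state which normalisation is intended.

```python
import numpy as np


def motion_features(signal, fs, threshold, ddof=1):
    """Return (AMP, STD, CYC) for a 1-D sensor signal sampled at fs Hz.

    All names and the crossing-based cycle estimate are illustrative assumptions.
    """
    a = np.abs(np.asarray(signal, dtype=float))  # magnitudes A_i
    amp = a.mean()                               # AMP = (1/n) * sum(A_i)
    std = a.std(ddof=ddof)                       # ddof=1 divides by n - 1

    # Rising crossings: a sample below the threshold followed by one at or above it.
    above = a >= threshold
    rising = np.flatnonzero(~above[:-1] & above[1:]) + 1

    # CYC = mean time between consecutive rising crossings, in seconds.
    # With fewer than two crossings no cycle can be measured, so return NaN.
    if rising.size >= 2:
        cyc = np.diff(rising).mean() / fs
    else:
        cyc = float("nan")
    return amp, std, cyc


# Toy signal: alternating 0 and 1, sampled at 4 Hz, so one cycle lasts 0.5 s.
toy = [0, 1, 0, 1, 0, 1, 0, 1]
amp, std, cyc = motion_features(toy, fs=4, threshold=0.5)
print(amp, std, cyc)
```

Counting only rising crossings means each cycle spans both the active part of the motion and the waiting time before the next one, which matches the definition of CYC given above. The threshold value itself has to be chosen per sensor and per signal.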

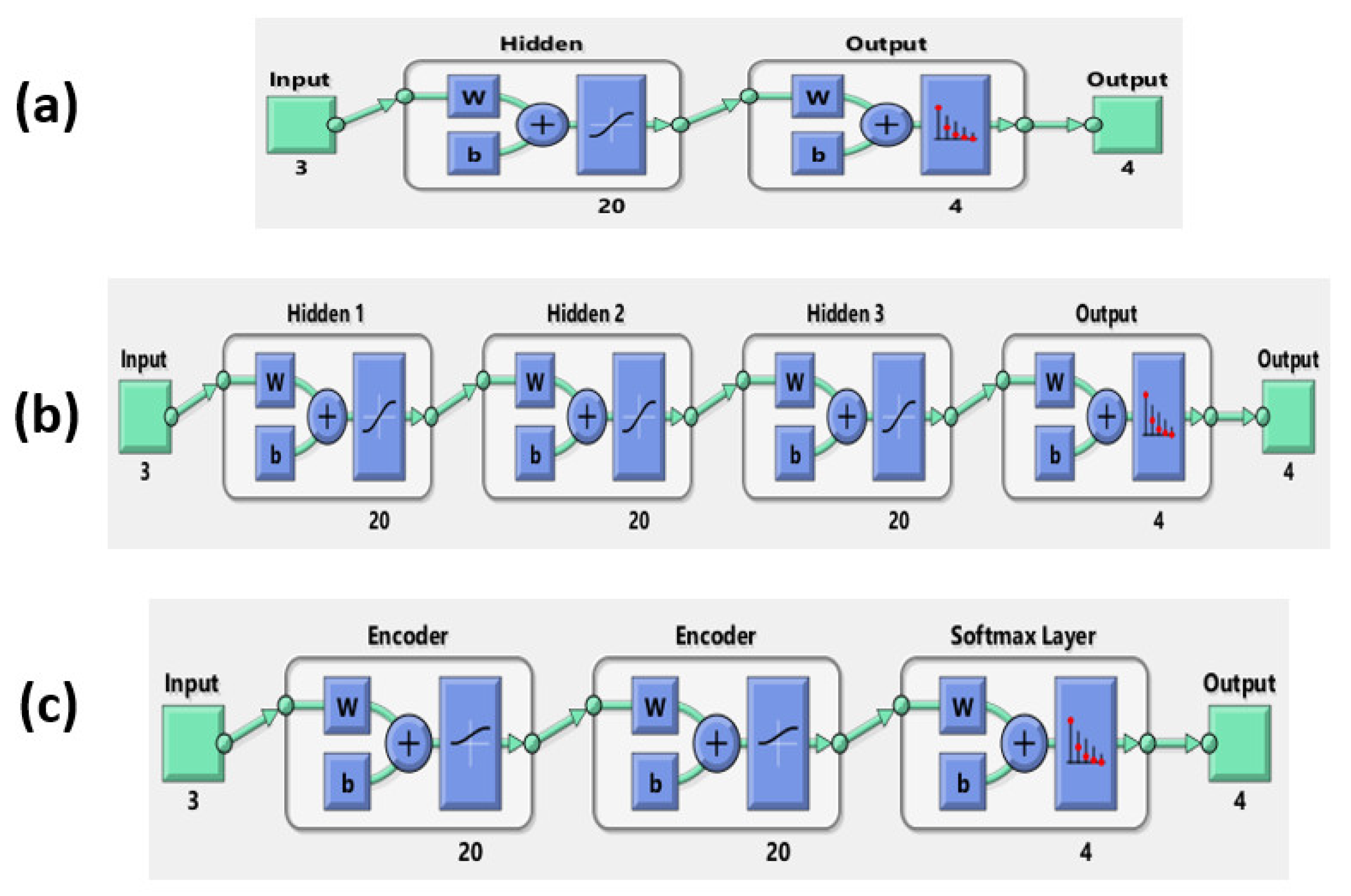

2.2.3. Machine Learning Models

3. Results and Discussion

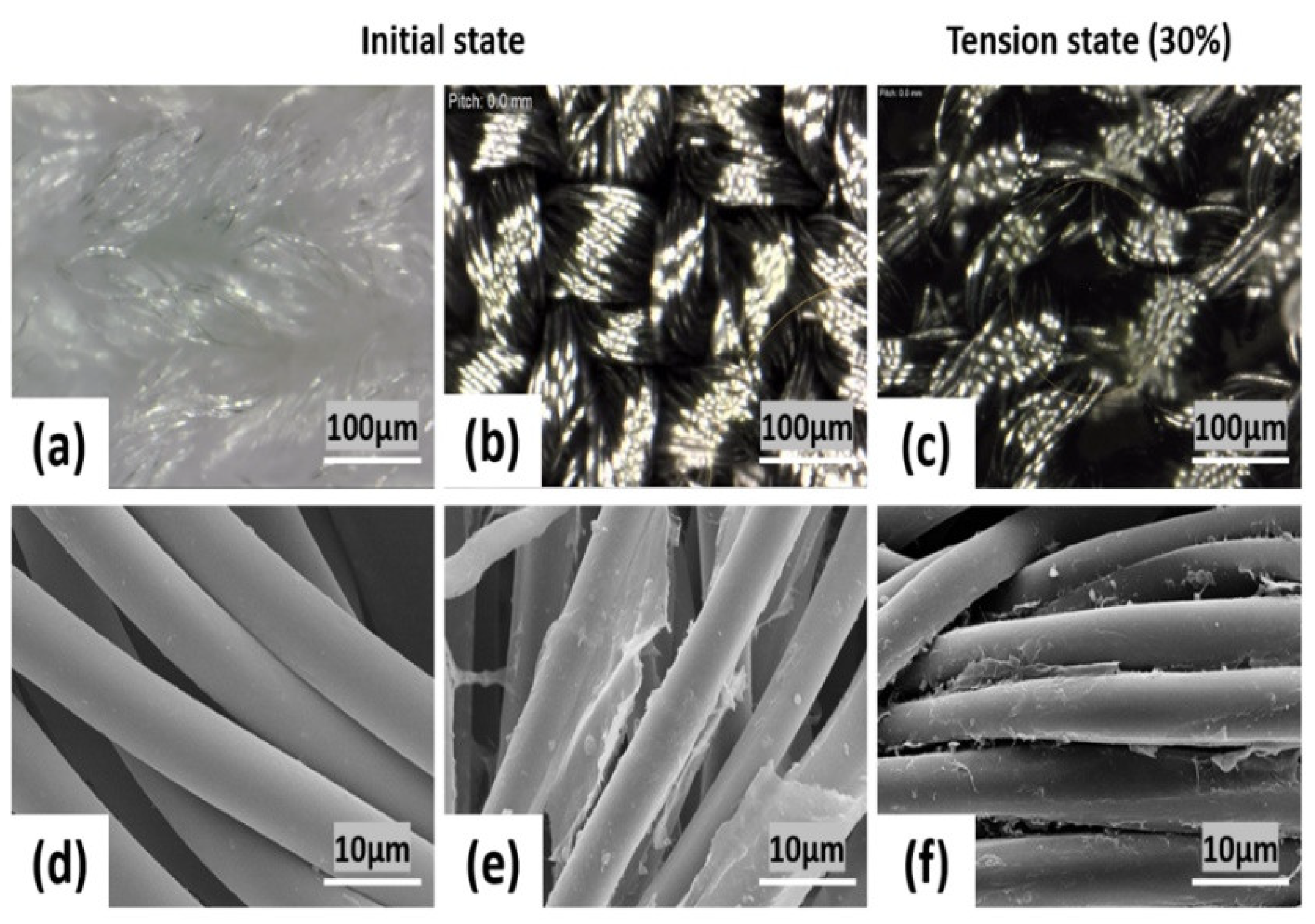

3.1. Structure of the Stretch Textile Sensor

3.2. Stretchability (Yield Point) and Sensitivity (Gauge Factor)

3.3. Current-Voltage (I-V) Curves

3.4. Hysteresis

3.5. Response and Recovery Time

3.6. Durability

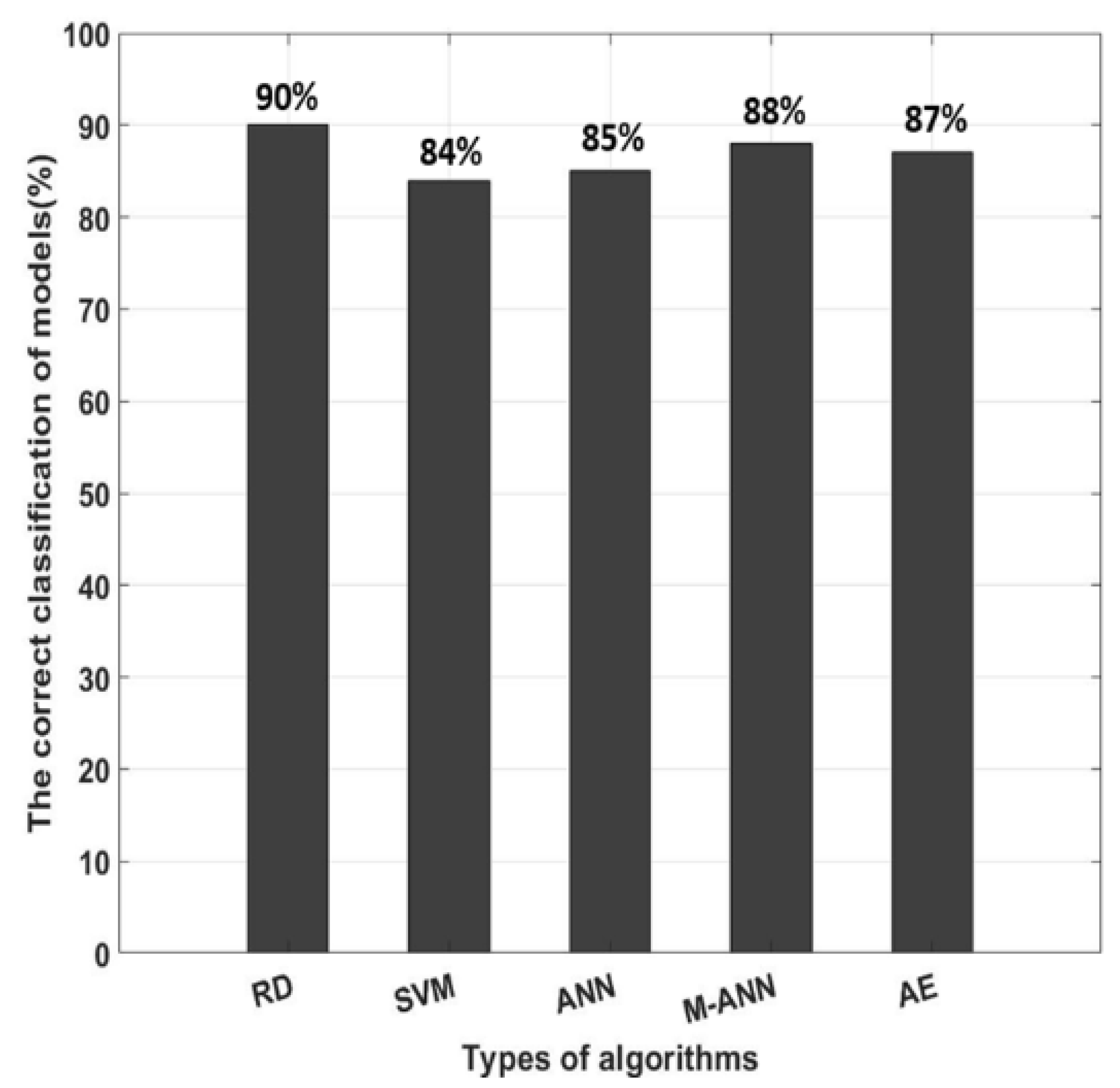

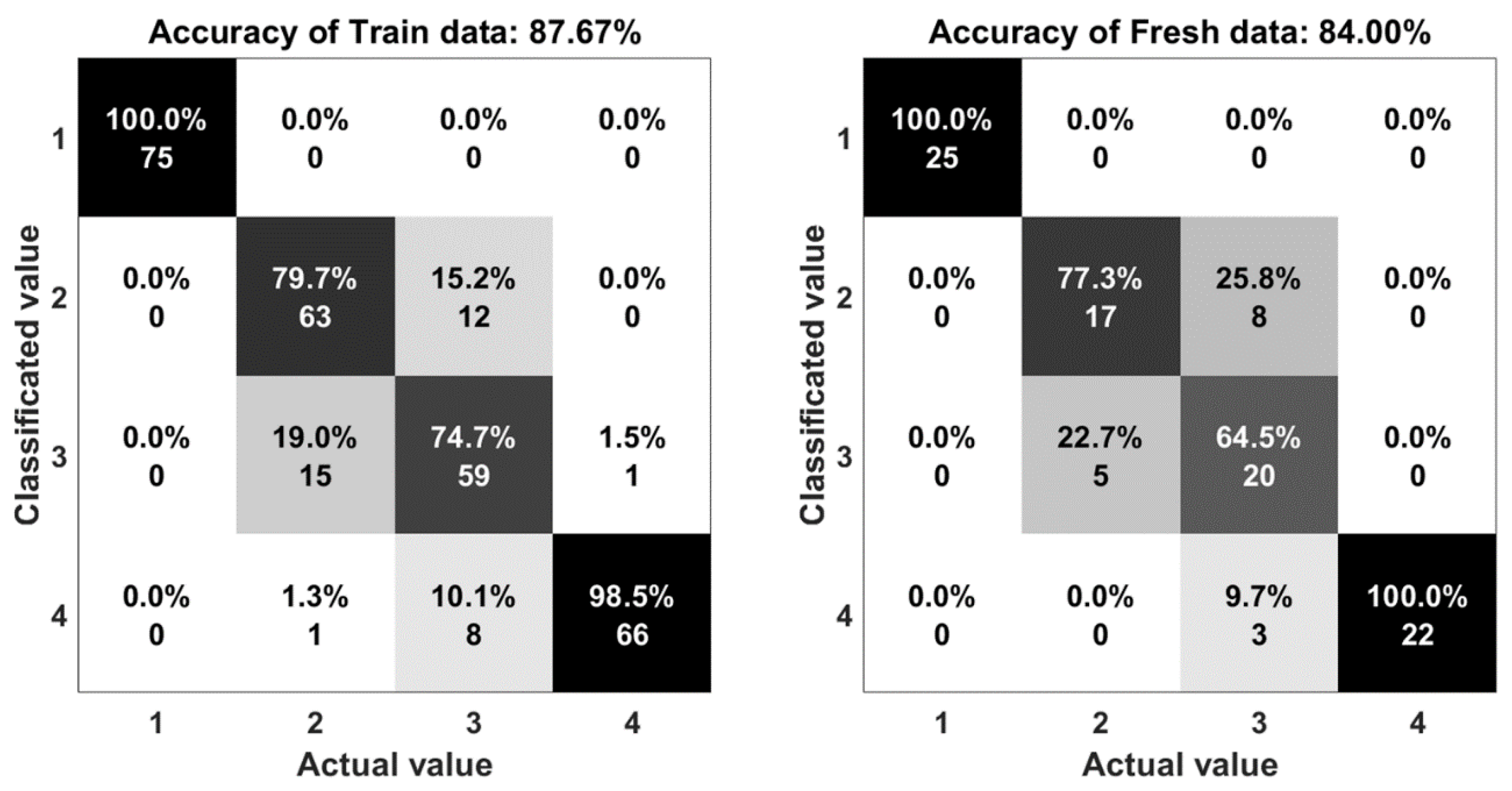

3.7. Human Motion Classification

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Age (years) | Gender | Weight (kg) | Height (m) |
|---|---|---|---|
| 28 | Male | 55 | 1.67 |
| 26 | Male | 62 | 1.70 |
| 32 | Male | 65 | 1.72 |

| Name | Characteristic |
|---|---|
| Response Name | ‘Y’ (Output) |
| Categorical Predictors | [none] |
| Class Names | [‘Walking’ ‘Jumping’ ‘Running’ ‘Sprinting’] |
| Score Transform | ‘none’ |
| Binary Learners | {6 × 1 cell} |
| Coding Name | ‘onevsone’ |
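The six binary learners listed in the table follow from the one-versus-one coding: with four classes, one binary classifier is trained per unordered pair of classes, giving 4 × 3 / 2 = 6. The short Python sketch below only enumerates those pairs to make the count concrete. It is not the code used to build the model, and the variable names are illustrative.

```python
from itertools import combinations

# Class names as listed in the table above.
classes = ["Walking", "Jumping", "Running", "Sprinting"]

# One-versus-one coding: one binary learner per unordered pair of classes.
pairs = list(combinations(classes, 2))

for first, second in pairs:
    print(f"{first} vs {second}")

print(len(pairs))  # 6 = 4 * 3 / 2
```

At prediction time, a one-versus-one scheme combines the outputs of all pairwise learners to choose a single class, so the number of learners grows quadratically with the number of motions to be recognised.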

| Characteristic | Velocity (m/s) | Step Size (m) | Frequency (Hz) |
|---|---|---|---|
| Walking | 1.2 | 0.35 | 1.7 |
| Running | 3.2 | 0.45 | 2.4 |
| Sprinting | 5.0 | 0.7 | 3.0 |
| Jumping | 1.5 | 0.75 | 2.0 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vu, C.C.; Kim, J. Human Motion Recognition by Textile Sensors Based on Machine Learning Algorithms. Sensors 2018, 18, 3109. https://doi.org/10.3390/s18093109
