Performance and Analysis of Feature Tracking Approaches in Laser Speckle Instrumentation

Abstract

1. Introduction

2. Overview of Feature Matching Process

3. Methods

3.1. Feature Detection Methods

3.2. Feature Description and Matching Methods

3.3. Experimental Methods

4. Initial Performance Assessment of Feature Tracking Approaches

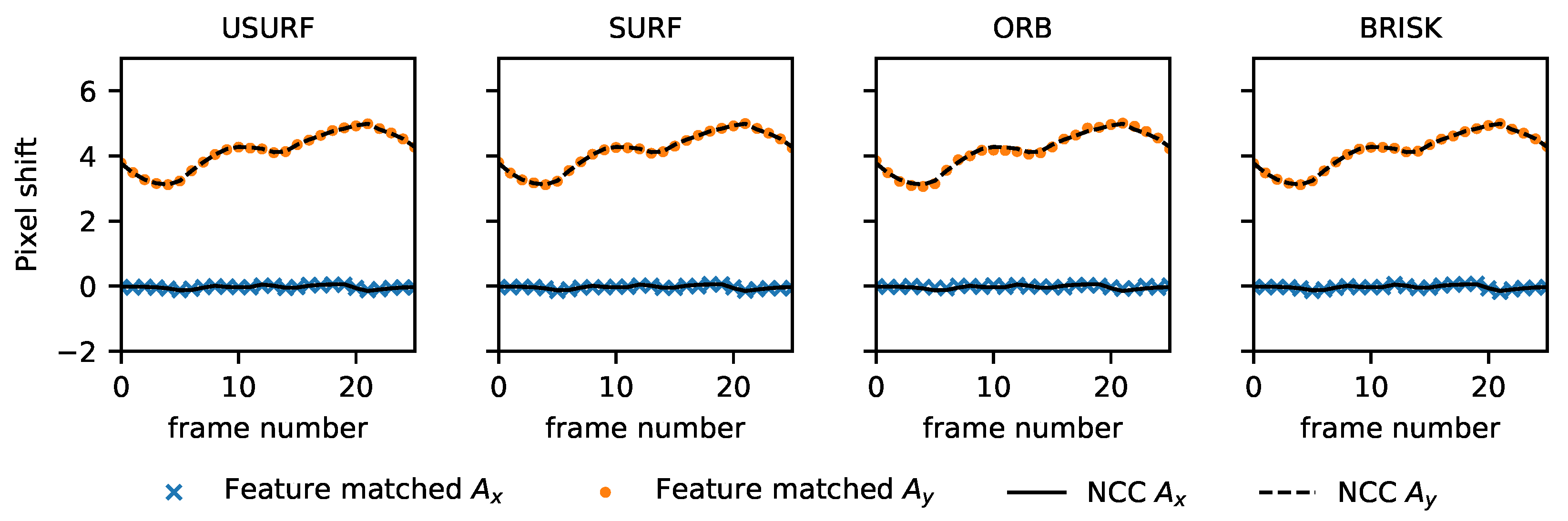

4.1. Translation Performance

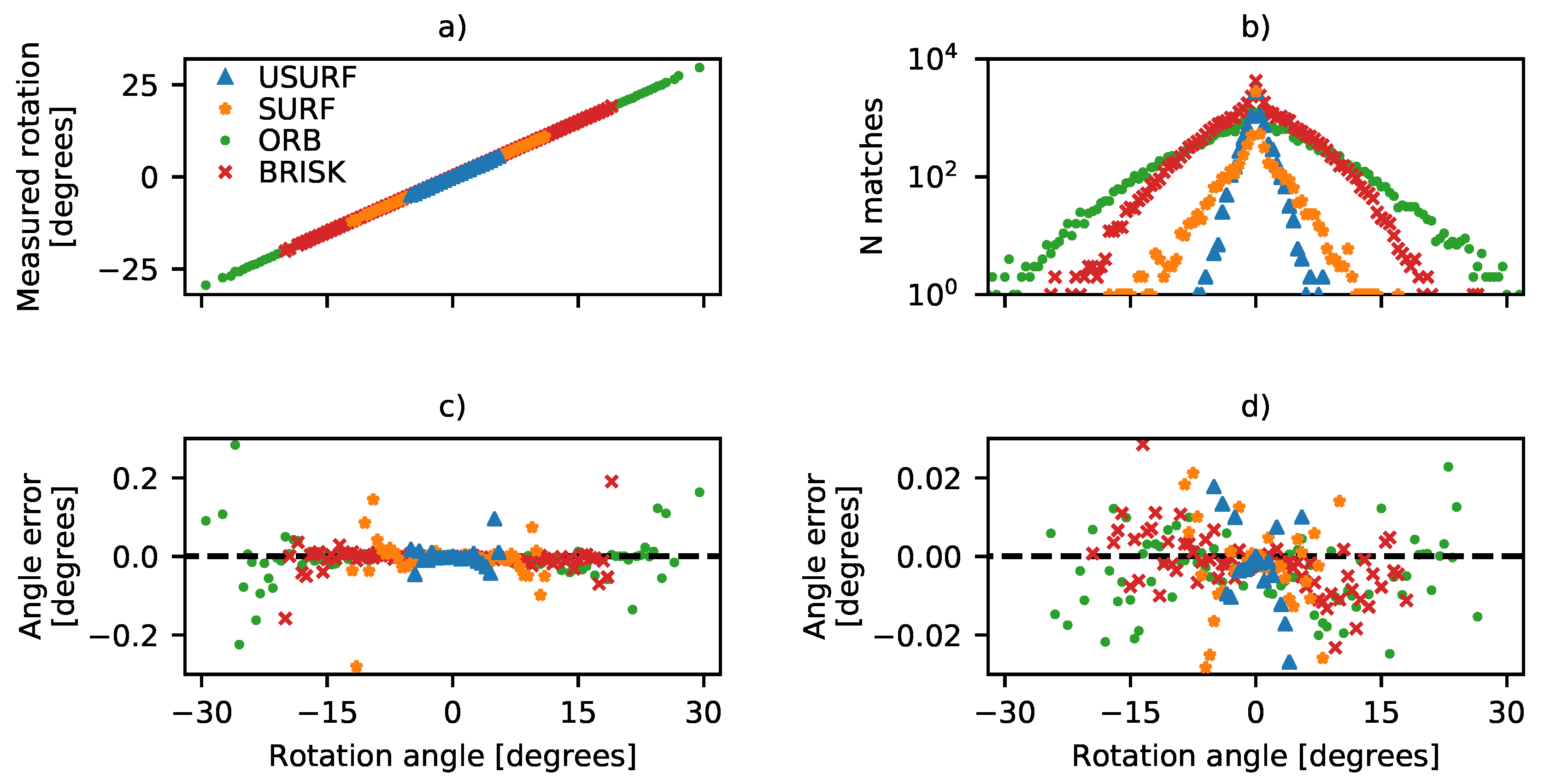

4.2. Rotation Performance

4.3. Processing Time, Measurement Precision, and Image Size

5. Analysis of Individual Feature Tracking Stages

5.1. Analytical Methods

5.2. Feature Detection Speed

5.3. Feature Detection Robustness

5.4. Feature Description Speed

5.5. Feature Matching Robustness

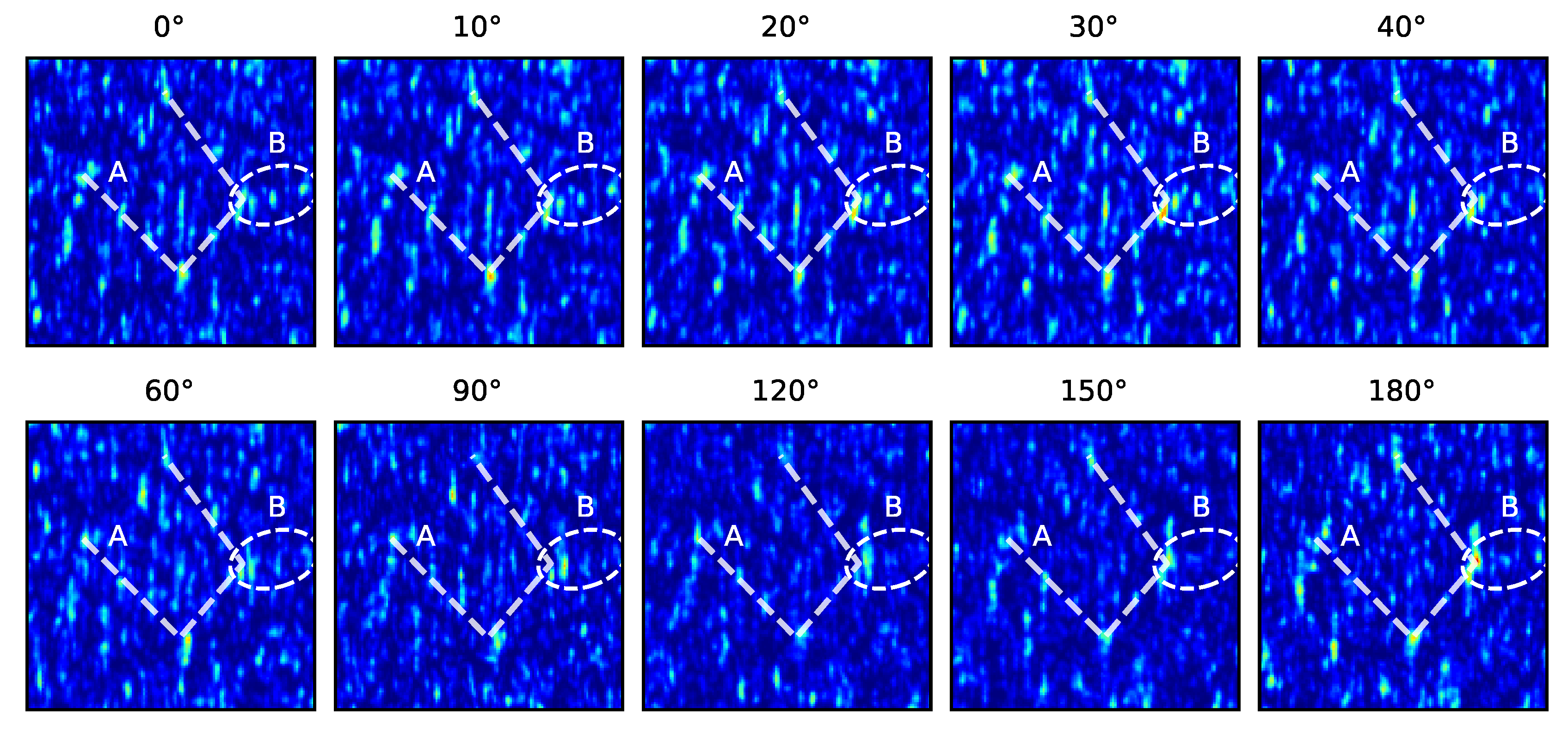

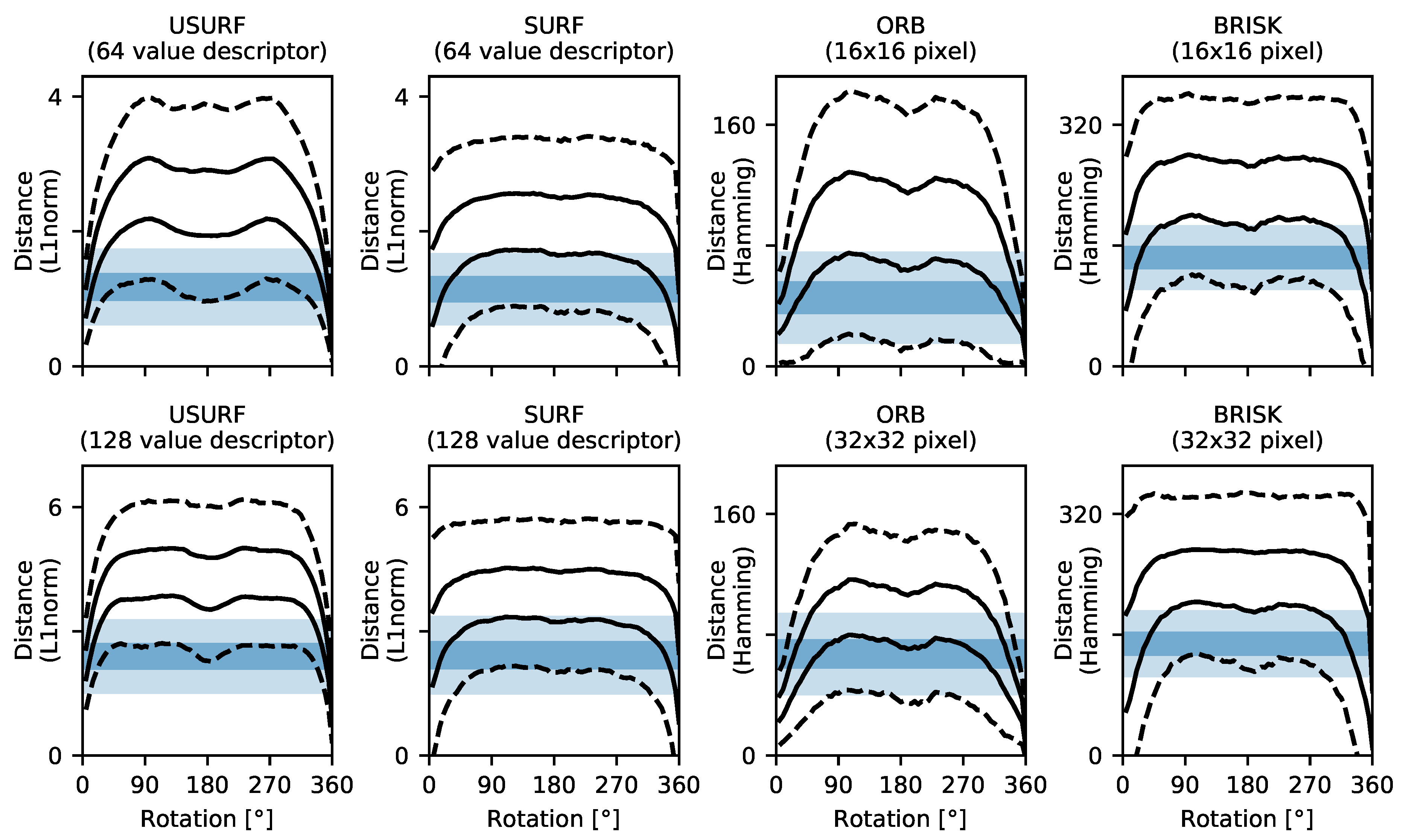

5.5.1. Orientation Robustness

5.5.2. Descriptor Robustness

5.5.3. Descriptor Discrimination

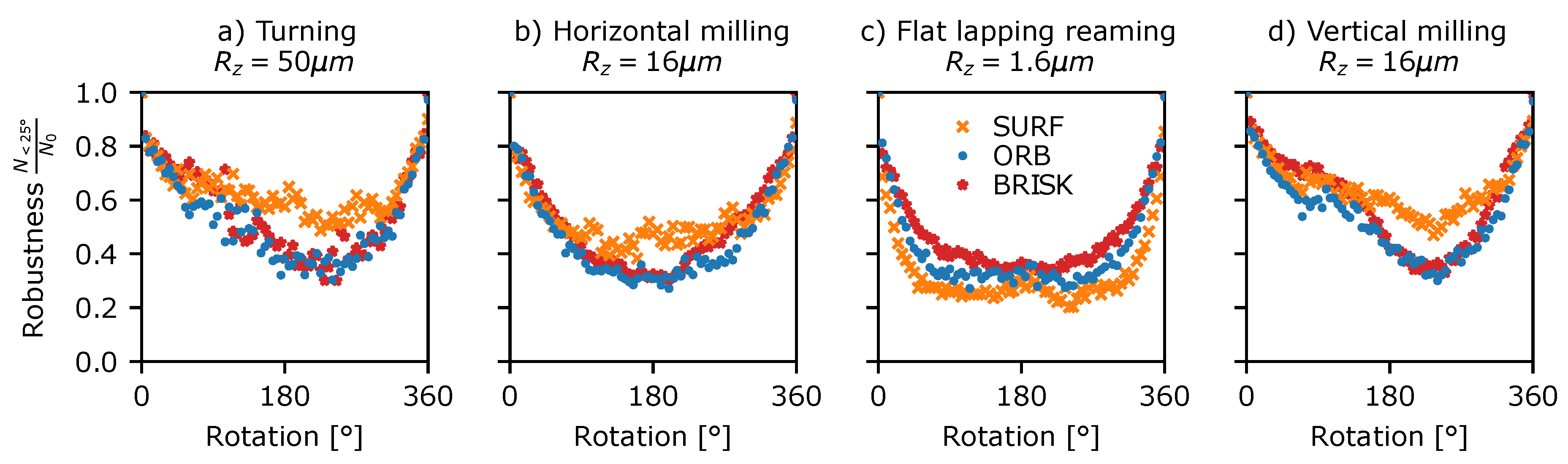

5.5.4. Assessment of Overall Feature Matching Robustness

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Yamaguchi, I. Measurement and Testing by Digital Speckle Correlation. Proc. SPIE 2008, 7129, 71290Z.

- Charrett, T.O.; Tatam, R.P. Objective speckle displacement: An extended theory for the small deformation of shaped objects. Opt. Express 2014, 22, 25466–25480.

- Farsad, M.; Evans, C.; Farahi, F. Robust sub-micrometer displacement measurement using dual wavelength speckle correlation. Opt. Express 2015, 23, 14960.

- Francis, D.; Charrett, T.O.; Waugh, L.; Tatam, R.P. Objective speckle velocimetry for autonomous vehicle odometry. Appl. Opt. 2012, 51, 3478–3490.

- Bandari, Y.K.; Charrett, T.O.; Michel, F.; Ding, J.; Williams, S.W.; Tatam, R.P. Compensation strategies for robotic motion errors for additive manufacturing (AM). In Proceedings of the International Solid Freeform Fabrication Symposium, Austin, TX, USA, 8–10 August 2016.

- Charrett, T.O.; Bandari, Y.K.; Michel, F.; Ding, J.; Williams, S.W.; Tatam, R.P. A non-contact laser speckle sensor for the measurement of robotic tool speed. Robot. Comput.-Integr. Manuf. 2018, 53, 187–196.

- Nagai, I.; Watanabe, K.; Nagatani, K.; Yoshida, K. Noncontact position estimation device with optical sensor and laser sources for mobile robots traversing slippery terrains. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 3422–3427.

- Charrett, T.O.; Kissinger, T.; Tatam, R.P. Workpiece positioning sensor (wPOS): A three-degree-of-freedom relative end-effector positioning sensor for robotic manufacturing. Procedia CIRP 2019, 79, 620–625.

- Shirinzadeh, B.; Teoh, P.; Tian, Y.; Dalvand, M.; Zhong, Y.; Liaw, H. Laser interferometry-based guidance methodology for high precision positioning of mechanisms and robots. Robot. Comput.-Integr. Manuf. 2010, 26, 74–82.

- Saleh, B. Speckle correlation measurement of the velocity of a small rotating rough object. Appl. Opt. 1975, 14, 2344–2346.

- Wang, S.; Lu, M.; Bilgeri, L.M.; Jakobi, M.; Bloise, F.S.; Koch, A.W. Temporal electronic speckle pattern interferometry for real-time in-plane rotation analysis. Opt. Express 2018, 26, 8744.

- Reddy, B.; Chatterji, B. An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans. Image Process. 1996, 5, 1266–1271.

- Kazik, T.; Goktogan, A.H. Visual odometry based on the Fourier-Mellin transform for a rover using a monocular ground-facing camera. In Proceedings of the 2011 IEEE International Conference on Mechatronics, Istanbul, Turkey, 13–15 April 2011; pp. 469–474.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Liao, C.M.; Huang, P.S.; Chiu, C.C.; Hwang, Y.Y. Personal identification by extracting SIFT features from laser speckle patterns. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 1341–1344.

- Yeh, C.H.; Sung, P.Y.; Kuo, C.H.; Yeh, R.N. Robust laser speckle recognition system for authenticity identification. Opt. Express 2012, 20, 24382–24393.

- Charrett, T.O.; Kotowski, K.; Tatam, R.P. Speckle tracking approaches in speckle sensing. Proc. SPIE 2017, 102310L.

- Craig, J.J. Introduction to Robotics: Mechanics and Control; Pearson/Prentice Hall: Upper Saddle River, NJ, USA, 2005; Volume 3.

- Open Source Computer Vision Library. Available online: http://opencv.org/ (accessed on 23 May 2019).

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference 1988, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

- Rosten, E.; Drummond, T. Machine Learning for High Speed Corner Detection. Comput. Vis. 2006, 1, 430–443.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR-94, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Tuytelaars, T.; Mikolajczyk, K. Local Invariant Feature Detectors: A Survey. Comput. Graph. Vis. 2008, 3, 177–280.

- Mair, E.; Hager, G.D.; Burschka, D.; Suppa, M.; Hirzinger, G. Adaptive and Generic Corner Detection Based on the Accelerated Segment Test. In Proceedings of the European Conference on Computer Vision (ECCV’10), Crete, Greece, 5–11 September 2010; pp. 183–196.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary Robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary Robust Independent Elementary Features; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); 6314 LNCS; Springer: Berlin, Germany, 2010; pp. 778–792.

- Raffel, M.; Willert, C.E.; Wereley, S.; Kompenhans, J. Particle Image Velocimetry: A Practical Guide; Experimental Fluid Mechanics; Springer: Berlin/Heidelberg, Germany, 2007.

- Lewis, J.P. Fast template matching. Vis. Interface 1995, 95, 15–19.

| Detector Method | Arguments |
|---|---|
| Harris corners / Good Features to Track (GFTT); implementation: cv2.GFTTDetector | qualityLevel = 0.01; maxCorners = 5000; minDistance = 1; blockSize = 3; useHarris = True (Harris corners) or False (GFTT); Harris_k = 0.04 |
| FAST; implementation: cv2.FastFeatureDetector | threshold = 70% of image mean intensity; nonmaxSuppression = True; type = cv2.FastFeatureDetector_TYPE_9_16 |
| AGAST; implementation: cv2.AgastFeatureDetector | threshold = 70% of image mean intensity; nonmaxSuppression = True; type = cv2.AgastFeatureDetector_OAST_9_16 |
| Difference of Gaussians (DoG) (USURF detector stage); implementation: cv2.xfeatures2d.SURF | hessianThreshold = 20; nOctaves = 1 (no image scaling); nOctaveLayers = 3 (default); upright = True (feature orientation not computed) |
| Orientated FAST (ORB detector stage); implementation: cv2.ORB | fastThreshold = 70% of image mean intensity; edgeThreshold = 32 pixels; nFeatures = 5000 (set high to prevent capping); scoreType = FAST_SCORE |
| Orientated FAST (BRISK detector stage); implementation: cv2.BRISK | thresh = 70% of image mean intensity; octaves = 0 (single scale) |
| Orientated DoG (SURF detector); implementation: cv2.xfeatures2d.SURF | hessianThreshold = 20; nOctaves = 1 (no image scaling); nOctaveLayers = 3 (default); upright = False (feature orientation computed) |

| Matching Method | Arguments |
|---|---|
| USURF. Detection: Difference of Gaussians; description: 4D/8D vector of Haar wavelet sums; implementation: cv2.xfeatures2d.SURF | hessianThreshold = 30–300; nOctaves = 1; nOctaveLayers = 3 (default); extended = False (64-element descriptor) or True (128-element descriptor); upright = True; distance measure = L1-norm; distance threshold = 0.5 |
| SURF. Detection: Difference of Gaussians; description: 4D/8D vector of Haar wavelet sums; implementation: cv2.xfeatures2d.SURF | hessianThreshold = 30–300; nOctaves = 1; nOctaveLayers = 3 (default); extended = False (64-element descriptor) or True (128-element descriptor); upright = False; distance measure = L1-norm; distance threshold = 0.5 |
| ORB. Detection: orientated FAST; description: rotated BRIEF (binary, 256 bit); implementation: cv2.ORB | fastThreshold = 70% of image mean intensity; edgeThreshold = 32 pixels; nFeatures = 5000 (set high to prevent capping); scoreType = FAST_SCORE; nLevels = 1 (single scale); scaleFactor = 1.2 (default); firstLevel = 0 (default); patchSize = 16, 32, 48, 64 (pixels); WTA_K = 2 points; distance measure = Hamming; distance threshold = 30 |
| BRISK. Detection: orientated FAST; description: rotated BRIEF (binary, 512 bit); implementation: cv2.BRISK | thresh = 70% of image mean intensity; octaves = 0 (single scale); patternScale = 0.43, 0.86, 1.29, 1.73 (16, 32, 48 and 64 pixels); distance measure = Hamming; distance threshold = 60 |

| Data Set | Description |
|---|---|
| 1: Continuous translation | A set of 100 speckle patterns, 512 × 512 pixels in size, from a cast aluminium plate during a continuous linear translation in the x-direction at a velocity of ∼5 mm/s. Used to assess translation performance. |
| 2: Uncorrelated speckle patterns | A set of 100 uncorrelated speckle patterns, 512 × 512 pixels in size, from a cast aluminium plate with no translation or rotation applied. Used for simulated translation/rotation tests. |
| 3: Stepped rotation | A set of 512 × 512 pixel speckle patterns from a cast aluminium plate with in-plane rotations between 0 and 360° applied in 0.5° steps. Used to assess rotation performance. |
| 4: Surface finishes: stepped translation | A set of 1280 × 1024 pixel speckle patterns from the different surface preparation samples shown in Figure 1, with a linear translation between 0 and 2.8 mm applied in the x-direction in 50 µm steps. Used to assess detector and descriptor robustness. |
| 5: Surface finishes: stepped rotation | A set of 1280 × 1024 pixel speckle patterns from the different surface preparation samples shown in Figure 1, with in-plane rotations between 0 and 360° applied. Used to assess detector and descriptor robustness. |
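
Data set 2 is used for simulated translation and rotation tests. Where measured patterns are not to hand, a common numerical stand-in for fully developed speckle is the squared modulus of the inverse FFT of a random-phase field limited by a circular pupil. The sketch below is that simulation, not the authors' measured data; `simulate_speckle`, `speckle_contrast`, `translate` and the pupil radius are hypothetical. Because the inverse FFT output is periodic, `np.roll` gives an exact, seam-free integer translation; sub-pixel shifts and rotations would additionally need interpolation.

```python
import numpy as np


def simulate_speckle(n=256, pupil_radius=32, seed=0):
    """Fully developed speckle intensity |IFFT(pupil * random phase)|^2, mean 1.

    A smaller pupil_radius gives larger speckle grains.
    """
    rng = np.random.default_rng(seed)
    fy, fx = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
    # Circular pupil, shifted so zero frequency sits at index [0, 0].
    pupil = np.fft.ifftshift(fx**2 + fy**2 <= pupil_radius**2)
    phase = np.exp(2j * np.pi * rng.random((n, n)))
    intensity = np.abs(np.fft.ifft2(pupil * phase)) ** 2
    return intensity / intensity.mean()


def speckle_contrast(img):
    """std/mean of the intensity; close to 1 for fully developed speckle."""
    return float(img.std() / img.mean())


def translate(img, dx, dy):
    """Exact integer shift (x right, y down); valid because the field is periodic."""
    return np.roll(img, shift=(dy, dx), axis=(0, 1))
```

A contrast near 1 is a quick check that the simulated pattern has the exponential intensity statistics expected of fully developed speckle.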

| Method (Image Size) | Translation (px) | Translation (px) | Translation (°) | Translation (-) | Translation dt (ms) | Rotation (°) | Rotation (-) | Rotation dt (ms) |
|---|---|---|---|---|---|---|---|---|
| USURF (128 × 128 px) | 0.06 | 0.08 | 0.04 | 52 | 3.7 | 0.22 | 22 | 5.0 |
| SURF (128 × 128 px) | 0.08 | 0.09 | 0.05 | 25 | 6.8 | 0.09 | 16 | 7.1 |
| ORB (128 × 128 px) | 0.27 | 0.28 | 0.16 | 28 | 1.0 | 0.37 | 12 | 1.0 |
| BRISK (128 × 128 px) | 0.06 | 0.06 | 0.04 | 81 | 5.4 | 0.22 | 25 | 5.3 |
| USURF (256 × 256 px) | 0.02 | 0.03 | 0.01 | 310 | 25.0 | 0.02 | 104 | 20.5 |
| SURF (256 × 256 px) | 0.03 | 0.04 | 0.01 | 149 | 43.7 | 0.05 | 48 | 36.4 |
| ORB (256 × 256 px) | 0.06 | 0.09 | 0.02 | 267 | 8.1 | 0.08 | 58 | 7.8 |
| BRISK (256 × 256 px) | 0.02 | 0.02 | 0.01 | 504 | 36.9 | 0.06 | 141 | 35.4 |
| USURF (512 × 512 px) | 0.01 | 0.01 | 0.00 | 1361 | 317.1 | 0.03 | 385 | 153.8 |
| SURF (512 × 512 px) | 0.02 | 0.02 | 0.00 | 649 | 445.0 | 0.05 | 145 | 230.3 |
| ORB (512 × 512 px) | 0.04 | 0.06 | 0.00 | 1384 | 191.6 | 0.08 | 255 | 104.1 |
| BRISK (512 × 512 px) | 0.01 | 0.01 | 0.00 | 2333 | 430.0 | 0.03 | 472 | 280.2 |
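
Both translation and rotation are reported for each method, so matched keypoint pairs must be reduced to an in-plane rigid motion. The estimator used by the authors is not described in this section; one standard choice, assumed here, is the least-squares Kabsch (SVD) fit sketched below, with `estimate_rigid_2d` a hypothetical name. In practice such a fit is usually wrapped in outlier rejection such as RANSAC because some feature matches will be wrong; OpenCV's `cv2.estimateAffinePartial2D` offers a RANSAC-based rotation, translation and uniform-scale fit.

```python
import numpy as np


def estimate_rigid_2d(p, q):
    """Least-squares in-plane rotation and translation mapping p onto q.

    p, q: (N, 2) arrays of matched keypoint coordinates (x, y), N >= 2,
    modelled as q ≈ p @ R.T + t. Returns (theta_degrees, t).
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p_mean, q_mean = p.mean(axis=0), q.mean(axis=0)
    # 2x2 cross-covariance of the centred point sets.
    h = (p - p_mean).T @ (q - q_mean)
    u, _, vt = np.linalg.svd(h)
    # Force a proper rotation (det = +1) rather than a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, d]) @ u.T
    t = q_mean - r @ p_mean
    theta = np.degrees(np.arctan2(r[1, 0], r[0, 0]))
    return float(theta), t
```

With image coordinates (y pointing down), a positive angle corresponds to a clockwise rotation on screen.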

| Method | Mean Processing Time, 128 × 128 (ms/frame) | Mean Processing Time, 256 × 256 (ms/frame) | Mean Processing Time, 512 × 512 (ms/frame) | Mean Number of Features, 128 × 128 (-) | Mean Number of Features, 256 × 256 (-) | Mean Number of Features, 512 × 512 (-) |
|---|---|---|---|---|---|---|
| Harris corners | 0.39 | 1.95 | 8.51 | 181 | 547 | 1552 |
| GFTT | 0.55 | 2.63 | 11.44 | 648 | 2537 | 5000 |
| FAST | 0.11 | 0.41 | 1.84 | 274 | 1138 | 4636 |
| AGAST | 0.29 | 1.15 | 5.16 | 294 | 1220 | 4965 |
| Difference of Gaussians (DoG) | 0.68 | 3.14 | 14.24 | 98 | 499 | 2230 |
| Orientated FAST (ORB) | 0.22 | 0.78 | 3.13 | 274 | 1138 | 4558 |
| Orientated FAST (BRISK) | 1.33 | 7.81 | 43.44 | 67 | 681 | 3826 |
| Orientated DoG (SURF) | 1.32 | 6.25 | 26.91 | 98 | 499 | 2230 |
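
Per-frame timings and feature counts of this kind are typically gathered by timing each detector call with a high-resolution monotonic clock and averaging over frames. The sketch below is library-agnostic; `benchmark_detector` is a hypothetical helper, and an OpenCV detector can be passed in as, for example, `lambda f: fast.detect(f, None)`. Absolute times depend on hardware and the OpenCV build, so they will not reproduce the values above exactly.

```python
import time
from statistics import mean
from typing import Callable, Iterable, Sequence, Tuple


def benchmark_detector(detect: Callable[[object], Sequence],
                       frames: Iterable, repeats: int = 3) -> Tuple[float, float]:
    """Return (mean ms per frame, mean number of features) for `detect`."""
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    times_ms, counts = [], []
    for frame in frames:
        for _ in range(repeats):
            start = time.perf_counter()
            features = detect(frame)
            times_ms.append((time.perf_counter() - start) * 1e3)
        counts.append(len(features))  # feature count from the last repeat
    return mean(times_ms), mean(counts)


if __name__ == "__main__":
    # Stand-in "detector" so the harness runs without OpenCV.
    ms, n = benchmark_detector(lambda f: [v for v in f if v > 0.5],
                               [[0.1, 0.7, 0.9], [0.6, 0.2, 0.3]])
    print(f"{ms:.4f} ms/frame, {n} features/frame")
```

Repeating each call and averaging reduces the influence of one-off effects such as cache warm-up on the reported mean.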

| Method | Description Time (ms per 1000 features) |
|---|---|
| USURF (length 64 descriptor) | 14.9 |
| USURF (length 128 descriptor) | 15.7 |
| SURF (length 64 descriptor) | 34.1 |
| SURF (length 128 descriptor) | 34.8 |
| ORB (patchSize = 16) | 1.5 |
| ORB (patchSize = 32) | 1.5 |
| ORB (patchSize = 48) | 1.5 |
| BRISK (patchSize = 16) | 12.2 |
| BRISK (patchSize = 32) | 13.8 |
| BRISK (patchSize = 48) | 15.8 |
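
Because description time is normalised per 1000 features, it has to be scaled by the number of features actually detected before it can be added to the detection time. The worked example below combines the two timing tables for 512 × 512 frames; the function name is hypothetical, and the result covers detection and description only, excluding matching and motion estimation.

```python
def detect_describe_ms(detect_ms: float, describe_ms_per_1000: float,
                       n_features: float) -> float:
    """Per-frame detection + description time in ms (matching excluded)."""
    # Scale the per-1000-feature description cost by the detected feature count.
    return detect_ms + describe_ms_per_1000 * n_features / 1000.0


# 512 x 512 frames, values taken from the detection and description timing tables.
orb_ms = detect_describe_ms(3.13, 1.5, 4558)      # oriented FAST + ORB descriptor
usurf_ms = detect_describe_ms(14.24, 14.9, 2230)  # DoG + USURF (length 64)
print(f"ORB ~{orb_ms:.1f} ms, USURF ~{usurf_ms:.1f} ms")  # ORB ~10.0 ms, USURF ~47.5 ms
```

For USURF the description stage (about 33 ms) outweighs detection (14.24 ms), whereas for ORB the two stages are of similar size.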

| Method | Translation 100 px: %good | Translation 100 px: %bad | Translation 100 px: %failed | Translation 100 px: (-) | Rotation 10°: %good | Rotation 10°: %bad | Rotation 10°: %failed | Rotation 10°: (-) |
|---|---|---|---|---|---|---|---|---|
| USURF¹ | 89 | 2 | 9 | 1.0 | 40 | 23 | 38 | 1.0 |
| SURF | 46 | 21 | 33 | 0.77 | 26 | 30 | 44 | 0.62 |
| ORB | 56 | 13 | 31 | 0.81 | 48 | 18 | 34 | 0.76 |
| BRISK | 68 | 9 | 23 | 0.79 | 49 | 18 | 33 | 0.72 |
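
The robustness results split trials into good, bad and failed outcomes. This section does not state the classification criteria, so the sketch below assumes that a trial fails when no estimate is produced, is good when the estimate lies within a tolerance of the known displacement, and is bad otherwise. The helper `outcome_percentages` and the 1 px default tolerance are hypothetical; a rotation test would compare angles instead of (dx, dy) pairs.

```python
import math
from typing import Optional, Sequence, Tuple

Estimate = Optional[Tuple[float, float]]


def outcome_percentages(estimates: Sequence[Estimate],
                        truth: Tuple[float, float],
                        tol: float = 1.0) -> Tuple[float, float, float]:
    """Return (%good, %bad, %failed) for per-frame-pair (dx, dy) estimates.

    None marks a failed estimate (e.g. too few matches); otherwise an estimate
    is 'good' if it lies within `tol` pixels (hypothetical) of the truth.
    """
    if not estimates:
        raise ValueError("no estimates")
    good = bad = failed = 0
    for est in estimates:
        if est is None:
            failed += 1
        elif math.dist(est, truth) <= tol:
            good += 1
        else:
            bad += 1
    n = len(estimates)
    return tuple(100.0 * k / n for k in (good, bad, failed))
```

For example, `outcome_percentages([(100.0, 0.0), (100.3, 0.1), (50.0, 0.0), None], (100.0, 0.0))` gives `(50.0, 25.0, 25.0)`.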

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Charrett, T.; Tatam, R. Performance and Analysis of Feature Tracking Approaches in Laser Speckle Instrumentation. Sensors 2019, 19, 2389. https://doi.org/10.3390/s19102389