Abstract

Nineteen million Americans have significant vision loss. Over 70% of them are not employed full-time, and more than a quarter live below the poverty line. Globally, there are 36 million blind people, but fewer than half use white canes or more costly commercial sensory substitution devices. The quality of life for visually impaired people is hampered by the resultant lack of independence. To help alleviate these challenges, this study reports on the development of a low-cost, open-source, ultrasound-based navigational support system in the form of a wearable bracelet that allows people with vision loss to navigate, orient themselves in their surroundings, and avoid obstacles when moving. The system can be largely made with digitally distributed manufacturing using low-cost 3-D printing/milling. It conveys point-distance information using a natural active sensing approach and modulates measurements into haptic feedback with various vibration patterns within a four-meter range. It does not require complex calibration or training, consists of a small number of available and inexpensive components, and can be used as an independent addition to traditional tools. Sighted blindfolded participants successfully used the device in nine primary everyday navigation and guidance tasks, including indoor and outdoor navigation and avoiding collisions with other pedestrians.

1. Introduction

According to the World Health Organization (WHO) [1,2], approximately 1.3 billion people live with some form of vision impairment, and 36 million of them are totally blind. The vast majority of the world’s blind population live in developing countries [3]. In addition, this challenge is falling on the elderly at an increasing rate: the group of visually impaired people over 65 years of age is growing by up to 2 million persons per decade, which is faster than the overall population with visual impairments [3]. Even in developed countries like the U.S., this is becoming an increasing problem because of several factors. First, the U.S. is aging: the number of Americans aged 65 and older is projected to more than double from 46 million today to over 98 million by 2060, and their share of the total population will rise from 15% to nearly a quarter [4]. Second, the elderly in the U.S. are increasingly financially vulnerable [5]. According to the American Foundation for the Blind [6] and the National Federation of the Blind [7], more than 19 million American adults between the ages of 18 and 64 report experiencing significant vision loss. Among working-age adults reporting significant vision loss, over 70% are not employed full-time, and 27.7% of non-institutionalized persons aged 21 to 64 years with a visual disability live below the poverty line [7].

Safe navigation and independent mobility are parts of everyday tasks for visually impaired people [8], and can only partially be resolved with the traditional white cane (or its alternatives, such as guide canes or long canes). According to several studies [9,10,11], less than 50% of the blind population use white canes. For those who do use them, they work reasonably well for short distances, as they allow users to detect obstacles from the ground to waist level [12]. Some blind people also use mouth clicks to implement human echolocation [13]. Echoes from mouth click sounds can provide information about surrounding features far beyond the reach of a white cane [14], but, unfortunately, not all visually impaired people are able to use this technique.

Over the past few decades, several approaches have been developed to create sensory augmentation systems to improve the quality of life of people with visual impairments; these are reviewed below. Augmenting or replacing the white cane with a sensory substitution device can greatly enhance the safety and mobility of people with vision loss [15]. In addition, some sensory substitution products that have already been commercialized surpass the abilities of conventional white canes. However, most commercially available sensory substitution products are not accessible to most people in developing countries, or to the poor in developed countries, because of their cost: (i) UltraCane ($807.35) [16], an ultrasonic-based assistive device with haptic feedback and a range of 1.5 to 4 meters; (ii) Miniguide Mobility Aid ($499.00) [17], a handheld device that uses ultrasonic echolocation to detect objects in front of a person in the range of 0.5 to 7 meters; (iii) LS&S 541035 Sunu Band Mobility Guide and Smart Watch ($373.75) [18], which uses sonar technology to provide haptic feedback regarding the user’s surroundings; (iv) BuzzClip Mobility Guide ($249.00) [19], a SONAR-based hinged clip that has three ranges of detection (1, 2, and 3 meters) and provides haptic feedback; (v) iGlasses Ultrasonic Mobility Aid ($99.95) [20], which provides haptic feedback based on ultrasonic sensors with a range of up to 3 meters; (vi) Ray [21], which complements the long white cane by detecting barriers up to 2.5 meters away and announcing them via acoustic signals or vibrations; and (vii) SmartCane ($52.00 for India and $90.00 for other countries) [22,23], which detects obstacles from knee to head height based on sonic waves and modulates the distance to barriers into intuitive vibratory patterns. It is thus clear that a low-cost sensor augmentation or replacement of the white cane with a sensory substitution device is needed.

In the most recent comparative survey of sensory augmentation systems to improve the quality of life of people with visual impairments [24], assistive visual technologies are divided into three categories: (1) vision enhancement, (2) vision substitution, and (3) vision replacement. In addition, Elmannai et al. [24] provided a quantitative evaluation of wearable and portable assistive devices for the visually impaired population. Additional devices developed for white canes are based on ultrasonic distance measurements and haptic [25,26] or audio [27] feedback. Amedi et al. [28] introduced an electronic travel aid with tactile and audio output that uses multiple infrared sensors. Bharambe et al. [29] developed a sensory substitution system with two ultrasonic sensors and three vibration motors in the form of a hand device. Yi et al. [30] developed an ultrasonic-based cane system with haptic feedback and guidance in audio format. Pereira et al. [12] proposed a wearable jacket as a body area network, and Aymaz and Çavdar [31] introduced an assistive headset for obstacle detection based on ultrasonic distance measurements. Agarwal et al. [32] developed ultrasonic smart glasses. De Alwis and Samarawickrama [33] proposed a low-cost smart walking stick that integrates water and ultrasound sensors.

In addition to the devices based on acoustic waves, a number of projects utilize more complex systems based on computer vision [34,35,36,37], machine learning techniques [38], and GPS/GSM technologies [39] to provide information regarding navigation and ambient conditions.

Tudor et al. [40] designed an ultrasound-based system with vibration feedback, which could be considered the closest prototype to the device developed and tested here. The ultrasonic electronic system [40] utilizes two separate ultrasound sensors for the near (2 to 40 cm) and far (40 to 180 cm) distance ranges, respectively. Tudor et al. [40] use a simple linear pulse width modulation dependency for both vibration motors. Most of these projects allow navigation within a distance range of 4 m, but suffer from drawbacks related to cost and complexity, which limit their accessibility to the world’s population of visually impaired poor people. One approach recently gaining acceptance for lowering the costs of hardware-based products is the combination of open source development [41,42,43] with distributed digital manufacturing technologies [44,45]. This is clearly seen in the development of the open source self-replicating rapid prototyper (RepRap) 3-D printer project [46,47,48], which radically reduced the cost of additive manufacturing (AM) machines [49] as well as of products that can be manufactured using them [50,51,52], including scientific tools [42,53,54,55,56,57], consumer goods [58,59,60,61,62,63], and adaptive aids [64]. In general, these economic savings are greater when a higher percentage of the components can be 3-D printed [65,66].

In this study, a low-cost, open-source navigational support system using ultrasonic sensors is developed. It utilizes one ultrasound sensor with one or two vibration motors (depending on the model) in a 3-D printed case to make the system compact and easy to fix on the wrist as a bracelet. The developed Arduino software performs distance measurements in the full sensor range from 2 cm to 4 m according to the ultrasound sensor specifications and implements an algorithm that uses the motors as low- and high-frequency vibration sources. The distance modulation technique consists of splitting the entire four-meter measurement range into four bands, as described in Section 2.4, and of implementing non-linear duty cycle modulation for the most significant middle band of 35 cm to 150 cm. The system, including both the electronics and the mechanical parts, can be largely digitally manufactured with conventional low-cost RepRap-class PCB milling [67] and 3-D printing. The system is quantified for range and accuracy, including the minimal size of a detectable object, to help visually impaired people in distance measurement and obstacle avoidance. According to [24,68], the proposed system partially fulfills the Electronic Travel Aid (ETA) requirements, which consist of providing tactile and/or audible feedback on environmental information that is not available using traditional means such as a white cane or guide dog. Sighted blindfolded participants tested the device in primary everyday navigation and guidance tasks, including: (a) walk along the corridor with an unknown obstacle, (b) bypass several corners indoors, (c) navigate a staircase, (d) wall following, (e) detect the open door, (f) detect an obstacle on the street, (g) bypass an obstacle on the street, (h) avoid collisions with pedestrians, and (i) interact with known objects.

2. Materials and Methods

2.1. Design

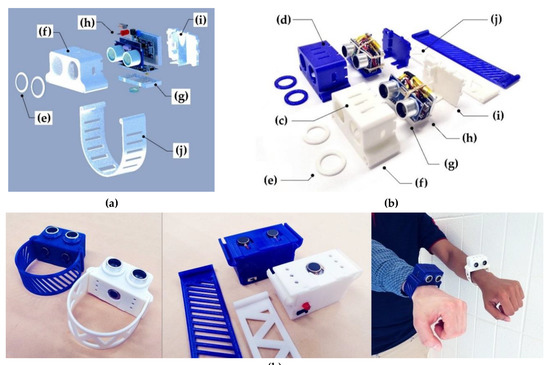

An open-source navigational support with 3-D printable case components was developed in the form of two models of a wearable bracelet, with one and two vibration motors respectively (Figure 1), to help visually impaired people in distance measurement and obstacle avoidance. The system is based on a 5-volt HC-SR04 ultrasonic sensor [69], which uses SONAR (originally an acronym for sound navigation ranging) to determine the distance to an object in the range of 0.02–4 m with a measuring angle of 15 degrees. It detects obstacles in front of the user’s body from the ground to the head and above, and provides haptic feedback using a 10 mm flat vibration motor [70], which generates oscillations with variable frequency and amplitude depending on the distance to the obstacle. The microcontroller unit coordinates the operation of the motor and the sensor based on the developed algorithm, which is uploaded to the Arduino as C code.

Figure 1.

Parts of an open-source navigational support with 3-D printable case components: (a) 3-D prototype; (b) Components; (c) Model 1 with one vibration motor; (d) Model 2 with two vibration motors; (e) Locking rings; (f) Case; (g) Vibration pad; (h) Sensor core; (i) Back cap; (j) Bracelet; (k) Assembly.

The device can be placed on the right or left hand, and it does not prevent the use of the hand for other tasks. It conveys point-distance information and could be used as a part of an assembly of assistive devices or as an augmentation to a regular white cane. In that way, the active sensing approach [15] was utilized, in which a person constantly scans the ambient environment. This method allows a user to achieve better spatial perception and accuracy [15] due to the similarity to natural sensory processes [71,72].

2.2. Bill of Materials

The system was prototyped for people with no engineering skills and limited access to materials, so that they can finish the assembly with a minimal toolkit. The bill of materials is summarized in Table 1. A 5 V DC-DC boost step-up module is an optional component that can be used to extend battery life.

Table 1.

Bill of materials for the open-source ultrasound-based navigational support.

2.3. Assembly

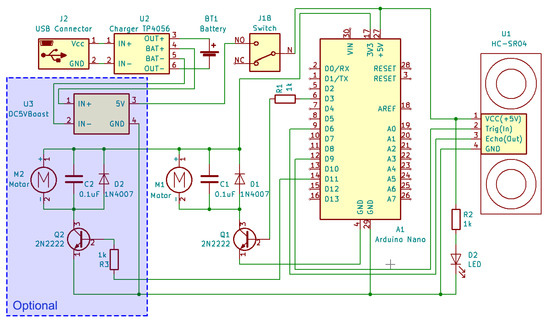

After all the necessary components have been 3-D printed, the electronic parts should be soldered together following Figure 2 and assembled into a sensor core with the vibration motor.

Figure 2.

Electrical circuit.

The Arduino Nano board should be programmed with the code available at [73], and the electronic core assembly should be placed in the 3-D printed case to finish the whole assembly (Figure 1k). All the CAD models and STL files are available online under an open-source CC BY-SA 3.0 license (Creative Commons – Attribution – Share Alike) [74]. The hand bracelet (Figure 1j) has an online option for customization [75], so a person with no experience with complicated 3-D modeling software can print the part after adjusting it to their hand size. For Arduino programming, it is necessary to download the free, open-source Arduino IDE [76]. The final assembly of the sensor core and 3-D printed case components is demonstrated in the supplementary material (Video S1).

2.4. Operational Principles

The ultrasonic sensor emits acoustic waves at a frequency of 40 kHz, which travel through the air and reflect from objects within the working zone. In every measurement cycle, a 10 µs trigger input pulse starts an 8-cycle sonic burst that travels at the speed of sound, and its reflection from an object is received by the echo receiver [69]. The sensor then returns an output echo pulse of 150 µs to 25 ms (38 ms if there is no obstacle), whose width, $T_{echo}$, depends linearly on the distance to the detected obstacle:

$$D = \frac{T_{echo}}{k} \tag{1}$$

where $T_{echo}$ is the output echo pulse width [µs], $D$ is the measured distance to an obstacle [cm], and $k = 58$ µs/cm is the conversion factor given by the sensor datasheet.

The measurement cycle, Tmeasure, is specified in the Arduino program as a constant time duration declared by the developer; according to the sensor datasheet, it should be no less than 60 ms [69]. The distance to the object is determined in the Arduino program from the time delay between sending and receiving the sonic pulses.
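The conversion from echo pulse width to distance can be illustrated with a minimal Python sketch. It is not the authors' Arduino firmware: the function name `echo_to_distance_cm` is hypothetical, and treating a 38 ms pulse as "no obstacle" by returning `None` is an assumption about how the timeout described above might be handled.

```python
from typing import Optional

# Conversion factor quoted in the text from the sensor datasheet (µs per cm).
K_US_PER_CM = 58.0
# Echo pulse width reported when no obstacle is detected (38 ms, per the text).
NO_OBSTACLE_US = 38_000.0


def echo_to_distance_cm(t_echo_us: float) -> Optional[float]:
    """Convert an echo pulse width in µs to a distance in cm.

    Returns None when the pulse width signals that nothing was detected
    (an assumed convention, not taken from the original firmware).
    """
    if t_echo_us >= NO_OBSTACLE_US:
        return None
    return t_echo_us / K_US_PER_CM


if __name__ == "__main__":
    print(echo_to_distance_cm(5800.0))    # 100.0 (cm)
    print(echo_to_distance_cm(38000.0))   # None (no obstacle)
```

A pulse of 5800 µs therefore corresponds to an obstacle 1 m away, and the shortest valid pulse of 150 µs corresponds to roughly 2.6 cm.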

A single exponential filter [77] was used to smooth noisy sensor measurements. It processes the signal with the desired smoothing factor without using a significant amount of memory. Every time a new measured value $y_t$ is provided, the exponential filter updates a smoothed observation, $S_t$:

$$S_t = \alpha \cdot y_t + (1 - \alpha) \cdot S_{t-1}, \quad 0 < \alpha < 1 \tag{2}$$

where $S_{t-1}$ is the previous output value of the filter, $y_t$ is a new measured value, and $\alpha = 0.5$ is the smoothing constant.
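The filter update can be sketched in a few lines of Python. This is an illustration of the single exponential filter with the smoothing constant of 0.5 given in the text, not the authors' code; the function name `exponential_filter` is hypothetical, and seeding the filter with the first sample is an assumption, since the text does not state how the filter is initialized.

```python
from typing import Iterable, List


def exponential_filter(samples: Iterable[float], alpha: float = 0.5) -> List[float]:
    """Apply S_t = alpha * y_t + (1 - alpha) * S_{t-1} to a sequence of readings.

    The first smoothed value is set to the first sample (assumed initialization).
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    smoothed: List[float] = []
    previous = None
    for y in samples:
        current = float(y) if previous is None else alpha * y + (1.0 - alpha) * previous
        smoothed.append(current)
        previous = current
    return smoothed


if __name__ == "__main__":
    # A single 200 cm outlier in a run of 100 cm readings is damped, not passed through.
    print(exponential_filter([100.0, 100.0, 200.0, 100.0]))
    # [100.0, 100.0, 150.0, 125.0]
```

Only the previous output has to be stored between measurements, which is why the filter needs very little memory on a microcontroller.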

Total measurement time consists of the traveling time caused by the finite speed of sound and the delay necessary for measurements. The time delay caused by the finite speed of sound, Tmax travel, is:

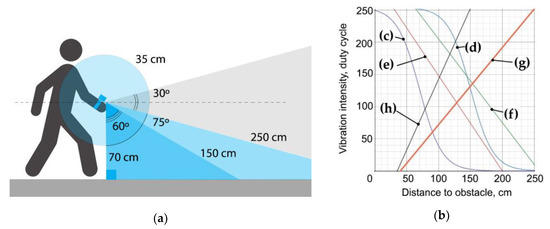

where, Dmax is the maximum measured distance to an obstacle and Vsound is the speed of sound in air. The measured distance is modulated with vibration amplitude and translated in real-time as a duty cycle parameter from the Arduino board (Figure 3). Distances up to 35 cm are characterized by single vibration pulses with a relatively high periodicity. Distances from 150 to 250 cm are characterized by single pulses with low periodicity, and distances above 250 cm are modulated with two-pulse beats.

Tmax travel = Dmax / Vsound = 2 · 4 / 340 = 8 / 340 = 24 (ms),

Figure 3.

The ultrasonic sensor operating principles: (a) The principal distances (not to scale); (b) Calibration of the optimal duty cycle equation for the distance range of 35 cm to 150 cm, where (c) MDC = 127 + 127 · tanh (−(D − 70) / 35); (d) MDC = 127 + 127 · tanh (-(D - 150) / 35); (e) MDC = 296 – 1.5 · D; (f) MDC = 335 – 1.3 · D; (g) MDC = −77 + 2.2 · D; (h) MDC = −48 + 1.2 · D.

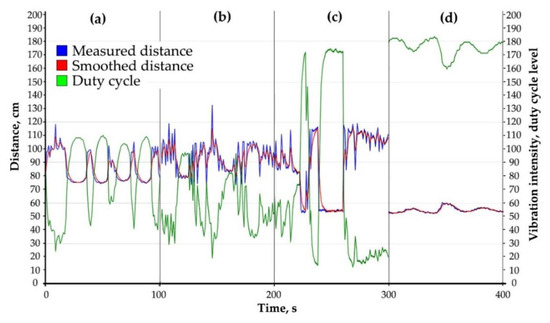

An optimal duty cycle equation (Figure 3c) for the most common distance range of 35–150 cm was found during experiments and calibrations (Figure 3b). The generated duty cycle for the Arduino output, $M_{DC}$, is:

$$M_{DC} = m + m \cdot \tanh\left(-\frac{D - k}{b}\right) = 127 + 127 \cdot \tanh\left(-\frac{D - 70}{35}\right), \quad 0 < M_{DC} < 255 \tag{4}$$

where $m = 127$, $k = 70$, and $b = 35$ are the calibrated parameters ($k$ here is distinct from the sensor conversion factor of 58 µs/cm), and $D$ is the measured distance in the range of 35 cm to 150 cm. This modulation law is based on the hyperbolic tangent function, tanh (Figure 3c), which is close in shape to the inverse of pain sensitization [78,79] and demonstrated the best efficiency in the most common tasks (Figure 4).
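To make the banded modulation concrete, the following Python sketch evaluates the hyperbolic tangent duty cycle law with the calibrated parameters from the text and classifies a distance into one of the four feedback bands. It is a sketch under stated assumptions rather than the authors' firmware: the function names and band labels are hypothetical, and the treatment of the exact boundary values (35, 150, and 250 cm) is an assumption, since the text does not say which band each boundary belongs to.

```python
import math

# Calibrated parameters given in the text for the 35-150 cm band.
M = 127.0
K = 70.0
B = 35.0


def duty_cycle(distance_cm: float) -> float:
    """Duty cycle value from the tanh law: m + m * tanh(-(D - k) / b)."""
    return M + M * math.tanh(-(distance_cm - K) / B)


def feedback_band(distance_cm: float) -> str:
    """Classify a distance into one of the four feedback bands.

    Boundary handling (< vs <=) is an assumption for illustration.
    """
    if distance_cm < 35.0:
        return "single pulses, high periodicity"
    if distance_cm <= 150.0:
        return "duty-cycle modulation"
    if distance_cm <= 250.0:
        return "single pulses, low periodicity"
    return "two-pulse beats"


if __name__ == "__main__":
    for d in (35.0, 70.0, 150.0):
        print(d, feedback_band(d), round(duty_cycle(d), 1))
```

With these parameters the duty cycle falls from about 224 at 35 cm, through 127 at 70 cm, to about 3 at 150 cm, so the vibration is strongest for the nearest obstacles in the band and fades smoothly toward its far edge.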

Figure 4.

Calibration procedure of the duty cycle modulation based on hyperbolic tangent function (4): (a) Hand swinging; (b) Wall following; (c) Obstacle detection; and (d) Curbs tracking.

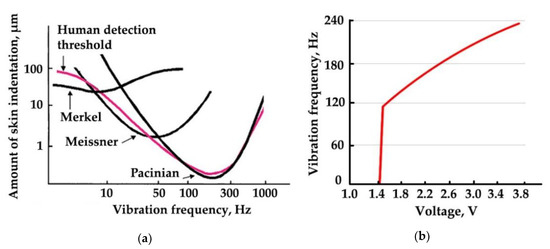

According to [80,81], there are four major types of tactile mechanoreceptors in human skin: (1) Merkel’s disks, (2) Meissner’s corpuscles, (3) Ruffini endings, and (4) Pacinian corpuscles. Meissner’s corpuscles respond to high-amplitude, low-frequency stimuli, whereas Pacinian corpuscles respond to low-amplitude, high-frequency stimuli. Thus, by varying the amplitude and frequency of vibrations, it is possible to activate these mechanoreceptors separately, which increases the working range of sensitivity levels (Figure 5a). The vibration pad (Figure 1g) with the vibration motor is in contact with the skin on the outer area of the wrist. As the nominal frequency characteristics of the motor (Figure 5b) do not cover the full range of the human threshold for detection of vibrotactile stimulation, switching the motor on and off at different intervals makes it possible to simulate low-frequency pulsations.

Figure 5.

Human haptic sensitivity and vibration motor characteristics: (a) Psychophysically determined thresholds for detection of different frequencies of vibrotactile stimulation (adapted from [82]); (b) Vibration motor performance (adapted from [70]).

The estimated current draw of the whole device is about 50 mA, assuming that the vibration motor works 40% of the time. At this draw, a 400 mAh battery provides about 8 hours of autonomous operation, which is sufficient for test purposes as well as for general use, even if a blind person were walking throughout an entire working day.
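The battery life estimate is a simple ratio of capacity to average current, as the short Python sketch below shows. The function name `battery_life_hours` is hypothetical, and the calculation idealizes the battery by ignoring conversion losses and capacity derating, so real operating time would likely be somewhat shorter.

```python
def battery_life_hours(capacity_mah: float, average_current_ma: float) -> float:
    """Idealized run time in hours: capacity (mAh) divided by average current (mA)."""
    if average_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / average_current_ma


if __name__ == "__main__":
    # Values from the text: 400 mAh battery, about 50 mA average draw.
    print(battery_life_hours(400.0, 50.0))  # 8.0
```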

Finally, the cost saving in percent (P) of the device was determined by:

$$P = \frac{c - m}{c} \cdot 100 \tag{5}$$

where $c$ is the commercial cost of an equivalent device and $m$ is the cost in materials to fabricate the open source device. All economic values are given in U.S. dollars.
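As a worked illustration of the cost saving formula, the Python sketch below applies it to the most and least expensive commercial prices quoted in the introduction (UltraCane at 807.35 and SmartCane at 90.00). The material cost of 24.00 used here is an assumption: it is the upper bound reported for the device, not the actual bill-of-materials total, so the resulting percentages are conservative lower bounds rather than the paper's reported figures. The function name `cost_saving_percent` is hypothetical.

```python
def cost_saving_percent(commercial_cost: float, material_cost: float) -> float:
    """Cost saving in percent: P = (c - m) / c * 100."""
    if commercial_cost <= 0:
        raise ValueError("commercial cost must be positive")
    return (commercial_cost - material_cost) / commercial_cost * 100.0


if __name__ == "__main__":
    ASSUMED_MATERIAL_COST = 24.00  # upper bound from the text, not the exact BOM total
    for name, price in (("UltraCane", 807.35), ("SmartCane", 90.00)):
        saving = cost_saving_percent(price, ASSUMED_MATERIAL_COST)
        print(f"{name}: {saving:.1f}%")
```

Under this assumption the savings are about 97.0% against the UltraCane and about 73.3% against the SmartCane.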

2.5. Testing of the Device

Since there are no well-established tests for sensory substitution devices, the experimental setup was based on previous experience. García et al. [26] conducted an experiment with eight blind volunteers and evaluated the results in the form of a quiz, in which participants noted the efficiency of obstacle detection above the waistline. Pereira et al. [12] evaluated their prototype on both blind and sighted participants in five different scenarios to simulate real-world conditions, including head-, chest-, and foot-level obstacles and stairs. Maidenbaum et al. [15] performed a set of three experiments with 43 participants (38 of whom were sighted and blindfolded) to evaluate their prototype on basic everyday tasks, including distance estimation, navigation, and obstacle detection. Nau et al. [83] proposed an indoor, portable, standardized course for the assessment of visual function that can be used to evaluate obstacle avoidance among people with low or artificial vision.

Summarizing the experience of previous researchers, the set of experiments used to test the devices here covers indoor and outdoor, structured and natural environments in order to explore the intuitiveness of the developed device and its capabilities in everyday human tasks.

Five sighted blindfolded lab researchers took part in a series of tests, the main purpose of which was to collect the necessary information about the adaptation pace, usability, and performance of the developed system. The experiments were conducted in indoor and outdoor environments that were familiar to the users.

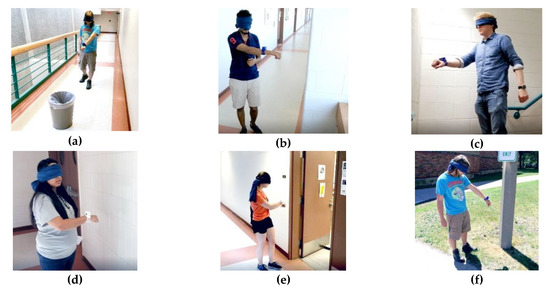

Participants were assigned to the following nine tests (Figure 6):

Figure 6.

Testing procedure. (a) Walk along the corridor with an unknown obstacle; (b) Bypass several corners indoors; (c) Walk through the staircase; (d) Wall following; (e) Detect the open door; (f) Detect an obstacle on the street; (g) Bypass an obstacle on the street; (h) Avoid collisions with pedestrians; (i) Interact with known objects.

- (a) Walk along the corridor with an unknown obstacle
- (b) Bypass several corners indoors
- (c) Navigate a staircase
- (d) Wall following
- (e) Detect the open door
- (f) Detect an obstacle (shrub plant/road sign) outdoors
- (g) Bypass an obstacle outdoors
- (h) Avoid collisions with pedestrians
- (i) Interact with known objects (bin/cardboard boxes)

Each participant had 10 minutes of training before the nine tests and made two attempts at each test: one using Model 1 (the white device, Figure 1c) and one using Model 2 (the blue device, Figure 1d). The task was to cover a distance of approximately 10 meters (20 steps) with obstacles (pedestrian, road sign, etc.) or with landmarks (wall, door, steps, etc.). An attempt was counted as a success if the participant finished the path relying only on the sensing device. Any collision with an obstacle or difficulty in passing the test path was considered a failure. The time spent on passing the test was not taken into account. The results of the experiments are presented in Table 2. Some of the experiments were recorded and included in the supplementary material (Video S1).

Table 2.

Results of the experiments.

3. Results and Discussion

All versions of the device were built for less than $24 USD each in readily available purchased components and 3-D printed parts. The economic savings over generally inferior commercial products ranged from 80.2–97.8% for the base system to 73.5–97.0% for the optional module system.

The devices were tested to demonstrate that they provide intuitive haptic feedback, as outlined above. The device range and accuracy were found to allow a person with vision loss to detect objects with an area of 0.5 m² or more within a distance range of up to 4 m. To avoid head-level obstacles, the user must sweep the head area along the direction in which they are moving. During the experiments, it was also found that it is possible to track objects moving at up to 0.5 m/s within a one-meter distance range.

As can be seen from Table 2, the failure rate is 6.7%, with the majority of failures occurring in experiments with Model 2 (the blue device with two vibration motors, Figure 1d). Some participants experienced difficulty navigating a staircase, as well as finding and avoiding unfamiliar obstacles indoors and outdoors.

The preliminary testing of the device was determined to be a success based on the majority of the participants being able to complete the nine tasks outlined in the methods section. During the experiments, all participants noted the effectiveness of the haptic interface, the intuitiveness of the learning and adaptation processes, and the usability of the device. The system responds quickly, naturally complements a person’s primary sensory perception, and allows one to detect both moving and static objects.

The system has several limitations. First, it has a narrow scanning angle and a limited response rate, so it can miss the danger posed by small and fast-moving objects. Second, the system has low spatial resolution. Thus, in an outdoor street environment, it was difficult for the experiment participants to track road curbs and to determine changes in the level of the road surface. Third, indoors, soft surfaces such as upholstered furniture and curtains, as well as indoor plants, can cause problems with distance estimation because of acoustic wave absorption. In open outdoor areas, determining the distance can be difficult on lawns with high grass and in areas with sand. In addition, given that the threshold of sensitivity increases with age [84], the experiments performed do not cover the diversity of the entire population of people with visual impairments.

This is a preliminary study on the technical specifications of the new device, and a much more complete study is needed on human subjects, including blind subjects. Future work is needed on further behavioral experimentation to improve data acquisition methods, obtain more data, and perform a comprehensive statistical analysis of the developed system’s performance. This will allow designers to utilize achievements in haptic technologies [85] and to improve the efficiency of the device’s tactile feedback, since the alternation of patterns of high-frequency vibrations, low-frequency impulses, and beats of different periodicity can significantly expand the range of sensory perception. Similarly, improved sensors could expand the range, and improved electronics could increase the speed at which objects can be detected. Minor improvements can also be made to the mechanical design to further reduce the size, alter the detector angle to allow for more natural hand movement, and improve the customizable design to allow for individual comfort settings as well as aesthetics.

4. Conclusions

The developed low-cost (<$24 USD), open-source navigational support system allows people with vision loss to solve the primary tasks of navigation, orientation, and obstacle detection (stationary objects larger than 0.5 m² within a distance range of up to 4 m, and objects moving at up to 0.5 m/s within a distance range of up to 1 m) to ensure their safety and mobility. The devices demonstrated intuitive haptic feedback, which becomes easier to use with short practice. The system can be largely digitally manufactured as an independent device or as a complementary part to the available means of sensory augmentation (e.g., a white cane). The device operates in similar distance ranges to most of the observed commercial products, and it can be replicated by a person without high technical qualification. Since the prices for available commercial products vary from $90–800 USD, the cost savings ranged from a minimum of 73.5% to over 97%.

Supplementary Materials

The following are available online at https://youtu.be/FA9r2Y27qvY. Video S1: Low-cost open source ultrasound-sensing based navigational support for visually impaired.

Author Contributions

Conceptualization, A.L.P. and J.M.P.; Data curation, A.L.P.; Formal analysis, A.L.P. and J.M.P.; Funding acquisition, J.M.P.; Investigation, A.L.P.; Methodology, A.L.P. and J.M.P.; Resources, J.M.P.; Software, A.L.P.; Supervision, J.M.P.; Validation, A.L.P.; Visualization, A.L.P.; Writing – original draft, A.L.P. and J.M.P.; Writing – review & editing, A.L.P. and J.M.P.

Funding

This research was funded by Aleph Objects and the Richard Witte Endowment.

Acknowledgments

This work was supported by the Witte Endowment. The authors would like to acknowledge Shane Oberloier for helpful discussions, and Apoorv Kulkarni, Nupur Bihari, Tessa Meyer for assistance in conducting experiments.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- World Health Organization. Blindness and Vision Impairment. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 5 July 2019).

- Bourne, R.R.A.; Flaxman, S.R.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; Leasher, J.; Limburg, H.; et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e888–e897.

- Velázquez, R. Wearable assistive devices for the blind. In Wearable and Autonomous Biomedical Devices and Systems for Smart Environment; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2010; Volume 75, pp. 331–349.

- Population Reference Bureau. Fact Sheet: Aging in the United States. Available online: https://www.prb.org/aging-unitedstates-fact-sheet/ (accessed on 5 July 2019).

- Economic Policy Institute. Financial Security of Elderly Americans at Risk. Available online: https://www.epi.org/publication/economic-security-elderly-americans-risk/ (accessed on 5 July 2019).

- American Foundation for the Blind. Available online: http://www.afb.org (accessed on 5 July 2019).

- National Federation of the Blind. Available online: https://www.nfb.org/resources/blindness-statistics (accessed on 5 July 2019).

- Quinones, P.A.; Greene, T.; Yang, R.; Newman, M.W. Supporting visually impaired navigation: A needs-finding study. In Proceedings of the CHI’11 Extended Abstracts on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 1645–1650.

- Gold, D.; Simson, H. Identifying the needs of people in Canada who are blind or visually impaired: Preliminary results of a nation-wide study. In International Congress Series; Elsevier: London, UK, 2015; Volume 1282, pp. 139–142.

- Christy, B.; Nirmalan, P.K. Acceptance of the long Cane by persons who are blind in South India. JVIB 2006, 100, 115–119.

- Perkins School for the Blind. 10 Fascinating Facts about the White Cane. Available online: https://www.perkins.org/stories/10-fascinating-facts-about-the-white-cane (accessed on 29 July 2019).

- Pereira, A.; Nunes, N.; Vieira, D.; Costa, N.; Fernandes, H.; Barroso, J. Blind Guide: An Ultrasound Sensor-based Body Area Network for Guiding Blind People. Procedia Comput. Sci. 2015, 67, 403–408.

- Kolarik, A.J.; Cirstea, S.; Pardhan, S.; Moore, B.C.J. A summary of research investigating echolocation abilities of blind and sighted humans. Hear. Res. 2014, 310, 60–68.

- Rosenblum, L.D.; Gordon, M.S.; Jarquin, L. Echolocating distance by moving and stationary listeners. Ecol. Psychol. 2000, 12, 181–206.

- Maidenbaum, S.; Hanassy, S.; Abboud, S.; Buchs, G.; Chebat, D.-R.; Levy-Tzedek, S.; Amedi, A. The “EyeCane”, a new electronic travel aid for the blind: Technology, behavior & swift learning. Restor. Neurol. Neurosci. 2014, 32, 813–824.

- UltraCane. Available online: https://www.ultracane.com/ultracanecat/ultracane (accessed on 5 July 2019).

- Independent Living Aids: Miniguide Mobility Aid. Available online: https://www.independentliving.com/product/Miniguide-Mobility-Aid/mobility-aids (accessed on 5 July 2019).

- LS&S 541035 Sunu Band Mobility Guide and Smart Watch. Available online: https://www.devinemedical.com/541035-Sunu-Band-Mobility-Guide-and-Smart-Watch-p/lss-541035.htm (accessed on 5 July 2019).

- Independent Living Aids: BuzzClip Mobility Guide. Available online: https://www.independentliving.com/product/BuzzClip-Mobility-Guide-2nd-Generation/mobility-aids (accessed on 5 July 2019).

- Independent Living Aids: iGlasses Ultrasonic Mobility Aid. Available online: https://www.independentliving.com/product/iGlasses-Ultrasonic-Mobility-Aid-Clear-Lens/mobility-aids (accessed on 5 July 2019).

- Caretec: Ray—The Handy Mobility Aid. Available online: http://www.caretec.at/Mobility.148.0.html?&cHash=a82f48fd87&detail=3131 (accessed on 5 July 2019).

- Khan, I.; Khusro, S.; Ullah, I. Technology-assisted white cane, evaluation and future directions. PeerJ 2018, 6, e6058.

- SmartCane Device. Available online: http://smartcane.saksham.org/overview (accessed on 29 July 2019).

- Elmannai, W.; Elleithy, K. Sensor-Based Assistive Devices for Visually-Impaired People: Current Status, Challenges, and Future Directions. Sensors 2017, 17, 565.

- Wahab, M.H.A.; Talib, A.A.; Kadir, H.A.; Johari, A.; Noraziah, A.; Sidek, R.M.; Mutalib, A.A. Smart Cane: Assistive Cane for Visually-impaired People. arXiv 2018, arXiv:1110.5156.

- García, A.R.; Fonseca, R.; Durán, A. Electronic long cane for locomotion improving on visual impaired people: A case study. In Proceedings of the 2011 Pan American Health Care Exchanges (PAHCE), Rio de Janeiro, Brazil, 28 March–1 April 2011.

- Kumar, K.; Champaty, B.; Uvanesh, K.; Chachan, R.; Pal, K.; Anis, A. Development of an ultrasonic cane as a navigation aid for the blind people. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari District, India, 10–11 July 2014.

- Amedi, A.; Hanassy, S. Infra Red Based Devices for Guiding Blind and Visually Impaired Persons. WO Patent 2012/090114 A1, 19 December 2011.

- Bharambe, S.; Thakker, R.; Patil, H.; Bhurchandi, K.M. Substitute Eyes for Blind with Navigator Using Android. In Proceedings of the India Educators Conference (TIIEC), Bangalore, India, 4–6 April 2013; pp. 38–43.

- Yi, Y.; Dong, L. A design of blind-guide crutch based on multi-sensors. In Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Zhangjiajie, China, 15–17 August 2015.

- Aymaz, Ş.; Çavdar, T. Ultrasonic Assistive Headset for visually impaired people. In Proceedings of the 2016 39th International Conference on Telecommunications and Signal Processing (TSP), Vienna, Austria, 27–29 June 2016.

- Agarwal, R.; Ladha, N.; Agarwal, M.; Majee, K.K.; Das, A.; Kumar, S.A.; Rai, S.K.; Singh, A.K.; Nayak, S.; Dey, S.; et al. Low cost ultrasonic smart glasses for blind. In Proceedings of the 2017 8th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 3–5 October 2017; pp. 210–213.

- De Alwis, D.; Samarawickrama, Y.C. Low Cost Ultrasonic Based Wide Detection Range Smart Walking Stick for Visually Impaired. Int. J. Multidiscip. Stud. 2016, 3, 123–130.

- Landa-Hernández, A.; Bayro-Corrochano, E. Cognitive guidance system for the blind. In Proceedings of the IEEE World Automation Congress (WAC), Puerto Vallarta, Mexico, 24–28 June 2012.

- Fradinho Oliveira, J. The path force feedback belt. In Proceedings of the 2013 8th International Conference on Information Technology in Asia (CITA), Kuching, Malaysia, 1–4 July 2013.

- Saputra, M.R.U.; Widyawan; Santosa, P.I. Obstacle Avoidance for Visually Impaired Using Auto-Adaptive Thresholding on Kinect’s Depth Image. In Proceedings of the IEEE 14th International Conference on Scalable Computing and Communications and Its Associated Workshops (UTC-ATC-ScalCom), Bali, Indonesia, 9–12 December 2014.

- Aladrén, A.; López-Nicolás, G.; Puig, L.; Guerrero, J.J. Navigation Assistance for the Visually Impaired Using RGB-D Sensor with Range Expansion. IEEE Syst. J. 2016, 10, 922–932.

- Mocanu, B.; Tapu, R.; Zaharia, T. When Ultrasonic Sensors and Computer Vision Join Forces for Efficient Obstacle Detection and Recognition. Sensors 2016, 16, 1807.

- Prudhvi, B.R.; Bagani, R. Silicon eyes: GPS-GSM based navigation assistant for visually impaired using capacitive touch braille keypad and smart SMS facility. In Proceedings of the 2013 World Congress on Computer and Information Technology (WCCIT), Sousse, Tunisia, 22–24 June 2013.

- Tudor, D.; Dobrescu, L.; Dobrescu, D. Ultrasonic electronic system for blind people navigation. In Proceedings of the E-Health and Bioengineering Conference (EHB), Iasi, Romania, 19–21 November 2015.

- Gibb, A. Building Open Source Hardware: DIY Manufacturing for Hackers and Makers; Pearson Education: Crawfordsville, IN, USA, 2014; ISBN 978-0-321-90604-5.

- da Costa, E.T.; Mora, M.F.; Willis, P.A.; do Lago, C.L.; Jiao, H.; Garcia, C.D. Getting started with open-hardware: Development and control of microfluidic devices. Electrophoresis 2014, 35, 2370–2377.

- Ackerman, J.R. Toward Open Source Hardware. Univ. Dayton Law Rev. 2008, 34, 183–222.

- Blikstein, P. Digital fabrication and ‘making’ in education: The democratization of invention. In FabLabs: Of Machines, Makers and Inventors; Büching, C., Walter-Herrmann, J., Eds.; Transcript-Verlag: Bielefeld, Germany, 2013; Volume 4, pp. 1–21.

- Gershenfeld, N. How to Make Almost Anything: The Digital Fabrication Revolution. Foreign Aff. 2012, 91, 42–57.

- Sells, E.; Bailard, S.; Smith, Z.; Bowyer, A.; Olliver, V. RepRap: The Replicating Rapid—Maximizing Customizability by Breeding the Means of Production. In Proceedings of the World Conference on Mass Customization and Personalization, Cambridge, MA, USA, 7–9 October 2007.

- Jones, R.; Haufe, P.; Sells, E.; Iravani, P.; Olliver, V.; Palmer, C.; Bowyer, A. RepRap—The Replicating Rapid Prototyper. Robotica 2011, 29, 177–191.

- Bowyer, A. 3D Printing and Humanity’s First Imperfect Replicator. 3D Print. Addit. Manuf. 2014, 1, 4–5.

- Rundle, G. A Revolution in the Making; Simon and Schuster: New York, NY, USA, 2014; ISBN 978-1-922213-48-8.

- Kietzmann, J.; Pitt, L.; Berthon, P. Disruptions, decisions, and destinations: Enter the age of 3-D printing and additive manufacturing. Bus. Horiz. 2015, 58, 209–215.

- Lipson, H.; Kurman, M. Fabricated: The New World of 3D Printing; John Wiley & Sons: Hoboken, NJ, USA, 2013; ISBN 978-1-118-41694-5.

- Attaran, M. The rise of 3-D printing: The advantages of additive manufacturing over traditional manufacturing. Bus. Horiz. 2017, 60, 677–688.

- Pearce, J.M. Building Research Equipment with Free, Open-Source Hardware. Science 2012, 337, 1303–1304.

- Pearce, J. Open-Source Lab: How to Build Your Own Hardware and Reduce Research Costs, 1st ed.; Elsevier: Amsterdam, The Netherlands, 2013; ISBN 978-0-12-410462-4.

- Baden, T.; Chagas, A.M.; Gage, G.; Marzullo, T.; Prieto-Godino, L.L.; Euler, T. Open Labware: 3-D Printing Your Own Lab Equipment. PLoS Biol. 2015, 13, e1002086.

- Coakley, M.; Hurt, D.E. 3D Printing in the Laboratory: Maximize Time and Funds with Customized and Open-Source Labware. J. Lab. Autom. 2016, 21, 489–495.

- Zhang, C.; Wijnen, B.; Pearce, J.M. Open-Source 3-D Platform for Low-Cost Scientific Instrument Ecosystem. J. Lab. Autom. 2016, 21, 517–525.

- Wittbrodt, B.; Laureto, J.; Tymrak, B.; Pearce, J. Distributed Manufacturing with 3-D Printing: A Case Study of Recreational Vehicle Solar Photovoltaic Mounting Systems. J. Frugal Innov. 2015, 1.

- Gwamuri, J.; Wittbrodt, B.; Anzalone, N.; Pearce, J. Reversing the Trend of Large Scale and Centralization in Manufacturing: The Case of Distributed Manufacturing of Customizable 3-D-Printable Self-Adjustable Glasses. Chall. Sustain. 2014, 2, 30–40.

- Petersen, E.E.; Pearce, J. Emergence of Home Manufacturing in the Developed World: Return on Investment for Open-Source 3-D Printers. Technologies 2017, 5, 7.

- Petersen, E.E.; Kidd, R.W.; Pearce, J.M. Impact of DIY Home Manufacturing with 3D Printing on the Toy and Game Market. Technologies 2017, 5, 45.

- Woern, A.L.; Pearce, J.M. Distributed Manufacturing of Flexible Products: Technical Feasibility and Economic Viability. Technologies 2017, 5, 71.

- Smith, P. Commons people: Additive manufacturing enabled collaborative commons production. In Proceedings of the 15th RDPM Conference, Loughborough, UK, 26–27 April 2015.

- Gallup, N.; Bow, J.; Pearce, J. Economic Potential for Distributed Manufacturing of Adaptive Aids for Arthritis Patients in the US. Geriatrics 2018, 3, 89.

- Hietanen, I.; Heikkinen, I.T.S.; Savin, H.; Pearce, J.M. Approaches to open source 3-D printable probe positioners and micromanipulators for probe stations. HardwareX 2018, 4, e00042.

- Sule, S.S.; Petsiuk, A.L.; Pearce, J.M. Open Source Completely 3-D Printable Centrifuge. Instruments 2019, 3, 30.

- Oberloier, S.; Pearce, J.M. Belt-Driven Open Source Circuit Mill Using Low-Cost 3-D Printer Components. Inventions 2018, 3, 64.

- Blasch, B.B.; Wiener, W.R.; Welsh, R.L. Foundations of Orientation and Mobility, 2nd ed.; AFB Press: New York, NY, USA, 1997.

- SparkFun Electronics. HC-SR04 Ultrasonic Sensor Datasheet. Available online: https://github.com/sparkfun/HC-SR04_UltrasonicSensor (accessed on 5 July 2019).

- Precision Microdrives. PicoVibe Flat 3 × 10 mm Vibration Motor Datasheet. Available online: https://www.precisionmicrodrives.com/wp-content/uploads/2016/04/310-101-datasheet.pdf (accessed on 5 July 2019).

- Horev, G.; Saig, A.; Knutsen, P.M.; Pietr, M.; Yu, C.; Ahissar, E. Motor–sensory convergence in object localization: A comparative study in rats and humans. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2011, 366, 3070–3076.

- Lenay, C.; Gapenne, O.; Hanneton, S.; Marque, C.; Genouëlle, C. Sensory substitution: Limits and perspectives. In Touching for Knowing: Cognitive Psychology of Haptic Manual Perception; Advances in Consciousness Research; Hatwell, Y., Streri, A., Gentaz, E., Eds.; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2003; pp. 276–292.

- MOST: Ultrasound-Based Navigational Support, Arduino Nano Firmware. Available online: https://github.com/apetsiuk/MOST-Ultrasound-based-Navigational-Support (accessed on 7 July 2019).

- CAD Source Models for the Ultrasound-Sensing Based Navigational Support for Visually Impaired. Available online: https://www.thingiverse.com/thing:3717730 (accessed on 5 July 2019).

- Customizable Flexible Bracelet for the Ultrasound-Based Navigational Support. Available online: https://www.thingiverse.com/thing:3733136 (accessed on 7 July 2019).

- Arduino IDE. Available online: https://www.arduino.cc/en/Main/Software (accessed on 5 July 2019).

- NIST/SEMATECH e-Handbook of Statistical Methods. Available online: https://www.itl.nist.gov/div898/handbook//pmc/section4/pmc431.htm (accessed on 5 July 2019).

- Gottschalk, A.; Smith, D.S. New concepts in acute pain therapy: Preemptive analgesia. Am. Fam. Phys. 2001, 63, 1979–1985.

- Borstad, J.; Woeste, C. The role of sensitization in musculoskeletal shoulder pain. Braz. J. Phys. Ther. 2015, 19.

- Savindu, H.P.; Iroshan, K.A.; Panangala, C.D.; Perera, W.L.; Silva, A.C. BrailleBand: Blind support haptic wearable band for communication using braille language. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1381–1386.

- Sekuler, R.; Blake, R. Perception; McGraw-Hill: New York, NY, USA, 2002.

- Roe, A.W.; Friedman, R.M.; Chen, L.M. Multiple Representation in Primate SI: A View from a Window on the Brain. In Handbook of Neurochemistry and Molecular Neurobiology; Lajtha, A., Johnson, D.A., Eds.; Springer: Boston, MA, USA, 2007; pp. 1–16.

- Nau, A.C.; Pintar, C.; Fisher, C.; Jeong, J.H.; Jeong, K. A Standardized Obstacle Course for Assessment of Visual Function in Ultra Low Vision and Artificial Vision. J. Vis. Exp. 2014, 84, e51205.

- Stuart, M.B.; Turman, A.B.; Shaw, J.A.; Walsh, N.; Nguyen, V.A. Effects of aging on vibration detection thresholds at various body regions. BMC Geriatr. 2003, 3.

- Bermejo, C.; Hui, P. A survey on haptic technologies for mobile augmented reality. arXiv 2017, arXiv:1709.00698.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).