A Model-Based Method for Estimating the Attitude of Underground Articulated Vehicles

Abstract

1. Introduction

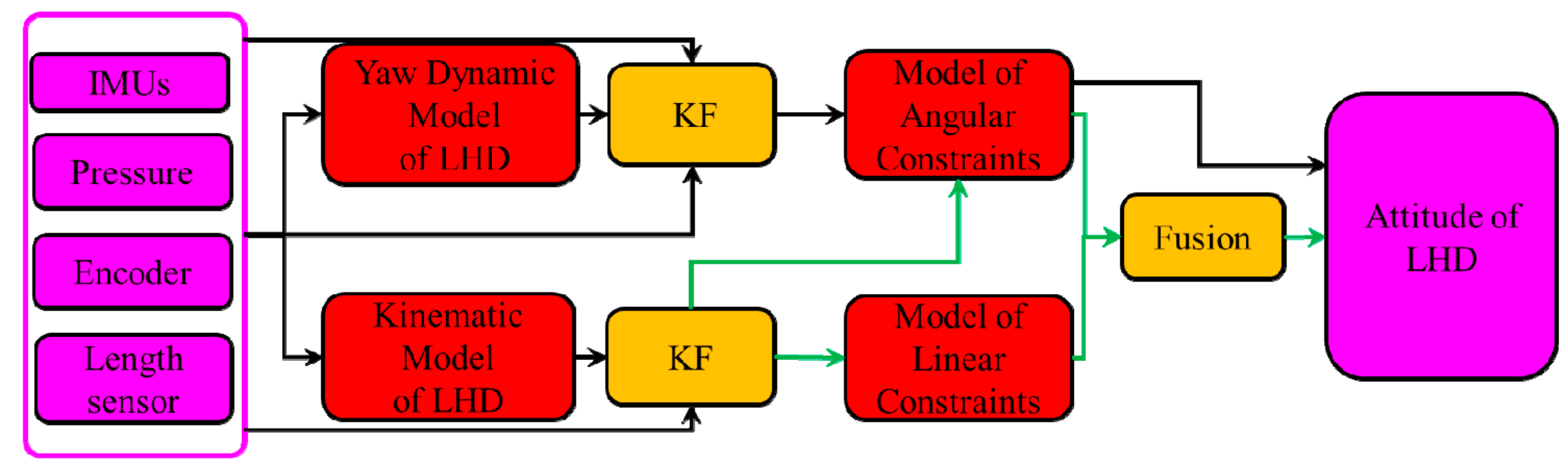

- (1) A novel model-based method for estimating the attitude of underground articulated vehicles (UAVs) is proposed. In the first step, the kinematic model and the constraint imposed by the center articulation are used to overcome the drift of the inertial measurement unit (IMU).

- (2) A model-based algorithm that combines the yaw dynamic model with a Kalman filter (KF) is developed to estimate the yaw motion of a load-haul-dump (LHD) vehicle.

- (3) Data from the different models and sensors are fused to improve the accuracy of the attitude estimates.
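All three contributions build on a common Kalman-filter pattern: a rate sensor (the gyro) is integrated, its drift is corrected with an angle supplied by a model or another sensor, and independent estimates are then fused. The sketch below illustrates only that generic pattern. It assumes a single scalar angle state, hand-picked noise values `q` and `r`, and simple inverse-variance fusion. The names `ScalarAngleKF` and `fuse` are hypothetical, and the paper's own filters (Section 2.3) use model-specific state vectors that are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class ScalarAngleKF:
    """Hypothetical one-state Kalman filter for a single attitude angle (deg).

    Predict with a gyro rate; correct with an angle "measurement" that would
    come from a vehicle model or an accelerometer (assumption, not the paper's).
    """
    x: float = 0.0   # angle estimate, deg
    p: float = 1.0   # estimate variance, deg^2
    q: float = 0.01  # process noise added per predict step (assumed), deg^2
    r: float = 0.1   # measurement noise variance (assumed), deg^2

    def predict(self, rate_dps: float, dt: float) -> None:
        # Integrate the gyro rate; the uncertainty grows by q every step,
        # which is how gyro drift enters the filter.
        self.x += rate_dps * dt
        self.p += self.q

    def update(self, z: float) -> None:
        # Correct the drifting prediction with the model-derived angle z.
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse(x1: float, var1: float, x2: float, var2: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two independent estimates."""
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    x = (w1 * x1 + w2 * x2) / (w1 + w2)
    return x, 1.0 / (w1 + w2)


if __name__ == "__main__":
    kf = ScalarAngleKF()
    for _ in range(200):
        kf.predict(rate_dps=0.0, dt=0.01)
        kf.update(z=2.5)  # constant pitch "measurement", deg
    print(round(kf.x, 3))
    print(fuse(1.0, 1.0, 3.0, 1.0))
```

The scalar state keeps the example short; a filter that also estimates the gyro bias would add a second state and a 2×2 covariance, but the predict/update structure stays the same.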

2. Method

2.1. Models

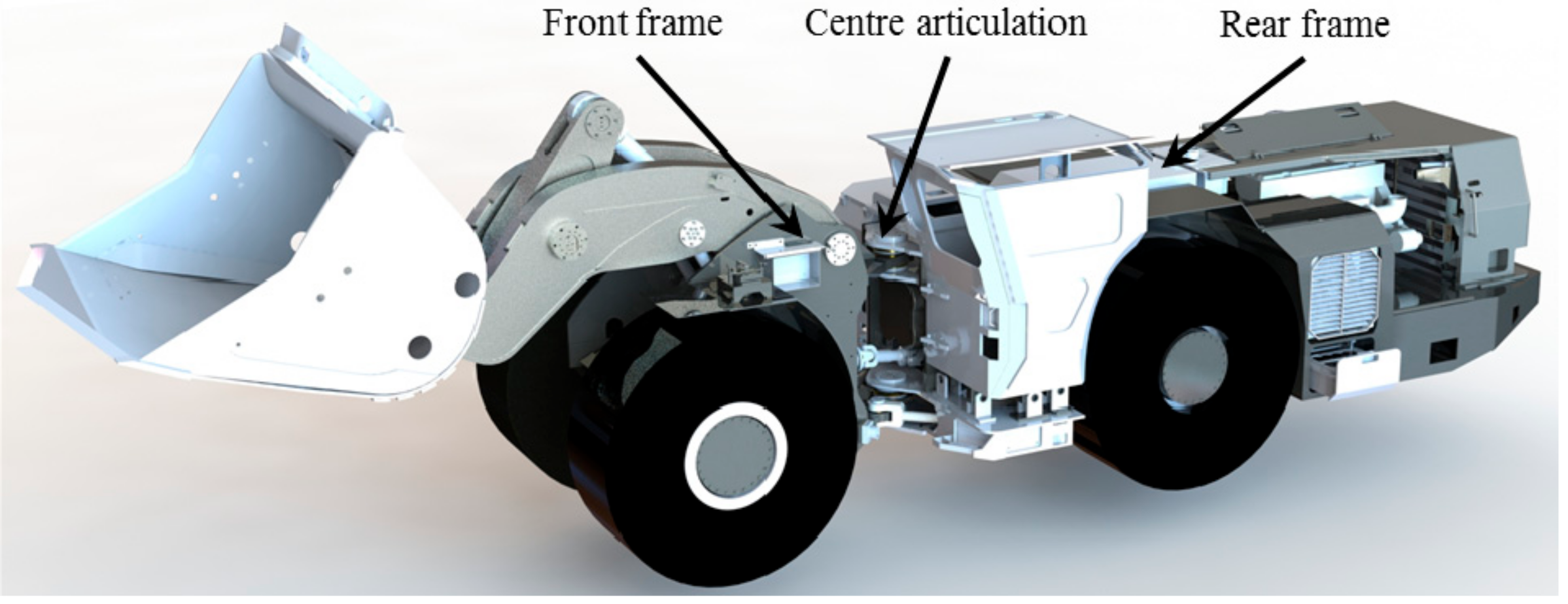

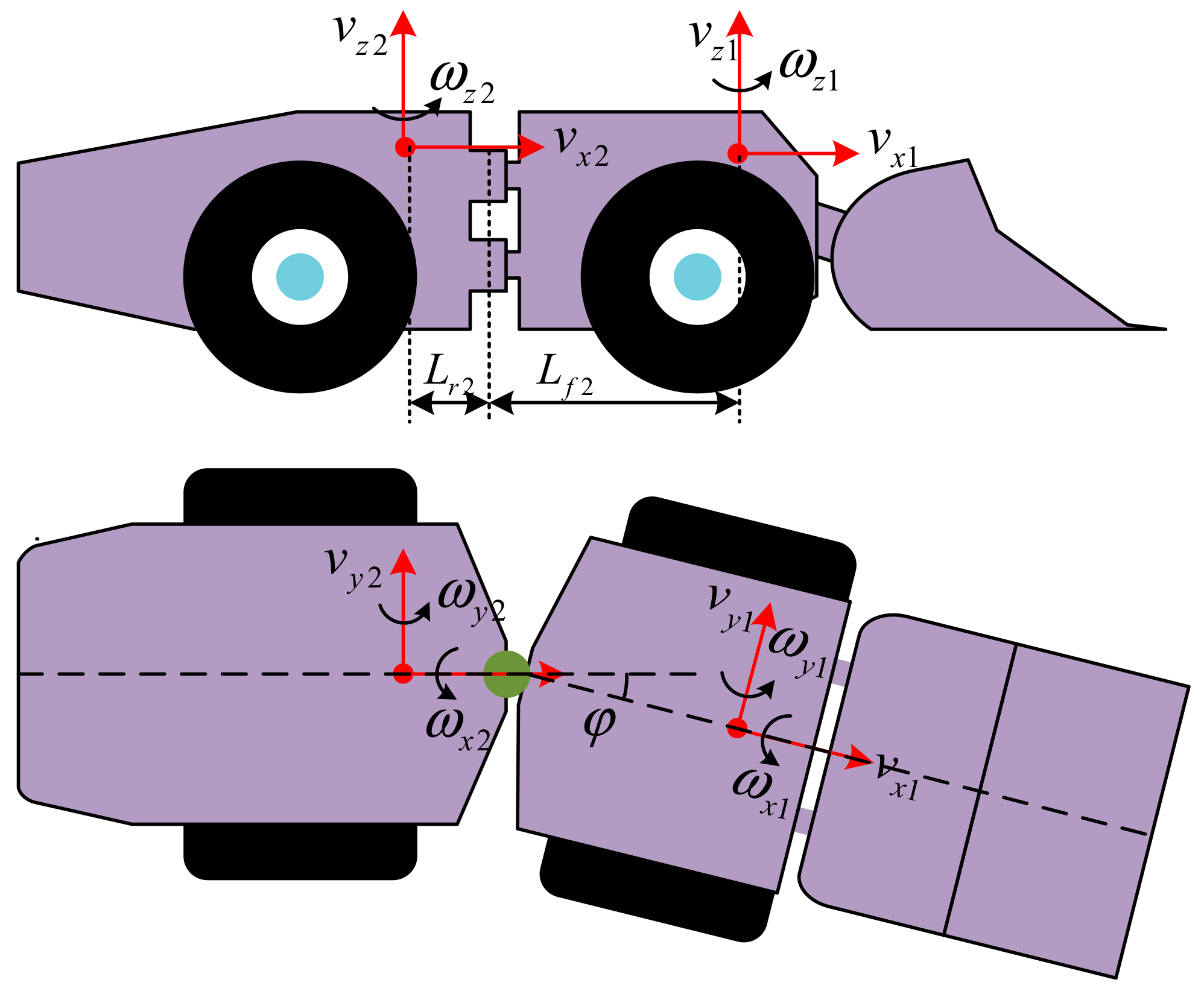

2.1.1. Kinematic Model of the LHD Vehicle

2.1.2. Dynamic Model of Yaw for the LHD Vehicle

2.1.3. Model of the Sensors

2.2. State Vector

2.2.1. State Vector of Pitch and Roll

2.2.2. State Vector of Yaw

2.3. Design of KFs

2.3.1. KF for Pitch and Roll

2.3.2. KF for Yaw

2.4. Fusion of States

3. Simulation Verification

3.1. Simulation Setup

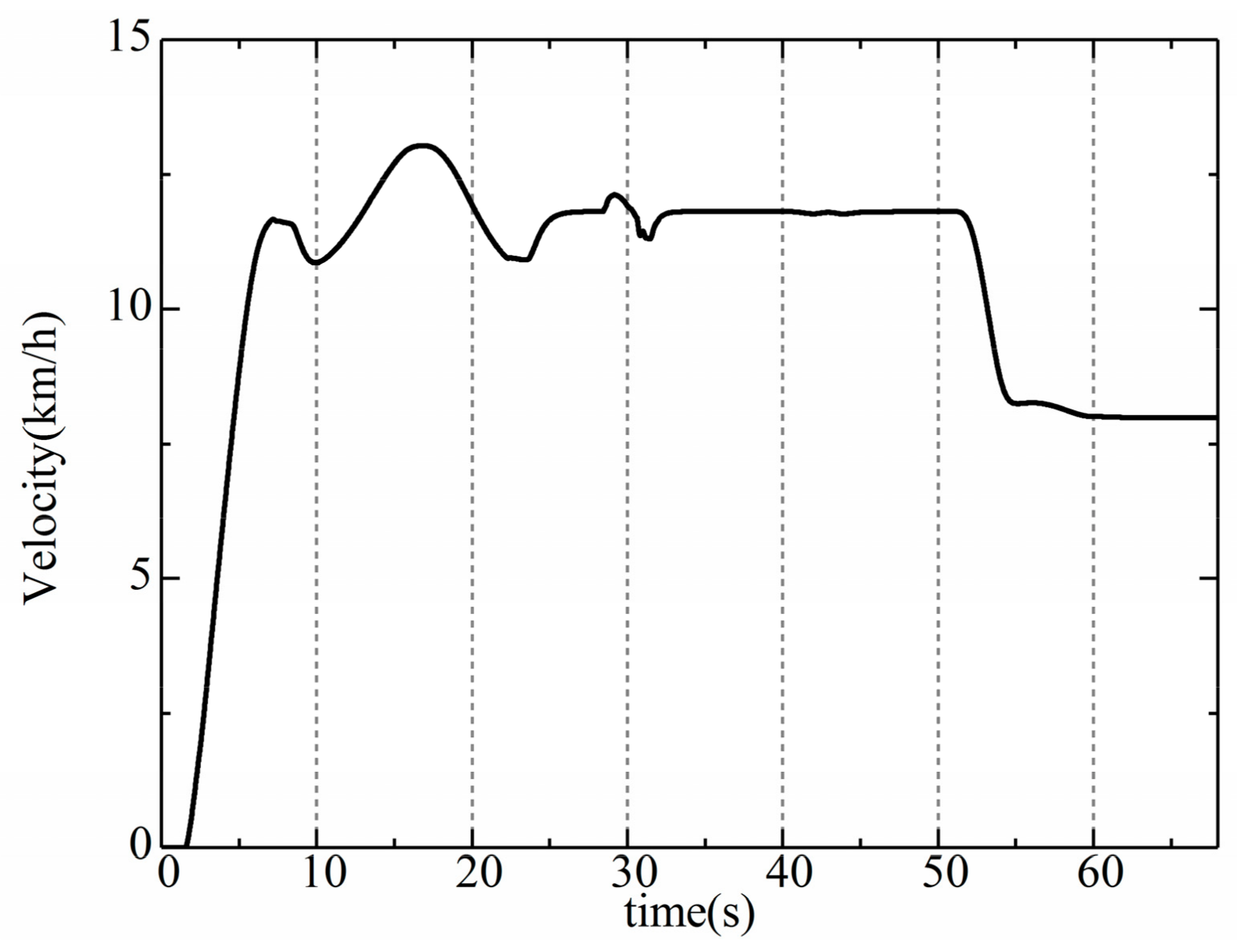

3.2. Simulation Conditions

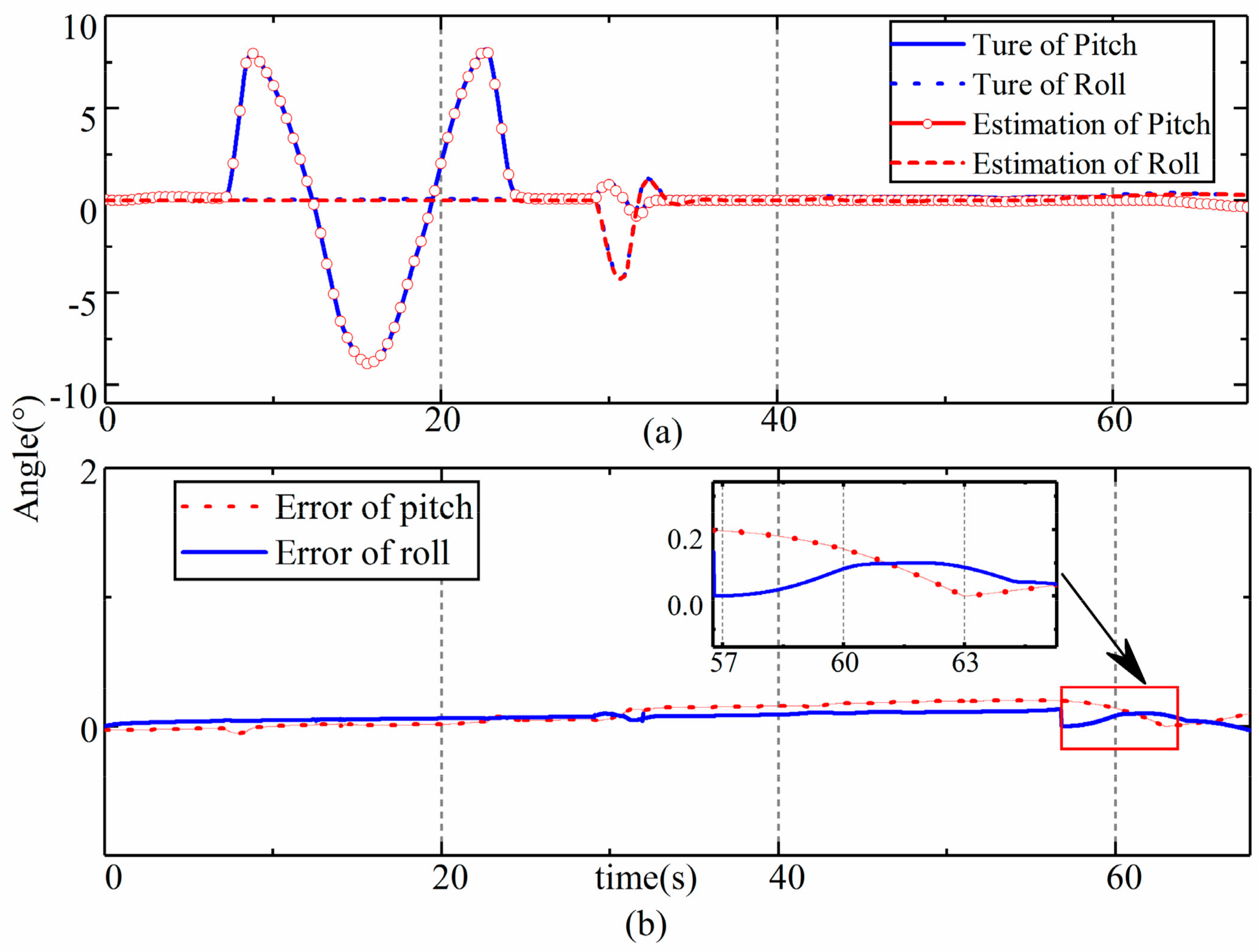

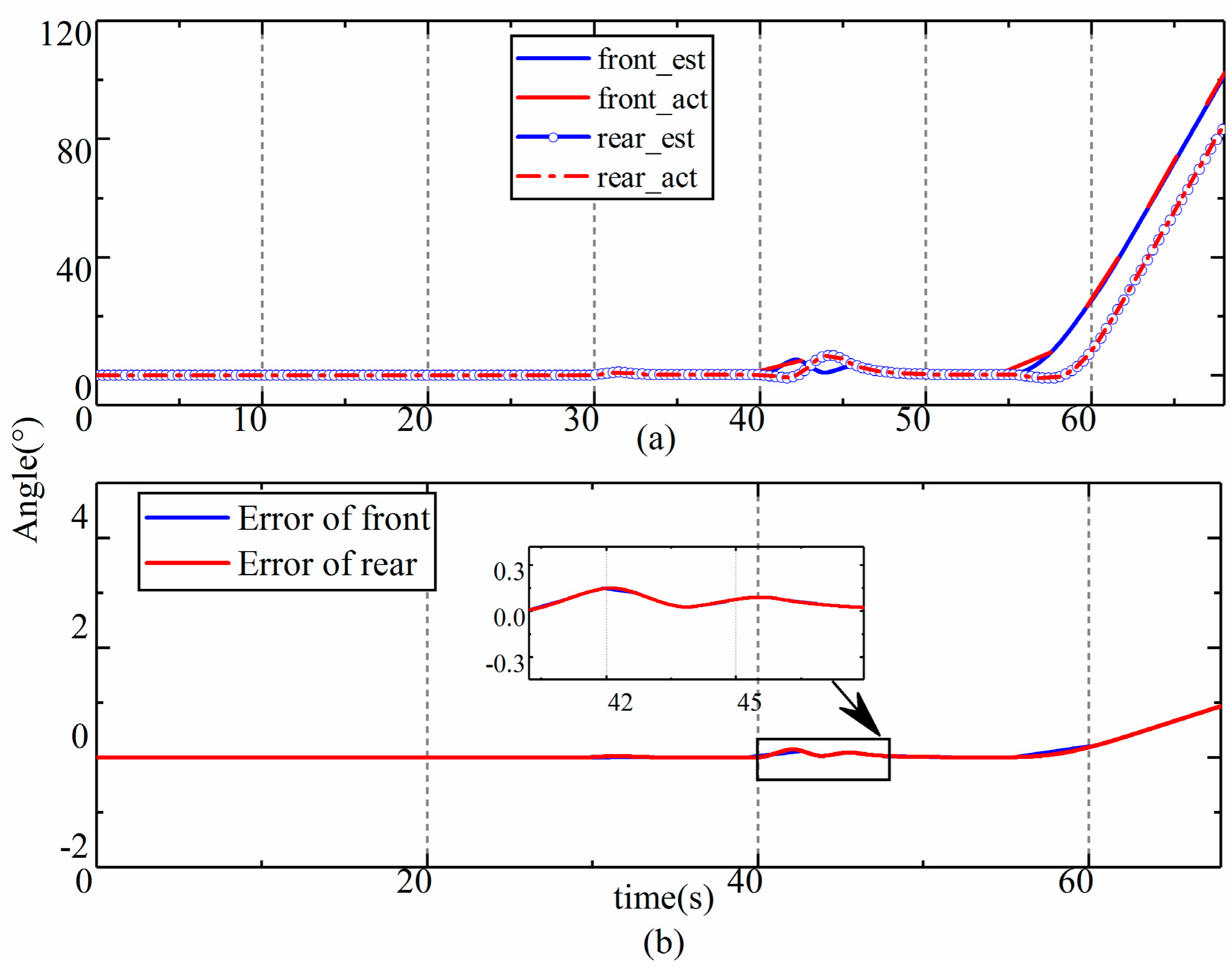

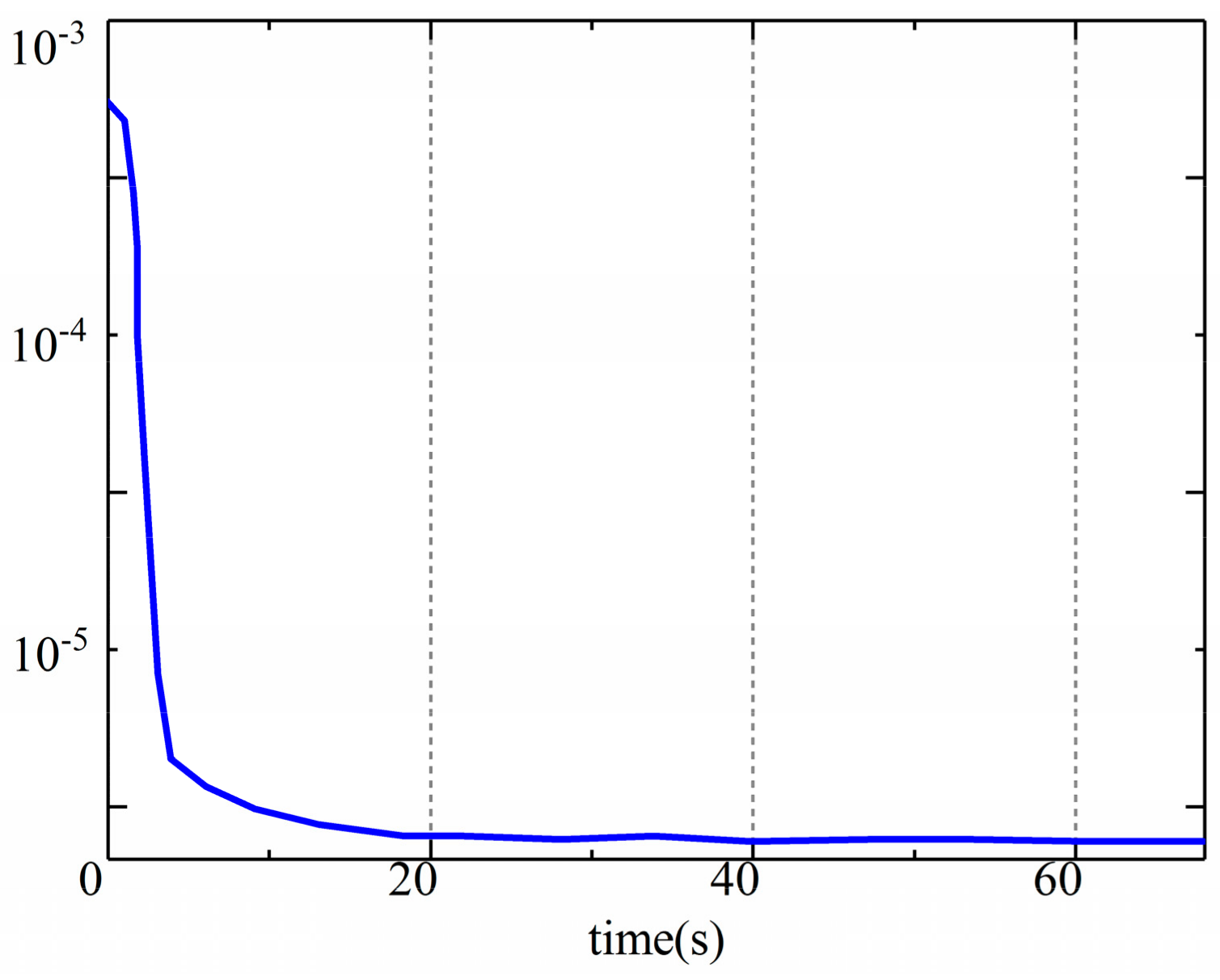

3.3. Estimation Results

4. Experimental Results

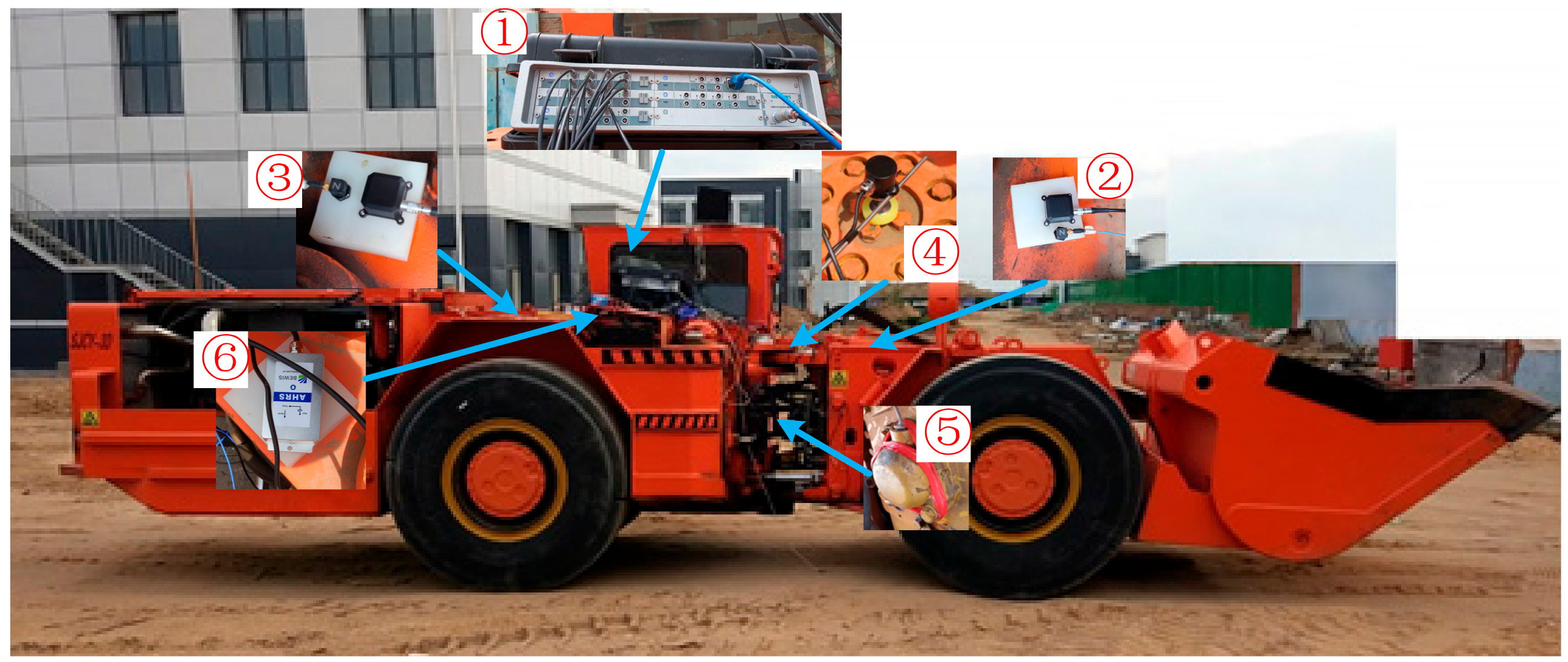

4.1. Experimental Setup

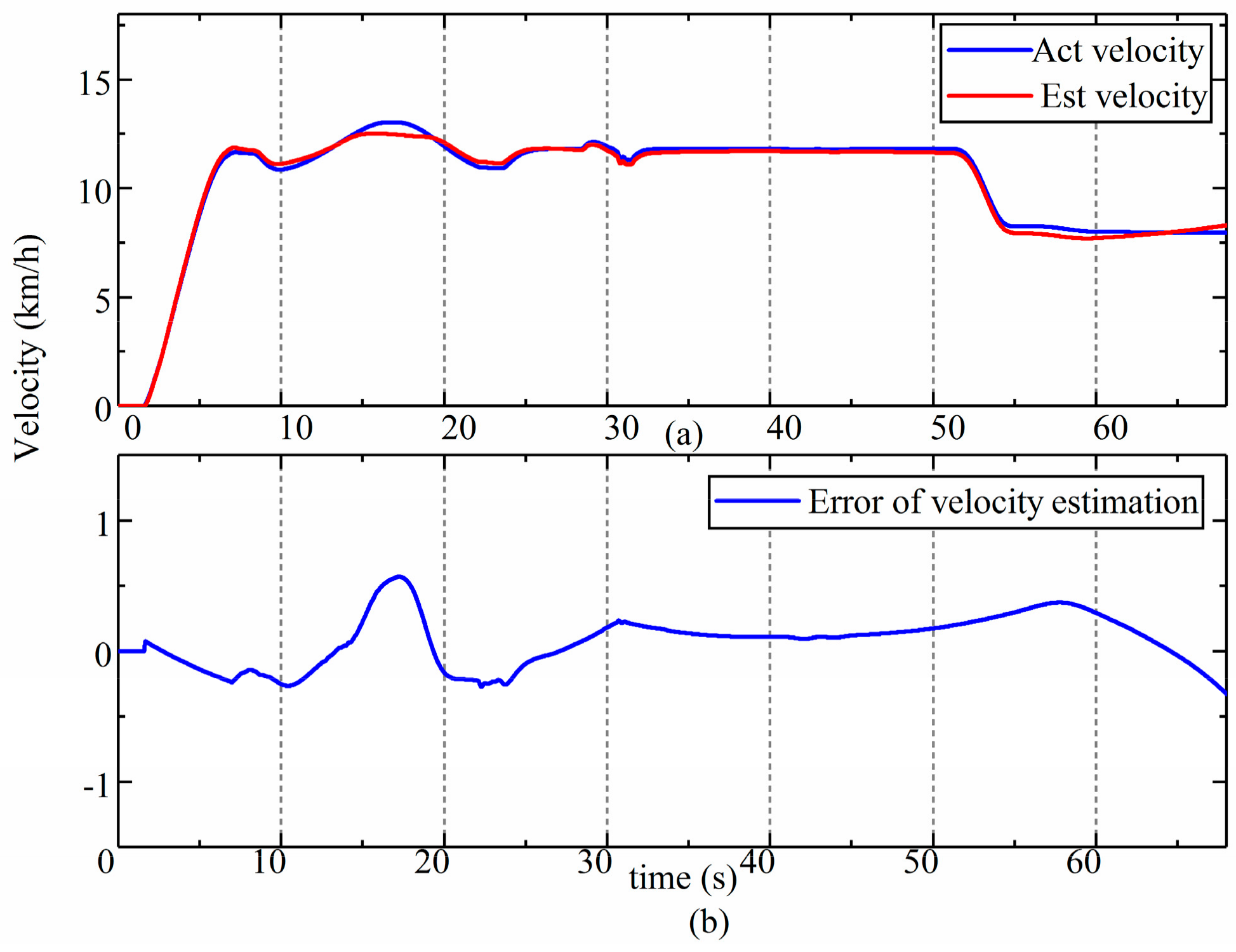

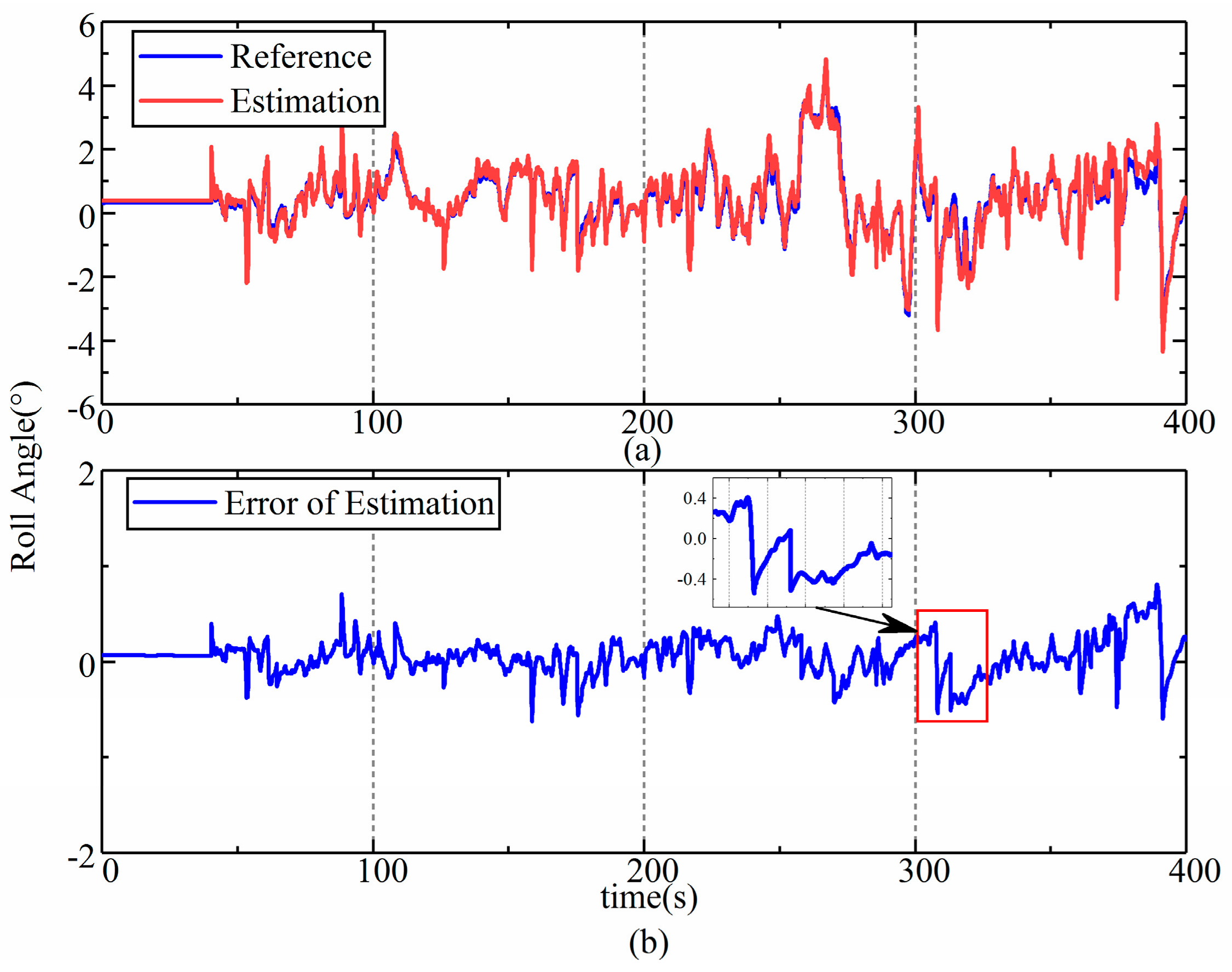

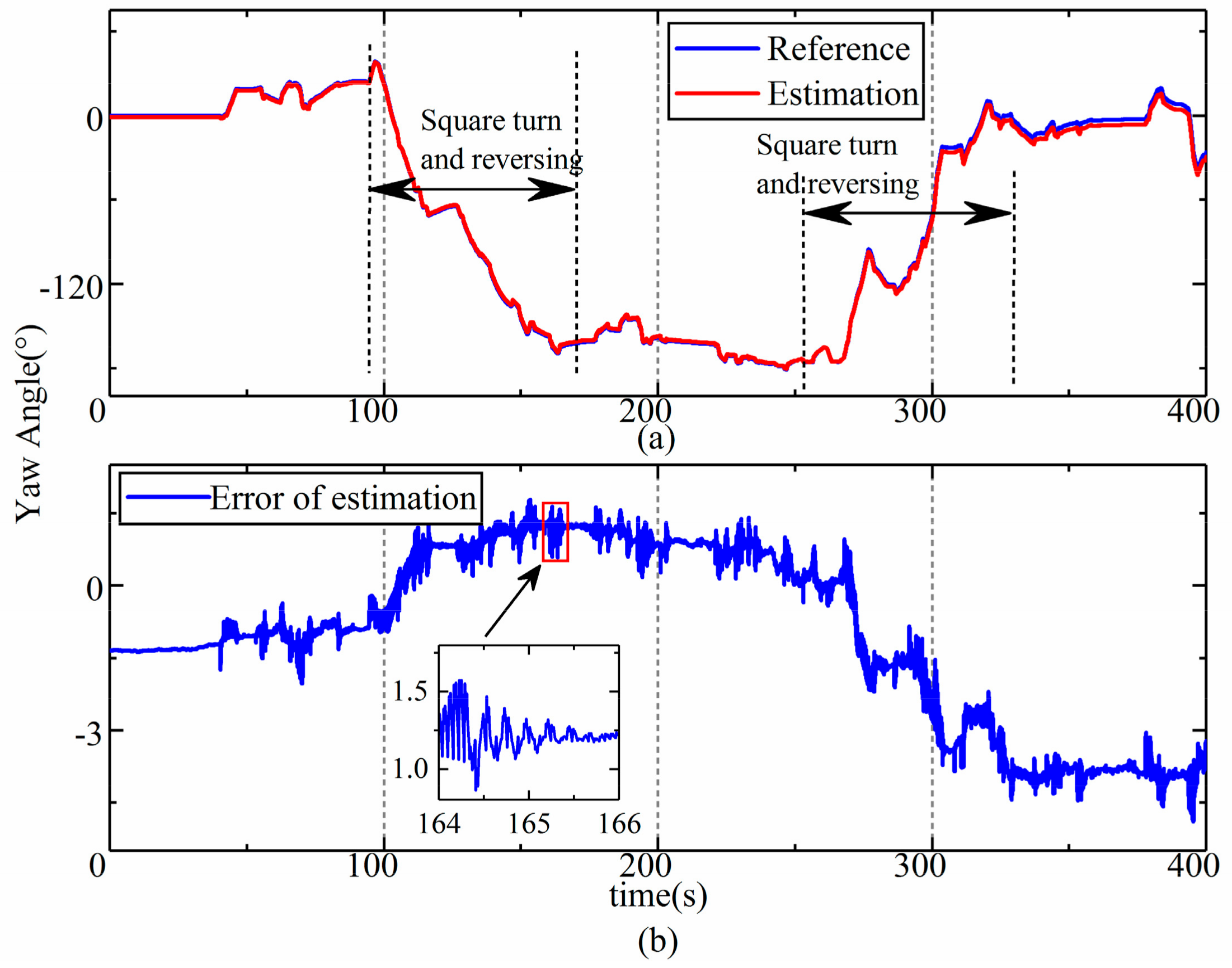

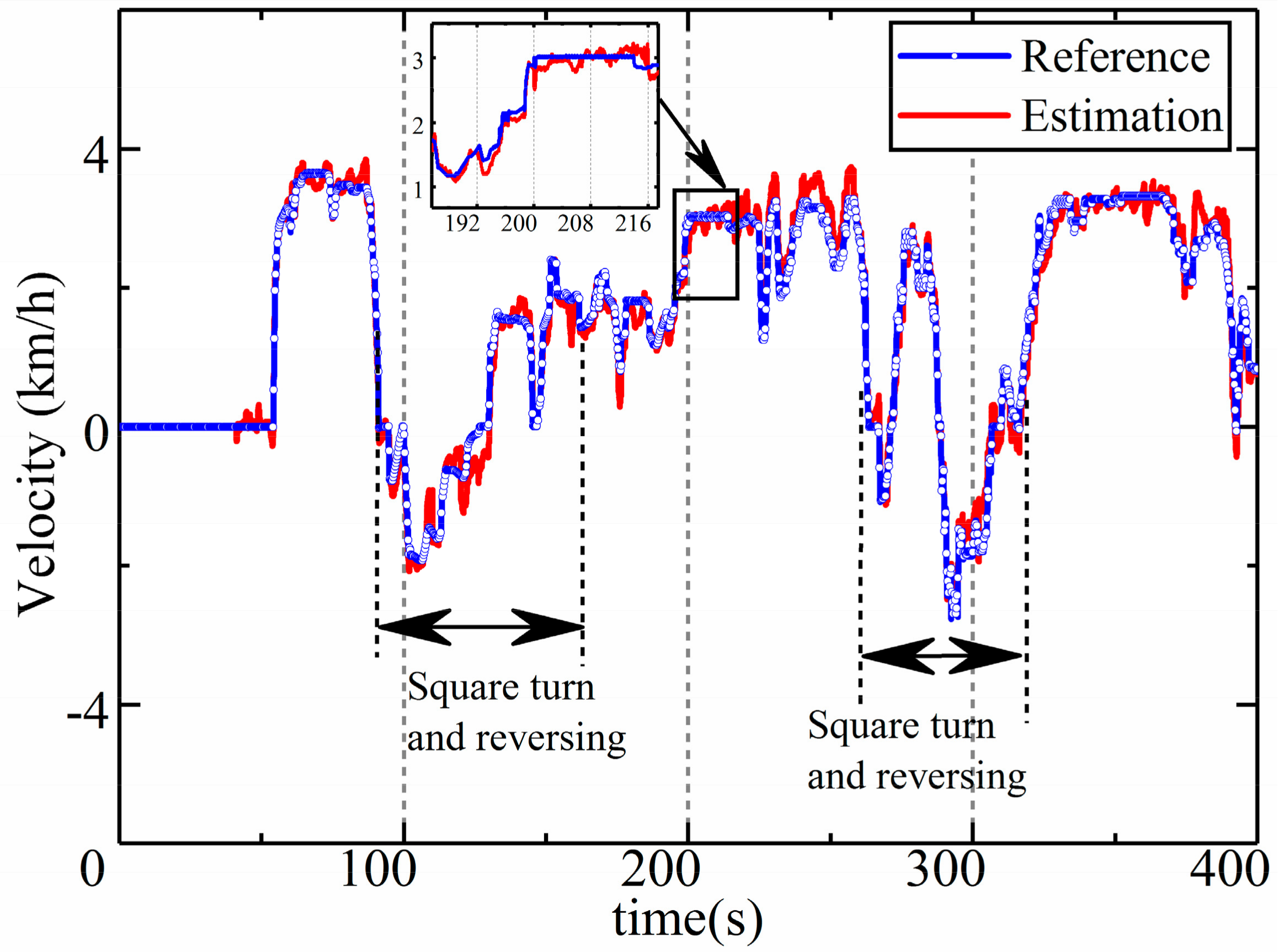

4.2. Experimental Results

4.3. Discussion of Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Symbol | Parameter | Value |
|---|---|---|
| m1 | mass of front frame | 12,850 kg |
| m2 | mass of rear frame | 15,650 kg |
| Iz1 | moment of inertia of front frame about the vertical (z) axis | 307,420 kg·m² |
| Iz2 | moment of inertia of rear frame about the vertical (z) axis | 380,320 kg·m² |
| Lf1 | distance from front center of mass to front axle | 0.3 m |
| Lf2 | distance from front center of mass to articulation point | 2.05 m |
| Lr1 | distance from rear center of mass to rear axle | 0.16 m |
| Lr2 | distance from rear center of mass to articulation point | 1.59 m |
| B | half of track width | 0.89 m |
| R | free (unloaded) radius of tire | 0.89 m |
| fv | vertical stiffness of tire | 80 kN/m |
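To illustrate how the geometry above can enter a kinematic model, the sketch below uses a widely used no-slip relation for center-articulated vehicles, in which the front-frame yaw rate is (v·sin γ + L_R·γ̇)/(L_F·cos γ + L_R), with γ the articulation angle (front heading minus rear heading, left positive) and L_F, L_R the axle-to-joint distances. This is not necessarily the exact form used in Section 2.1.1. The values of L_F and L_R are a hypothetical construction: they assume each center of mass lies between its axle and the articulation joint, so that L_F = Lf1 + Lf2 and L_R = Lr1 + Lr2.

```python
import math

# Hypothetical axle-to-joint lengths built from Appendix A, ASSUMING each
# center of mass lies between its axle and the articulation joint.
L_F = 0.3 + 2.05   # Lf1 + Lf2, front axle to joint (m)
L_R = 0.16 + 1.59  # Lr1 + Lr2, rear axle to joint (m)


def front_yaw_rate(v, gamma, gamma_dot, l_f=L_F, l_r=L_R):
    """Front-frame yaw rate (rad/s) from no-slip articulated kinematics.

    v: front-axle speed (m/s); gamma: articulation angle, front heading
    minus rear heading (rad); gamma_dot: articulation rate (rad/s).
    """
    return (v * math.sin(gamma) + l_r * gamma_dot) / (l_f * math.cos(gamma) + l_r)


def rear_yaw_rate(v, gamma, gamma_dot, l_f=L_F, l_r=L_R):
    """Rear-frame yaw rate: gamma = psi_f - psi_r, so psi_r' = psi_f' - gamma'."""
    return front_yaw_rate(v, gamma, gamma_dot, l_f, l_r) - gamma_dot


def front_turning_radius(gamma, l_f=L_F, l_r=L_R):
    """Steady-state (gamma_dot = 0) turning radius of the front axle center (m)."""
    s = math.sin(gamma)
    if s == 0.0:
        return math.inf  # straight-line driving
    return (l_f * math.cos(gamma) + l_r) / abs(s)
```

For example, `front_yaw_rate(2.0, math.radians(20), 0.0)` gives the front-frame yaw rate in rad/s at 2 m/s with a fixed 20° articulation. The term L_R·γ̇ shows why an articulated vehicle can yaw even at zero speed, which is the kind of constraint a model-based estimator can use to bound IMU drift.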


| Quantity | RMS error |
|---|---|
| Roll | 0.19 deg |
| Pitch | 0.10 deg |
| Yaw | 2.08 deg |
| Velocity | 0.21 km/h |
| Distance | 0.26 m |
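RMS errors such as those in the table are conventionally computed as the square root of the mean squared difference between the estimate and a reference signal. The reference used for each row is not given in this section, so the sketch below assumes paired samples. It also wraps angle differences into [-180, 180) degrees, which matters for yaw: an estimate of 359° against a reference of 1° is a 2° error, not 358°. The function names are hypothetical.

```python
import math
from typing import Sequence


def wrap_deg(d: float) -> float:
    """Wrap an angle difference into [-180, 180) degrees."""
    return (d + 180.0) % 360.0 - 180.0


def rms_error(estimate: Sequence[float], reference: Sequence[float],
              wrap_angles: bool = False) -> float:
    """Root-mean-square error between paired estimate/reference samples."""
    if len(estimate) != len(reference) or not estimate:
        raise ValueError("need two non-empty sequences of equal length")
    errs = [e - r for e, r in zip(estimate, reference)]
    if wrap_angles:
        # Only meaningful for angles in degrees (e.g. yaw).
        errs = [wrap_deg(e) for e in errs]
    return math.sqrt(sum(e * e for e in errs) / len(errs))
```

Passing `wrap_angles=True` only for the yaw channel keeps roll and pitch (which stay far from ±180°) and the non-angular rows unaffected.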

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gao, L.; Ma, F.; Jin, C. A Model-Based Method for Estimating the Attitude of Underground Articulated Vehicles. Sensors 2019, 19, 5245. https://doi.org/10.3390/s19235245