Off-Site Indoor Localization Competitions Based on Measured Data in a Warehouse †

Abstract

1. Introduction

2. Survey of the Existing Indoor Localization Competitions and Comparison with PDR Challenges

2.1. Overview of the Existing Indoor Localization Competitions

- Indoor Localization Competitions, organized by Microsoft [8];

- PDR Challenge (original, held at UbiComp2015/International Symposium on Wearable Computers (ISWC2015) [9]);

- PDR Challenge in Warehouse Picking 2017 (PDR Challenge 2017);

- xDR Challenge for Warehouse Operations 2018 (xDR Challenge 2018).

2.2. Survey Items

2.2.1. Scenarios

2.2.2. Types of Contained Motions

2.2.3. Testers

2.2.4. Competition Types

2.2.5. Target Types of Methods for the Competition

2.2.6. Amount of Test Data

2.2.7. Time Length of the Test (Data)

2.2.8. Space of the Test Environment or Length of the Test Course

2.2.9. Evaluation Methods

2.2.10. History of Competitions/Remarks about the Competitions

2.2.11. Number of Participants

2.2.12. Award and Prize for First Place

2.2.13. Connection with Academic Conference

2.3. Comparison of the Existing Indoor Localization Competitions

2.3.1. PerfLoc

2.3.2. EvAAL/IPIN Competition

2.3.3. Microsoft Indoor Localization Competitions

2.4. Competitions in PDR Challenge Series

2.4.1. PDR Challenge@UbiComp/ISWC2015 [9]

2.4.2. PDR Challenge in Warehouse Picking [13]

2.4.3. xDR Challenge for Warehouse Operations [13]

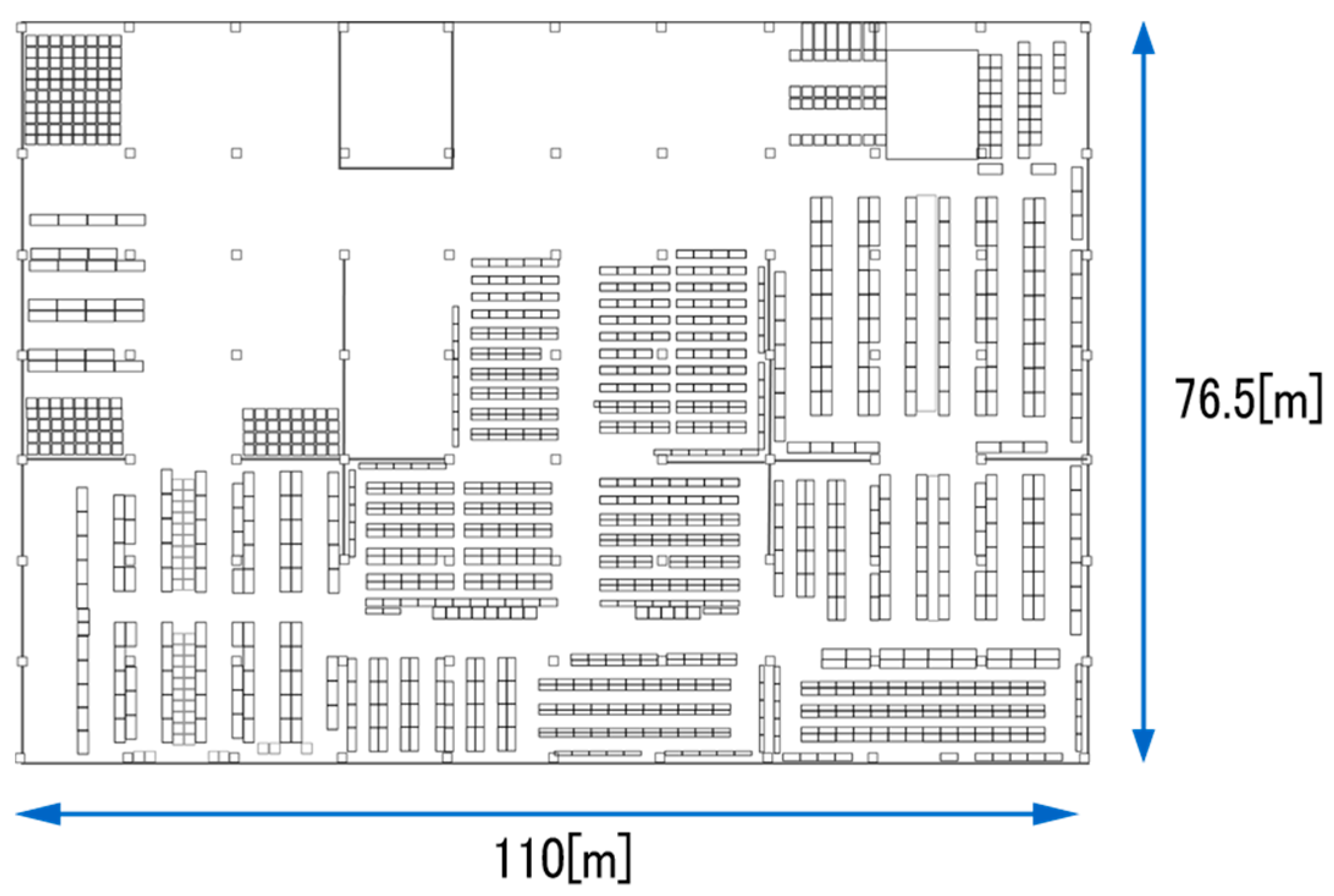

3. PDR Challenge in Warehouse Picking

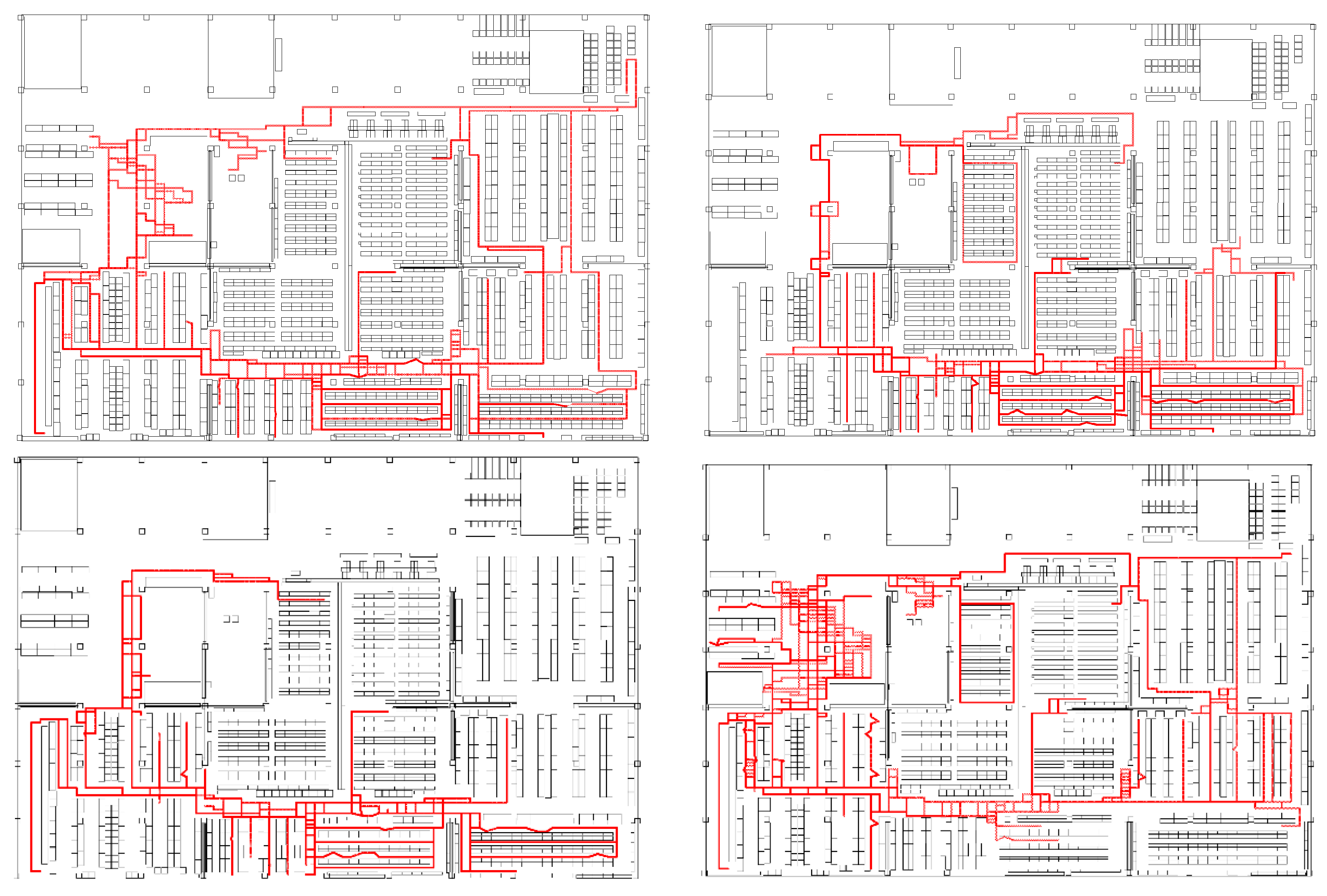

3.1. Overview of the PDR Challenge in Warehouse Picking

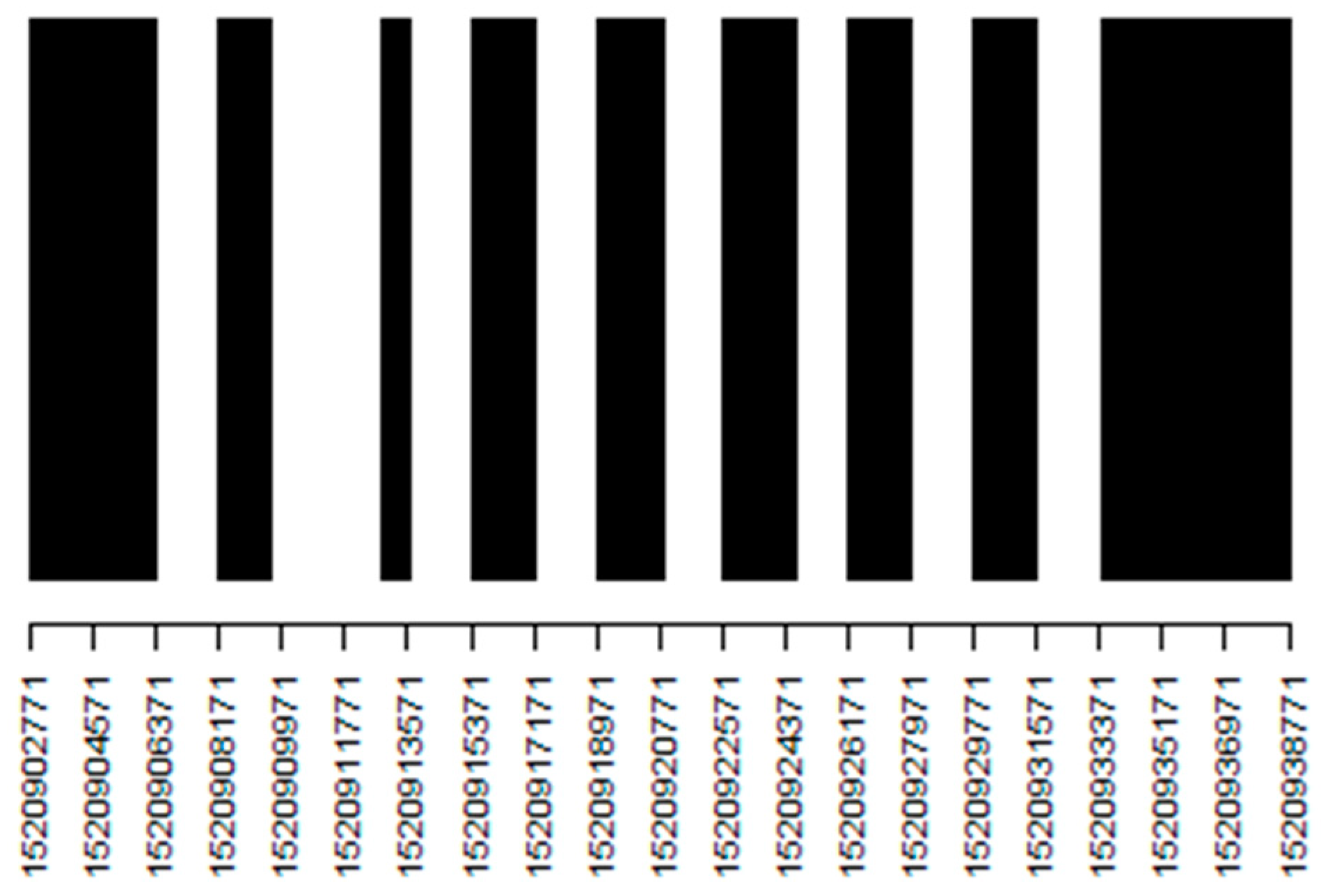

- Raw sensor data for PDR: angular velocity, acceleration, magnetism, and atmospheric pressure (at 100 Hz).

- Received signal strength indicator (RSSI) from the BLE beacons (at about 2 Hz).

3.2. Results of PDR Challenge 2017

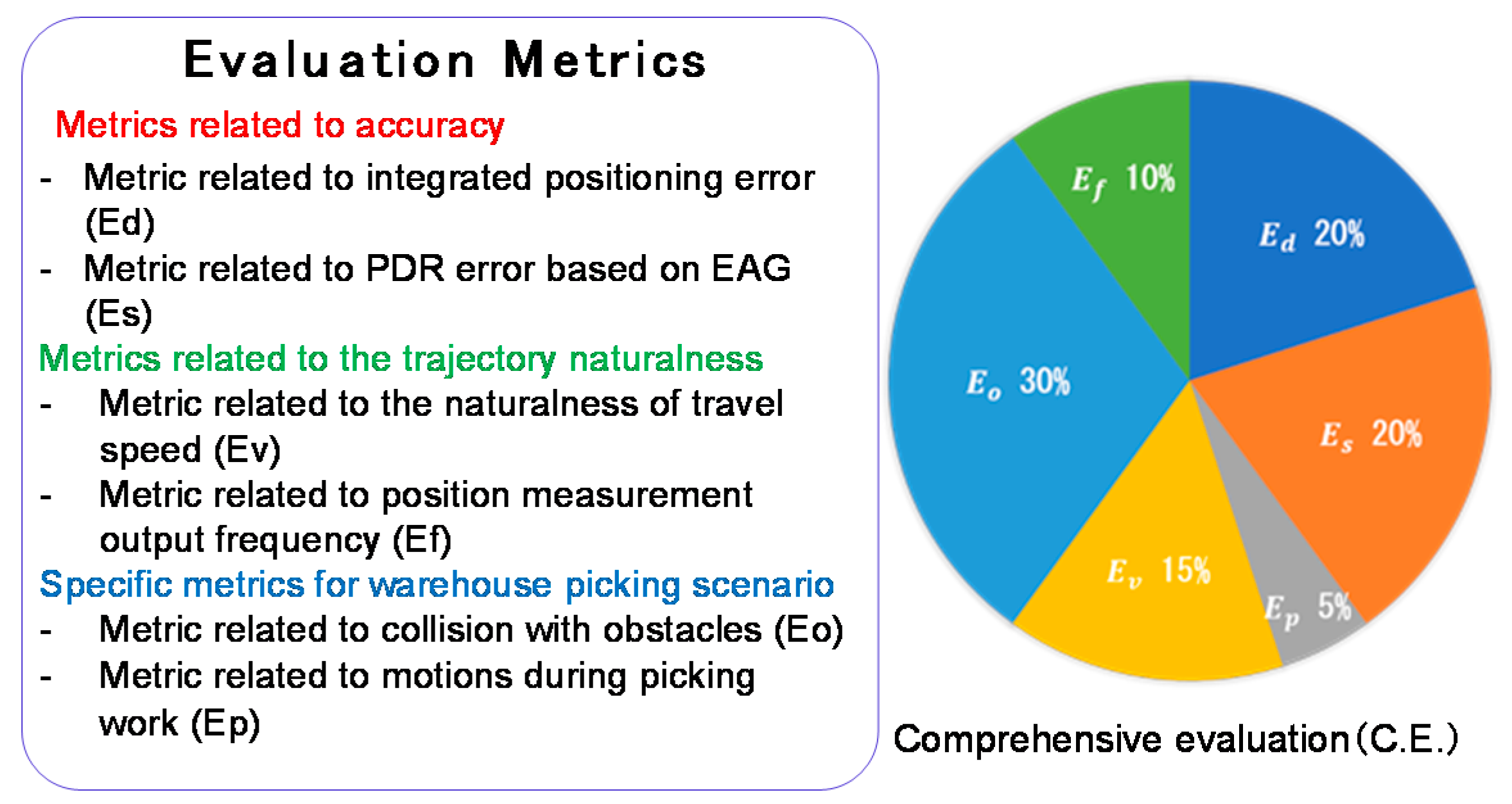

3.2.1. Evaluation Metrics for the PDR Challenge 2017

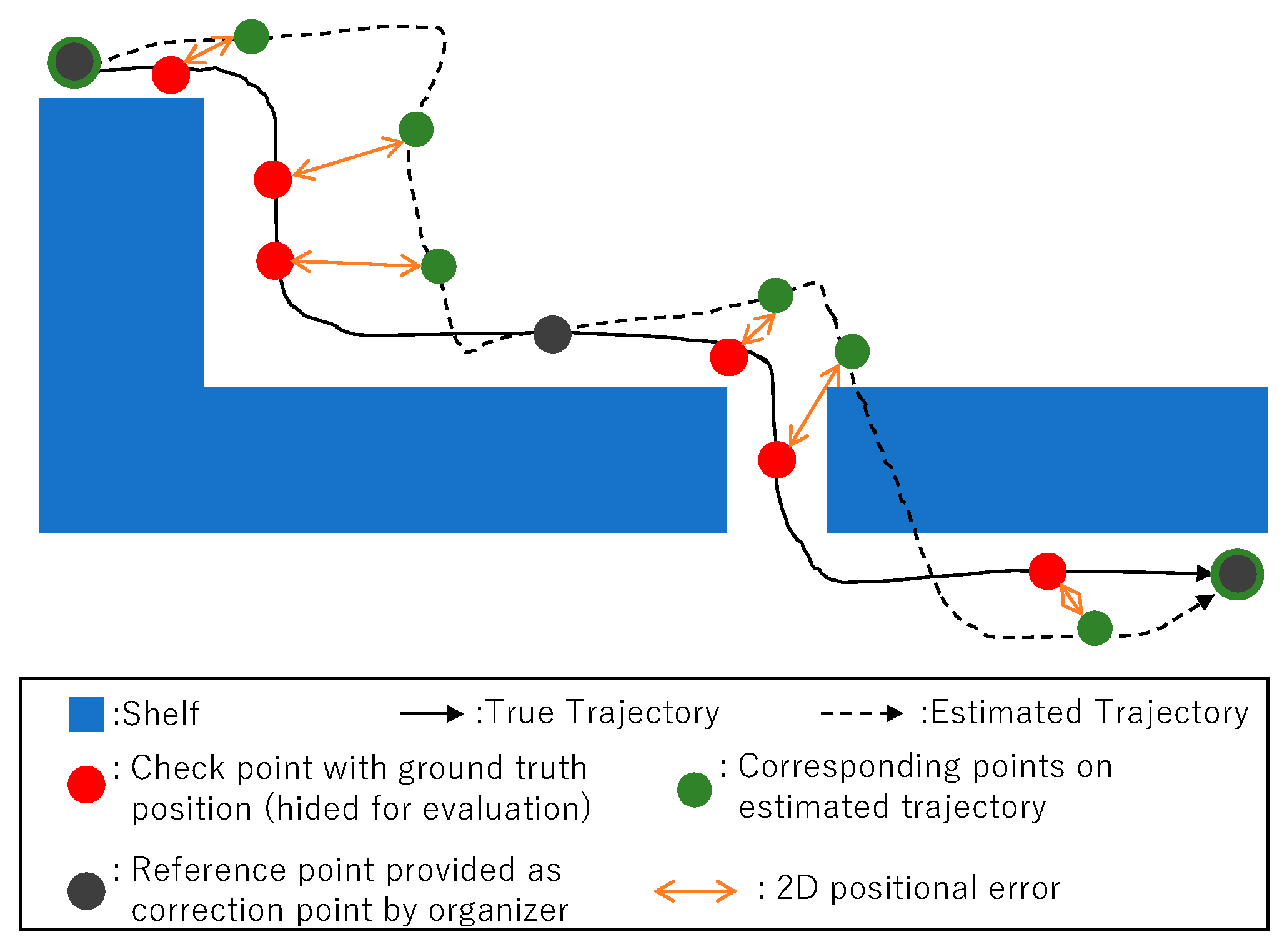

Evaluation Metric for Absolute Positional Error (Ed)

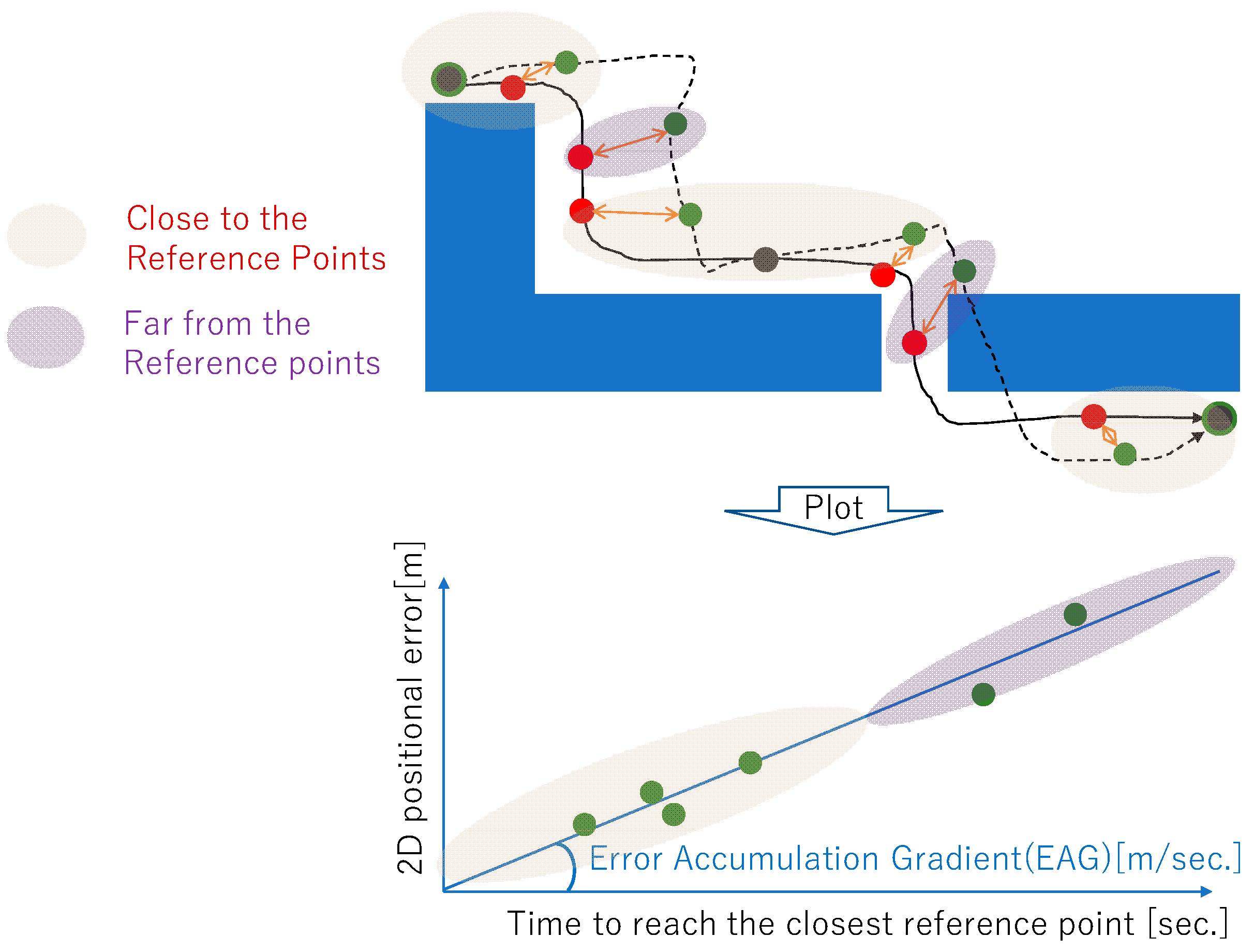

Evaluation Metric for Error Accumulation of PDR (Es)

Evaluation for Naturalness During Picking (Ep)

Evaluation of Velocity Naturalness (Ev)

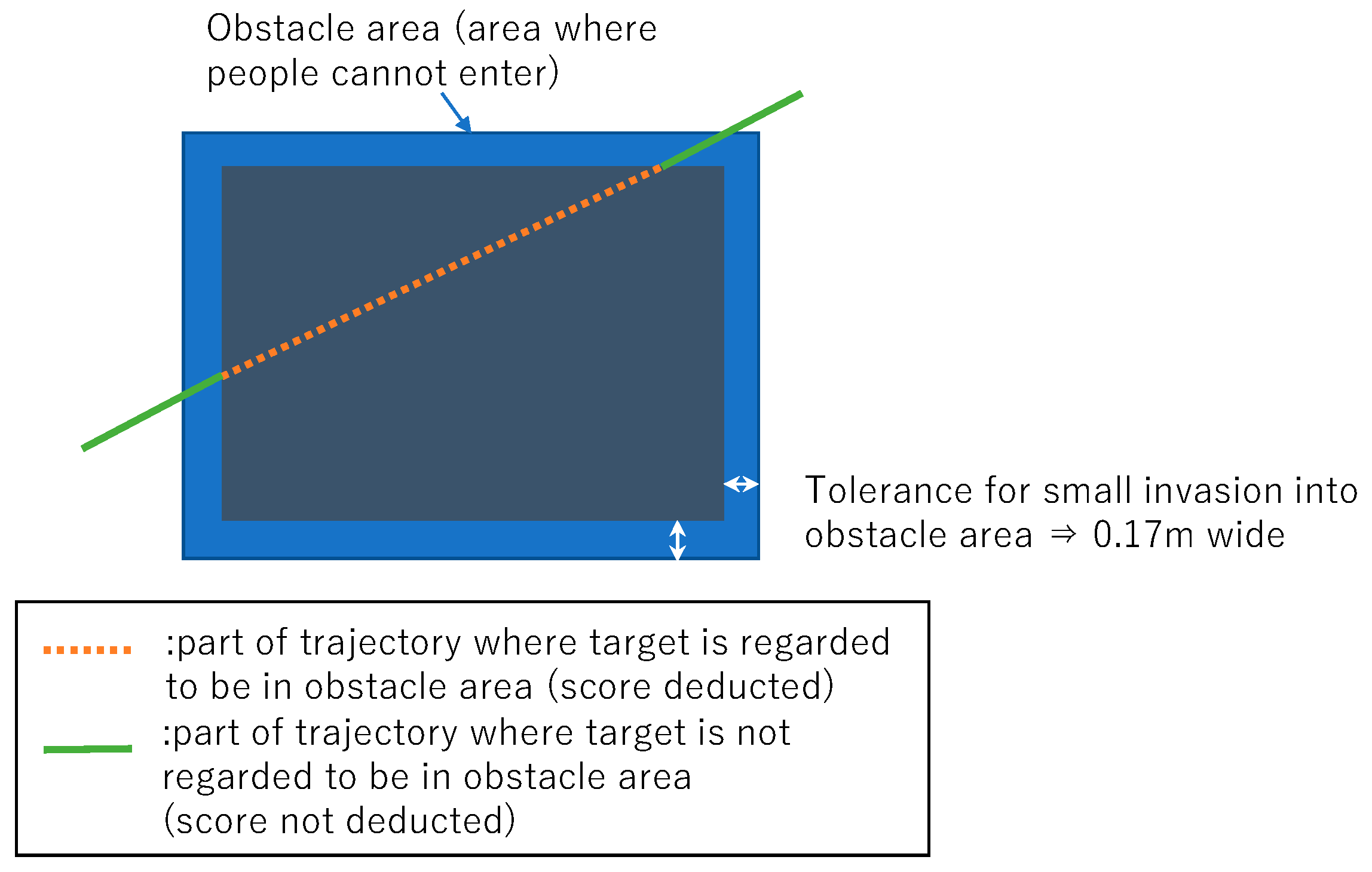

Evaluation for Obstacle Interference (Eo)

Evaluation for Update Frequency (Ef)

Comprehensive Evaluation (C.E.)

3.2.2. Competitors and Their Algorithms

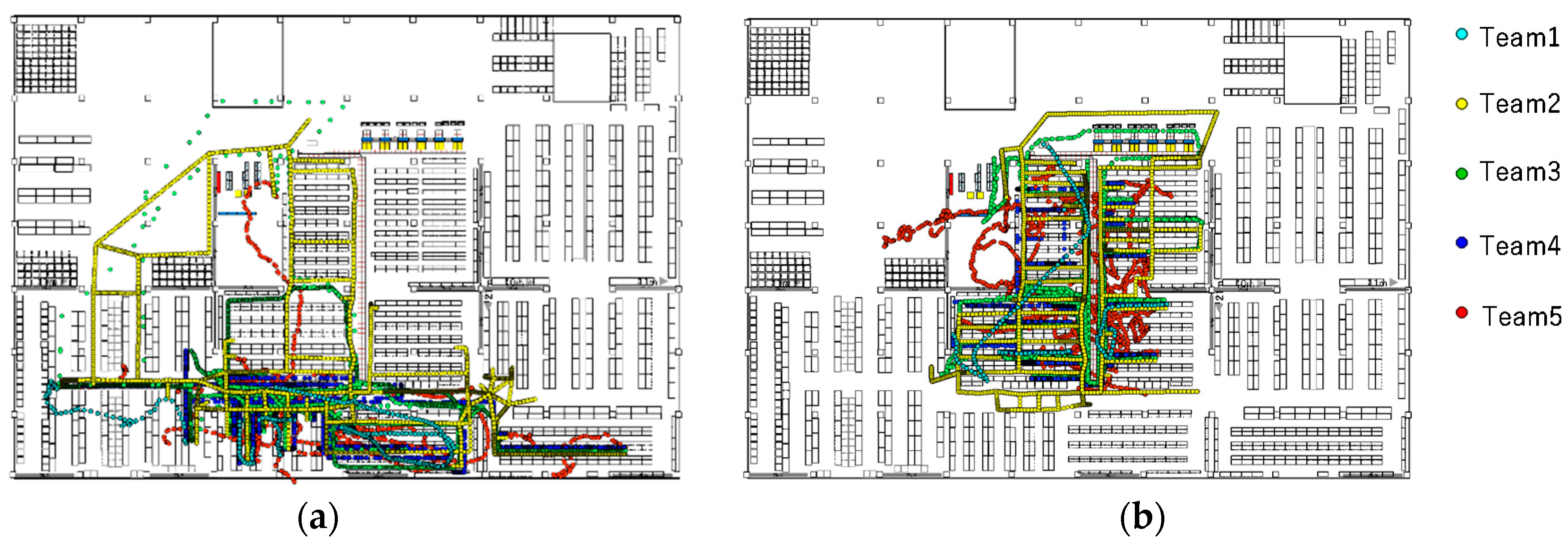

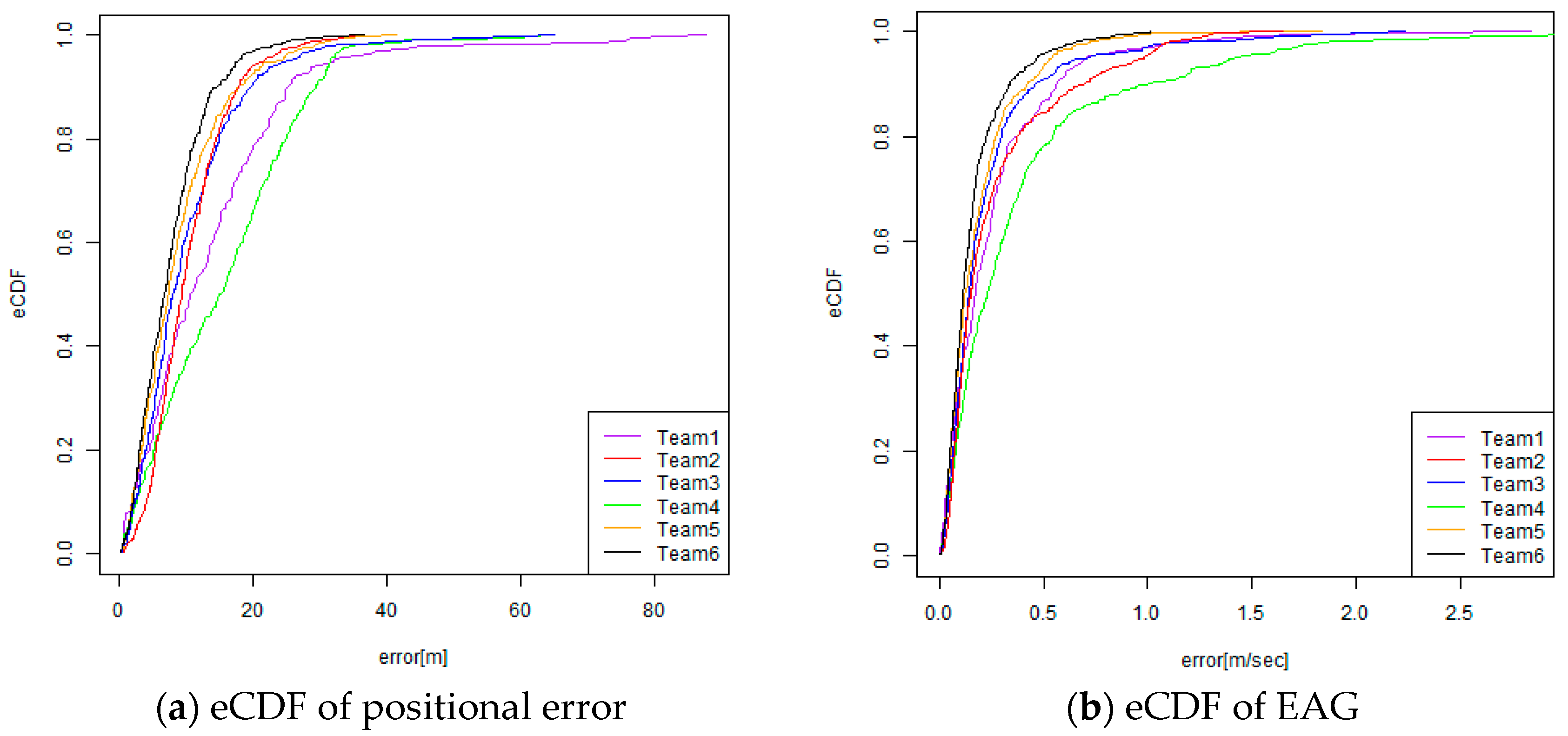

3.2.3. Results of Evaluation

3.3. Findings from PDR Challenge 2017

3.4. Discussion of the Findings

4. xDR Challenge for Warehouse Operations

4.1. Overview of the Competition

4.2. Data Used for xDR Challenge 2018

4.3. Evaluation Method

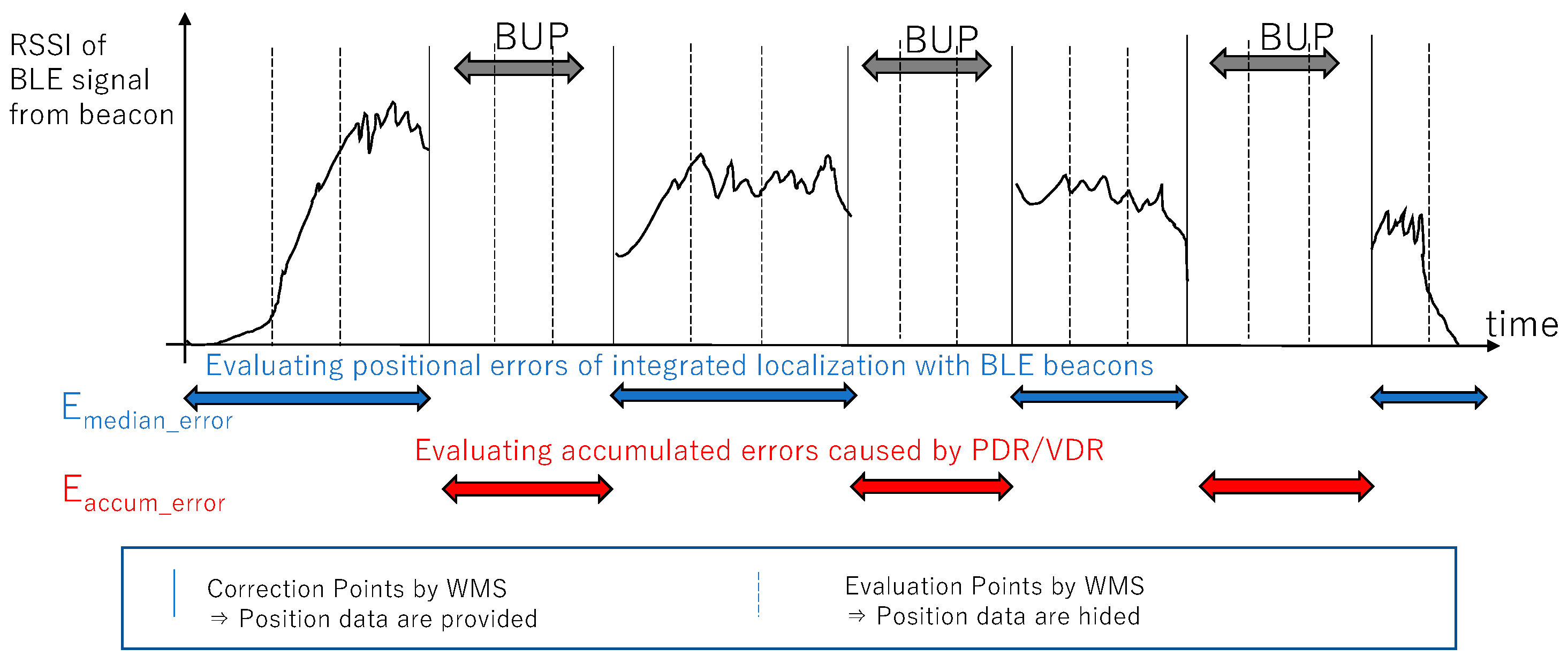

4.3.1. Evaluation Metrics

- Evaluation for accuracy of localization (modified from PDR Challenge 2017)

  - Emedian_error: Evaluation of absolute amount of error of integrated localization

  - Eaccum_error: Evaluation of speed of error accumulation of PDR/VDR

- Evaluation for naturalness of trajectory (same as PDR Challenge 2017)

  - Evelocity: Evaluation of speed assumed for the target

  - Efrequency: Evaluation of frequency of points comprising the trajectory

- Special evaluations for warehouse scenario (same as PDR Challenge 2017)

  - Eobstacle: Metric related to collision with obstacles

  - Epicking: Metric related to motions during picking work

4.3.2. Updates of Evaluation Metrics from the PDR Challenge 2017

4.4. Competitors and Their Algorithms

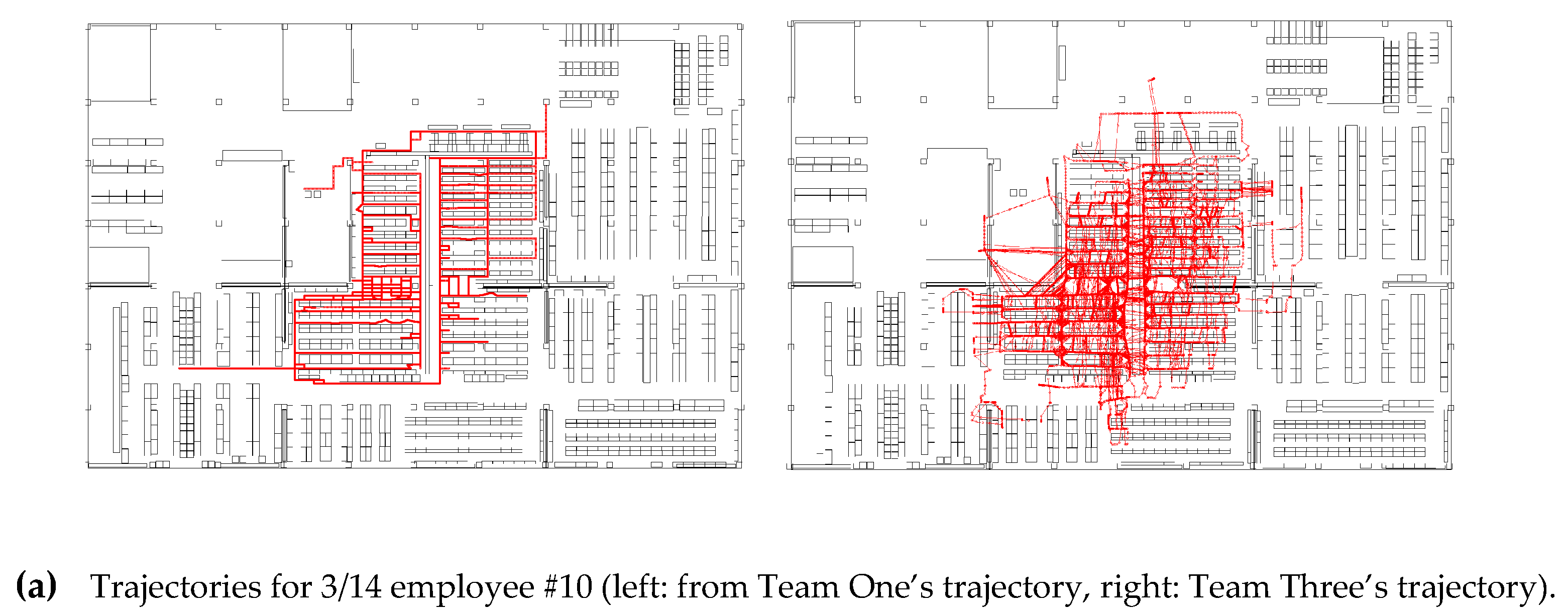

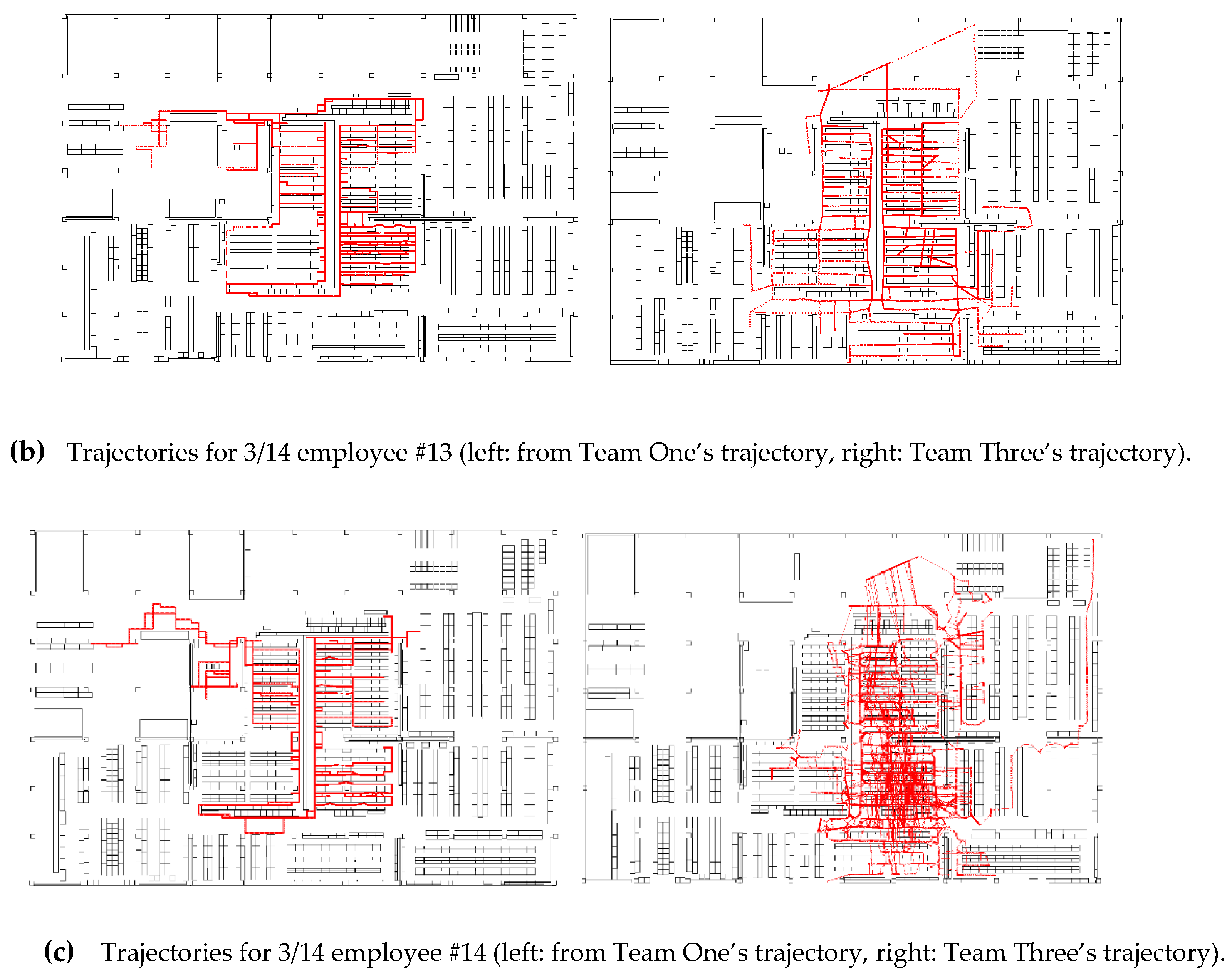

4.5. Evaluation Results

5. Releasing the Evaluation Program Used for PDR Challenge and xDR Challenge via GitHub

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

References

1. IPIN Conference. Available online: http://ipin-conference.org/ (accessed on 24 December 2018).

2. Ubicomp|ACM SIGCHI. Available online: https://sigchi.org/conferences/conference-history/ubicomp/ (accessed on 24 December 2018).

3. Moayeri, N.; Ergin, M.O.; Lemic, F.; Handziski, V.; Wolisz, A. PerfLoc (Part 1): An Extensive Data Repository for Development of Smartphone Indoor Localization Apps. In Proceedings of the IEEE 27th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Valencia, Spain, 4–7 September 2016.

4. Moayeri, N.; Li, C.; Shi, L. PerfLoc (Part 2): Performance Evaluation of the Smartphone Indoor Localization Apps. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN 2018), Nantes, France, 24–27 September 2018.

5. Torres-Sospedra, J.; Jiménez, A.R.; Knauth, S.; Moreira, A.; Beer, Y.; Fetzer, T.; Ta, V.; Montoliu, R.; Seco, F.; Mendoza-Silva, G.M.; et al. The Smartphone-Based offline indoor location competition at IPIN 2016: Analysis and future work. Sensors 2017, 17, 557.

6. Potortì, F.; Park, S.; Jiménez Ruiz, A.R.; Barsocchi, P.; Girolami, M.; Crivello, A.; Lee, S.Y.; Lim, J.H.; Torres-Sospedra, J.; Seco, F.; et al. Comparing the Performance of Indoor Localization Systems through the EvAAL Framework. Sensors 2017, 17, 2327.

7. Torres-Sospedra, J.; Jiménez, A.R.; Moreira, A.; Lungenstrass, T.; Lu, W.-C.; Knauth, S.; Mendoza-Silva, G.M.; Seco, F.; Pérez-Navarro, A.; Nicolau, M.J.; et al. Off-Line Evaluation of Mobile-Centric Indoor Positioning Systems: The Experiences from the 2017 IPIN Competition. Sensors 2018, 18, 487.

8. Lymberopoulos, D.; Liu, J. The Microsoft Indoor Localization Competition: Experiences and Lessons Learned. IEEE Signal Process. Mag. 2017, 34, 5.

9. Kaji, K.; Abe, M.; Wang, W.; Hiroi, K.; Kawaguchi, N. UbiComp/ISWC 2015 PDR Challenge Corpus. In Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, Heidelberg, Germany, 12–16 September 2016.

10. Facebook page for PDR Benchmark Standardization Committee. Available online: https://www.facebook.com/pdr.bms/ (accessed on 24 December 2018).

11. ISO/IEC 18305:2016. Available online: https://www.iso.org/standard/62090.html (accessed on 24 December 2018).

12. Data hub for HASC. Available online: http://hub.hasc.jp/ (accessed on 24 December 2018).

13. Ichikari, R.; Shimomura, R.; Kourogi, M.; Okuma, T.; Kurata, T. Review of PDR Challenge in Warehouse Picking and Advancing to xDR Challenge. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2018), Nantes, France, 24–27 September 2018.

14. Kourogi, M.; Fukuhara, T. Case studies of IPIN services in Japan: Advanced trials and implementations in service and manufacturing fields. Available online: http://www.ipin2017.org/documents/SS-5-2.pdf (accessed on 24 December 2018).

15. Abe, M.; Kaji, K.; Hiroi, K.; Kawaguchi, N. PIEM: Path Independent Evaluation Metric for Relative Localization. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2016), Alcalá de Henares, Spain, 4–7 October 2016.

16. PDR Challenge 2017. Available online: https://unit.aist.go.jp/hiri/pdr-warehouse2017/ (accessed on 29 December 2018).

17. Lee, Y.; Lee, H.; Kim, J.; Kang, D.; Moon, K.; Cho, S. A Multi-Sensor Fusion Technique for Pedestrian Localization in a Warehouse. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2017) only abstract, Sapporo, Japan, 18–21 September 2017.

18. Ito, Y.; Hoshi, H. Indoor Positioning Using Network Graph of Passageway and BLE Considering Obstacles. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2017) only abstract, Sapporo, Japan, 18–21 September 2017.

19. Hananouchi, K.; Nozaki, J.; Urano, K.; Hiroi, K.; Kawaguchi, N. Trajectory Estimation Using PDR and Simulation of Human-Like Movement. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2017) Work-in-Progress, Sapporo, Japan, 18–21 September 2017.

20. Zheng, L.; Wang, Y.; Peng, A.; Wu, Z.; Wu, D.; Tang, B.; Lu, H.; Shi, H.; Zheng, H. A Smartphone Based Indoor Positioning System. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2017) only abstract, Sapporo, Japan, 18–21 September 2017.

21. Cheng, H.; Lu, W.; Lin, C.; Lai, Y.; Yeh, Y.; Liu, H.; Fang, S.; Chien, Y.; Tsao, Y. Moving Trajectory Estimation Based on Sensors. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2017) Work-in-Progress, Sapporo, Japan, 18–21 September 2017.

22. Ban, R.; Kaji, K.; Hiroi, K.; Kawaguchi, N. Indoor Positioning Method Integrating Pedestrian Dead Reckoning with Magnetic Field and WiFi Fingerprints. In Proceedings of the Eighth International Conference on Mobile Computing and Ubiquitous Networking (ICMU2015), Hakodate, Japan, 20–22 January 2015.

23. Ichikari, R.; Chang, C.-T.; Michitsuji, K.; Kitagawa, T.; Yoshii, S.; Kurata, T. Complementary Integration of PDR with Absolute Positioning Methods Based on Time-Series Consistency. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN2016) Work-in-Progress, Alcalá de Henares, Spain, 4–7 October 2016.

24. Kourogi, M.; Kurata, T. Personal Positioning Based on Walking Locomotion Analysis with Self-Contained Sensors and a Wearable Camera. In Proceedings of the International Conference on Mixed and Augmented Reality 2003, Tokyo, Japan, 7–10 October 2003.

25. xDR Challenge 2018. Available online: https://unit.aist.go.jp/hiri/xDR-Challenge-2018/ (accessed on 29 December 2018).

26. GitHub. Available online: https://github.com/ (accessed on 27 December 2018).

27. Evaluation Program. Available online: https://github.com/PDR-benchmark-standardization-committee/xDR-Challenge-2018-evaluation (accessed on 27 December 2018).

| | PerfLoc by NIST | EvAAL/IPIN Competitions | Microsoft’s Competition@IPSN | Ubicomp/ISWC 2015 PDR Challenge | PDR Challenge in Warehouse Picking in IPIN 2017 | xDR Challenge for Warehouse Operations 2018 |

|---|---|---|---|---|---|---|

| Scenario | 30 Scenarios (Emergency scenario) | Smart House/Assisted Living | Competing maximum accuracy in 2D or 3D | Indoor pedestrian navigation | Picking work inside a logistics warehouse (Specific Industrial Scenario) | General warehouse operations including picking, shipping and driving forklift |

| Walking/Motion | Walking/running/backwards/sidestep/crawling/pushcart/elevators (walked by actors on planned path with CPs) | Walking/stairs/lift/phoning/lateral movement (walked by actors on planned path with CPs) | Depends on operators (developers can operate their devices by themselves) | Continuous walking while holding smartphone and looking at navigation screen | Includes many motions involved in picking work, not only walking | Includes many motions involved in picking and shipping operations, not only walking. Some workers may drive forklifts |

| Tester | Tester assigned by the organizer | Tester assigned by the organizer | Participants can run their system by themselves | Conference participants | Employees working in the warehouse | Employees working in the warehouse |

| On-site or Off-site | Off-site competition and live demo | Separated on-site and off-site tracks | On-site | Data collection: on-site; evaluation: off-site | Off-site | Off-site |

| Target Methods | Arm-mounted smartphone-based localization method (IMU, WiFi, GPS, Cellular) | Off-site: smartphone based; on-site: smartphone based/any body-mounted device (separated tracks) | 2D: infrastructure-free methods; 3D: allowed to arrange infrastructure (# of anchors and type of devices are limited in 2018) | PDR+MAP | PDR+BLE+MAP+WMS | PDR/VDR+BLE+MAP+WMS |

| # of people and trials | 1 person × 4 devices (at the same time) × 30 scenarios | Depends on year and track (e.g., 9 trials, 2016T3) | N/A | 90 people, 229 trials | 8 people, 8 trials | 34 people + 6 forklifts, 170 trials (PDR) + 30 trials (VDR) |

| Time per trial | Total 16 hours | Depends on years and tracks (e.g., 15 min (2016T1, T2), 2 hours (2016T3)) | N/A | A few minutes | About 3 hours | About 8 hours |

| Area of test environment/length of test path | 30,000 m² | Depends on years and tracks (e.g., 7000 m² @2016T1&T2) | Depends on years (e.g., 600 m² @2018) | 100 m each | 8000 m² | 8000 m² |

| Evaluation metric | SE95 (95% Spherical Error) | 75th percentile error | Mean error | Mean error, SD of error | Integrated evaluation (integrated by accuracy, naturalness, warehouse-dedicated metrics) | Integrated evaluation (integrated by accuracy, naturalness, warehouse-dedicated metrics) |

| History/Remarks | 1 time (2017–2018) | 7 times (2011, 2012, 2013 (EvAAL), 2014, 2015 (+ETRI), 2016, 2017 (EvAAL/IPIN)) | 5 times (2014, 2015, 2016, 2017, 2018) | Collection of data of participants walking. The data are available at HASC (http://hub.hasc.jp/) as corpus data | Competition over integrated position using not only PDR, but also correction information such as BLE beacon signal, picking log (WMS), and maps | Consists of PDR and VDR tracks. Reference motion captured by MoCap is also shared to introduce typical motions. |

| # of Participants | 169 (registration)/16 (submission) | Depends on years and tracks (T1: 5, T2: 12, T3: 17, T4: 5 @2018) | Depends on years and tracks (2D: 12, 3D: 22 @2018) | 4 (+1 unofficial submission) | 5 | PDR: 5, VDR: 2 |

| Awards and Prizes for 1st Place | US$20,000 (US related group only) | Depends on years and tracks (e.g., 1500€ @2016) | US$1000 | JP¥50,000 | JP¥100,000 + prize or JP¥150,000 | JP¥200,000 (or JP¥150,000) + prize(s) |

| Connection with Academic Conference | N/A | IPIN | IPSN | UbiComp/ISWC2015 | IPIN2017 (an official competition track) | IPIN (Special Session) |

| Team | Ed | Es | Ep | Ev | Eo | Ef | CE50 (Ref) | EAG50 (Ref) | C.E. |

|---|---|---|---|---|---|---|---|---|---|

| Team 1 | 66.876 | 93.692 | 97.195 | 99.998 | 51.821 | 11.323 | 10.606 | 0.173 | 68.652 |

| Team 2 | 71.524 | 94.872 | 43.545 | 100 | 99.876 | 100 | 9.258 | 0.150 | 90.419 |

| Team 3 | 76.459 | 95.333 | 72.719 | 87.835 | 93.549 | 99.271 | 7.827 | 0.141 | 89.161 |

| Team 4 | 51.934 | 90.769 | 84.965 | 95.657 | 59.623 | 99.239 | 14.939 | 0.230 | 74.948 |

| Team 5 | 78.386 | 96.308 | 97.484 | 99.093 | 45.530 | 100 | 7.268 | 0.122 | 78.336 |

| Team 6 (AIST) | 80.272 | 96.718 | 81.057 | 98.711 | 89.968 | 95.879 | 6.721 | 0.114 | 90.836 |
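The Ed and Es columns in the table above appear to follow a clipped linear mapping from the reference values CE50 and EAG50. The sketch below reproduces the reported scores under that assumption. The thresholds (1 and 30 for CE50, 0.05 and 2.0 for EAG50) are not stated in this section; they are inferred from the tabulated numbers, and the function names are hypothetical.

```python
def linear_score(value, best, worst):
    """Map a raw error value to a 0-100 score, clipped at both ends.

    Values at or below `best` score 100, values at or above `worst` score 0,
    and values in between are interpolated linearly.
    """
    if value <= best:
        return 100.0
    if value >= worst:
        return 0.0
    return 100.0 * (worst - value) / (worst - best)


def score_ed(ce50):
    # Assumed thresholds, inferred from the table: 1 -> 100 points, 30 -> 0 points.
    return linear_score(ce50, best=1.0, worst=30.0)


def score_es(eag50):
    # Assumed thresholds, inferred from the table: 0.05 -> 100 points, 2.0 -> 0 points.
    return linear_score(eag50, best=0.05, worst=2.0)


# (CE50, EAG50, reported Ed, reported Es) copied from the table rows.
reported = {
    "Team 1": (10.606, 0.173, 66.876, 93.692),
    "Team 2": (9.258, 0.150, 71.524, 94.872),
    "Team 6 (AIST)": (6.721, 0.114, 80.272, 96.718),
}

for team, (ce50, eag50, ed, es) in reported.items():
    print(f"{team}: Ed={score_ed(ce50):.3f} (reported {ed}), "
          f"Es={score_es(eag50):.3f} (reported {es})")
```

Because the thresholds are reverse-engineered from rounded table entries, small deviations in the last digit are possible for other rows.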

| Team | EAG by Simple Linear Regression | EAG by Robust Linear Regression | EAG25 | EAG50 | EAG75 |

|---|---|---|---|---|---|

| Team 1 | 0.106 | 0.128 | 0.069 | 0.173 | 0.311 |

| Team 2 | 0.139 | 0.169 | 0.095 | 0.230 | 0.441 |

| Team 3 | 0.089 | 0.100 | 0.064 | 0.122 | 0.240 |

| Team 4 | 0.100 | 0.107 | 0.077 | 0.141 | 0.262 |

| Team 5 | 0.093 | 0.106 | 0.085 | 0.150 | 0.309 |

| Team 6 (AIST) | 0.081 | 0.087 | 0.062 | 0.114 | 0.186 |

| Tracks | # of Trajectories | Total Time Length of Test Data | # of WMS Points Provided | # of WMS Points Hidden for Evaluation |

|---|---|---|---|---|

| PDR | 15 | 180 hours | 271 | 4877 |

| VDR | 8 | 85 hours | 125 | 1027 |

| PDR Track | Emedian_error | CE50 | Eaccum_error | EAG50 | Evelocity | Efrequency | Eobstacle | Epicking | C.E. |

|---|---|---|---|---|---|---|---|---|---|

| Team 1 | 72.14 | 9.08 | 100 | 0.031 | 99.00 | 99.87 | 100 | 98.73 | 91.47 |

| Team 2 | 20.36 | 24.01 | 99.44 | 0.061 | 99.00 | 79.06 | 99.93 | 99.00 | 73.68 |

| Team 3 | 71.99 | 9.12 | 100 | 0.026 | 96.93 | 100.00 | 97.60 | 95.80 | 90.72 |

| VDR Track | Emedian_error | CE50 | Eaccum_error | EAG50 | Evelocity | Efrequency | Eobstacle | Epicking | C.E. |

|---|---|---|---|---|---|---|---|---|---|

| Team 1 | 49.30 | 15.70 | 99.86 | 0.053 | 99.75 | 99.25 | 100 | 97.38 | 84.51 |

| Track and Team | CE50 in BUP | CE50 Out of BUP | CE50 in Whole traj. | EAG50 in BUP | EAG50 Out of BUP | EAG50 in Whole traj. |

|---|---|---|---|---|---|---|

| PDR: Team 1 | 11.28 | 9.08 | 10.17 | 0.031 | 0.027 | 0.029 |

| PDR: Team 2 | 25.33 | 24.10 | 24.70 | 0.061 | 0.064 | 0.063 |

| PDR: Team 3 | 9.74 | 9.12 | 9.48 | 0.026 | 0.025 | 0.026 |

| VDR: Team 1 | 18.45 | 15.70 | 16.35 | 0.053 | 0.044 | 0.048 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ichikari, R.; Kaji, K.; Shimomura, R.; Kourogi, M.; Okuma, T.; Kurata, T. Off-Site Indoor Localization Competitions Based on Measured Data in a Warehouse. Sensors 2019, 19, 763. https://doi.org/10.3390/s19040763