A Multi-Feature and Multi-Level Matching Algorithm Using Aerial Image and AIS for Vessel Identification

Abstract

1. Introduction

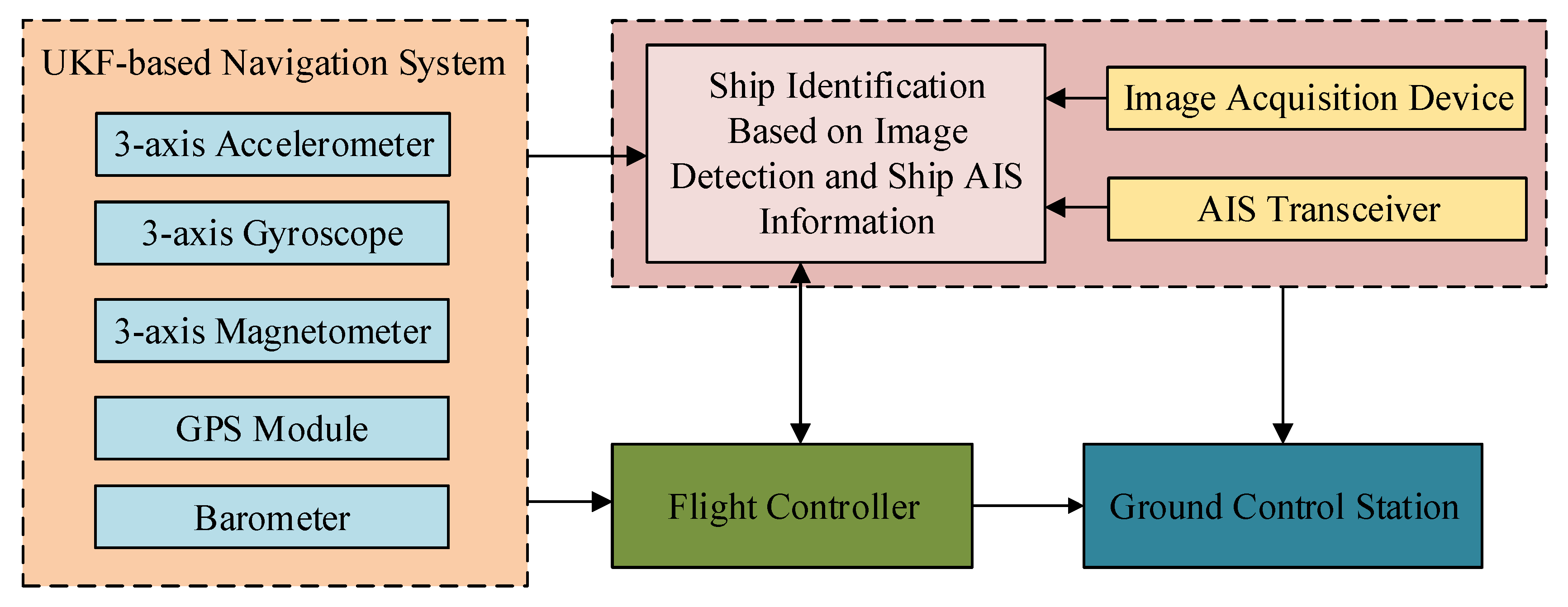

2. Systematic Design

2.1. System Architecture

2.2. Airframe

2.3. Propulsion and Navigation

2.4. Ground Communication System

2.5. Post-Imaging Processing and Video Transmission

3. Matching Algorithms, Image, and AIS Data

3.1. Image and AIS Information Processing

3.1.1. Image-Based Detection and Localization

3.1.2. Processing AIS Information

3.1.3. Calibration of the Image and AIS Information

- (1) Since the GPS module is located directly above the onboard camera, the Mar-UAV's position corresponds to the center of the image.
- (2) Since the onboard camera is mounted on a pan-tilt gimbal, its shooting angle is set perpendicular to the ground.
- (3) ψ is the angular deviation used in the transformation from the north-east (NE) world frame to the camera frame.
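
Given conditions (1) to (3), a vessel detected at a pixel can be mapped to a geographic position. The sketch below is one minimal way to do this and is not taken from the paper: it assumes an ideal pinhole camera whose focal length `focal_px` is expressed in pixels, a flight altitude `altitude_m` above the water, image x to the right and y downward, ψ measured clockwise from north to the image "up" direction, and a flat-Earth small-offset approximation. The function name and all parameters are hypothetical.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6371000.0  # mean Earth radius (assumption for the small-offset approximation)


def pixel_to_latlon(
    u: float, v: float,          # pixel coordinates of the detected vessel
    cx: float, cy: float,        # image centre, where the UAV's GPS fix projects (condition 1)
    altitude_m: float,           # flight altitude above the water surface
    focal_px: float,             # focal length in pixels (hypothetical calibration value)
    psi_deg: float,              # bearing of the image "up" direction, clockwise from north
    uav_lat: float, uav_lon: float,
) -> Tuple[float, float]:
    """Map an image pixel to latitude/longitude for a nadir-pointing camera (condition 2)."""
    gsd = altitude_m / focal_px            # ground sampling distance in metres per pixel
    forward = -(v - cy) * gsd              # metres along the image "up" direction
    right = (u - cx) * gsd                 # metres along the image "right" direction
    psi = math.radians(psi_deg)
    # Rotate camera-frame offsets into north/east using the angular deviation psi (condition 3)
    north = forward * math.cos(psi) - right * math.sin(psi)
    east = forward * math.sin(psi) + right * math.cos(psi)
    # Equirectangular approximation: valid for offsets of a few hundred metres
    lat = uav_lat + math.degrees(north / EARTH_RADIUS_M)
    lon = uav_lon + math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(uav_lat))))
    return lat, lon
```

For example, with `altitude_m=100` and `focal_px=1000` one pixel covers about 0.1 m, so a vessel detected 100 pixels to the right of the image centre with ψ = 0 lies about 10 m east of the UAV. Lens distortion and gimbal pointing errors are ignored here.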

The image and AIS data are time-synchronized in two steps:

- (1) Synchronization of the data head. A hardware-generated pulse signal starts the onboard camera and the AIS device simultaneously, so the two sampled data streams begin at the same instant.
- (2) Synchronization of the sampling period. The image sampling period is 33 ms, whereas the AIS reporting interval varies from 2 to 180 s, so the two data streams must be aligned in time. To do so, we applied a simplified filter that interpolates estimated values between successive reports from the lower-rate AIS receiver. The filter is based on a linear kinematic model; this assumption is reasonable because a vessel's motion can be regarded as constant over short time periods. Specifically, the AIS data are linearly interpolated to 1 Hz and the image information is sampled at 1 Hz, which gives both streams the same sampling period.
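
The two synchronization steps above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: AIS fixes are `(t, lat, lon)` tuples whose timestamps are strictly increasing seconds measured from the common trigger pulse, positions are interpolated linearly between consecutive reports (the constant-velocity assumption), and the 33 ms video stream is reduced to 1 Hz by taking the frame nearest each whole second. Both function names are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Tuple

# (t_seconds, lat_deg, lon_deg); timestamps assumed strictly increasing
AisFix = Tuple[float, float, float]


def interpolate_ais_to_1hz(reports: List[AisFix]) -> List[AisFix]:
    """Linearly interpolate sparse AIS position reports onto whole seconds."""
    if len(reports) < 2:
        raise ValueError("need at least two AIS reports to interpolate")
    reports = sorted(reports)
    times = [r[0] for r in reports]
    out: List[AisFix] = []
    for t in range(math.ceil(times[0]), math.floor(times[-1]) + 1):
        # Index of the last report at or before t, clamped so that i + 1 is valid
        i = min(bisect_right(times, t) - 1, len(reports) - 2)
        (ta, lat_a, lon_a), (tb, lat_b, lon_b) = reports[i], reports[i + 1]
        w = (t - ta) / (tb - ta)  # fraction of the way from report i to report i + 1
        out.append((float(t), lat_a + w * (lat_b - lat_a), lon_a + w * (lon_b - lon_a)))
    return out


def frame_indices_at_1hz(n_frames: int, frame_period_s: float = 0.033) -> List[int]:
    """Return the index of the video frame closest to each whole second."""
    duration = (n_frames - 1) * frame_period_s
    return [round(t / frame_period_s) for t in range(int(duration) + 1)]
```

Interpolating latitude and longitude directly is adequate over the short distances a vessel covers between reports; with gaps approaching 180 s, the straight-line assumption is more likely to be violated by turning vessels.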

3.2. Multi-Featured and Multi-Level Matching Algorithm

3.2.1. Multiple Feature Selection

3.2.2. Multi-Level Hierarchical Matching

3.2.3. The Multi-Featured and Multi-Level Matching Algorithm

3.2.4. Error Analysis of the Matching Algorithm

4. Experimental Results and Analysis

4.1. Point-to-Track Matching Results and Analysis

4.2. Track-to-Track Matching Results and Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| Maximum overall dimension | 1710 ± 20 mm |
| Motor wheelbase | 955 ± 10 mm |
| Standard takeoff weight | 8.1 kg |
| Maximum takeoff weight | 10.7 kg |
| Payload | ≤3 kg |
| No-load hover time | ≥50 min |
| Maximum wind resistance | Level 6 (Beaufort scale) |
| Maximum flight speed | 12 m/s |
| Maximum flight altitude | 1000 m |
| GPS hover accuracy | Vertical: ±1.5 m; horizontal: ±2 m |
| Maximum remote-controller range | 7 km |
| Maximum ground-station control range | 10 km |

| Field | Decoded value |
|---|---|
| Message type | 1 |
| Navigational status | Under way using engine |
| MMSI | 413791052 |
| Course over ground | 227.9° |
| Speed over ground | 3.8 kn |
| Longitude | 114.34549° |
| Latitude | 30.6284433° |
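
The decoded position report above carries the speed and course over ground that a linear kinematic model needs. A minimal sketch, assuming a mean Earth radius of 6,371 km and a flat-Earth small-offset approximation, converts these fields into north/east velocity components and predicts the position a short time ahead. The function names are hypothetical, and this is not necessarily how the authors implemented their filter.

```python
import math
from typing import Tuple

KNOT_TO_MS = 1852.0 / 3600.0  # 1 knot = 1852 m per hour


def velocity_ne(sog_kn: float, cog_deg: float) -> Tuple[float, float]:
    """North/east velocity in m/s from AIS speed and course over ground."""
    speed = sog_kn * KNOT_TO_MS
    cog = math.radians(cog_deg)  # course is measured clockwise from true north
    return speed * math.cos(cog), speed * math.sin(cog)


def predict_position(lat: float, lon: float, sog_kn: float, cog_deg: float,
                     dt_s: float, earth_radius_m: float = 6371000.0) -> Tuple[float, float]:
    """Constant-velocity (dead-reckoning) prediction dt_s seconds ahead."""
    v_n, v_e = velocity_ne(sog_kn, cog_deg)
    dlat = math.degrees(v_n * dt_s / earth_radius_m)
    dlon = math.degrees(v_e * dt_s / (earth_radius_m * math.cos(math.radians(lat))))
    return lat + dlat, lon + dlon
```

With the values in the table (3.8 kn on a course of 227.9°), the vessel moves at roughly 1.95 m/s toward the south-west, so both predicted coordinates decrease slightly each second.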

| Field | Decoded value |
|---|---|
| Message type | 5 |
| Vessel name | HANGJUN14 |
| MMSI | 412070210 |
| Ship type | Cargo ship |
| Distance from the reference point to the bow | 48 m |
| Distance from the reference point to the stern | 25 m |
| Distance from the reference point to the port side | 12 m |
| Distance from the reference point to the starboard side | 2 m |
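
The static report above gives the hull dimensions only indirectly, as distances from the AIS reference point (the position-fixing antenna). Under the AIS convention, length is the bow distance plus the stern distance and beam is the port distance plus the starboard distance, so HANGJUN14 is about 73 m long and 14 m wide. The sketch below, with hypothetical function names, computes these values and the antenna's offset from the hull centre, which may matter when an image-detected hull centre is compared with an AIS-reported position.

```python
from typing import Tuple


def hull_size_from_ais(to_bow: float, to_stern: float,
                       to_port: float, to_starboard: float) -> Tuple[float, float]:
    """Return (length, beam) in metres from the AIS reference-point distances."""
    return to_bow + to_stern, to_port + to_starboard


def antenna_offset_from_centre(to_bow: float, to_stern: float,
                               to_port: float, to_starboard: float) -> Tuple[float, float]:
    """Return the reference point's (forward, starboard) offset from the hull centre in metres.

    A negative forward value means the antenna sits aft of the hull centre.
    """
    forward = (to_stern - to_bow) / 2.0
    starboard = (to_port - to_starboard) / 2.0
    return forward, starboard
```

For HANGJUN14 the antenna lies about 11.5 m aft of and 5 m to starboard of the hull centre.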

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xiu, S.; Wen, Y.; Yuan, H.; Xiao, C.; Zhan, W.; Zou, X.; Zhou, C.; Shah, S.C. A Multi-Feature and Multi-Level Matching Algorithm Using Aerial Image and AIS for Vessel Identification. Sensors 2019, 19, 1317. https://doi.org/10.3390/s19061317
