Classifying Image Stacks of Specular Silicon Wafer Back Surface Regions: Performance Comparison of CNNs and SVMs

Abstract

1. Introduction

2. Material and Methods

2.1. Image Stacks

2.2. Classification

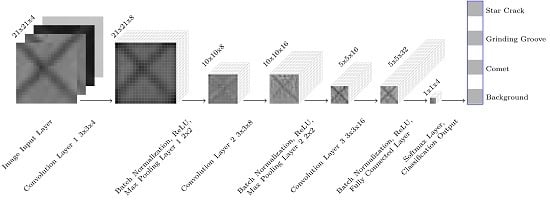

2.2.1. CNN

2.2.2. SVM

2.2.3. CNN Features and SVM

3. Results and Discussion

3.1. CNN

3.2. SVM

3.3. CNN Features and SVM

3.4. Comparison: CNN vs. SVM vs. CNN + SVM

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kofler, C.; Muhr, R.; Spöck, G. Classifying Image Stacks of Specular Silicon Wafer Back Surface Regions: Performance Comparison of CNNs and SVMs. Sensors 2019, 19, 2056. https://doi.org/10.3390/s19092056
